Writing Student Learning Outcomes

Student learning outcomes state what students are expected to know or be able to do upon completion of a course or program. Course learning outcomes may contribute to, or map to, program learning outcomes, and are required in group instruction course syllabi.

At both the course and program level, student learning outcomes should be clear, observable and measurable, and reflect what will be included in the course or program requirements (assignments, exams, projects, etc.). Typically, there are 3-7 course learning outcomes and 3-7 program learning outcomes.

When submitting learning outcomes for course or program approvals, or assessment planning and reporting, please:

- Begin with a verb (exclude any introductory text and the phrase “Students will…”, as this is assumed)

- Limit the length of each learning outcome to 400 characters

- Exclude special characters (e.g., accents, umlauts, ampersands, etc.)

- Exclude special formatting (e.g., bullets, dashes, numbering, etc.)
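The four submission rules above are concrete enough to check before outcomes are pasted into a campus system. The Python sketch below is a hypothetical pre-submission check, not the logic HelioCampus or any other system actually uses. It assumes that "special characters" means any non-ASCII character or an ampersand, that "special formatting" means a leading bullet, dash, or number, and it flags the vague verbs named in step 1 (know, understand, learn, appreciate). Whether an outcome truly begins with an appropriate action verb still needs a human reader.

```python
import re

# Hypothetical pre-submission check. The rules mirror the guidelines above,
# but the character and formatting rules are assumptions, not any system's real logic.
MAX_LENGTH = 400
VAGUE_VERBS = {"know", "understand", "learn", "appreciate"}
# A leading bullet, dash, or number such as "-", "*", a bullet dot, "1." or "2)".
LEADING_FORMAT = re.compile(r"^(?:[-*\u2022\u2013\u2014]|\d+[.)])")


def check_outcome(text: str) -> list[str]:
    """Return the problems found in one learning outcome (empty list if none)."""
    outcome = text.strip()
    if not outcome:
        return ["outcome is empty"]

    problems = []
    if LEADING_FORMAT.match(outcome):
        problems.append("remove bullets, dashes, or numbering")
    if outcome.lower().startswith("students will"):
        problems.append('drop the introductory phrase "Students will"')
    if len(outcome) > MAX_LENGTH:
        problems.append(f"shorten to {MAX_LENGTH} characters or fewer")
    # Assumption: any non-ASCII character (accents, umlauts) or "&" counts as special.
    if "&" in outcome or not outcome.isascii():
        problems.append("remove special characters such as accents or ampersands")

    # Only the first word is checked against the vague-verb list.
    words = re.findall(r"[a-z]+", outcome.lower())
    if words and words[0] in VAGUE_VERBS:
        problems.append(f'replace the vague verb "{words[0]}" with a measurable action verb')
    return problems


if __name__ == "__main__":
    drafts = [
        "Apply important chemical concepts and principles to draw conclusions about chemical reactions",
        "Students will understand the scientific method.",
        "- Analyze art & design movements",
    ]
    for draft in drafts:
        print(draft, "->", check_outcome(draft) or "OK")
```

Running the file prints each draft with its problems, or "OK". Note that the second draft is flagged only for its introductory phrase: because the check looks at the first word alone, the vague verb "understand" hidden after "Students will" is not caught until the phrase is removed. Which verbs count as vague, and which characters a given campus system rejects, are judgment calls to adjust locally.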


Steps for Writing Outcomes

The following are recommended steps for writing clear, observable and measurable student learning outcomes. In general, use student-focused language, begin with action verbs and ensure that the learning outcomes demonstrate actionable attributes.

1. Begin with an Action Verb

Begin with an action verb that denotes the level of learning expected. Terms such as know, understand, learn, and appreciate are generally not specific enough to be measurable. Levels of learning and associated verbs may include the following:

- Remembering and understanding: recall, identify, label, illustrate, summarize.

- Applying and analyzing: use, differentiate, organize, integrate, apply, solve, analyze.

- Evaluating and creating: monitor, test, judge, produce, revise, compose.

Consult Bloom’s Revised Taxonomy (below) for more details. For additional sample action verbs, consult this list from The Centre for Learning, Innovation & Simulation at The Michener Institute of Education at UHN.

2. Follow with a Statement

- Identify and summarize the important features of major periods in the history of western culture

- Apply important chemical concepts and principles to draw conclusions about chemical reactions

- Demonstrate knowledge about the significance of current research in the field of psychology by writing a research paper

- Length: each outcome should be no more than 400 characters.

*Note: Any special characters (e.g., accents, umlauts, ampersands, etc.) and formatting (e.g., bullets, dashes, numbering, etc.) will need to be removed when submitting learning outcomes through HelioCampus Assessment and Credentialing (formerly AEFIS) and other digital campus systems.

Revised Bloom’s Taxonomy of Learning: The “Cognitive” Domain

[Figure: a sampling of verbs that represent learning at each level of the cognitive domain.]

*Text adapted from: Bloom, B.S. (Ed.). (1956). Taxonomy of educational objectives: The classification of educational goals. Handbook 1: Cognitive domain. New York: McKay.

Anderson, L.W. (Ed.), Krathwohl, D.R. (Ed.), Airasian, P.W., Cruikshank, K.A., Mayer, R.E., Pintrich, P.R., Raths, J., & Wittrock, M.C. (2001). A taxonomy for learning, teaching, and assessing: A revision of Bloom’s Taxonomy of Educational Objectives (Complete edition). New York: Longman.

Examples of Learning Outcomes

Academic program learning outcomes

The following examples of academic program student learning outcomes come from a variety of academic programs across campus, and are organized in four broad areas: 1) contextualization of knowledge; 2) praxis and technique; 3) critical thinking; and, 4) research and communication.

Student learning outcomes for each UW-Madison undergraduate and graduate academic program can be found in Guide. Click on the program of your choosing to find its designated learning outcomes.


Contextualization of Knowledge

Students will…

- identify, formulate and solve problems using appropriate information and approaches.

- demonstrate their understanding of major theories, approaches, concepts, and current and classical research findings in the area of concentration.

- apply knowledge of mathematics, chemistry, physics, and materials science and engineering principles to materials and materials systems.

- demonstrate an understanding of the basic biology of microorganisms.

Praxis and Technique

- utilize the techniques, skills and modern tools necessary for practice.

- demonstrate professional and ethical responsibility.

- appropriately apply laws, codes, regulations, architectural and interiors standards that protect the health and safety of the public.

Critical Thinking

- recognize, describe, predict, and analyze systems behavior.

- evaluate evidence to determine and implement best practice.

- examine technical literature, resolve ambiguity and develop conclusions.

- synthesize knowledge and use insight and creativity to better understand and improve systems.

Research and Communication

- retrieve, analyze, and interpret the professional and lay literature providing information to both professionals and the public.

- propose original research: outlining a plan, assembling the necessary protocol, and performing the original research.

- design and conduct experiments, and analyze and interpret data.

- write clear and concise technical reports and research articles.

- communicate effectively through written reports, oral presentations and discussion.

- guide, mentor and support peers to achieve excellence in practice of the discipline.

- work in multi-disciplinary teams and provide leadership on materials-related problems that arise in multi-disciplinary work.

Course Learning Outcomes

- identify, formulate and solve integrative chemistry problems. (Chemistry)

- build probability models to quantify risks of an insurance system, and use data and technology to make appropriate statistical inferences. (Actuarial Science)

- use basic vector, raster, 3D design, video and web technologies in the creation of works of art. (Art)

- apply differential calculus to model rates of change in time of physical and biological phenomena. (Math)

- identify characteristics of certain structures of the body and explain how structure governs function. (Human Anatomy lab)

- calculate the magnitude and direction of magnetic fields created by moving electric charges. (Physics)

Additional Resources

- Bloom’s Taxonomy

- The Six Facets of Understanding – Wiggins, G. & McTighe, J. (2005). Understanding by Design (2nd ed.). ASCD

- Taxonomy of Significant Learning – Fink, L.D. (2003). A Self-Directed Guide to Designing Courses for Significant Learning. Jossey-Bass

- College of Agricultural & Life Sciences Undergraduate Learning Outcomes

- College of Letters & Science Undergraduate Learning Outcomes


Indiana University Indianapolis


Center for Teaching and Learning


Writing and Assessing Student Learning Outcomes

By the end of a program of study, what do you want students to be able to do? How can your students demonstrate the knowledge the program intended them to learn? Student learning outcomes are statements developed by faculty that answer these questions. Typically, student learning outcomes (SLOs) describe the knowledge, skills, attitudes, behaviors, or values students should be able to demonstrate at the end of a program of study. A combination of methods may be used to assess student attainment of learning outcomes.

Characteristics of Student Learning Outcomes (SLOs)

- Describe what students should be able to demonstrate, represent or produce upon completion of a program of study (Maki, 2010)

Student learning outcomes also:

- Should align with the institution’s curriculum and co-curriculum outcomes (Maki, 2010)

- Should be collaboratively authored and collectively accepted (Maki, 2010)

- Should incorporate or adapt professional organizations’ outcome statements when they exist (Maki, 2010)

- Can be quantitatively and/or qualitatively assessed during a student’s studies (Maki, 2010)

Examples of Student Learning Outcomes

The following examples of student learning outcomes are too general and would be very hard to measure (T. Banta, personal communication, October 20, 2010):

- will appreciate the benefits of exercise science.

- will understand the scientific method.

- will become familiar with correct grammar and literary devices.

- will develop problem-solving and conflict resolution skills.

The following examples, while better, are still general and would again be hard to measure (T. Banta, personal communication, October 20, 2010):

- will appreciate exercise as a stress reduction tool.

- will apply the scientific method in problem solving.

- will demonstrate the use of correct grammar and various literary devices.

- will demonstrate critical thinking skills, such as problem solving as it relates to social issues.

The following examples are specific and would be fairly easy to measure with an appropriate assessment measure (T. Banta, personal communication, October 20, 2010):

- will explain how the science of exercise affects stress.

- will design a grounded research study using the scientific method.

- will demonstrate the use of correct grammar and various literary devices in creating an essay.

- will analyze and respond to arguments about racial discrimination.

Importance of Action Verbs and Examples from Bloom’s Taxonomy

- Action verbs result in overt behavior that can be observed and measured.

- Verbs that are unclear, and verbs that relate to unobservable or unmeasurable behaviors, should be avoided (e.g., appreciate, understand, know, learn, become aware of, become familiar with). For alternatives, see Bloom’s Taxonomy Action Verbs.

Assessing SLOs

Instructors may measure student learning outcomes directly, by assessing student-produced artifacts and performances, or indirectly, by relying on students’ own perceptions of their learning.

Direct Measures of Assessment

Direct measures of student learning require students to demonstrate their knowledge and skills. They provide tangible, visible and self-explanatory evidence of what students have and have not learned as a result of a course, program, or activity (Suskie, 2004; Palomba & Banta, 1999). Examples of direct measures include:

- Objective tests

- Presentations

- Classroom assignments

This example of a Student Learning Outcome (SLO) from psychology could be assessed by an essay, case study, or presentation: Students will analyze current research findings in the areas of physiological psychology, perception, learning, abnormal and social psychology.

Indirect Measures of Assessment

Indirect measures of student learning capture students’ perceptions of their knowledge and skills; they supplement direct measures of learning by providing information about how and why learning is occurring. Examples of indirect measures include:

- Self-assessment

- Peer feedback

- End of course evaluations

- Questionnaires

- Focus groups

- Exit interviews

Using the SLO example from above, an instructor could add questions to an end-of-course evaluation asking students to self-assess their ability to analyze current research findings in the areas of physiological psychology, perception, learning, abnormal and social psychology. Doing so would provide an indirect measure of the same SLO.

Using both direct and indirect measures:

- Balances the limitations inherent in using only one method (Maki, 2004).

- Provides students the opportunity to demonstrate learning in an alternative way (Maki, 2004).

- Contributes to an overall interpretation of student learning at both institutional and programmatic levels.

- Values the many ways students learn (Maki, 2004).

Bloom, B. (1956). A taxonomy of educational objectives: The classification of educational goals. Handbook I: Cognitive domain. New York: McKay.

Maki, P.L. (2004). Assessing for learning: Building a sustainable commitment across the institution. Sterling, VA: Stylus.

Maki, P.L. (2010). Assessing for learning: Building a sustainable commitment across the institution (2nd ed.). Sterling, VA: Stylus.

Palomba, C.A., & Banta, T.W. (1999). Assessment essentials: Planning, implementing, and improving assessment in higher education. San Francisco: Jossey-Bass.

Suskie, L. (2004). Assessing student learning: A common sense guide. Bolton, MA: Anker Publishing.

Revised by Doug Jerolimov (April 2016)

Helpful Links

- Revised Bloom's Taxonomy Action Verbs

- Fink's Taxonomy

Related Guides

- Creating a Syllabus

- Assessing Student Learning Outcomes


Creating Learning Outcomes


A learning outcome is a concise description of what students will learn and how that learning will be assessed. Having clearly articulated learning outcomes can make designing a course, assessing student learning progress, and facilitating learning activities easier and more effective. Learning outcomes can also help students regulate their learning and develop effective study strategies.

Defining the terms

Educational research uses a number of terms for this concept, including learning goals, student learning objectives, session outcomes, and more.

In alignment with other Stanford resources, we will use learning outcomes as a general term for what students will learn and how that learning will be assessed. This includes both goals and objectives. We will use learning goals to describe general outcomes for an entire course or program. We will use learning objectives when discussing more focused outcomes for specific lessons or activities.

For example, a learning goal might be “By the end of the course, students will be able to develop coherent literary arguments.”

Whereas a learning objective might be, “By the end of Week 5, students will be able to write a coherent thesis statement supported by at least two pieces of evidence.”

Learning outcomes benefit instructors

Learning outcomes can help instructors in a number of ways by:

- Providing a framework and rationale for making course design decisions about the sequence of topics and instruction, content selection, and so on.

- Communicating to students what they must do to make progress in learning in your course.

- Clarifying your intentions to the teaching team, course guests, and other colleagues.

- Providing a framework for transparent and equitable assessment of student learning.

- Making outcomes concerning values and beliefs, such as dedication to discipline-specific values, more concrete and assessable.

- Making inclusion and belonging explicit and integral to the course design.

Learning outcomes benefit students

Clearly articulated learning outcomes can also help guide and support students in their own learning by:

- Clearly communicating the range of learning students will be expected to acquire and demonstrate.

- Helping learners concentrate on the areas that they need to develop to progress in the course.

- Helping learners monitor their own progress, reflect on the efficacy of their study strategies, and seek out support or better strategies. (See Promoting Student Metacognition for more on this topic.)

Choosing learning outcomes

When writing learning outcomes to represent the aims and practices of a course or even a discipline, consider:

- What is the big idea that you hope students will still retain from the course even years later?

- What are the most important concepts, ideas, methods, theories, approaches, and perspectives of your field that students should learn?

- What are the most important skills that students should develop and be able to apply in and after your course?

- What would students need to have mastered earlier in the course or program in order to make progress later or in subsequent courses?

- What skills and knowledge would students need if they were to pursue a career in this field or contribute to communities impacted by this field?

- What values, attitudes, and habits of mind and affect would students need if they are to pursue a career in this field or contribute to communities impacted by this field?

- How can the learning outcomes span a wide range of skills that serve students with differing levels of preparation?

- How can learning outcomes offer a range of assessment types to serve a diverse student population?

Use learning taxonomies to inform learning outcomes

Learning taxonomies describe how a learner’s understanding develops from simple to complex when learning different subjects or tasks. They are useful here for identifying any foundational skills or knowledge needed for more complex learning, and for matching observable behaviors to different types of learning.

Bloom’s Taxonomy

Bloom’s Taxonomy is a hierarchical model and includes three domains of learning: cognitive, psychomotor, and affective. In this model, learning occurs hierarchically, as each skill builds on previous skills towards increasingly sophisticated learning. For example, in the cognitive domain, learning begins with remembering, then understanding, applying, analyzing, evaluating, and lastly creating.

Taxonomy of Significant Learning

The Taxonomy of Significant Learning is a non-hierarchical and integral model of learning. It describes learning as a meaningful, holistic, and integral network. This model has six intersecting domains: knowledge, application, integration, human dimension, caring, and learning how to learn.

See our resource on Learning Taxonomies and Verbs for a summary of these two learning taxonomies.

How to write learning outcomes

Writing learning outcomes can be made easier by using the ABCD approach. This strategy identifies four key elements of an effective learning outcome: Audience, Behavior, Condition, and Degree.

Consider the following example: Students (audience) will be able to label and describe (behavior), given a diagram of the eye at the end of this lesson (condition), all seven extraocular muscles and at least two of their actions (degree).

Audience

Define who will achieve the outcome. Outcomes commonly include phrases such as “After completing this course, students will be able to...” or “After completing this activity, workshop participants will be able to...”

Keeping your audience in mind as you develop your learning outcomes helps ensure that they are relevant and centered on what learners must achieve. Make sure the learning outcome is focused on the student’s behavior, not the instructor’s. If the outcome describes an instructional activity or topic, then it is too focused on the instructor’s intentions and not the students’.

Try to understand your audience so that you can better align your learning goals or objectives to meet their needs. While every group of students is different, certain generalizations about their prior knowledge, goals, motivation, and so on might be made based on course prerequisites, their year-level, or majors.

Behavior

Use action verbs to describe observable behavior that demonstrates mastery of the goal or objective. Depending on the skill, knowledge, or domain of the behavior, you might select a different action verb. Particularly for more specific learning objectives, avoid verbs that are vague or difficult to assess, such as “understand”, “appreciate”, or “know”.

The behavior usually completes the audience phrase “students will be able to…” with a specific action verb that learners can interpret without ambiguity. We recommend beginning learning goals with a phrase that makes it clear that students are expected to actively contribute to progressing towards a learning goal. For example, “through active engagement and completion of course activities, students will be able to…”

Example action verbs

Consider the following examples of verbs from different learning domains of Bloom’s Taxonomy. Generally speaking, items listed at the top under each domain are more suitable for advanced students, and items listed at the bottom are more suitable for novice or beginning students. Using verbs and associated skills from all three domains, regardless of your discipline area, can benefit students by diversifying the learning experience.

For the cognitive domain:

- Create, investigate, design

- Evaluate, argue, support

- Analyze, compare, examine

- Solve, operate, demonstrate

- Describe, locate, translate

- Remember, define, duplicate, list

For the psychomotor domain:

- Invent, create, manage

- Articulate, construct, solve

- Complete, calibrate, control

- Build, perform, execute

- Copy, repeat, follow

For the affective domain:

- Internalize, propose, conclude

- Organize, systematize, integrate

- Justify, share, persuade

- Respond, contribute, cooperate

- Capture, pursue, consume

Often we develop broad goals first, then break them down into specific objectives. For example, if a goal is for learners to be able to compose an essay, break it down into several objectives, such as forming a clear thesis statement, coherently ordering points, following a salient argument, gathering and quoting evidence effectively, and so on.

Condition

State the conditions, if any, under which the behavior is to be performed. Consider the following conditions:

- Equipment or tools, such as using a laboratory device or a specified software application.

- Situation or environment, such as in a clinical setting, or during a performance.

- Materials or format, such as written text, a slide presentation, or using specified materials.

The level of specificity for conditions within an objective may vary and should be appropriate to the broader goals. If the conditions are implicit or understood as part of the classroom or assessment situation, it may not be necessary to state them.

When articulating the conditions in learning outcomes, ensure that they are sensorily and financially accessible to all students.

Degree

Degree states the standard or criterion for acceptable performance. The degree should be related to real-world expectations: what standard should the learner meet to be judged proficient? For example:

- With 90% accuracy

- Within 10 minutes

- Suitable for submission to an edited journal

- Obtain a valid solution

- In a 100-word paragraph

The specificity of the degree will vary. You might take into consideration professional standards, what a student would need to succeed in subsequent courses in a series, or what is required by you as the instructor to accurately assess learning when determining the degree. Where the degree is easy to measure (such as pass or fail) or accuracy is not required, it may be omitted.

Characteristics of effective learning outcomes

The acronym SMART is useful for remembering the characteristics of an effective learning outcome.

- Specific: clear and distinct from others.

- Measurable: identifies observable student action.

- Attainable: suitably challenging for students in the course.

- Related: connected to other objectives and student interests.

- Time-bound: likely to be achieved and keep students on task within the given time frame.

Examples of effective learning outcomes

These examples generally follow the ABCD and SMART guidelines.

Arts and Humanities

Learning goals

Upon completion of this course, students will be able to apply critical terms and methodology in completing a written literary analysis of a selected literary work.

At the end of the course, students will be able to demonstrate oral competence with the French language in pronunciation, vocabulary, and language fluency in a 10-minute in-person interview with a member of the teaching team.

Learning objectives

After completing lessons 1 through 5, given images of specific works of art, students will be able to identify the artist and artistic period and describe the works’ historical, social, and philosophical contexts in a two-page written essay.

By the end of this course, students will be able to describe the steps in planning a research study, including identifying and formulating relevant theories, generating alternative solutions and strategies, and applying them to a hypothetical case, in a written research proposal.

At the end of this lesson, given a diagram of the eye, students will be able to label all of the extraocular muscles and describe at least two of their actions.

Using chemical datasets gathered at the end of the first lab unit, students will be able to create plots and trend lines of that data in Excel and make quantitative predictions about future experiments.

- How to Write Learning Goals, Evaluation and Research, Student Affairs (2021).

- SMART Guidelines, Center for Teaching and Learning (2020).

- Learning Taxonomies and Verbs, Center for Teaching and Learning (2021).

Writing to Think: Critical Thinking and the Writing Process

“Writing is thinking on paper.” (Zinsser, 1976, p. vii)

Google the term “critical thinking.” How many hits are there? On the day this tutorial was completed, Google found about 65,100,000 results in 0.56 seconds. That is an impressive number, and it grows every day, in part because the nation’s educators, business leaders, and political representatives worry about the level of critical thinking skills among today’s students and workers.

What is Critical Thinking?

Simply put, critical thinking is sound thinking. Critical thinkers work to delve beneath the surface of sweeping generalizations, biases, clichés, and other quick observations that characterize ineffective thinking. They are willing to consider points of view different from their own, seek and study evidence and examples, root out sloppy and illogical argument, discern fact from opinion, embrace reason over emotion or preference, and change their minds when confronted with compelling reasons to do so. In sum, critical thinkers are flexible thinkers equipped to become active and effective spouses, parents, friends, consumers, employees, citizens, and leaders. Every area of life, in other words, can be positively affected by strong critical thinking.

Released in January 2011, an important study of college students over four years concluded that by graduation “large numbers [of American undergraduates] didn’t learn the critical thinking, complex reasoning and written communication skills that are widely assumed to be at the core of a college education” (Rimer, 2011, para. 1). The University designs curriculum, creates support programs, and hires faculty to help ensure you won’t be one of the students “[showing] no significant gains in . . . ‘higher order’ thinking skills” (Rimer, 2011, para. 4). One way the University works to help you build those skills is through writing projects.

Writing and Critical Thinking

Say the word “writing” and most people think of a completed publication. But say the word “writing” to writers, and they will likely think of the process of composing. Most writers would agree with novelist E. M. Forster, who wrote, “How can I know what I think until I see what I say?” (Forster, 1927, p. 99). Experienced writers know that the act of writing stimulates thinking.

Inexperienced and experienced writers have very different understandings of composition. Novice writers often make the mistake of believing they have to know what they’re going to write before they can begin writing. They often compose a thesis statement before asking questions or conducting research. In the course of their reading, they might even disregard material that counters their pre-formed ideas. This is not writing; it is recording.

In contrast, experienced writers begin with questions and work to discover many different answers before settling on those that are most convincing. They know that the act of putting words on paper or a computer screen helps them invent thought and content. Rather than trying to express what they already think, they express what the act of writing leads them to think as they put down words. More often than not, in other words, experienced writers write their way into ideas, which they then develop, revise, and refine as they go.

What has this notion of writing to do with critical thinking? Everything.

Consider the steps of the writing process: prewriting, outlining, drafting, revising, editing, seeking feedback, and publishing. These steps are not followed in a fixed or strict order; instead, the effective writer knows that it may be necessary to return to an earlier step while writing. For example, in the process of revision, a writer may realize that the order of ideas is unclear, and a new outline may help that writer re-order details. As they write, the writer considers and reconsiders the effectiveness of the work.

The writing process, then, is not just a mirror image of the thinking process: it is the thinking process. Confronted with a topic, an effective critical thinker/writer:

- asks questions

- seeks answers

- evaluates evidence

- questions assumptions

- tests hypotheses

- makes inferences

- employs logic

- draws conclusions

- predicts readers’ responses

- creates order

- drafts content

- seeks others’ responses

- weighs feedback

- criticizes their own work

- revises content and structure

- seeks clarity and coherence

Example of Composition as Critical Thinking

“Good writing is fueled by unanswerable questions” (Lane, 1993, p. 15).

Imagine that you have been asked to write about a hero or heroine from history. You must explain what challenges that individual faced and how they conquered them. Now imagine that you decide to write about Rosa Parks and her role in the modern Civil Rights movement. Take a moment and survey what you already know. She refused to get up out of her seat on a bus so a White man could sit in it. She was arrested. As a result, Blacks in Montgomery protested, influencing the Montgomery Bus Boycott. Martin Luther King, Jr. took up leadership of the cause, and ultimately a movement was born.

Is that really all there is to Rosa Parks’s story? What questions might a thoughtful writer ask? Here are a few:

- Why did Rosa Parks refuse to get up on that particular day?

- Was hers a spontaneous or planned act of defiance?

- Did she work? Where? Doing what?

- Had any other Black person refused to get up for a White person?

- What happened to that individual or those individuals?

- Why hadn’t that person or those persons received the publicity Parks did?

- Was Parks active in Civil Rights before that day?

- How did she learn about civil disobedience?

Even just these few questions could lead to potentially rich information.

Factual information would not be enough, however, to satisfy an assignment that asks for an interpretation of that information. The writer’s job for the assignment is to convince the reader that Parks was a heroine; in this way the writer must make an argument and support it. The writer must establish standards of heroic behavior. More questions arise:

- What is heroic action?

- What are the characteristics of someone who is heroic?

- What do heroes value and believe?

- What are the consequences of a hero’s actions?

- Why do they matter?

Now the writer has even more research and more thinking to do.

By the time they have raised questions and answered them, raised more questions and answered them, and so on, they are ready to begin writing. But even then, new ideas will arise in the course of planning and drafting, inevitably leading the writer to more research and thought, to more composition and refinement.

Ultimately, at every step of composing a project, the writer is engaged in critical thinking because the effective writer examines the work as they develop it.

Why Writing to Think Matters

Writing practice builds critical thinking, which empowers people to “take charge of [their] own minds” so they “can take charge of [their] own lives . . . and improve them, bringing them under [their] self command and direction” (Foundation for Critical Thinking, 2020, para. 12). Writing is a way of coming to know and understand the self and the changing world, enabling individuals to make decisions that benefit themselves, others, and society at large. Your knowledge alone – of law, medicine, business, or education, for example – will not be enough to meet future challenges. You will be tested by new, unexpected circumstances, and when they arise, the open-mindedness, flexibility, reasoning, discipline, and discernment you have learned through writing practice will help you meet those challenges successfully.

Forster, E. M. (1927). Aspects of the novel. Harcourt, Brace & Company.

The Foundation for Critical Thinking. (2020, June 17). Our concept and definition of critical thinking. https://www.criticalthinking.org/pages/our-concept-of-critical-thinking/411

Lane, B. (1993). After the end: Teaching and learning creative revision. Heinemann.

Rimer, S. (2011, January 18). Study: Many college students not learning to think critically. The Hechinger Report. https://www.mcclatchydc.com/news/nation-world/national/article24608056.html

Zinsser, W. (1976). On writing well: The classic guide to writing nonfiction. HarperCollins.


Learning Through Writing - Strategies for Educators

An increased focus on writing is a catalyst for academic success.

How does Writing improve student outcomes?

In numerous educational settings, the scope of writing is often confined to worksheets, short responses, and the occasional essay assignment. This narrow approach overlooks the profound benefits of integrating writing across all areas of study. Fostering literacy development is a collective endeavor that spans all subjects, with writing acting as a pivotal tool for bolstering students' comprehension skills. There is a clear correlation between regular writing exercises and notable advancements in students' academic performance, underscoring the value of encouraging students to articulate their thoughts in writing.

Increase Critical Thinking through Writing addresses the vital role of writing as a comprehensive literacy strategy that improves understanding across disciplines. This approach integrates engaging writing tasks into every lesson, aiming to raise academic performance by prompting students to reflect on the subject matter and articulate their insights in writing.

Key Takeaways

Writing increases learning.

Learn how Scaffolding Strategies offer a dynamic approach to differentiating instruction, ensuring that each student's immediate learning needs are met. Explore how this tailored temporary support enhances understanding, engagement, and overall academic success.

Essential to Tier 1 Instruction

Recognize the dual nature of writing: it not only lays a solid groundwork for assimilating and understanding new concepts but also serves as an essential component of the learning process.

Learning Through Writing: 4 Key Writing Principles

Embrace writing as a foundational learning practice structured around four key principles: Integrating Writing Across the Curriculum, Expanding Writing Opportunities, Instructing the Writing Process, and Refining Writing with Constructive Feedback.

Varied Implementation

Commit to a widespread application of writing by focusing on two primary methodologies:

- Writing to Learn: Reveal how writing serves as an instrument for deepening comprehension and assimilating new information

- Writing to Inform: Demonstrate the use of writing in effectively conveying information, thereby ensuring clarity and understanding

Increase Reading Comprehension

Understand the symbiotic link between reading and writing, and how writing strengthens literacy and significantly boosts reading skills.

With the right strategies and tools, writing can transform your lessons. Gain practical insights, effective strategies, and an adaptable roadmap for accelerating learning through writing and integrating writing across the curriculum.

Learning Through Writing - PD and Professional Learning Options

At Your School

Schedule a day of Professional Learning at your school. Onsite training with one of our experts provides opportunities for educators to expand their knowledge, refine teaching techniques, and stay up-to-date with the latest curriculum and instruction innovations. By investing in professional learning, your school can cultivate a culture of continuous learning, enhance teacher effectiveness, and ultimately improve student outcomes.

At Our Training Center

Join us in Asheville, North Carolina, at the Learning-Focused Training Center. With seats limited to 22 attendees, you are guaranteed to have a great experience.

At Your Own Pace

Self-paced learning lets you tailor your professional learning journey to your own goals and preferences, giving you the flexibility and autonomy to master new concepts and refine your skills.

Want to learn more? Book a call at your preferred time.

Contact us today to explore how Increase Critical Thinking with Writing can help meet the needs of all students. Let's work together to improve learning through writing, enhance critical thinking, and empower every student to thrive academically!

Increase Critical Thinking Series

If you're exploring ways to transform your teaching methods and enrich the learning experience, don't stop at Increase Critical Thinking with Writing! Venture further into our Increase Critical Thinking Professional Learning Series, each part of which is designed to address a different aspect of teaching and learning through critical thinking strategies.


Writing Learning Outcomes

The promise of your course.

Many educators feel that a key to effective and efficient course design is to develop and understand the PROMISE of your course. Why should a student take this course? What will they “get out” of it? Will they gain a more critical or informed way of appreciating the world? A set of skills applicable to their future career? Mastery of a set of concepts that are foundational for more advanced learning?

Students appreciate knowing why they are being asked to learn something. According to Mary Clement’s recent article Three Steps to Better Course Evaluations, “I recommend making invisible expectations explicit. I regularly start class by saying, ‘We are learning this because …’ When students understand why and how the material is relevant to them, they find more motivation to study and end up rating the course more highly.”

If the promise of a course is not clear, it’s often more difficult to articulate learning outcomes that are based on student learning rather than content areas.

For many years, courses were designed based on content areas, by listing the topic areas to be “covered” and breaking that down by the number of class meetings. Today, learning is understood as a much more complex process, and much of the content that used to be available only in the college classroom is now widely available online for free. The classroom is no longer the place to dispense information, but rather the place to help students learn how to use, apply, and understand information.

Today, course design is instead achieved by focusing on student learning goals: what college instructors want their students to learn, to know, and to be able to do by the end of the course. Instructors are asked to focus their course design efforts on what students are doing in class at least as much as on what the instructor is doing.

Once the promise of a course is understood and articulated, it is easier to talk about the student learning goals, which are typically written out in the form of learning outcomes.

The task of writing learning outcomes often causes confusion and frustration among faculty members. It can be difficult to articulate in just a few statements all the complex learning that we want to occur in our courses. It is easy to get caught up in the distinctions between terms such as objectives, goals, measurable learning outcomes, or terminal course objectives. However, the bottom line is that it is useful for both instructors and students when the general desired outcomes of a course are stated and shared. Here are some tips and resources about writing learning outcomes.

- Start with the end in mind. What are the main goals of your course for students? What is it that students should be expected to do, or to know, or to apply, by the end of your course? What are those main concepts you want students to retain years after taking your course?

- Ultimately, try to write the objectives from the student’s perspective and tie them in to the promise of your course. Rather than just focusing on the content areas, what do you want the students to be able to do, to understand, or to know, and why is it important that they do so?

- Most course outcomes consist of a mix of knowledge, skills, and attitudes. Think about not only what knowledge students should gain, but what skills they will be developing (critical thinking skills, creative thinking skills, application skills, psychomotor skills, etc.) and what attitudes they might be changing.

- Clear learning outcomes help you align your content, assignments, and grading practices and help you focus on the essential components of the course, rather than trying to fit everything in.

- Connect your course outcomes with your program’s outcomes, your graduate degree outcomes, or the University of Denver Undergraduate Student Outcomes.

Learning Outcome Examples

(Adapted from Walvoord and Anderson, Effective Grading, 1998)

- Western Civilization I…describe basic historical events and people, and argue as a historian does by using historical data as evidence for a position

- Economics…use economic theory to explain government policies and their effects

- Physics…explain physical concepts in your own words

- Speech Pathology…synthesize information from various sources to arrive at intervention tactics for the client

MATC 1200: Calculus for Business and Social Sciences

Generously shared by Deb Carney, Dept of Mathematics

Students should be able to:

- Relate the concept of the limit to the definition of the derivative

- Describe the concept of the derivative as an instantaneous rate of change

- Apply the concepts of the limit and the derivative to solve calculus problems

- Interpret real-world situations in terms of related calculus concepts

- Use and apply mathematical models including logarithmic and exponential functions

Additional Resources

Many instructors find the resources below to be helpful when writing learning outcomes.

- An overview of Bloom’s Taxonomy

- Description of the Revised Bloom’s Taxonomy with sample verbs

- A newer taxonomy comes from L. Dee Fink’s Creating Significant Learning Experiences

The main idea is that most courses should focus on more than just knowledge/remembering outcomes and strive to develop more complex thinking skills among students.

Contact the OTL if you would like someone to review and assist you in writing course learning outcomes.

2150 E. Evans Ave., University of Denver, Anderson Academic Commons, Room 350, Denver, CO 80208

Copyright ©2019 University of Denver Office of Teaching and Learning | All rights reserved | The University of Denver is an equal opportunity affirmative action institution.


Learning to Improve: Using Writing to Increase Critical Thinking Performance in General Education Biology

- Ian J. Quitadamo

- Martha J. Kurtz

*Department of Biological Sciences, Central Washington University, Ellensburg, WA 98926-7537; and


Department of Chemistry, Central Washington University, Ellensburg, WA 98926-7539

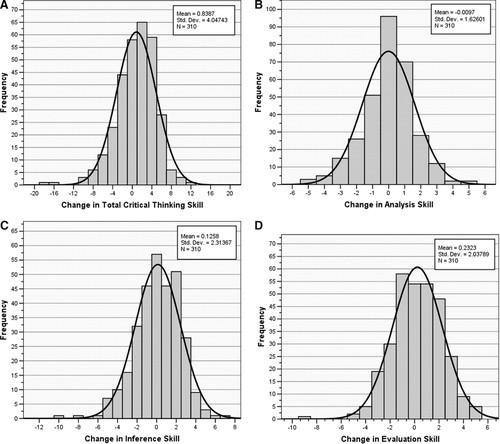

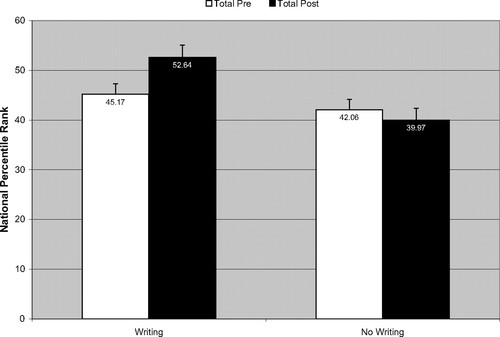

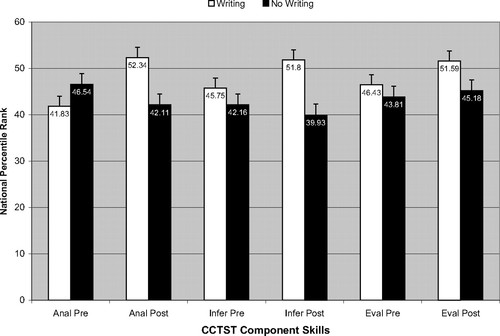

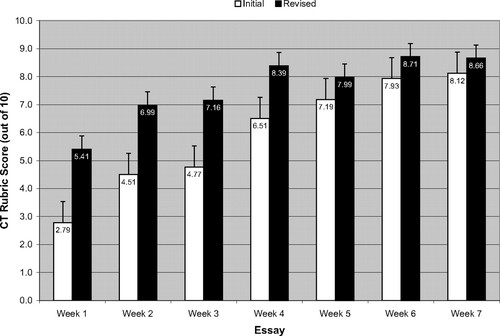

Increasingly, national stakeholders express concern that U.S. college graduates cannot adequately solve problems and think critically. As a set of cognitive abilities, critical thinking skills provide students with tangible academic, personal, and professional benefits that may ultimately address these concerns. As an instructional method, writing has long been perceived as a way to improve critical thinking. In the current study, the researchers compared critical thinking performance of students who experienced a laboratory writing treatment with those who experienced traditional quiz-based laboratory in a general education biology course. The effects of writing were determined within the context of multiple covariables. Results indicated that the writing group significantly improved critical thinking skills whereas the nonwriting group did not. Specifically, analysis and inference skills increased significantly in the writing group but not the nonwriting group. Writing students also showed greater gains in evaluation skills; however, these were not significant. In addition to writing, prior critical thinking skill and instructor significantly affected critical thinking performance, whereas other covariables such as gender, ethnicity, and age were not significant. With improved critical thinking skill, general education biology students will be better prepared to solve problems as engaged and productive citizens.

INTRODUCTION

A national call to improve critical thinking in science.

In the past several years, an increasing number of national reports indicate a growing concern over the effectiveness of higher education teaching practices and the decreased science (and math) performance of U.S. students relative to other industrialized countries (Project Kaleidoscope, 2006). A variety of national stakeholders, including business and educational leaders, politicians, parents, and public agencies, have called for long-term transformation of the K–20 educational system to produce graduates who are well trained in science, can engage intelligently in global issues that require local action, and in general are better able to solve problems and think critically. Specifically, business leaders are calling for graduates who possess advanced analysis and communication skills, for instructional methods that improve lifelong learning, and ultimately for an educational system that builds a nation of innovative and effective thinkers (Business-Higher Education Forum and American Council on Education, 2003). Education leaders are similarly calling for institutions of higher education to produce graduates who think critically, communicate effectively, and employ lifelong learning skills to address important scientific and civic issues (Association of American Colleges and Universities [AACU], 2005).

Many college faculty consider critical thinking to be one of the most important indicators of student learning quality. In its 2005 national report, the AACU indicated that 93% of higher education faculty perceived analytical and critical thinking to be an essential learning outcome (AACU, 2005), whereas 87% of undergraduate students indicated that college experiences contributed to their ability to think analytically and creatively. This same AACU report showed that only 6% of undergraduate seniors demonstrated critical thinking proficiency based on Educational Testing Service standardized assessments from 2003 to 2004. During the same time frame, data from the ACT Collegiate Assessment of Academic Proficiency test showed a similar trend, with undergraduates improving their critical thinking less than 1 SD from freshman to senior year. Thus, it appears a discrepancy exists between faculty expectations of critical thinking and students' ability to perceive and demonstrate critical thinking proficiency using standardized assessments (AACU, 2005).

Teaching that supports the development of critical thinking skills has become a cornerstone of nearly every major educational objective since the Department of Education released its six goals for the nation's schools in 1990. In particular, goal three of the National Goals for Education stated that more students should be able to reason, solve problems, and apply knowledge. Goal six specifically stated that college graduates must be able to think critically (Office of Educational Research and Improvement, 1991). Since 1990, American education has tried—with some success—to make a fundamental shift from traditional teacher-focused instruction to more student-centered constructivist learning that encourages discovery, reflection, and in general is thought to improve student critical thinking skill. National science organizations have supported this trend with recommendations to improve the advanced thinking skills that support scientific literacy (American Association for Higher Education, 1989; National Research Council, 1995; National Science Foundation, 1996).

More recent reports describe the need for improved biological literacy as well as international competitiveness (Bybee and Fuchs, 2006; Klymkowsky, 2006). Despite the collective call for enhanced problem solving and critical thinking, educators, researchers, and policymakers are discovering a lack of evidence in existing literature for methods that measurably improve critical thinking skills (Tsui, 1998, 2002). As more reports call for improved K–20 student performance, it is essential that research-supported teaching and learning practices be used to better help students develop the cognitive skills that underlie effective science learning (Malcom et al., 2005; Bybee and Fuchs, 2006).

Critical Thinking

Although they are not always transparent to many college students, the academic and personal benefits of critical thinking are well established; students who can think critically tend to get better grades, are often better able to use reasoning in daily decisions (U.S. Department of Education, 1990), and are generally more employable (Carnevale and American Society for Training and Development, 1990; Holmes and Clizbe, 1997; National Academy of Sciences, 2005). By focusing on instructional efforts that develop critical thinking skills, it may be possible to increase student performance while satisfying national stakeholder calls for educational improvement and increased ability to solve problems as engaged and productive citizens.

Although academics and business professionals consider critical thinking skill to be a crucial outcome of higher education, many would have difficulty defining exactly what critical thinking is. Historically, there has been little agreement on how to conceptualize critical thinking. Of the literally dozens of definitions that exist, one of the most organized efforts to define (and measure) critical thinking emerged from research done by Peter Facione and others in the early 1990s. Their consensus work, referred to as the Delphi report, was accomplished by a group of 46 leading theorists, teachers, and critical thinking assessment specialists from a variety of academic and business disciplines (Facione and American Philosophical Association, 1990). Initial results from the Delphi report were later confirmed in a national survey and replication study (Jones et al., 1995). In short, the Delphi panel expert consensus describes critical thinking as a “process of purposeful self-regulatory judgment that drives problem-solving and decision-making” (Facione and American Philosophical Association, 1990). This definition implies that critical thinking is an intentional, self-regulated process that provides a mechanism for solving problems and making decisions based on reasoning and logic, which is particularly useful when dealing with issues of national and global significance.

The Delphi conceptualization of critical thinking encompasses several cognitive skills that include: 1) analysis (the ability to break a concept or idea into component pieces in order to understand its structure and inherent relationships), 2) inference (the skills used to arrive at a conclusion by reconciling what is known with what is unknown), and 3) evaluation (the ability to weigh and consider evidence and make reasoned judgments within a given context). Other critical thinking skills that are similarly relevant to science include interpretation, explanation, and self-regulation (Facione and American Philosophical Association, 1990). The concept of critical thinking includes behavioral tendencies or dispositions as well as cognitive skills (Ennis, 1985); these include the tendency to seek truth, to be open-minded, to be analytical, to be orderly and systematic, and to be inquisitive (Facione and American Philosophical Association, 1990). These behavioral tendencies also align closely with behaviors considered to be important in science. Thus, an increased focus on teaching critical thinking may directly benefit students who are engaged in science.

Prior research on critical thinking indicates that students' behavioral dispositions do not change in the short term (Giancarlo and Facione, 2001), but cognitive skills can be developed over a relatively short period of time (Quitadamo, Brahler, and Crouch, unpublished results). In their longitudinal study of behavioral disposition toward critical thinking, Giancarlo and Facione (2001) discovered that undergraduate critical thinking disposition changed significantly after two years. Specifically, significant changes in student tendency to seek truth and confidence in thinking critically occurred during the junior and senior years. Also, females tended to be more open-minded and have more mature judgment than males (Giancarlo and Facione, 2001). Although additional studies are necessary to confirm results from the Giancarlo study, existing research seems to indicate that changes in undergraduate critical thinking disposition are measured in years, not weeks.

In contrast to behavioral disposition, prior research indicates that critical thinking skills can be measurably changed in weeks. In their study of undergraduate critical thinking skill in university science and math courses, Quitadamo, Brahler, and Crouch (unpublished results) showed that critical thinking skills changed within 15 wk in response to Peer Led Team Learning (a national best practice for small group learning). This preliminary study provided some evidence that undergraduate critical thinking skills could be measurably improved within an academic semester, but provided no information about whether critical thinking skills could be changed during a shorter academic quarter. It was also unclear whether the development of critical thinking skills was a function of chronological time or whether it was related to instructional time.

Numerous studies provide anecdotal evidence for pedagogies that improve critical thinking, but much of the existing research relies on student self-report, which limits the scope of interpretation. From the literature it is clear that, although critical thinking skills are some of the most valued outcomes of a quality education, additional research investigating the effects of instructional factors on critical thinking performance is necessary (Tsui, 1998, 2002).

Writing and Critical Thinking

Writing has been widely used as a tool for communicating ideas, but less is known about how writing can improve the thinking process itself (Rivard, 1994; Klein, 2004). Writing is thought to be a vehicle for improving student learning (Champagne and Kouba, 1999; Kelly and Chen, 1999; Keys, 1999; Hand and Prain, 2002), but too often is used as a means to regurgitate content knowledge and derive prescribed outcomes (Keys, 1999; Keys et al., 1999). Historically, writing is thought to contribute to the development of critical thinking skills (Kurfiss and Association for the Study of Higher Education, 1988). Applebee (1984) suggested that writing improves thinking because it requires an individual to make his or her ideas explicit and to evaluate and choose among tools necessary for effective discourse. Resnick (1987) stressed that writing should provide an opportunity to think through arguments and that, if used in such a way, could serve as a “cultivator and an enabler of higher order thinking.” Marzano (1991) suggested that writing used as a means to restructure knowledge improves higher-order thinking. In this context, writing may provide opportunity for students to think through arguments and use higher-order thinking skills to respond to complex problems (Marzano, 1991).

Writing has also been used as a strategy to improve conceptual learning. Initial work focused on how the recursive and reflective nature of the writing process contributes to student learning (Applebee, 1984; Langer and Applebee, 1985, 1987; Ackerman, 1993). However, conclusions from early writing-to-learn studies were limited by confounding research designs and mismatches between writing activities and measures of student learning (Ackerman, 1993). Subsequent work has focused on how writing within disciplines helps students to learn content and how to think. Specifically, writing within disciplines is thought to require deeper analytical thinking (Langer and Applebee, 1987), which is closely aligned with critical thinking.

The influence of writing on critical thinking is less defined in science. Researchers have repeatedly called for more empirical investigations of writing in science; however, few provide such evidence (Rivard, 1994; Tsui, 1998; Daempfle, 2002; Klein, 2004). In his extensive review of writing research, Rivard (1994) indicated that gaps in writing research limit its inferential scope, particularly within the sciences. Specifically, Rivard and others indicate that, despite the volume of writing students are asked to produce during their education, they are not learning to use writing to improve their awareness of thinking processes (Resnick, 1987; Howard, 1990). Existing studies are limited because writing has been used either in isolation or outside authentic classroom contexts. Factors like gender, ethnicity, and academic ability that are not directly associated with writing but may nonetheless influence its effectiveness have also not been sufficiently accounted for in previous work (Rivard, 1994).

A more recent review by Daempfle (2002) similarly indicates the need for additional research to clarify relationships between writing and critical thinking in science. In his review, Daempfle identified nine empirical studies that generally support the hypothesis that students who experience writing (and other nontraditional teaching methods) have higher reasoning skills than students who experience traditional science instruction. Of the relatively few noninstructional variables identified in those studies, gender and major did not affect critical thinking performance; however, the amount of time spent on and the explicitness of instruction to teach reasoning skills did affect overall critical thinking performance. Furthermore, the use of writing and other nontraditional teaching methods did not appear to negatively affect content knowledge acquisition (Daempfle, 2002). Daempfle justified his conclusions by systematically describing the methodological inconsistencies for each study. Specifically, incomplete sample descriptions, the use of instruments with insufficient validity and reliability, the absence of suitable comparison groups, and the lack of statistical covariate analyses limit the scope and generalizability of existing studies of writing and critical thinking (Daempfle, 2002).

Writing in the Biological Sciences

The conceptual nature and reliance on the scientific method as a means of understanding make the field of biology a natural place to teach critical thinking through writing. Some work has been done in this area, with literature describing various approaches to writing in the biological sciences, ranging from linked biology and English courses to writing across the biology curriculum and directed use of writing to improve reasoning in biology courses (Ebert-May et al., 1997; Holyoak, 1998; Taylor and Sobota, 1998; Steglich, 2000; Lawson, 2001; Kokkala and Gessell, 2003; Tessier, 2006). In their work on integrated biology and English, Taylor and Sobota (1998) discussed several problem areas that affected both biology and English students, including anxiety and frustration associated with writing, difficulty expressing thoughts clearly and succinctly, and a tendency to have strong negative responses to writing critique. Although the authors delineate the usefulness of several composition strategies for writing in biology (Taylor and Sobota, 1998), it was unclear whether student data were used to support their recommendations. Kokkala and Gessell (2003) used English students to evaluate articles written by biology students. Biology students first reflected on initial editorial comments made by English students, and then resubmitted their work for an improved grade. In turn, English students had to justify their editorial comments with written work of their own. Qualitative results generated from a list of reflective questions at the end of the writing experience seemed to indicate that both groups of students improved editorial skills and writing logic. However, no formal measures of student editorial skill were collected before biology-English student collaboration, so no definitive conclusions on the usefulness of this strategy could be made.

Taking a slightly different tack, Steglich (2000) informally assessed student attitudes in nonmajors biology courses and noted that writing produced positive changes in student attitudes toward biology. However, the author acknowledged that this work was not a research study. Finally, Tessier (2006) showed that students enrolled in a nonmajors ecology course significantly improved their technical writing skills and committed fewer errors of fact regarding environmental issues in response to a writing treatment. Attitudes toward environmental issues also improved (Tessier, 2006). Although this study surveyed students at the beginning and end of the academic term and also tracked student progress during the quarter, instrument validity and reliability were not reported. The generalizability of the results was further limited by an overreliance on student self-reports and a small sample size.

Each of the studies described above peripherally supports a relationship between writing and critical thinking. Results from a relatively recent study, although not explicitly an investigation of critical thinking, support a stronger connection between writing and reasoning ability (Daempfle, 2002). Ebert-May et al. (1997) used a modified learning cycle instructional method and small group collaboration to increase reasoning ability in general education biology students. A quasi-experimental pretest/posttest control group design was used on a comparatively large sample of students, and considerable thought was given to controlling extraneous variables across the treatment and comparison groups. A multifaceted assessment strategy based on writing, standardized tests, and student interviews was used to evaluate student content knowledge and thinking skill both quantitatively and qualitatively. Results indicated that students in the treatment group significantly outperformed control group students on reasoning and process skills as measured by the National Association of Biology Teachers (NABT) content exam. Notably, student content knowledge did not differ significantly between the treatment and control sections, indicating that the development of thinking skill did not occur at the expense of content knowledge (Ebert-May et al., 1997). Interview data indicated that students experiencing the writing and collaboration-based instruction changed how they perceived the construction of biological knowledge and how they applied their reasoning skills. Although the Ebert-May study is one of the more complete investigations of writing and critical thinking to date, several questions remain. Supporting validity and reliability data for the NABT test were not included in the study, making interpretation of the results somewhat less certain. In addition, the NABT exam is designed to assess high school biology performance, not college performance (Daempfle, 2002). Perhaps more importantly, the NABT exam does not explicitly measure critical thinking skills.

Collectively, these studies suggest that additional research is needed to establish a more clearly defined relationship between writing and critical thinking in science (Rivard, 1994; Tsui, 1998, 2002; Daempfle, 2002). The current study addresses some of the gaps in previous work by evaluating the effects of writing on critical thinking performance using relatively large numbers of students, suitable comparison groups, valid and reliable instruments, a sizable set of covariates, and statistical analyses of covariance. This study uses an experimental design similar to that of Ebert-May et al. (1997) but incorporates valid and reliable test measures of critical thinking that can be used both within and across different science disciplines.

Purpose of the Study

There is currently much national discussion about increasing the number of students majoring in various science fields (National Research Council, 2003; National Academy of Sciences, 2005). Although this is a necessary and worthwhile goal, attention should also be focused on improving student performance in general education science, because these students will far outnumber science majors for the foreseeable future. If college instructors want general education students to think critically about science, they will need to use teaching methods that improve student critical thinking performance. In many traditional general education biology courses, students are not expected to work collaboratively, to think about concepts as much as memorize facts, or to develop and support a written thesis or argument. This presents a serious problem when one considers the societal roles that general education students will play as voters, community members, and global citizens. With improved critical thinking skills in science, general education students will be better able to deal with the broad scientific, economic, social, and political issues they will face in the future.

This study addressed the following research questions:

Does writing in laboratory affect critical thinking performance in general education biology?

Does the development of analysis, inference, and evaluation skills differ between students who experience writing versus those who experience traditional laboratory instruction?

What measurable effect do factors like gender, ethnicity, and prior thinking skill have on changes in critical thinking in general education biology?

If critical thinking skills change during an academic quarter, when does that take place?

MATERIALS AND METHODS

Study context.

The study took place at a state-funded regional comprehensive university in the Pacific Northwest. All participants were nonmajor undergraduates who were taking biology to satisfy their general education science requirement. A total of 10 sections of general education biology offered over three academic quarters (one academic year) were included in the study. Four of the 10 sections implemented a writing component during weekly laboratory meetings (N = 158); six traditional quiz-based laboratory sections served as a nonwriting control group (N = 152). Only scores from students who had completed both the initial (pretest) and end-of-quarter (posttest) critical thinking assessments were included in the data analysis. A breakdown of participant demographics for the writing and nonwriting groups is provided in Table 1.

Class and gender distribution (%)

| Sample | Fr | So | Jr | Sr | 2nd Sr | M | F |
|---|---|---|---|---|---|---|---|
| Writing (158) | 44.9 | 33.5 | 15.2 | 3.8 | 2.5 | 38.6 | 61.4 |
| No writing (152) | 53.3 | 28.3 | 7.2 | 9.2 | 2.0 | 38.2 | 61.8 |
| Overall (310) | 49.0 | 31.0 | 11.3 | 6.5 | 2.3 | 38.4 | 61.6 |

Ethnic distribution (%)

| Sample | Caucasian | Hispanic | African American | Native American | Asian | Other |
|---|---|---|---|---|---|---|
| Writing (158) | 84.8 | 1.9 | 2.5 | 0.0 | 4.4 | 6.3 |
| No writing (152) | 81.6 | 4.6 | 1.3 | 1.3 | 5.9 | 5.3 |
| Overall (310) | 83.2 | 3.2 | 1.9 | 0.6 | 5.2 | 5.8 |

Table 1. Demographic profile for the study sample. Values are percentages; n values in parentheses.

The "Other" category includes the "choose not to answer" response.

Each course section included a lecture component offered four times per week for 50 min and a laboratory component that met once a week for 2 h. Course lecture sections were limited to a maximum enrollment of 48 students, with two concurrent lab sections of 24 students. Two different instructors taught the four writing sections, and five other instructors taught the six traditional sections over three consecutive quarters. Each course instructor actively participated in teaching the laboratory with the help of one graduate assistant per lab section (two graduate students per course section). None of the instructors from treatment sections had implemented writing in the laboratory before the start of this study. Writing instructors were chosen on the basis of their dissatisfaction with traditional laboratory teaching methods and their willingness to try something new.

Strong efforts were made to establish equivalency between writing and nonwriting course sections a priori. Course elements that were highly similar included common lecture rooms, the use of similar (in most cases identical) textbooks, and a lab facility coordinated by a single faculty member. More specifically, three similarly appointed lecture rooms outfitted with contemporary instructional technology including dry erase boards, media cabinets, a networked computer, and digital projection were used to teach the nonmajors biology courses. The same nonmajors biology textbook was used across the writing and most of the nonwriting sections. All laboratory sections used a common lab facility and were taught on the same day of the week. Although the order in which specific labs were taught differed among sections, a common laboratory manual containing prescriptive exercises covering the main themes of biology (scientific method, cellular biology and genetics, natural selection and evolution, kingdoms of life, and a mammalian dissection) was used across all writing and nonwriting lab sections.

The primary course differences were the writing component in the laboratory and the amount of time devoted to laboratory activities. Sections that experienced the writing treatment completed the prescriptive lab exercises in the first hour and engaged in writing during the second hour of the lab. Nonwriting sections allocated 2 h for the prescriptive lab exercises and included a traditional laboratory quiz rather than a writing assignment. The degree to which the writing and nonwriting sections included small group collaboration in the laboratory varied, and all course sections differed with regard to individual instructor teaching style. Although all course sections used traditional lecture exams during the quarter to assess content knowledge, the degree to which rote memorization-based exam questions were used to evaluate student learning varied.

Description of the Writing Treatment

On the first day of lecture, students in the writing treatment group were told that their laboratory performance would be evaluated using collaborative essays instead of traditional quizzes. A brief overview of the writing assignments was included in associated course syllabi. During the first laboratory session of the quarter, students were grouped into teams of three or four individuals, and the criteria for completing weekly writing assignments were further explained.

The decision to use collaborative groups to support writing in the laboratory was based partly on existing literature (Collier, 1980; Bruffee, 1984; Tobin et al., 1994; Jones and Carter, 1998; Springer et al., 1999) and partly on prior research by Quitadamo, Brahler, and Crouch (unpublished results), who showed that Peer Led Team Learning (one form of collaborative learning) helped to measurably improve undergraduate critical thinking skills. Small group learning was also used in the nonwriting comparison groups to a greater or lesser extent, depending on individual instructor preference.

Baseline critical thinking performance was established in the academic quarters preceding the writing experiment so that changes in critical thinking could be attributed more specifically to the writing treatment. Concurrent nonwriting course sections were also used as comparison groups. The historical baseline provided a way to determine what student performance had been before the writing treatment, whereas the concurrent nonwriting groups allowed for a direct comparison of critical thinking performance during the writing treatment. Pretest scores indicating prior critical thinking skill were also used to further establish comparability between the writing and nonwriting groups.