Shipping Your Product in Iterations: A Guide to Hypothesis Testing

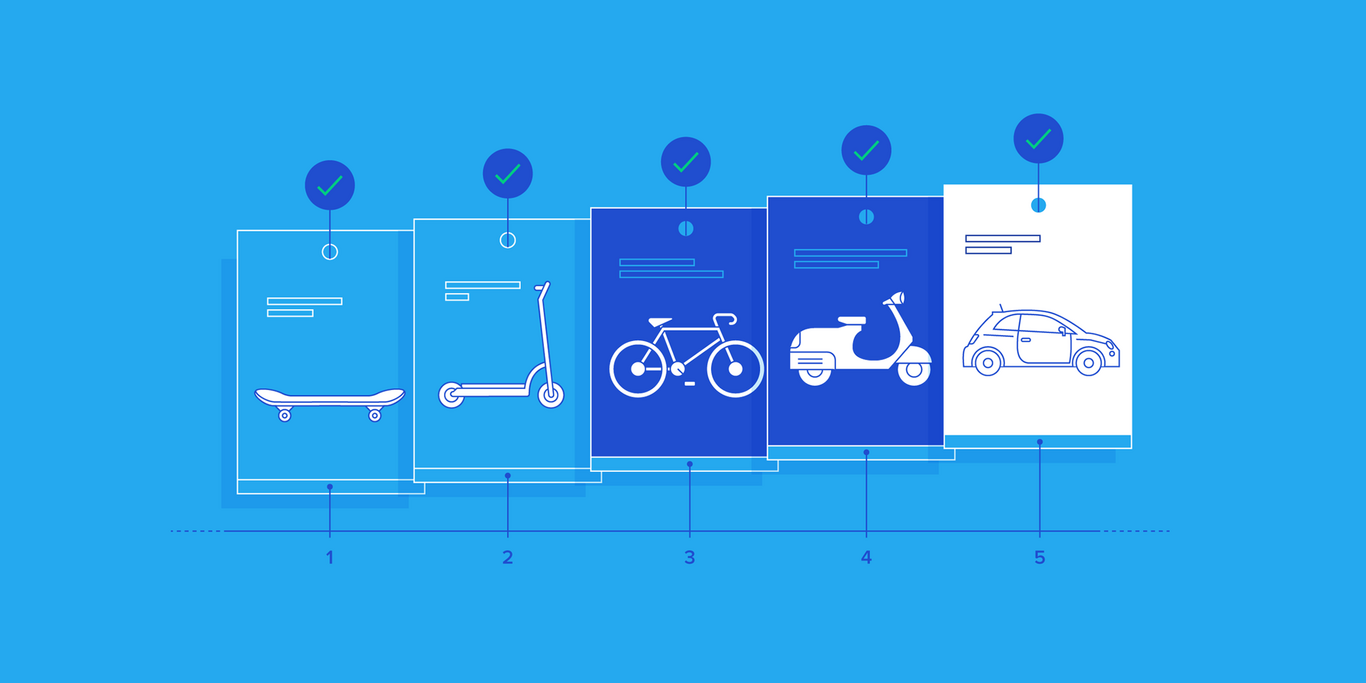

By Kumara Raghavendra

Kumara has successfully delivered high-impact products in industries including eCommerce, healthcare, travel, and ride-hailing.

A look at the App Store on any phone will reveal that most installed apps have had updates released within the last week. A website visit after a few weeks might show some changes in the layout, user experience, or copy.

Today, software is shipped in iterations to validate assumptions and the product hypothesis about what makes a better user experience. At any given time, companies like booking.com (where I worked before) run hundreds of A/B tests on their sites for this very purpose.

For applications delivered over the internet, there is no need to decide on the look of a product 12-18 months in advance, and then build and eventually ship it. Instead, it is perfectly practical to release small changes that deliver value to users as they are implemented. This removes the need to rely on untested assumptions about user preferences and ideal solutions, because every assumption and hypothesis can be validated by designing a test that isolates the effect of each change.

In addition to delivering continuous value through improvements, this approach allows a product team to gather continuous feedback from users and then course-correct as needed. Creating and testing hypotheses every couple of weeks is a cheaper and easier way to build a course-correcting, iterative approach to creating product value.

What Is Hypothesis Testing in Product Management?

While shipping a feature to users, it is imperative to validate assumptions about design and features in order to understand their impact in the real world.

This validation is traditionally done through product hypothesis testing, during which the experimenter outlines a hypothesis for a change and then defines success. For instance, if a data product manager at Amazon has a hypothesis that showing bigger product images will raise conversion rates, then success is defined by higher conversion rates.

One of the key aspects of hypothesis testing is the isolation of different variables in the product experience in order to be able to attribute success (or failure) to the changes made. So, if our Amazon product manager had a further hypothesis that showing customer reviews right next to product images would improve conversion, it would not be possible to test both hypotheses by shipping both changes to the same group of users at the same time. Doing so would make it impossible to attribute any change in conversion to one cause or the other; therefore, the two changes must be isolated and tested individually.

Thus, product decisions on features should be backed by hypothesis testing to validate the performance of features.

Different Types of Hypothesis Testing

A/B Testing

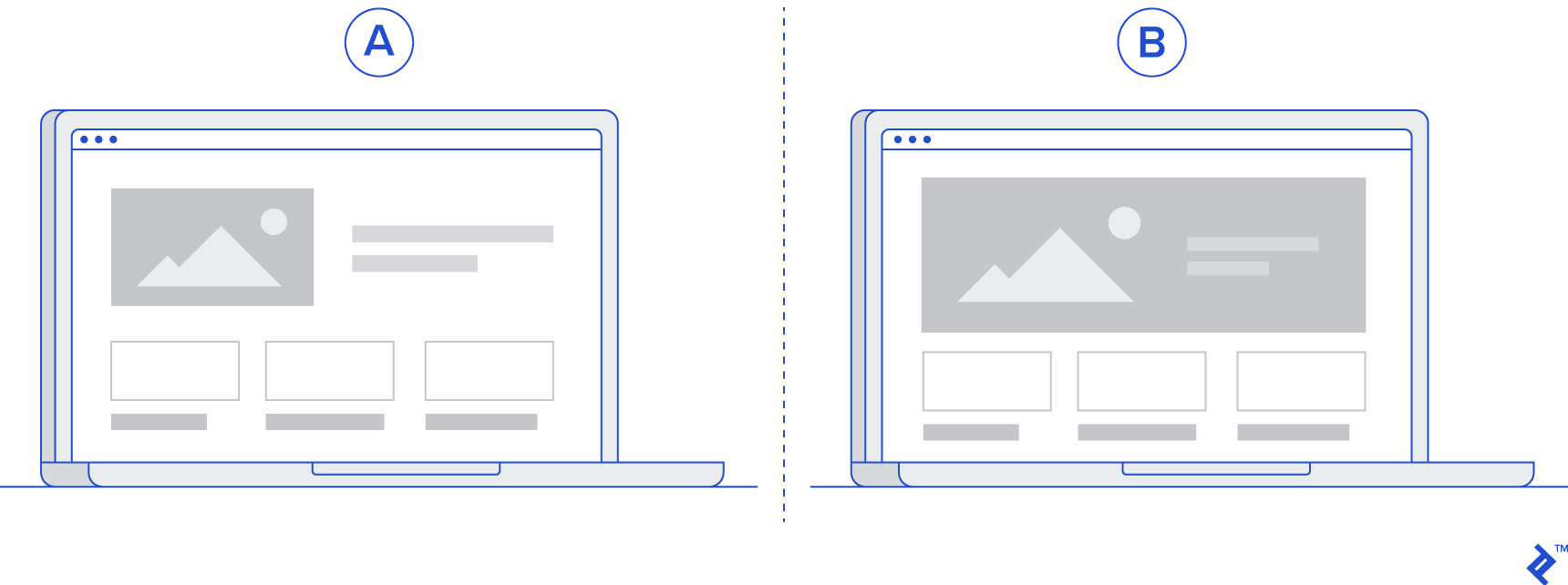

One of the most common ways to validate a hypothesis is randomized A/B testing, in which a change or feature is released at random to one-half of users (A) and withheld from the other half (B). Returning to the hypothesis of bigger product images improving conversion on Amazon, one-half of users will be shown the change, while the other half will see the website as it was before. Conversion will then be measured for each group (A and B) and compared. If there is a statistically significant uplift in conversion for the group shown bigger product images, the conclusion would be that the original hypothesis was correct, and the change can be rolled out to all users.

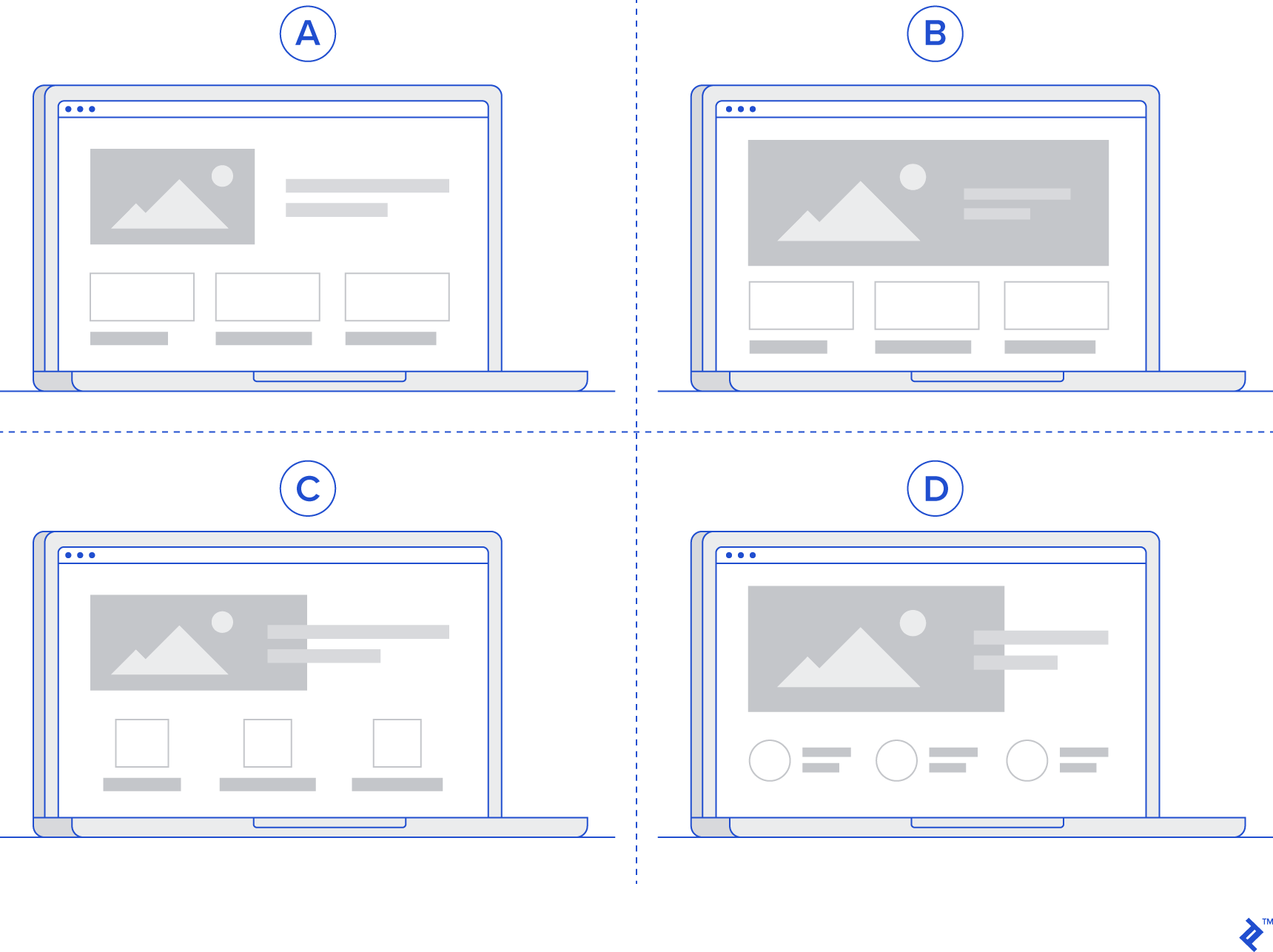
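The random split and the comparison described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function names, the experiment label `bigger-images`, and the use of a hash of the user ID to get a stable 50/50 split are hypothetical choices, not something the article prescribes.

```python
import hashlib


def assign_variant(user_id: str, experiment: str = "bigger-images") -> str:
    """Deterministically place a user in group A (sees the change) or B (control)."""
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode()).hexdigest()
    # The first 8 hex digits give an integer that is spread evenly across users.
    return "A" if int(digest[:8], 16) % 2 == 0 else "B"


def conversion_rate(conversions: int, visitors: int) -> float:
    """Share of visitors who converted; 0.0 when there were no visitors."""
    return conversions / visitors if visitors else 0.0


def relative_uplift(rate_a: float, rate_b: float) -> float:
    """Uplift of group A (change) over group B (control), relative to B's rate."""
    return (rate_a - rate_b) / rate_b
```

Hashing the user ID (rather than drawing a fresh random number on every visit) keeps each user in the same group for the whole test. An observed uplift still has to be checked for statistical significance before the change is rolled out.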

Multivariate Testing

Ideally, each variable should be isolated and tested separately so as to conclusively attribute changes. However, such a sequential approach to testing can be very slow, especially when there are several versions to test. To continue with the example, in the hypothesis that bigger product images lead to higher conversion rates on Amazon, “bigger” is subjective, and several versions of “bigger” (e.g., 1.1x, 1.3x, and 1.5x) might need to be tested.

Instead of testing such cases sequentially, a multivariate test can be adopted, in which users are not split in half but into multiple variants. For instance, four groups (A, B, C, D) are made up of 25% of users each, where A-group users will not see any change, whereas those in variants B, C, and D will see images bigger by 1.1x, 1.3x, and 1.5x, respectively. In this test, multiple variants are simultaneously tested against the current version of the product in order to identify the best variant.

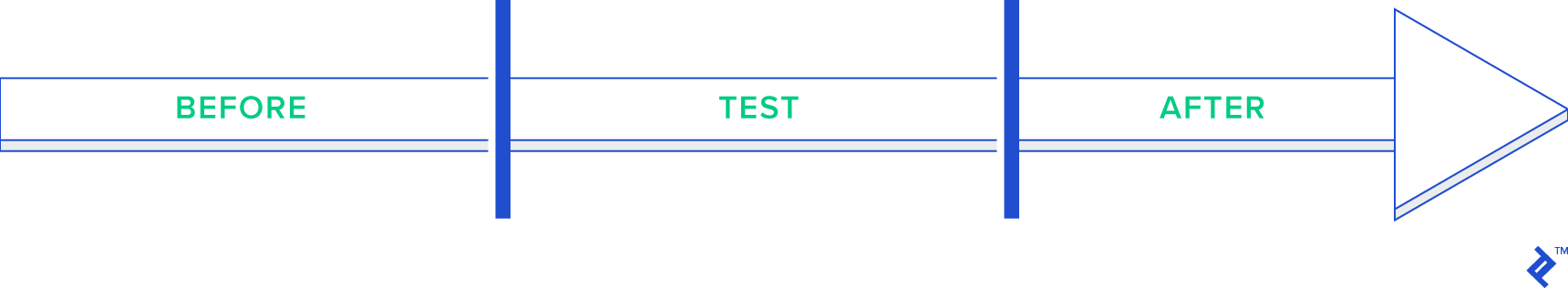
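A four-way split like the one above could be sketched as follows. The variant names and scale factors come from the example in the text; the function names and the hash-based bucketing are assumptions made for illustration.

```python
import hashlib

# Control (A) plus the three image scale factors from the example.
VARIANTS = {"A": 1.0, "B": 1.1, "C": 1.3, "D": 1.5}


def assign_bucket(user_id: str, experiment: str = "image-size") -> str:
    """Place a user in one of the variants, roughly 25% of users each."""
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode()).hexdigest()
    names = sorted(VARIANTS)
    return names[int(digest[:8], 16) % len(names)]


def best_variant(rates: dict[str, float], control: str = "A") -> str:
    """Return the variant with the highest conversion rate, or the control if none beats it."""
    best = max(rates, key=rates.get)
    return best if rates[best] > rates[control] else control
```

Picking the variant with the highest raw rate is only a first pass. Because each group holds just a quarter of the traffic, differences between variants are noisier than in a two-way split and need a significance check before a winner is declared.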

Before/After Testing

Sometimes, it is not possible to split the users in half (or into multiple variants) as there might be network effects in place. For example, if the test involves determining whether one logic for formulating surge prices on Uber is better than another, the drivers cannot be divided into different variants, as the logic takes into account the demand and supply mismatch of the entire city. In such cases, a test will have to compare the effects before the change and after the change in order to arrive at a conclusion.

However, the constraint here is the inability to isolate the effects of seasonality and externality that can differently affect the test and control periods. Suppose a change to the logic that determines surge pricing on Uber is made at time t, such that logic A is used before and logic B is used after. While the effects before and after time t can be compared, there is no guarantee that the effects are solely due to the change in logic. There could have been a difference in demand or other factors between the two time periods that resulted in a difference between the two.

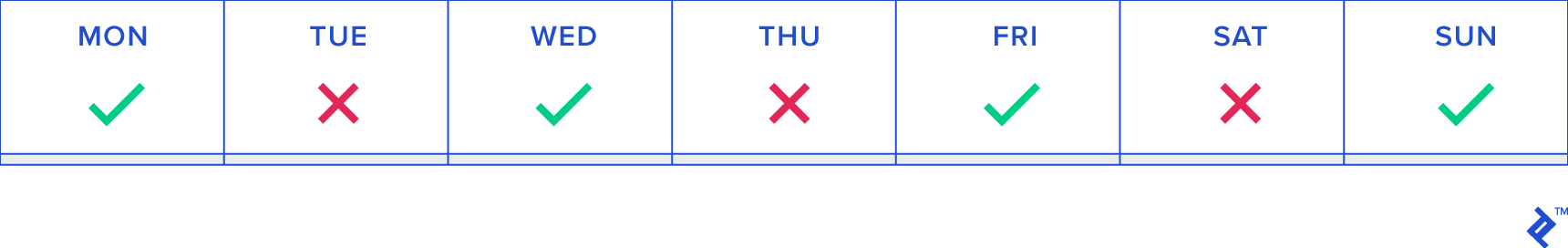

Time-based On/Off Testing

The downsides of before/after testing can be overcome to a large extent by deploying time-based on/off testing, in which the change is introduced to all users for a certain period of time and then turned off for an equal period of time, with this cycle repeated over a longer duration.

For example, in the Uber use case, the change can be shown to drivers on Monday, withdrawn on Tuesday, shown again on Wednesday, and so on.

While this method doesn’t fully remove the effects of seasonality and externality, it does reduce them significantly, making such tests more robust.
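The alternating schedule can be expressed as a small helper that decides, for any given day, whether the change is on, and that splits a daily metric into "on" and "off" samples for comparison. This is a minimal sketch; the function names and the one-day default period are assumptions.

```python
from datetime import date


def change_is_on(day: date, start: date, period_days: int = 1) -> bool:
    """Alternate on/off in equal periods, starting with 'on' on the start date."""
    elapsed = (day - start).days
    if elapsed < 0:
        return False  # the test has not started yet
    return (elapsed // period_days) % 2 == 0


def split_by_phase(daily_metric: dict[date, float], start: date, period_days: int = 1):
    """Group daily metric values into those recorded with the change on and off."""
    on, off = [], []
    for day, value in sorted(daily_metric.items()):
        (on if change_is_on(day, start, period_days) else off).append(value)
    return on, off
```

With a start date that falls on a Monday and a one-day period, this reproduces the pattern above: on for Monday, off for Tuesday, on again for Wednesday. With a one-day period the "on" days always fall on different weekdays than the "off" days within a week, so running for several weeks, or over an odd number of days, helps balance day-of-week effects.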

Test Design

Choosing the right test for the use case at hand is an essential step in validating a hypothesis in the quickest and most robust way. Once the choice is made, the details of the test design can be outlined.

The test design is simply a coherent outline of:

- The hypothesis to be tested: Showing users bigger product images will lead them to purchase more products.

- Success metrics for the test: Customer conversion

- Decision-making criteria for the test: The test validates the hypothesis if users in the variant show a higher conversion rate than those in the control group.

- Metrics that need to be instrumented to learn from the test: Customer conversion, clicks on product images

In the case of the product hypothesis example that bigger product images will lead to improved conversion on Amazon, the success metric is conversion and the decision criterion is an improvement in conversion.
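One way to keep the four parts of a test design together is a small record type. The class and field names below are hypothetical; the values are taken from the example outline above.

```python
from dataclasses import dataclass, field


@dataclass
class ExperimentDesign:
    hypothesis: str
    success_metric: str
    decision_rule: str
    instrumented_metrics: list[str] = field(default_factory=list)

    def is_complete(self) -> bool:
        """A design is ready to run only when every part has been filled in."""
        return all([self.hypothesis, self.success_metric,
                    self.decision_rule, self.instrumented_metrics])


design = ExperimentDesign(
    hypothesis="Showing users bigger product images will lead them to purchase more products.",
    success_metric="Customer conversion",
    decision_rule="Variant shows a higher conversion rate than the control group.",
    instrumented_metrics=["Customer conversion", "Clicks on product images"],
)
```

Writing the design down in one place before the test starts makes it harder to change the success metric or the decision rule after the results are in.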

After the right test is chosen and designed, and the success criteria and metrics are identified, the results must be analyzed. To do that, some statistical concepts are necessary.

When running tests, it is important to ensure that the two variants picked for the test (A and B) do not have a bias with respect to the success metric. For instance, if the variant that sees the bigger images already has a higher conversion than the variant that doesn’t see the change, then the test is biased and can lead to wrong conclusions.

In order to ensure no bias in sampling, one can observe the mean and variance for the success metric before the change is introduced.
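A pre-change comparison of the two groups might look like the sketch below. The 5% relative tolerance is an arbitrary assumption chosen for illustration, and the function names are hypothetical; a proper check would use a statistical test rather than a fixed threshold.

```python
from statistics import mean, variance


def pre_period_summary(values: list[float]) -> tuple[float, float]:
    """Mean and sample variance of the success metric before the change."""
    return mean(values), variance(values)


def looks_balanced(group_a: list[float], group_b: list[float],
                   tolerance: float = 0.05) -> bool:
    """Return False when the pre-change means differ by more than `tolerance` (relative)."""
    mean_a, _ = pre_period_summary(group_a)
    mean_b, _ = pre_period_summary(group_b)
    baseline = max(abs(mean_a), abs(mean_b))
    if baseline == 0:
        return True  # both groups have a zero mean, so there is nothing to compare
    return abs(mean_a - mean_b) / baseline <= tolerance
```

Here each list would hold, for example, the daily conversion rate of one group over the weeks before the test. The variances returned by `pre_period_summary` are worth inspecting as well, since a much noisier group makes the later comparison less reliable.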

Significance and Power

Once a difference between the two variants is observed, it is important to establish whether the change observed is an actual effect or a random one. This can be done by computing the significance of the change in the success metric.

In layman’s terms, significance measures the frequency with which the test shows that bigger images lead to higher conversion when they actually don’t. Power measures the frequency with which the test tells us that bigger images lead to higher conversion when they actually do.

So, tests need to have a high value of power and a low value of significance for more accurate results.
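To make these two quantities concrete, here is a minimal sketch of a one-sided two-proportion z-test and an approximate power calculation, using only the Python standard library. The normal approximation, the one-sided framing, and the function names are assumptions made for this example; the article does not specify which test to use.

```python
import math
from statistics import NormalDist


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """One-sided test: is A's conversion rate higher than B's? Returns (z, p_value)."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_a - p_b) / se
    p_value = 0.5 * math.erfc(z / math.sqrt(2))  # upper-tail normal probability
    return z, p_value


def approximate_power(p_control: float, p_variant: float,
                      n_per_group: int, alpha: float = 0.05) -> float:
    """Approximate chance of detecting a true uplift at significance level alpha."""
    se = math.sqrt(p_control * (1 - p_control) / n_per_group
                   + p_variant * (1 - p_variant) / n_per_group)
    z_alpha = NormalDist().inv_cdf(1 - alpha)
    return NormalDist().cdf((p_variant - p_control) / se - z_alpha)
```

For instance, with 550 conversions out of 10,000 users in the variant and 500 out of 10,000 in the control, the p-value comes out just above 0.05, so the uplift would not quite count as significant at that level. `approximate_power` shows why: with 10,000 users per group, a true move from 5% to 5.5% would be detected less than half the time, and more users are needed to raise the power.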

While an in-depth exploration of the statistical concepts involved in product management hypothesis testing is out of scope here, the following actions are recommended to enhance knowledge on this front:

- Data analysts and data engineers are usually adept at identifying the right test designs and can guide product managers, so make sure to utilize their expertise early in the process.

- There are numerous online courses on hypothesis testing, A/B testing, and related statistical concepts on platforms such as Udemy, Udacity, and Coursera.

- Tools such as Google’s Firebase and Optimizely can make the process easier thanks to their many out-of-the-box capabilities for running the right tests.

Using Hypothesis Testing for Successful Product Management

In order to continuously deliver value to users, it is imperative to test various hypotheses, and several types of product hypothesis testing can be employed for this purpose. Each hypothesis needs to have an accompanying test design, as described above, in order to conclusively validate or invalidate it.

This approach helps to quantify the value delivered by new changes and features, bring focus to the most valuable features, and deliver incremental iterations.

Understanding the basics

What is a product hypothesis?

A product hypothesis is an assumption that some improvement in the product will bring an increase in important metrics like revenue or product usage statistics.

What are the three required parts of a hypothesis?

The three required parts of a hypothesis are the assumption, the condition, and the prediction.

Why do we do A/B testing?

We do A/B testing to make sure that any improvement in the product increases our tracked metrics.

What is A/B testing used for?

A/B testing is used to check if our product improvements create the desired change in metrics.

What is A/B testing and multivariate testing?

A/B testing and multivariate testing are types of hypothesis testing. A/B testing checks how important metrics change with and without a single change in the product. Multivariate testing can track multiple variations of the same product improvement.

Kumara Raghavendra

Dubai, United Arab Emirates

Member since August 6, 2019

How to Generate and Validate Product Hypotheses

What is a product hypothesis?

A hypothesis is a testable statement that predicts the relationship between two or more variables. In product development, we generate hypotheses to validate assumptions about customer behavior, market needs, or the potential impact of product changes. These experimental efforts help us refine the user experience and get closer to finding a product-market fit.

Product hypotheses are a key element of data-driven product development and decision-making. Testing them enables us to solve problems more efficiently and remove our own biases from the solutions we put forward.

Here’s an example: ‘If we improve the page load speed on our website (variable 1), then we will increase the number of signups by 15% (variable 2).’ So if we improve the page load speed, and the number of signups increases, then our hypothesis has been proven. If the number did not increase significantly (or at all), then our hypothesis has been disproven.

In general, product managers are constantly creating and testing hypotheses. But in the context of new product development, hypothesis generation/testing occurs during the validation stage, right after idea screening.

Now before we go any further, let’s get one thing straight: What’s the difference between an idea and a hypothesis?

Idea vs hypothesis

Innovation expert Michael Schrage makes this distinction between hypotheses and ideas – unlike an idea, a hypothesis comes with built-in accountability. “But what’s the accountability for a good idea?” Schrage asks. “The fact that a lot of people think it’s a good idea? That’s a popularity contest.” So, not only should a hypothesis be tested, but by its very nature, it can be tested.

At Railsware, we’ve built our product development services on the careful selection, prioritization, and validation of ideas. Here’s how we distinguish between ideas and hypotheses:

Idea: A creative suggestion about how we might exploit a gap in the market, add value to an existing product, or bring attention to our product. Crucially, an idea is just a thought. It can form the basis of a hypothesis but it is not necessarily expected to be proven or disproven.

Examples:

- We should get an interview with the CEO of our company published on TechCrunch.

- Why don’t we redesign our website?

- The Coupler.io team should create video tutorials on how to export data from different apps, and publish them on YouTube.

- Why not add a new ‘email templates’ feature to our Mailtrap product?

Hypothesis: A way of framing an idea or assumption so that it is testable, specific, and aligns with our wider product/team/organizational goals.

Examples:

- If we add a new ‘email templates’ feature to Mailtrap, we’ll see an increase in active usage of our email-sending API.

- Creating relevant video tutorials and uploading them to YouTube will lead to an increase in Coupler.io signups.

- If we publish an interview with our CEO on TechCrunch, 500 people will visit our website and 10 of them will install our product.

Now, it’s worth mentioning that not all hypotheses require testing. Sometimes, the process of creating hypotheses is just an exercise in critical thinking. And the simple act of analyzing your statement tells you whether you should run an experiment or not. Remember: testing isn’t mandatory, but your hypotheses should always be inherently testable.

Let’s consider the TechCrunch article example again. In that hypothesis, we expect 500 readers to visit our product website, and a 2% conversion rate of those unique visitors to product users i.e. 10 people. But is that marginal increase worth all the effort? Conducting an interview with our CEO, creating the content, and collaborating with the TechCrunch content team – all of these tasks take time (and money) to execute. And by formulating that hypothesis, we can clearly see that in this case, the drawbacks (efforts) outweigh the benefits. So, no need to test it.

In a similar vein, a hypothesis statement can be a tool to prioritize your activities based on impact. We typically use the following criteria:

- The quality of impact

- The size of the impact

- The probability of impact

This lets us organize our efforts according to their potential outcomes – not the coolness of the idea, its popularity among the team, etc.

Now that we’ve established what a product hypothesis is, let’s discuss how to create one.

Start with a problem statement

Before you jump into product hypothesis generation, we highly recommend formulating a problem statement. This is a short, concise description of the issue you are trying to solve. It helps teams stay on track as they formalize the hypothesis and design the product experiments. It can also be shared with stakeholders to ensure that everyone is on the same page.

The statement can be worded however you like, as long as it’s actionable, specific, and based on data-driven insights or research. It should clearly outline the problem or opportunity you want to address.

Here’s an example: Our bounce rate is high (more than 90%) and we are struggling to convert website visitors into actual users. How might we improve site performance to boost our conversion rate?

How to generate product hypotheses

Now let’s explore some common, everyday scenarios that lead to product hypothesis generation. For our teams here at Railsware, it’s when:

- There’s a problem with an unclear root cause e.g. a sudden drop in one part of the onboarding funnel. We identify these issues by checking our product metrics or reviewing customer complaints.

- We are running ideation sessions on how to reach our goals (increase MRR, increase the number of users invited to an account, etc.)

- We are exploring growth opportunities e.g. changing a pricing plan, making product improvements, breaking into a new market.

- We receive customer feedback. For example, some users have complained about difficulties setting up a workspace within the product. So, we build a hypothesis on how to help them with the setup.

BRIDGeS framework for ideation

When we are tackling a complex problem or looking for ways to grow the product, our teams use BRIDGeS – a robust decision-making and ideation framework. BRIDGeS makes our product discovery sessions more efficient. It lets us dive deep into the context of our problem so that we can develop targeted solutions worthy of testing.

Between 2 and 8 stakeholders take part in a BRIDGeS session. The ideation sessions are usually led by a product manager and can include other subject matter experts such as developers, designers, data analysts, or marketing specialists. You can use a virtual whiteboard such as FigJam or Miro (see our Figma template) to record each colored note.

In the first half of a BRIDGeS session, participants examine the Benefits, Risks, Issues, and Goals of their subject in the ‘Problem Space.’ A subject is anything that is being described or dealt with; for instance, Coupler.io’s growth opportunities. Benefits are the value that a future solution can bring, Risks are potential issues they might face, Issues are their existing problems, and Goals are what the subject hopes to gain from the future solution. Each descriptor should have a designated color.

After we have broken down the problem using each of these descriptors, we move into the Solution Space. This is where we develop solution variations based on all of the benefits/risks/issues identified in the Problem Space (see the Uber case study for an in-depth example).

In the Solution Space, we start prioritizing those solutions and deciding which ones are worthy of further exploration outside of the framework – via product hypothesis formulation and testing, for example. At the very least, after the session, we will have a list of epics and nested tasks ready to add to our product roadmap.

How to write a product hypothesis statement

Across organizations, product hypothesis statements might vary in their subject, tone, and precise wording. But some elements never change. As we mentioned earlier, a hypothesis statement must always have two or more variables and a connecting factor.

1. Identify variables

Since these components form the bulk of a hypothesis statement, let’s start with a brief definition.

First of all, variables in a hypothesis statement can be split into two camps: dependent and independent. Without getting too theoretical, we can describe the independent variable as the cause, and the dependent variable as the effect. So in the Mailtrap example we mentioned earlier, the ‘add email templates feature’ is the cause, i.e., the element we want to manipulate. Meanwhile, ‘increased usage of email sending API’ is the effect, i.e., the element we will observe.

Independent variables can be any change you plan to make to your product. For example, tweaking some landing page copy, adding a chatbot to the homepage, or enhancing the search bar filter functionality.

Dependent variables are usually metrics. Here are a few that we often test in product development:

- Number of sign-ups

- Number of purchases

- Activation rate (activation signals differ from product to product)

- Number of specific plans purchased

- Feature usage (API activation, for example)

- Number of active users

Bear in mind that your concept or desired change can be measured with different metrics. Make sure that your variables are well-defined, and be deliberate in how you measure your concepts so that there’s no room for misinterpretation or ambiguity.

For example, in the hypothesis ‘Users drop off because they find it hard to set up a project,’ the variables are poorly defined. Phrases like ‘drop off’ and ‘hard to set up’ are too vague. A much better way of saying it would be: If project automation rules are pre-defined (e.g., an email sequence to the responsible person, scheduled ticket creation), we’ll see a decrease in churn. In this example, it’s clear which dependent variable has been chosen and why.

And remember, when product managers focus on delighting users and building something of value, it’s easier to market and monetize it. That’s why at Railsware, our product hypotheses often focus on how to increase the usage of a feature or product. If users love our product(s) and know how to leverage its benefits, we can spend less time worrying about how to improve conversion rates or actively grow our revenue, and more time enhancing the user experience and nurturing our audience.

2. Make the connection

The relationship between variables should be clear and logical. If it’s not, then it doesn’t matter how well-chosen your variables are – your test results won’t be reliable.

To demonstrate this point, let’s explore a previous example again: page load speed and signups.

Through prior research, you might already know that conversion rates are 3x higher for sites that load in 1 second compared to sites that take 5 seconds to load. Since there appears to be a strong connection between load speed and signups in general, you might want to see if this is also true for your product.

Here are some common pitfalls to avoid when defining the relationship between two or more variables:

Relationship is weak. Let’s say you hypothesize that an increase in website traffic will lead to an increase in sign-ups. This is a weak connection since website visitors aren’t necessarily motivated to use your product; there are more steps involved. A better example is ‘If we change the CTA on the pricing page, then the number of signups will increase.’ This connection is much stronger and more direct.

Relationship is far-fetched. This often happens when one of the variables is founded on a vanity metric. For example, increasing the number of social media subscribers will lead to an increase in sign-ups. However, there’s no particular reason why a social media follower would be interested in using your product. Oftentimes, it’s simply your social media content that appeals to them (and your audience isn’t interested in a product).

Variables are co-dependent. Variables should always be isolated from one another. Let’s say we removed the option “Register with Google” from our app. In this case, we can expect fewer users with Google Workspace accounts to register. Obviously, that’s because there’s a direct dependency between the variables (no registration with Google → no users with Google Workspace accounts), so the result tells us nothing new.

3. Set validation criteria

First, build some confirmation criteria into your statement. Think in terms of percentages (e.g. increase/decrease by 5%) and choose a relevant product metric to track e.g. activation rate if your hypothesis relates to onboarding. Consider that you don’t always have to hit the bullseye for your hypothesis to be considered valid. Perhaps a 3% increase is just as acceptable as a 5% one. And it still proves that a connection between your variables exists.

Secondly, you should also make sure that your hypothesis statement is realistic. Let’s say you have a hypothesis that ‘If we show users a banner with our new feature, then feature usage will increase by 10%.’ A few questions to ask yourself are: Is 10% a reasonable increase, based on your current feature usage data? Do you have the resources to create the tests (experimenting with multiple variations, distributing on different channels: in-app, emails, blog posts)?
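The idea of a target with an acceptable fallback can be written down as a simple rule. In this sketch the 5% target and 3% minimum are the example figures from above, while the function name and the three outcome labels are hypothetical.

```python
def evaluate_result(baseline: float, observed: float,
                    target_uplift: float = 0.05,
                    minimum_uplift: float = 0.03) -> str:
    """Classify a result by its relative change against the pre-agreed criteria."""
    uplift = (observed - baseline) / baseline
    if uplift >= target_uplift:
        return "target met"
    if uplift >= minimum_uplift:
        return "acceptable"
    return "not validated"
```

For example, if 1,000 users activated before the change and 1,040 after, the 4% uplift falls short of the target but still clears the minimum. Agreeing on both thresholds before the experiment runs avoids moving the goalposts once the data is in.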

Null hypothesis and alternative hypothesis

In statistical research, there are two ways of stating a hypothesis: null or alternative. But this scientific method has its place in hypothesis-driven development too…

Alternative hypothesis: A statement that you intend to prove as being true by running an experiment and analyzing the results. Hint: it’s the same as the other hypothesis examples we’ve described so far.

Example: If we change the landing page copy, then the number of signups will increase.

Null hypothesis: A statement you want to disprove by running an experiment and analyzing the results. It predicts that your new feature or change to the user experience will not have the desired effect.

Example: The number of signups will not increase if we make a change to the landing page copy.

What’s the point? Well, let’s consider the phrase ‘innocent until proven guilty’ as a version of a null hypothesis. We don’t assume that there is any relationship between the ‘defendant’ and the ‘crime’ until we have proof. So, we run a test, gather data, and analyze our findings — which gives us enough proof to reject the null hypothesis and validate the alternative. All of this helps us to have more confidence in our results.
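One way to see what "rejecting the null hypothesis" means in practice is a permutation test: if the landing page copy truly made no difference, shuffling the group labels should produce a gap as large as the observed one fairly often. The sketch below is an illustration under assumptions; the function name, the number of permutations, and the fixed seed are all choices made for this example.

```python
import random


def permutation_p_value(control: list[int], variant: list[int],
                        n_permutations: int = 2000, seed: int = 0) -> float:
    """Share of random relabelings whose mean gap is at least the observed one."""
    rng = random.Random(seed)
    observed = sum(variant) / len(variant) - sum(control) / len(control)
    pooled = list(control) + list(variant)
    hits = 0
    for _ in range(n_permutations):
        rng.shuffle(pooled)  # pretend the labels carry no information
        perm_control = pooled[:len(control)]
        perm_variant = pooled[len(control):]
        gap = sum(perm_variant) / len(perm_variant) - sum(perm_control) / len(perm_control)
        if gap >= observed:
            hits += 1
    # Add one to both counts so the estimate is never exactly zero.
    return (hits + 1) / (n_permutations + 1)
```

Each list holds one entry per visitor: 1 for a signup, 0 otherwise. A small p-value means the observed gap would be rare if the null hypothesis were true, which is the "proof" needed to reject it in favor of the alternative.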

Now that you have generated your hypotheses, and created statements, it’s time to prepare your list for testing.

Prioritizing hypotheses for testing

Not all hypotheses are created equal. Some will be essential to your immediate goal of growing the product e.g. adding a new data destination for Coupler.io. Others will be based on nice-to-haves or small fixes e.g. updating graphics on the website homepage.

Prioritization helps us focus on the most impactful solutions as we are building a product roadmap or narrowing down the backlog. To determine which hypotheses are the most critical, we use the MoSCoW framework. It allows us to assign a level of urgency and importance to each product hypothesis so we can filter the best 3-5 for testing.

MoSCoW is an acronym for Must-have, Should-have, Could-have, and Won’t-have. Here’s a breakdown:

- Must-have – hypotheses that must be tested, because they are strongly linked to our immediate project goals.

- Should-have – hypotheses that are closely related to our immediate project goals, but aren’t the top priority.

- Could-have – hypotheses of nice-to-haves that can wait until later for testing.

- Won’t-have – low-priority hypotheses that we may or may not test later on when we have more time.
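The MoSCoW categories above translate naturally into a sort order. The following sketch ranks a list of hypotheses and keeps a shortlist for testing; the class name, the category keys, and the decision to drop won't-haves from the shortlist are assumptions for illustration.

```python
from dataclasses import dataclass

# Lower number means higher priority.
MOSCOW_ORDER = {"must": 0, "should": 1, "could": 2, "wont": 3}


@dataclass
class Hypothesis:
    statement: str
    priority: str  # one of the MOSCOW_ORDER keys


def shortlist(hypotheses: list[Hypothesis], limit: int = 5) -> list[Hypothesis]:
    """Rank hypotheses by MoSCoW category and keep the top `limit` for testing."""
    ranked = sorted(hypotheses, key=lambda h: MOSCOW_ORDER[h.priority])
    return [h for h in ranked if h.priority != "wont"][:limit]
```

Because Python's `sorted` is stable, hypotheses within the same category keep the order in which they were listed, so the team can still hand-order items inside each category.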

How to test product hypotheses

Once you have selected a hypothesis, it’s time to test it. This will involve running one or more product experiments in order to check the validity of your claim.

The tricky part is deciding what type of experiment to run, and how many. Ultimately, this all depends on the subject of your hypothesis – whether it’s a simple copy change or a whole new feature. For instance, it’s not necessary to create a clickable prototype for a landing page redesign. In that case, a user-wide update would do.

On that note, here are some of the approaches we take to hypothesis testing at Railsware:

A/B testing

A/B or split testing involves creating two or more different versions of a webpage/feature/functionality and collecting information about how users respond to them.

Let’s say you wanted to validate a hypothesis about the placement of a search bar on your application homepage. You could design an A/B test that shows two different versions of that search bar’s placement to your users (who have been split equally into two camps: a control group and a variant group). Then, you would choose the best option based on user data. A/B tests are suitable for testing responses to user experience changes, especially if you have more than one solution to test.

Prototyping

When it comes to testing a new product design, prototyping is the method of choice for many Lean startups and organizations. It’s a cost-effective way of collecting feedback from users quickly, and it’s possible to create prototypes of individual features too. You may take this approach to hypothesis testing if you are working on rolling out a significant new change, e.g., adding a brand-new feature or redesigning some aspect of the user flow. To control costs at this point in the new product development process, choose the right tools: think Figma for clickable walkthroughs or no-code platforms like Bubble.

Deliveroo feature prototype example

Let’s look at how feature prototyping worked for the food delivery app Deliveroo, when their product team wanted to ‘explore personalized recommendations, better filtering and improved search’ in 2018. To begin, they created a prototype of the customer discovery feature using the web design application Framer.

One of the most important aspects of this feature prototype was that it contained live data — real restaurants, real locations. For test users, this made the hypothetical feature feel more authentic. They were seeing listings and recommendations for real restaurants in their area, which helped immerse them in the user experience, and generate more honest and specific feedback. Deliveroo was then able to implement this feedback in subsequent iterations.

Asking your users

Interviewing customers is an excellent way to validate product hypotheses. It’s a form of qualitative testing that, in our experience, produces better insights than user surveys or general user research. Sessions are typically run by product managers and involve asking in-depth interview questions to one customer at a time. They can be conducted in person or online (through a virtual call center, for instance) and last anywhere between 30 minutes and 1 hour.

Although CustDev interviews may require more effort to execute than other tests (the process of finding participants, devising questions, organizing interviews, and honing interview skills can be time-consuming), it’s still a highly rewarding approach. You can quickly validate assumptions by asking customers about their pain points, concerns, habits, processes they follow, and analyzing how your solution fits into all of that.

Wizard of Oz

The Wizard of Oz approach is suitable for gauging user interest in new features or functionalities. It’s done by creating a prototype of a fake or future feature and monitoring how your customers or test users interact with it.

For example, you might have a hypothesis that your number of active users will increase by 15% if you introduce a new feature. So, you design a new bare-bones page or simple button that invites users to access it. But when they click on the button, a pop-up appears with a message such as ‘coming soon.’

By measuring the frequency of those clicks, you could learn a lot about the demand for this new feature/functionality. However, while these tests can deliver fast results, they carry the risk of backfiring. Some customers may find fake features misleading, making them less likely to engage with your product in the future.

User-wide updates

One of the speediest ways to test your hypothesis is by rolling out an update for all users. It can take less time and effort to set up than other tests (depending on how big of an update it is). But due to the risk involved, you should stick to only performing these kinds of tests on small-scale hypotheses. Our teams only take this approach when we are almost certain that our hypothesis is valid.

For example, we once had an assumption that the name of one of Mailtrap’s entities was the root cause of a low activation rate. Being an active Mailtrap customer meant that you were regularly sending test emails to a place called ‘Demo Inbox.’ We hypothesized that the name was confusing (the word ‘demo’ implied it was not the main inbox) and this was preventing new users from engaging with their accounts. So, we updated the page, changed the name to ‘My Inbox’ and added some ‘to-do’ steps for new users. We saw an increase in our activation rate almost immediately, validating our hypothesis.

Feature flags

Creating feature flags involves releasing a new feature only to a particular subset or small percentage of users. These features come with a built-in kill switch: a piece of code that can be executed or skipped, depending on who’s interacting with your product.

Since you are only showing this new feature to a selected group, feature flags are an especially low-risk method of testing your product hypothesis (compared to Wizard of Oz, for example, where you have much less control). However, they are also a little more complex to execute than the others: you will need an actual coded product for starters, as well as some technical knowledge, in order to add the modifiers (only when…) to your new coded feature.
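To make the kill switch and the ‘only when’ modifier more concrete, here is a minimal sketch of a percentage-based feature flag. It is an illustration under our own assumptions rather than a description of any particular flagging tool: the `FLAGS` dictionary, the flag name `new_checkout`, and the helper functions are all hypothetical.

```python
import hashlib

# Hypothetical flag configuration; the names and fields are illustrative.
FLAGS = {
    "new_checkout": {"enabled": True, "rollout_percent": 10},
}


def bucket(user_id: str, flag_name: str) -> int:
    """Map a user to a stable bucket in the range 0-99 for a given flag."""
    digest = hashlib.sha256(f"{flag_name}:{user_id}".encode("utf-8")).hexdigest()
    return int(digest, 16) % 100


def is_enabled(flag_name: str, user_id: str) -> bool:
    """Return True only when the flag is on and the user is inside the rollout."""
    flag = FLAGS.get(flag_name)
    # Kill switch: an unknown or disabled flag always skips the new code path.
    if flag is None or not flag["enabled"]:
        return False
    return bucket(user_id, flag_name) < flag["rollout_percent"]


def render_checkout(user_id: str) -> str:
    # The "only when..." modifier wraps the new feature.
    if is_enabled("new_checkout", user_id):
        return "new checkout"
    return "old checkout"
```

Hashing the user ID means the same user gets the same experience on every visit, and setting `enabled` to `False` switches the feature off for everyone without a new release.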

Let’s revisit the landing page copy example, this time in the context of testing.

So, for the hypothesis ‘If we change the landing page copy, then the number of signups will increase,’ there are several options for experimentation. We could share the copy with a small sample of our users, or even release a user-wide update. But A/B testing is probably the best fit for this task. Depending on our budget and goal, we could test several different pieces of copy, such as:

- The current landing page copy

- Copy that we paid a marketing agency 10 grand for

- Generic copy we wrote ourselves, or removing most of the original copy – just to see how making even a small change might affect our numbers.

Remember, every hypothesis test must have a reasonable endpoint. The exact length of the test will depend on the type of feature/functionality you are testing, the size of your user base, and how much data you need to gather. Just make sure that the experiment running time matches the hypothesis scope. For instance, there is no need to spend 8 weeks experimenting with a piece of landing page copy. That timeline is more appropriate for, say, a Wizard of Oz feature.
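A rough way to sanity-check a test’s length is to divide the amount of data you need by the traffic you can expect. The sketch below assumes you already have an estimate of how many users each variant needs and how many eligible visitors arrive per day; the function name and the numbers are hypothetical.

```python
import math


def estimated_test_days(users_per_variant: int, variants: int, daily_visitors: int) -> int:
    """Estimate how many days a test needs to collect enough users."""
    if daily_visitors <= 0:
        raise ValueError("daily_visitors must be positive.")
    total_users_needed = users_per_variant * variants
    return math.ceil(total_users_needed / daily_visitors)


# Three pieces of copy, 4,000 users each, 1,500 eligible visitors per day.
print(estimated_test_days(4000, 3, 1500))  # 8
```

If the estimate comes out far longer than the scope of the hypothesis justifies, that is a hint to test fewer variants or pick a metric that accumulates faster.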

Recording hypotheses statements and test results

Finally, it’s time to talk about where you will write down and keep track of your hypotheses. Creating a single source of truth will enable you to track all aspects of hypothesis generation and testing with ease.

At Railsware, our product managers create a document for each individual hypothesis, using tools such as Coda or Google Sheets. In that document, we record the hypothesis statement, as well as our plans, process, results, screenshots, product metrics, and assumptions.

We share this document with our team and stakeholders, to ensure transparency and invite feedback. It’s also a resource we can refer back to when we are discussing a new hypothesis — a place where we can quickly access information relating to a previous test.

Understanding test results and taking action

The other half of validating product hypotheses involves evaluating data and drawing reasonable conclusions based on what you find. We do so by analyzing our chosen product metric(s) and deciding whether there is enough data available to make a solid decision. If not, we may extend the test’s duration or run another one. Otherwise, we move forward. An experimental feature becomes a real feature, a chatbot gets implemented on the customer support page, and so on.

Something to keep in mind: the integrity of your data is tied to how well the test was executed, so here are a few points to consider when you are testing and analyzing results:

Gather and analyze data carefully. Ensure that your data is clean and up-to-date when running quantitative tests and tracking responses via analytics dashboards. If you are doing customer interviews, make sure to record the meetings (with consent) so that your notes will be as accurate as possible.

Conduct the right amount of product experiments. It can take more than one test to determine whether your hypothesis is valid or invalid. However, don’t waste too much time experimenting in the hopes of getting the result you want. Know when to accept the evidence and move on.

Choose the right audience segment. Don’t cast your net too wide. Be specific about who you want to collect data from prior to running the test. Otherwise, your test results will be misleading and you won’t learn anything new.

Watch out for bias. Avoid confirmation bias at all costs. Don’t make the mistake of including irrelevant data just because it bolsters your results. For example, if you are gathering data about how users are interacting with your product Monday-Friday, don’t include weekend data just because doing so would alter the data and ‘validate’ your hypothesis.

- Not all failed hypotheses should be treated as losses. Even if you didn’t get the outcome you were hoping for, you may still have improved your product. Let’s say you implemented SSO authentication for premium users, but unfortunately, your free users didn’t end up switching to premium plans. In this case, you still added value to the product by streamlining the login process for paying users.

- Yes, taking a hypothesis-driven approach to product development is important. But remember, you don’t have to test everything. Use common sense first. For example, if your website copy is confusing and doesn’t portray the value of the product, then you should still strive to replace it with better copy, regardless of how this affects your numbers in the short term.

Wrapping Up

The process of generating and validating product hypotheses is actually pretty straightforward once you’ve got the hang of it. All you need is a valid question or problem, a testable statement, and a method of validation. Sure, hypothesis-driven development requires more of a time commitment than just ‘giving it a go.’ But ultimately, it will help you tune the product to the wants and needs of your customers.


Hypothesis Driven Product Management

- Post author By admin

- Post date September 23, 2020


What is Lean Hypothesis Testing?

“The first principle is that you must not fool yourself and you are the easiest person to fool.” – Richard P. Feynman

Lean hypothesis testing is an approach to agile product development that’s designed to minimize risk, increase the speed of development, and hone business outcomes by building and iterating on a minimum viable product (MVP).

The minimum viable product is a concept famously championed by Eric Ries as part of the lean startup methodology. At its core, the concept of the MVP is about creating a cycle of learning. Rather than devoting long development timelines to building a fully polished end product, teams working through lean product development build, in short, iterative cycles. Each cycle is devoted to shipping an MVP, defined as a product that’s built with the least amount of work possible for the purpose of testing and validating that product with users.

In lean hypothesis testing, the MVP itself can be framed as a hypothesis. A well-designed hypothesis breaks down an issue into a problem, solution, and result.

When defining a good hypothesis, start with a meaningful problem: an issue or pain point that you’d like to solve for your users. Teams often use multiple qualitative and quantitative sources to scope and describe this problem.

How do you get started?

Two core practices underlie lean:

- Use of the scientific method

- Use of small batches

Science has brought us many wonderful things.

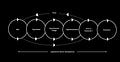

I personally prefer to expand the Build-Measure-Learn loop into the classic view of the scientific method because I find it’s more robust. You can see that process to the right, and we’ll step through the components in the balance of this section.

The use of small batches is critical. It gives you more shots at a successful outcome, particularly valuable when you’re in a high risk, high uncertainty environment.

A great example from Eric Ries’ book is the envelope folding experiment: If you had to stuff 100 envelopes with letters, how would you do it? Would you fold all the sheets of paper and then stuff the envelopes? Or would you fold one sheet of paper, stuff one envelope? It turns out that doing them one by one is vastly more efficient, and that’s just on an operational basis. If you don’t actually know if the envelopes will fit or whether anyone wants them (more analogous to a startup), you’re obviously much better off with the one-by-one approach.

So, how do you do it? In 6 simple (in principle) steps:

- Start with a strong idea, one where you’ve gone out and done strong customer discovery, which is packaged into testable personas and problem scenarios. If you’re familiar with design thinking, it’s very much about doing good work in this area.

- Structure your idea(s) in a testable format (as hypotheses).

- Figure out how you’ll prove or disprove these hypotheses with a minimum of time and effort.

- Get focused on testing your hypotheses and collecting whatever metrics you’ll use to make a conclusion.

- Conclude and decide: did you prove out this idea and is it time to throw more resources at it? Or do you need to reformulate and re-test?

- Pivot or persevere: if you’re pivoting and revising, the key is to make sure you have a strong foundation in customer discovery so you can pivot in a smart way based on your understanding of the customer/user.

By using a hypothesis-driven development process you:

- Articulate your thinking

- Provide others with an understanding of your thinking

- Create a framework to test your designs against

- Develop a standard way of documenting your work

- Make better stuff

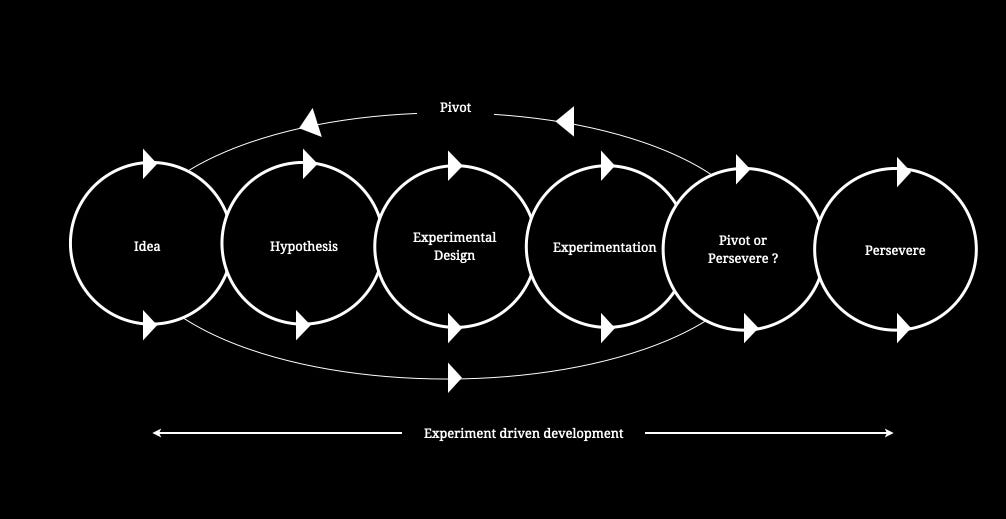

Free Template: Lean Hypothesis template

Eric Ries: Test & experiment, turn your feeling into a hypothesis

5 case studies on experimentation:

- Adobe takes a customer-centric approach to innovating Photoshop

- Test Paper prototypes to save time and money: the Mozilla case study

- Walmart.ca increases on-site conversions by 13%

- Icons8 web app. Redesign based on usability testing.

- Experiments at Airbnb



Value Hypothesis 101: A Product Manager's Guide


Humans make assumptions every day. It’s our brain’s way of making sense of the world around us, but assumptions are only valuable if they're verifiable. That’s where a value hypothesis comes in as your starting point.

A good hypothesis goes a step beyond an assumption. It’s a verifiable and validated guess based on the value your product brings to your real-life customers. When you verify your hypothesis, you confirm that the product has real-world value, thus you have a higher chance of product success.

What Is a Verifiable Value Hypothesis?

A value hypothesis is an educated guess about the value proposition of your product. When you verify your hypothesis, you're using evidence to prove that your assumption is correct. A hypothesis is verifiable if it does not prove false through experimentation or is shown to have rational justification through data, experiments, observation, or tests.

The most significant benefit of verifying a hypothesis is that it helps you avoid product failure and helps you build your product to your customers’ (and potential customers’) needs.

Verifying your assumptions is all about collecting data. Without data obtained through experiments, observations, or tests, your hypothesis is unverifiable, and you can’t be sure there will be a market need for your product.

A Verifiable Value Hypothesis Minimizes Risk and Saves Money

When you verify your hypothesis, you’re less likely to release a product that doesn’t meet customer expectations—a waste of your company’s resources. Harvard Business School explains that verifying a business hypothesis “...allows an organization to verify its analysis is correct before committing resources to implement a broader strategy.”

If you verify your hypothesis upfront, you’ll lower risk and have time to work out product issues.

UserVoice Validation makes product validation accessible to everyone. Consider using its research feature to speed up your hypothesis verification process.

Value Hypotheses vs. Growth Hypotheses

Your value hypothesis focuses on the value of your product to customers. This type of hypothesis can apply to a product or company and is a building block of product-market fit.

A growth hypothesis is a guess at how your business idea may develop in the long term based on how potential customers may find your product. It’s meant for estimating business model growth rather than individual products.

Because your value hypothesis is really the foundation for your growth hypothesis, you should focus on value hypothesis tests first and complete growth hypothesis tests to estimate business growth as a whole once you have a viable product.

4 Tips to Create and Test a Verifiable Value Hypothesis

A verifiable hypothesis needs to be based on a logical structure, customer feedback data, and objective safeguards like creating a minimum viable product. Validating your value significantly reduces risk. You can prevent wasting money, time, and resources by verifying your hypothesis in early-stage development.

A good value hypothesis utilizes a framework (like the template below), data, and checks/balances to avoid bias.

1. Use a Template to Structure Your Value Hypothesis

By using a template structure, you can create an educated guess that includes the most important elements of a hypothesis—the who, what, where, when, and why. If you don’t structure your hypothesis correctly, you may only end up with a flimsy or leap-of-faith assumption that you can’t verify.

A true hypothesis uses a few guesses about your product and organizes them so that you can verify or falsify your assumptions. Using a template to structure your hypothesis can ensure that you’re not missing the specifics.

You can’t just throw a hypothesis together and think it will answer the question of whether your product is valuable or not. If you do, you could end up with faulty data informed by bias, a skewed significance level from polling the wrong people, or only a vague idea of what your customer would actually pay for your product.

A template will help keep your hypothesis on track by standardizing the structure of the hypothesis so that each new hypothesis always includes the specifics of your client personas, the cost of your product, and client or customer pain points.

A value hypothesis template might look like:

[Client] will spend [cost] to purchase and use our [title of product/service] to solve their [specific problem] OR help them overcome [specific obstacle].

An example of your hypothesis might look like:

B2B startups will spend $500/mo to purchase our resource planning software to solve resource over-allocation and employee burnout.

By organizing your ideas and the important elements (who, what, where, when, and why), you can come up with a hypothesis that actually answers the question of whether your product is useful and valuable to your ideal customer.

2. Turn Customer Feedback into Data to Support Your Hypothesis

Once you have your hypothesis, it’s time to figure out whether it’s true—or, more accurately, prove that it’s valid. Since a hypothesis is never considered “100% proven,” it’s referred to as either valid or invalid based on the information you discover in your experiments or tests. Additionally, your results could lead to an alternative hypothesis, which is helpful in refining your core idea.

To support value hypothesis testing, you need data. To get it, you'll want to collect customer feedback. A customer feedback management tool can also make it easier for your team to access the feedback and create strategies to address customer concerns.

If you find that potential clients are not expressing pain points that could be solved with your product or you’re not seeing an interest in the features you hope to add, you can adjust your hypothesis and absorb a lower risk. Because you didn’t invest a lot of time and money into creating the product yet, you should have more resources to put toward the product once you work out the kinks.

On the other hand, if you find that customers are requesting features your product offers or pain points your product could solve, then you can move forward with product development, confident that your future customers will value (and spend money on) the product you’re creating.

A customer feedback management tool like UserVoice can empower you to challenge assumptions from your colleagues (often based on anecdotal information) which find their way into team decision making. Having data to reevaluate an assumption helps with prioritization, and it confirms that you’re focusing on the right things as an organization.

3. Validate Your Product

Since you have a clear idea of who your ideal customer is at this point and have verified their need for your product, it’s time to validate your product and decide if it’s better than your competitors’.

At this point, simply asking your customers if they would buy your product (or spend more on your product) instead of a competitor’s isn’t enough confirmation that you should move forward, and customers may be biased or reluctant to provide critical feedback.

Instead, create a minimum viable product (MVP). An MVP is a working, bare-bones version of the product that you can test out without risking your whole budget. Hypothesis testing with an MVP simulates the product experience for customers and, based on their actions and usage, validates that the full product will generate revenue and be successful.

If you take the steps to first verify and then validate your hypothesis using data, your product is more likely to do well. Your focus will be on the aspect that matters most—whether your customer actually wants and would invest money in purchasing the product.

4. Use Safeguards to Remain Objective

One of the pitfalls of believing in your product and attempting to validate it is that you’re subject to confirmation bias. Because you want your product to succeed, you may pay more attention to the answers in the collected data that affirm the value of your product and gloss over the information that may lead you to conclude that your hypothesis is actually false. Confirmation bias could easily cloud your vision or skew your metrics without you even realizing it.

Since it’s hard to know when you’re engaging in confirmation bias, it’s good to have safeguards in place to keep you in check and aligned with the purpose of objectively evaluating your value hypothesis.

Safeguards include sharing your findings with third-party experts or simply putting yourself in the customer’s shoes.

Third-party experts are the business version of seeking a peer review. External parties don’t stand to benefit from the outcome of your verification and validation process, so your work is verified and validated objectively. You gain the benefit of knowing whether your hypothesis is valid in the eyes of the people who aren’t stakeholders without the risk of confirmation bias.

In addition to seeking out objective minds, look into potential counter-arguments, such as customer objections (explicit or imagined). What might your customer think about investing the time to learn how to use your product? Will they think the value is commensurate with the monetary cost of the product?

When running an experiment to validate your hypothesis, it’s important not to elevate the importance of your beliefs over the objective data you collect. While it can be exciting to push for the validity of your idea, it can lead to false assumptions and the acceptance of weak evidence.

Validation Is the Key to Product Success

With your new value hypothesis in hand, you can confidently move forward, knowing that there’s a true need, desire, and market for your product.

Because you’ve verified and validated your guesses, there’s less of a chance that you’re wrong about the value of your product, and there are fewer financial and resource risks for your company. With this strong foundation and the new information you’ve uncovered about your customers, you can add even more value to your product or use it to make more products that fit the market and user needs.


Heather Tipton


The Product Management Dictionary: hypothesis testing

Learn about hypothesis testing in product management with our comprehensive dictionary.

Hypothesis testing is a vital part of product management, providing a framework to test assumptions and make data-driven decisions. In this article, we'll explore the concept of hypothesis testing, how it works, and some common metrics and KPIs that you can use to measure success.

Understanding Hypothesis Testing in Product Management

At its core, hypothesis testing is a way to validate assumptions by gathering data through experimentation. In product management, it's used to test the effectiveness of new features or product changes, allowing teams to make informed decisions about how to move forward.

The Importance of Hypothesis Testing

Hypothesis testing is critical in product management because it allows teams to determine whether a proposed change or feature will have an impact on key metrics, such as conversion rates or user engagement. By conducting experiments and collecting data, teams can make more informed decisions and minimize risk.

Key Terminology and Concepts

Before we dive into the process of hypothesis testing, it's important to understand some key terminology and concepts.

- Hypothesis: A testable statement about the expected outcome of an experiment.

- Null hypothesis: A hypothesis that proposes there is no significant difference between groups or variables.

- Alternative hypothesis: A hypothesis that proposes there is a significant difference between groups or variables.

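- Statistical significance: A measure of how unlikely a result would be if the null hypothesis were true. A result is called statistically significant when the probability of seeing an effect at least that large by chance alone (the p-value) falls below a threshold chosen in advance, commonly 0.05.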

One important thing to note is that hypothesis testing is not just about proving or disproving a hypothesis. It's also about gathering data and insights that can inform future product decisions. For example, if a hypothesis is not supported by the data, it's important to understand why and use that information to refine the hypothesis or adjust the product strategy.

Another key concept in hypothesis testing is sample size. The larger the sample size, the more reliable the results will be. However, it's also important to ensure that the sample is representative of the target population. For example, if a product is targeted towards a specific demographic, it's important to ensure that the sample includes a similar demographic.
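To put a number on "large enough," a common approximation for comparing two conversion rates estimates the users needed per group from the baseline rate, the rate you hope to reach, the significance threshold, and the desired power. The sketch below uses the standard normal-approximation formula; the function name and the default values of 0.05 and 0.8 are our own illustrative choices.

```python
import math
from statistics import NormalDist


def sample_size_per_group(p_baseline: float, p_expected: float,
                          alpha: float = 0.05, power: float = 0.8) -> int:
    """Approximate users needed per group to detect p_baseline -> p_expected."""
    if p_baseline == p_expected:
        raise ValueError("The two rates must differ.")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided significance threshold
    z_beta = NormalDist().inv_cdf(power)           # desired statistical power
    variance = p_baseline * (1 - p_baseline) + p_expected * (1 - p_expected)
    n = (z_alpha + z_beta) ** 2 * variance / (p_baseline - p_expected) ** 2
    return math.ceil(n)


# Detecting a lift from a 10% to a 12% conversion rate.
print(sample_size_per_group(0.10, 0.12))
```

The smaller the difference you want to detect, the more users you need, which is why tiny expected lifts often call for long-running tests.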

It's also important to consider the type of experiment being conducted. A randomized controlled trial is often considered the gold standard for hypothesis testing, as it allows for the most control over variables; an A/B test is the most common form of it in product work. However, there may be situations where randomly assigning users is impractical and other methods, such as cohort analysis, are more appropriate.

Ultimately, hypothesis testing is a powerful tool for product managers, allowing them to make data-driven decisions and minimize risk. By understanding key terminology and concepts, and carefully designing experiments, product teams can gather valuable insights that can inform future product decisions and drive success.

The Hypothesis Testing Process

The hypothesis testing process is a fundamental aspect of scientific research and experimentation. It is a systematic approach to testing a hypothesis, which involves several key steps. These steps help to ensure that the testing is rigorous, reliable, and can be replicated by others.

Formulating a Hypothesis

The first step in the hypothesis testing process is to formulate a hypothesis. This is a statement that describes the expected outcome of an experiment. A hypothesis should be specific, measurable, and testable. This means that you should be able to track results and draw meaningful conclusions from your data.

For example, if you are testing a new website design, your hypothesis might be: "Changing the website design will lead to a 20% increase in user engagement."

Designing an Experiment

Once you have formulated your hypothesis, the next step is to design an experiment to test it. This involves developing a plan for how you will collect data and what variables you will measure. Your experiment should be designed to test your hypothesis in a controlled and systematic way.

For example, if you are testing the impact of a new website design, you may choose to divide users into two groups: one group that sees the new design, and one group that sees the old design. From there, you can track user engagement for each group to determine the impact of the new design on overall engagement.

Collecting and Analyzing Data

Once you have designed your experiment, the next step is to collect and analyze data. This involves tracking and recording data in a clear and organized way, so that you can draw meaningful conclusions from your results.

For example, you may track user engagement for both the group that sees the new design and the group that sees the old design. You can then compare the results to determine whether the new design has a significant impact on user engagement.
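One common way to check whether the difference between the two groups is significant is a two-proportion z-test. The following is a minimal sketch using only the Python standard library; it assumes engagement is recorded as a yes/no outcome per user (for example, "engaged" or "not engaged"), and the counts in the usage line are made up for illustration.

```python
import math
from statistics import NormalDist


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Return (z, two-sided p-value) for the difference between two rates."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    # Pooled rate: the best single estimate if the null hypothesis is true.
    p_pool = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Old design: 200 engaged out of 2,000 users. New design: 250 out of 2,000.
z, p_value = two_proportion_z_test(200, 2000, 250, 2000)
print(f"z = {z:.2f}, p-value = {p_value:.4f}")
```

A p-value below the threshold you chose before the test (often 0.05) suggests the difference is unlikely to be due to chance alone. The approximation is only reasonable when each group has plenty of users and events.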

Drawing Conclusions and Making Decisions

The final step in the hypothesis testing process is to draw conclusions from your data and make informed decisions about how to move forward. If your data supports your hypothesis, you may choose to implement the change or feature that you tested. If your data does not support your hypothesis, you may choose to pivot and try a new approach.

Overall, the hypothesis testing process is a powerful tool for scientific research and experimentation. By following these key steps, you can ensure that your testing is rigorous, reliable, and can be replicated by others. This helps to build a strong foundation of knowledge and understanding in your field of study.

Types of Hypothesis Tests

As a product manager, it's important to use hypothesis testing to make data-driven decisions. There are several different types of hypothesis tests that you can use, each with its own strengths and weaknesses. Let's take a closer look at some of the most popular options.

A/B Testing

A/B testing is perhaps the most well-known type of hypothesis test, and involves randomly dividing users into two groups: one group that sees the original version of a feature, and one group that sees a variation of that feature. From there, you can track the performance of each group to determine which version of the feature outperforms the other.

For example, let's say you're testing a new button color on your website. You could randomly show half of your users the original blue button, and the other half a green button. By tracking the click-through rates of each group, you can determine which color is more effective at getting users to take the desired action.

Multivariate Testing

Multivariate testing is similar to A/B testing, but involves varying several elements of a page or feature at once and testing the resulting combinations simultaneously. This can be helpful when you want to learn which combination of elements performs best, although each added combination needs its own share of traffic.

For example, let's say you're testing a new landing page for your website. You could test multiple variations of the page, each with different headlines, images, and calls-to-action. By tracking the performance of each variation, you can determine which combination of elements is most effective at converting visitors into customers.
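The number of variations in a multivariate test grows quickly, because every option for one element is combined with every option for the others. The short sketch below enumerates the combinations for a landing page; the headlines, image names, and calls-to-action are placeholder values.

```python
from itertools import product

# Hypothetical page elements; the values are placeholders.
headlines = ["Save time", "Save money"]
images = ["team.png", "product.png"]
calls_to_action = ["Start free trial", "Book a demo"]

# Every combination of one headline, one image, and one call-to-action.
variants = [
    {"headline": h, "image": i, "cta": c}
    for h, i, c in product(headlines, images, calls_to_action)
]

print(len(variants))  # 8
```

With two options for each of three elements there are already eight variations to split traffic across, so it usually pays to limit a multivariate test to the elements you most expect to matter.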

Bayesian Hypothesis Testing

Bayesian hypothesis testing is a more complex type of hypothesis test that allows you to update your beliefs based on new data. This can be particularly useful when testing complex features or changes, where there may be several variables at play.

For example, let's say you're testing a new pricing model for your product. You could use Bayesian hypothesis testing to update your beliefs about the effectiveness of the new model as you collect more data. This would allow you to make more informed decisions about whether to stick with the new model or revert back to the old one.
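As a simple illustration of updating beliefs with data, the sketch below models each pricing model's purchase rate with a Beta distribution and estimates the probability that the new model converts better than the old one. It assumes a uniform Beta(1, 1) prior and a yes/no purchase outcome per visitor; the function name and the counts are hypothetical, and it compares purchase rates only, not revenue.

```python
import random


def prob_b_beats_a(conv_a: int, n_a: int, conv_b: int, n_b: int,
                   draws: int = 20000, seed: int = 0) -> float:
    """Estimate P(rate_B > rate_A) under independent Beta(1, 1) priors."""
    rng = random.Random(seed)
    wins = 0
    for _ in range(draws):
        # The posterior for each rate is Beta(1 + purchases, 1 + non-purchases).
        rate_a = rng.betavariate(1 + conv_a, 1 + n_a - conv_a)
        rate_b = rng.betavariate(1 + conv_b, 1 + n_b - conv_b)
        wins += rate_b > rate_a
    return wins / draws


# Old pricing: 50 purchases from 1,000 visitors. New pricing: 80 from 1,000.
print(prob_b_beats_a(50, 1000, 80, 1000))
```

You can rerun the calculation as more data arrives and watch the probability move, which is the "updating" that distinguishes this approach from a one-off significance test.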

Overall, there are many different types of hypothesis tests that you can use as a product manager. By understanding the strengths and weaknesses of each approach, you can make more informed decisions and drive better results for your business.

Common Metrics and KPIs in Hypothesis Testing

When it comes to hypothesis testing, there are a variety of metrics and KPIs that can help you measure the success of your experiments. In addition to tracking the specific outcomes you're interested in, it's important to keep an eye on some common metrics and KPIs to get a more complete picture of how your changes are impacting your product or service.

Conversion Rates

One of the most important metrics to track during hypothesis testing is conversion rates. Conversion rates are a measure of how many users take a desired action, such as making a purchase or signing up for a newsletter. By tracking conversion rates, you can determine whether a particular change or feature is leading to more conversions. For example, if you're testing a new checkout process, you might track conversion rates to see if the new process is leading to more completed purchases.

It's important to keep in mind that conversion rates can be impacted by a variety of factors, such as traffic sources, seasonality, and user behavior. To get a more accurate picture of how your changes are impacting conversion rates, it's often helpful to segment your data by different user groups or traffic sources.
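Segmenting is straightforward once each visit is labelled with its segment. Here is a minimal sketch that computes a conversion rate per segment from a list of visits; the segment names and the sample data are made up for illustration.

```python
from collections import defaultdict


def conversion_rate_by_segment(events):
    """events: iterable of (segment, converted) pairs; returns {segment: rate}."""
    visits = defaultdict(int)
    conversions = defaultdict(int)
    for segment, converted in events:
        visits[segment] += 1
        conversions[segment] += int(converted)
    return {segment: conversions[segment] / visits[segment] for segment in visits}


events = [
    ("organic", True), ("organic", False),
    ("paid", False), ("paid", False),
]
print(conversion_rate_by_segment(events))  # {'organic': 0.5, 'paid': 0.0}
```

Comparing the per-segment rates for each test group can reveal that a change helps one traffic source while doing nothing, or even harm, for another.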

User Engagement Metrics

In addition to conversion rates, it's important to track user engagement metrics during hypothesis testing. User engagement metrics, such as time on site or number of pages viewed, can provide insights into how engaged users are with your product. By tracking these metrics, you can determine whether a particular change or feature is leading to more engaged users.

For example, if you're testing a new homepage design, you might track metrics like bounce rate, time on site, and pages per session to see if the new design is leading to more engaged users. Keep in mind that user engagement metrics can also be impacted by a variety of factors, such as the quality of your content and the ease of navigation on your site.
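
To show how these metrics could be derived from raw session data, here is a small sketch. The session tuples are invented, and a "bounce" is assumed to mean a session with exactly one page view, which is a common but not universal definition.

```python
from statistics import mean

# Hypothetical sessions: (pages_viewed, seconds_on_site)
sessions = [(1, 10), (4, 180), (2, 60), (1, 5), (6, 300)]

def engagement_summary(sessions):
    """Bounce rate, mean pages per session, and mean time on site."""
    # Assumption: a bounce is a session with a single page view.
    bounces = sum(1 for pages, _ in sessions if pages == 1)
    return {
        "bounce_rate": bounces / len(sessions),
        "pages_per_session": mean(pages for pages, _ in sessions),
        "avg_seconds_on_site": mean(secs for _, secs in sessions),
    }

summary = engagement_summary(sessions)
print(summary)
```

Running the same summary for the old and new homepage designs gives you comparable numbers for each variant.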

Retention and Churn Rates

Retention and churn rates are also important metrics to track during hypothesis testing. The retention rate is the share of users who continue to use your product over a given period, while the churn rate is the share who stop using it. By tracking these metrics, you can determine whether a particular change or feature is leading to improved retention and reduced churn.

For example, if you're testing a new onboarding process, you might track retention and churn rates to see if the new process is leading to more users sticking around for the long term. Keep in mind that retention and churn rates can be impacted by a variety of factors, such as the quality of your product and the level of competition in your market.
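
As a simple sketch of the arithmetic, the snippet below computes retention and churn for a fixed cohort over one period. The cohort sizes are assumptions for illustration, and `retention_and_churn` is a hypothetical helper.

```python
def retention_and_churn(users_at_start, users_still_active):
    """Retention and churn over one period for a fixed cohort."""
    if users_at_start <= 0:
        raise ValueError("cohort must contain at least one user")
    retention = users_still_active / users_at_start
    # For a fixed cohort, churn is the complement of retention.
    return retention, 1 - retention

# Assumed cohorts: users who signed up under each onboarding flow.
old_onboarding = retention_and_churn(users_at_start=500, users_still_active=300)
new_onboarding = retention_and_churn(users_at_start=500, users_still_active=350)

print(f"Old onboarding: retention {old_onboarding[0]:.0%}, churn {old_onboarding[1]:.0%}")
print(f"New onboarding: retention {new_onboarding[0]:.0%}, churn {new_onboarding[1]:.0%}")
```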

Overall, tracking these common metrics and KPIs can help you get a more complete picture of how your hypothesis testing experiments are impacting your product or service. By analyzing these metrics alongside your specific outcomes of interest, you can make more informed decisions about how to optimize your product or service for success.

Hypothesis testing is a critical part of product management, allowing teams to validate assumptions and make data-driven decisions. By understanding the process of hypothesis testing, as well as common metrics and KPIs, you can make more informed decisions and drive the success of your product.


Product Talk

Make better product decisions.

Hypothesis Testing

As you get started with hypothesis testing, be sure to use these resources to make sure you get the most out of your experiments.

Start here to understand the big picture:

- Why You Aren’t Learning as Much as You Could from Your Experiments

And then dive into these to master the tactics:

- The 14 Most Common Hypothesis Testing Mistakes People Make (And How to Avoid Them)

- Not Knowing What You Want To Learn

- How to Improve Your Experiment Design (And Build Trust in Your Product Experiments)

- How to Estimate the Expected Impact of a Product Change

- Putting the 4 Levels of Product Analysis Into Practice: A Halloween-Themed Example

- What to Do When You Don’t Have Enough Traffic to A/B Test

More than just knowing the mechanics of how to run a good experiment, you also need to know what to test and when.

- Don’t Rely on Confidence Alone

- Run Experiments Before You Write Code

- Why You Can (And Should) Experiment When Building Enterprise Products

Hypothesis Testing Video Series

Hypothesis Testing: Levels of Product Analysis

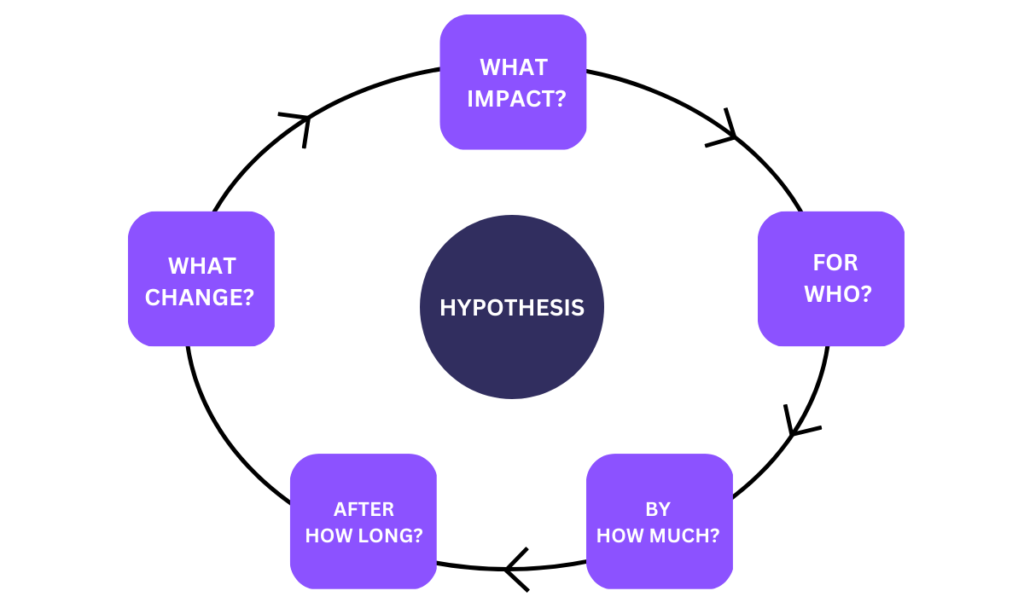

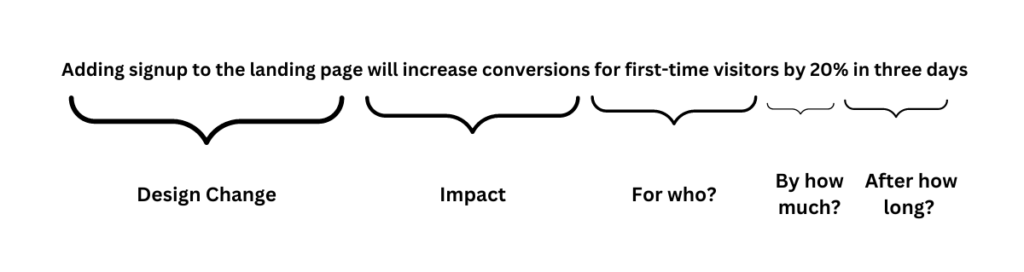

Hypothesis Testing: The 5 Components of a Good Hypothesis



How to write an effective hypothesis

Hypothesis validation is the bread and butter of product discovery. Understanding what should be prioritized and why is the most important task of a product manager. It doesn’t matter how well you validate your findings if you’re trying to answer the wrong question.

A question is as good as the answer it can provide. If your hypothesis is well written, but you can’t read its conclusion, it’s a bad hypothesis. Alternatively, if your hypothesis has embedded bias and answers itself, it’s also not going to help you.

There are many tools available to build hypotheses, and it would be exhausting to list them all. Apart from being superficial, focusing on the frameworks alone shifts the attention away from the hypothesis itself.

In this article, you will learn what a hypothesis is, the fundamental aspects of a good hypothesis, and what you should expect to get out of one.

The 4 product risks

Mitigating the four product risks is the reason why product managers exist in the first place and it’s where good hypothesis crafting starts.

The four product risks are assessments of everything that could go wrong with your delivery. Our natural thought process is to focus on the happy path at the expense of unknown traps. The risks are a constant reminder that knowing why something won’t work is probably more important than knowing why something might work.

These are the fundamental questions that should fuel your hypothesis creation:

Is it viable for the business?

Is this hypothesis the best one to validate now? Is this the most cost-effective initiative we can take? Will this answer help us achieve our goals? How much money can we make from it?

Is it relevant for the user?

Has the user manifested interest in this solution? Will they be able to use it? Does it solve our users’ challenges? Is it aesthetically pleasing? Is it vital for the user, or just a luxury?

Can we build it?

Do we have the resources and know-how to deliver it? Can we scale this solution? How much will it cost? Will it depreciate fast? Is it the most cost-effective solution? Will it deliver on what the user needs?

Is it ethical to deliver?

Is this solution safe both for the user and for the business? Is it inclusive enough? Is there a risk of public backlash? Is our solution enabling wrongdoers? Are we jeopardizing some to privilege others?


An endless number of questions can surface from these risks, and most of them will be context dependent. Your industry, company, marketplace, team composition, and even the type of product you handle will impose different questions, but the risks remain the same.

How to decide whether your hypothesis is worthy of validation

Assuming you came up with a hefty batch of risks to validate, you must now address them. To address a risk, you can do one of three things: collect concrete evidence that you can mitigate it, infer possible ways to mitigate it, or dig deeper into it because you’re not sure about its repercussions.

This three-way split can be illustrated by a CSD matrix:

Certainties

Everything you’re sure can help you mitigate a given risk. An example would be, on the “can we build it” risk, assessing whether your engineering team is capable of integrating with a certain API. If your team has done it a thousand times in the past, it’s not something worth validating. You can assume it is true and mark this particular risk as solved.

Suppositions

To put it simply, a supposition is something that you think you know, but you’re not sure. This is the most fertile ground to explore hypotheses, since this is the precise type of answer that needs validation. The most common usage of supposition is addressing the “is it relevant for the user” risk. You presume that clients will enjoy a new feature, but before you talk to them, you can’t say you are sure.

Doubts

Doubts are different from suppositions because they have no answer whatsoever. A doubt is an open question about a risk that you have no clue how to solve. A product manager who tries to mitigate the “is it ethical to deliver” risk in an industry they have absolutely no familiarity with is poised to generate a lot of doubts, but no suppositions or certainties. Doubts are not good hypothesis sources, since you have no idea how to validate them.

A hypothesis worth validating comes from a place of uncertainty, not confidence or doubt. If you are sure about a risk mitigation, coming up with a hypothesis to validate it is just a waste of time and resources. Alternatively, trying to come up with a risk assessment for a problem you are clueless about will probably generate hypotheses disconnected from the problem itself.
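
One way to picture the CSD split is as a simple tagged list, where only the suppositions are picked out as hypothesis candidates. This is a sketch for illustration. The class names, fields, and example statements are assumptions, not part of any established tool.

```python
from dataclasses import dataclass
from enum import Enum

class Bucket(Enum):
    CERTAINTY = "certainty"      # backed by concrete evidence
    SUPPOSITION = "supposition"  # a plausible answer that still needs validation
    DOUBT = "doubt"              # an open question with no candidate answer yet

@dataclass
class RiskItem:
    risk: str       # one of the four product risks
    statement: str  # what the team currently believes or asks
    bucket: Bucket

def hypothesis_candidates(items):
    """Only suppositions are worth turning into hypotheses."""
    return [item for item in items if item.bucket is Bucket.SUPPOSITION]

# Hypothetical entries, one per bucket.
matrix = [
    RiskItem("Can we build it?", "We can integrate with the API", Bucket.CERTAINTY),
    RiskItem("Is it relevant for the user?", "Clients will enjoy the new feature", Bucket.SUPPOSITION),
    RiskItem("Is it ethical to deliver?", "What could go wrong in this industry?", Bucket.DOUBT),
]

for item in hypothesis_candidates(matrix):
    print(f"{item.risk} -> {item.statement}")
```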

That said, it’s important to make it clear that suppositions are different from hypotheses. A supposition is merely a mental exercise, creativity executed. A hypothesis is a measurable, cartesian instrument to transform suppositions into certainties, therefore making sure you can mitigate a risk.

How to craft a hypothesis

A good hypothesis comes from a supposed solution to a specific product risk. That alone is good enough to build half of a good hypothesis, but you also need to have measurable confidence.


You’ll rarely transform a supposition into a certainty without an objective. Returning to the API example we gave when talking about certainties, you know the “can we build it” risk doesn’t need validation because your team has made tens of API integrations before. The “tens” is the quantifiable, measurable indication that gives you the confidence to be sure about mitigating a risk.

What you need from your hypothesis is exactly this quantifiable evidence, the number or hard fact able to give you enough confidence to treat your supposition as a certainty. To achieve that goal, you must come up with a target when creating the hypothesis. A hypothesis without a target can’t be validated, and therefore it’s useless.

Imagine you’re the product manager for an ecommerce app. Your users are predominantly mobile users, and your objective is to increase sales conversions. After some research, you came across the one-click checkout experience, made famous by Amazon but widely used by ecommerce sites everywhere.

You know you can build it, but it’s a huge endeavor for your team. You had better make sure your bet on one-click checkout will work out, otherwise you’ll waste a lot of time and resources on something that won’t be able to influence the sales conversion KPI.

You identify your first risk then: is it valuable to the business?

Literature is abundant on the topic, so you are almost sure that it will bear results, but you’re not sure enough. You can only suppose that implementing the one-click functionality will increase sales conversion.

After reviewing case studies and exploring your data, you have reason to believe that a 30 percent increase in sales conversion is a reasonable target. To make sure one-click checkout is valuable to the business then, you would have a hypothesis such as this:

We believe that if we implement a one-click checkout on our ecommerce, we can grow our sales conversion by 30 percent

This hypothesis can be adapted in all sorts of ways. If you’re trying to improve user experience, for example, you could make it look something like this:

We believe that if we implement a one-click checkout on our ecommerce, we can reduce the time to conversion by 10 percent

You can also validate different solutions against the same criteria, building an opportunity tree to explore multiple hypotheses and find the best one:

We believe that if we implement a user review section on the listing page, we can grow our sales conversion by 30 percent

Sometimes you’re clueless about impact, or maybe any win is a good enough win. In that case, your validation criterion can be a fact rather than a metric:

We believe that if we implement a one-click checkout on our ecommerce, we can reduce the time to conversion

As long as you are sure of the risk you’re mitigating, the supposition you want to transform into a certainty, and the criteria you’ll use to make that decision, you don’t need to worry so much about “right” or “wrong” when it comes to hypothesis formatting.
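
To make the structure of these hypotheses concrete, here is a minimal sketch that stores the risk, the change, the metric, and the target together, then checks an observed result against the target. The `Hypothesis` class, its fields, and the baseline and observed numbers are all assumptions made up for illustration.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class Hypothesis:
    risk: str                       # the product risk being mitigated
    change: str                     # the supposed solution
    metric: str                     # what will be measured
    target: Optional[float] = None  # relative improvement; None means any win counts
    higher_is_better: bool = True   # False for metrics such as time to conversion

    def is_validated(self, baseline: float, observed: float) -> bool:
        """Compare the observed relative improvement against the target."""
        improvement = (observed - baseline) / baseline
        if not self.higher_is_better:
            improvement = -improvement
        if self.target is None:
            return improvement > 0
        return improvement >= self.target

conversion = Hypothesis(
    risk="Is it viable for the business?",
    change="one-click checkout",
    metric="sales conversion",
    target=0.30,
)
speed = Hypothesis(
    risk="Is it relevant for the user?",
    change="one-click checkout",
    metric="time to conversion (seconds)",
    higher_is_better=False,
)

# Assumed measurements, for illustration only.
print(conversion.is_validated(baseline=0.020, observed=0.027))
print(speed.is_validated(baseline=200, observed=190))
```

This check only compares the measured change with the target. It does not account for sample size or statistical significance, which would need a separate test.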