Journal Acceptance Rates: Everything You Need to Know

Every journal's role is to publish and disseminate research in its field, and within that role is a sub-role, if you will: that of a "gatekeeper." In other words, the journal selects which research deserves to be published in its pages. Not every unsolicited paper can be accepted, so the journal's editorial team rejects articles, either before or after peer review.

In this article, we’ll discuss what a journal acceptance rate is, and what it measures. We’ll also touch on how to find a journal’s acceptance rate.

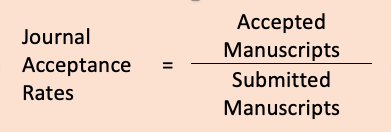

The acceptance rate (the proportion of manuscripts selected for publication from the pool of all submitted manuscripts) is an apparently straightforward measure that an author may take into consideration when deciding where to submit a manuscript. But does an acceptance rate have any meaning as an evaluative metric?

What do Acceptance Rates Measure?

The acceptance rate of a journal measures how many manuscripts are accepted for publication compared to how many are submitted. Even though it may seem straightforward, like most things in the research journal world, it's a little more complicated than that. But don't worry, we'll sort it all out.

To determine a journal’s acceptance rate, the number of accepted manuscripts is simply divided by the number of submitted manuscripts. For example, if in one year a journal accepts 60 manuscripts, but 500 are submitted that same year, the journal’s acceptance rate is:

60/500 = .12 or 12% acceptance rate
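The calculation above can be sketched as a small Python helper. This is only an illustration: the function name `acceptance_rate` and its input checks are assumptions, not part of any journal's or publisher's tooling.

```python
def acceptance_rate(accepted: int, submitted: int) -> float:
    """Return the share of submitted manuscripts that were accepted."""
    if submitted <= 0:
        raise ValueError("submitted must be a positive count")
    if not 0 <= accepted <= submitted:
        raise ValueError("accepted must lie between 0 and submitted")
    return accepted / submitted


# Example from the text: 60 accepted out of 500 submitted in one year.
rate = acceptance_rate(accepted=60, submitted=500)
print(f"{rate:.2f} or {rate:.0%} acceptance rate")  # prints "0.12 or 12% acceptance rate"
```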

Seems simple enough, right? But what does that number really mean? If the journal is relatively selective, as this acceptance rate indicates, what does it mean when a manuscript is rejected? It could be because the manuscript was poorly written, or it could be that it was an excellent manuscript that was simply outside the scope of the journal's focus. Is a journal's acceptance rate, then, really an accurate measure of its rigor in selecting manuscripts for publication?

Additionally, some journals calculate their acceptance rate differently, for example by dividing the number of accepted manuscripts by the sum of accepted and rejected manuscripts. With 60 accepted and 500 rejected manuscripts, this approach yields a lower rate than dividing the same 60 acceptances by 500:

60/560 ≈ .107 or 11% acceptance rate

So, in addition to knowing a journal's acceptance rate, it helps to know how that rate is calculated. Journals with lower acceptance rates are generally thought to be more "prestigious," but is that true? For instance, some journals let their editor decide which manuscripts are even sent on to the editorial team, and calculate their acceptance rate on those manuscripts only, which is a much smaller number than the total received. Other editors don't keep an accurate count and report an estimate of their acceptance rate instead. Also, if the journal is highly specialized and only a few scientists and researchers can write manuscripts within its scope, that would artificially increase the journal's acceptance rate.
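To see how much the choice of denominator matters, the sketch below computes three versions of the rate for one hypothetical journal: over all submissions, over decided manuscripts only (accepted plus rejected, so anything still under review drops out), and over only the manuscripts an editor forwarded after an initial screen. All counts, and the `SubmissionCounts` class itself, are invented for illustration.

```python
from dataclasses import dataclass


@dataclass
class SubmissionCounts:
    """Hypothetical one-year manuscript counts for a single journal."""
    submitted: int         # every manuscript received
    accepted: int
    rejected: int          # includes desk rejections; the rest are still pending
    passed_screening: int  # manuscripts the editor forwarded (all accepted ones among them)


def rate_over_submitted(c: SubmissionCounts) -> float:
    return c.accepted / c.submitted


def rate_over_decided(c: SubmissionCounts) -> float:
    # Pending manuscripts drop out of the denominator.
    return c.accepted / (c.accepted + c.rejected)


def rate_over_screened(c: SubmissionCounts) -> float:
    # Only manuscripts that survived the editor's screen are counted.
    return c.accepted / c.passed_screening


counts = SubmissionCounts(submitted=500, accepted=60, rejected=400, passed_screening=240)
for label, method in [("over submitted", rate_over_submitted),
                      ("over decided", rate_over_decided),
                      ("over screened", rate_over_screened)]:
    print(f"{label}: {method(counts):.1%}")  # 12.0%, 13.0%, 25.0% in turn
```

The same 60 acceptances produce anything from 12% to 25% here, which is why a reported rate means little without knowing its denominator.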

The bigger question, though, might be "Does it matter?" Does a journal's acceptance rate really have any meaning when you're evaluating which journal to submit your paper to?

What Our Research Shows

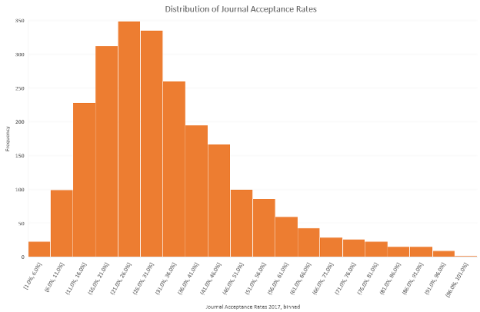

We looked at over 2,300 journals (more than 80% of them published by Elsevier) and calculated an average acceptance rate of 32%. Acceptance rates ranged from just over 1% to 93.2%.

Looking at different characteristics of these journals, however, we can draw some general conclusions.

- Larger journals have lower acceptance rates than smaller journals, between 10-60%

- Older journals have lower acceptance rates than newer journals, but not by much

- High-impact journals have relatively low acceptance rates, but there’s much variation still (5-50% acceptance)

- We saw no relationship between the share of published papers that were review papers and the acceptance rate

- Gold open access journals had higher acceptance rates than other models of open access journals. Take note that newer journals tend to follow the Gold open access model.

- No relationship was found between the breadth of scope for a journal and its acceptance rate. But journals within the scope of formal sciences (mathematics, economics, computer science) had lower acceptance rates than journals that focused on medicine and the life sciences.

For yet another take on this topic, check out our article on Journal Impact Factors.

How to Find Journal Acceptance Rates

While there's no comprehensive list of journal acceptance rates, per se, this information can often be found in journal editor reports, journal finder tools, and on metrics pages within the journal itself. The following tips can help you find these rates:

- Contact the journal: Many times, if you contact the editor of the journal, they will share their acceptance rate with you.

- Industry/field publishing resources: Check with library databases within your field. Sometimes you can find acceptance rates there.

- Google: Some journals publish their acceptance rate on their home page. Alternatively, if you Google a specific society, they may also publish the acceptance rates of associated journals.

- Elsevier Journal Acceptance Rate: We keep track of our journals' acceptance rates by dividing the total number of accepted articles by the total number of submitted articles.

Herbert, Rachel, Accept Me, Accept Me Not: What Do Journal Acceptance Rates Really Mean? (February 15, 2020). International Center for the Study of Research Paper No. Forthcoming, Available at SSRN: https://ssrn.com/abstract=3526365 or http://dx.doi.org/10.2139/ssrn.3526365

Open Access

Peer-reviewed

Research Article

Time to publish? Turnaround times, acceptance rates, and impact factors of journals in fisheries science

Roles: Conceptualization, Data curation, Formal analysis, Investigation, Methodology, Software, Validation, Visualization, Writing – original draft, Writing – review & editing

Current address: Southeast Fisheries Science Center, National Marine Fisheries Service, Beaufort, North Carolina, United States of America

Affiliation Department of Applied Ecology, North Carolina State University, Morehead City, North Carolina, United States of America

- Brendan J. Runde

- Published: September 23, 2021

- https://doi.org/10.1371/journal.pone.0257841

Selecting a target journal is a universal decision faced by authors of scientific papers. Components of the decision, including expected turnaround time, journal acceptance rate, and journal impact factor, vary in terms of accessibility. In this study, I collated recent turnaround times and impact factors for 82 journals that publish papers in the field of fisheries sciences. In addition, I gathered acceptance rates for the same journals when possible. Findings indicated clear among-journal differences in turnaround time, with median times-to-publication ranging from 79 to 323 days. There was no clear correlation between turnaround time and acceptance rate nor between turnaround time and impact factor; however, acceptance rate and impact factor were negatively correlated. I found no field-wide differences in turnaround time since the beginning of the COVID-19 pandemic, though some individual journals took significantly longer or significantly shorter to publish during the pandemic. Depending on their priorities, authors choosing a target journal should use the results of this study as guidance toward a more informed decision.

Citation: Runde BJ (2021) Time to publish? Turnaround times, acceptance rates, and impact factors of journals in fisheries science. PLoS ONE 16(9): e0257841. https://doi.org/10.1371/journal.pone.0257841

Editor: Charles William Martin, University of Florida, UNITED STATES

Received: July 6, 2021; Accepted: September 10, 2021; Published: September 23, 2021

Copyright: © 2021 Brendan J. Runde. This is an open access article distributed under the terms of the Creative Commons Attribution License , which permits unrestricted use, distribution, and reproduction in any medium, provided the original author and source are credited.

Data Availability: All relevant data are within the manuscript and its Supporting information files.

Funding: The author(s) received no specific funding for this work.

Competing interests: The authors have declared that no competing interests exist.

Introduction

Settling on a target journal for a completed scientific manuscript can be a non-scientific process. Some critical elements of the decision are intangible, e.g., attempting to reach a certain target audience or how well the paper “fits” within the scope of the journal [ 1 – 3 ]. Others, such as turnaround time, acceptance rate, and journal impact, can be measured but (other than impact) these metrics are often challenging to locate, leading authors to make decisions without full information [ 3 , 4 ].

Timeliness of publication has been reported as among the most important factors in choosing a target journal [ 4 – 8 ]. Prolonged peer review and/or production can be a major hindrance to authors [ 9 ]. Aarssen et al. [ 4 ] surveyed authors of ecological papers and found that 72.2% considered the likelihood of a rapid decision a "very important" or "important" factor in choosing a journal. In some fields, research outcomes may be time-sensitive, so lengthy review can render results obsolete even before publication [ 10 ]. Desires and expectations for turnaround time are often not met: Mulligan et al. [ 11 ] found that 43% of survey respondents rated the "time-to-first-decision" of their most recent article as "slow" or "very slow." Allen et al. [ 12 ] found that authors in the life sciences expect peer review to take less than 30 days (although this may be unrealistic). Moreover, Nguyen et al. [ 7 ] conducted a survey of authors in conservation biology in which the vast majority (86%) of respondents reported that their perceived optimal duration for peer review was eight weeks or under, though their experienced peer review time was on average 14.4 weeks. Over half of the respondents in Nguyen et al. [ 7 ] believed that lengthy peer reviews can have a detrimental impact on their career, including individuals who reported that the lack of timely publication obstructed their acceptance into educational institutions and caused delays to degree conferral.

Despite the obvious and documented importance of journal turnaround time, published per-journal values are almost non-existent (BR, personal observation). Some journals do publicize "time-to-first-decision" on their (or their publisher's) webpages (e.g., ICES Journal of Marine Science), but summary statistics of times to acceptance and publication remain generally unavailable to the public. Lewallen and Crane [ 13 ] recognized the importance of turnaround time and recommended that authors contact potential target journals and request information directly. However, this approach is time-consuming and unlikely to result in universal acquiescence from potential target journals. Moreover, because the duration of the review process is unpredictable, journals are more likely to give an average or a range as an indicator rather than guarantee a specific turnaround time (H. Browman, Ed. in Chief, ICES J. Mar. Sci., personal communication).

In many biological journals, individual papers contain metadata that can be used to generate turnaround times. Specifically, a majority of journals in the sciences report “Date Received,” “Date Accepted,” and at least one of “Date Published,” “Date Available,” or similar on the webpage or in the downloadable PDF of each paper (BR, personal observation). Aggregating these dates on a per-journal basis allows for the calculation of turnaround time statistics, which would be extremely valuable to authors seeking to identify an ideal target journal.

In this study, I present summary data on turnaround times for over 80 journals that regularly publish papers in fisheries science and the surrounding disciplines. I restrict my analyses to this field out of personal interest and because cross-discipline comparisons may not be apt. Moreover, my goal in this study is to provide field-specific information, and data on journals in other disciplines were beyond that scope. In addition, I provide per-journal information on impact factor and acceptance rate (where available), which are also key factors in deciding on a target journal [ 4 ]. The information presented herein is intended to be used in concert with other factors, including authors' notions of their paper's "fit," to refine the process of selecting a target journal.

Literature review and journal selection

I began by developing a list of journals that regularly publish papers in fisheries science. On 20 March 2021, I searched the Web of Science Core Collection (Clarivate Analytics; v.5.35) for published articles with "fisheries or fishermen or fishes or fish or fishing" as the topic. These terms were used by Branch and Linnell [ 14 ] for a similar purpose. I refined this search by selecting only "Articles" and "Proceedings Papers," thereby excluding reviews, meeting abstracts, brief communications, et cetera. I then truncated the search to include only documents that were published during 2010–2020. This search resulted in 242,280 published works. Using Web of Science's "Analyze Results" tool, I compiled a list of source titles (i.e., journals) that have published >400 papers meeting the specifics of my query. This threshold was used because it emerged as a natural break in the list of journals. A total of 85 journals met these requirements. I removed from this list journals that publish strictly in the field of food sciences (e.g., Food Chemistry) as well as hyper-regional journals that may not be of broad interest to authors in the field (though their exclusion is not indicative of their quality). Finally, I added several journals ad hoc that had not met the 400-paper minimum. These additions were included either because of my personal interest (e.g., Marine and Coastal Fisheries and Global Change Biology) or because of their relevance and value in among-journal comparisons (e.g., Science and Nature). After removals and additions, the list included 82 total journals.

Turnaround time.

In the spring of 2021, I accessed webpages of each of the 82 journals selected for inclusion. For each journal, I located publication history information (i.e., dates received, accepted, and published) on the webpages or in the PDFs of individual papers. I tabulated these dates for each paper. Generally, I aspired to gather dates for all papers published from present day back to at least the beginning of 2018. It was my explicit goal to compare timeliness of publication only for original research papers. For all journals where possible, I excluded papers if they were not original research articles. Some journals publish a higher proportion of reviews, brief communications, errata, or editorials, all of which likely have a shorter turnaround time than original research. Most journals list the paper type on each document, allowing for easy exclusion of papers that were not original research.

I examined distributions of time-to-acceptance (calculated as date accepted − date received) and time-to-publication (calculated as date published − date received). For date published, I used the earliest date after acceptance; i.e., if "date published online" and "date published in an issue" were both provided, I used only "date published online." Some articles reported acceptance times that are inconsistent with the usual paradigm of peer review (for instance, progressing from received to accepted in 0 days). It is highly unlikely (perhaps impossible) that an unsolicited original research article could be accepted or published within 30 days of submission. I assumed that any implausibly short publication histories were typographical errors, artifacts of that journal's methods for tracking papers, or papers that were simply not unsolicited original research articles. I therefore excluded from further analysis any papers with a time-to-acceptance or time-to-publication of fewer than 30 days; by-journal proportions of such papers ranged from zero to 0.06 (Table 1). Similarly, some papers reported publication times on the order of several years or more since receipt. While extreme delays in publication are certainly possible, I assumed that any paper with a time-to-publication of over 600 days was either a typographical error or the result of extenuating circumstances in which the journal staff and reviewers likely played no role. I therefore excluded papers with a time-to-acceptance or a time-to-publication of over 600 days from further analysis; by-journal proportions of such papers ranged from zero to 0.08 (Table 1). Paper-by-paper information on the duration from receipt until reviews are received is generally not available. However, this so-called "time-to-first-decision" is often available on journal websites. Where available, I obtained time-to-first-decision for each journal.
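The date arithmetic and the 30-day and 600-day exclusion rules described above can be sketched in Python as follows. This is an illustrative re-implementation under stated assumptions, not the study's own code (the study used R): the function name `turnaround`, the list-of-dates input, and the choice to keep papers at exactly 30 or 600 days are mine.

```python
from datetime import date
from typing import List, Optional, Tuple

MIN_DAYS = 30   # shorter histories were treated as implausible
MAX_DAYS = 600  # longer histories were treated as typos or extenuating circumstances


def turnaround(
    received: date, accepted: date, published_dates: List[date]
) -> Optional[Tuple[int, int]]:
    """Return (time-to-acceptance, time-to-publication) in days, or None if excluded.

    `published_dates` may hold several dates (e.g. online and in-issue);
    the earliest one on or after acceptance is used as the publication date.
    """
    after_acceptance = [d for d in published_dates if d >= accepted]
    if not after_acceptance:
        return None  # no usable publication date
    published = min(after_acceptance)
    to_accept = (accepted - received).days
    to_publish = (published - received).days
    # Exclude the paper if either interval is under 30 or over 600 days.
    if any(d < MIN_DAYS or d > MAX_DAYS for d in (to_accept, to_publish)):
        return None
    return to_accept, to_publish


# Hypothetical papers: the second one reports 0 days from receipt to acceptance.
print(turnaround(date(2019, 1, 10), date(2019, 4, 2), [date(2019, 7, 1), date(2019, 4, 20)]))  # (82, 100)
print(turnaround(date(2019, 1, 10), date(2019, 1, 10), [date(2019, 1, 15)]))  # None
```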

https://doi.org/10.1371/journal.pone.0257841.t001

I generated summary data for each journal in this study in R [ 15 ]. Specifically, I examined median time-to-acceptance, median time-to-publication, median time between acceptance and publication, proportion of papers published in under six months, and proportion of papers published in over one year. For the latter two metrics, I selected six months and one year because, though arbitrary, these durations may be representative of many authors' notions of short versus long turnaround times. Medians were used because distributions of time-to-acceptance and time-to-publication were usually skewed right (see Results).
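A Python sketch of the per-journal summary follows (the study itself used R). The 183-day and 365-day cut-offs are my assumed translations of "six months" and "one year," as is the use of strict inequalities at each cut-off; the sample durations are invented.

```python
from statistics import median
from typing import Dict, Sequence

SIX_MONTHS = 183  # days; assumed translation of "six months"
ONE_YEAR = 365    # days; assumed translation of "one year"


def summarize(times_to_accept: Sequence[int], times_to_publish: Sequence[int]) -> Dict[str, float]:
    """Summarize one journal's turnaround times (in days), one entry per paper."""
    if len(times_to_accept) != len(times_to_publish) or not times_to_publish:
        raise ValueError("need one acceptance and one publication time per paper")
    # Per-paper gap between acceptance and publication; its median is not
    # generally equal to the difference between the two medians.
    accept_to_publish = [p - a for a, p in zip(times_to_accept, times_to_publish)]
    n = len(times_to_publish)
    return {
        "median_time_to_acceptance": median(times_to_accept),
        "median_time_to_publication": median(times_to_publish),
        "median_acceptance_to_publication": median(accept_to_publish),
        "prop_published_under_6_months": sum(t < SIX_MONTHS for t in times_to_publish) / n,
        "prop_published_over_1_year": sum(t > ONE_YEAR for t in times_to_publish) / n,
    }


summary = summarize([82, 120, 150, 200, 310], [100, 140, 170, 260, 400])
for key, value in summary.items():
    print(f"{key}: {value}")
```

Computing the acceptance-to-publication gap paper by paper, rather than subtracting one median from the other, matches the text's "median time between acceptance and publication."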

Some journals included in this study have an extremely broad scope. Specifically, Nature, PeerJ, PLOS ONE, Proceedings of the National Academy of Sciences, and Science publish papers on topics reaching far beyond fisheries or ecology. I hypothesized that turnaround times of fisheries papers published in these journals may be dissimilar to turnaround times for these journals overall, since internal editorial structure at the journals may differ among disciplines. I queried Web of Science for "fisheries or fishermen or fishes or fish or fishing" for each of these five journals individually, obtained turnaround times for the resulting papers, and compared median times to publication for fisheries papers and for all papers in each journal.

COVID-19 pandemic effects

During the COVID-19 pandemic, some journals offered leniency to authors and reviewers when setting deadlines to account for the increased probability of extenuating personal or professional circumstances (B. Runde, personal observation). Because of this phenomenon, I hypothesized that turnaround times for each journal may differ between the periods before and after the start of the COVID-19 pandemic. Hobday et al. [ 16 ] showed that for seven leading journals in marine science, times in review were shorter in February–June 2020 as compared to the previous year. For each journal in my study, I compared times-to-publication of all papers published during the year prior to the pandemic (1 March 2019–29 February 2020) and the year following the beginning of the pandemic (1 March 2020–28 February 2021). As above, papers were excluded from this analysis if their time-to-publication was extremely short (< 30 days) or extremely long (> 600 days). I used two-sample Wilcoxon tests to check for differences in publication times between these two periods. Significance was evaluated at the α = 0.05 level. Analyses were performed in R [ 15 ].
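A two-sample Wilcoxon test in R (`wilcox.test` with two unpaired samples) is the Wilcoxon rank-sum, or Mann-Whitney, test. The Python sketch below is an illustrative re-implementation, not the code used in the study. It uses the large-sample normal approximation with a tie correction and a continuity correction, which, as I understand R's defaults, is what R applies when there are ties or at least one sample has 50 or more values. For small samples without ties, R computes an exact p-value instead, so results there will differ. The function name and example durations are hypothetical.

```python
import math
from typing import Sequence, Tuple


def rank_sum_test(x: Sequence[float], y: Sequence[float]) -> Tuple[float, float]:
    """Two-sided Wilcoxon rank-sum test via the normal approximation.

    Returns (W, p), where W is the rank sum of x minus n_x * (n_x + 1) / 2,
    the same form of statistic that R's wilcox.test reports as W.
    """
    n1, n2 = len(x), len(y)
    if n1 == 0 or n2 == 0:
        raise ValueError("both samples must be non-empty")
    n = n1 + n2
    # Pool the samples, remembering which group each value came from.
    pooled = sorted([(v, 0) for v in x] + [(v, 1) for v in y])
    ranks = [0.0] * n
    tie_term = 0.0  # sum of t^3 - t over each group of t tied values
    i = 0
    while i < n:
        j = i
        while j + 1 < n and pooled[j + 1][0] == pooled[i][0]:
            j += 1
        for k in range(i, j + 1):
            ranks[k] = (i + j) / 2 + 1  # average of the 1-based ranks i+1 .. j+1
        t = j - i + 1
        tie_term += t ** 3 - t
        i = j + 1
    rank_sum_x = sum(r for r, (_, group) in zip(ranks, pooled) if group == 0)
    w = rank_sum_x - n1 * (n1 + 1) / 2
    var = n1 * n2 / 12 * ((n + 1) - tie_term / (n * (n - 1)))
    if var == 0:
        return w, 1.0  # every value is tied, so there is nothing to rank
    diff = w - n1 * n2 / 2
    # Continuity correction: move the difference 0.5 toward zero.
    z = (diff - math.copysign(0.5, diff)) / math.sqrt(var) if diff != 0 else 0.0
    p = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return w, p


# Hypothetical times-to-publication (days) for one journal.
before = [150, 162, 170, 181, 190, 205, 214, 230]  # 1 Mar 2019 to 29 Feb 2020
during = [168, 175, 188, 199, 210, 222, 240, 251]  # 1 Mar 2020 to 28 Feb 2021
w, p = rank_sum_test(before, during)
print(f"W = {w}, p = {p:.3f}, different at alpha = 0.05: {p < 0.05}")
```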

Impact factors

The most widely used metric of impact, impact factor, is considered flawed by some scientists due to the disproportionate influence of review articles and its propensity for manipulation [ 17 – 19 ]. Nonetheless, impact factor is still listed on many journal webpages and is relied on by many authors [ 20 – 22 ]. I obtained impact factor for 2018 (the most recent year for which it was available for all journals) from https://www.resurchify.com/impact-factor.php . Impact factor is calculated as the number of citations received in a given year by all articles published in that journal during the previous two years, divided by the number of articles published in that journal during the previous two years.
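The two-year impact factor defined above is a simple ratio, sketched below with invented counts. In practice, which items count in the denominator depends on how "citable" documents are classified, and this sketch does not model that. The function name and the mapping-based inputs are assumptions for illustration.

```python
from typing import Mapping


def impact_factor(year: int, citations: Mapping[int, int], articles: Mapping[int, int]) -> float:
    """Two-year impact factor for `year`.

    citations[y]: citations received during `year` by articles published in year y.
    articles[y]:  number of articles published in year y.
    """
    window = (year - 1, year - 2)
    cited = sum(citations.get(y, 0) for y in window)
    published = sum(articles.get(y, 0) for y in window)
    if published == 0:
        raise ValueError("no articles published in the two-year window")
    return cited / published


# Hypothetical journal: 2018 citations to its 2017 and 2016 articles.
print(impact_factor(2018, citations={2017: 600, 2016: 500}, articles={2017: 200, 2016: 240}))  # 2.5
```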

Acceptance rates

I searched the web for reliable (i.e., not anecdotal) information on per-journal acceptance rates, which was generally limited. Most journals reject a percentage of submissions at the editorial stage prior to peer review (so-called "desk rejections") due to a lack of fit within the journal's scope, deficiencies in writing quality, and/or insufficient scientific merit [ 23 ]. Of course, rejections after peer review also occur, and overall rejection rates are increasingly made available on journals' or publishers' websites or in compendium papers [e.g., 20 ]. Unfortunately, rates of desk rejections are still rarely available online [ 23 ]. However, many journals' overall acceptance rates are reported either on their own page or on the publisher's website. For instance, Elsevier and Springer both offer acceptance rates for some (but not all) of their journals on their JournalFinder (https://journalfinder.elsevier.com/) and Journal suggester (https://journalsuggester.springer.com/) tools, respectively. I extracted reported acceptance rates wherever available and tabulated them per journal. In addition, I emailed the Editors-in-Chief and/or publishers of each of the journals included in this study asking for their journal's desk rejection rate and overall acceptance rate. When information was provided, it was tabulated on a per-journal basis. In some cases, acceptance rates provided via email were not equal to the rate provided on the journal's webpage. In these cases, the value provided by the editor or publisher was used, as it is likely more recent and thus more valid. In such cases, the two figures did not differ by more than 10%. It is possible that there are discrepancies in the calculation of acceptance rates, e.g., resubmissions may be tabulated differently among journals. I made no attempt to account for these potential differences in the present study.

Data analysis

I examined summary data for each journal and calculated correlations between median time-to-publication, difference in median publication time during COVID-19 as compared to the prior year, impact factor, and acceptance rate (where available). I plotted correlations using the R package ‘corrplot’ [ 24 ]. In addition, I plotted relationships between median time-to-publication and impact factor.
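Pearson's correlation for one pair of journal-level variables can be sketched as below (the study used R and the 'corrplot' package for plotting). Because acceptance rates were unavailable for some journals, the sketch drops any journal missing either value, that is, it uses pairwise-complete observations. That handling of missing values is my assumption, since the text does not say how it was done. The journal values are invented.

```python
import math
from typing import Optional, Sequence


def pearson_pairwise(x: Sequence[Optional[float]], y: Sequence[Optional[float]]) -> float:
    """Pearson correlation over journals where both values are present."""
    if len(x) != len(y):
        raise ValueError("x and y must describe the same journals")
    pairs = [(a, b) for a, b in zip(x, y) if a is not None and b is not None]
    if len(pairs) < 2:
        raise ValueError("need at least two complete pairs")
    n = len(pairs)
    mean_x = sum(a for a, _ in pairs) / n
    mean_y = sum(b for _, b in pairs) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in pairs)
    sxx = sum((a - mean_x) ** 2 for a, _ in pairs)
    syy = sum((b - mean_y) ** 2 for _, b in pairs)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for a constant variable")
    return sxy / math.sqrt(sxx * syy)


# Hypothetical journals: 2018 impact factor and overall acceptance rate.
impact = [1.2, 2.5, 3.1, 4.8, 0.9]
acceptance = [0.55, 0.40, None, 0.20, 0.60]  # None: acceptance rate not available
print(round(pearson_pairwise(impact, acceptance), 3))
```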

Results

From the 82 journals in this study, I extracted publication information for 83,797 individual papers. Median times-to-acceptance ranged from 64 to 269 days and median times-to-publication ranged from 79 to 323 days (Fig 1). Turnaround times for fisheries papers did not differ substantially from those for all papers in any of the five broad-scope journals in this study (Fig 2); therefore, for the other analyses in this study, data from these journals were not restricted to fish-only papers. The ranges of times-to-publication for each journal were generally broad (Fig 3); the middle 50% often spanned a range of 100 days or more. Distributions were typically skewed right. Virtually every journal in the study published one or more papers that took close to 600 days to publish (the maximum timespan retained in the analysis). Percentages of papers published in over one year ranged from 0 to 28%; percentages of papers published in under 6 months ranged from 2 to 99% (Table 1). Of 82 journals examined, 28 had significantly different (Wilcoxon p < 0.05) times-to-publication in the year following the start of the COVID-19 pandemic as compared to the previous year. Of these 28, 12 were significantly faster and 16 were significantly slower during the pandemic (Table 1).

https://doi.org/10.1371/journal.pone.0257841.g001

PNAS is Proceedings of the National Academy of Sciences.

https://doi.org/10.1371/journal.pone.0257841.g002

Central vertical lines represent medians, hinges represent the 25th and 75th percentiles, and lower and upper whiskers extend to the lowest and highest values, respectively, or to 1.5 times the inter-quartile range. Black dots represent papers that were outside 1.5 times the inter-quartile range. Boxes are shaded to correspond with 2018 Impact Factor, where darker green represents higher impact.

https://doi.org/10.1371/journal.pone.0257841.g003
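The box-and-whisker conventions in the Fig 3 caption can be computed as follows. The sketch assumes R's default (type 7) quantile definition, which I believe Python's `statistics.quantiles(..., method="inclusive")` reproduces. Whether the figure used exactly this definition is also an assumption, and the sample durations are invented.

```python
from statistics import quantiles
from typing import Sequence


def boxplot_stats(values: Sequence[float]) -> dict:
    """Median, hinges, whisker ends, and outliers for one journal's box."""
    data = sorted(values)
    # method="inclusive" matches R's default (type 7) quantile definition.
    q1, med, q3 = quantiles(data, n=4, method="inclusive")
    iqr = q3 - q1
    low_fence, high_fence = q1 - 1.5 * iqr, q3 + 1.5 * iqr
    inside = [v for v in data if low_fence <= v <= high_fence]
    return {
        "q1": q1,
        "median": med,
        "q3": q3,
        # Whiskers stop at the most extreme observations within 1.5 * IQR of the hinges.
        "whisker_low": min(inside),
        "whisker_high": max(inside),
        # Points beyond that range are drawn individually (the black dots).
        "outliers": [v for v in data if v < low_fence or v > high_fence],
    }


# Hypothetical times-to-publication (days) for one journal.
print(boxplot_stats([100, 120, 130, 150, 170, 200, 590]))
```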

I was able to obtain overall acceptance rate information for 60 journals in this study. Of these 60, I gathered desk rejection rates for 27 journals. For each of these 27, I calculated acceptance rates for papers that were peer-reviewed (i.e., not desk rejected). There was a weak positive correlation between this value and the proportion of articles that were peer-reviewed, implying that rates of the two types of rejections are not independent ( Fig 4A ). Higher impact journals tended to have higher desk rejection rates and lower percentages of acceptance given that peer review occurred. Of the 60 journals with overall acceptance rate information, I obtained time-to-first-decision for 48 journals; I plotted overall acceptance rate against these values ( Fig 4B ). There was no clear relationship between these variables; however, journals with higher impact tended to have lower acceptance rates and shorter times-to-first-decision.
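The acceptance rate given peer review follows from the overall acceptance rate and the desk rejection rate if every accepted paper was peer-reviewed (an assumption the text appears to imply): P(accepted | reviewed) = overall acceptance rate / (1 − desk rejection rate). Below is a sketch with invented rates. The function name is hypothetical.

```python
def acceptance_given_review(overall_acceptance: float, desk_rejection: float) -> float:
    """Share of peer-reviewed submissions that were accepted.

    Assumes no paper is accepted without review, so all accepted papers
    sit inside the reviewed share (1 - desk_rejection) of submissions.
    """
    reviewed_share = 1.0 - desk_rejection
    if not 0.0 < reviewed_share <= 1.0:
        raise ValueError("desk_rejection must be in [0, 1)")
    if not 0.0 <= overall_acceptance <= reviewed_share:
        raise ValueError("overall_acceptance cannot exceed the reviewed share")
    return overall_acceptance / reviewed_share


# Hypothetical journal: 60% desk-rejected, 20% of all submissions accepted.
print(acceptance_given_review(overall_acceptance=0.20, desk_rejection=0.60))  # 0.5
```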

A) The proportion of submissions that are peer-reviewed (i.e., 1 minus the desk rejection rate) versus the acceptance rate of submissions given that they are peer-reviewed for 27 journals that publish in fisheries and related topics. B) Time-to-first-decision (d) versus overall acceptance rate for 48 journals that publish in fisheries and aquatic sciences. Points in both panels are shaded to reflect 2018 Impact Factor of each journal, where darker green means higher impact.

https://doi.org/10.1371/journal.pone.0257841.g004

There was no strong correlation between any pairwise combination of median time-to-publication, difference in median publication time during COVID-19 as compared to the prior year, impact factor, and acceptance rate ( Fig 5 ). A moderate correlation (Pearson correlation = -0.43) was found between impact factor and overall acceptance rate, a phenomenon that has been documented previously [ 4 ]. The relationship between a journal’s median time-to-publication and impact factor was broadly scattered ( Fig 6 ).

Correlation bubbles are colored and shaded based on the calculated Pearson correlation coefficient, where negative correlations are pink, positive correlations are green, and darker shades and larger sizes represent stronger correlations.

https://doi.org/10.1371/journal.pone.0257841.g005

The inset panel shows a broader view that includes Science and Nature, which have high impact factors.

https://doi.org/10.1371/journal.pone.0257841.g006

Discussion

There are clearly intrinsic differences in turnaround time among journals that publish in fisheries science (Fig 3). The causes for these differences are varied, and some are artifacts of the journal's specific publishing paradigm. For instance, some journals publish uncorrected, non-typeset versions of accepted manuscripts very shortly after acceptance; for the purposes of this study, such papers were considered published even if they were not yet in their final form. I elected to consider any post-acceptance online version "published" because such versions can be shared and cited, thereby fulfilling the desires of many authors [ 7 ] and meeting one of the overall goals of science: disseminating research results. However, some journals do not publish any manuscript version other than the finalized document. Such journals have inherently longer turnaround times than those hosting unpolished versions online, and I made no attempt to specify or account for those differences in this study.

In addition to differences in which versions are published online first, differences in journal production formats can influence turnaround time. Some journals publish monthly, some publish quarterly, and some publish on a rolling basis (particularly those that are online only). Strictly periodical journals may choose to allow accepted papers to accumulate prior to publishing several in an issue all at once. Such journals, especially those with page limitations, may have a backlog of papers that are accepted but not yet published. I made no attempt to differentiate between journals based on these format differences, which certainly influence time-to-publication.

Similarly, some journals (or publishers) may enter revised manuscripts into their system as new submissions. This practice presumably deflates turnaround times artificially and may also artificially deflate acceptance rates. Unfortunately, to my knowledge no journals state publicly whether this is their modus operandi, precluding the possibility of applying any correction factor or per-journal caveat herein.

Beyond these differences in production time that stem from journal structure, the time it takes to publish a paper can be divided into the time the paper spends with editorial staff, with reviewers, and with authors after review. Author revision time may differ among journals; it is possible that reviews of manuscripts submitted to higher-impact journals are more thorough and therefore require longer response times. However, I found no association between impact factor and turnaround time (Fig 6), so it may be that no such differences exist. Further, extenuating circumstances on the part of the author(s) of a paper may result in extremely lengthy revision times. No data are available on per-journal rates of extension requests, but presumably these rates are low and approximately equivalent across journals. I removed from my dataset any papers that took longer than 600 days to publish. Even so, I present median turnaround times in this study because the median is robust to outliers.

In contrast to time with the authors, it seems likely that among-journal differences in time with editorial staff and reviewers are responsible for a large portion of differences in overall turnaround time. Delays at the editorial and reviewer level may be inherent to each journal, and could be a result of editorial workload (i.e., number of submissions per editor), level of strictness of the editor-in-chief when communicating with the associate editors, or differences in persistence on the part of the editors when asking reviewers to be expeditious. In addition, some journals may have a more difficult time finding a suitable number of willing reviewers; this may be especially true for lower-impact journals, although no association between impact factor and turnaround time was found. A majority of authors surveyed by Mulligan et al. [ 11 ] had declined to review at least one paper in the preceding 12 months, mainly due to the paper being outside the reviewer's area of expertise or the reviewer being too busy with work and/or prior reviewing commitments. If journals differ in how often their review requests are accepted, such differences could alter turnaround times.

In this study, I treated impact factor as a proxy for the quality of individual journals. While impact factor is often still used in this way [ 22 ], its limitations are well-documented by authors across many disciplines [e.g., 25 – 27 ]. For instance, the calculation of how many “citable” documents a single journal has produced is often dubious, as this may or may not include errata, letters, and book reviews depending on the publisher [ 28 ]; misclassification can inflate or deflate a given journal’s impact factor, and the rate of misclassification may depend on the individual journal’s publishing paradigm [ 29 ]. Alternatives to impact factor, such as SCImago Journal Rank (SJR) and H-index, have been proposed and may in some cases be more valid metrics of journal prestige or quality [ 30 , 31 ]. Comparison of these bibliometrics among journals in fisheries was beyond the scope of this paper, and I elected to use only impact factor given its ubiquity and despite its known disadvantages.

The COVID-19 pandemic had no discernible field-wide effect on turnaround time, and differences in turnaround time during the pandemic were not correlated with acceptance rate or impact factor ( Fig 5 ). Hobday et al. [ 16 ] found minor changes in turnaround time during COVID-19 (through June 2020) for seven marine science journals; they reported only slight disruptions to scientific productivity in this field. Overall, my results corroborate those of Hobday et al. [ 16 ], although some journals took significantly more or less time to publish during COVID-19. It is unclear whether these differences were causal, as non-pandemic factors may have affected turnaround times at these individual journals.

The turnaround times, acceptance rates, and impact factors presented in this paper are snapshots and may change over time. The degree to which these metrics change is likely variable among journals. However, barring major changes in journal formats or editorial regimes, the data presented here are probably applicable for the next several years at least. Indeed, median monthly turnaround times for most journals in this study were approximately static for the period from January 2018 to April 2021 ( Fig 7 ). Similarly, acceptance rates and impact factors [ 32 ] are generally strongly auto-correlated from one year to the next. I therefore suggest that the metrics presented here can be used by authors as a baseline, but if more than several years have transpired it may befit the reader to obtain updated information (particularly on impact factor and acceptance rate, which are generally more accessible than turnaround time). In addition, it is theoretically possible that this paper itself may alter turnaround times and/or acceptance rates for some journals. Enlightened readers may elect to change their submission habits in favor of certain journals that are more expeditious or that otherwise meet their priorities for a given paper. Authors without a preconceived notion of a specific target journal should still consider the paper’s “fit” to be the most important factor in their decision [ 1 ]. I suggest that after assembling a shortlist based on fit, authors should use the results of this paper to select a journal that best aligns with their priorities.

(Fig 7) The dashed horizontal line at 1.0 represents the baseline proportion. https://doi.org/10.1371/journal.pone.0257841.g007

Supporting information

https://doi.org/10.1371/journal.pone.0257841.s001

Acknowledgments

This manuscript benefited greatly from discussions with H. I. Browman, D. D. Aday, W. L. Smith, R. C. Chambers, N. M. Bacheler, K. W. Shertzer, S. R. Midway, S. M. Lombardo, and C. A. Harms. My thanks to K. W. Shertzer and H. I. Browman for reviewing early drafts of this paper. I am grateful to my advisor, J. A. Buckel, for allowing me the time to pursue this side project while I worked on my dissertation. Thanks to the numerous editors, publishers, and other journal staff who replied to my requests for journal information.


- 15. R Core Team. R: a language and environment for statistical computing. Vienna, Austria. URL http://www.R-project.org/ . 2021.

- 24. Wei T, Simko V. R package "corrplot": Visualization of a Correlation Matrix (Version 0.84). https://github.com/taiyun/corrplot . 2017.

- 28. Rossner M, Van Epps H, Hill E. Irreproducible results: a response to Thomson Scientific. Rockefeller University Press; 2008.

A guide to journal acceptance rates


Journals are responsible for publishing and disseminating research relevant to particular fields. An integral part of that role is gatekeeping: selecting which research should be published in the journal's pages. The journal's editorial team will reject some unsolicited submissions, either before or after peer review.

Journals do not set a fixed annual rejection rate; the rate falls within a range that develops naturally over time. Some journals set monthly targets, but these serve only to flag significant fluctuations, which occur regularly. This article focuses on the concept of journal acceptance rates and how they are calculated.

What do journal acceptance rates measure?

Journals typically use acceptance or rejection rates to track the proportion of papers they accept or reject and to spot noteworthy patterns. A journal's acceptance rate depends in part on the quality of the submissions it receives. Unlike impact factors, these rates are internal quality control measures.

A journal's acceptance rate indicates what percentage of all submissions are accepted for publication. Authors may consider this simple indicator when deciding where to submit a manuscript. To assist submitters, the ICSR strongly recommends that journals make their acceptance rates easily accessible to the public.

Is it possible to find the acceptance rate of a journal?

In some specialized fields, finding the acceptance rate of a particular journal can be challenging. Nevertheless, the information can matter, because acceptance rates are often linked to a publication's credibility: there is a common perception that journals with lower acceptance rates are more prestigious. Low acceptance rates tend to be seen in large, established, high-impact journals.

Most journals do not publicly report their acceptance rates, partly out of concern that a low rate might deter authors from submitting their work. It is also important to note that editors decline manuscripts for many reasons, and rejections often come with helpful feedback for the authors. Some niche journals decline papers mainly on the basis of their relevance to the journal's scope.

Fake websites abound online, and fraudulent journals target academics seeking to publish. Use well-known journal ranking resources when deciding where to publish. In some fields, acceptance rates are available from several sources, and they can be found using the following approaches:

- You can often find out the acceptance rate of a journal by contacting its editor.

- There may be databases within your field that contain information about acceptance rates for field publications.

- A Google search can often turn up the acceptance rate listed on a journal's webpage.

- Professional associations may list acceptance rates for their affiliated journals on their websites.

- For journals covered by the MLA International Bibliography, the "Directory of Periodicals" provides acceptance data for literature, linguistics, and folklore journals.

How are acceptance rates calculated?

Journals do not all calculate acceptance rates the same way. Some base the rate on all manuscripts received. Others base it only on the manuscripts the editor forwards to reviewers; because that pool is smaller than the total number of submissions, the resulting rate is typically higher than one based on all submissions. In addition, many editors rely on approximate estimates rather than keeping precise records.

Each journal accepts a different number of manuscripts depending on several practical factors, such as the quality of the manuscripts, the significance of the papers, and affiliations. Each of these factors affects the acceptance rate.

To calculate a journal's acceptance rate, divide the number of accepted submissions by the number of received submissions. For example, a journal that receives 1,000 manuscripts in a year and accepts 50 of them has an acceptance rate of 5%:

50/1000 = 0.05, or a 5% acceptance rate
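The calculation above can be sketched in Python. The second call illustrates the point about differing methods: assuming, hypothetically, that only 250 of the 1,000 submissions were sent out for review, a journal that reports its rate relative to reviewed manuscripts would quote a much higher figure for the same 50 acceptances. The function name is illustrative only.

```python
def acceptance_rate(accepted: int, denominator: int) -> float:
    """Accepted manuscripts divided by the chosen denominator."""
    if denominator <= 0:
        raise ValueError("denominator must be positive")
    return accepted / denominator

# Example from the text: 50 accepted out of 1,000 submitted in one year
print(f"{acceptance_rate(50, 1000):.0%}")  # 5%

# Hypothetical: only 250 of those 1,000 manuscripts were sent out for review.
# A rate reported relative to reviewed manuscripts would then be:
print(f"{acceptance_rate(50, 250):.0%}")  # 20%
```

Both figures describe the same journal and the same 50 acceptances; only the denominator differs, which is why it helps to know which method a journal uses before comparing rates.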

Acceptance rates generally differ between larger and smaller journals, typically within a 10-50% range. The difference between older and newer journals is more modest. Even among high-impact journals, acceptance rates still vary considerably (from 3% to 50%).


About Aayushi Zaveri

Aayushi Zaveri majored in biotechnology engineering. She is currently pursuing a master's degree in Bioentrepreneurship at Karolinska Institute. She is interested in health and diseases, global health, socioeconomic development, and women's health. As a science enthusiast, she is keen on learning more about the scientific world and wants to play a part in making a difference.


Don’t worry about journal acceptance rates – and here’s why

Deciding where to submit a manuscript? A journal acceptance rate is a useful signal to prospective authors of the probability of acceptance of their manuscript – no more and no less than that.

Rachel Herbert


“Gate-keeping” is the selection of research that is deemed worthy of and relevant for publication in a journal. Choosing from unsolicited manuscripts submitted to the journal, the editorial team accepts some and rejects others, often after peer review. A way to quantify this process is the “journal acceptance rate”.

The acceptance rate appears regularly on journal home pages and via journal finder tools. But what does this seemingly straightforward measure signal to an author considering where to submit a manuscript?

What do acceptance rates measure?

Several practical factors influence the number of manuscripts that each journal accepts; these include the quality, interest in or importance of submitted manuscripts, the number of and relationships to other journals in the same field, and any manuscript backlogs or page limitations. Each factor will have a varying impact on acceptance rate.

The drivers of submission rates might include: the size of the field, the number of and relationships among journals, journal “brand” awareness or perceived prestige, and the potential impact of successful publication for the author.

The Metrics Toolkit suggests that the rate can be used as a "proxy for perceived prestige and demand as compared to availability". Yet an acceptance rate is determined by two quantities, the number of manuscripts accepted and the number submitted, and the drivers of the two overlap.

And the concept of separating the “wheat from the chaff” is pushed to the limit when journals such as Nature and Science have acceptance rates of 10 per cent or less. Being rejected from extremely selective journals surely can’t tell us much about that manuscript.

Comparing acceptance rates with other journal attributes

In 2020, the International Center for the Study of Research at Elsevier explored a set of 2,371 journals – the majority of which (82 per cent) are published by Elsevier – and their acceptance rates in 2017. The journals represent a broad set of subject areas, journal types and ages, with all but the social sciences and arts and humanities well represented, a limitation of the findings presented here. The journals in the dataset had acceptance rates ranging from 1.1 per cent to 93.2 per cent in 2017, with an average of 32 per cent: overall, journals tend to accept fewer articles than they reject (Figure 1).

We then studied various attributes of the journals to see what aspects correlated with high or low acceptance rates.

Low acceptance rates are typically associated with very large, very old and very high-impact journals, as well as those that are not gold open access. That's a mixed bag of attributes. The relationship to impact is nuanced and not strong enough to be a clear signal. Importantly, even where relationships between journal attributes and acceptance rates could be identified, the variance in the acceptance rate is still so high that the findings are unlikely to be useful in the real world.

So where does that leave authors considering which journals they should submit their manuscript to?

We believe that journal acceptance rates do hold meaning; they indicate to prospective authors the probability of acceptance of their manuscript, based on historical success rates at the same journal. As such, we believe that journal acceptance rates have a place in the array of journal metrics. However, acceptance rate is not a signal of other attributes, and so it should be considered alongside other metrics and indicators but not conflated with them.

Rachel Herbert is a senior research evaluation manager, working within the International Center for the Study of Research , at Elsevier.


For more about journal acceptance rates and their interplay with other journal attributes, read the full report from the International Center for the Study of Research at Elsevier: "Accept me, accept me not: What do journal acceptance rates really mean?"


Kathryn A. Martin Library


Scholarly Publishing


Article Processing Charges (APCs)

An Article Processing Charge (APC) is a publishing fee charged to authors who publish in an open access journal. The fee shifts the journal's production cost to the author and replaces the subscription charges that libraries and researchers would otherwise pay to access an article behind a paywall.

Acceptance Rates

The method for computing acceptance rates may vary among journals.

Typically it refers to the number of manuscripts accepted for publication relative to the number of manuscripts submitted within the last year.

- Browse the 'information for authors' section of a journal's website

- Do a Google search for: Journal Title "acceptance rate"

More online and print sources from various fields can be found at these library FAQ pages: St. John's University: Journal Rankings and Acceptance Rate

University of North Texas Library: Journal Acceptance Rates

The American Psychological Association (APA) provides historical data on its affiliated journals; the data can be found in the Journal Statistics and Operations Data.

Peer Review Status & Distribution

Additional Acceptance Rate Figures

Where to publish, the right journal for your article.

Our scholarly communication blog offers 10 sources:

https://lib.d.umn.edu/scholarly-communications/right-journal-your-article


- Last Updated: Nov 7, 2023 3:55 PM

- URL: https://libguides.d.umn.edu/scholarly_publishing


Measuring Research Impact and Quality


Journal acceptance rates: basics

Definition: The number of manuscripts accepted for publication compared to the total number of manuscripts submitted in one year. The exact method of calculation varies depending on the journal. Journals with lower article acceptance rates are regarded as more prestigious.

More information

- Accept me, accept me not: What do journal acceptance rates really mean? "Study considers what journal acceptance rates can tell a submitting author about a journal"

- How to interpret acceptance rates.

Check the publisher's website for the journal to see if the acceptance rates are listed. Currently, only a few publishers list their journals' acceptance rates online, but the numbers are increasing. Following are a few examples.

- American Association for the Advancement of Science (AAAS) Journals

- Journal Insights (Elsevier) Provides acceptance rates for some of the journals published by Elsevier.

- Taylor and Francis journals: click on Journal Metrics in the left column on the informational page for a specific journal title.

E-mail the editor of the journal to request the acceptance rates.

- The name of the editor should be listed on the journal website.

- In the e-mail include the reason why you are requesting acceptance rates and the years needed.

- Most but not all editors will provide acceptance rates.

Business journals

- Cabell's Directory of Publishing Opportunities in Accounting

- Cabell's Directory of Publishing Opportunities in Computer Science and Business Information Systems

- Cabell's Directory of Publishing Opportunities in Economics and Finance

- Cabell's Directory of Publishing Opportunities in Management

- Cabell's Directory of Publishing Opportunities in Marketing

Education/psychology journals

- Cabell's Directory of Publishing Opportunities in Educational Curriculum and Methods

- Cabell's Directory of Publishing Opportunities in Educational Psychology and Administration

- Cabell's Directory of Publishing Opportunities in Educational Technology and Library Science

- American Psychological Association Journal Statistics and Operations Data Annual report on American Psychological Association journals. Includes information about rejection rates, publication lag time and related statistics.

- Cabell's Directory of Publishing Opportunities in Psychology: print copy shelved in Ellis Library Reference (call number BF76.7 .C33); latest year available: 2006.

Health Science/medical journals

The following link is to a page from the Health Sciences Library pertaining to journal acceptance rates in health science journals.

- Promotion & Tenure Resources for Research/Scholarship Assessment

Humanities journals

- MLA Directory of Periodicals Lists the acceptance rate for some selected periodicals in literature, language, linguistics, folklore.


- Last Updated: Apr 2, 2024 11:01 AM

- URL: https://libraryguides.missouri.edu/impact

Acceptance rates of peer-reviewed journals

by Esther van de Vosse | Jan 26, 2021 | Publication

Did you write an excellent manuscript with groundbreaking data that will have a great impact on research in your field? In that case you should definitely submit your manuscript to an extremely high-ranking journal, such as Nature or Science or the top-ranking journal in your field. If, however, your data are good but not expected to result in widely shared press releases and interviews in major newspapers, you may want to submit your manuscript to a good or excellent journal where you have a realistic chance of getting it accepted for publication. To select a relevant journal, start by reading my tips, as they will greatly improve your chances of getting your manuscript accepted and reduce the frustration caused by rejected articles.

Acceptance rates of high-ranking journals are low. For several journals, studies have reported the number of submitted manuscripts, the number sent out for peer review, and the final number accepted for publication. One of these studies analysed the Nature journals. From March 2015 to February 2017, 128,454 manuscripts were submitted, of which 79% were rejected outright. After peer review, another 56% were rejected, leading to an overall acceptance rate of about 12%. For the journal Nature itself, this rate was less than 7% of the 20,406 manuscripts submitted.

Many journals publish their acceptance rates on their websites, although it is sometimes not clear whether these refer to percentage of peer-reviewed manuscripts or percentage of total manuscripts submitted. Below I have indicated the acceptance rates of a number of life sciences and medical journals, with a link to the source of the information.

Acceptance rates according to journal websites (data from February 2023)

Science rejects about 84% of submitted manuscripts during the initial screening stage, and accepts 6.1% of the original research papers submitted (data of 2022).

Nature lists acceptance rates for various years on its website; the most recent year with data is 2017, when the acceptance rate was 7.6%.

The British Medical Journal (BMJ) accepts about 7% of all the 7000-8000 manuscripts submitted each year, but many of these are not research articles. Only 4% of research articles are accepted.

Other journals in the BMJ group: Gut 12% acceptance in 2022, BMJ Open 47% in 2022, BMJ Case Reports 38% in 2022, BMJ Global Health 14% in 2022.

The New England Journal of Medicine (NEJM) receives more than 16,000 research and other submissions for publication each year. About 5% of the original research submissions are accepted for publication.

PLOS ONE is a respected journal with a very broad scope that has an acceptance rate of over 48% (in 2020).

PLOS Medicine has an acceptance rate of about 10%.

The Journal of the American Medical Association (JAMA)’s acceptance rate is 11% of the more than 7,000 major manuscripts it receives annually, and only 4% of the more than 4,400 research papers received.

Acceptance rates of some Elsevier journals: The Lancet has an acceptance rate of about 5%. The Journal of Pediatrics: 14.7% in 2022. Biomaterials: 14.2% in 2022. Gastroenterology: 10-12%.

The Journal of Adolescent Health reported an acceptance rate of 15% in 2010; I could not find more recent data.
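Because some journals report acceptance relative to all submissions and others relative to peer-reviewed manuscripts, it can help to convert between the two. The sketch below assumes that every accepted paper went through peer review and that the screening share and the overall rate refer to the same pool of submissions. For Science, the 84% figure refers to submitted manuscripts while the 6.1% figure refers to original research papers, so the result is only a rough indication. The function name is hypothetical.

```python
def rate_among_reviewed(overall_rate: float, desk_rejection_share: float) -> float:
    """Convert an acceptance rate over all submissions into one over reviewed
    manuscripts, assuming every accepted paper was peer reviewed and both
    figures refer to the same pool of submissions."""
    reviewed_share = 1.0 - desk_rejection_share  # fraction sent to peer review
    if reviewed_share <= 0:
        raise ValueError("no manuscripts reach peer review")
    # overall_rate = reviewed_share * rate_among_reviewed, solved for the latter
    return overall_rate / reviewed_share

# Science figures quoted above (2022): about 84% rejected at initial screening,
# 6.1% accepted overall. Treating both as shares of the same submissions:
implied = rate_among_reviewed(0.061, 0.84)
print(f"Implied acceptance among reviewed manuscripts: {implied:.0%}")  # 38%
```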

Acceptance rates of journals known as (previously) predatory journals

Beware of predatory journals that will accept anything; these are very low-quality journals.

The average acceptance rate of Dove Press journals was 32% in 2022. Dove Press was originally on the list of predatory publishers but has since been removed.

The acceptance rates of all Hindawi journals can be found on one page and range from 8% to 62%. Note: some Hindawi journals have been suspected to be predatory journals, but are now considered borderline.

Presubmission inquiries

Authors who would like to know whether their manuscript would be appropriate for publication in a specific journal can sometimes send a presubmission inquiry to the editors. The minimum requirements for presubmission inquiries are often an abstract and a cover letter. Find out on the website of the journal whether this is an option.




Editorial criteria and processes

This document provides an outline of the editorial process involved in publishing a scientific paper (Article) in Nature , and describes how manuscripts are handled by editors between submission and publication.

Editorial processes are described for the following stages: At submission | After submission | After acceptance

At submission

Criteria for publication

The criteria for publication of scientific papers (Articles) in Nature are that they:

- report original scientific research (the main results and conclusions must not have been published or submitted elsewhere)

- are of outstanding scientific importance

- reach a conclusion of interest to an interdisciplinary readership.

Further editorial criteria may be applicable for different kinds of papers, as follows:

- large dataset papers: should aim to either report a fully comprehensive data set, defined by complete and extensive validation, or provide significant technical advance or scientific insight.

- technical papers: papers that make solely technical advances will be considered in cases where the technique reported will have significant impacts on communities of fellow researchers.

- therapeutic papers: in the absence of novel mechanistic insight, therapeutic papers will be considered if the therapeutic effect reported will provide significant impact on an important disease.

Articles published in Nature have an exceptionally wide impact, both among scientists and, frequently, among the general public.

Who decides which papers to publish?

Nature ’s aim is to publish the best research across a wide range of scientific fields, which means it has to be highly selective. As a result, only about 8% of submitted manuscripts will be accepted for publication. Most submissions are declined without being sent out for peer review.

Nature does not employ an editorial board of senior scientists, nor is it affiliated with a scientific society or institution; thus its decisions are independent and unbiased by the scientific or national prejudices of particular individuals. Decisions are quicker, and editorial criteria can be made uniform across disciplines. The judgement about which papers will interest a broad readership is made by Nature's editors, not its referees. One reason is that each referee sees only a tiny fraction of the papers submitted and is deeply knowledgeable about one field, whereas the editors, who see all the papers submitted, can have a broader perspective and a wider context from which to view the paper.

How to submit an Article

Authors should use the formatting guide section to ensure that the level, length and format (particularly the layout of figures and tables and any Supplementary Information) conform to Nature's requirements, at submission and each revision stage. This will reduce delays. Manuscripts should be submitted via our online manuscript submission system. Although optional, the cover letter is an excellent opportunity to briefly discuss the importance of the submitted work and why it is appropriate for the journal. Please avoid repeating information that is already present in the abstract and introduction. The cover letter is not shared with the referees, and should be used to provide confidential information such as conflicts of interest and to declare any related work that is in press or submitted elsewhere. All Nature editors report to the Editor of Nature, who sets Nature's publication policies. Authors submitting to Nature do so on the understanding that they agree to these policies.

After submission

What happens to a submitted article.

The first stage for a newly submitted Article is that the editorial staff consider whether to send it for peer-review. On submission, the manuscript is assigned to an editor covering the subject area, who seeks informal advice from scientific advisors and editorial colleagues, and who makes this initial decision. The criteria for a paper to be sent for peer-review are that the results seem novel, arresting (illuminating, unexpected or surprising), and that the work described has both immediate and far-reaching implications. The initial judgement is not a reflection on the technical validity of the work described, or on its importance to people in the same field. Special attention is paid by the editors to the readability of submitted material. Editors encourage authors in highly technical disciplines to provide a slightly longer summary paragraph that clearly describes the basic background to the work and how the new results have affected the field, in a way that enables nonspecialist readers to understand what is being described. Editors also strongly encourage authors in appropriate disciplines to include a simple schematic summarizing the main conclusion of the paper, which can be published with the paper as Supplementary Information. Such figures can be particularly helpful to nonspecialist readers of cell, molecular and structural biology papers. Once the decision has been made to peer-review the paper, the choice of referees is made by the editor who has been assigned the manuscript, who will be handling other papers in the same field, in consultation with editors handling submissions in related fields when necessary. Most papers are sent to two or three referees, but some are sent to more or, occasionally, just to one. Referees are chosen for the following reasons:

- independence from the authors and their institutions

- ability to evaluate the technical aspects of the paper fully and fairly

- currently or recently assessing related submissions

- availability to assess the manuscript within the requested time.

Referees' reports

The ideal referee's report indicates:

- who will be interested in the new results and why

- any technical failings that need to be addressed before the authors' case is established.

Although Nature's editors themselves judge whether a paper is likely to interest readers outside its own immediate field, referees often give helpful advice, for example if the work described is less significant than the editors thought, or if the authors have undersold its significance. Nature's editors regard it as essential that any technical failings noted by referees are addressed, but they are not bound as strictly by the referees' editorial opinions on whether the work belongs in Nature.

Competitors

Some potential referees may be engaged in competing work that could influence their opinion. To avoid such conflicts of interest, Nature requires potential referees to disclose any professional and commercial competing interests before agreeing to review a paper, and requires referees not to copy papers or to circulate them to unnamed colleagues. Although Nature's editors make every effort to ensure that manuscripts are assessed fairly, Nature is not responsible for the conduct of its referees. Nature welcomes authors' suggestions for suitable independent referees (with their contact details), but editors are free to decide for themselves which referees to use. Nature editors will normally honour requests that a paper not be sent to one or two (but no more) competing groups for review.

Transparent peer review

Nature uses a transparent peer review system: for manuscripts submitted from February 2020 onwards, we can publish the reviewers' comments to the authors and the authors' rebuttal letters for published original research articles. Authors may opt out of this scheme at the completion of the peer review process, before the paper is accepted. If the manuscript was transferred to us from another Nature Research journal, we will not publish reviewer reports or author rebuttals for versions of the manuscript considered by the originating Nature Research journal. The reviewer comments and rebuttals are published online as a supplementary peer review file. Although we hope that the peer review files will provide a detailed and useful view into our peer review process, these files will not contain all the information considered in the editorial decision-making process, such as the discussions between editors, editorial decision letters, or any confidential comments made by reviewers or authors to the editors.

This scheme applies only to original research Articles, not to Review articles or other published content. For more information, please refer to our FAQ page.

Reviewer information

In recognition of the time and expertise our reviewers provide to Nature's editorial process, we formally acknowledge their contribution to the external peer review of articles published in the journal. All peer-reviewed content will carry an anonymous statement of peer reviewer acknowledgement, and for those reviewers who give their consent, we will publish their names alongside the published article. We will continue to publish peer reviewer reports where authors opt in to our separate transparent peer review scheme. In cases where authors opt in to publication of peer reviewer comments and reviewers opt in to being named, we will not link a reviewer's name to their report unless they choose to sign their comments to the author with their name. For more information, please refer to our FAQ page.

If reviewers wish to be named, their names will appear in alphabetical order at the end of the paper in a statement such as the following:

- Nature thanks [Name], [Name] and [Name] for their contribution to the peer review of this work.

Reviewers who wish to remain anonymous will be acknowledged using a slightly modified statement:

- Nature thanks [Name], [Name] and the other, anonymous, reviewer(s) for their contribution to the peer review of this work.

If no reviewers agree to be named, we will still acknowledge their valuable service using the statement below:

- Nature thanks the anonymous reviewers for their contribution to the peer review of this work.

Nature makes decisions about submitted papers as rapidly as possible. All manuscripts are handled electronically throughout the consideration process. Authors are usually informed within a week if the paper is not being considered. Most referees honour their prior agreement with Nature to deliver a report within two weeks or another agreed time limit, and send their reports online. Editors routinely make decisions very soon after receiving the reports, and Nature offers an advance online publication (AOP) service to an increasing number of manuscripts.

What the decision letter means

All Articles published in Nature go through at least one round of review, usually two or three, sometimes more. At each stage, the editor will discuss the manuscript with editorial colleagues in light of the referees' reports, and send a letter to the author offering one of the following options:

- The paper is accepted for publication without any further changes required from the authors.

- The paper is accepted for publication in principle once the authors have made some revisions in response to the referees’ comments. Under these circumstances, revised papers are not usually sent back to the referees because further technical work has not been required, but are accepted for publication once the editors have checked that the referees’ suggestions have been implemented and the paper is in the required format (the formatting guide section is helpful to this end).

- A final decision on publication is deferred, pending the authors’ response to the referees’ comments. Under these circumstances, further experiments or technical work are usually required to address some or all of the referees’ concerns, and revised papers are sent back to some or all of the referees for a second opinion. Revised papers should be accompanied by a point-by-point response to all the comments made by all the referees.

- The paper is rejected because the referees have raised considerable technical objections and/or the authors’ claim has not been adequately established. Under these circumstances, the editor’s letter will state explicitly whether or not a resubmitted version would be considered. If the editor has invited the authors to resubmit, authors must ensure that all the referees’ technical comments have been satisfactorily addressed (not just some of them), unless specifically advised otherwise by the editor in the letter, and must accompany the resubmitted version with a point-by-point response to the referees’ comments. Editors will not send resubmitted papers to the reviewers if it seems that the authors have not made a serious attempt to address all the referees’ criticisms.

- The paper is rejected with no offer to reconsider a resubmitted version. Under these circumstances, authors are strongly advised not to resubmit a revised version as it will be declined without further review. If the authors feel that they have a strong scientific case for reconsideration (if the referees have missed the point of the paper, for example) they can appeal the decision in writing. But in view of Nature's extreme space constraints and the large number of papers under active consideration at any one time, editors cannot assign a high priority to consideration of such appeals. The main grounds for a successful appeal for reconsideration are if the author can identify a specific technical or other point of interest which had been missed by the referees and editors previously. Appeals written in general or vague terms, or that contain arguments not relevant to the content of the particular manuscript, are not likely to be successful. Manuscripts cannot be submitted elsewhere while an appeal is being considered.

Editors' letters also contain detailed guidance about the paper's format and style where appropriate (see below), which should be read in conjunction with the manuscript formatting guide when revising and resubmitting. In replying to the referees' comments, authors are advised to use language that would not cause offence when their paper is shown again to the referees, and to bear in mind that if a point was not clear to the referees and/or editors, it is unlikely that it would be clear to the nonspecialist readers of Nature.

If Nature declines to publish a paper and does not suggest resubmission, authors are strongly advised to submit their paper for publication elsewhere. If an author wishes to appeal against Nature's decision, the appeal must be made in writing, not by telephone, and should be confined to the scientific case for publication. Nature's editors are unable to assign high priority to consideration of appeals.

Authors often ask for a new referee to be consulted, particularly in cases where two referees have been used and one is negative, the other positive. Nature is reluctant to consult new referees unless there is a particular, relevant area of scientific expertise that was lacking in the referees already used. Authors should note that, as Nature is an interdisciplinary journal, referees for a paper are chosen for different reasons: for example, a technical expert and a person who has a general overview of a field might both referee the same paper. A referee might be selected for expertise in only one area, for example to judge whether a statistical analysis is appropriate, or whether a particular technique that is essential to underpin the conclusion has been undertaken properly. This referee must be satisfied for the manuscript to be published, but as this referee may not know about the field concerned, their endorsement in isolation from the other referee(s) would not constitute grounds for publication. Editors' decisions are weighted according to the expertise of the referees, and not by a "voting" procedure.

Hence, Nature prefers to stick with the original referees of a particular paper rather than call in new referees to arbitrate, unless a referee can be shown in some specific way to be technically lacking or biased in judgement. New referees can often raise new sets of points, which complicates and lengthens the consideration process instead of simplifying it. If Nature's editors agree to reconsider a paper, the other original referee(s) will have the chance to see and comment on the report of the referee who is the subject of the complaint. If an author remains unsatisfied, they can write to the Editor, citing the manuscript reference number. In all these cases, it is likely that some time will elapse before Nature can respond, and the paper must not be submitted for publication elsewhere during this time.

After acceptance

See this document for a full description of what happens after acceptance and before publication.

Formats and lengths of papers

Space in Nature is extremely limited, and so format requirements must be strictly observed, as advised by the editor handling the submission, and detailed in the manuscript formatting guide.

Subediting of accepted papers

After a paper is accepted, it is subedited (copyedited) to ensure maximum clarity and reach, a process that enhances the value of papers in various ways. Nature's subeditors are happy to advise authors about the format of their Articles after acceptance for publication. Their role is to

- edit the language for maximum clarity and precision for those in other disciplines. Special care is given to papers whose authors’ native language is not English, and special attention is given to summary paragraphs.