Regression Analysis – Methods, Types and Examples

Regression Analysis

Regression analysis is a set of statistical processes for estimating the relationships among variables. It includes many techniques for modeling and analyzing several variables when the focus is on the relationship between a dependent variable and one or more independent variables (or ‘predictors’).

Regression Analysis Methodology

Here is a general methodology for performing regression analysis:

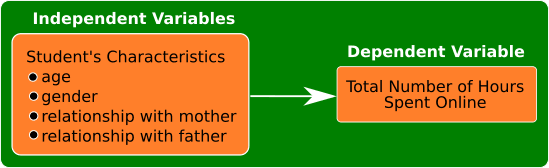

- Define the research question: Clearly state the research question or hypothesis you want to investigate. Identify the dependent variable (also called the response variable or outcome variable) and the independent variables (also called predictor variables or explanatory variables) that you believe are related to the dependent variable.

- Collect data: Gather the data for the dependent variable and independent variables. Ensure that the data is relevant, accurate, and representative of the population or phenomenon you are studying.

- Explore the data: Perform exploratory data analysis to understand the characteristics of the data, identify any missing values or outliers, and assess the relationships between variables through scatter plots, histograms, or summary statistics.

- Choose the regression model: Select an appropriate regression model based on the nature of the variables and the research question. Common regression models include linear regression, multiple regression, logistic regression, polynomial regression, and time series regression, among others.

- Assess assumptions: Check the assumptions of the regression model. Some common assumptions include linearity (the relationship between variables is linear), independence of errors, homoscedasticity (constant variance of errors), and normality of errors. Violation of these assumptions may require additional steps or alternative models.

- Estimate the model: Use a suitable method to estimate the parameters of the regression model. The most common method is ordinary least squares (OLS), which minimizes the sum of squared differences between the observed and predicted values of the dependent variable.

- Interpret the results: Analyze the estimated coefficients, p-values, confidence intervals, and goodness-of-fit measures (e.g., R-squared) to interpret the results. Determine the significance and direction of the relationships between the independent variables and the dependent variable.

- Evaluate model performance: Assess the overall performance of the regression model using appropriate measures, such as R-squared, adjusted R-squared, and root mean squared error (RMSE). These measures indicate how well the model fits the data and how much of the variation in the dependent variable is explained by the independent variables.

- Test assumptions and diagnose problems: Check the residuals (the differences between observed and predicted values) for any patterns or deviations from assumptions. Conduct diagnostic tests, such as examining residual plots, testing for multicollinearity among independent variables, and assessing heteroscedasticity or autocorrelation, if applicable.

- Make predictions and draw conclusions: Once you have a satisfactory model, use it to make predictions on new or unseen data. Draw conclusions based on the results of the analysis, considering the limitations and potential implications of the findings.
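The estimation, evaluation, and prediction steps above can be sketched in a few lines of plain Python. This is a minimal illustration for one predictor, not a full workflow: the data values and the names `fit_simple_ols` and `evaluate` are invented for the example, and in practice a statistics library would normally do this work.

```python
import math

# Hypothetical data, invented for illustration:
# advertising spend (x) and sales (y).
x = [1.0, 2.0, 3.0, 4.0, 5.0]
y = [2.1, 3.9, 6.2, 7.8, 10.1]


def fit_simple_ols(x, y):
    """Return (intercept, slope) minimizing the sum of squared residuals."""
    n = len(x)
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    return intercept, slope


def evaluate(x, y, intercept, slope, n_predictors=1):
    """Return R-squared, adjusted R-squared, RMSE and the residuals."""
    n = len(y)
    y_bar = sum(y) / n
    residuals = [yi - (intercept + slope * xi) for xi, yi in zip(x, y)]
    ss_res = sum(e ** 2 for e in residuals)
    ss_tot = sum((yi - y_bar) ** 2 for yi in y)
    r2 = 1 - ss_res / ss_tot
    adj_r2 = 1 - (1 - r2) * (n - 1) / (n - n_predictors - 1)
    rmse = math.sqrt(ss_res / n)
    return r2, adj_r2, rmse, residuals


b0, b1 = fit_simple_ols(x, y)
r2, adj_r2, rmse, residuals = evaluate(x, y, b0, b1)
prediction = b0 + b1 * 6.0  # prediction for a new, unseen x value
```

The residuals returned by `evaluate` are what the diagnostic step would inspect for patterns; with an intercept in the model they always sum to zero, so a plot against x or the fitted values is more informative than their mean.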

Types of Regression Analysis

Types of Regression Analysis are as follows:

Linear Regression

Linear regression is the most basic and widely used form of regression analysis. It models the linear relationship between a dependent variable and one or more independent variables. The goal is to find the best-fitting line that minimizes the sum of squared differences between observed and predicted values.

Multiple Regression

Multiple regression extends linear regression by incorporating two or more independent variables to predict the dependent variable. It allows for examining the simultaneous effects of multiple predictors on the outcome variable.
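As a rough sketch of how several predictors are handled at once, the least-squares coefficients can be obtained by solving the normal equations (X'X)b = X'y. The helper names and the data below are hypothetical, and the small Gaussian-elimination solver stands in for the numerical routines a statistics package would use.

```python
def solve_linear_system(a, b):
    """Solve a*z = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(n):
        # Swap in the row with the largest entry in this column.
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    # Back substitution.
    z = [0.0] * n
    for i in reversed(range(n)):
        s = sum(m[i][j] * z[j] for j in range(i + 1, n))
        z[i] = (m[i][n] - s) / m[i][i]
    return z


def fit_multiple_ols(rows, y):
    """Fit Y = b0 + b1*X1 + ... + bn*Xn by solving (X'X) b = X'y."""
    design = [[1.0] + list(r) for r in rows]  # prepend the intercept column
    k = len(design[0])
    xtx = [[sum(d[i] * d[j] for d in design) for j in range(k)]
           for i in range(k)]
    xty = [sum(d[i] * yi for d, yi in zip(design, y)) for i in range(k)]
    return solve_linear_system(xtx, xty)


# Hypothetical data generated exactly from y = 1 + 2*x1 + 3*x2 (no noise),
# so the fit should recover those three numbers.
rows = [(1, 2), (2, 1), (3, 4), (4, 3), (5, 6)]
y = [1 + 2 * x1 + 3 * x2 for x1, x2 in rows]
coefficients = fit_multiple_ols(rows, y)
```

Each fitted coefficient is the expected change in the outcome for a one-unit change in that predictor with the other predictor held fixed, which is what "simultaneous effects" means here.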

Polynomial Regression

Polynomial regression models non-linear relationships between variables by adding polynomial terms (e.g., squared or cubic terms) to the regression equation. It can capture curved or nonlinear patterns in the data.

Logistic Regression

Logistic regression is used when the dependent variable is binary or categorical. It models the probability of the occurrence of a certain event or outcome based on the independent variables. Logistic regression estimates the coefficients using the logistic function, which transforms the linear combination of predictors into a probability.

Ridge Regression and Lasso Regression

Ridge regression and Lasso regression are techniques used for addressing multicollinearity (high correlation between independent variables) and variable selection. Both methods add a penalty term to the regression objective that shrinks the coefficients. Ridge regression uses L2 regularization, which shrinks coefficients toward zero without setting them exactly to zero, while Lasso regression uses L1 regularization, which can shrink some coefficients exactly to zero and therefore also performs variable selection.
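The difference between the two penalties is easiest to see with a single centered predictor, where both estimates have a closed form. The sketch below assumes that simplified setting; the function names, the penalty values, and the data are invented for illustration.

```python
def soft_threshold(value, threshold):
    """Shrink value toward zero; return 0 inside [-threshold, threshold]."""
    if value > threshold:
        return value - threshold
    if value < -threshold:
        return value + threshold
    return 0.0


def ridge_slope(x, y, lam):
    """Minimize sum((y - b*x)^2) + lam * b^2 for centered x and y (L2)."""
    sxy = sum(xi * yi for xi, yi in zip(x, y))
    sxx = sum(xi * xi for xi in x)
    return sxy / (sxx + lam)


def lasso_slope(x, y, lam):
    """Minimize 0.5 * sum((y - b*x)^2) + lam * |b| for centered x, y (L1)."""
    sxy = sum(xi * yi for xi, yi in zip(x, y))
    sxx = sum(xi * xi for xi in x)
    return soft_threshold(sxy, lam) / sxx


# Hypothetical centered data (both lists have mean zero).
x = [-2.0, -1.0, 0.0, 1.0, 2.0]
y = [-1.0, -0.4, 0.1, 0.3, 1.0]

ols = ridge_slope(x, y, 0.0)          # no penalty: ordinary least squares
ridge = ridge_slope(x, y, 10.0)       # shrunk, but still non-zero
lasso_small = lasso_slope(x, y, 1.0)  # shrunk
lasso_large = lasso_slope(x, y, 5.0)  # set exactly to zero
```

With a large enough L1 penalty the Lasso slope is exactly zero, while the ridge slope only approaches zero as the penalty grows.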

Time Series Regression

Time series regression analyzes the relationship between a dependent variable and independent variables when the data is collected over time. It accounts for autocorrelation and trends in the data and is used in forecasting and studying temporal relationships.
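One common way to check for autocorrelation in the residuals of a time-ordered regression is the Durbin-Watson statistic. The text above does not name a specific test, so this is offered only as an illustrative choice; the residual values are invented.

```python
def durbin_watson(residuals):
    """Sum of squared successive differences divided by sum of squares.

    Values near 2 suggest little first-order autocorrelation, values
    well below 2 suggest positive autocorrelation, and values well
    above 2 suggest negative autocorrelation.
    """
    numerator = sum(
        (residuals[t] - residuals[t - 1]) ** 2
        for t in range(1, len(residuals))
    )
    denominator = sum(e ** 2 for e in residuals)
    return numerator / denominator


# Hypothetical residuals in time order.
drifting = [2.0, 1.0, -1.0, -2.0]      # neighbours resemble each other
alternating = [1.0, -1.0, 1.0, -1.0]   # neighbours have opposite signs

dw_drifting = durbin_watson(drifting)
dw_alternating = durbin_watson(alternating)
```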

Nonlinear Regression

Nonlinear regression models are used when the relationship between the dependent variable and independent variables is not linear. These models can take various functional forms and require estimation techniques different from those used in linear regression.

Poisson Regression

Poisson regression is employed when the dependent variable represents count data. It models the relationship between the independent variables and the expected count, assuming a Poisson distribution for the dependent variable.
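A minimal sketch of what "expected count" means here, assuming the usual log link, so that the expected count is exp(β0 + β1X). The coefficient values are hypothetical.

```python
import math


def expected_count(b0, b1, x):
    """Expected count under a log link: mu = exp(b0 + b1 * x)."""
    return math.exp(b0 + b1 * x)


def poisson_pmf(k, mu):
    """Probability of observing exactly k events when the mean is mu."""
    return math.exp(-mu) * mu ** k / math.factorial(k)


# Hypothetical coefficients, invented for illustration.
b0, b1 = 0.5, 0.3
mu_at_2 = expected_count(b0, b1, 2.0)
mu_at_3 = expected_count(b0, b1, 3.0)

# A one-unit increase in x multiplies the expected count by exp(b1).
rate_ratio = mu_at_3 / mu_at_2

# Probabilities over all possible counts sum to 1 (truncated at 60 here).
total_probability = sum(poisson_pmf(k, mu_at_2) for k in range(60))
```

Because of the log link, coefficients act multiplicatively on the expected count rather than additively.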

Generalized Linear Models (GLM)

GLMs are a flexible class of regression models that extend the linear regression framework to handle different types of dependent variables, including binary, count, and continuous variables. GLMs incorporate various probability distributions and link functions.

Regression Analysis Formulas

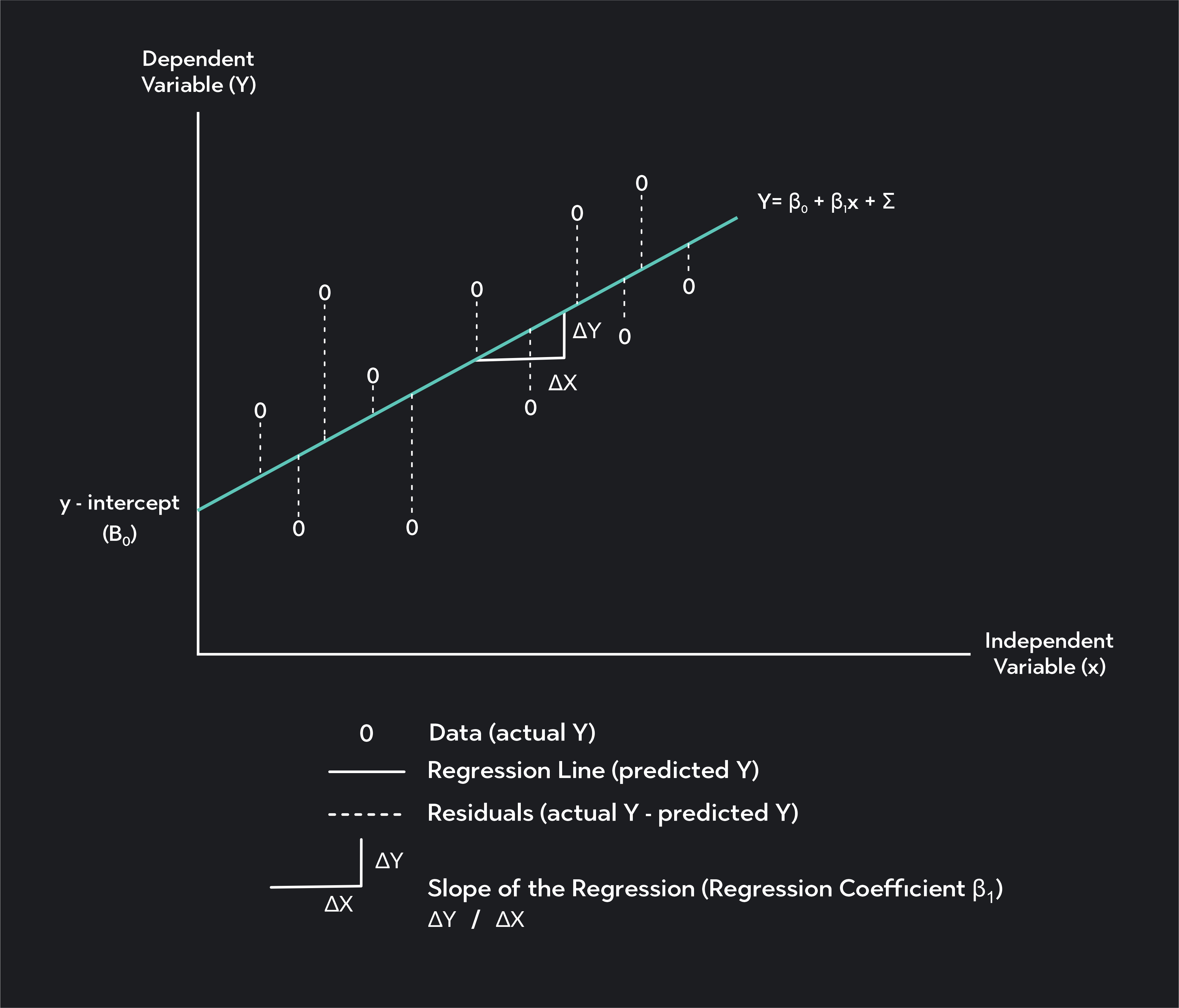

Regression analysis involves estimating the parameters of a regression model to describe the relationship between the dependent variable (Y) and one or more independent variables (X). Here are the basic formulas for linear regression, multiple regression, and logistic regression:

Linear Regression:

Simple Linear Regression Model: Y = β0 + β1X + ε

Multiple Linear Regression Model: Y = β0 + β1X1 + β2X2 + … + βnXn + ε

In both formulas:

- Y represents the dependent variable (response variable).

- X represents the independent variable(s) (predictor variable(s)).

- β0, β1, β2, …, βn are the regression coefficients or parameters that need to be estimated.

- ε represents the error term: the part of Y that the model does not explain. Its estimate for each observation is the residual (the difference between the observed and predicted values).

Multiple Regression:

Multiple regression extends the concept of simple linear regression by including multiple independent variables.

Multiple Regression Model: Y = β0 + β1X1 + β2X2 + … + βnXn + ε

The formulas are similar to those in linear regression, with the addition of more independent variables.
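For reference, the ordinary least squares estimates of these coefficients have a standard closed form. The notation below is an assumption added for illustration: m denotes the number of observations (n is already used above for the number of predictors), bars denote sample means, and hats denote estimates.

```latex
% Simple linear regression: Y = \beta_0 + \beta_1 X + \varepsilon
\[
\hat{\beta}_1 = \frac{\sum_{i=1}^{m} (X_i - \bar{X})(Y_i - \bar{Y})}
                     {\sum_{i=1}^{m} (X_i - \bar{X})^2},
\qquad
\hat{\beta}_0 = \bar{Y} - \hat{\beta}_1 \bar{X}
\]

% Multiple regression in matrix form, where \mathbf{X} is the m x (n+1)
% design matrix whose first column is all ones:
\[
\hat{\boldsymbol{\beta}} = (\mathbf{X}^{\top}\mathbf{X})^{-1}\mathbf{X}^{\top}\mathbf{Y}
\]
```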

Logistic Regression:

Logistic regression is used when the dependent variable is binary or categorical. The logistic regression model applies a logistic or sigmoid function to the linear combination of the independent variables.

Logistic Regression Model: p = 1 / (1 + e^-(β0 + β1X1 + β2X2 + … + βnXn))

In the formula:

- p represents the probability of the event occurring (e.g., the probability of success or belonging to a certain category).

- X1, X2, …, Xn represent the independent variables.

- e is the base of the natural logarithm.

The logistic function ensures that the predicted probabilities lie between 0 and 1, allowing for binary classification.
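The logistic model above translates directly into code. In this sketch the coefficient values and the 0.5 classification threshold are assumptions chosen for illustration.

```python
import math


def logistic_probability(coefficients, xs):
    """p = 1 / (1 + e^-(b0 + b1*x1 + ... + bn*xn))."""
    eta = coefficients[0] + sum(
        b * x for b, x in zip(coefficients[1:], xs)
    )
    return 1.0 / (1.0 + math.exp(-eta))


def classify(p, threshold=0.5):
    """Turn a predicted probability into a 0/1 label."""
    return 1 if p >= threshold else 0


# Hypothetical coefficients: intercept -3, slope 1.
coefficients = [-3.0, 1.0]
p_low = logistic_probability(coefficients, [1.0])   # linear part = -2
p_mid = logistic_probability(coefficients, [3.0])   # linear part = 0
p_high = logistic_probability(coefficients, [5.0])  # linear part = 2
```

A linear part of zero always maps to a probability of 0.5, and equal steps above and below zero give probabilities that are mirror images around 0.5.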

Regression Analysis Examples

Regression Analysis Examples are as follows:

- Stock Market Prediction: Regression analysis can be used to predict stock prices based on various factors such as historical prices, trading volume, news sentiment, and economic indicators. Traders and investors can use this analysis to make informed decisions about buying or selling stocks.

- Demand Forecasting: In retail and e-commerce, regression analysis can help forecast demand for products. By analyzing historical sales data along with real-time data such as website traffic, promotional activities, and market trends, businesses can adjust their inventory levels and production schedules to meet customer demand more effectively.

- Energy Load Forecasting: Utility companies often use real-time regression analysis to forecast electricity demand. By analyzing historical energy consumption data, weather conditions, and other relevant factors, they can predict future energy loads. This information helps them optimize power generation and distribution, ensuring a stable and efficient energy supply.

- Online Advertising Performance: Regression analysis can be used to assess the performance of online advertising campaigns. By analyzing real-time data on ad impressions, click-through rates, conversion rates, and other metrics, advertisers can adjust their targeting, messaging, and ad placement strategies to maximize their return on investment.

- Predictive Maintenance: Regression analysis can be applied to predict equipment failures or maintenance needs. By continuously monitoring sensor data from machines or vehicles, regression models can identify patterns or anomalies that indicate potential failures. This enables proactive maintenance, reducing downtime and optimizing maintenance schedules.

- Financial Risk Assessment: Real-time regression analysis can help financial institutions assess the risk associated with lending or investment decisions. By analyzing real-time data on factors such as borrower financials, market conditions, and macroeconomic indicators, regression models can estimate the likelihood of default or assess the risk-return tradeoff for investment portfolios.

Importance of Regression Analysis

Importance of Regression Analysis is as follows:

- Relationship Identification: Regression analysis helps in identifying and quantifying the relationship between a dependent variable and one or more independent variables. It allows us to determine how changes in independent variables impact the dependent variable. This information is crucial for decision-making, planning, and forecasting.

- Prediction and Forecasting: Regression analysis enables us to make predictions and forecasts based on the relationships identified. By estimating the values of the dependent variable using known values of independent variables, regression models can provide valuable insights into future outcomes. This is particularly useful in business, economics, finance, and other fields where forecasting is vital for planning and strategy development.

- Causality Assessment: While correlation does not imply causation, regression analysis provides a framework for assessing causality by considering the direction and strength of the relationship between variables. It allows researchers to control for other factors and assess the impact of a specific independent variable on the dependent variable. This helps in determining the causal effect and identifying significant factors that influence outcomes.

- Model Building and Variable Selection: Regression analysis aids in model building by determining the most appropriate functional form of the relationship between variables. It helps researchers select relevant independent variables and eliminate irrelevant ones, reducing complexity and improving model accuracy. This process is crucial for creating robust and interpretable models.

- Hypothesis Testing: Regression analysis provides a statistical framework for hypothesis testing. Researchers can test the significance of individual coefficients, assess the overall model fit, and determine if the relationship between variables is statistically significant. This allows for rigorous analysis and validation of research hypotheses.

- Policy Evaluation and Decision-Making: Regression analysis plays a vital role in policy evaluation and decision-making processes. By analyzing historical data, researchers can evaluate the effectiveness of policy interventions and identify the key factors contributing to certain outcomes. This information helps policymakers make informed decisions, allocate resources effectively, and optimize policy implementation.

- Risk Assessment and Control: Regression analysis can be used for risk assessment and control purposes. By analyzing historical data, organizations can identify risk factors and develop models that predict the likelihood of certain outcomes, such as defaults, accidents, or failures. This enables proactive risk management, allowing organizations to take preventive measures and mitigate potential risks.

When to Use Regression Analysis

- Prediction: Regression analysis is often employed to predict the value of the dependent variable based on the values of independent variables. For example, you might use regression to predict sales based on advertising expenditure, or to predict a student’s academic performance based on variables like study time, attendance, and previous grades.

- Relationship analysis: Regression can help determine the strength and direction of the relationship between variables. It can be used to examine whether there is a linear association between variables, identify which independent variables have a significant impact on the dependent variable, and quantify the magnitude of those effects.

- Causal inference: Regression analysis can be used to explore cause-and-effect relationships by controlling for other variables. For example, in a medical study, you might use regression to determine the impact of a specific treatment while accounting for other factors like age, gender, and lifestyle.

- Forecasting: Regression models can be utilized to forecast future trends or outcomes. By fitting a regression model to historical data, you can make predictions about future values of the dependent variable based on changes in the independent variables.

- Model evaluation: Regression analysis can be used to evaluate the performance of a model or test the significance of variables. You can assess how well the model fits the data, determine if additional variables improve the model’s predictive power, or test the statistical significance of coefficients.

- Data exploration: Regression analysis can help uncover patterns and insights in the data. By examining the relationships between variables, you can gain a deeper understanding of the data set and identify potential patterns, outliers, or influential observations.

Applications of Regression Analysis

Here are some common applications of regression analysis:

- Economic Forecasting: Regression analysis is frequently employed in economics to forecast variables such as GDP growth, inflation rates, or stock market performance. By analyzing historical data and identifying the underlying relationships, economists can make predictions about future economic conditions.

- Financial Analysis: Regression analysis plays a crucial role in financial analysis, such as predicting stock prices or evaluating the impact of financial factors on company performance. It helps analysts understand how variables like interest rates, company earnings, or market indices influence financial outcomes.

- Marketing Research: Regression analysis helps marketers understand consumer behavior and make data-driven decisions. It can be used to predict sales based on advertising expenditures, pricing strategies, or demographic variables. Regression models provide insights into which marketing efforts are most effective and help optimize marketing campaigns.

- Health Sciences: Regression analysis is extensively used in medical research and public health studies. It helps examine the relationship between risk factors and health outcomes, such as the impact of smoking on lung cancer or the relationship between diet and heart disease. Regression analysis also helps in predicting health outcomes based on various factors like age, genetic markers, or lifestyle choices.

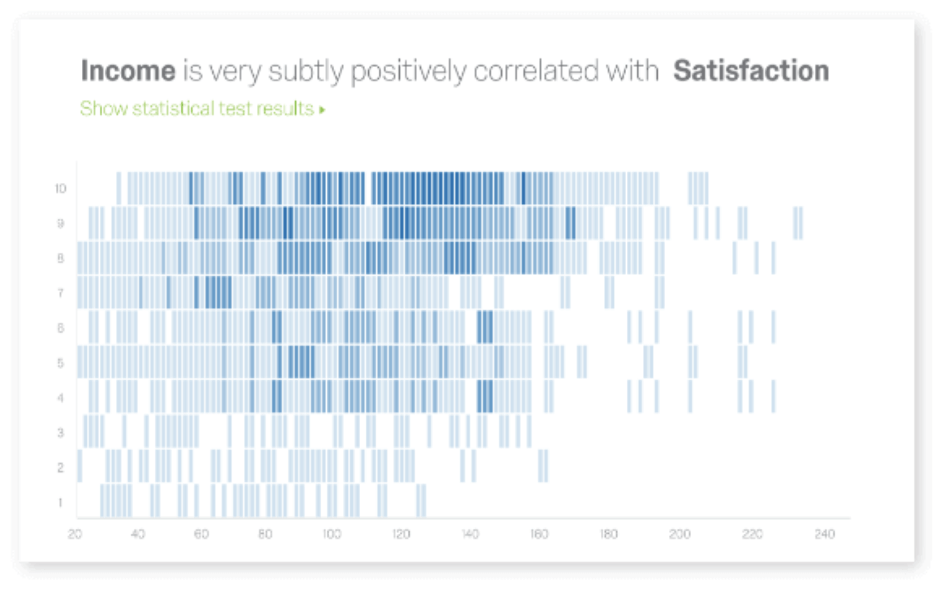

- Social Sciences: Regression analysis is widely used in social sciences like sociology, psychology, and education research. Researchers can investigate the impact of variables like income, education level, or social factors on various outcomes such as crime rates, academic performance, or job satisfaction.

- Operations Research: Regression analysis is applied in operations research to optimize processes and improve efficiency. For example, it can be used to predict demand based on historical sales data, determine the factors influencing production output, or optimize supply chain logistics.

- Environmental Studies: Regression analysis helps in understanding and predicting environmental phenomena. It can be used to analyze the impact of factors like temperature, pollution levels, or land use patterns on phenomena such as species diversity, water quality, or climate change.

- Sports Analytics: Regression analysis is increasingly used in sports analytics to gain insights into player performance, team strategies, and game outcomes. It helps analyze the relationship between various factors like player statistics, coaching strategies, or environmental conditions and their impact on game outcomes.

Advantages and Disadvantages of Regression Analysis

About the author.

Muhammad Hassan

Researcher, Academic Writer, Web developer

Regression Analysis

Regression analysis is a quantitative research method used when a study involves modelling and analysing several variables, where the relationship includes a dependent variable and one or more independent variables. In simple terms, it is a quantitative method used to test the nature of relationships between a dependent variable and one or more independent variables.

The basic form of regression models includes unknown parameters (β), independent variables (X), and the dependent variable (Y).

A regression model specifies the relation of the dependent variable (Y) to a function of the independent variables (X) and the unknown parameters (β):

Y ≈ f (X, β)

A regression equation can be used to predict the value of ‘y’ if the value of ‘x’ is given, where ‘y’ and ‘x’ are two sets of measures from a sample of size ‘n’. The formulae for the regression equation would be
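For a straight-line regression of y on x, the standard least-squares formulae can be written as follows. These are the usual textbook forms and are assumed here to be the ones intended; the symbols a (intercept), b (slope), and r (correlation coefficient) are labels chosen for this illustration.

```latex
\[
y = a + b\,x
\]
\[
b = \frac{n \sum xy - \sum x \sum y}{n \sum x^{2} - \left(\sum x\right)^{2}},
\qquad
a = \frac{\sum y - b \sum x}{n}
\]
\[
r = \frac{n \sum xy - \sum x \sum y}
         {\sqrt{\left[n \sum x^{2} - \left(\sum x\right)^{2}\right]
                \left[n \sum y^{2} - \left(\sum y\right)^{2}\right]}}
\]
```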

Do not be intimidated by the visual complexity of the correlation and regression formulae above. You don’t have to apply the formulae manually: correlation and regression analyses can be run with popular analytical software such as Microsoft Excel, Microsoft Access, SPSS and others.

Linear regression analysis is based on the following set of assumptions:

1. Assumption of linearity. There is a linear relationship between the dependent and independent variables.

2. Assumption of homoscedasticity. The variance of the errors is constant across all values of the independent variables.

3. Assumption of absence of collinearity or multicollinearity. The independent variables are not highly correlated with one another.

4. Assumption of normal distribution. The errors (residuals) are normally distributed.
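As a small illustration of checking the third assumption, the correlation between two predictors can be turned into a variance inflation factor (VIF). The sketch assumes a model with exactly two predictors, where the VIF reduces to 1 / (1 - r²); the data and function names are invented.

```python
import math


def pearson_r(a, b):
    """Pearson correlation coefficient between two equal-length lists."""
    n = len(a)
    a_bar = sum(a) / n
    b_bar = sum(b) / n
    sab = sum((ai - a_bar) * (bi - b_bar) for ai, bi in zip(a, b))
    saa = sum((ai - a_bar) ** 2 for ai in a)
    sbb = sum((bi - b_bar) ** 2 for bi in b)
    return sab / math.sqrt(saa * sbb)


def vif_two_predictors(a, b):
    """VIF for either predictor in a two-predictor model."""
    r = pearson_r(a, b)
    return 1.0 / (1.0 - r * r)


# Hypothetical predictor values.
x1 = [1.0, 2.0, 3.0, 4.0, 5.0]
x2 = [2.0, 1.0, 4.0, 3.0, 6.0]

r = pearson_r(x1, x2)
vif = vif_two_predictors(x1, x2)
```

A VIF of 1 means the predictors are uncorrelated; the larger the value, the more the coefficient variances are inflated by overlap between predictors. Cut-offs for "too large" vary by field and are not fixed by the method itself.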

My e-book, The Ultimate Guide to Writing a Dissertation in Business Studies: a step by step assistance, offers practical assistance to complete a dissertation with minimum or no stress. The e-book covers all stages of writing a dissertation, from the selection of the research area to submitting the completed version of the work within the deadline.

John Dudovskiy

A Refresher on Regression Analysis

Understanding one of the most important types of data analysis.

You probably know by now that whenever possible you should be making data-driven decisions at work. But do you know how to parse through all the data available to you? The good news is that you probably don’t need to do the number crunching yourself (hallelujah!) but you do need to correctly understand and interpret the analysis created by your colleagues. One of the most important types of data analysis is called regression analysis.

Amy Gallo is a contributing editor at Harvard Business Review, cohost of the Women at Work podcast, and the author of two books: Getting Along: How to Work with Anyone (Even Difficult People) and the HBR Guide to Dealing with Conflict. She writes and speaks about workplace dynamics.

- Published: 31 January 2022

The clinician’s guide to interpreting a regression analysis

- Sofia Bzovsky 1,

- Mark R. Phillips (ORCID: orcid.org/0000-0003-0923-261X) 2,

- Robyn H. Guymer (ORCID: orcid.org/0000-0002-9441-4356) 3, 4,

- Charles C. Wykoff 5, 6,

- Lehana Thabane (ORCID: orcid.org/0000-0003-0355-9734) 2, 7,

- Mohit Bhandari (ORCID: orcid.org/0000-0001-9608-4808) 1, 2 &

- Varun Chaudhary (ORCID: orcid.org/0000-0002-9988-4146) 1, 2

on behalf of the R.E.T.I.N.A. study group

Eye volume 36, pages 1715–1717 (2022)

- Outcomes research

Introduction

When researchers are conducting clinical studies to investigate factors associated with, or treatments for disease and conditions to improve patient care and clinical practice, statistical evaluation of the data is often necessary. Regression analysis is an important statistical method that is commonly used to determine the relationship between several factors and disease outcomes or to identify relevant prognostic factors for diseases [ 1 ].

This editorial will acquaint readers with the basic principles of and an approach to interpreting results from two types of regression analyses widely used in ophthalmology: linear, and logistic regression.

Linear regression analysis

Linear regression is used to quantify a linear relationship or association between a continuous response/outcome variable or dependent variable with at least one independent or explanatory variable by fitting a linear equation to observed data [ 1 ]. The variable that the equation solves for, which is the outcome or response of interest, is called the dependent variable [ 1 ]. The variable that is used to explain the value of the dependent variable is called the predictor, explanatory, or independent variable [ 1 ].

In a linear regression model, the dependent variable must be continuous (e.g. intraocular pressure or visual acuity), whereas the independent variable may be either continuous (e.g. age), binary (e.g. sex), categorical (e.g. age-related macular degeneration stage or diabetic retinopathy severity scale score), or a combination of these [ 1 ].

When investigating the effect or association of a single independent variable on a continuous dependent variable, this type of analysis is called a simple linear regression [ 2 ]. In many circumstances though, a single independent variable may not be enough to adequately explain the dependent variable. Often it is necessary to control for confounders and in these situations, one can perform a multivariable linear regression to study the effect or association with multiple independent variables on the dependent variable [ 1 , 2 ]. When incorporating numerous independent variables, the regression model estimates the effect or contribution of each independent variable while holding the values of all other independent variables constant [ 3 ].

When interpreting the results of a linear regression, there are a few key outputs for each independent variable included in the model:

Estimated regression coefficient: The estimated regression coefficient indicates the direction and strength of the relationship or association between the independent and dependent variables [ 4 ]. Specifically, the regression coefficient describes the change in the dependent variable for each one-unit change in the independent variable, if continuous [ 4 ]. For instance, if examining the relationship between a continuous predictor variable and intra-ocular pressure (dependent variable), a regression coefficient of 2 means that for every one-unit increase in the predictor, intra-ocular pressure increases by two units on average. If the independent variable is binary or categorical, then the one-unit change represents switching from the reference category to another category [ 4 ]. For instance, if examining the relationship between a binary predictor variable, such as sex, where ‘female’ is set as the reference category, and intra-ocular pressure (dependent variable), a regression coefficient of 2 means that, on average, males have an intra-ocular pressure that is 2 mm Hg higher than females.

Confidence Interval (CI): The CI, typically set at 95%, is a measure of the precision of the coefficient estimate of the independent variable [ 4 ]. A wide CI indicates a low level of precision, whereas a narrow CI indicates a higher precision [ 5 ].

P value: The p value for the regression coefficient indicates whether the relationship between the independent and dependent variables is statistically significant [ 6 ].
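The three outputs can be illustrated with a toy version of the sex and intra-ocular pressure example. The readings below are invented, and the interval and p value use a normal approximation as a simplifying assumption; with a sample this small, statistical software would use the t distribution and report a wider interval and a larger p value.

```python
import math


def fit_simple_ols(x, y):
    """Return intercept, slope and the standard error of the slope."""
    n = len(x)
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    residuals = [yi - (intercept + slope * xi) for xi, yi in zip(x, y)]
    # Residual variance uses n - 2 degrees of freedom
    # (two estimated parameters: intercept and slope).
    s2 = sum(e * e for e in residuals) / (n - 2)
    se_slope = math.sqrt(s2 / sxx)
    return intercept, slope, se_slope


def wald_summary(estimate, se, z_crit=1.96):
    """Approximate 95% CI and two-sided p value (normal approximation)."""
    z = estimate / se
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return (estimate - z_crit * se, estimate + z_crit * se), p_value


# Hypothetical readings: 0 = female (reference category), 1 = male.
sex = [0, 0, 0, 1, 1, 1]
iop = [14.0, 15.0, 16.0, 16.0, 17.0, 18.0]  # mm Hg

intercept, slope, se_slope = fit_simple_ols(sex, iop)
ci, p_value = wald_summary(slope, se_slope)
```

Here the intercept equals the mean for the reference category (females) and the slope equals the difference between the male and female means, which is the interpretation described above for a binary predictor.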

Logistic regression analysis

As with linear regression, logistic regression is used to estimate the association between one or more independent variables with a dependent variable [ 7 ]. However, the distinguishing feature in logistic regression is that the dependent variable (outcome) must be binary (or dichotomous), meaning that the variable can only take two different values or levels, such as ‘1 versus 0’ or ‘yes versus no’ [ 2 , 7 ]. The effect size of predictor variables on the dependent variable is best explained using an odds ratio (OR) [ 2 ]. ORs are used to compare the relative odds of the occurrence of the outcome of interest, given exposure to the variable of interest [ 5 ]. An OR equal to 1 means that the odds of the event in one group are the same as the odds of the event in another group; there is no difference [ 8 ]. An OR > 1 implies that one group has a higher odds of having the event compared with the reference group, whereas an OR < 1 means that one group has a lower odds of having an event compared with the reference group [ 8 ]. When interpreting the results of a logistic regression, the key outputs include the OR, CI, and p-value for each independent variable included in the model.
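A short sketch of how an odds ratio is computed and read. The counts are invented, and the relationship OR = exp(β) between a logistic regression coefficient and its odds ratio is the standard one rather than something stated in the text above.

```python
import math


def odds_ratio_from_counts(events_a, non_events_a, events_b, non_events_b):
    """Odds of the event in group A divided by the odds in reference group B."""
    return (events_a / non_events_a) / (events_b / non_events_b)


def odds_ratio_from_coefficient(beta):
    """A logistic regression coefficient is a log odds ratio."""
    return math.exp(beta)


def describe(odds_ratio, tolerance=1e-9):
    """Plain-language reading of an odds ratio relative to the reference."""
    if abs(odds_ratio - 1.0) <= tolerance:
        return "no difference in odds"
    if odds_ratio > 1.0:
        return "higher odds than the reference group"
    return "lower odds than the reference group"


# Hypothetical counts: 20 events and 80 non-events in the exposed group,
# 10 events and 90 non-events in the reference group.
or_counts = odds_ratio_from_counts(20, 80, 10, 90)
or_null = odds_ratio_from_coefficient(0.0)  # coefficient of 0 gives OR of 1
```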

Clinical example

Sen et al. investigated the association between several factors (independent variables) and visual acuity outcomes (dependent variable) in patients receiving anti-vascular endothelial growth factor therapy for macular oedema secondary to central retinal vein occlusion by means of both linear and logistic regression [ 9 ]. Multivariable linear regression demonstrated that age (Estimate −0.33, 95% CI −0.48 to −0.19, p < 0.001) was significantly associated with best-corrected visual acuity (BCVA) at 100 weeks at the alpha = 0.05 significance level [ 9 ]. The regression coefficient of −0.33 means that, on average, BCVA at 100 weeks is 0.33 lower for each additional year of age, holding the other variables in the model constant.

Multivariable logistic regression also demonstrated that age and ellipsoid zone status were statistically significantly associated with achieving a BCVA letter score >70 letters at 100 weeks at the alpha = 0.05 significance level. Patients ≥75 years of age had decreased odds of achieving a BCVA letter score >70 letters at 100 weeks compared to those <50 years of age, since the OR is less than 1 (OR 0.96, 95% CI 0.94 to 0.98, p = 0.001) [ 9 ]. Similarly, patients between the ages of 50–74 years also had decreased odds of achieving a BCVA letter score >70 letters at 100 weeks compared to those <50 years of age, since the OR is less than 1 (OR 0.15, 95% CI 0.04 to 0.48, p = 0.001) [ 9 ]. Likewise, those without an intact ellipsoid zone had decreased odds of achieving a BCVA letter score >70 letters at 100 weeks compared to those with an intact ellipsoid zone (OR 0.20, 95% CI 0.07 to 0.56; p = 0.002). On the other hand, patients with an ungradable/questionable ellipsoid zone had increased odds of achieving a BCVA letter score >70 letters at 100 weeks compared to those with an intact ellipsoid zone, since the OR is greater than 1 (OR 2.26, 95% CI 1.14 to 4.48; p = 0.02) [ 9 ].
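The reported odds ratios can be put back on the log-odds scale on which logistic regression is fitted. The sketch below uses the figures quoted above and assumes the intervals are Wald intervals that are symmetric on the log scale; the source does not state this, so the recovered standard errors are only approximate.

```python
import math


def log_odds_summary(odds_ratio, ci_lower, ci_upper, z_crit=1.96):
    """Return (coefficient, approximate SE, whether the CI excludes 1)."""
    beta = math.log(odds_ratio)
    # Assumes a Wald interval: log(OR) +/- z_crit * SE.
    se = (math.log(ci_upper) - math.log(ci_lower)) / (2 * z_crit)
    excludes_one = ci_lower > 1.0 or ci_upper < 1.0
    return beta, se, excludes_one


# (OR, lower 95% limit, upper 95% limit) as reported in the text above.
reported = {
    "age >=75 vs <50": (0.96, 0.94, 0.98),
    "age 50-74 vs <50": (0.15, 0.04, 0.48),
    "ellipsoid zone not intact vs intact": (0.20, 0.07, 0.56),
    "ellipsoid zone ungradable/questionable vs intact": (2.26, 1.14, 4.48),
}

summaries = {
    label: log_odds_summary(*values) for label, values in reported.items()
}
```

A 95% CI for an OR that excludes 1 corresponds to a coefficient whose interval excludes 0, which is consistent with each of these results being reported as significant at alpha = 0.05.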

The narrower the CI, the more precise the estimate is; and the smaller the p value (relative to alpha = 0.05), the greater the evidence against the null hypothesis of no effect or association.

Simply put, linear and logistic regression are useful tools for appreciating the relationship between predictor/explanatory and outcome variables for continuous and dichotomous outcomes, respectively, that can be applied in clinical practice, such as to gain an understanding of risk factors associated with a disease of interest.

References

1. Schneider A, Hommel G, Blettner M. Linear regression analysis. Dtsch Ärztebl Int. 2010;107:776–82.

2. Bender R. Introduction to the use of regression models in epidemiology. In: Verma M, editor. Cancer epidemiology. Methods in molecular biology. Humana Press; 2009:179–95.

3. Schober P, Vetter TR. Confounding in observational research. Anesth Analg. 2020;130:635.

4. Schober P, Vetter TR. Linear regression in medical research. Anesth Analg. 2021;132:108–9.

5. Szumilas M. Explaining odds ratios. J Can Acad Child Adolesc Psychiatry. 2010;19:227–9.

6. Thiese MS, Ronna B, Ott U. P value interpretations and considerations. J Thorac Dis. 2016;8:E928–31.

7. Schober P, Vetter TR. Logistic regression in medical research. Anesth Analg. 2021;132:365–6.

8. Zabor EC, Reddy CA, Tendulkar RD, Patil S. Logistic regression in clinical studies. Int J Radiat Oncol Biol Phys. 2022;112:271–7.

9. Sen P, Gurudas S, Ramu J, Patrao N, Chandra S, Rasheed R, et al. Predictors of visual acuity outcomes after anti-vascular endothelial growth factor treatment for macular edema secondary to central retinal vein occlusion. Ophthalmol Retin. 2021;5:1115–24.

R.E.T.I.N.A. study group

Varun Chaudhary 1,2 , Mohit Bhandari 1,2 , Charles C. Wykoff 5,6 , Sobha Sivaprasad 8 , Lehana Thabane 2,7 , Peter Kaiser 9 , David Sarraf 10 , Sophie J. Bakri 11 , Sunir J. Garg 12 , Rishi P. Singh 13,14 , Frank G. Holz 15 , Tien Y. Wong 16,17 , and Robyn H. Guymer 3,4

Author information

Authors and affiliations.

Department of Surgery, McMaster University, Hamilton, ON, Canada

Sofia Bzovsky, Mohit Bhandari & Varun Chaudhary

Department of Health Research Methods, Evidence & Impact, McMaster University, Hamilton, ON, Canada

Mark R. Phillips, Lehana Thabane, Mohit Bhandari & Varun Chaudhary

Centre for Eye Research Australia, Royal Victorian Eye and Ear Hospital, East Melbourne, VIC, Australia

Robyn H. Guymer

Department of Surgery, (Ophthalmology), The University of Melbourne, Melbourne, VIC, Australia

Retina Consultants of Texas (Retina Consultants of America), Houston, TX, USA

Charles C. Wykoff

Blanton Eye Institute, Houston Methodist Hospital, Houston, TX, USA

Biostatistics Unit, St. Joseph’s Healthcare Hamilton, Hamilton, ON, Canada

Lehana Thabane

NIHR Moorfields Biomedical Research Centre, Moorfields Eye Hospital, London, UK

Sobha Sivaprasad

Cole Eye Institute, Cleveland Clinic, Cleveland, OH, USA

Peter Kaiser

Retinal Disorders and Ophthalmic Genetics, Stein Eye Institute, University of California, Los Angeles, CA, USA

David Sarraf

Department of Ophthalmology, Mayo Clinic, Rochester, MN, USA

Sophie J. Bakri

The Retina Service at Wills Eye Hospital, Philadelphia, PA, USA

Sunir J. Garg

Center for Ophthalmic Bioinformatics, Cole Eye Institute, Cleveland Clinic, Cleveland, OH, USA

Rishi P. Singh

Cleveland Clinic Lerner College of Medicine, Cleveland, OH, USA

Department of Ophthalmology, University of Bonn, Bonn, Germany

Frank G. Holz

Singapore Eye Research Institute, Singapore, Singapore

Tien Y. Wong

Singapore National Eye Centre, Duke-NUS Medical School, Singapore, Singapore


Contributions

SB was responsible for writing, critical review and feedback on manuscript. MRP was responsible for conception of idea, critical review and feedback on manuscript. RHG was responsible for critical review and feedback on manuscript. CCW was responsible for critical review and feedback on manuscript. LT was responsible for critical review and feedback on manuscript. MB was responsible for conception of idea, critical review and feedback on manuscript. VC was responsible for conception of idea, critical review and feedback on manuscript.

Corresponding author

Correspondence to Varun Chaudhary .

Ethics declarations

Competing interests.

SB: Nothing to disclose. MRP: Nothing to disclose. RHG: Advisory boards: Bayer, Novartis, Apellis, Roche, Genentech Inc.—unrelated to this study. CCW: Consultant: Acuela, Adverum Biotechnologies, Inc, Aerpio, Alimera Sciences, Allegro Ophthalmics, LLC, Allergan, Apellis Pharmaceuticals, Bayer AG, Chengdu Kanghong Pharmaceuticals Group Co, Ltd, Clearside Biomedical, DORC (Dutch Ophthalmic Research Center), EyePoint Pharmaceuticals, Gentech/Roche, GyroscopeTx, IVERIC bio, Kodiak Sciences Inc, Novartis AG, ONL Therapeutics, Oxurion NV, PolyPhotonix, Recens Medical, Regeron Pharmaceuticals, Inc, REGENXBIO Inc, Santen Pharmaceutical Co, Ltd, and Takeda Pharmaceutical Company Limited; Research funds: Adverum Biotechnologies, Inc, Aerie Pharmaceuticals, Inc, Aerpio, Alimera Sciences, Allergan, Apellis Pharmaceuticals, Chengdu Kanghong Pharmaceutical Group Co, Ltd, Clearside Biomedical, Gemini Therapeutics, Genentech/Roche, Graybug Vision, Inc, GyroscopeTx, Ionis Pharmaceuticals, IVERIC bio, Kodiak Sciences Inc, Neurotech LLC, Novartis AG, Opthea, Outlook Therapeutics, Inc, Recens Medical, Regeneron Pharmaceuticals, Inc, REGENXBIO Inc, Samsung Pharm Co, Ltd, Santen Pharmaceutical Co, Ltd, and Xbrane Biopharma AB—unrelated to this study. LT: Nothing to disclose. MB: Research funds: Pendopharm, Bioventus, Acumed—unrelated to this study. VC: Advisory Board Member: Alcon, Roche, Bayer, Novartis; Grants: Bayer, Novartis—unrelated to this study.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.


About this article

Cite this article.

Bzovsky, S., Phillips, M.R., Guymer, R.H. et al. The clinician’s guide to interpreting a regression analysis. Eye 36 , 1715–1717 (2022). https://doi.org/10.1038/s41433-022-01949-z


Received : 08 January 2022

Revised : 17 January 2022

Accepted : 18 January 2022

Published : 31 January 2022

Issue Date : September 2022

DOI : https://doi.org/10.1038/s41433-022-01949-z


The Complete Guide To Simple Regression Analysis

08.08.2023 • 8 min read

Sarah Thomas

Subject Matter Expert

Learn what simple regression analysis means and why it’s useful for analyzing data, and how to interpret the results.

In This Article

- What Is Simple Linear Regression Analysis?
- Linear Regression Equation
- How to Perform Linear Regression
- Linear Regression Assumptions
- How Do You Find the Regression Line?
- How to Interpret the Results of Simple Regression

What is the relationship between parental income and educational attainment or hours spent on social media and anxiety levels? Regression is a versatile statistical tool that can help you answer these types of questions. It’s a tool that lets you model the relationship between two or more variables .

The applications of regression are endless. You can use it as a machine learning algorithm to make predictions. You can use it to establish correlations, and in some cases, you can use it to uncover causal links in your data.

In this article, we’ll tell you everything you need to know about the most basic form of regression analysis: the simple linear regression model.

Simple linear regression is a statistical tool you can use to evaluate correlations between a single independent variable (X) and a single dependent variable (Y). The model fits a straight line to data collected for each variable, and using this line, you can estimate the correlation between X and Y and predict values of Y using values of X.

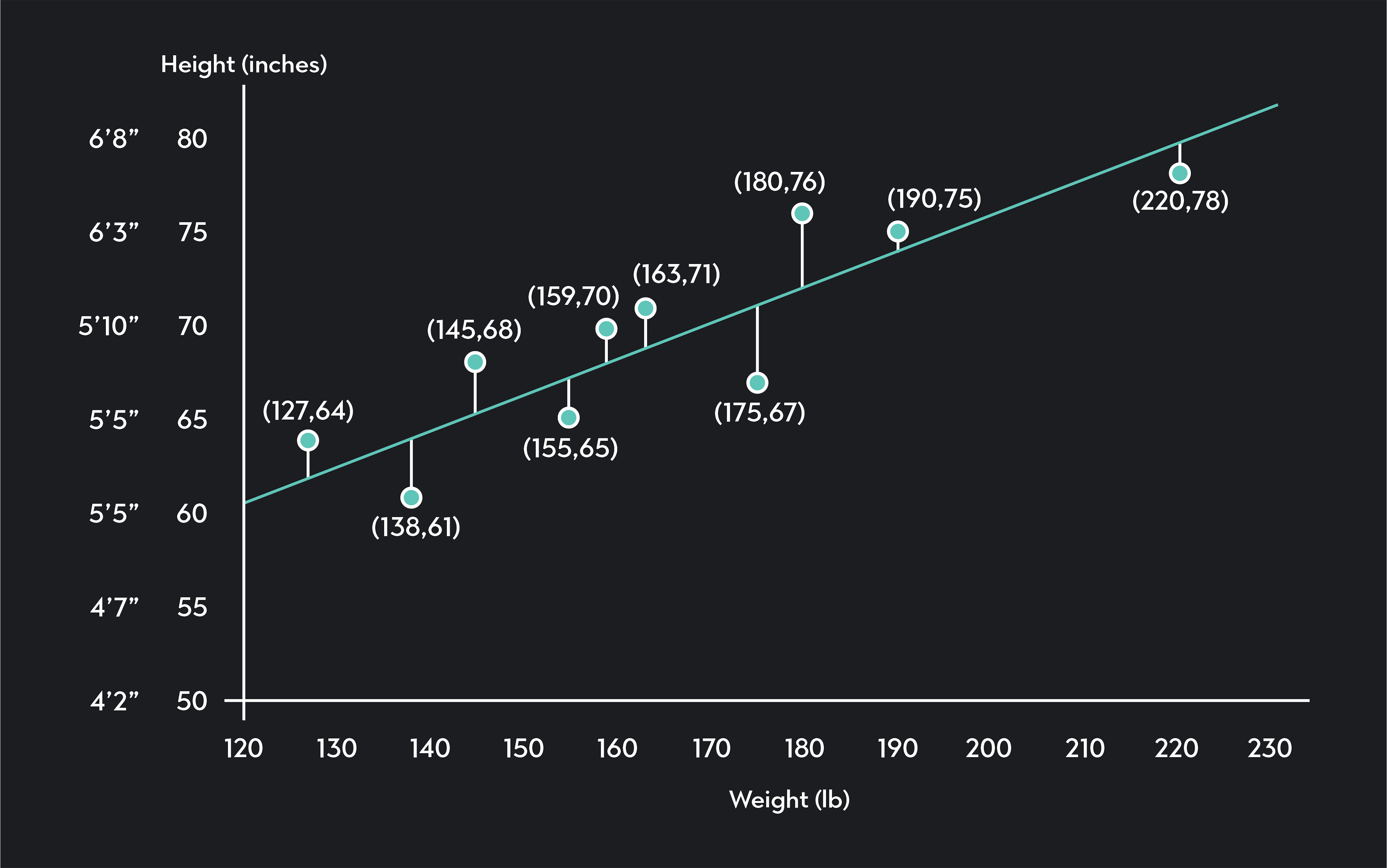

As a quick example, imagine you want to explore the relationship between weight (X) and height (Y). You collect data from ten randomly selected individuals, and you plot your data on a scatterplot like the one below.

In the scatterplot, each point represents data collected for one of the individuals in your sample. The blue line is your regression line. It models the relationship between weight and height using observed data. Not surprisingly, we see the regression line is upward-sloping, indicating a positive correlation between weight and height. Taller people tend to be heavier than shorter people.

Once you have this line, you can measure how strong the correlation is between height and weight. You can estimate the height of somebody not in your sample by plugging their weight into the regression equation.

The equation for a simple linear regression is:

$$Y = \beta_0 + \beta_1 X + \varepsilon$$

where:

X is your independent variable

Y is your dependent variable, the value the regression estimates

$\beta_0$ is the constant or intercept of the regression line, which is the value of Y when X is equal to zero

$\beta_1$ is the regression coefficient, which is the slope of the regression line and your estimate for the change in Y given a 1-unit change in X

$\varepsilon$ is the error term of the regression

You may notice the formula for a regression looks very similar to the equation of a line ($y = mx + b$). That’s because a simple linear regression is a line: the regression coefficient plays the role of the slope $m$, and the intercept plays the role of $b$. It’s a line fitted to data that you can use to estimate the values of one variable using the value of a correlated variable.

You can build a simple linear regression model in 5 steps.

1. Collect data

Collect data for two variables (X and Y). Y is your dependent variable, which is the variable you want to estimate using the regression. X is your independent variable—the variable you use as an input in your regression.

2. Plot the data on a scatter plot

Plot the values of X and Y on a scatter plot with values of X plotted along the horizontal x-axis and values of Y plotted on the vertical y-axis.

3. Calculate a correlation coefficient

Calculate a correlation coefficient to determine the strength of the linear relationship between your two variables.

4. Fit a regression to the data

Find the regression line using the ordinary least-squares method. (You can do this by hand, but it’s much easier to use statistical software like Desmos, Excel, R, or Stata.)

5. Assess the regression line

Once you have the regression line, assess how well your model performs by checking to see how well the model predicts values of Y.

The key assumptions we make when using a simple linear regression model are:

Linearity

The relationship between X and Y (if it exists) is linear.

Independence

The residuals of your model are independent.

Homoscedasticity

The variance of the residuals is constant across values of the independent variable.

Normality

The residuals are normally distributed.

You should not use a simple linear regression unless it’s reasonable to make these assumptions.

Simple linear regression involves fitting a straight line to your dataset. We call this line the line of best fit or the regression line. The most common method for finding this line is OLS (or the Ordinary Least Squares Method).

In OLS, we find the regression line by minimizing the sum of squared residuals —also called squared errors. Anytime you draw a straight line through your data, there will be a vertical distance between each point on your scatter plot and the regression line. These vertical distances are called residuals (or errors).

They represent the difference between the actual value of your dependent variable, $Y_i$, and the predicted value of that variable, $\widehat{Y}_i$. The regression you find with OLS is the line that minimizes the sum of squared residuals.
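As a minimal sketch of the least-squares idea described above, the Python snippet below computes the intercept and slope from the standard closed-form formulas and compares the sum of squared residuals of the fitted line with that of a slightly shifted line. The function names (`fit_ols`, `sum_squared_residuals`) and the five weight/height pairs are hypothetical, made up purely for illustration.

```python
def fit_ols(xs, ys):
    """Return (intercept, slope) of the least-squares line through the points."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Slope: how X and Y vary together, divided by how much X varies on its own.
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    # The least-squares line always passes through (mean_x, mean_y).
    intercept = mean_y - slope * mean_x
    return intercept, slope


def sum_squared_residuals(xs, ys, intercept, slope):
    """Sum of squared vertical distances between the points and a given line."""
    return sum((y - (intercept + slope * x)) ** 2 for x, y in zip(xs, ys))


# Hypothetical sample: weight (X) and height (Y) for five people.
weights = [50, 60, 70, 80, 90]
heights = [150, 160, 165, 175, 180]

b0, b1 = fit_ols(weights, heights)
best = sum_squared_residuals(weights, heights, b0, b1)
shifted = sum_squared_residuals(weights, heights, b0 + 1, b1)
print(f"intercept={b0}, slope={b1}, SSR={best}, SSR of shifted line={shifted}")
```

Any other straight line through these points, such as the shifted one, gives a larger sum of squared residuals than the fitted line.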

You can calculate the OLS regression line by hand, but it’s much easier to do so using statistical software like Excel, Desmos, R, or Stata. In this video, Professor AnnMaria De Mars explains how to find the OLS regression equation using Desmos.

Depending on the software you use, the results of your regression analysis may look different. In general, however, your software will display output tables summarizing the main characteristics of your regression.

The values you should be looking for in these output tables fall under three categories:

Coefficients

Regression statistics

Intercept

This is the $\beta_0$ value in your regression equation. It is the y-intercept of your regression line, and it is the estimate of Y when X is equal to zero.

Next to your intercept, you’ll see columns in the table showing additional information about the intercept. These include a standard error, p-value, T-stat, and confidence interval. You can use these values to test whether the estimate of your intercept is statistically significant .

Regression coefficient

This is the $\beta_1$ of your regression equation. It’s the slope of the regression line, and it tells you how much Y should change in response to a 1-unit change in X.

Similar to the intercept, the regression coefficient will have columns to the right of it. They'll show a standard error, p-value, T-stat, and confidence interval. Use these values to test whether your parameter estimate of $\beta_1$ is statistically significant.

Regression Statistics

Correlation coefficient (or multiple r).

This is the Pearson Correlation coefficient. It measures the strength of the correlation between X and Y.

R-squared (or the coefficient of determination)

We calculate this value by squaring the correlation coefficient. It tells you how much of the variance in your dependent variable the independent variable can explain. You can convert $R^2$ into a percentage by multiplying it by 100.
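The relationship between the correlation coefficient and R-squared can be checked numerically. The sketch below, using hypothetical data and helper names (`pearson_r`, `r_squared`), computes R-squared as the share of variance explained by the fitted line and compares it with the squared Pearson correlation. In simple linear regression the two should agree up to rounding error.

```python
import math


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length lists."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)


def r_squared(xs, ys):
    """R-squared of the least-squares line: 1 - (residual SS / total SS)."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    ss_res = sum((y - (intercept + slope * x)) ** 2 for x, y in zip(xs, ys))
    ss_tot = sum((y - mean_y) ** 2 for y in ys)
    return 1 - ss_res / ss_tot


# Hypothetical sample: weight (X) and height (Y) for five people.
weights = [50, 60, 70, 80, 90]
heights = [150, 160, 165, 175, 180]

r = pearson_r(weights, heights)
r2 = r_squared(weights, heights)
print(f"r = {r:.4f}, r squared = {r * r:.4f}, R-squared = {r2:.4f}")
print(f"Share of variance explained: {r2 * 100:.1f}%")
```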

Standard error of the residuals

The standard error of the residuals is a measure of the typical size of the errors in your model. You can think of it as roughly the average vertical distance between each point on your scatter plot and the regression line. We measure this value in the same units as your dependent variable.

Degrees of freedom

In simple linear regression, the degrees of freedom equal the number of data points you used minus the two estimated parameters. The parameters are the intercept and regression coefficient.
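To show how the degrees of freedom enter the standard error of the residuals, here is a small sketch using the usual formula: the square root of the sum of squared residuals divided by n minus 2. The data and the function names (`ols_residuals`, `residual_standard_error`) are hypothetical. The snippet also prints the plain average of the absolute residuals for comparison, since the two numbers are close but not identical.

```python
import math


def ols_residuals(xs, ys):
    """Fit the least-squares line and return the list of residuals."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return [y - (intercept + slope * x) for x, y in zip(xs, ys)]


def residual_standard_error(xs, ys):
    """sqrt(SSR / (n - 2)); two parameters (intercept, slope) were estimated."""
    residuals = ols_residuals(xs, ys)
    degrees_of_freedom = len(xs) - 2
    ssr = sum(e ** 2 for e in residuals)
    return math.sqrt(ssr / degrees_of_freedom)


# Hypothetical sample: weight (X) and height (Y) for five people.
weights = [50, 60, 70, 80, 90]
heights = [150, 160, 165, 175, 180]

rse = residual_standard_error(weights, heights)
mean_abs = sum(abs(e) for e in ols_residuals(weights, heights)) / len(weights)
print(f"residual standard error = {rse:.3f} (df = {len(weights) - 2})")
print(f"mean absolute residual  = {mean_abs:.3f}")
```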

Some software will also output a 5-number summary of your residuals. It'll show the minimum, first quartile , median , third quartile, and maximum values of your residuals.

P-value (or Significance F) - This is the p-value of your regression model.

It returns a hypothesis test's results where the null hypothesis is that no relationship exists between X and Y. The alternative hypothesis is that a linear relationship exists between X and Y.

If you are using a significance level (or alpha level) of 0.05, you would reject the null hypothesis if the p-value is less than or equal to 0.05. You would fail to reject the null hypothesis if your p-value is greater than 0.05.

What are correlations?

A correlation is a measure of the relationship between two variables.

Positive Correlations - If two variables, X and Y, have a positive linear correlation, Y tends to increase as X increases, and Y tends to decrease as X decreases. In other words, the two variables tend to move together in the same direction.

Negative Correlations - Two variables, X and Y, have a negative correlation if Y tends to increase as X decreases and Y tends to decrease as X increases. (i.e., The values of the two variables tend to move in opposite directions).

What’s the difference between the dependent and independent variables in a regression?

A simple linear regression involves two variables: X, the input or independent variable, and Y, the output or dependent variable. The dependent variable is the variable you want to estimate using the regression. Its estimated value “depends” on the parameters and other variables of the model.

The independent variable—also called the predictor variable—is an input in the model. Its value does not depend on the other elements of the model.

Is the correlation coefficient the same as the regression coefficient?

The correlation coefficient and the regression coefficient will both have the same sign (positive or negative), but they are not the same. The only case where these two values will be equal is when the values of X and Y have been standardized to the same scale.
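The link between the two coefficients can be illustrated with a short sketch. It relies on the standard identity that the OLS slope equals the correlation coefficient multiplied by the ratio of the standard deviations of Y and X, so the two coincide once both variables are converted to z-scores. The data and helper names below are hypothetical.

```python
import math


def mean(values):
    return sum(values) / len(values)


def sample_sd(values):
    """Sample standard deviation (divides by n - 1)."""
    m = mean(values)
    return math.sqrt(sum((v - m) ** 2 for v in values) / (len(values) - 1))


def pearson_r(xs, ys):
    mx, my = mean(xs), mean(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)


def ols_slope(xs, ys):
    mx, my = mean(xs), mean(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    return sxy / sxx


def standardize(values):
    """Convert values to z-scores (mean 0, standard deviation 1)."""
    m, s = mean(values), sample_sd(values)
    return [(v - m) / s for v in values]


# Hypothetical sample: weight (X) and height (Y) for five people.
weights = [50, 60, 70, 80, 90]
heights = [150, 160, 165, 175, 180]

r = pearson_r(weights, heights)
slope = ols_slope(weights, heights)
slope_from_r = r * sample_sd(heights) / sample_sd(weights)
standardized_slope = ols_slope(standardize(weights), standardize(heights))

print(f"r = {r:.4f}, slope = {slope:.4f}")
print(f"r * sd(Y) / sd(X) = {slope_from_r:.4f}")
print(f"slope on z-scores = {standardized_slope:.4f}")
```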

What is a correlation coefficient?

A correlation coefficient (or Pearson’s correlation coefficient) measures the strength of the linear relationship between X and Y. It’s a number ranging between -1 and 1. The closer a correlation coefficient is to 0, the weaker the correlation is between X and Y.

The closer the correlation coefficient is to 1 or -1, the stronger the correlation. Points on a scatter plot will be more dispersed around the regression line when the correlation between X and Y is weak, and the points will be more tightly clustered around the regression line when the correlation is strong.

What is the regression coefficient?

The regression coefficient, $\beta_1$, is the slope of the regression line. It provides you with an estimate of how much the dependent variable, Y, will change in response to a 1-unit increase in the independent variable, X.

The regression coefficient can be any number from $-\infty$ to $\infty$. A positive regression coefficient implies a positive correlation between X and Y, and a negative regression coefficient implies a negative correlation.

Can I use linear regression in Excel?

Yes. The easiest way to add a simple linear regression line in Excel is to install and use Excel’s “Analysis Toolpak” add-in. To do this, go to Tools > Excel Add-ins and select the “Analysis Toolpak.”

Next, follow these steps.

In your spreadsheet, enter your data for X and Y in two columns

Navigate to the “Data” tab and click on the “Data Analysis” icon

From the list of analysis tools, select “Regression” and click “OK”

Select the data for Y and X respectively where it says “Input Y Range” and “Input X Range”

If you’ve labeled your columns with the names of your X and Y variables, click on the “Labels” checkbox.

You can further customize where you want your regression in your workbook and what additional information you would like Excel to display.

Once you’ve finished customizing, click “OK”

Your regression results will display next to your data or in a new sheet.

Is linear regression used to establish causal relationships?

Correlations are not equivalent to causation. If two variables are correlated, you cannot immediately conclude one causes the other to change. A linear regression will immediately indicate whether two variables correlate. But you’ll need to include more variables in your model and use regression with causal theories to draw conclusions about causal relationships.

What are some other types of regression analysis?

Simple linear regression is the most basic form of regression analysis. It involves one independent variable and one dependent variable. Once you get a handle on this model, you can move on to more sophisticated forms of regression analysis. These include multiple linear regression and nonlinear regression.

Multiple linear regression is a model that estimates the linear relationship between variables using one dependent variable and multiple predictor variables. Nonlinear regression is a method used to estimate nonlinear relationships between variables.


The complete guide to regression analysis.

What is regression analysis and why is it useful? While most of us have heard the term, understanding regression analysis in detail may be something you need to brush up on. Here’s what you need to know about this popular method of analysis.

When you rely on data to drive and guide business decisions, as well as predict market trends, just gathering and analyzing what you find isn’t enough — you need to ensure it’s relevant and valuable.

The challenge, however, is that so many variables can influence business data: market conditions, economic disruption, even the weather! As such, it’s essential you know which variables are affecting your data and forecasts, and what data you can discard.

And one of the most effective ways to determine data value and monitor trends (and the relationships between them) is to use regression analysis, a set of statistical methods used for the estimation of relationships between independent and dependent variables.

In this guide, we’ll cover the fundamentals of regression analysis, from what it is and how it works to its benefits and practical applications.


What is regression analysis?

Regression analysis is a statistical method. It’s used for analyzing different factors that might influence an objective – such as the success of a product launch, business growth, a new marketing campaign – and determining which factors are important and which ones can be ignored.

Regression analysis can also help leaders understand how different variables impact each other and what the outcomes are. For example, when forecasting financial performance, regression analysis can help leaders determine how changes in the business can influence revenue or expenses in the future.

Running an analysis of this kind, you might find that there’s a high correlation between the number of marketers employed by the company, the leads generated, and the opportunities closed.

This seems to suggest that a high number of marketers and a high number of leads generated influences sales success. But do you need both factors to close those sales? By analyzing the effects of these variables on your outcome, you might learn that when leads increase but the number of marketers employed stays constant, there is no impact on the number of opportunities closed, but if the number of marketers increases, leads and closed opportunities both rise.

Regression analysis can help you tease out these complex relationships so you can determine which areas you need to focus on in order to get your desired results, and avoid wasting time with those that have little or no impact. In this example, that might mean hiring more marketers rather than trying to increase leads generated.

How does regression analysis work?

Regression analysis starts with variables that are categorized into two types: dependent and independent variables. The variables you select depend on the outcomes you’re analyzing.

Understanding variables:

1. Dependent variable

This is the main variable that you want to analyze and predict. Examples include operational (O) data such as your quarterly or annual sales, or experience (X) data such as your net promoter score (NPS) or customer satisfaction score (CSAT).

These variables are also called response variables, outcome variables, or left-hand-side variables (because they appear on the left-hand side of a regression equation).

There are three easy ways to identify them:

- Is the variable measured as an outcome of the study?

- Does the variable depend on another in the study?

- Do you measure the variable only after other variables are altered?

2. Independent variable

Independent variables are the factors that could affect your dependent variables. For example, a price rise in the second quarter could make an impact on your sales figures.

You can identify independent variables with the following list of questions:

- Is the variable manipulated, controlled, or used as a subject grouping method by the researcher?

- Does this variable come before the other variable in time?

- Are you trying to understand whether or how this variable affects another?

Independent variables are often referred to differently in regression depending on the purpose of the analysis. You might hear them called:

Explanatory variables

Explanatory variables are those which explain an event or an outcome in your study. For example, explaining why your sales dropped or increased.

Predictor variables

Predictor variables are used to predict the value of the dependent variable. For example, predicting how much sales will increase when new product features are rolled out .

Experimental variables

These are variables that can be manipulated or changed directly by researchers to assess the impact. For example, assessing how different product pricing ($10 vs $15 vs $20) will impact the likelihood to purchase.

Subject variables (also called fixed effects)

Subject variables can’t be changed directly, but vary across the sample. For example, age, gender, or income of consumers.

Unlike experimental variables, you can’t randomly assign or change subject variables, but you can design your regression analysis to determine the different outcomes of groups of participants with the same characteristics. For example, ‘how do price rises impact sales based on income?’

Carrying out regression analysis

So regression is about the relationships between dependent and independent variables. But how exactly do you do it?

Assuming you have your data collection done already, the first and foremost thing you need to do is plot your results on a graph. Doing this makes interpreting regression analysis results much easier as you can clearly see the correlations between dependent and independent variables.

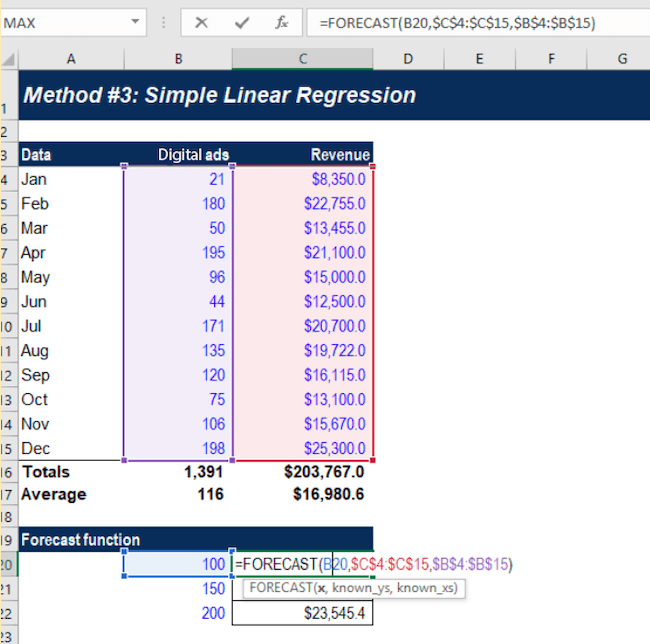

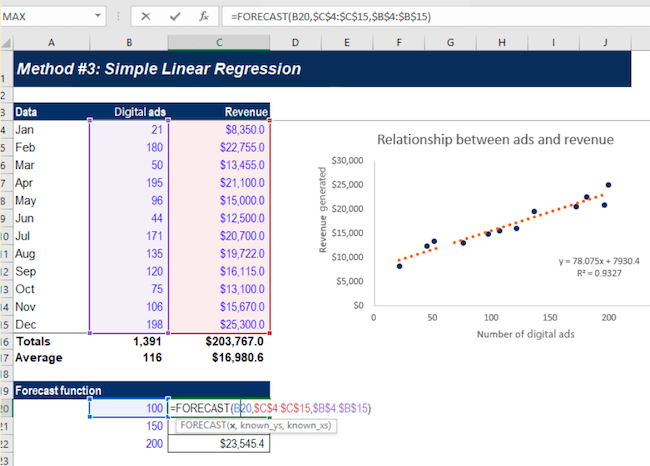

Let’s say you want to carry out a regression analysis to understand the relationship between the number of ads placed and revenue generated.

On the Y-axis, you place the revenue generated. On the X-axis, the number of digital ads. By plotting the information on the graph, and drawing a line (called the regression line) through the middle of the data, you can see the relationship between the number of digital ads placed and revenue generated.

This regression line is the line that provides the best description of the relationship between your independent variables and your dependent variable. In this example, we’ve used a simple linear regression model.

Statistical analysis software can draw this line for you and precisely calculate the regression line. The software then provides a formula for the slope of the line, adding further context to the relationship between your dependent and independent variables.

Simple linear regression analysis

A simple linear model uses a single straight line to determine the relationship between a single independent variable and a dependent variable.

This regression model is mostly used when you want to determine the relationship between two variables (like price increases and sales) or the value of the dependent variable at certain points of the independent variable (for example the sales levels at a certain price rise).

While linear regression is useful, it does require you to make some assumptions.

For example, it requires you to assume that:

- the data was collected using a statistically valid sample collection method that is representative of the target population

- the observed relationship between the variables can’t be explained by a ‘hidden’ third variable – in other words, there are no spurious correlations

- the relationship between the independent variable and dependent variable is linear – meaning that the best fit along the data points is a straight line and not a curved one

Multiple regression analysis

As the name suggests, multiple regression analysis is a type of regression that uses multiple variables. It uses multiple independent variables to predict the outcome of a single dependent variable. Of the various kinds of multiple regression, multiple linear regression is one of the best-known.

Multiple linear regression is a close relative of the simple linear regression model, except that it looks at the impact of several independent variables on one dependent variable. Like simple linear regression, multiple regression analysis also requires you to make some basic assumptions.

For example, you will be assuming that:

- there is a linear relationship between the dependent and independent variables (it creates a straight line and not a curve through the data points)

- the independent variables aren’t highly correlated in their own right

An example of multiple linear regression would be an analysis of how marketing spend, revenue growth, and general market sentiment affect the share price of a company.

With multiple linear regression models you can estimate how these variables will influence the share price, and to what extent.
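To make the idea of several predictors concrete, here is a minimal sketch that fits a multiple linear regression by solving the normal equations in plain Python. The helper names (`solve_linear_system`, `fit_multiple_ols`) and the tiny dataset are hypothetical, and the outcome is constructed without noise so the known coefficients can be recovered. In practice you would normally rely on a statistics package rather than hand-written linear algebra.

```python
def solve_linear_system(a, b):
    """Solve a * x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    # Work on an augmented copy so the inputs are left untouched.
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    # Back substitution.
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        known = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - known) / m[i][i]
    return x


def fit_multiple_ols(rows, ys):
    """Return [intercept, b1, b2, ...] for predictors given as rows of values."""
    design = [[1.0] + list(row) for row in rows]  # leading 1 for the intercept
    k = len(design[0])
    xtx = [[sum(d[i] * d[j] for d in design) for j in range(k)] for i in range(k)]
    xty = [sum(d[i] * y for d, y in zip(design, ys)) for i in range(k)]
    return solve_linear_system(xtx, xty)


# Hypothetical data: two predictors per observation (for example, marketing
# spend and a market sentiment score). The outcome is built as
# 2 + 3 * x1 - 1 * x2 with no noise, so the fit should recover 2, 3 and -1.
predictors = [[1, 2], [2, 1], [3, 4], [4, 3], [5, 6]]
outcome = [2 + 3 * x1 - 1 * x2 for x1, x2 in predictors]

coefficients = fit_multiple_ols(predictors, outcome)
print([round(c, 6) for c in coefficients])
```

Each coefficient estimates the change in the outcome for a 1-unit change in that predictor while the other predictor is held constant.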

Multivariate linear regression

Multivariate linear regression involves more than one dependent variable as well as multiple independent variables, making it more complicated than linear or multiple linear regressions. However, this also makes it much more powerful and capable of making predictions about complex real-world situations.

For example, if an organization wants to establish or estimate how the COVID-19 pandemic has affected employees in its different markets, it can use multivariate linear regression, with the different geographical regions as dependent variables and the different facets of the pandemic as independent variables (such as mental health self-rating scores, proportion of employees working at home, lockdown durations and employee sick days).

Through multivariate linear regression, you can look at relationships between variables in a holistic way and quantify the relationships between them. As you can clearly visualize those relationships, you can make adjustments to dependent and independent variables to see which conditions influence them. Overall, multivariate linear regression provides a more realistic picture than looking at a single variable.

However, because multivariate techniques are complex, they involve high-level mathematics and usually require a statistical program to analyze the data.

Logistic regression

Logistic regression models the probability of a binary outcome based on independent variables.

So, what is a binary outcome? It’s when there are only two possible scenarios: either the event happens (1) or it doesn’t (0). Examples include yes/no outcomes and pass/fail outcomes. In other words, the outcome can be described as falling into one of two categories.

Logistic regression makes predictions based on independent variables that are assumed or known to have an influence on the outcome. For example, the probability of a sports team winning their game might be affected by independent variables like weather, day of the week, whether they are playing at home or away and how they fared in previous matches.
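
To make the mechanics a little more concrete, here is a minimal sketch of how a logistic model turns independent variables into a probability. The coefficients are hypothetical values chosen for illustration, not fitted to any real match data, and the function names are our own.

```python
import math


def sigmoid(z):
    """Map any real number to a probability between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-z))


# Hypothetical coefficients, chosen for illustration rather than estimated.
INTERCEPT = -0.5
COEF_HOME = 0.8         # 1 if playing at home, 0 if away
COEF_RECENT_WINS = 0.3  # number of wins in the last five matches


def win_probability(playing_at_home, recent_wins):
    # The linear part gives the log-odds; the sigmoid converts it to a probability.
    log_odds = INTERCEPT + COEF_HOME * playing_at_home + COEF_RECENT_WINS * recent_wins
    return sigmoid(log_odds)


home = win_probability(1, 3)
away = win_probability(0, 3)
print(f"home: {home:.2f}, away: {away:.2f}")
```

In a real analysis the coefficients would be estimated from historical results, typically by maximum likelihood in a statistical package.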

What are some common mistakes with regression analysis?

Across the globe, businesses are increasingly relying on quality data and insights to drive decision-making — but to make accurate decisions, it’s important that the data collected and statistical methods used to analyze it are reliable and accurate.

Using the wrong data or the wrong assumptions can result in poor decision-making, lead to missed opportunities to improve efficiency and savings, and — ultimately — damage your business long term.

- Assumptions

When running regression analysis, be it a simple linear or multiple regression, it’s really important to check that the assumptions your chosen method requires have been met. If your data points don’t conform to a straight line of best fit, for example, you need to apply additional statistical modifications to accommodate the non-linear data. For example, if you are looking at income data, which tends to be heavily right-skewed, you can use the natural log of income as your variable and then convert the results back to the original scale after the model is created.
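
The log-transform idea can be sketched as follows. This assumes income is the dependent variable and years of experience is the only predictor. Both the data and the names (`fit_line`, `predict_income`) are made up for illustration.

```python
import math


def fit_line(x, y):
    """Ordinary least squares for one predictor; returns (intercept, slope)."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    slope = sxy / sxx
    return mean_y - slope * mean_x, slope


# Made-up data: income grows by a constant 8% per year of experience.
years = [0, 2, 4, 6, 8, 10]
income = [30000 * 1.08 ** t for t in years]

# Fit on the log scale, where the relationship is a straight line.
log_income = [math.log(v) for v in income]
intercept, slope = fit_line(years, log_income)


def predict_income(t):
    # Undo the log with exp() to get back to currency units.
    return math.exp(intercept + slope * t)


print(round(predict_income(5), 2))
```

With noisy real data, exponentiating a prediction made on the log scale estimates a typical (median-like) value rather than the mean, so the back-transformed figure may need a further correction depending on what you want to report.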

- Correlation vs. causation

It’s a well-worn phrase that bears repeating: correlation does not equal causation. While variables that are linked by causality will usually show some correlation, the reverse is not always true. Moreover, there is no statistic that can determine causality (although the design of your study overall can).

If you observe a correlation in your results, such as in the first example we gave in this article where there was a correlation between leads and sales, you can’t assume that one thing has influenced the other. Instead, you should use it as a starting point for investigating the relationship between the variables in more depth.

- Choosing the wrong variables to analyze

Before you use any kind of statistical method, it’s important to understand the subject you’re researching in detail. Doing so means you’re making informed choices of variables and you’re not overlooking something important that might have a significant bearing on your dependent variable.

- Model building

The variables you include in your analysis are just as important as the variables you choose to exclude. That’s because the strength of each independent variable is influenced by the other variables in the model. Other techniques, such as Key Drivers Analysis, are able to account for these variable interdependencies.

Benefits of using regression analysis

There are several benefits to using regression analysis to judge how changing variables will affect your business and to ensure you focus on the right things when forecasting.

Here are just a few of those benefits:

Make accurate predictions

Regression analysis is commonly used when forecasting and forward planning for a business. For example, when predicting sales for the year ahead, a number of different variables will come into play to determine the eventual result.

Regression analysis can help you determine which of these variables are likely to have the biggest impact based on previous events and help you make more accurate forecasts and predictions.

Identify inefficiencies

Using a regression equation a business can identify areas for improvement when it comes to efficiency, either in terms of people, processes, or equipment.

For example, regression analysis can help a car manufacturer determine order numbers based on external factors like the economy or environment.

They can then use the regression equation to determine how many members of staff and how much equipment they need to meet orders.

Drive better decisions

Improving processes or business outcomes is always on the minds of owners and business leaders, but without actionable data, they’re simply relying on instinct, and this doesn’t always work out.

This is particularly true when it comes to issues of price. For example, to what extent will raising the price (and to what level) affect next quarter’s sales?

There’s no way to know this without data analysis. Regression analysis can help provide insights into the correlation between price rises and sales based on historical data.

How do businesses use regression? A real-life example

Marketing and advertising spending are common topics for regression analysis. Companies use regression when trying to assess the value of ad spend and marketing spend on revenue.

A typical example is using a regression equation to assess the correlation between ad costs and conversions of new customers. In this instance,

- our dependent variable (the factor we’re trying to assess the outcomes of) will be our conversions

- the independent variable (the factor we’ll change to assess how it changes the outcome) will be the daily ad spend

- the regression equation will try to determine whether an increase in ad spend has a direct correlation with the number of conversions we have

The analysis is relatively straightforward — using historical data from an ad account, we can use daily data to judge ad spend vs conversions and how changes to the spend alter the conversions.

By assessing this data over time, we can make predictions not only on whether increasing ad spend will lead to increased conversions but also what level of spending will lead to what increase in conversions. This can help to optimize campaign spend and ensure marketing delivers good ROI.
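
A minimal sketch of that analysis, using least squares on a handful of invented daily figures (the numbers and the names `fit_line` and `predict_conversions` are assumptions for illustration, not data from a real ad account):

```python
def fit_line(x, y):
    """Ordinary least squares for one predictor; returns (intercept, slope)."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    slope = sxy / sxx
    return mean_y - slope * mean_x, slope


# Hypothetical history: daily ad spend (independent) and conversions (dependent).
daily_ad_spend = [100, 200, 300, 400, 500]
daily_conversions = [12, 19, 31, 42, 48]

intercept, slope = fit_line(daily_ad_spend, daily_conversions)


def predict_conversions(spend):
    # Estimated conversions for a given daily spend, according to the fitted line.
    return intercept + slope * spend


print(f"extra conversions per extra unit of spend: {slope:.3f}")
print(f"predicted conversions at a spend of 600: {predict_conversions(600):.1f}")
```

The slope is the estimated change in conversions per additional unit of spend. Note that 600 lies outside the range of the observed data, so that prediction is an extrapolation and should be treated with extra caution.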

This is an example of a simple linear model. To build a more complex regression model, we could also factor in other independent variables such as seasonality, GDP, and the current reach of our chosen advertising networks.

By increasing the number of independent variables, we can get a better understanding of whether ad spend is resulting in an increase in conversions, whether it’s exerting an influence in combination with another set of variables, or if we’re dealing with a correlation with no causal impact – which might be useful for predictions anyway, but isn’t a lever we can use to increase sales.

Using the estimated coefficient of each independent variable, we can more accurately predict how a change in spend will affect conversions.

Regression analysis tools

Regression analysis is an important tool when it comes to better decision-making and improved business outcomes. To get the best out of it, you need to invest in the right kind of statistical analysis software.

The best option is likely to be one that sits at the intersection of powerful statistical analysis and intuitive ease of use, as this will empower everyone from beginners to expert analysts to uncover meaning from data, identify hidden trends and produce predictive models without statistical training being required.

To help prevent costly errors, choose a tool that automatically runs the right statistical tests and visualizations and then translates the results into simple language that anyone can put into action.

With software that’s both powerful and user-friendly, you can isolate key experience drivers, understand what influences the business, apply the most appropriate regression methods, identify data issues, and much more.

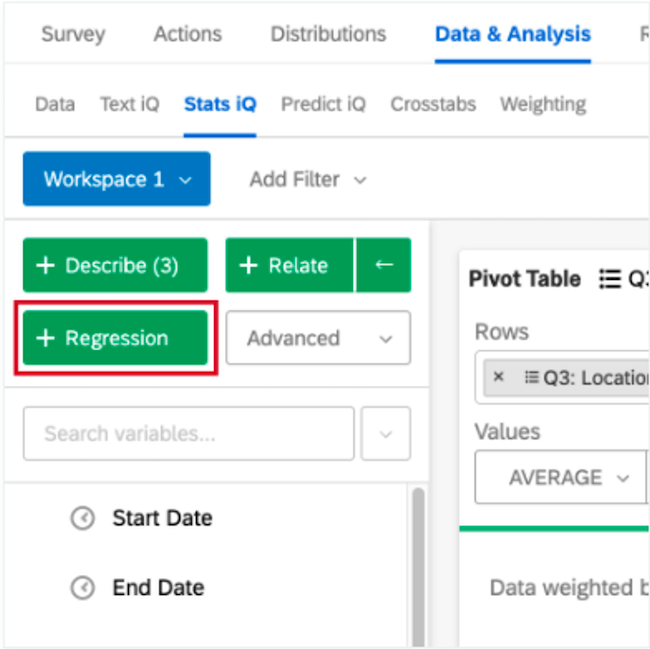

With Qualtrics’ Stats iQ™, you don’t have to worry about the regression equation because our statistical software will run the appropriate equation for you automatically based on the variable type you want to monitor. You can also use several equations, including linear regression and logistic regression, to gain deeper insights into business outcomes and make more accurate, data-driven decisions.

Lesson 1: Simple Linear Regression

Overview

Simple linear regression is a statistical method that allows us to summarize and study relationships between two continuous (quantitative) variables. This lesson introduces the concept and basic procedures of simple linear regression.

Upon completion of this lesson, you should be able to:

- Distinguish between a deterministic relationship and a statistical relationship.

- Understand the concept of the least squares criterion.

- Interpret the intercept \(b_{0}\) and slope \(b_{1}\) of an estimated regression equation.

- Know how to obtain the estimates \(b_{0}\) and \(b_{1}\) from Minitab's fitted line plot and regression analysis output.

- Recognize the distinction between a population regression line and the estimated regression line.

- Summarize the four conditions that comprise the simple linear regression model.

- Know what the unknown population variance \(\sigma^{2}\) quantifies in the regression setting.

- Know how to obtain the estimate, MSE, of the unknown population variance \(\sigma^{2}\) from Minitab's fitted line plot and regression analysis output.

- Know that the coefficient of determination (\(R^2\)) and the correlation coefficient (r) are measures of linear association. That is, they can be 0 even if there is a perfect nonlinear association.

- Know how to interpret the \(R^2\) value.

- Understand the cautions necessary in using the \(R^2\) value as a way of assessing the strength of the linear association.

- Know how to calculate the correlation coefficient r from the \(R^2\) value.

- Know what various correlation coefficient values mean. There is no meaningful interpretation for the correlation coefficient as there is for the \(R^2\) value.
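
Several of the quantities in the objectives above can be computed by hand, which may help when checking software output. The sketch below is not Minitab output: it is a plain-Python illustration on two tiny invented data sets, and the function name `simple_regression_summary` is our own. It uses the usual definitions: MSE is SSE divided by \(n - 2\), and r is the square root of \(R^2\) with the sign of the slope.

```python
import math


def simple_regression_summary(x, y):
    """Least squares estimates b0 and b1, plus MSE, R^2 and r."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    b1 = sxy / sxx                  # slope
    b0 = mean_y - b1 * mean_x       # intercept
    sse = sum((yi - (b0 + b1 * xi)) ** 2 for xi, yi in zip(x, y))
    ssto = sum((yi - mean_y) ** 2 for yi in y)
    mse = sse / (n - 2)             # estimate of the error variance sigma^2
    r_squared = max(1 - sse / ssto, 0.0)  # guard against tiny negative rounding
    r = math.copysign(math.sqrt(r_squared), b1)  # r takes the sign of the slope
    return {"b0": b0, "b1": b1, "mse": mse, "r_squared": r_squared, "r": r}


# A roughly linear, invented data set.
linear = simple_regression_summary([1, 2, 3, 4, 5], [2, 4, 5, 4, 5])

# A perfect nonlinear (quadratic) relationship: y = x^2 on symmetric x values.
curved = simple_regression_summary([-2, -1, 0, 1, 2], [4, 1, 0, 1, 4])

print(linear)
print(curved)
```

The second data set illustrates the caution in the objectives: y is completely determined by x, yet both \(R^2\) and r come out as 0 because the association is not linear.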

Lesson 1 Code Files

STAT501_Lesson01.zip

- bldgstories.txt

- carstopping.txt

- drugdea.txt

- fev_dat.txt

- heightgpa.txt

- husbandwife.txt

- oldfaithful.txt

- poverty.txt

- practical.txt

- signdist.txt

- skincancer.txt

- student_height_weight.txt

Regression Analysis: Definition, Types, Usage & Advantages

Regression analysis is perhaps one of the most widely used statistical methods for investigating or estimating the relationship between a set of independent and dependent variables. In statistical analysis, distinguishing between categorical data and numerical data is essential, as categorical data involves distinct categories or labels, while numerical data consists of measurable quantities.

It is also used as a blanket term for various data analysis techniques used in quantitative research for modeling and analyzing numerous variables. In the regression method, the independent variable is a predictor or an explanatory element, and the dependent variable is the outcome or a response to a specific query.

Definition of Regression Analysis

Regression analysis is often used to model or analyze data. Most survey analysts use it to understand the relationship between the variables, which can then be used to predict outcomes.

For Example – Suppose a soft drink company wants to expand its manufacturing unit to a newer location. Before moving forward, the company wants to analyze its revenue generation model and the various factors that might impact it. Hence, the company conducts an online survey with a specific questionnaire.

After using regression analysis, it becomes easier for the company to analyze the survey results and understand the relationship between different variables like electricity and revenue – here, revenue is the dependent variable.

In addition, understanding the relationship between different independent variables like pricing, number of workers, and logistics with the revenue helps the company estimate the impact of varied factors on sales and profits.