
Annual Review of Psychology

How to Do a Systematic Review: A Best Practice Guide for Conducting and Reporting Narrative Reviews, Meta-Analyses, and Meta-Syntheses

Review article, Volume 70, 2019.

- Andy P. Siddaway (1), Alex M. Wood (2), and Larry V. Hedges (3)

- Affiliations: (1) Behavioural Science Centre, Stirling Management School, University of Stirling, Stirling FK9 4LA, United Kingdom; (2) Department of Psychological and Behavioural Science, London School of Economics and Political Science, London WC2A 2AE, United Kingdom; (3) Department of Statistics, Northwestern University, Evanston, Illinois 60208, USA

- Vol. 70:747-770 (Volume publication date January 2019) https://doi.org/10.1146/annurev-psych-010418-102803

- First published as a Review in Advance on August 08, 2018

- Copyright © 2019 by Annual Reviews. All rights reserved

Systematic reviews are characterized by a methodical and replicable methodology and presentation. They involve a comprehensive search to locate all relevant published and unpublished work on a subject; a systematic integration of search results; and a critique of the extent, nature, and quality of evidence in relation to a particular research question. The best reviews synthesize studies to draw broad theoretical conclusions about what a literature means, linking theory to evidence and evidence to theory. This guide describes how to plan, conduct, organize, and present a systematic review of quantitative (meta-analysis) or qualitative (narrative review, meta-synthesis) information. We outline core standards and principles and describe commonly encountered problems. Although this guide targets psychological scientists, its high level of abstraction makes it potentially relevant to any subject area or discipline. We argue that systematic reviews are a key methodology for clarifying whether and how research findings replicate and for explaining possible inconsistencies, and we call for researchers to conduct systematic reviews to help elucidate whether there is a replication crisis.
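To make the quantitative branch of a systematic review (meta-analysis) more concrete, here is a minimal Python sketch of one standard pooling step: a fixed-effect, inverse-variance weighted average of study effect sizes with a 95% confidence interval. It assumes each included study already reports an effect size on a common metric together with its sampling variance. The function name `fixed_effect_pool` is hypothetical, and this is a generic textbook model, not the specific procedure the guide prescribes.

```python
import math


def fixed_effect_pool(effects, variances):
    """Fixed-effect (inverse-variance) pooled estimate.

    effects:   per-study effect sizes on a common metric (e.g., Hedges' g)
    variances: per-study sampling variances, in the same order
    Returns (pooled_effect, standard_error, (ci_low, ci_high)) for a 95% CI.
    """
    if not effects or len(effects) != len(variances):
        raise ValueError("need at least one study and one variance per effect")
    if any(v <= 0 for v in variances):
        raise ValueError("sampling variances must be positive")

    # More precise studies (smaller variance) receive proportionally more weight.
    weights = [1.0 / v for v in variances]
    total_weight = sum(weights)

    pooled = sum(w * y for w, y in zip(weights, effects)) / total_weight
    se = math.sqrt(1.0 / total_weight)

    z = 1.959964  # two-sided 95% quantile of the standard normal
    return pooled, se, (pooled - z * se, pooled + z * se)
```

For example, `fixed_effect_pool([0.2, 0.4], [0.04, 0.04])` returns a pooled effect of 0.3, because the two hypothetical studies are equally precise and so are weighted equally. A fixed-effect model assumes every study estimates the same true effect; when studies differ in populations or methods, a random-effects model that adds a between-study variance component is usually the more defensible choice.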



- Article Type: Review Article


Systematic Literature Review

- First Online: 01 January 2014


- Aline Dresch, Daniel Pacheco Lacerda, and José Antônio Valle Antunes Jr


This chapter presents a method that can be applied to perform a Systematic Literature Review. The Systematic Literature Review is a critical step in conducting scientific research. This chapter focuses particularly on the importance of this step for research conducted under the Design Science paradigm.

The knowledge of the world is only to be acquired in the world, and not in the closet. (Philip Chesterfield)

Maria Isabel Wolf Motta Morandi, UNISINOS.

Luis Felipe Riehs Camargo, UNISINOS.




Author information

Authors and affiliations.

GMAP | UNISINOS, Porto Alegre/RS, Brazil

Aline Dresch & Daniel Pacheco Lacerda

UNISINOS, Porto Alegre/RS, Brazil

José Antônio Valle Antunes Jr


Corresponding author

Correspondence to Aline Dresch.


Copyright information

© 2015 Springer International Publishing Switzerland

About this chapter

Dresch, A., Lacerda, D.P., Antunes, J.A.V. (2015). Systematic Literature Review. In: Design Science Research. Springer, Cham. https://doi.org/10.1007/978-3-319-07374-3_7


DOI: https://doi.org/10.1007/978-3-319-07374-3_7

Published: 20 August 2014

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-07373-6

Online ISBN: 978-3-319-07374-3

eBook Packages: Business and Economics; Business and Management (R0)


Preparing your manuscript

This section provides general style and formatting information only. Formatting guidelines for specific article types can be found below.

- Methodology

- Systematic review update

General formatting guidelines

- Preparing main manuscript text

- Preparing illustrations and figures

- Preparing tables

- Preparing additional files

Preparing figures

When preparing figures, please follow the formatting instructions below.

- Figures should be numbered in the order they are first mentioned in the text, and uploaded in this order. Multi-panel figures (those with parts a, b, c, d etc.) should be submitted as a single composite file that contains all parts of the figure.

- Figures should be uploaded in the correct orientation.

- Figure titles (max 15 words) and legends (max 300 words) should be provided in the main manuscript, not in the graphic file.

- Figure keys should be incorporated into the graphic, not into the legend of the figure.

- Each figure should be closely cropped to minimize the amount of white space surrounding the illustration. Cropping figures improves accuracy when placing the figure in combination with other elements when the accepted manuscript is prepared for publication on our site. For more information on individual figure file formats, see our detailed instructions.

- Individual figure files should not exceed 10 MB. If a suitable format is chosen, this file size is adequate for extremely high-quality figures.

- Please note that it is the responsibility of the author(s) to obtain permission from the copyright holder to reproduce figures (or tables) that have previously been published elsewhere. For all figures to be open access, authors must have permission from the rights holder if they wish to include images that have been published elsewhere in non-open-access journals. Permission should be indicated in the figure legend, and the original source included in the reference list.
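The first rule in the list above (number figures in the order they are first mentioned) can be checked mechanically before upload. A rough Python sketch, assuming figures are cited in the text as "Figure N" or "Fig. N"; the function name and the citation pattern are hypothetical, and other styles such as "Figures 1 and 2" will be missed.

```python
import re

# Hypothetical citation pattern: "Figure 3", "Fig. 3", "figure 3a" (case-insensitive).
FIG_REF = re.compile(r"\b(?:Figure|Fig\.)\s+(\d+)", re.IGNORECASE)


def figure_order_ok(text: str) -> bool:
    """True if figures are first mentioned as 1, 2, 3, ... with no gaps."""
    first_mentions = []
    for match in FIG_REF.finditer(text):
        number = int(match.group(1))
        if number not in first_mentions:  # only the first mention counts
            first_mentions.append(number)
    # First mentions must run 1, 2, ..., n in exactly that order.
    return first_mentions == list(range(1, len(first_mentions) + 1))
```

Because it compares first mentions against the sequence 1, 2, ..., n, the check flags both out-of-order citations ("Figure 2" before "Figure 1") and skipped numbers ("Figure 1" followed directly by "Figure 3").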

Figure file types

We accept the following file formats for figures:

- EPS (suitable for diagrams and/or images)

- PDF (suitable for diagrams and/or images)

- Microsoft Word (suitable for diagrams and/or images, figures must be a single page)

- PowerPoint (suitable for diagrams and/or images, figures must be a single page)

- TIFF (suitable for images)

- JPEG (suitable for photographic images, less suitable for graphical images)

- PNG (suitable for images)

- BMP (suitable for images)

- CDX (ChemDraw - suitable for molecular structures)

For information and suggestions of suitable file formats for specific figure types, please see our author academy.
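A quick pre-upload check against the accepted formats and the 10 MB per-file limit stated earlier can be sketched in Python. Two assumptions are involved: the mapping of "Microsoft Word" and "PowerPoint" to the extensions below, and reading "10 MB" as 10 × 1024 × 1024 bytes, since the guidelines specify neither. The function name `figure_problems` is hypothetical.

```python
from pathlib import PurePath

# Extensions for the accepted formats listed above. The Word/PowerPoint
# extensions are an assumption; the guidelines name only the applications.
ACCEPTED_EXTENSIONS = {
    ".eps", ".pdf", ".doc", ".docx", ".ppt", ".pptx",
    ".tif", ".tiff", ".jpg", ".jpeg", ".png", ".bmp", ".cdx",
}

# Assumption: "10 MB" is interpreted as 10 MiB.
MAX_BYTES = 10 * 1024 * 1024


def figure_problems(filename: str, size_bytes: int) -> list[str]:
    """Return a list of problems; an empty list means the file passes both checks."""
    problems = []
    ext = PurePath(filename).suffix.lower()  # e.g. "Figure1.TIFF" -> ".tiff"
    if ext not in ACCEPTED_EXTENSIONS:
        problems.append(f"unsupported format: {ext or '(none)'}")
    if size_bytes > MAX_BYTES:
        problems.append(f"file too large: {size_bytes} bytes > {MAX_BYTES}")
    return problems
```

In practice you would pass `os.path.getsize(path)` as `size_bytes`; keeping the size as a parameter makes the check easy to test without real files. It does not verify single-page Word/PowerPoint figures or image content.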

Figure size and resolution

Figures are resized during publication of the final full text and PDF versions to conform to the BioMed Central standard dimensions, which are detailed below.

Figures on the web:

- width of 600 pixels (standard), 1200 pixels (high resolution).

Figures in the final PDF version:

- width of 85 mm for half page width figure

- width of 170 mm for full page width figure

- maximum height of 225 mm for figure and legend

- image resolution of approximately 300 dpi (dots per inch) at the final size

Figures should be designed such that all information, including text, is legible at these dimensions. All lines should be wider than 0.25 pt when constrained to standard figure widths. All fonts must be embedded.
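The print dimensions above translate into pixel counts via pixels = (millimetres / 25.4) × dpi. The following small Python sketch computes the minimum pixel width or height needed to reach 300 dpi at each stated size. Rounding up is our choice, made so that the final size never falls below 300 dpi; the function name `min_pixels` is hypothetical.

```python
import math

MM_PER_INCH = 25.4


def min_pixels(length_mm: float, dpi: int = 300) -> int:
    """Smallest whole pixel count that gives at least `dpi` over `length_mm`."""
    return math.ceil(length_mm / MM_PER_INCH * dpi)


# Print dimensions taken from the guidelines above.
TARGETS = [
    ("half-page width", 85),
    ("full-page width", 170),
    ("maximum height (figure + legend)", 225),
]

for label, mm in TARGETS:
    print(f"{label}: {mm} mm -> {min_pixels(mm)} px at 300 dpi")
```

This prints 1004 px, 2008 px, and 2658 px respectively. Since the guidelines ask for *approximately* 300 dpi, treat these as targets rather than hard cut-offs. Note that the 225 mm height includes the legend, so the image itself may be shorter.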

Figure file compression

- Vector figures should, if possible, be submitted as PDF files, which are usually more compact than EPS files.

- TIFF files should be saved with LZW compression, which is lossless (decreases file size without decreasing quality) in order to minimize upload time.

- JPEG files should be saved at maximum quality.

- Conversion of images between file types (especially lossy formats such as JPEG) should be kept to a minimum to avoid degradation of quality.

If you have any questions or are experiencing a problem with figures, please contact the customer service team.

Preparing main manuscript text

Quick points:

- Use double line spacing

- Include line and page numbering

- Use SI units

- Ensure that all special characters used are embedded in the text; otherwise they will be lost during conversion to PDF

- Do not use page breaks in your manuscript

File formats

The following word processor file formats are acceptable for the main manuscript document:

- Microsoft Word (DOC, DOCX)

- Rich text format (RTF)

- TeX/LaTeX

Please note: editable files are required for processing in production. If your manuscript contains any non-editable files (such as PDFs) you will be required to re-submit an editable file when you submit your revised manuscript, or after editorial acceptance in case no revision is necessary.

Additional information for TeX/LaTeX users

You are encouraged to use the Springer Nature LaTeX template when preparing a submission. A PDF of your manuscript files will be compiled during submission using pdfLaTeX and TeX Live 2021. All relevant editable source files must be uploaded during the submission process. Failing to submit these source files will cause unnecessary delays in the production process.

Style and language

Improving your written English

Presenting your work in well-written English gives editors and reviewers the best chance to understand it and evaluate it fairly.

We have some editing services that can help you to get your writing ready for submission.

Language quality checker

You can upload your manuscript and get a free language check from our partner AJE. The software uses AI to suggest improvements to writing quality. Trained on more than 300,000 research manuscripts from more than 400 areas of study and over 2,000 field-specific topics, the tool delivers fast, highly accurate English-language improvements. Your paper will be digitally edited and returned to you within approximately 10 minutes.


Language and manuscript preparation services

Our experts can help you get your manuscript and language into shape. Our services cover:

- English-language improvement

- Scientific in-depth editing and strategic advice

- Figure and table formatting

- Manuscript formatting to match your target journal

- Specialist academic translation into English from Spanish, Portuguese, Japanese, or Simplified Chinese


Please note that using these tools, or any other service, is not a requirement for publication, nor does it imply or guarantee that editors will accept the article, or even select it for peer review.


Data and materials

For all journals, BioMed Central strongly encourages all datasets on which the conclusions of the manuscript rely to be either deposited in publicly available repositories (where available and appropriate) or presented in the main paper or additional supporting files, in machine-readable format (such as spreadsheets rather than PDFs) whenever possible. Please see the list of recommended repositories in our editorial policies.

For some journals, deposition of the data on which the conclusions of the manuscript rely is an absolute requirement. Please check the Instructions for Authors for the relevant journal and article type for journal specific policies.

For all manuscripts, information about data availability should be detailed in an ‘Availability of data and materials’ section. For more information on the content of this section, please see the Declarations section of the relevant journal’s Instructions for Authors. For more information on BioMed Central’s policies on data availability, please see our editorial policies.

Formatting the 'Availability of data and materials' section of your manuscript

The following format for the 'Availability of data and materials' section of your manuscript should be used:

"The dataset(s) supporting the conclusions of this article is(are) available in the [repository name] repository, [unique persistent identifier and hyperlink to dataset(s) in http:// format]."

The following format is required when data are included as additional files:

"The dataset(s) supporting the conclusions of this article is(are) included within the article (and its additional file(s))."
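The two sentence templates above can be filled in mechanically. The sketch below is a hypothetical Python helper (the function name and the example URL are illustrative assumptions, not part of BioMed Central's guidance) that chooses the repository or additional-files wording and resolves the singular/plural choice the templates write as "dataset(s)" and "is(are)".

```python
from typing import Optional


def availability_statement(repository: Optional[str] = None,
                           identifier: Optional[str] = None,
                           plural: bool = False) -> str:
    """Fill in one of the two 'Availability of data and materials' templates.

    Hypothetical helper. Give both `repository` and `identifier` for
    deposited data; give neither when the data are in additional files.
    """
    if (repository is None) != (identifier is None):
        raise ValueError("give both repository and identifier, or neither")
    # Resolve the "dataset(s)" / "is(are)" choice left open in the templates.
    noun = "datasets" if plural else "dataset"
    verb = "are" if plural else "is"
    opening = f"The {noun} supporting the conclusions of this article {verb}"
    if repository is not None:
        # Repository template: repository name plus persistent identifier/link.
        return f"{opening} available in the {repository} repository, {identifier}."
    # Additional-files template.
    return f"{opening} included within the article (and its additional file(s))."


# Hypothetical repository and URL, for illustration only.
print(availability_statement("Zenodo", "https://example.org/dataset/123"))
print(availability_statement(plural=True))
```

Raising an error when only one of the two repository arguments is supplied keeps the helper from silently producing a half-filled repository sentence.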

BioMed Central endorses the Force 11 Data Citation Principles and requires that all publicly available datasets be fully referenced in the reference list with an accession number or unique identifier such as a DOI.

For databases, this section should state the web/ftp address at which the database is available and any restrictions to its use by non-academics.

For software, this section should include:

- Project name: e.g. My bioinformatics project

- Project home page: e.g. http://sourceforge.net/projects/mged

- Archived version: DOI or unique identifier of archived software or code in repository (e.g. Zenodo)

- Operating system(s): e.g. Platform independent

- Programming language: e.g. Java

- Other requirements: e.g. Java 1.3.1 or higher, Tomcat 4.0 or higher

- License: e.g. GNU GPL, FreeBSD etc.

- Any restrictions to use by non-academics: e.g. licence needed

Information on available repositories for other types of scientific data, including clinical data, can be found in our editorial policies.

See our editorial policies for author guidance on good citation practice.

Please check the submission guidelines for the relevant journal and article type.

What should be cited?

Only articles, clinical trial registration records and abstracts that have been published or are in press, or are available through public e-print/preprint servers, may be cited.

Unpublished abstracts, unpublished data and personal communications should not be included in the reference list, but may be included in the text and referred to as "unpublished observations" or "personal communications" giving the names of the involved researchers. Obtaining permission to quote personal communications and unpublished data from the cited colleagues is the responsibility of the author. Only footnotes are permitted. Journal abbreviations follow Index Medicus/MEDLINE.

Any in press articles cited within the references and necessary for the reviewers' assessment of the manuscript should be made available if requested by the editorial office.

How to format your references

Please check the Instructions for Authors for the relevant journal and article type for examples of the relevant reference style.

Web links and URLs: All web links and URLs, including links to the authors' own websites, should be given a reference number and included in the reference list rather than within the text of the manuscript. They should be provided in full, including both the title of the site and the URL, as well as the date the site was accessed, in the following format: The Mouse Tumor Biology Database. http://tumor.informatics.jax.org/mtbwi/index.do . Accessed 20 May 2013. If an author or group of authors can clearly be associated with a web link, such as for weblogs, then they should be included in the reference.
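A minimal sketch, assuming Python, of assembling a web-link reference in the format above (site title, full URL, access date). `format_web_reference` is a hypothetical helper; the month names are spelled out explicitly so the output does not depend on the system locale.

```python
from datetime import date

# Spelled out so the result does not depend on the system locale.
MONTHS = ("January", "February", "March", "April", "May", "June", "July",
          "August", "September", "October", "November", "December")


def format_web_reference(title: str, url: str, accessed: date) -> str:
    """Hypothetical helper: return 'Title. URL. Accessed D Month YYYY.'"""
    accessed_text = f"{accessed.day} {MONTHS[accessed.month - 1]} {accessed.year}"
    return f"{title}. {url}. Accessed {accessed_text}."


print(format_web_reference(
    "The Mouse Tumor Biology Database",
    "http://tumor.informatics.jax.org/mtbwi/index.do",
    date(2013, 5, 20),
))
```

Run as written, this reproduces the Mouse Tumor Biology Database example from the guidance.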

Authors may wish to make use of reference management software to ensure that reference lists are correctly formatted.

Preparing tables

When preparing tables, please follow the formatting instructions below.

- Tables should be numbered and cited in the text in sequence using Arabic numerals (i.e. Table 1, Table 2 etc.).

- Tables less than one A4 or Letter page in length can be placed in the appropriate location within the manuscript.

- Tables larger than one A4 or Letter page in length can be placed at the end of the document text file. Please cite and indicate where the table should appear at the relevant location in the text file so that the table can be added in the correct place during production.

- Larger datasets, or tables too wide for an A4 or Letter landscape page, can be uploaded as additional files. Please see below for more information.

- Tabular data provided as additional files can be uploaded as an Excel spreadsheet (.xls) or comma separated values (.csv). Please use the standard file extensions.

- Table titles (max 15 words) should be included above the table, and legends (max 300 words) should be included underneath the table.

- Tables should not be embedded as figures or spreadsheet files, but should be formatted using ‘Table object’ function in your word processing program.

- Color and shading may not be used. Parts of the table can be highlighted using superscript, numbering, lettering, symbols or bold text, the meaning of which should be explained in a table legend.

- Commas should not be used to indicate numerical values.
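Two of the table rules above are countable limits (titles of at most 15 words, legends of at most 300 words). A hypothetical pre-submission check might look like the following; it assumes that a "word" is any whitespace-separated token, which may not match how the editorial office counts.

```python
from __future__ import annotations

TITLE_MAX_WORDS = 15
LEGEND_MAX_WORDS = 300


def check_table_text(title: str, legend: str = "") -> list[str]:
    """Hypothetical check: list problems with a table's title and legend length."""
    problems = []
    # Assumption: a word is any whitespace-separated token.
    title_words = len(title.split())
    if title_words > TITLE_MAX_WORDS:
        problems.append(f"title has {title_words} words (max {TITLE_MAX_WORDS})")
    legend_words = len(legend.split())
    if legend_words > LEGEND_MAX_WORDS:
        problems.append(f"legend has {legend_words} words (max {LEGEND_MAX_WORDS})")
    return problems


print(check_table_text("Characteristics of included studies"))  # []
```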

If you have any questions or are experiencing a problem with tables, please contact the customer service team at [email protected].

Preparing additional files

As the length and quantity of data is not restricted for many article types, authors can provide datasets, tables, movies, or other information as additional files.

All additional files will be published along with the accepted article. Do not include files such as patient consent forms, certificates of language editing, or revised versions of the main manuscript document with tracked changes. Such files, if requested, should be sent by email to the journal’s editorial email address, quoting the manuscript reference number. Please do not send completed patient consent forms unless requested.

Results that would otherwise be indicated as "data not shown" should be included as additional files. Since many web links and URLs rapidly become broken, BioMed Central requires that supporting data are included as additional files, or deposited in a recognized repository. Please do not link to data on a personal/departmental website. Do not include any individual participant details. The maximum file size for additional files is 20 MB each, and files will be virus-scanned on submission. Each additional file should be cited in sequence within the main body of text.

If additional material is provided, please list the following information in a separate section of the manuscript text:

- File name (e.g. Additional file 1)

- File format including the correct file extension for example .pdf, .xls, .txt, .pptx (including name and a URL of an appropriate viewer if format is unusual)

- Title of data

- Description of data

Additional files should be named "Additional file 1" and so on and should be referenced explicitly by file name within the body of the article, e.g. 'An additional movie file shows this in more detail [see Additional file 1]'.
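The naming and listing rules above are regular enough to generate. This sketch is illustrative only: the `AdditionalFile` record and `additional_files_section` function are hypothetical, the file format is taken from the extension of the uploaded file name, and the viewer name and URL required for unusual formats are left out.

```python
import os
from dataclasses import dataclass


@dataclass
class AdditionalFile:
    """Hypothetical record for one additional file."""
    filename: str     # name of the uploaded file, e.g. "screening.xls"
    title: str
    description: str


def additional_files_section(files: list) -> str:
    """List files as 'Additional file N' with format, title and description.

    Files are numbered in the order given, which should match the order in
    which they are cited in the main text.
    """
    blocks = []
    for number, item in enumerate(files, start=1):
        extension = os.path.splitext(item.filename)[1] or "(no extension)"
        blocks.append("\n".join([
            f"Additional file {number}",
            f"File format: {extension}",
            f"Title: {item.title}",
            f"Description: {item.description}",
        ]))
    return "\n\n".join(blocks)


# Hypothetical files, for illustration only.
print(additional_files_section([
    AdditionalFile("screening.xls", "Screening log", "Records screened at each stage."),
    AdditionalFile("procedure.mp4", "Procedure video", "Shows the procedure in more detail."),
]))
```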

For further guidance on how to use additional files or recommendations on how to present particular types of data or information, please see How to use additional files.


Annual Journal Metrics

Citation Impact 2023
- Journal Impact Factor: 6.3
- 5-year Journal Impact Factor: 4.5
- Source Normalized Impact per Paper (SNIP): 1.919
- SCImago Journal Rank (SJR): 1.620

Speed 2023
- Submission to first editorial decision (median days): 92
- Submission to acceptance (median days): 296

Usage 2023
- Downloads: 3,531,065
- Altmetric mentions: 3,533


Systematic Reviews

ISSN: 2046-4053



Five tips for developing useful literature summary tables for writing review articles

Volume 24, Issue 2


- Ahtisham Younas 1, 2 (ORCID: http://orcid.org/0000-0003-0157-5319)

- Parveen Ali 3, 4 (ORCID: http://orcid.org/0000-0002-7839-8130)

- 1 Memorial University of Newfoundland, St John's, Newfoundland, Canada

- 2 Swat College of Nursing, Pakistan

- 3 School of Nursing and Midwifery, University of Sheffield, Sheffield, South Yorkshire, UK

- 4 Sheffield University Interpersonal Violence Research Group, Sheffield University, Sheffield, UK

- Correspondence to Ahtisham Younas, Memorial University of Newfoundland, St John's, NL A1C 5C4, Canada; ay6133{at}mun.ca

https://doi.org/10.1136/ebnurs-2021-103417


Introduction

Literature reviews offer a critical synthesis of empirical and theoretical literature to assess the strength of evidence, develop guidelines for practice and policymaking, and identify areas for future research. 1 A review is often essential and is usually the first task in any research endeavour, particularly in master's or doctoral level education. For effective data extraction and rigorous synthesis in reviews, the use of literature summary tables is of utmost importance. A literature summary table provides a synopsis of an included article. It succinctly presents the article's purpose, methods, findings and other information pertinent to the review. The aim of developing these literature summary tables is to give the reader the key information at a glance. Since there are multiple types of reviews (eg, systematic, integrative, scoping, critical and mixed methods) with distinct purposes and techniques, 2 there are various approaches to developing literature summary tables, which makes this a complex task, especially for novice researchers or reviewers. Here, we offer five tips, relevant to all types of reviews, to help authors of review articles create useful and relevant literature summary tables. We also provide examples from our published reviews to illustrate how useful literature summary tables can be developed and what sort of information they should provide.

Tip 1: provide detailed information about frameworks and methods


Figure 1 Tabular literature summaries from a scoping review. Source: Rasheed et al. 3

The provision of information about conceptual and theoretical frameworks and methods is useful for several reasons. First, in quantitative reviews (reviews synthesising the results of quantitative studies) and mixed reviews (reviews synthesising the results of both qualitative and quantitative studies to address a mixed review question), it allows readers to assess whether the core findings and methods are congruent with the adopted framework and the assumptions tested. In qualitative reviews (reviews synthesising the results of qualitative studies), this information helps readers recognise the underlying philosophical and paradigmatic stance of the authors of the included articles. For example, imagine that the authors of an article included in a review used phenomenological inquiry for their research. In that case, the review authors and the readers of the review need to know which philosophical stance (transcendental or hermeneutic) guided the inquiry. Review authors should, therefore, include the philosophical stance in their literature summary for that article. Second, information about frameworks and methods enables review authors and readers to judge the quality of the research and thus discern the strengths and limitations of the article. For example, suppose the authors of an included article intended to develop a new scale and test its psychometric properties. To achieve this aim, they used a convenience sample of 150 participants and performed exploratory factor analysis (EFA) and confirmatory factor analysis (CFA) on the same sample. Such an approach indicates a flawed methodology, because EFA and CFA should not be conducted on the same sample. The review authors must include this information in their summary table. Omitting it from a summary could lead to the inclusion of a flawed article in the review, thereby jeopardising the review's rigour.
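One common way to avoid running EFA and CFA on the same data is to split the sample at random, exploring the factor structure in one half and confirming it in the other; collecting a second, independent sample is another. The sketch below shows only the random split. The function name and seed are hypothetical choices, and the article itself does not prescribe this remedy. With 150 participants each half has 75, and whether that is large enough for factor analysis is a separate judgement a reviewer would also note.

```python
import random


def split_sample(participant_ids, seed=2021):
    """Hypothetical helper: randomly split participants into two disjoint halves.

    The first half is for exploratory factor analysis (EFA) and the second for
    confirmatory factor analysis (CFA), so the structure found by the EFA is
    tested on data that did not generate it.
    """
    ids = list(participant_ids)
    rng = random.Random(seed)  # fixed seed keeps the split reproducible
    rng.shuffle(ids)
    half = len(ids) // 2
    return ids[:half], ids[half:]


efa_sample, cfa_sample = split_sample(range(1, 151))  # 150 participants
print(len(efa_sample), len(cfa_sample))  # 75 75
```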

Tip 2: include strengths and limitations for each article

Critical appraisal of individual articles included in a review is crucial for increasing the rigour of the review. Despite using various templates for critical appraisal, authors often do not provide detailed information about each reviewed article's strengths and limitations. Merely noting the quality score based on standardised critical appraisal templates is not adequate, because readers should be able to identify the reasons for assigning a weak or moderate rating. Many recent critical appraisal checklists (eg, Mixed Methods Appraisal Tool) discourage review authors from assigning a quality score and recommend noting the main strengths and limitations of included studies. It is also vital to report both the methodological and the conceptual strengths and limitations of the included articles, because not all review articles include empirical research papers; some reviews instead synthesise the theoretical aspects of articles. Information about conceptual limitations also helps readers judge the quality of the foundations of the research. For example, if you included a mixed-methods study in the review, reporting the methodological and conceptual limitations regarding 'integration' is critical for evaluating the study's strength. Suppose the authors only collected qualitative and quantitative data and did not state the intent and timing of integration. In that case, the study is weak: integration occurred only at the level of data collection and may not have occurred at the analysis, interpretation and reporting levels.
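A summary table kept as structured data makes it easy to catch rows where an appraisal rating was recorded without the reasons behind it. This is a hypothetical sketch: the `SummaryRow` fields, the article labels and the rule (flag any row lacking either strengths or limitations) are illustrative assumptions, not part of any appraisal tool.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class SummaryRow:
    """Hypothetical row of a literature summary table."""
    article: str
    appraisal_rating: str = ""  # e.g. "weak", "moderate", "strong"
    strengths: list[str] = field(default_factory=list)
    limitations: list[str] = field(default_factory=list)


def rows_lacking_reasons(rows: list[SummaryRow]) -> list[str]:
    """Return articles whose row is missing strengths or limitations.

    A rating on its own does not tell readers why it was assigned.
    """
    return [row.article for row in rows
            if not row.strengths or not row.limitations]


rows = [
    SummaryRow("Article A", "moderate",
               strengths=["validated outcome measure"],
               limitations=["single-site convenience sample"]),
    SummaryRow("Article B", "weak"),  # rating recorded, reasons missing
]
print(rows_lacking_reasons(rows))  # ['Article B']
```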

Tip 3: write conceptual contribution of each reviewed article

While reading and evaluating review papers, we have observed that many review authors provide only the core results of each included article and do not explain the conceptual contribution the article offers. By conceptual contribution we mean a description of how the article's key results contribute towards the development of the potential codes, themes or subthemes, or emerging patterns that are reported as the review findings. For example, the authors of a review article noted that one of the research articles included in their review demonstrated the usefulness of case studies and reflective logs as strategies for fostering compassion in nursing students. The conceptual contribution of this research article could be that experiential learning, as exemplified by case studies and reflective logs, is one way to teach compassion to nursing students. This conceptual contribution should be mentioned in the literature summary table. Delineating each reviewed article's conceptual contribution is particularly beneficial in qualitative reviews, mixed-methods reviews and critical reviews, which often focus on developing models and describing or explaining various phenomena. Figure 2 offers an example of a literature summary table. 4

Figure 2 Tabular literature summaries from a critical review. Source: Younas and Maddigan. 4

Tip 4: compose potential themes from each article during summary writing

While developing literature summary tables, many authors use the themes or subthemes reported in the included articles as the key results of their own review. Such an approach prevents review authors from understanding each article's conceptual contribution, developing a rigorous synthesis and drawing reasonable interpretations of the results of individual articles. Ultimately, it hinders the generation of novel review findings. For example, one of the articles about women's healthcare-seeking behaviours in developing countries reported a theme 'social-cultural determinants of health as precursors of delays'. Instead of using this theme as one of the review findings, the reviewers should read and interpret beyond the description given in the article, and compare and contrast its themes and findings with those of other articles to identify similarities and differences and to understand and explain the bigger picture for their readers. Therefore, while developing literature summary tables, think twice before using predeveloped themes. Including your own themes in the summary tables (see figure 1) demonstrates to readers that a robust method of data extraction and synthesis has been followed.
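Comparing and contrasting themes across articles, rather than adopting each article's own themes, can be supported by indexing the reviewer's themes by article. In this hypothetical sketch (the function names, article labels and theme wording are invented for illustration), themes supported by several articles surface as candidates for synthesis, while themes tied to a single article stay visible for closer scrutiny.

```python
from collections import defaultdict


def theme_index(article_themes):
    """Hypothetical helper: map each reviewer-generated theme to its articles."""
    index = defaultdict(list)
    for article, themes in article_themes.items():
        for theme in dict.fromkeys(themes):  # drop repeats, keep order
            index[theme].append(article)
    return dict(index)


def shared_and_single(index):
    """Split themes into those supported by several articles and by only one."""
    shared = {t: arts for t, arts in index.items() if len(arts) > 1}
    single = {t: arts for t, arts in index.items() if len(arts) == 1}
    return shared, single


# Hypothetical articles and themes, for illustration only.
index = theme_index({
    "Article A": ["cost as a barrier", "family decision-making"],
    "Article B": ["cost as a barrier", "distance to facilities"],
    "Article C": ["family decision-making"],
})
shared, single = shared_and_single(index)
print(shared)
print(single)
```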

Tip 5: create your personalised template for literature summaries

Templates are often available for data extraction and the development of literature summary tables. The available templates may take the form of a table, a chart or a structured framework that extracts essential information about every article. The commonly extracted information may include authors, purpose, methods, key results and quality scores. While extracting all relevant information is important, such templates should be tailored to meet the needs of the individual review. For example, for a review about the effectiveness of healthcare interventions, a literature summary table must include information about the intervention: its type, content, timing, duration, setting, effectiveness, negative consequences, and recipients' and implementers' experiences of its use. Similarly, literature summary tables for articles included in a meta-synthesis must include information about the participants' characteristics, the research context and the conceptual contribution of each reviewed article, to help readers make an informed decision about the usefulness (or lack of usefulness) of each individual article and of the review as a whole.
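A personalised template can be expressed as a core set of columns plus columns specific to the review type. The column names below are taken from the examples in this tip, with strengths and limitations standing in for quality scores as Tip 2 suggests; the `summary_template` function and the review-type keys are hypothetical.

```python
import csv
import io

# Core columns for every review (hypothetical choice: the common template
# fields, with strengths/limitations in place of a quality score).
CORE_COLUMNS = ["authors", "purpose", "methods", "key results",
                "strengths", "limitations"]

# Review-type-specific columns, taken from the examples in this tip.
EXTRA_COLUMNS = {
    "intervention effectiveness": [
        "intervention", "type", "content", "timing", "duration", "setting",
        "effectiveness", "negative consequences",
        "recipients' and implementers' experiences",
    ],
    "meta-synthesis": [
        "participant characteristics", "research context",
        "conceptual contribution",
    ],
}


def summary_template(review_type: str) -> list:
    """Return the column headings for a given (hypothetical) review type."""
    if review_type not in EXTRA_COLUMNS:
        raise ValueError(f"no template defined for {review_type!r}")
    return CORE_COLUMNS + EXTRA_COLUMNS[review_type]


def template_csv_header(review_type: str) -> str:
    """Render the template's column headings as one CSV header line."""
    buffer = io.StringIO()
    csv.writer(buffer).writerow(summary_template(review_type))
    return buffer.getvalue()


print(template_csv_header("meta-synthesis"))
```

Keeping the core and review-specific columns separate means a new review type only needs a new entry in `EXTRA_COLUMNS`, while every table still shares the same core fields.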

In conclusion, narrative or systematic reviews are almost always conducted as a part of any educational project (thesis or dissertation) or academic or clinical research. Literature reviews are the foundation of research on a given topic. Robust and high-quality reviews play an instrumental role in guiding research, practice and policymaking. However, the quality of reviews is also contingent on rigorous data extraction and synthesis, which require developing literature summaries. We have outlined five tips that could enhance the quality of the data extraction and synthesis process by developing useful literature summaries.

- Aromataris E

- Rasheed SP

Twitter @Ahtisham04, @parveenazamali

Funding The authors have not declared a specific grant for this research from any funding agency in the public, commercial or not-for-profit sectors.

Competing interests None declared.

Patient consent for publication Not required.

Provenance and peer review Not commissioned; externally peer reviewed.


Systematic literature reviews over the years

Affiliations.

- 1 Assignity, Krakow, Poland.

- 2 Public Health Department, Aix-Marseille University, Marseille, France.

- 3 Clever-Access, Paris, France.

- PMID: 37614556

- PMCID: PMC10443963

- DOI: 10.1080/20016689.2023.2244305

Purpose: Nowadays, systematic literature reviews (SLRs) and meta-analyses are often placed at the top of the study hierarchy of evidence. The main objective of this paper is to evaluate the trends in SLRs of randomized controlled trials (RCTs) throughout the years. Methods: Medline database was searched, using a highly focused search strategy. Each paper was coded according to a specific ICD-10 code; the number of RCTs included in each evaluated SLR was also retrieved. All SLRs analyzing RCTs were included. Protocols, commentaries, or errata were excluded. No restrictions were applied. Results: A total of 7,465 titles and abstracts were analyzed, from which 6,892 were included for further analyses. There was a gradual increase in the number of annual published SLRs, with a significant increase in published articles during the last several years. Overall, the most frequently analyzed areas were diseases of the circulatory system ( n = 750) and endocrine, nutritional, and metabolic diseases ( n = 734). The majority of SLRs included between 11 and 50 RCTs each. Conclusions: The recognition of SLRs' usefulness is growing at an increasing speed, which is reflected by the growing number of published studies. The most frequently evaluated diseases are in alignment with leading causes of death and disability worldwide.

Keywords: ICD-10 classification; Systematic literature review; randomized controlled trial; rapid review.

© 2023 The Author(s). Published by Informa UK Limited, trading as Taylor & Francis Group.


Conflict of interest statement

No potential conflict of interest was reported by the author(s).

- The number of RCT SLRs published over the years. Source: PubMed (search run…

- The number of RCTs published over the years. Source: PubMed (search run in…

- Distribution of RCT SLRs over the years (search run in May 2023).

- Number of RCT SLRs stratified by disease area and by the number of…

- The number of RCT SLRs stratified by disease area, published in 3 distinct…

- The number of SLRs on COVID-19 published since 2020. Source: PubMed (search run…

- The number of epidemiological SLRs published over the years. Source: PubMed (search run…

- The burden of disease by cause, measured in DALYs. Source: Institute for Health…




Comparative efficacy and safety of bimekizumab in psoriatic arthritis: a systematic literature review and network meta-analysis


Philip J Mease, Dafna D Gladman, Joseph F Merola, Peter Nash, Stacy Grieve, Victor Laliman-Khara, Damon Willems, Vanessa Taieb, Adam R Prickett, Laura C Coates, Comparative efficacy and safety of bimekizumab in psoriatic arthritis: a systematic literature review and network meta-analysis, Rheumatology , Volume 63, Issue 7, July 2024, Pages 1779–1789, https://doi.org/10.1093/rheumatology/kead705


To understand the relative efficacy and safety of bimekizumab, a selective inhibitor of IL-17F in addition to IL-17A, vs other biologic and targeted synthetic DMARDs (b/tsDMARDs) for PsA using network meta-analysis (NMA).

A systematic literature review (most recent update conducted on 1 January 2023) identified randomized controlled trials (RCTs) of b/tsDMARDs in PsA. Bayesian NMAs were conducted for efficacy outcomes at Weeks 12–24 for b/tsDMARD-naïve and TNF inhibitor (TNFi)-experienced patients. Safety at Weeks 12–24 was analysed in a mixed population. Odds ratios (ORs) and differences of mean change with the associated 95% credible interval (CrI) were calculated for the best-fitting models, and the surface under the cumulative ranking curve (SUCRA) values were calculated to determine relative rank.
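The abstract does not spell out how SUCRA is computed. Under the commonly used definition, for a treatments, SUCRA_j = (1/(a − 1)) × Σ_{k=1}^{a−1} cum_jk, where cum_jk is the probability that treatment j ranks k-th or better; it equals 1 for a treatment certain to be best and 0 for one certain to be worst. The sketch below assumes rank probabilities are estimated as the share of posterior draws in which each treatment takes each rank. The function names and toy draws are hypothetical and this is not the authors' code.

```python
def rank_probabilities(draws, higher_is_better=True):
    """Estimate P(treatment j has rank k) from posterior draws.

    draws: one list of treatment effects per posterior draw, with treatments
    in the same order in every draw. Rank 1 is best. Set higher_is_better=False
    for outcomes such as adverse events.
    """
    n_treatments = len(draws[0])
    counts = [[0] * n_treatments for _ in range(n_treatments)]
    for effects in draws:
        order = sorted(range(n_treatments), key=lambda j: effects[j],
                       reverse=higher_is_better)
        for rank_index, treatment in enumerate(order):
            counts[treatment][rank_index] += 1
    return [[c / len(draws) for c in row] for row in counts]


def sucra(rank_probs):
    """SUCRA_j: mean of the cumulative rank probabilities over ranks 1..a-1."""
    a = len(rank_probs)
    if a < 2:
        raise ValueError("SUCRA needs at least two treatments")
    scores = []
    for probs in rank_probs:
        cumulative, total = 0.0, 0.0
        for k in range(a - 1):  # cumulative probability at rank a is always 1
            cumulative += probs[k]
            total += cumulative
        scores.append(total / (a - 1))
    return scores


# Toy draws for three hypothetical treatments (higher effect = better).
draws = [[0.9, 0.1, 0.5], [0.8, 0.2, 0.4], [0.3, 0.1, 0.6]]
print(sucra(rank_probabilities(draws)))
```

In practice the draws would come from the fitted Bayesian model's posterior for each treatment's effect against a common comparator; the toy numbers here only exercise the arithmetic.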

The NMA included 41 RCTs for 22 b/tsDMARDs. For minimal disease activity (MDA), bimekizumab ranked 1st in b/tsDMARD-naïve patients and 2nd in TNFi-experienced patients. In b/tsDMARD-naïve patients, bimekizumab ranked 6th, 5th and 3rd for ACR20, ACR50 and ACR70 response, respectively. In TNFi-experienced patients, bimekizumab ranked 1st, 2nd and 1st for ACR20/50/70, respectively. For Psoriasis Area and Severity Index (PASI) 90/100, bimekizumab ranked 2nd and 1st, respectively, in b/tsDMARD-naïve patients, and 1st and 2nd, respectively, in TNFi-experienced patients. Bimekizumab was comparable to other b/tsDMARDs for serious adverse events.

Bimekizumab ranked favourably among b/tsDMARDs for efficacy on joint, skin and MDA outcomes, and showed comparable safety, suggesting it may be a beneficial treatment option for patients with PsA.

For joint efficacy, bimekizumab ranked highly among approved biologic/targeted synthetic DMARDs (b/tsDMARDs).

Bimekizumab provides better skin efficacy (Psoriasis Area and Severity Index, PASI100 and PASI90) than many other available treatments in PsA.

For minimal disease activity, bimekizumab ranked highest of all available b/tsDMARDs in b/tsDMARD-naïve and TNF inhibitor–experienced patients.

PsA is a chronic, systemic, inflammatory disease in which patients experience a high burden of illness [ 1–3 ]. PsA has multiple articular and extra-articular disease manifestations including peripheral arthritis, axial disease, enthesitis, dactylitis, skin psoriasis (PSO) and psoriatic nail disease [ 4 , 5 ]. Patients with PsA can also suffer from related inflammatory conditions such as uveitis and IBD [ 4 , 5 ]. Approximately one fifth of all PSO patients, increasing to one quarter of patients with moderate to severe PSO, will develop PsA over time [ 6 , 7 ].

The goal of treatment is to control inflammation and prevent structural damage to minimize disease burden, normalize function and social participation, and maximize the quality of life of patients [ 1 , 4 ]. As PsA is a heterogeneous disease, the choice of treatment is guided by individual patient characteristics, efficacy against the broad spectrum of skin and joint symptoms, and varying contraindications to treatments [ 1 , 4 ]. There are a number of current treatments classed as conventional DMARDs such as MTX, SSZ, LEF; biologic (b) DMARDs such as TNF inhibitors (TNFi), IL inhibitors and cytotoxic T lymphocyte antigen 4 (CTLA4)-immunoglobulin; and targeted synthetic (ts) DMARDs which include phosphodiesterase-4 (PDE4) and Janus kinase (JAK) inhibitors [ 1 , 8 ].

Despite the number of available treatment options, most patients with PsA report that they do not achieve remission, and additional therapeutic options are needed [ 9 , 10 ]. As the treatment landscape for PsA continues to evolve, treatment decisions are becoming more complex, especially because direct comparative data are limited [ 2 ].

Bimekizumab, a monoclonal IgG1 antibody that selectively inhibits IL-17F in addition to IL-17A, is approved in Europe for the treatment of adults with active PsA [ 11 , 12 ]. Both IL-17A and IL-17F are pro-inflammatory cytokines implicated in PsA [ 11 , 13 ]. IL-17F is structurally similar to IL-17A and is expressed by the same immune cells; however, the mechanisms that regulate their expression and kinetics differ [ 13 , 14 ]. IL-17A and IL-17F are expressed as homodimers and as IL-17A–IL-17F heterodimers, all of which bind to and signal via the same IL-17 receptor A/C complex [ 13 , 15 ].

In vitro studies have demonstrated that dual inhibition of IL-17A and IL-17F with bimekizumab suppressed PsA-related inflammatory gene expression, T cell and neutrophil migration, and periosteal new bone formation more effectively than blocking IL-17A alone [ 11 , 14 , 16 , 17 ]. Furthermore, IL-17A and IL-17F protein levels are elevated in psoriatic lesions, and a head-to-head trial in PSO demonstrated the superiority of bimekizumab 320 mg every 4 weeks (Q4W) or every 8 weeks (Q8W) over the IL-17A inhibitor secukinumab for complete clearance of psoriatic skin [ 16 , 18 ]. Collectively, this evidence suggests that neutralizing both IL-17F and IL-17A may suppress IL-17-mediated inflammation more potently than neutralizing IL-17A alone.

Bimekizumab 160 mg Q4W demonstrated significant improvements in efficacy outcomes compared with placebo, and an acceptable safety profile in adults with PsA in the phase 3 RCTs BE OPTIMAL (NCT03895203) (b/tsDMARD-naïve patients) and BE COMPLETE (NCT03896581) (TNFi inadequate responders) [ 19 , 20 ].

The objective of this study was to establish the comparative efficacy and safety of bimekizumab 160 mg Q4W vs other available PsA treatments, using network meta-analysis (NMA).

Search strategy

A systematic literature review (SLR) was conducted according to the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) guidelines [ 21 ] and adhered to the principles outlined in the Cochrane Handbook for Systematic Reviews of Interventions, the Centre for Reviews and Dissemination's Guidance for Undertaking Reviews in Healthcare, and the Methods for the Development of National Institute for Health and Care Excellence (NICE) Public Health Guidance [ 22–24 ]. The SLR of English-language publications was originally conducted on 3 December 2015 and updated on 7 January 2020, 2 May 2022 and 1 January 2023. The Medical Literature Analysis and Retrieval System Online (MEDLINE®), Excerpta Medica Database (Embase®) and Cochrane Central Register of Controlled Trials (CENTRAL) were searched via the Ovid platform for literature published from January 1991 onward. Additionally, bibliographies of SLRs and meta-analyses identified through the database searches were reviewed to capture any publications the searches had missed. Key clinical conference proceedings not indexed in Ovid (from October 2019 onward) and ClinicalTrials.gov were also searched manually. The search strategy is presented in Supplementary Table S1 (available at Rheumatology online).

Study inclusion

Identified records were screened independently and in duplicate by two reviewers, and any discrepancies were resolved through discussion or by a third reviewer. The SLR inclusion criteria were defined using the Patient populations, Interventions, Comparators, Outcome measures and Study designs (PICOS) framework (Supplementary Table S2, available at Rheumatology online). The SLR included published studies assessing approved therapies for the treatment of PsA. Collected data included study and patient population characteristics, interventions, comparators, and reported clinical and patient-reported outcomes relevant to PsA. For efficacy outcomes, pre-crossover data were extracted from studies in which crossover occurred. All publications included in the analysis were evaluated using the Cochrane risk-of-bias tool for randomized trials as described in the Cochrane Handbook [ 25 ].

Network meta-analysis methods

NMA is the quantitative assessment of the relative treatment effects, and associated uncertainty, of two or more interventions [ 26 , 27 ]. It is used frequently in health technology assessment and guideline development, and to inform treatment decision making in clinical practice [ 26 ].
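The core idea behind an NMA can be illustrated with the simplest indirect comparison. If treatments B and C have each been compared with a common comparator A (for example, placebo) in separate trials, the relative effect of C vs B can be inferred from the two direct estimates. The sketch below uses the Bucher adjusted indirect comparison on the log odds ratio scale with normal approximations. This is a frequentist simplification chosen for illustration, not the Bayesian model used in this analysis, and the function names and input values are hypothetical.

```python
import math
from statistics import NormalDist
from typing import Tuple


def indirect_log_or(d_ab: float, se_ab: float, d_ac: float, se_ac: float) -> Tuple[float, float]:
    """Bucher indirect comparison of C vs B through a common comparator A.

    d_ab and d_ac are log odds ratios (B vs A, C vs A) from independent trials,
    with standard errors se_ab and se_ac. Returns (log OR of C vs B, its SE).
    """
    d_bc = d_ac - d_ab  # consistency relation: d_BC = d_AC - d_AB
    se_bc = math.sqrt(se_ab ** 2 + se_ac ** 2)  # variances of independent estimates add
    return d_bc, se_bc


def or_with_ci(d: float, se: float, level: float = 0.95) -> Tuple[float, float, float]:
    """Back-transform a log OR and its normal-approximation interval to the OR scale."""
    z = NormalDist().inv_cdf(0.5 + level / 2)
    return math.exp(d), math.exp(d - z * se), math.exp(d + z * se)


# Hypothetical inputs: OR 3.0 (SE 0.20) for B vs placebo, OR 4.5 (SE 0.25) for C vs placebo.
d, se = indirect_log_or(math.log(3.0), 0.20, math.log(4.5), 0.25)
print(or_with_ci(d, se))
```

With these inputs the indirect OR of C vs B is 1.5 (95% CI roughly 0.80 to 2.81), and its interval is wider than either direct interval because the two variances add.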

Bimekizumab 160 mg Q4W was compared by NMA with current b/tsDMARDs at regulatory-approved doses (Table 1). All comparators were selected on the basis that they were relevant to clinical practice, i.e. recommended by key clinical guidelines, licensed by key regulatory bodies and/or routinely used.

Table 1. NMA intervention and comparators

| Therapeutic class | Drug dose and frequency of administration |
|---|---|
| Intervention | |
| IL-17A/17Fi | Bimekizumab 160 mg Q4W |
| Comparators | |
| IL-17Ai | Secukinumab 150 mg (with or without loading dose) Q4W or 300 mg Q4W; ixekizumab 80 mg Q4W |
| IL-23i | Guselkumab 100 mg Q4W or Q8W; risankizumab 150 mg Q4W |
| IL-12/23i | Ustekinumab 45 mg or 90 mg Q12W |
| TNFi | Adalimumab 40 mg Q2W; certolizumab pegol 200 mg Q2W or 400 mg Q4W (pooled); etanercept 25 mg twice a week; golimumab 50 mg s.c. Q4W or 2 mg/kg i.v. Q8W; infliximab 5 mg/kg at weeks 0, 2, 6, 14 and 22 |
| CTLA4-Ig | Abatacept 150 mg Q1W |
| JAKi | Tofacitinib 5 mg BID; upadacitinib 15 mg QD |
| PDE-4i | Apremilast 30 mg BID |
| Other | Placebo |

See Supplementary Table S4 (available at Rheumatology online) for additional dosing schedules used in the included studies. BID: twice daily; CTLA4-Ig: cytotoxic T lymphocyte antigen 4-immunoglobulin; IL-17A/17Fi: IL-17A/17F inhibitor; IL-17Ai: IL-17A inhibitor; IL-12/23i: IL-12/23 inhibitor; IL-23i: IL-23 inhibitor; i.v.: intravenous; JAKi: Janus kinase inhibitor; NMA: network meta-analysis; PDE-4i: phosphodiesterase-4 inhibitor; Q1W: once weekly; Q2W: every 2 weeks; Q4W: every 4 weeks; Q8W: every 8 weeks; Q12W: every 12 weeks; QD: once daily; s.c.: subcutaneous; TNFi: TNF inhibitor.

Two sets of primary analyses were conducted, one for a b/tsDMARD-naïve PsA population and one for a TNFi-experienced PsA population. Prior treatment with TNFis has been shown to impact the response to subsequent bDMARD treatments [ 28 ]. In addition, most trials involving b/tsDMARDs for the treatment of PsA (including bimekizumab) report separate data on both b/tsDMARD-naïve and TNFi-experienced subgroups, making NMA in each of these patient populations feasible.

For each population, the following outcomes were analysed: American College of Rheumatology response (ACR20/50/70), Psoriasis Area and Severity Index response (PASI90/100) and minimal disease activity (MDA). Serious adverse events (SAEs) were analysed in a mixed population (i.e. b/tsDMARD-naïve, TNFi-experienced and mixed-population data were all included) because, following discussions with clinicians, previous TNFi exposure was not expected to affect safety outcomes. The NMA used week 16 data where available; if 16-week data were not available (or crossover occurred earlier), data at weeks 12, 14 or 24 were included. Pre-crossover data were used in the efficacy analyses to avoid intercurrent events.
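The timepoint rule above can be expressed as a small selection function. This is a hypothetical sketch: the text does not state an order of preference among weeks 12, 14 and 24, so the fallback order below (14, then 12, then 24) is an assumption, as is treating data collected at the crossover week itself as pre-crossover.

```python
from typing import Mapping, Optional

# Week 16 is preferred, as stated in the text. The order of the fallbacks
# (14, then 12, then 24) is a hypothetical choice: the text lists weeks 12, 14
# and 24 without ranking them.
PREFERRED_WEEKS = (16, 14, 12, 24)


def select_timepoint(available: Mapping[int, float],
                     crossover_week: Optional[int] = None) -> Optional[int]:
    """Return the assessment week to use for one trial, or None if no week qualifies.

    available: assessment week -> reported outcome (e.g. ACR50 response rate).
    crossover_week: week at which placebo patients switched treatment, or None.
    Data at the crossover week itself are treated as pre-crossover (an assumption).
    """
    for week in PREFERRED_WEEKS:
        if week not in available:
            continue
        if crossover_week is not None and week > crossover_week:
            continue  # post-crossover data are skipped to avoid intercurrent events
        return week
    return None


# Hypothetical trial with week 12, 16 and 24 data and crossover after week 12.
print(select_timepoint({12: 0.31, 16: 0.40, 24: 0.45}, crossover_week=12))  # prints 12
```

If a different fallback order was used, only `PREFERRED_WEEKS` needs to change.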

Between-study heterogeneity in age, sex, ethnicity, mean time since diagnosis, and concomitant MTX, NSAID or steroid use was assessed using Grubbs' test (also called the extreme Studentized deviate method) to identify outlier studies.
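Grubbs' test flags the single value lying furthest from the sample mean when its standardized distance G = max|x_i − x̄|/s exceeds the critical value G_crit = ((N − 1)/√N)·√(t²/(N − 2 + t²)), where t is the upper α/(2N) quantile of Student's t distribution with N − 2 degrees of freedom. The sketch below implements this two-sided version for one study-level characteristic (for example, mean age per study). It uses only the Python standard library, so the t quantile is obtained numerically (Simpson's rule plus bisection) rather than from a statistics package; the function names, the α = 0.05 default and the example values are assumptions for illustration, not details reported by the authors.

```python
import math
import statistics
from typing import Optional, Sequence, Tuple


def _t_pdf(x: float, df: int) -> float:
    """Density of Student's t distribution with df degrees of freedom."""
    log_c = math.lgamma((df + 1) / 2) - math.lgamma(df / 2) - 0.5 * math.log(df * math.pi)
    return math.exp(log_c) * (1 + x * x / df) ** (-(df + 1) / 2)


def _t_upper_tail(x: float, df: int, n: int = 2000) -> float:
    """P(T > x) for x >= 0, using Simpson's rule on [0, x] (n must be even)."""
    h = x / n
    s = _t_pdf(0.0, df) + _t_pdf(x, df)
    for i in range(1, n):
        s += (4 if i % 2 else 2) * _t_pdf(i * h, df)
    return 0.5 - s * h / 3


def _t_upper_quantile(p: float, df: int) -> float:
    """t such that P(T > t) = p, found by bracketing and then bisection."""
    lo, hi = 0.0, 1.0
    while _t_upper_tail(hi, df) > p:  # widen the bracket until it contains t
        lo, hi = hi, hi * 2
    for _ in range(60):
        mid = (lo + hi) / 2
        if _t_upper_tail(mid, df) > p:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2


def grubbs_test(values: Sequence[float], alpha: float = 0.05) -> Tuple[Optional[int], float, float]:
    """Two-sided Grubbs' test for a single outlier.

    Returns (index of the flagged value or None, G statistic, critical value).
    """
    n = len(values)
    if n < 3:
        raise ValueError("Grubbs' test needs at least 3 values")
    mean = statistics.fmean(values)
    sd = statistics.stdev(values)  # sample SD (n - 1 denominator)
    idx = max(range(n), key=lambda i: abs(values[i] - mean))
    g = abs(values[idx] - mean) / sd
    t = _t_upper_quantile(alpha / (2 * n), n - 2)
    g_crit = (n - 1) / math.sqrt(n) * math.sqrt(t * t / (n - 2 + t * t))
    return (idx if g > g_crit else None), g, g_crit


# Hypothetical mean ages for eight studies.
print(grubbs_test([50, 51, 49, 52, 50, 48, 51, 75]))
```

For these hypothetical values the last study (index 7) has G ≈ 2.45, which exceeds the critical value of about 2.13 for eight studies, so it would be flagged; the test can then be repeated on the remaining values if further outliers are suspected.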

All univariate analyses used a 10 000-iteration burn-in phase followed by a 10 000-iteration phase for parameter estimation. All calculations were performed using the R2jags package to run Just Another Gibbs Sampler (JAGS) 3.2.3 with the code reported in the NICE Decision Support Unit (DSU) Technical Support Document Series [ 29–33 ]. Convergence was confirmed by inspecting the ratio of the Monte Carlo error to the standard deviation of each posterior; values >5% are strong signs of convergence issues [ 31 ]. In some cases, trials reported zero events (ACR70, PASI100, SAE) in one or more arms. A continuity correction was applied in these cases because, without it, most models did not converge or produced very wide posterior distributions that made little clinical sense [ 31 ].
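Two of the computational checks described above can be sketched as follows. The first estimates the Monte Carlo error of a posterior mean by the batch-means method and expresses it as a fraction of the posterior standard deviation, flagging ratios above 5%. The second applies a continuity correction to a 2×2 table that contains a zero cell. Both are illustrative assumptions: JAGS and R2jags report their own diagnostics, the number of batches is arbitrary, and adding 0.5 to every cell is a common convention rather than the correction stated by the authors, since the text does not specify it.

```python
import math
import statistics
from typing import Sequence, Tuple


def mc_error_batch_means(draws: Sequence[float], n_batches: int = 20) -> float:
    """Monte Carlo standard error of the posterior mean, estimated by batch means.

    The chain is split into n_batches contiguous batches; the spread of the batch
    means reflects autocorrelation that a naive SD/sqrt(n) would ignore.
    """
    size = len(draws) // n_batches
    if size < 2:
        raise ValueError("too few draws for the requested number of batches")
    means = [statistics.fmean(draws[b * size:(b + 1) * size]) for b in range(n_batches)]
    return statistics.stdev(means) / math.sqrt(n_batches)


def mc_error_ratio(draws: Sequence[float], n_batches: int = 20) -> float:
    """MC error as a fraction of the posterior SD; values above 0.05 suggest non-convergence."""
    return mc_error_batch_means(draws, n_batches) / statistics.stdev(draws)


def corrected_log_or(events_t: int, n_t: int, events_c: int, n_c: int,
                     cc: float = 0.5) -> Tuple[float, float]:
    """Log OR and SE from a 2x2 table, adding cc to every cell if any cell is zero."""
    cells = [events_t, n_t - events_t, events_c, n_c - events_c]
    if 0 in cells:
        cells = [x + cc for x in cells]  # continuity correction (value assumed)
    a, b, c, d = cells
    log_or = math.log((a * d) / (b * c))
    se = math.sqrt(1 / a + 1 / b + 1 / c + 1 / d)
    return log_or, se


# Hypothetical arm-level data: 5/100 responders on treatment, 0/100 on placebo.
print(corrected_log_or(5, 100, 0, 100))
```

With a well-mixed chain the batch means agree closely and the ratio is near zero, whereas a chain that drifts steadily produces widely spread batch means and a ratio well above 0.05.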

Four NMA models [fixed effects (FE) unadjusted, FE baseline risk-adjusted, random effects (RE) unadjusted and RE baseline risk-adjusted] were assessed, and the best-fitting models were chosen using the methods described in NICE DSU Technical Support Document 2 [ 31 ]. Odds ratios (ORs) and differences in mean change (MC), with the associated 95% credible intervals (CrIs), were calculated for each treatment comparison in the evidence network for the best-fitting models and presented in league tables and forest plots. In addition, the surface under the cumulative ranking curve (SUCRA) was calculated to summarize the probability of bimekizumab 160 mg Q4W ranking above other treatments and to determine relative rank. Conclusions (i.e. better, worse or comparable) for bimekizumab 160 mg Q4W vs comparators were based on whether the pairwise 95% CrI included the null value: 1 for ORs (dichotomous outcomes) or 0 for differences in MC (continuous outcomes). If the 95% CrI included the null value, bimekizumab 160 mg Q4W and the comparator were considered comparable; if it did not, bimekizumab 160 mg Q4W was considered better or worse depending on the direction of the effect.
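The ranking and decision rules above can be sketched from posterior draws. `sucra()` ranks the treatments within each draw, converts the rank counts into rank probabilities, and averages the cumulative probabilities over the first a − 1 ranks, where a is the number of treatments; `classify()` applies the 95% CrI rule to draws of an OR for bimekizumab vs one comparator. The data layout, function names and the simple percentile method used for the interval are assumptions for illustration. Whether a higher OR is better depends on the outcome (it is for a response such as ACR50, not for SAEs), so that is passed in explicitly.

```python
import math
from typing import Dict, Sequence


def sucra(samples: Dict[str, Sequence[float]], higher_is_better: bool = True) -> Dict[str, float]:
    """SUCRA for each treatment from posterior draws of its effect vs a common reference.

    samples[t][s] is draw s of treatment t's effect (e.g. log OR vs placebo);
    every treatment must have the same number of draws.
    """
    names = list(samples)
    a = len(names)
    n_draws = len(samples[names[0]])
    rank_counts = {t: [0] * a for t in names}
    for s in range(n_draws):
        # Order treatments from best to worst within this draw.
        order = sorted(names, key=lambda t: samples[t][s], reverse=higher_is_better)
        for rank, t in enumerate(order):  # rank 0 is best
            rank_counts[t][rank] += 1
    result = {}
    for t in names:
        cumulative = 0.0
        total = 0.0
        for j in range(a - 1):  # cumulative P(rank <= j + 1) for ranks 1..a-1
            cumulative += rank_counts[t][j] / n_draws
            total += cumulative
        result[t] = total / (a - 1)
    return result


def classify(or_draws: Sequence[float], higher_is_better: bool = True, level: float = 0.95) -> str:
    """Label bimekizumab vs one comparator from posterior OR draws using the CrI rule."""
    xs = sorted(or_draws)
    n = len(xs)
    lower = xs[math.floor((1 - level) / 2 * (n - 1))]  # simple empirical percentiles
    upper = xs[math.ceil((1 + level) / 2 * (n - 1))]
    if lower <= 1.0 <= upper:
        return "comparable"  # the CrI includes the null value of 1
    above_null = lower > 1.0
    return "better" if above_null == higher_is_better else "worse"


# Hypothetical draws: treatment A always ranks first, C always last.
print(sucra({"A": [3, 3, 3, 3], "B": [2, 2, 2, 2], "C": [1, 1, 1, 1]}))
```

A SUCRA of 1 means a treatment ranked first in every draw and 0 means it ranked last in every draw; equivalently, SUCRA = (a − mean rank)/(a − 1).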

Compliance with ethics guidelines

This article is based on previously conducted studies and does not contain any new studies with human participants or animals performed by any of the authors.

Study and patient characteristics

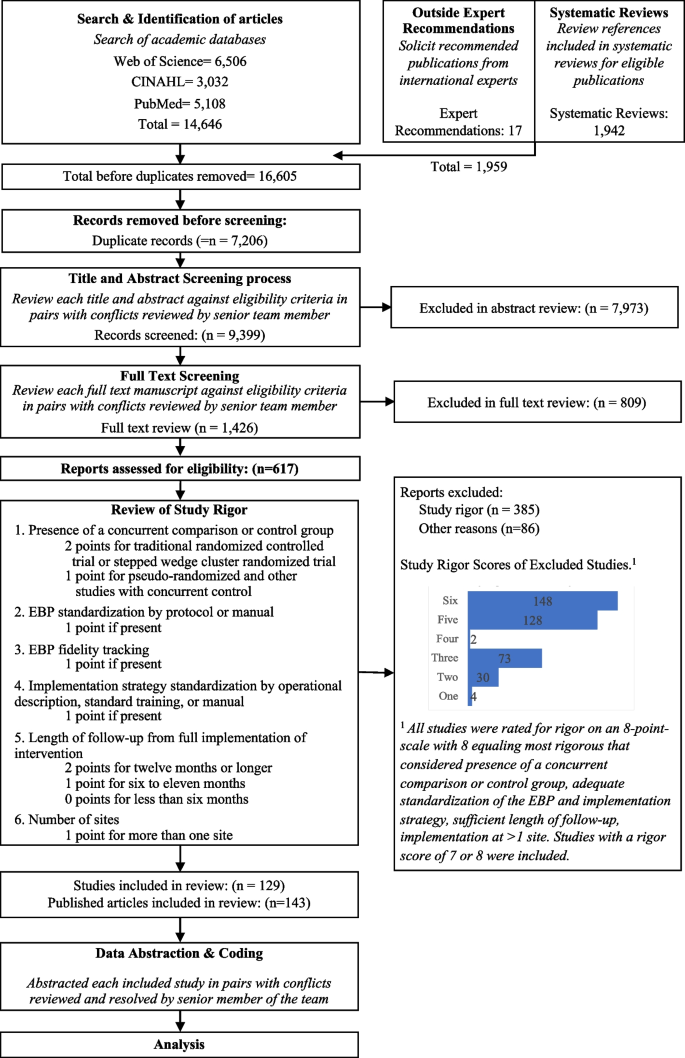

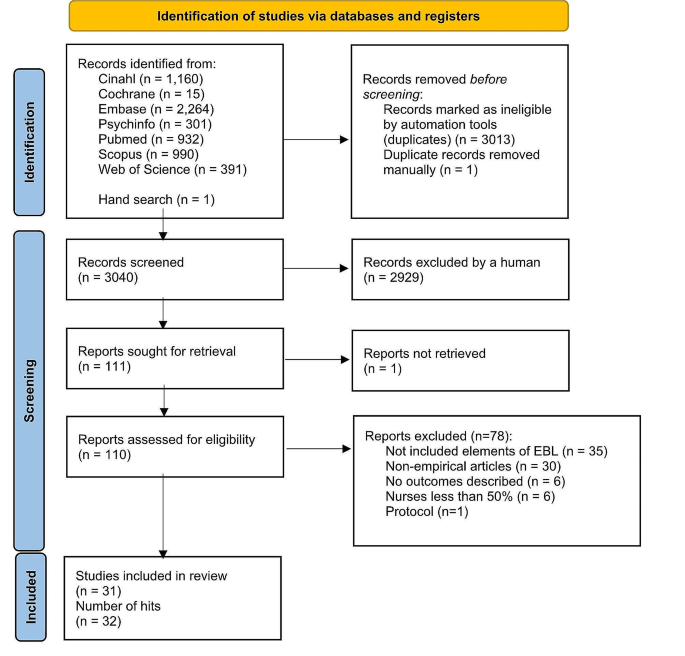

The SLR identified 4576 records through databases and 214 records through grey literature, of which 3143 were included for abstract review. Following the exclusion of a further 1609 records, a total of 1534 records were selected for full-text review. A total of 66 primary studies from 246 records were selected for data extraction. No trial was identified as having a moderate or high risk of bias (Supplementary Table S3, available at Rheumatology online).

Of the 66 studies identified in the SLR, 41 studies reported outcomes at weeks 12, 16 or 24 and met the criteria for inclusion in the NMA in either a b/tsDMARD-naïve population (n = 20), a TNFi-experienced population (n = 5), a mixed population with subgroups (n = 13) or a mixed PsA population without subgroups reported (n = 3). The PRISMA diagram is presented in Fig. 1. Included and excluded studies are presented in Supplementary Tables S4 and S5, respectively (available at Rheumatology online).
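The flow counts reported above can be checked for internal consistency with a few lines of arithmetic. The counts are taken from the text; reading the gap between records identified and records taken to abstract review as duplicates removed is an assumption, because the text does not state the reason.

```python
# Counts as reported in the text.
identified = {"databases": 4576, "grey_literature": 214}
abstract_review = 3143
excluded_at_abstract = 1609
full_text_review = 1534
nma_by_population = {
    "b/tsDMARD-naive": 20,
    "TNFi-experienced": 5,
    "mixed, with subgroups": 13,
    "mixed, without subgroups": 3,
}

total_identified = sum(identified.values())
# Not stated in the text; reading this gap as duplicates removed is an assumption.
not_carried_forward = total_identified - abstract_review

print(total_identified, not_carried_forward)  # prints 4790 1647
print(abstract_review - excluded_at_abstract == full_text_review)  # prints True
print(sum(nma_by_population.values()))  # prints 41
```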

Fig. 1. PRISMA flow diagram. The PRISMA flow diagram for the SLR conducted to identify published studies assessing approved treatments for PsA. cDMARD: conventional DMARD; NMA: network meta-analysis; NR: not reported; PD: pharmacodynamic; PK: pharmacokinetic; PRISMA: Preferred Reporting Items for Systematic Reviews and Meta-Analyses; RCT: randomized controlled trial; SLR: systematic literature review