Dead Code Elimination

Code that is unreachable or that does not affect the program (e.g. dead stores) can be eliminated.

In the example below, the value assigned to i is never used, and the dead store can be eliminated. The first assignment to global is dead, and the third assignment to global is unreachable; both can be eliminated.

Below is the code fragment after dead code elimination.
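The listings themselves did not survive in this copy. The following is a minimal reconstruction that matches the description above, not the original code. The names `f_before`, `f_after`, `global_before`, `global_after`, and `same_result` are hypothetical and exist only so that both versions can live in one file and be compared.

```cpp
#include <cassert>

int global_before = 0;  // plays the role of `global` in the unoptimized version
int global_after = 0;   // plays the role of `global` in the optimized version

// Before dead code elimination.
void f_before() {
    int i;
    i = 1;              // dead store: i is never read afterwards
    global_before = 1;  // dead store: overwritten before anything reads it
    global_before = 2;
    return;
    global_before = 3;  // unreachable: follows an unconditional return
}

// After dead code elimination: only the store that can be observed remains.
void f_after() {
    global_after = 2;
}

// Both versions leave the same observable state behind.
bool same_result() {
    f_before();
    f_after();
    return global_before == global_after && global_after == 2;
}
```

A compiler will typically warn about the unused variable and the unreachable statement in `f_before`, which is a useful hint that the optimizer will discard them.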

Dead Code Elimination

Optimizing program efficiency and keeping code clean are central goals in software development. Dead code elimination, a technique employed by compilers and interpreters, contributes to both. This article explains what dead code is, why eliminating it matters for program optimization, and how it is identified and removed.

Understanding Dead Code

Dead code refers to sections of a program that are never executed at runtime, or whose results have no effect on the program’s output or behavior. Identifying and removing dead code improves program efficiency, reduces complexity, and enhances maintainability.

Benefits of Dead Code Elimination

- Enhanced Program Efficiency : By removing dead code, unnecessary computations and memory usage are eliminated, resulting in faster and more efficient program execution.

- Improved Maintainability : Dead code complicates the understanding and maintenance of software systems. By eliminating it, developers can focus on relevant code, improving code readability, and facilitating future updates and bug fixes.

- Reduced Program Size : Dead code elimination can reduce the size of executable files, which lowers storage and memory requirements and makes software easier to distribute.

Process of Dead Code Elimination

Dead code elimination is primarily performed by compilers or interpreters during the compilation or interpretation process. Here’s an overview of the process:

- Static Analysis : The compiler or interpreter analyzes the program’s source code or intermediate representation using various techniques, including control flow analysis and data flow analysis.

- Identification of Dead Code : Through static analysis, the compiler identifies sections of code that are provably unreachable or have no impact on the program’s output.

- Removal of Dead Code : The identified dead code segments are eliminated from the final generated executable, resulting in a more streamlined and efficient program.
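The three steps above can be sketched on a toy intermediate representation. This is an illustrative sketch under simplifying assumptions, not how any particular compiler implements the pass: the `Instr` type and the `eliminate_dead_code` function are hypothetical, the code is straight-line (no branches or loops), and an instruction is kept only if it has a side effect or defines a value that a later kept instruction reads.

```cpp
#include <cassert>
#include <set>
#include <string>
#include <vector>

// Hypothetical toy instruction: dest = f(operands).
// has_side_effect marks instructions that must always be kept
// (for example output or a store to memory visible elsewhere).
struct Instr {
    std::string dest;
    std::vector<std::string> operands;
    bool has_side_effect;
};

// Walk the code backwards, tracking which names are still needed (live).
std::vector<Instr> eliminate_dead_code(const std::vector<Instr>& code) {
    std::set<std::string> live;
    std::vector<Instr> kept;  // collected in reverse order
    for (auto it = code.rbegin(); it != code.rend(); ++it) {
        bool needed = it->has_side_effect || live.count(it->dest) > 0;
        if (!needed) continue;   // result is never observed: drop it
        live.erase(it->dest);    // this definition satisfies the later uses
        for (const auto& op : it->operands) live.insert(op);
        kept.push_back(*it);
    }
    return std::vector<Instr>(kept.rbegin(), kept.rend());
}
```

For the sequence `a = ...; b = a; c = a; print c`, the sketch drops `b = a` because nothing reads `b`, and keeps the other three instructions. Real compilers run the same idea over a control flow graph, where liveness has to be computed across branches and loops.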

Dead code elimination is a vital technique for optimizing program efficiency and enhancing maintainability. By identifying and removing code segments that are never executed or have no impact on the program’s output, developers can improve resource usage, streamline software systems, and facilitate future maintenance and updates. Understanding the process of dead code elimination empowers programmers to write cleaner, more efficient code and contribute to the overall success of software development projects.

Frequently Asked Questions

Q1 : How does dead code end up in a software program in the first place?

Dead code can be a result of code changes, refactoring, or outdated features that are no longer used but were not removed. It can also occur due to conditional statements that are never true or error conditions that are never triggered.
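As a small illustration of a condition that is never true, consider a feature flag left behind after a feature was retired. The flag `kLegacyDiscountEnabled` and the function `final_price_cents` are hypothetical names made up for this sketch.

```cpp
#include <cassert>

// Hypothetical feature flag left behind after a feature was retired.
constexpr bool kLegacyDiscountEnabled = false;

// The branch guarded by the flag can never run, so it is dead code even
// though it still compiles and still has to be read by maintainers.
int final_price_cents(int price_cents) {
    if (kLegacyDiscountEnabled) {
        return price_cents - 500;  // never executed
    }
    return price_cents;
}
```

Because the condition is a compile-time constant, an optimizing compiler can usually prove the branch unreachable and omit it from the generated code, but the source still carries it until someone deletes it.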

Q2 : Can dead code elimination affect the behavior of a program?

In general, removing true dead code (code that has no impact) should not affect the program’s behaviour. However, it’s crucial to thoroughly test the program after dead code elimination to ensure unintended consequences do not arise.

Dead Code Elimination: Optimizing Software Development through Code Optimization

Dead code elimination is a crucial aspect of optimizing software development through code optimization. By identifying and removing sections of code that are no longer needed or executed, developers can enhance the overall performance and efficiency of their software applications. For instance, consider a hypothetical scenario where a large-scale e-commerce platform has been in operation for several years. Over time, multiple updates and modifications have been made to the system, resulting in unused and redundant sections of code. Through dead code elimination techniques, these unnecessary portions can be identified and eliminated, leading to improved resource utilization and reduced maintenance efforts.

In academic discourse, dead code refers to any part of the program that does not contribute to its final output or functionality but still occupies valuable memory space during execution. These fragments typically arise from changes made during the evolution of software systems, such as additions or revisions to features or bug fixes. However, due to lack of rigorous testing or oversight during development cycles, it is common for inactive segments of code to persist within projects. Dead code elimination aims to address this issue by systematically detecting and eliminating these obsolete components. This process not only streamlines software applications but also improves readability and maintainability and reduces the potential for bugs caused by interactions with irrelevant parts of the codebase.

Benefits of Dead Code Elimination

Dead code elimination is a crucial optimization technique in software development that offers numerous benefits. By identifying and removing sections of code that are never executed or have become obsolete, developers can significantly enhance the efficiency and performance of their software systems. This section explores the advantages of dead code elimination and highlights its importance in optimizing software development.

One notable benefit of dead code elimination is improved resource utilization. When unnecessary code segments are eliminated, system resources such as memory and processing power can be redirected to more critical tasks. For instance, consider a case where an application contains unused libraries or modules that consume substantial memory resources. Through dead code elimination, these redundant components can be removed, allowing the application to free up memory for other essential operations.

Another advantage lies in enhanced maintainability. As software projects evolve over time, it is common for certain features or functionalities to become outdated or deprecated. These remnants of old code not only clutter the overall structure but also make maintenance and updates cumbersome. Dead code elimination helps streamline the codebase by eliminating irrelevant sections, making it easier for developers to understand and modify the remaining active parts without being encumbered by unnecessary complexity.

Furthermore, dead code elimination leads to improved readability and comprehensibility within software projects. Reducing extraneous lines of code enhances clarity by emphasizing relevant logic flows while minimizing distractions caused by inactive portions. This streamlined approach enables developers to grasp the intended functionality quickly and facilitates collaboration among team members working on different parts of the project.

To emphasize the positive impact further, here are several reasons why incorporating dead code elimination into software development practices should be considered:

- Increased performance: Removing computations whose results are never used reduces execution time, and removing unreachable code shrinks the program.

- Enhanced security: Eliminating dormant or vulnerable sections minimizes potential attack vectors.

- Simplified debugging: With fewer lines of active code, locating bugs becomes less intricate.

- Better scalability: By eliminating redundant dependencies, scaling applications becomes more manageable.

The benefits of dead code elimination are evident, highlighting its significance in optimizing software development. In the subsequent section, we will delve into a deeper understanding of dead code and explore the impact it can have on software systems. Understanding how dead code arises and recognizing its implications is crucial for effectively implementing dead code elimination techniques to unlock further optimization opportunities.

Understanding Dead Code and its Impact

Having explored the benefits of dead code elimination, it is important to understand the detrimental effects that unoptimized code can have on software development. To illustrate this impact, let’s consider a hypothetical scenario where a large-scale e-commerce platform is experiencing performance issues due to an abundance of unused and redundant code.

In such a case, the presence of dead code not only slows down the system but also hinders developers’ productivity by increasing debugging time and affecting overall code maintainability. This results in significant financial losses for the company as customers experience delays in accessing products, leading to decreased user satisfaction and potential loss of sales.

To better comprehend the gravity of these consequences, let us explore some key factors that demonstrate how dead code negatively impacts software development:

- Increased Complexity : Unused or unnecessary code adds complexity to the system, making it harder for developers to navigate through thousands or even millions of lines of irrelevant code. This complexity leads to longer development cycles and increased chances of introducing bugs during maintenance.

- Reduced Performance : Dead computations consume CPU time for results that are never used, and unreachable code enlarges the program and its memory footprint. Both can contribute to slower execution and longer loading times for users.

- Higher Debugging Efforts : Identifying bugs becomes more challenging when unnecessary code clutters the system. Developers are forced to spend valuable time sifting through irrelevant sections instead of focusing on critical issues at hand.

- Lower Maintainability : Unoptimized software tends to become less maintainable over time. With each update or addition made without proper dead code elimination practices, further redundancy accumulates, exacerbating future efforts required for modifications or enhancements.

Considering these impactful repercussions caused by dead code accumulation, it becomes evident why organizations should prioritize effective techniques for identifying and removing such extraneous pieces of code. In order to streamline software development processes and enhance overall efficiency, the following section will delve into various techniques employed for identifying dead code.

By implementing these techniques, developers can effectively identify and eliminate dead code, mitigating its detrimental effects on software development.

Techniques for Identifying Dead Code

Understanding the impact of dead code on software development is crucial. Now, let’s explore some effective techniques for identifying dead code and optimizing your coding practices.

One technique widely used in identifying dead code is static analysis. By analyzing the source code without executing it, static analysis tools can detect portions of code that can never be reached during program execution. These tools examine control flow paths to identify unreachable or redundant sections within the codebase. For example, consider a hypothetical case study where an e-commerce website still contains the implementation of several payment options, but only one of them is ever called from the rest of the code. Static analysis would flag the uncalled implementations, allowing developers to remove them. Which option customers actually choose at runtime is a different question, and answering it requires dynamic analysis.

Another useful technique for detecting dead code is dynamic analysis. Unlike static analysis, dynamic analysis involves running the application with test cases or input data that exercises different parts of the program. This allows developers to observe which sections of the code are actually executed and identify any areas that remain untouched during runtime. Dynamic analysis provides valuable insights into real-world usage scenarios and helps pinpoint specific lines or blocks of code that can be safely removed.

To further aid in identifying dead code, consider utilizing these key strategies:

- Tracing Execution Paths: Use debugging tools or profilers to trace the execution path of your program and identify any branches or conditional statements that are never executed.

- Coverage Analysis: Conduct coverage analysis tests to determine how much of your source code is exercised by various test cases, highlighting potential areas with low coverage.

- Peer Code Review: Engage in peer reviews where fellow developers thoroughly examine each other’s code to collectively spot any unnecessary or unutilized pieces of functionality.

- Automated Testing: Implement automated testing frameworks that ensure comprehensive test coverage and flag any inaccessible portions of the application.
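The tracing and coverage strategies above boil down to recording which parts of the program a run actually reaches. The following hand-rolled sketch shows the idea; the names `hit_count`, `mark`, `shipping_cost`, and `unreached` are hypothetical, and a real project would normally rely on an existing coverage tool rather than manual instrumentation like this.

```cpp
#include <cassert>
#include <map>
#include <string>
#include <vector>

// Hypothetical hit counter: each instrumented site bumps its own entry.
std::map<std::string, int> hit_count;

void mark(const std::string& site) { ++hit_count[site]; }

// Example function with two instrumented branches.
int shipping_cost(int weight_kg) {
    if (weight_kg < 0) {
        mark("shipping_cost:negative");  // defensive branch
        return 0;
    }
    mark("shipping_cost:normal");
    return 5 + 2 * weight_kg;
}

// Return the sites from all_sites that were never reached during the run.
std::vector<std::string> unreached(const std::vector<std::string>& all_sites) {
    std::vector<std::string> result;
    for (const auto& s : all_sites)
        if (hit_count.find(s) == hit_count.end()) result.push_back(s);
    return result;
}
```

A site reported by `unreached` is only a candidate for removal. It shows that the test inputs never exercised that path, not that no input ever can, so each candidate still needs review before it is deleted.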

Let us now move forward towards implementing dead code elimination as we delve into improving software efficiency through optimized coding practices. By understanding various techniques for identifying dead code, developers can streamline their coding workflow and enhance overall software performance.

Implementing Dead Code Elimination

Building upon the understanding of techniques for identifying dead code, we now delve into implementing dead code elimination. By employing various optimization strategies, developers can streamline their software development process and improve overall program efficiency.

One example that showcases the benefits of dead code elimination is a web application designed to handle e-commerce transactions. In this hypothetical scenario, the application’s source code contains several unused functions and variables that were remnants of previous iterations or experimental features. These lines of dead code not only clutter the codebase but also potentially impact performance. Through effective implementation of dead code elimination techniques, such as static analysis and runtime profiling, these unnecessary elements are identified and removed, resulting in a leaner and more optimized application.

To achieve successful dead code elimination, developers should consider adopting the following best practices:

- Conduct regular static analysis: Utilize automated tools to scan the entire codebase for potential instances of dead code.

- Perform dynamic testing: Execute comprehensive test suites that simulate real-world scenarios to identify any hidden areas where dead code may be present.

- Collaborate with team members: Encourage open communication among developers to ensure consistent use of coding standards and periodic reviews to identify redundant or obsolete sections.

- Continuously monitor performance metrics: Implement monitoring systems that provide insights into resource utilization and execution statistics to detect any new occurrences of dead code over time.

In conclusion, by diligently applying appropriate techniques for identifying dead code and implementing well-established practices for dead code elimination, developers can significantly optimize their software development process. This leads to improved program efficiency and reduced maintenance efforts. In the subsequent section, we will explore real-life case studies that demonstrate the tangible benefits of implementing dead code elimination techniques.

Through examining real-life examples where dead code elimination has been successfully implemented, we gain valuable insights into its practical implications. Case Studies on Dead Code Elimination provide concrete evidence of how this optimization technique positively impacts software performance and development efficiency.

Case Studies on Dead Code Elimination

Case Study: Enhancing Performance with Dead Code Elimination

To illustrate the benefits of implementing dead code elimination, let us consider a hypothetical scenario where a software development team is tasked with optimizing the performance of their application. After conducting an analysis, they identify several areas within the codebase that contain redundant and unused code segments.

By applying dead code elimination techniques, the team systematically removes these unnecessary sections from the source code. This process not only improves overall performance but also enhances maintainability by reducing complexity and eliminating potential bugs caused by obsolete or unreachable code.

Implementing dead code elimination in software development offers numerous advantages for both developers and end-users:

- Enhanced Efficiency: Removing dead code reduces memory footprint and CPU usage, leading to improved runtime performance.

- Streamlined Debugging: By removing unused code segments, developers can focus on relevant parts during debugging sessions, making it easier to identify and fix issues.

- Simplified Maintenance: Reducing redundancy simplifies future updates and modifications since developers no longer need to navigate through irrelevant or inaccessible portions of the codebase.

- Improved Reliability: Removing dead code decreases the likelihood of encountering unexpected behaviors or errors due to outdated logic.

Incorporating dead code elimination into software development practices can significantly optimize applications’ performance while streamlining maintenance efforts. The table below summarizes some key considerations when implementing this technique:

As we have seen in this case study, implementing dead code elimination allows for more efficient and streamlined software development.

Now that we have understood the benefits of implementing dead code elimination in software development, let us delve into some effective strategies to ensure its successful integration.

Best Practices for Dead Code Elimination

By removing unused and redundant code from a program, developers can enhance its efficiency and performance. This section will explore best practices for dead code elimination, highlighting its significance and potential benefits.

One notable example showcasing the impact of dead code elimination is found in the development of an e-commerce platform. During the analysis phase, it was discovered that a significant amount of legacy code remained within the system, even though it was no longer being utilized. By implementing dead code elimination techniques, such as static analysis tools and manual inspection, approximately 30% of the total lines of code were identified as redundant or non-functional. Removing this unnecessary code not only simplified maintenance efforts but also led to a noticeable improvement in overall application performance.

When considering dead code elimination as part of software development optimization strategies, several best practices should be taken into account:

- Regular Code Audits: Conducting regular audits helps identify inactive or obsolete sections within the software.

- Utilize Static Analysis Tools: Employ automated tools to scan source code for unreachable or unused components.

- Test Coverage Analysis: Evaluate test coverage reports to pinpoint areas with low usage frequency or lack of execution paths.

- Collaborative Efforts: Encourage collaboration between developers and testers to ensure effective identification and removal of redundant code.

To highlight these best practices further, consider the following table illustrating their potential benefits:

By incorporating these best practices into the software development process, developers can harness the power of dead code elimination to optimize their projects. By systematically identifying and removing redundant portions within a program, software teams can enhance maintainability, performance, and overall efficiency.

With an understanding of how dead code elimination impacts software development, it is crucial for developers to implement these best practices in order to reap its potential benefits. Taking proactive measures such as regular code audits, utilizing static analysis tools, conducting test coverage analysis, and promoting collaboration among team members will undoubtedly lead to more streamlined and optimized software projects.

Optimizing C++ Code : Dead Code Elimination

August 9th, 2013

If you have arrived in the middle of this blog series, you might want instead to begin at the beginning.

This post examines the optimization called Dead-Code-Elimination, which I’ll abbreviate to DCE. It does what it says: discards any calculations whose results are not actually used by the program.

Now, you will probably assert that your code calculates only results that are used, and never any results that are not used: only an idiot, after all, would gratuitously add useless code – calculating the first 1000 digits of pi, for example, whilst also doing something useful. So when would the DCE optimization ever have an effect?

The reason for describing DCE so early in this series is that it could otherwise wreak havoc and confusion throughout our exploration of other, more interesting optimizations: consider this tiny example program, held in a file called Sum.cpp:

    int main() {
        long long s = 0;
        for (long long i = 1; i <= 1000000000; ++i) s += i;
    }

We are interested in how fast the loop executes, calculating the sum of the first billion integers. (Yes, this is a spectacularly silly example, since the result has a closed formula, taught in High school. But that’s not the point)
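For reference, the closed formula mentioned above is n(n + 1)/2. A small sketch of it in C++ follows; the function names `sum_to` and `sum_loop` are hypothetical and are not part of the original post.

```cpp
#include <cassert>

// Closed form for 1 + 2 + ... + n, valid while n * (n + 1) fits in long long.
constexpr long long sum_to(long long n) {
    return n * (n + 1) / 2;
}

// The same sum computed by a loop, useful as a cross-check for small n.
long long sum_loop(long long n) {
    long long s = 0;
    for (long long i = 1; i <= n; ++i) s += i;
    return s;
}

// For the first billion integers the sum is 500000000500000000.
static_assert(sum_to(1000000000LL) == 500000000500000000LL,
              "sum of the first billion integers");
```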

Build this program with the command: CL /Od /FA Sum.cpp and run with the command Sum . Note that this build disables optimizations, via the /Od switch. On my PC, it takes about 4 seconds to run. Now try compiling optimized-for-speed, using CL /O2 /FA Sum.cpp . On my PC, this version runs so fast there’s no perceptible delay. Has the compiler really done such a fantastic job at optimizing our code? The answer is no (but in a surprising way, also yes):

Let’s look first at the code it generates for the /Od case, stored into Sum.asm . I have trimmed and annotated the text to show only the loop body:

        mov QWORD PTR s$[rsp], 0              ;; long long s = 0
        mov QWORD PTR i$1[rsp], 1             ;; long long i = 1
        jmp SHORT $LN3@main
    $LN2@main:
        mov rax, QWORD PTR i$1[rsp]           ;; rax = i
        inc rax                               ;; rax += 1
        mov QWORD PTR i$1[rsp], rax           ;; i = rax
    $LN3@main:
        cmp QWORD PTR i$1[rsp], 1000000000    ;; i <= 1000000000 ?
        jg SHORT $LN1@main                    ;; no - we're done
        mov rax, QWORD PTR i$1[rsp]           ;; rax = i
        mov rcx, QWORD PTR s$[rsp]            ;; rcx = s
        add rcx, rax                          ;; rcx += rax
        mov rax, rcx                          ;; rax = rcx
        mov QWORD PTR s$[rsp], rax            ;; s = rax
        jmp SHORT $LN2@main                   ;; loop
    $LN1@main:

The instructions look pretty much like you would expect. The variable i is held on the stack at offset i$1 from the location pointed-to by the RSP register; elsewhere in the asm file, we find that i$1 = 0. We use the RAX register to increment i . Similarly, variable s is held on the stack (at offset s$ from the location pointed-to by the RSP register; elsewhere in the asm file, we find that s$ = 8). The code uses RCX to calculate the running sum each time around the loop.

Notice how we load the value for i from its “home” location on the stack, every time around the loop; and write the new value back to its “home” location. The same for the variable s . We could describe this code as “naïve” – it’s been generated by a dumb compiler (i.e., one with optimizations disabled). For example, it’s wasteful to keep accessing memory for every iteration of the loop, when we could have kept the values for i and s in registers throughout.

So much for the non-optimized code. What about the code generated for the optimized case? Let’s look at the corresponding Sum.asm for the optimized, /O2 , build. Again, I have trimmed the file down to just the part that implements the loop body, and the answer is:

;; there’s nothing here!

Yes – it’s empty! There are no instructions that calculate the value of s .

Well, that answer is clearly wrong, you might say. But how do we know the answer is wrong? The optimizer has reasoned that the program does not actually make use of the answer for s at any point; and so it has not bothered to include any code to calculate it. You can’t say the answer is wrong, unless you check that answer, right?

We have just fallen victim to, been mugged in the street by, and lost our shirt to, the DCE optimization. If you don’t observe an answer, the program (often) won’t calculate that answer.

You might view this effect in the optimizer as analogous, in a profoundly shallow way, to a fundamental result in Quantum Physics, often paraphrased in articles on popular science as “If a tree falls in the forest, and there’s nobody around to hear, does it make a sound?”

Well, let’s “observe” the answer in our program, by adding a printf of variable s , into our code, as follows:

    #include <stdio.h>
    int main() {
        long long s = 0;
        for (long long i = 1; i <= 1000000000; ++i) s += i;
        printf("%lld ", s);
    }

The /Od version of this program prints the correct answer, still taking about 4 seconds to run. The /O2 version prints the same, correct answer, but runs much faster. (See the optional section below for the value of “much faster” – in fact, the speedup is around 7X)

At this point, we have explained the main point of this blog post: always be very careful in analyzing compiler optimizations, and in measuring their benefit, that we are not being misled by DCE. Here are four steps to help notice, and ward off, the unasked-for attention of DCE:

- Check that the timings have not suddenly improved by an order of magnitude

- Examine the generated code (using the /FA switch)

- If in doubt add a strategic printf

- Put the code of interest into its own .CPP file, separate from the one holding main . This works, so long as you do not request whole-program optimization (via the /GL switch, that we’ll cover later)
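The "strategic printf" step works because printing makes the result observable. As a sketch of another way to achieve the same effect (an assumption on my part, not something the original post proposes), the result can be stored into a `volatile` object. The names `sink`, `sum_first`, and `run_benchmark_body` are hypothetical.

```cpp
#include <cassert>

// Hypothetical sink: a store to a volatile object is an observable side
// effect, so the compiler must keep the store and the value it depends on.
volatile long long sink = 0;

long long sum_first(long long n) {
    long long s = 0;
    for (long long i = 1; i <= n; ++i) s += i;
    return s;
}

void run_benchmark_body(long long n) {
    sink = sum_first(n);  // the result is now "used"
}
```

This only stops the optimizer from discarding the result. It remains free to compute the value differently, for example by unrolling the loop or replacing it with a closed form, so examining the generated code is still worthwhile.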

However, we can wring several more interesting lessons from this tiny example. I have marked these sections below as “Optional-1” through “Optional-4”.

Optional-1 : Codegen for /O2

Why is our /O2 version (including a printf to defeat DCE), so much faster than the /Od version? Here is the code generated for the loop of the /O2 version, extracted from the Sum.asm file:

        xor edx, edx
        mov eax, 1
        mov ecx, edx
        mov r8d, edx
        mov r9d, edx
        npad 13
    $LL3@main:
        inc r9
        add r8, 2
        add rcx, 3
        add r9, rax                ;; r9  = 2 8 18 32 50 ...
        add r8, rax                ;; r8  = 3 10 21 36 55 ...
        add rcx, rax               ;; rcx = 4 12 24 40 60 ...
        add rdx, rax               ;; rdx = 1 6 15 28 45 ...
        add rax, 4                 ;; rax = 1 5 9 13 17 ...
        cmp rax, 1000000000        ;; i <= 1000000000 ?
        jle SHORT $LL3@main        ;; yes, so loop back

Note that the loop body contains approximately the same number of instructions as the non-optimized build, and yet it runs much faster. That’s mostly because the instructions in this optimized loop use registers, rather than memory locations. As we all know, accessing a register is much faster than accessing memory. Here are approximate latencies that demonstrate how memory access can slow your program to a snail’s pace:

So, the non-optimized version is reading and writing to stack locations, which will quickly migrate into L2 (10 cycle access time) and L1 cache (4 cycle access time). Both are slower than if all the calculation is done in registers, with access times around a single cycle.

But there’s more going on here to make the code run faster. Notice how the /Od version increments the loop counter by 1 each time around the loop. But the /O2 version increments the loop counter (held in register RAX) by 4 each time around the loop. Eh?

The optimizer has unrolled the loop. So it adds four items together on each iteration, like this:

s = (1 + 2 + 3 + 4) + (5 + 6 + 7 + 8) + (9 + 10 + 11 + 12) + (13 + . . .

By unrolling this loop, the test for loop-end is made every four iterations, rather than on every iteration – so the CPU spends more time doing useful work than forever checking whether it can stop yet!

Also, rather than accumulate the results into a single location, it has decided to use 4 separate registers, to accumulate 4 partial sums, like this:

    RDX = 1 + 5 + 9 + 13 + …  = 1, 6, 15, 28 …
    R9  = 2 + 6 + 10 + 14 + … = 2, 8, 18, 32 …
    R8  = 3 + 7 + 11 + 15 + … = 3, 10, 21, 36 …
    RCX = 4 + 8 + 12 + 16 + … = 4, 12, 24, 40 …

At the end of the loop, it adds the partial sums, in these four registers, together, to get the final answer.

(I will leave it as an exercise for the reader how the optimizer copes with a loop whose trip count is not a multiple of 4)
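To make the transformation concrete, here is a source-level sketch of the same idea: the loop is unrolled by four, with four independent partial sums. It also shows one common way to deal with a trip count that is not a multiple of 4, namely a short remainder loop. This is an assumption for illustration and not necessarily what the VC++ optimizer emits. The function name `sum_unrolled` is hypothetical.

```cpp
#include <cassert>

// Sum 1..n with the loop unrolled by four and four independent accumulators.
long long sum_unrolled(long long n) {
    long long a = 0, b = 0, c = 0, d = 0;
    long long i = 1;
    // Main loop: runs while a full group of four values remains.
    for (; i + 3 <= n; i += 4) {
        a += i;      // 1, 5, 9, ...
        b += i + 1;  // 2, 6, 10, ...
        c += i + 2;  // 3, 7, 11, ...
        d += i + 3;  // 4, 8, 12, ...
    }
    long long s = a + b + c + d;
    // Remainder loop: handles the final 0 to 3 values.
    for (; i <= n; ++i) s += i;
    return s;
}
```

Keeping four separate accumulators means the four additions in each iteration do not depend on one another, which gives the CPU more freedom to execute them in parallel.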

Optional-2 : Accurate Performance Measurements

Earlier, I said that the /O2 version of the program, without a printf inhibitor , “runs so fast there’s no perceptible delay”. Here is a program that makes that statement more precise:

#include <stdio.h> #include <windows.h> int main() { LARGE_INTEGER start, stop; QueryPerformanceCounter(&start); long long s = 0; for (long long i = 1; i <= 1000000000; ++i) s += i; QueryPerformanceCounter(&stop); double diff = stop.QuadPart – start.QuadPart; printf(“%f”, diff); }

It uses QueryPerformanceCounter to measure the elapsed time. (This is a “sawn-off” version of a high-resolution timer described in a previous blog.) There are lots of caveats to bear in mind when measuring performance (you might want to browse a list I wrote previously), but they don’t matter for this particular case, as we shall see in a moment:

On my PC, the /Od version of this program prints a value for diff of about 7 million somethings. (The units of the answer don’t matter; just know that the number gets bigger as the program takes longer to run.) The /O2 version prints a value for diff of 0. And the cause, as explained above, is our friend DCE.

When we add a printf to prevent DCE, the /O2 version runs in about 1 million somethings, a speedup of about 7X over the /Od version.

Optional-3 : x64 Assembler “widening”

If we look carefully again at the assembler listings in this post, we find a few surprises in the part of the program that initializes our registers:

    xor   edx, edx    ;; rdx = 0 (64-bit!)
    mov   eax, 1      ;; rax = i = 1 (64-bit!)
    mov   ecx, edx    ;; rcx = 0 (64-bit!)
    mov   r8d, edx    ;; r8 = 0 (64-bit!)
    mov   r9d, edx    ;; r9 = 0 (64-bit!)
    npad  13          ;; multi-byte nop alignment padding
    $LL3@main:

Recall first that the original C++ program uses long long variables for both the loop counter and the sum. In the VC++ compiler, these map onto 64-bit integers. So we should expect the generated code to make use of x64’s 64-bit registers.

We already explained, in a previous post, that xor reg, reg is a compact way to zero out the contents of reg . But our first instruction is apply x

Partial Dead Code Elimination Using Extended Value Graph

- Conference paper

- First Online: 01 January 1999

- Munehiro Takimoto

- Kenichi Harada

Part of the book series: Lecture Notes in Computer Science ((LNCS,volume 1694))

Included in the following conference series:

- International Static Analysis Symposium

This paper presents an efficient and effective code optimization algorithm for eliminating partially dead assignments, which become redundant on execution of specific program paths. It is one of the most aggressive compiling techniques, including invariant code motion from loop bodies. Since the traditional techniques proposed for this optimization produce second-order effects such as sinking-sinking effects, they must be applied repeatedly to eliminate dead code completely, at a higher computation cost. Furthermore, there is a restriction that assignments sunk to a join point on flow of control must be lexically identical.

Our technique proposed here can eliminate possibly more dead assignments without the restriction at join points, using an explicit representation of data dependence relations within a program in a form of SSA (Static Single Assignment). This representation, called the Extended Value Graph (EVG), shows the computationally equivalent structure among assignments before and after moving them on the control flow graph. We can get the final result directly by a single application of this technique, because it can capture the second-order effects as the main effects, based on EVG.

- Assignment Statement

- Program Point

- Critical Edge

- Code Motion

Author information

Authors and affiliations.

Department of Computer Science, Graduate School of Science and Technology, Keio University, Yokohama, 223-8522, Japan

Munehiro Takimoto & Kenichi Harada

Editor information

Editors and affiliations.

Dipartimento di Informatica, Università Ca’Foscari di Venezia, via Torino 155, I-30170, Mestre-Venezia, Italy

Agostino Cortesi

Dipartimento di Matematica Pura ed Applicata, Università di Padova, via Belzoni 7, I-35131, Padova, Italy

Gilberto Filé

Copyright information

© 1999 Springer-Verlag Berlin Heidelberg

About this paper

Cite this paper.

Takimoto, M., Harada, K. (1999). Partial Dead Code Elimination Using Extended Value Graph. In: Cortesi, A., Filé, G. (eds) Static Analysis. SAS 1999. Lecture Notes in Computer Science, vol 1694. Springer, Berlin, Heidelberg. https://doi.org/10.1007/3-540-48294-6_12

DOI : https://doi.org/10.1007/3-540-48294-6_12

Published : 01 October 1999

Publisher Name : Springer, Berlin, Heidelberg

Print ISBN : 978-3-540-66459-8

Online ISBN : 978-3-540-48294-9

eBook Packages : Springer Book Archive

CA1 — Dead Code Eliminator

Project Overview

CA1 is due 2/9 at 11PM.

Compilers Assignments 1 through 5 will direct you to design and build an optimizing compiler for Cool. Each assignment will cover an intermediate step along the path to a complete optimizing compiler.

You may do this assignment in OCaml, Haskell, JavaScript, Python, or Ruby. (There are no language restrictions for Compilers Assignments.) (If you don't know what to do, OCaml and Haskell are likely the best languages for the compiler and optimizer. Python is a reasonable third choice.)

You may work in a team of two people for this assignment. You may work in a team for any or all subsequent Compilers Assignments. You do not need to keep the same teammate. The course staff are not responsible for finding you a willing teammate.

For this assignment you will write a dead code eliminator for three-address code based on live variable dataflow analysis of single methods.

- serializes and deserializes Cool Three-Address Code (TAC) format.

- identifies basic blocks in TAC to create a control flow graph (CFG).

- uses basic dataflow analysis to remove dead code.

The Specification

You must create three artifacts:

Your program must output a revised, valid .cl-tac file to standard output. The output should be the same as the input but with dead code removed. Your program will consist of a number of source files, all in the same programming language.

- A plain ASCII text file called readme.txt describing your design decisions and choice of test cases. See the grading rubric. A paragraph or two should suffice.

- Test cases test1.cl-tac and test2.cl-tac . These should be samples of code where it is tricky to remove dead code.

Three Address Code Example

Three-address code is a simplified representation focusing on assignments to variables and unary or binary operators.

In three address code, the expression (1+3)*7 must be broken down so that the addition and multiplication operations are each handled separately. (The exact format is described in the next section.)
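
To make the breakdown concrete, here is a minimal Python sketch that lowers a nested expression into one-operator-per-line instructions with fresh temporaries. The tuple encoding, the helper names `lower` and `to_tac`, and the printed `t1 <- ...` notation are assumptions made for illustration; they are not the official .cl-tac syntax, which is defined by the course materials.

```python
import itertools

def lower(expr, code, fresh):
    """Lower an int literal or an (op, left, right) tuple to three-address code.

    Appends instructions to `code` and returns the temporary holding the result.
    """
    if isinstance(expr, int):
        dest = f"t{next(fresh)}"
        code.append(f"{dest} <- int {expr}")
        return dest
    op, left, right = expr
    lhs = lower(left, code, fresh)    # operands are evaluated first...
    rhs = lower(right, code, fresh)
    dest = f"t{next(fresh)}"
    code.append(f"{dest} <- {op} {lhs} {rhs}")  # ...then one operator per line
    return dest

def to_tac(expr):
    """Return the list of instructions for a whole expression."""
    code = []
    lower(expr, code, itertools.count(1))
    return code

tac = to_tac(("*", ("+", 1, 3), 7))   # (1+3)*7
```

Here the addition and the multiplication each get their own instruction, and the multiplication reads the temporary produced by the addition.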

As a second example, the code below reads in an integer and computes its absolute value (by checking to see if it is negative and subtracting it from zero if so):

Note the use of labels, conditional branches and unconditional jumps. You can use the cool reference compiler to evaluate .cl-tac files:

The .cl-tac File Format

Your program should serialize and deserialize file.cl-tac files representing three-address code.

A .cl-tac file is text-based and line-based. Your program must support Unix, Mac and Windows text files — regardless of where you, personally, developed your program. Note that lines can be delineated by carriage returns, new lines, or both (e.g., the regular expression [\r\n]+ may be helpful).
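
As a small illustration of that hint, the Python sketch below splits text on any run of carriage returns and newlines. The function name `split_lines` is hypothetical. Note one consequence of the `+` in the pattern: blank lines are collapsed, which is only acceptable if empty lines carry no meaning in the input.

```python
import re

def split_lines(text):
    """Split on runs of CR and/or LF so Unix, Mac and Windows files all work."""
    return [line for line in re.split(r"[\r\n]+", text) if line]
```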

You can use cool --tac file.cl to produce file.cl-tac for the first method in that Cool file. Thus you can use the Cool reference compiler to produce three address code for you automatically, given Cool source code. You should do this to test your CA1 code.

Three-Address Code Deserialization

Dead Code Elimination

A variable is live if it may be needed in the future. That is, an assignment to variable v at point p is live if there exists a path from p to the function exit along which v is read before it is overwritten.

For example:

On the fourth line, the assignment to d is dead because d is not subsequently used. Dead assignments can be removed. Often eliminating one piece of dead code can reveal additional dead code:

In above example, the assignment to d is not dead (yet!) but the assignment to e is dead. Once the assignment to e is eliminated, the assignment to d becomes dead and can then be eliminated. Thus, live variable analysis and dead code elimination must be repeated until nothing further changes.

You will use the live variable analysis form of data-flow analysis to determine which assignments are dead code.

Function calls with I/O side effects should never be eliminated.
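
The repeat-until-stable idea can be sketched for a single straight-line block as follows. This is a simplified Python illustration under stated assumptions: instructions are `(dest, op, operands)` tuples, `dest` is `None` for instructions that only read (such as a return), string operands are variables, and the set `SIDE_EFFECT_OPS` is a hypothetical stand-in for "calls with I/O side effects". A real solution for the assignment must work across basic blocks.

```python
SIDE_EFFECT_OPS = {"call"}   # hypothetical: operations that must never be removed

def live_after(block):
    """For each instruction, the set of variables live just after it."""
    live = set()                       # nothing is live after the method exit
    result = [None] * len(block)
    for i in range(len(block) - 1, -1, -1):
        dest, _op, operands = block[i]
        result[i] = set(live)
        live.discard(dest)             # the assignment overwrites dest
        live.update(v for v in operands if isinstance(v, str))
    return result

def eliminate_dead(block):
    """Alternate liveness analysis and removal until nothing changes."""
    while True:
        after = live_after(block)
        kept = [ins for ins, live in zip(block, after)
                if ins[0] is None or ins[0] in live or ins[1] in SIDE_EFFECT_OPS]
        if len(kept) == len(block):
            return kept
        block = kept

example = [
    ("a", "int", (1,)),
    ("b", "int", (2,)),
    ("c", "+", ("a", "b")),
    ("d", "+", ("a", "a")),     # dead only once e has been removed
    ("e", "*", ("d", "b")),     # dead: e is never read
    ("x", "call", ("c",)),      # x is unused, but the call is kept
    (None, "return", ("c",)),
]
optimized = eliminate_dead(example)
```

On this input the first round removes only the assignment to `e`; the second round then finds the assignment to `d` dead; the third round changes nothing and the loop stops.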

Basic Blocks and Control-Flow Graphs

A basic block is a sequence of instructions with only one control-flow entry and one control-flow exit. That is, once you start executing the instructions in a basic block, you must execute all of them — you cannot jump out early or jump in half-way through.

As part of this assignment you will also implement a global dataflow analysis that operates on multiple basic blocks (that together form one entire method). A control-flow graph is a graph in which the nodes are basic blocks and the edges represent potential control flow.
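
A minimal Python sketch of building such a graph is shown below. It assumes a made-up tuple encoding with the opcodes `label`, `jmp`, `bt` (branch if true) and `return`; these names and the function `build_cfg` are illustrative and are not the .cl-tac keywords. Blocks start at "leaders": the first instruction, every label, and every instruction that follows a control transfer.

```python
def build_cfg(instrs):
    """Split instructions into basic blocks and compute successor edges.

    Returns (blocks, succs) where succs maps a block index to the indices
    of the blocks that control may flow to next.
    """
    if not instrs:
        return [], {}
    ends_block = {"jmp", "bt", "return"}
    leaders = {0}
    for i, ins in enumerate(instrs):
        if ins[0] == "label":
            leaders.add(i)                       # jump targets start a block
        if ins[0] in ends_block and i + 1 < len(instrs):
            leaders.add(i + 1)                   # so does whatever follows a jump
    starts = sorted(leaders)
    blocks = [instrs[s:e] for s, e in zip(starts, starts[1:] + [len(instrs)])]
    label_block = {b[0][1]: n for n, b in enumerate(blocks) if b[0][0] == "label"}
    succs = {}
    for n, block in enumerate(blocks):
        last = block[-1]
        out = []
        if last[0] in ("jmp", "bt"):
            out.append(label_block[last[-1]])    # explicit jump target
        if last[0] not in ("jmp", "return") and n + 1 < len(blocks):
            out.append(n + 1)                    # fall through to the next block
        succs[n] = out
    return blocks, succs

# Absolute value: negate x only when it is negative.
program = [
    ("assign", "x", "read"),
    ("assign", "neg", "x < 0"),
    ("bt", "neg", "is_neg"),
    ("jmp", "done"),
    ("label", "is_neg"),
    ("assign", "x", "0 - x"),
    ("label", "done"),
    ("return", "x"),
]
blocks, succs = build_cfg(program)
```

The conditional branch ends the first block and gives it two successors (the branch target and the fall-through), which is exactly the structure a global dataflow analysis walks over.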

Your assignment will have the following stages:

You can do basic testing as follows:

    $ cool --tac file.cl --out original
    $ cool --tac --opt file.cl --out ref_optimized
    $ my-dce original.cl-tac > my_optimized.cl-tac
    $ cool ref_optimized.cl-tac > optimized.output
    $ cool my_optimized.cl-tac > my.output
    $ diff optimized.output my.output
    $ cool --profile ref_optimized.cl-tac | grep STEPS
    $ cool --profile my_optimized.cl-tac | grep STEPS

Passing --opt and --tac to the reference compiler will cause the reference compiler to perform dead code elimination before emitting the .cl-tac file.

Video Guides

A number of Video Guides are provided to help you get started on this assignment on your own. The Video Guides are walkthroughs in which the instructor manually completes and narrates, in real time, aspects of this assignment.

If you are still stuck, you can post on the forum, approach the TAs, or approach the professor. The use of online instructional content outside of class weakly approximates a flipped classroom model . Click on a video guide to begin, at which point you can watch it fullscreen or via Youtube if desired.

What To Turn In For CA1

You must turn in a zip file containing these files:

Your zip file may also contain:

Submit the file to the course autograding website.

Working In Pairs

You may complete this project in a team of two. Teamwork imposes burdens of communication and coordination, but has the benefits of more thoughtful designs and cleaner programs. Team programming is also the norm in the professional world.

Students on a team are expected to participate equally in the effort and to be thoroughly familiar with all aspects of the joint work. Both members bear full responsibility for the completion of assignments. Partners turn in one solution for each programming assignment; each member receives the same grade for the assignment. If a partnership is not going well, the teaching assistants will help to negotiate new partnerships. Teams may not be dissolved in the middle of an assignment. If your partner drops the class at the last minute you are still responsible for the entire assignment.

If you are working in a team, exactly one team member should submit a CA1 zipfile. That submission should include the file team.txt, a one-line flat ASCII text file that contains the email address of your teammate. Don't include the @virginia.edu bit. Example: If ph4u and wrw6y are working together, ph4u would submit ph4u-ca1.zip with a team.txt file that contains the word wrw6y. Then ph4u and wrw6y will both receive the same grade for that submission.

Grading Rubric

- Grading (out of 50)

- 32 points for autograder tests

- +.1 point for each passed test case (320 total)

- 10 points for valid test1.cl-tac and test2.cl-tac files

- +5 points each — high quality tests

- +2 points each — valid tests that do not stress dead code elimination or live variable analysis

- +0 points — test cases showing no effort

- 8 points for README.txt file

- +8 points — a clear, thorough description of design

- +4 points — a vague or unclear description omitting details

- +0 points — little effort or if you submit an RTF/DOC/PDF instead of a plain TXT file

- If you are in a group: -10 points if you do not submit a correct team.txt file

# Programming Assignment 3

A3: Dead Code Detection

# 1 Assignment Objectives

- Implement a dead code detector for Java.

Dead code elimination is a common compiler optimization in which dead code is removed from a program, and its most challenging part is the detection of dead code. In this programming assignment, you will implement a dead code detector for Java by incorporating the analyses you wrote in the previous two assignments, i.e., live variable analysis and constant propagation. In this document, we define the scope of dead code (in this assignment), and your task is to implement a detector to recognize it.

Now, we suggest that you first setup the Tai-e project for Assignment 3 (in A3/tai-e/ of the assignment repo) by following Setup Tai-e Assignments .

# 2 Introduction to Dead Code Detection

Dead code is the part of a program that is unreachable (i.e., is never executed) or that is executed but whose results are never used in any other computation. Eliminating dead code does not affect the program outputs; meanwhile, it can shrink program size and improve performance. In this assignment, we focus on two kinds of dead code: unreachable code and dead assignments.

# 2.1 Unreachable Code

Unreachable code in a program will never be executed. We consider two kinds of unreachable code, control-flow unreachable code and unreachable branch , as introduced below.

Control-flow Unreachable Code . In a method, if there exists no control-flow path to a piece of code from the entry of the method, then the code is control-flow unreachable. An example is the code following return statements . Return statements are exits of a method, so the code following them is unreachable. For example, the code at lines 4 and 5 below is control-flow unreachable:

Detection : such code can be easily detected with control-flow graph (CFG) of the method. We just need to traverse the CFG from the method entry and mark reachable statements. When the traversal finishes, the unmarked statements are control-flow unreachable.
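
The traversal can be sketched in a few lines of Python. This is an illustration only, not Tai-e code: the CFG is modelled as a plain dictionary from a node to its list of successors, and the function name `unreachable_nodes` is hypothetical.

```python
def unreachable_nodes(succs, entry):
    """Return the nodes that no control-flow path from `entry` can reach."""
    seen = {entry}
    stack = [entry]
    while stack:
        node = stack.pop()
        for nxt in succs.get(node, []):
            if nxt not in seen:        # mark each statement once
                seen.add(nxt)
                stack.append(nxt)
    return set(succs) - seen           # unmarked statements are unreachable

# Statement 3 is a return, so it has no successors; 4 and 5 follow it in the source.
cfg = {1: [2], 2: [3], 3: [], 4: [5], 5: []}
dead = unreachable_nodes(cfg, entry=1)
```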

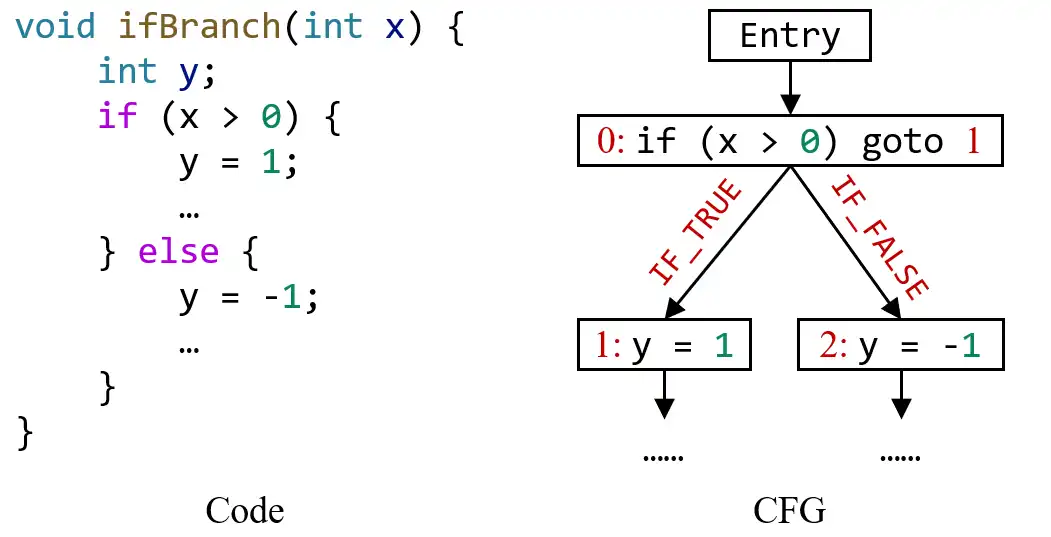

Unreachable Branch . There are two kinds of branching statements in Java: if statement and switch statement, which could form unreachable branches.

For an if-statement, if its condition value is a constant, then one of its two branches is certainly unreachable in any execution, i.e., it is an unreachable branch, and the code inside that branch is unreachable. For example, in the following code snippet, the condition of the if-statement at line 3 is always true, so its false-branch (line 6) is an unreachable branch.

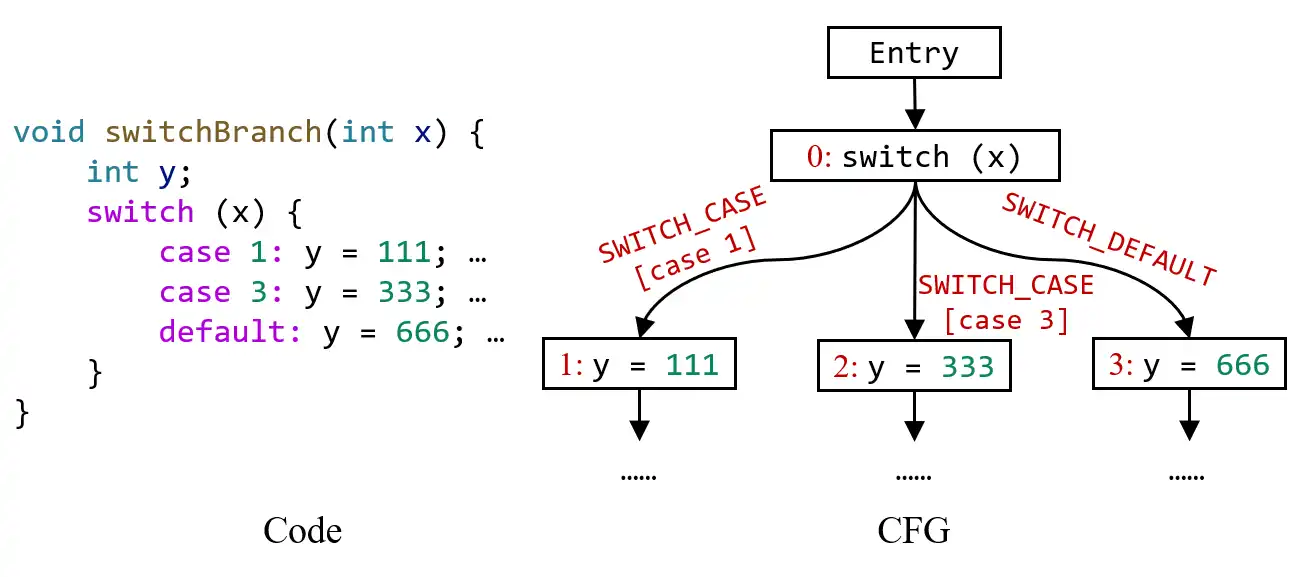

For a switch-statement, if its condition value is a constant, then case branches whose values do not match that condition may be unreachable in any execution and become unreachable branches. For example, in the following code snippet, the condition value ( x ) of switch-statement at line 3 is always 2, thus the branches “ case 1 ” and “ default ” are unreachable. Note that although branch “ case 3 ” does not match the condition value (2) either, it is still reachable as the control flow can fall through to it via branch “ case 2 ”.

Detection : to detect unreachable branches, we need to perform a constant propagation in advance, which tells us whether the condition values are constants, and then during CFG traversal, we do not enter the corresponding unreachable branches.
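
The sketch below shows, in Python, how constant conditions can prune edges during the traversal. It is a model under stated assumptions rather than Tai-e's API: edges are `(kind, case_value, target)` tuples, `cond_value` maps a branching node to its known constant (nodes with non-constant conditions are simply absent), and kind strings other than `IF_TRUE`, `IF_FALSE`, `SWITCH_CASE` and `SWITCH_DEFAULT` are placeholders.

```python
def reachable_with_constants(out_edges, cond_value, entry):
    """Traverse the CFG, skipping branch edges that a constant condition rules out."""
    def feasible(node, kind, case_value):
        if node not in cond_value:
            return True                          # condition unknown: keep every edge
        value = cond_value[node]
        if kind == "IF_TRUE":
            return bool(value)
        if kind == "IF_FALSE":
            return not value
        if kind == "SWITCH_CASE":
            return case_value == value
        if kind == "SWITCH_DEFAULT":             # taken only if no case matches
            return all(cv != value
                       for k, cv, _ in out_edges[node] if k == "SWITCH_CASE")
        return True

    seen = {entry}
    stack = [entry]
    while stack:
        node = stack.pop()
        for kind, case_value, target in out_edges.get(node, []):
            if feasible(node, kind, case_value) and target not in seen:
                seen.add(target)
                stack.append(target)
    return seen

# A switch on x where x is always 2; "case 2" falls through into "case 3".
edges = {
    "switch": [("SWITCH_CASE", 1, "case1"), ("SWITCH_CASE", 2, "case2"),
               ("SWITCH_CASE", 3, "case3"), ("SWITCH_DEFAULT", None, "default")],
    "case1": [("GOTO", None, "end")],
    "case2": [("FALL_THROUGH", None, "case3")],
    "case3": [("GOTO", None, "end")],
    "default": [("FALL_THROUGH", None, "end")],
    "end": [],
}
reached = reachable_with_constants(edges, {"switch": 2}, "switch")
unreached = set(edges) - reached
```

As in the switch example above, `case3` stays reachable because the traversal still follows the fall-through edge out of `case2`, while `case1` and `default` are never entered.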

# 2.2 Dead Assignment

An assignment to a local variable whose value is not read by any subsequent instruction is referred to as a dead assignment, and the assigned variable is a dead variable (the opposite of a live variable). Dead assignments do not affect program outputs, and thus can be eliminated. For example, the code at lines 3 and 5 below are dead assignments.

Detection : to detect dead assignments, we need to perform a live variable analysis in advance. For an assignment, if its LHS variable is a dead variable (i.e., not live), then we could mark it as a dead assignment, except in one case, discussed below.

The exception is an assignment x = expr whose RHS expression expr may have side-effects. For example, if expr is a method call ( x = m() ), it could have many side-effects. To handle this, we have provided an API for you to check whether the RHS expression of an assignment may have side-effects (described in Section 3.2 ). If so, you should not report it as dead code, for safety, even if x is not live.
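
To tie the two conditions together, here is a minimal Python sketch of a backward live variable analysis with a worklist, followed by the dead-assignment check. It is a model, not Tai-e code: each CFG node is described by a hypothetical triple `(defined_var, used_vars, may_have_side_effect)`, and the function name `dead_assignments` is made up.

```python
def dead_assignments(stmts, succs):
    """Return the nodes whose assignment is dead and free of side-effects.

    stmts: node -> (defined_var or None, set of used vars, may_have_side_effect)
    succs: node -> list of successor nodes
    """
    preds = {n: [] for n in stmts}
    for n, outs in succs.items():
        for m in outs:
            preds[m].append(n)
    live_in = {n: set() for n in stmts}
    live_out = {n: set() for n in stmts}
    worklist = list(stmts)
    while worklist:
        n = worklist.pop()
        # A variable is live after n if it is live before any successor of n.
        live_out[n] = set().union(*(live_in[m] for m in succs.get(n, [])))
        defined, uses, _ = stmts[n]
        new_in = (live_out[n] - {defined}) | set(uses)
        if new_in != live_in[n]:
            live_in[n] = new_in
            worklist.extend(preds[n])   # predecessors must be recomputed
    return {n for n, (defined, _, effect) in stmts.items()
            if defined is not None and defined not in live_out[n] and not effect}

stmts = {
    1: ("a", set(), False),     # a = 1
    2: ("b", {"a"}, False),     # b = a + 1   (b is overwritten at 4 before any read)
    3: ("c", set(), True),      # c = m()     (c is unused, but m() may have side-effects)
    4: ("b", set(), False),     # b = 5
    5: (None, {"b"}, False),    # return b
}
succs = {1: [2], 2: [3], 3: [4], 4: [5], 5: []}
found = dead_assignments(stmts, succs)
```

Only statement 2 is reported: statement 3 also assigns a variable that is not live, but it is skipped because its right-hand side may have side-effects.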

# 3 Implementing a Dead Code Detector

# 3.1 Tai-e Classes You Need to Know

To implement a dead code detector, you need to know CFG , IR , and the classes about the analysis results of live variable analysis and constant propagation (such as CPFact , DataflowResult , etc.), which you have used in previous assignments. Below we will introduce more classes about CFG and IR that you will use in this assignment.

pascal.taie.analysis.graph.cfg.Edge

This class represents the edges in CFG (as a reminder, the nodes of CFG are Stmt ). It provides method getKind() to obtain the kind of the edges (to understand the meaning of each kind, you could read the comments in class Edge.Kind ), and you could check the edge kind like this:

In this assignment, you need to be concerned with four kinds of edges: IF_TRUE , IF_FALSE , SWITCH_CASE , and SWITCH_DEFAULT . IF_TRUE and IF_FALSE represent the two outgoing edges from an if-statement to its branches, as shown in this example:

SWITCH_CASE and SWITCH_DEFAULT represent the outgoing edges from switch-statement to its case and default branches, as shown in this example:

For SWITCH_CASE edges, you could obtain their case values (e.g., 1 and 3 in the above example) by getCaseValue() method.

pascal.taie.ir.stmt.If (subclass of Stmt )

This class represents if-statement in the program.

Note that in Tai-e’s IR, while-loop and for-loop are also converted to If statement. For example, this loop (written in Java):

will be converted to Tai-e’s IR like this:

Hence, your implementation should be able to detect dead code related to loops, e.g., if a and b are constants and a <= b , then your analysis should report that x = 233 is dead code.

pascal.taie.ir.stmt.SwitchStmt (subclass of Stmt )

This class represents switch statement in the program. You could read its source code and comments to decide how to use it.

pascal.taie.ir.stmt.AssignStmt (subclass of Stmt )

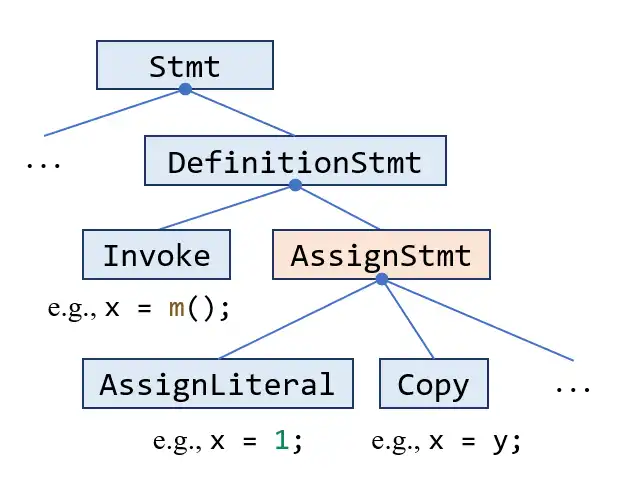

This class represents an assignment (i.e., x = ...; ) in the program. You might think of it as similar to class DefinitionStmt that you have seen before. The relation between these two classes is shown in this partial class hierarchy:

Actually, AssignStmt is one of two subclasses of DefinitionStmt (another one is Invoke , which represents method call/invocation in the program). It means that AssignStmt represents all assignments except the ones whose right-hand side expression is method call. AssignStmt is used in this assignment to recognize dead assignments. As mentioned in Section 2.2 , method calls could have many side-effects, thus the statements like x = m(); will not be considered as dead assignments even though x is not used later. Thus, you only need to consider AssignStmt as dead assignments.

pascal.taie.analysis.dataflow.analysis.DeadCodeDetection

This class implements dead code detection. It is incomplete, and you need to finish it as explained in Section 3.2 .

# 3.2 Your Task [Important!]

Your task is to implement one API of DeadCodeDetection :

- Set<Stmt> analyze(IR)

This method takes an IR of a method as input, and it is supposed to return a set of dead code in the IR . Your task is to recognize two kinds of dead code described in Section 2 , i.e., unreachable code and dead assignments , and add them into the resulting set.

Dead code detection depends on the analysis results of live variable analysis and constant propagation. Thus, to make dead code detection work, you need to finish LiveVariableAnalysis.java and ConstantPropagation.java . You could copy your implementation from previous assignments. Besides, you also need a complete worklist solver that supports both forward and backward data-flow analyses. You could copy your implementation of Solver.java and WorkListSolver.java from Assignment 2 , and then complete Solver.initializeBackward() and WorkListSolver.doSolveBackward() . Note that you don’t need to submit these source files in this assignment, so that even though your implementation of previous assignments is not entirely correct, it does not affect your score of this assignment.

- In this assignment, Tai-e will automatically run live variable analysis and constant propagation before dead code detection. We have provided the code in DeadCodeDetection.analyze() to obtain the analysis results of these two analyses for the given IR , so that you could directly use them. Besides, this analyze() method contains the code to obtain the CFG for the IR .

- As mentioned in Section 2.2 , the RHS expression of some assignments may have side-effects, and thus cannot be considered as dead assignments. We have provided an auxiliary method hasNoSideEffect(RValue) in DeadCodeDetection for you to check if a RHS expression may have side-effects or not.

- During the CFG traversal, you should use CFG.getOutEdgesOf() to query the successors to be visited later. This API returns the outgoing edges of given node in the CFG, so that you could use the information on the edges (introduced in Section 3.1 ) to help detect unreachable branches.

- You could use ConstantPropagation.evaluate() to evaluate the condition value of if- and switch-statements when detecting unreachable branches.

# 4 Run and Test Your Implementation

You can run the analyses as described in Set Up Tai-e Assignments . In this assignment, Tai-e performs live variable analysis, constant propagation, and dead code detection for each method of input class. To help debugging, it outputs the results of all three analyses:

The OUT facts are null and no dead code is reported as you have not finished the analyses yet. After you implement the analyses, the output should be:

We provide test driver pascal.taie.analysis.dataflow.analysis.DeadCodeTest for this assignment, and you could use it to test your implementation as described in Set Up Tai-e Assignments .

# 5 General Requirements

In this assignment, your only goal is correctness. Efficiency is not your concern.

DO NOT distribute the assignment package to any others.

Last but not least, DO NOT plagiarize. The work must be all your own!

# 6 Submission of Assignment

Your submission should be a zip file, which contains your implementation of

- DeadCodeDetection.java

# 7 Grading

You can refer to Grade Your Programming Assignments to use our online judge to grade your completed assignments.

The points will be allocated for correctness. We will use your submission to analyze the given test files from the src/test/resources/ directory, as well as other tests of our own, and compare your output to that of our solution.
