2024 Guide: 20+ Essential Data Science Case Study Interview Questions

Case studies are often the most challenging aspect of data science interview processes. They are crafted to resemble a company’s existing or previous projects, assessing a candidate’s ability to tackle prompts, convey their insights, and navigate obstacles.

To excel in data science case study interviews, practice is crucial. It will enable you to develop strategies for approaching case studies, asking the right questions to your interviewer, and providing responses that showcase your skills while adhering to time constraints.

The best way of doing this is by using a framework for answering case studies. For example, you could use the product metrics framework and the A/B testing framework to answer most case studies that come up in data science interviews.

There are four main types of data science case studies:

- Product Case Studies - This type of case study tackles a specific product or feature offering, often tied to the interviewing company. Interviewers are generally looking for business sense geared towards product metrics.

- Data Analytics Case Study Questions - Data analytics case studies ask you to propose possible metrics in order to investigate an analytics problem. Additionally, you must write a SQL query to pull your proposed metrics, and then perform analysis using the data you queried, just as you would do in the role.

- Modeling and Machine Learning Case Studies - Modeling case studies are more varied and focus on assessing your intuition for building models around business problems.

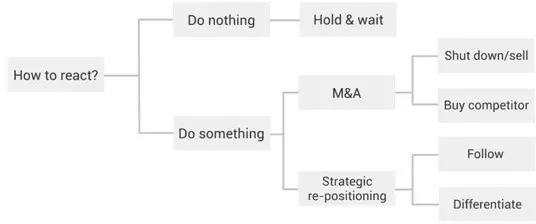

- Business Case Questions - Similar to product questions, business cases tackle issues or opportunities specific to the organization that is interviewing you. Often, candidates must assess the best option for a certain business plan being proposed, and formulate a process for solving the specific problem.

How Case Study Interviews Are Conducted

Oftentimes as an interviewee, you want to know the setting and format in which to expect the above questions to be asked. Unfortunately, this is company-specific: Some prefer real-time settings, where candidates actively work through a prompt after receiving it, while others offer some period of days (say, a week) before settling in for a presentation of your findings.

It is therefore important to have a system for answering these questions that will accommodate all possible formats, such that you are prepared for any set of circumstances (we provide such a framework below).

Why Are Case Study Questions Asked?

Case studies assess your thought process in answering data science questions. Specifically, interviewers want to see that you have the ability to think on your feet, and to work through real-world problems that likely do not have a right or wrong answer. Real-world case studies that are affecting businesses are not binary; there is no black-and-white, yes-or-no answer. This is why it is important that you can demonstrate decisiveness in your investigations, as well as show your capacity to consider impacts and topics from a variety of angles. Once you are in the role, you will be dealing directly with the ambiguity at the heart of decision-making.

Perhaps most importantly, case interviews assess your ability to effectively communicate your conclusions. On the job, data scientists exchange information across teams and divisions, so a significant part of the interviewer’s focus will be on how you process and explain your answer.

Quick tip: Because case questions in data science interviews tend to be product- and company-focused, it is extremely beneficial to research current projects and developments across different divisions, as these initiatives might end up as the case study topic.

How to Answer Data Science Case Study Questions (The Framework)

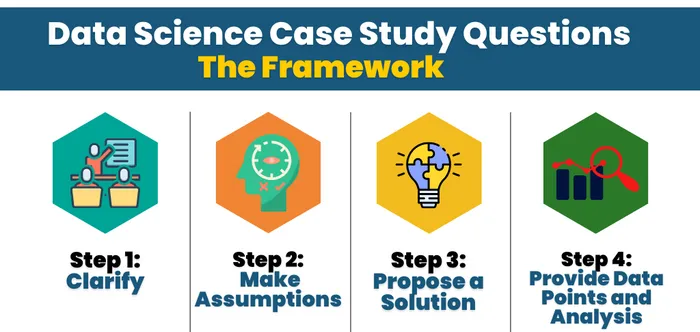

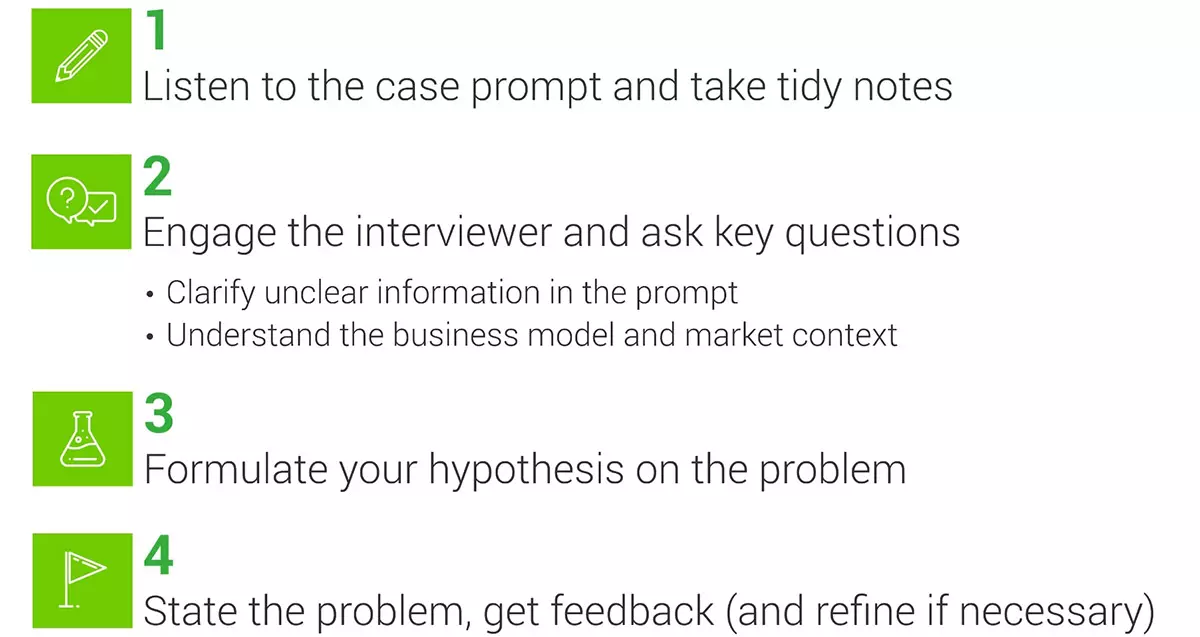

There are four main steps to tackling case questions in data science interviews, regardless of the type: clarify, make assumptions, propose a solution, and provide data points and analysis.

Step 1: Clarify

Clarifying is used to gather more information. More often than not, these case studies are designed to be confusing and vague. The data will be unorganized, and intentionally padded with extraneous information or missing key details, so it is the candidate’s responsibility to dig deeper, filter out bad information, and fill gaps. Interviewers will be observing how an applicant asks questions and reaches their solution.

For example, with a product question, you might take into consideration:

- What is the product?

- How does the product work?

- How does the product align with the business itself?

Step 2: Make Assumptions

When you have made sure that you have evaluated and understand the dataset, start investigating and discarding possible hypotheses. Developing insights on the product at this stage complements your ability to glean information from the dataset, and the exploration of your ideas is paramount to forming a successful hypothesis. You should be communicating your hypotheses with the interviewer, such that they can provide clarifying remarks on how the business views the product, and to help you discard unworkable lines of inquiry. If we continue to think about a product question, some important questions to evaluate and draw conclusions from include:

- Who uses the product? Why?

- What are the goals of the product?

- How does the product interact with other services or goods the company offers?

The goal of this is to reduce the scope of the problem at hand, and ask the interviewer questions upfront that allow you to tackle the meat of the problem instead of focusing on less consequential edge cases.

Step 3: Propose a Solution

Now that you have formed a hypothesis that incorporates the dataset and an understanding of the business context, it is time to apply that knowledge in forming a solution. Remember, the hypothesis is simply a refined version of the problem that uses the data on hand as the basis for solving it. The solution you create can target this narrow problem, and you can have full faith that it is addressing the core of the case study question.

Keep in mind that there isn’t a single expected solution, and as such, there is a certain freedom here to determine the exact path for investigation.

Step 4: Provide Data Points and Analysis

Finally, providing data points and analysis in support of your solution involves choosing and prioritizing a main metric. As with all prior factors, this step must be tied back to the hypothesis and the main goal of the problem. From that foundation, it is important to trace through and analyze different examples, starting from the main metric, in order to validate the hypothesis.

Quick tip: Every case question tends to have multiple solutions. Therefore, you should absolutely consider and communicate any potential trade-offs of your chosen method. Be sure you are communicating the pros and cons of your approach.

Note: In some special cases, solutions will also be assessed on the ability to convey information in layman’s terms. Regardless of the structure, applicants should always be prepared to solve through the framework outlined above in order to answer the prompt.

The Role of Effective Communication

Interviewers who run the data science case study round have written and spoken about it at length, and they all boil success in case studies down to one main factor: effective communication.

All the analysis in the world will not help if interviewees cannot verbally work through and highlight their thought process within the case study. Again, interviewers are keyed at this stage of the hiring process to look for well-developed “soft-skills” and problem-solving capabilities. Demonstrating those traits is key to succeeding in this round.

To this end, the best advice possible would be to practice actively going through example case studies, such as those available in the Interview Query questions bank. Exploring different topics with a friend in an interview-like setting with cold recall (no Googling in between!) will be uncomfortable and awkward, but it will also help reveal weaknesses in fleshing out the investigation.

Don’t worry if the first few times are terrible! Developing a rhythm will help with gaining self-confidence as you become better at assessing and learning through these sessions.

Product Case Study Questions

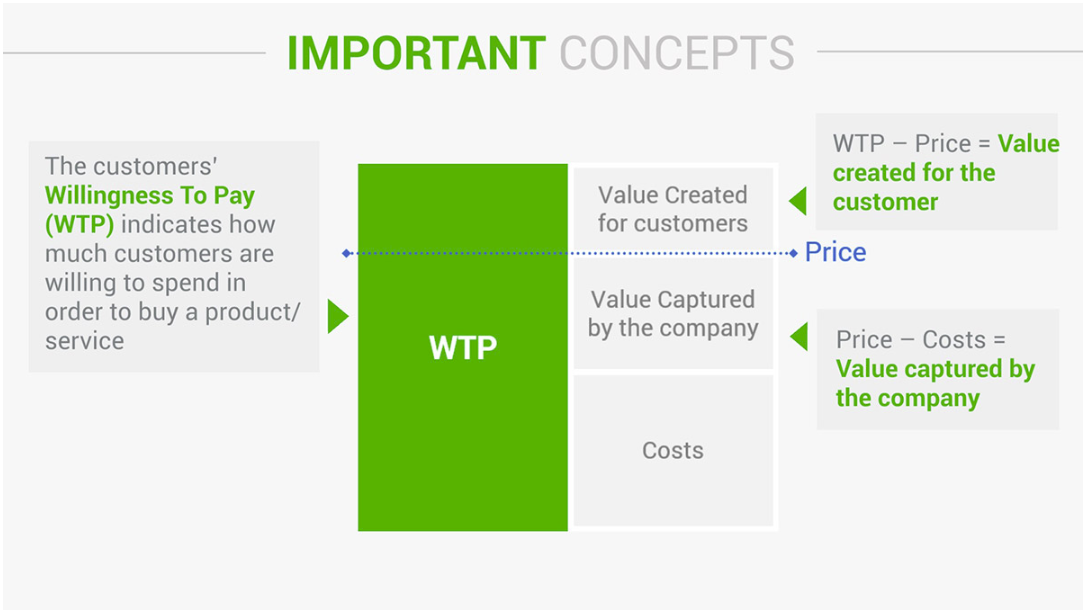

With product data science case questions, the interviewer wants to get an idea of your product sense intuition. Specifically, these questions assess your ability to identify which metrics should be proposed in order to understand a product.

1. How would you measure the success of private stories on Instagram, where only certain close friends can see the story?

Start by answering: What is the goal of the private story feature on Instagram? You can’t evaluate “success” without knowing what the initial objective of the product was, to begin with.

One specific goal of this feature would be to drive engagement. A private story could potentially increase interactions between users, and grow awareness of the feature.

Now, what types of metrics might you propose to assess user engagement? For a high-level overview, we could look at:

- Average stories per user per day

- Average Close Friends stories per user per day

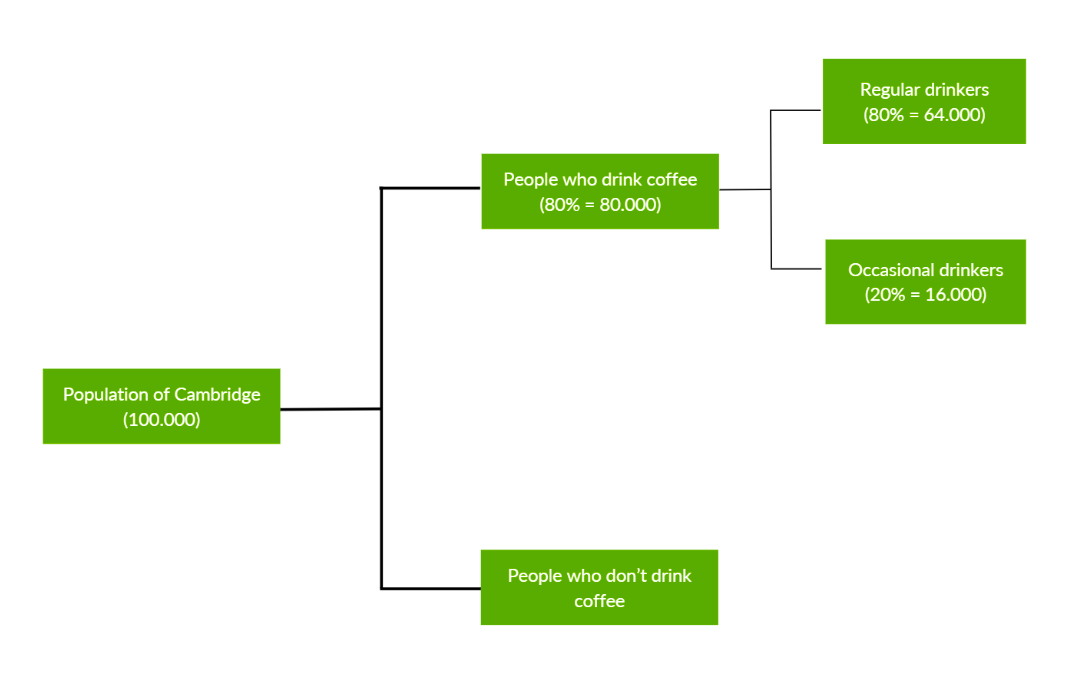

However, we would also want to further bucket our users to see the effect that Close Friends stories have on user engagement. By bucketing users by age, date joined, or another metric, we could see how engagement is affected within certain populations, giving us insight on success that could be lost if looking at the overall population.
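As a rough sketch of the bucketing idea, the snippet below computes both high-level metrics per age bucket. The row layout, the bucket labels, and all numbers are assumptions made up for illustration, and each user is assumed to appear once (a single day of activity).

```python
from collections import defaultdict

# Hypothetical one-day activity rows:
# (user_id, age_bucket, stories_posted, close_friends_stories_posted)
rows = [
    ("u1", "18-24", 4, 2),
    ("u2", "18-24", 2, 1),
    ("u3", "25-34", 1, 0),
    ("u4", "25-34", 3, 0),
]

def avg_per_user_by_bucket(rows):
    """Return {bucket: (avg stories per user, avg Close Friends stories per user)}."""
    totals = defaultdict(lambda: [0, 0, 0])  # [users, stories, close friends stories]
    for _user, bucket, stories, cf_stories in rows:
        bucket_totals = totals[bucket]
        bucket_totals[0] += 1
        bucket_totals[1] += stories
        bucket_totals[2] += cf_stories
    return {b: (s / n, cf / n) for b, (n, s, cf) in totals.items()}

metrics = avg_per_user_by_bucket(rows)
for bucket, (avg_stories, avg_cf) in sorted(metrics.items()):
    print(bucket, avg_stories, avg_cf)
```

In this toy data the younger bucket posts Close Friends stories and the older one does not, a difference that an overall average would blur.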

2. How would you measure the success of acquiring new users through a 30-day free trial at Netflix?

More context: Netflix is offering a promotion where users can enroll in a 30-day free trial. After 30 days, customers will automatically be charged based on their selected package. How would you measure acquisition success, and what metrics would you propose to measure the success of the free trial?

One way to frame this problem is to think about controllable inputs, external drivers, and the observable output. Start with the major goals of Netflix:

- Acquiring new users to their subscription plan.

- Decreasing churn and increasing retention.

Looking at acquisition output metrics specifically, there are several top-level stats that we can look at, including:

- Conversion rate percentage

- Cost per free trial acquisition

- Daily conversion rate

With these conversion metrics, we would also want to bucket users by cohort. This would help us see the percentage of free users who were acquired, as well as retention by cohort.
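A minimal sketch of the cohort view might look like the following. The cohort keys, counts, and spend figures are invented, and "cost per free trial acquisition" is assumed here to mean marketing spend divided by trial starts.

```python
# Hypothetical trial cohorts:
# signup month -> (trial starts, paid conversions after 30 days, marketing spend in USD)
cohorts = {
    "2024-01": (1000, 400, 5000.0),
    "2024-02": (1200, 420, 6600.0),
}

def cohort_metrics(trial_starts, conversions, spend):
    """Conversion rate and cost per free-trial acquisition for one cohort."""
    return {
        "conversion_rate": conversions / trial_starts,
        "cost_per_trial": spend / trial_starts,
    }

report = {month: cohort_metrics(*values) for month, values in cohorts.items()}
for month, m in sorted(report.items()):
    print(month, round(m["conversion_rate"], 3), round(m["cost_per_trial"], 2))
```

Comparing rows month over month shows whether a cheaper or more expensive cohort also converts better, which a single blended conversion rate would hide.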

3. How would you measure the success of Facebook Groups?

Start by considering the key function of Facebook Groups. You could say that Groups are a way for users to connect with other users through a shared interest or real-life relationship. Therefore, the user’s goal is to experience a sense of community, which will also drive our business goal of increasing user engagement.

What general engagement metrics can we associate with this value? An objective metric like Groups monthly active users would help us see if the Facebook Groups user base is increasing or decreasing. Plus, we could monitor metrics like posting, commenting, and sharing rates.

There are other products that Groups impact, however, specifically the Newsfeed. We need to consider Newsfeed quality and examine if updates from Groups clog up the content pipeline and if users prioritize those updates over other Newsfeed items. This evaluation will give us a better sense of whether Groups actually contribute to higher engagement levels.

4. How would you analyze the effectiveness of a new LinkedIn chat feature that shows a “green dot” for active users?

Note: Given engineering constraints, the new feature is impossible to A/B test before release. When you approach case study questions, remember always to clarify any vague terms. In this case, “effectiveness” is very vague. To help you define that term, you would want first to consider what the goal is of adding a green dot to LinkedIn chat.

5. How would you diagnose why weekly active users are up 5%, but email notification open rates are down 2%?

What assumptions can you make about the relationship between weekly active users and email open rates? With a case question like this, you would want to first answer that line of inquiry before proceeding.

Hint: Open rate can decrease when its numerator decreases (fewer people open emails) or its denominator increases (more emails are sent overall). Taking these two factors into account, what are some hypotheses we can make about our decrease in the open rate compared to our increase in weekly active users?
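To make the numerator and denominator effects concrete, here is a small worked sketch. All counts are hypothetical and chosen only to show the direction of each effect.

```python
def open_rate(opens, emails_sent):
    """Open rate = emails opened / emails sent."""
    return opens / emails_sent

# Hypothetical weekly numbers, invented for illustration.
before = open_rate(opens=2_000, emails_sent=10_000)

# Scenario A: more emails are sent (for example, to newly active users),
# but the number of opens stays flat, so the denominator grows.
more_sent = open_rate(opens=2_000, emails_sent=11_000)

# Scenario B: the same number of emails is sent, but fewer are opened,
# so the numerator shrinks.
fewer_opens = open_rate(opens=1_800, emails_sent=10_000)

print(f"before={before:.3f} more_sent={more_sent:.3f} fewer_opens={fewer_opens:.3f}")
```

Both scenarios lower the open rate, but only Scenario A is compatible with more active users receiving more notifications, which is why it is worth checking send volume first.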

Data Analytics Case Study Questions

Data analytics case studies ask you to dive into analytics problems. Typically these questions ask you to examine metrics trade-offs or investigate changes in metrics. In addition to proposing metrics, you also have to write SQL queries to generate the metrics, which is why they are sometimes referred to as SQL case study questions.

6. Using the provided data, generate some specific recommendations on how DoorDash can improve.

In this DoorDash analytics case study take-home question you are provided with the following dataset:

- Customer order time

- Restaurant order time

- Driver arrives at restaurant time

- Order delivered time

- Customer ID

- Amount of discount

- Amount of tip

With a dataset like this, there are numerous recommendations you can make. A good place to start is by thinking about the DoorDash marketplace, which includes drivers, customers, and merchants. How could you analyze the data to increase revenue, driver and customer retention, and engagement in that marketplace?

7. After implementing a notification change, the total number of unsubscribes increases. Write a SQL query to show how unsubscribes are affecting login rates over time.

This is a Twitter data science interview question. Let’s say you implemented this new feature using an A/B test. You are provided with two tables: events (which includes login, nologin, and unsubscribe) and variants (which includes control or variant).

We are tasked with comparing multiple different variables at play here. There is the new notification system, along with its effect of creating more unsubscribes. We can also see how login rates compare for unsubscribes for each bucket of the A/B test.

Given that we want to measure two different changes, we know we have to use GROUP BY for the two variables: date and bucket variant. What comes next?
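One possible next step is sketched below, using Python's built-in sqlite3 module so the query can be run end to end. The column names (user_id, created_at, action, variant) and the sample rows are assumptions, since the prompt only names the two tables. The query also simplifies the problem by treating anyone who ever unsubscribed as an unsubscriber, regardless of when it happened.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE events (user_id INTEGER, created_at TEXT, action TEXT);
CREATE TABLE variants (user_id INTEGER, variant TEXT);
""")
# Hypothetical sample data: users 1 and 2 unsubscribe, user 3 never does.
conn.executemany("INSERT INTO events VALUES (?, ?, ?)", [
    (1, "2024-01-01", "login"), (1, "2024-01-01", "unsubscribe"),
    (1, "2024-01-02", "nologin"),
    (2, "2024-01-01", "login"), (2, "2024-01-02", "login"),
    (2, "2024-01-02", "unsubscribe"),
    (3, "2024-01-01", "login"), (3, "2024-01-02", "login"),
])
conn.executemany("INSERT INTO variants VALUES (?, ?)", [
    (1, "variant"), (2, "control"), (3, "control"),
])

QUERY = """
WITH unsubscribed AS (
    SELECT DISTINCT user_id FROM events WHERE action = 'unsubscribe'
)
SELECT v.variant,
       e.created_at AS day,
       AVG(CASE WHEN e.action = 'login' THEN 1.0 ELSE 0.0 END) AS login_rate
FROM events e
JOIN variants v ON v.user_id = e.user_id
JOIN unsubscribed u ON u.user_id = e.user_id
WHERE e.action IN ('login', 'nologin')
GROUP BY v.variant, e.created_at
ORDER BY v.variant, e.created_at
"""
login_rates = conn.execute(QUERY).fetchall()
for row in login_rates:
    print(row)
```

A fuller answer would likely also compare login rates before and after each user's unsubscribe date, and include non-unsubscribers as a baseline.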

8. Write a query to disprove the hypothesis: Data scientists who switch jobs more often end up getting promoted faster.

More context: You are provided with a table of user experiences representing each person’s past work experiences and timelines.

This question requires a bit of creative problem-solving to understand how we can prove or disprove the hypothesis. The hypothesis is that a data scientist who switches jobs more often gets promoted faster.

Therefore, in analyzing this dataset, we can test this hypothesis by separating the data scientists into segments based on how often they have switched jobs in their careers.

For example, if we looked at the number of job switches for data scientists who have been in the field for five years, the hypothesis would be supported if the share of data science managers rose with the number of job switches, and disproved if it stayed flat or fell:

- Never switched jobs: 10% are managers

- Switched jobs once: 20% are managers

- Switched jobs twice: 30% are managers

- Switched jobs three times: 40% are managers
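The segmentation above could be computed with a query along these lines, run here through sqlite3. The table layout (one row per job, with user_id and title) and the sample rows are assumptions. The sketch also counts every additional row as a job switch and ignores tenure, both of which a real answer would need to handle.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE user_experiences (user_id INTEGER, title TEXT)")
# Hypothetical sample data: one row per job held.
conn.executemany("INSERT INTO user_experiences VALUES (?, ?)", [
    (1, "data scientist"),
    (2, "data scientist"), (2, "data science manager"),
    (3, "data scientist"), (3, "data scientist"),
    (4, "data scientist"), (4, "data scientist"), (4, "data science manager"),
])

QUERY = """
WITH per_user AS (
    SELECT user_id,
           COUNT(*) - 1 AS job_switches,
           MAX(CASE WHEN title = 'data science manager' THEN 1 ELSE 0 END) AS is_manager
    FROM user_experiences
    GROUP BY user_id
)
SELECT job_switches,
       AVG(is_manager * 1.0) AS manager_share,
       COUNT(*) AS n_users
FROM per_user
GROUP BY job_switches
ORDER BY job_switches
"""
segments = conn.execute(QUERY).fetchall()
for row in segments:
    print(row)
```

If manager_share does not rise with job_switches once tenure is held constant, that is evidence against the hypothesis.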

9. Write a SQL query to investigate the hypothesis: Click-through rate is dependent on search result rating.

More context: You are given a table with search results on Facebook, which includes query (search term), position (the search position), and rating (human rating from 1 to 5). Each row represents a single search and includes a column has_clicked that represents whether a user clicked or not.

This question requires us to formulaically do two things: create a metric that can analyze a problem that we face and then actually compute that metric.

Think about the data we want to display to prove or disprove the hypothesis. Our output metric is CTR (click-through rate). If CTR is high when search result ratings are high and low when search result ratings are low, then our hypothesis is supported. However, if the opposite is true (CTR is low when the search result ratings are high), or there is no clear correlation between the two, then our hypothesis is not supported.

With that structure in mind, we can then look at the results split into different search rating buckets. If we measure the CTR for queries whose results are all rated 1, then for queries whose results are rated below 2, and so on, we can see whether an increase in rating is correlated with an increase in CTR.
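A simplified version of this bucketing, grouping directly on the rating column, is sketched below with sqlite3. The table name search_events and the sample rows are assumptions, while the column names follow the prompt.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE search_events "
    "(query TEXT, position INTEGER, rating INTEGER, has_clicked INTEGER)"
)
# Hypothetical sample data, invented for illustration.
conn.executemany("INSERT INTO search_events VALUES (?, ?, ?, ?)", [
    ("cats", 1, 1, 0), ("cats", 2, 1, 0), ("dogs", 1, 1, 1), ("dogs", 3, 1, 0),
    ("birds", 1, 3, 1), ("birds", 2, 3, 0),
    ("fish", 1, 5, 1), ("fish", 2, 5, 1), ("frogs", 1, 5, 1), ("frogs", 4, 5, 0),
])

QUERY = """
SELECT rating,
       AVG(has_clicked * 1.0) AS ctr,
       COUNT(*) AS n_searches
FROM search_events
GROUP BY rating
ORDER BY rating
"""
ctr_by_rating = conn.execute(QUERY).fetchall()
for row in ctr_by_rating:
    print(row)
```

Because position also influences clicks, a more careful analysis would compare CTR across ratings within the same position.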

10. How would you help a supermarket chain determine which product categories should be prioritized in their inventory restructuring efforts?

You’re working as a Data Scientist in a local grocery chain’s data science team. The business team has decided to allocate store floor space by product category (e.g., electronics, sports and travel, food and beverages). Help the team understand which product categories to prioritize as well as answering questions such as how customer demographics affect sales, and how each city’s sales per product category differs.

Modeling and Machine Learning Case Questions

Machine learning case questions assess your ability to build models to solve business problems. These questions can range from applying machine learning to solve a specific case scenario to assessing the validity of a hypothetical existing model. The modeling case study may require a candidate to evaluate and explain any part of the model building process.

11. Describe how you would build a model to predict Uber ETAs after a rider requests a ride.

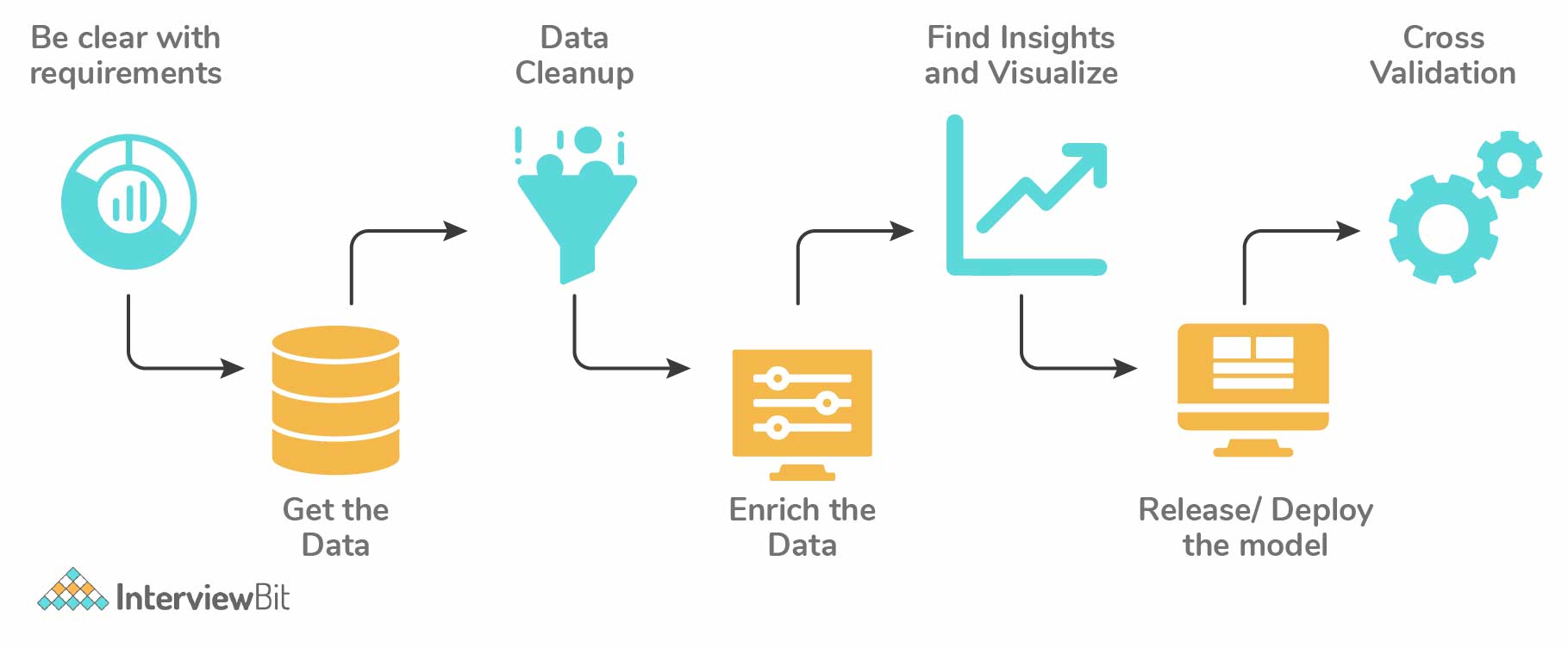

Common machine learning case study problems like this are designed to have you explain how you would build a model. Many times this can be scoped down to specific parts of the model building process. Examining the example above, we could break it up into:

- How would you evaluate the predictions of an Uber ETA model?

- What features would you use to predict the Uber ETA for ride requests?
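For the evaluation sub-question, two common regression error metrics are mean absolute error (MAE) and root mean squared error (RMSE). The sketch below computes both on invented ETA values. Whether these are the right metrics for a given ETA model is a judgment call to discuss with the interviewer.

```python
import math

def mae(actual, predicted):
    """Mean absolute error: average size of the miss, in the same units as the target."""
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)

def rmse(actual, predicted):
    """Root mean squared error: penalizes large misses more heavily than MAE."""
    return math.sqrt(sum((a - p) ** 2 for a, p in zip(actual, predicted)) / len(actual))

# Hypothetical ETAs in minutes, invented for illustration.
actual_minutes = [10.0, 12.0, 8.0, 20.0]
predicted_minutes = [9.0, 15.0, 8.0, 16.0]

print("MAE:", mae(actual_minutes, predicted_minutes))
print("RMSE:", round(rmse(actual_minutes, predicted_minutes), 3))
```

RMSE comes out larger than MAE here because the single four-minute miss dominates the squared errors.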

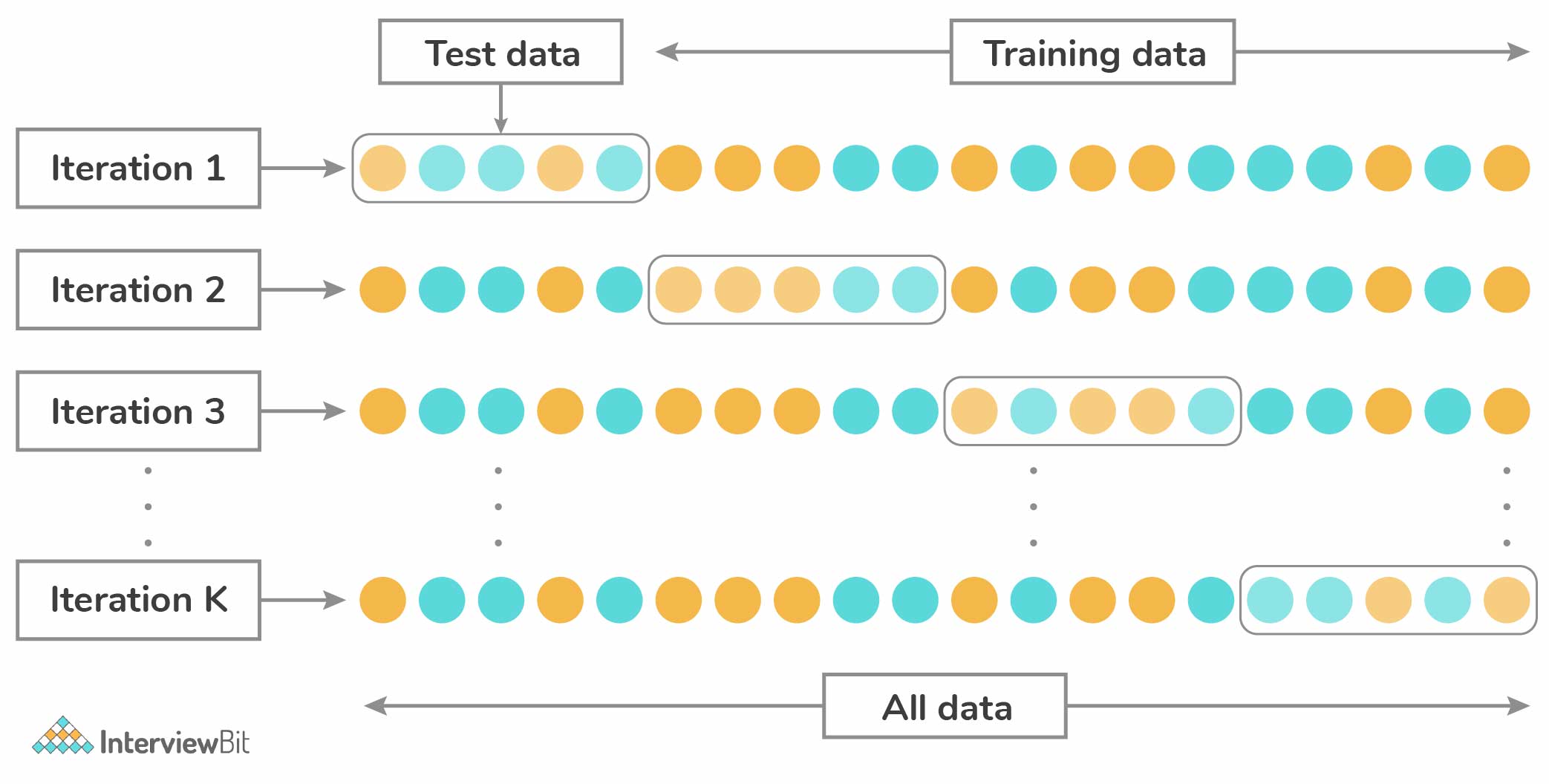

Our recommended framework breaks down a modeling and machine learning case study to individual steps in order to tackle each one thoroughly. In each full modeling case study, you will want to go over:

- Data processing

- Feature Selection

- Model Selection

- Cross Validation

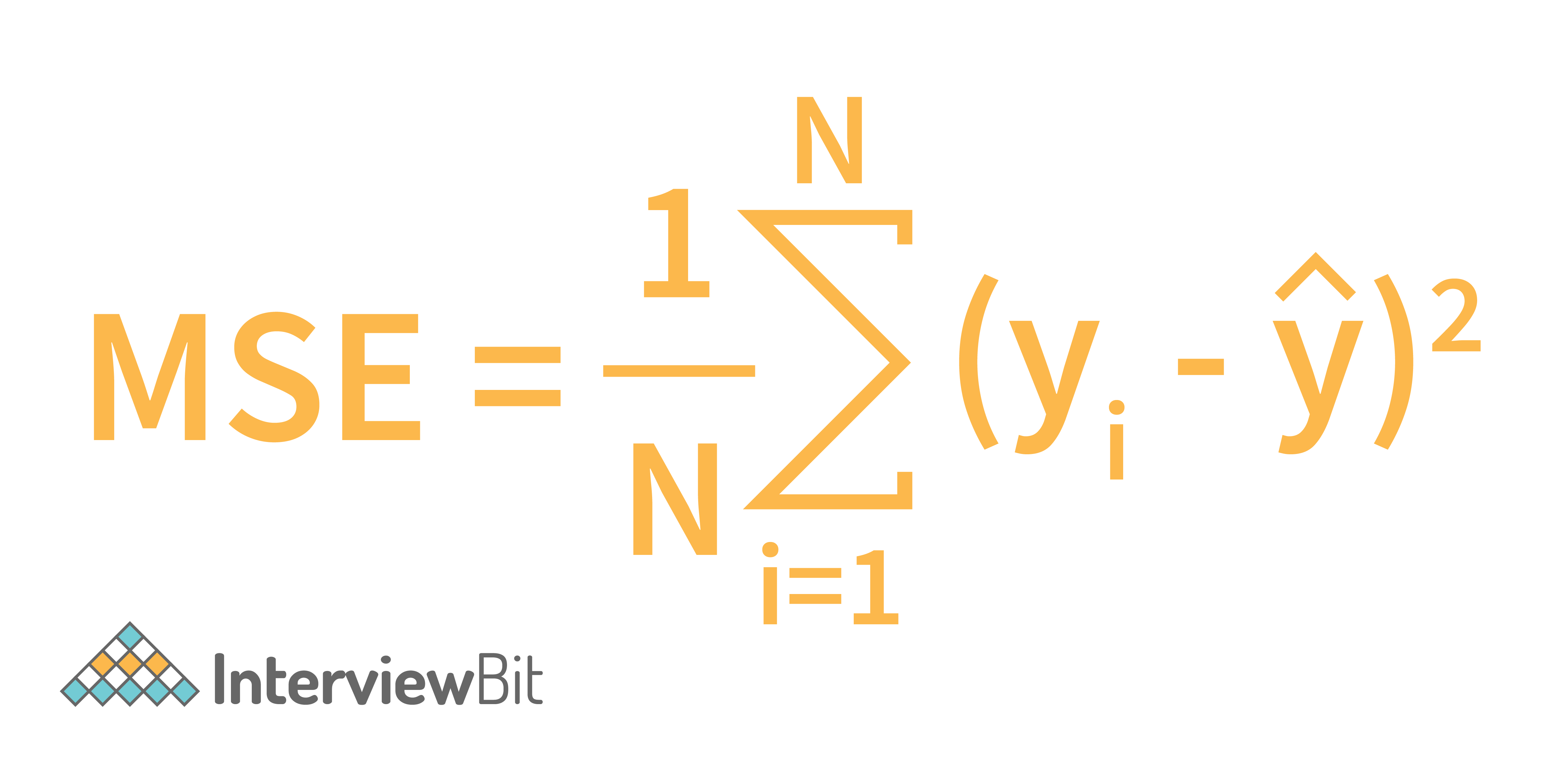

- Evaluation Metrics

- Testing and Roll Out

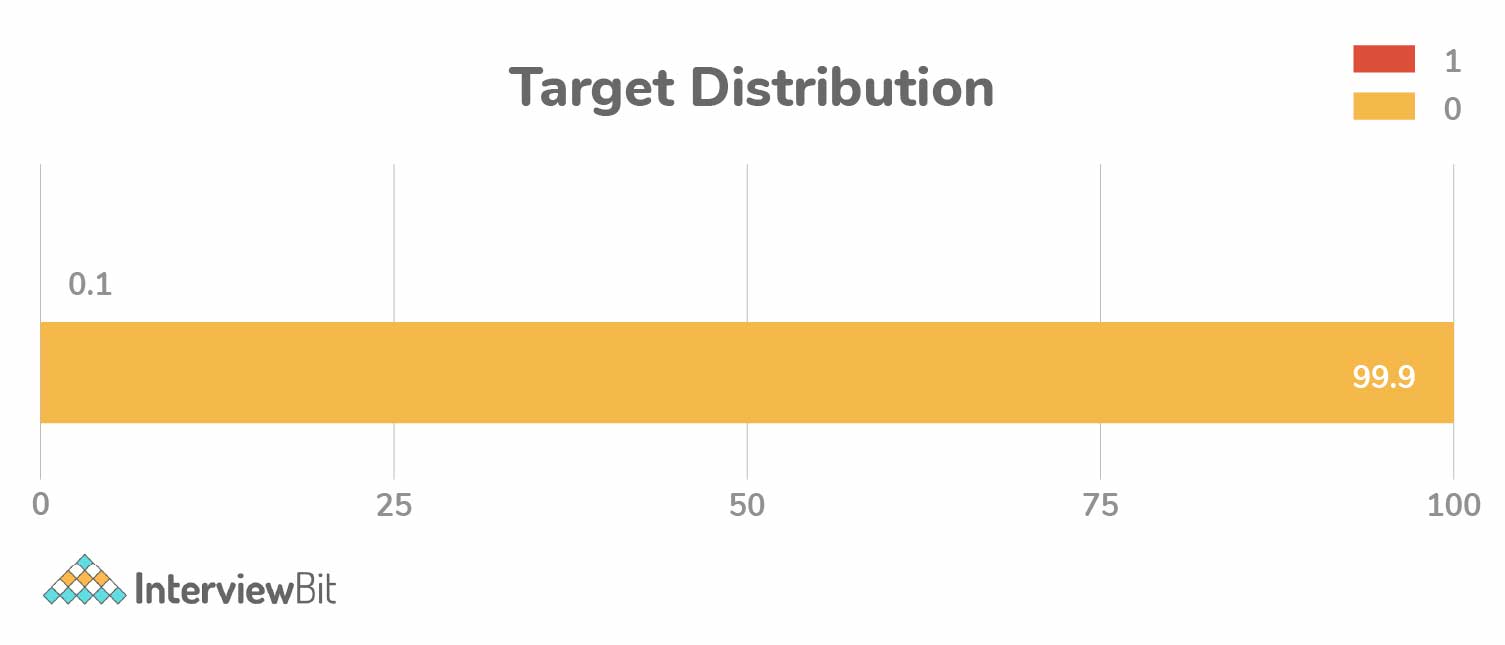

12. How would you build a model that sends bank customers a text message when fraudulent transactions are detected?

Additionally, the customer can approve or deny the transaction via text response.

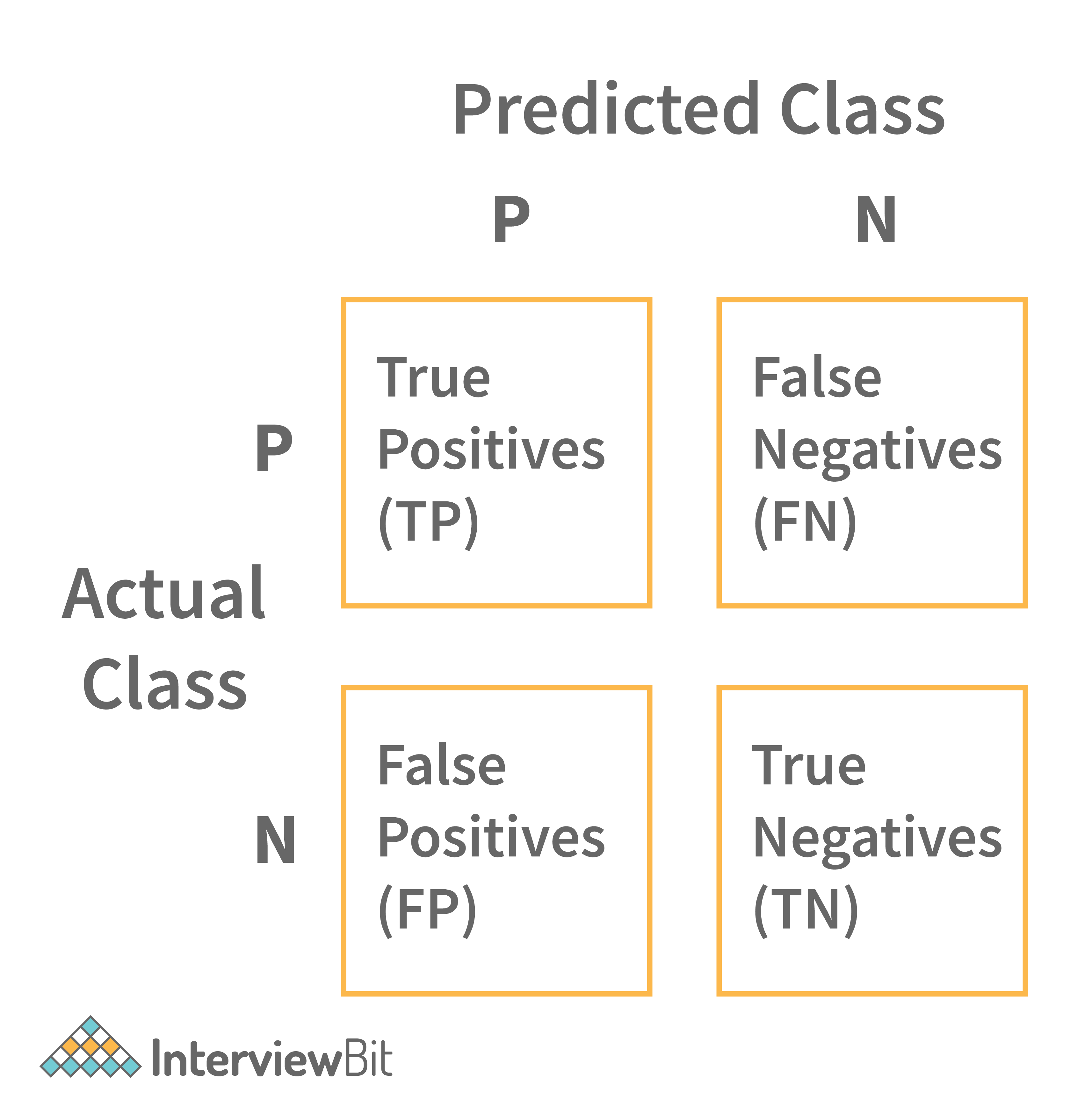

Let’s start out by understanding what kind of model would need to be built. We know that since we are working with fraud, there has to be a case where either a fraudulent transaction is or is not present.

Hint: This problem is a binary classification problem. Given the problem scenario, what considerations do we have to think about when first building this model? What would the bank fraud data look like?

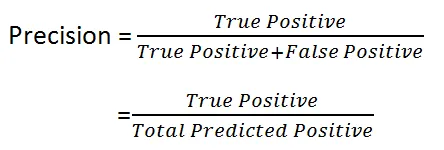

13. How would you design the inputs and outputs for a model that detects potential bombs at a border crossing?

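Additional questions: How would you test the model and measure its accuracy? Remember the equations for precision and recall:

$$\text{Precision} = \frac{TP}{TP + FP}, \qquad \text{Recall} = \frac{TP}{TP + FN}$$

Because we cannot afford false negatives (a missed bomb), recall should be high when assessing the model.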
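Precision and recall can both be computed from confusion-matrix counts. The counts below are hypothetical, chosen to show a detector that flags aggressively: precision is low, but few real threats are missed.

```python
def precision(tp, fp):
    """Share of flagged items that were real threats: TP / (TP + FP)."""
    return tp / (tp + fp)

def recall(tp, fn):
    """Share of real threats that were flagged: TP / (TP + FN)."""
    return tp / (tp + fn)

# Hypothetical confusion counts, invented for illustration.
tp, fp, fn = 8, 40, 2

print("precision:", round(precision(tp, fp), 3))
print("recall:", round(recall(tp, fn), 3))
```

In a setting like this one, many false alarms (low precision) may be an acceptable price for a high recall.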

14. Which model would you choose to predict Airbnb booking prices: Linear regression or random forest regression?

Start by answering this question: What are the main differences between linear regression and random forest?

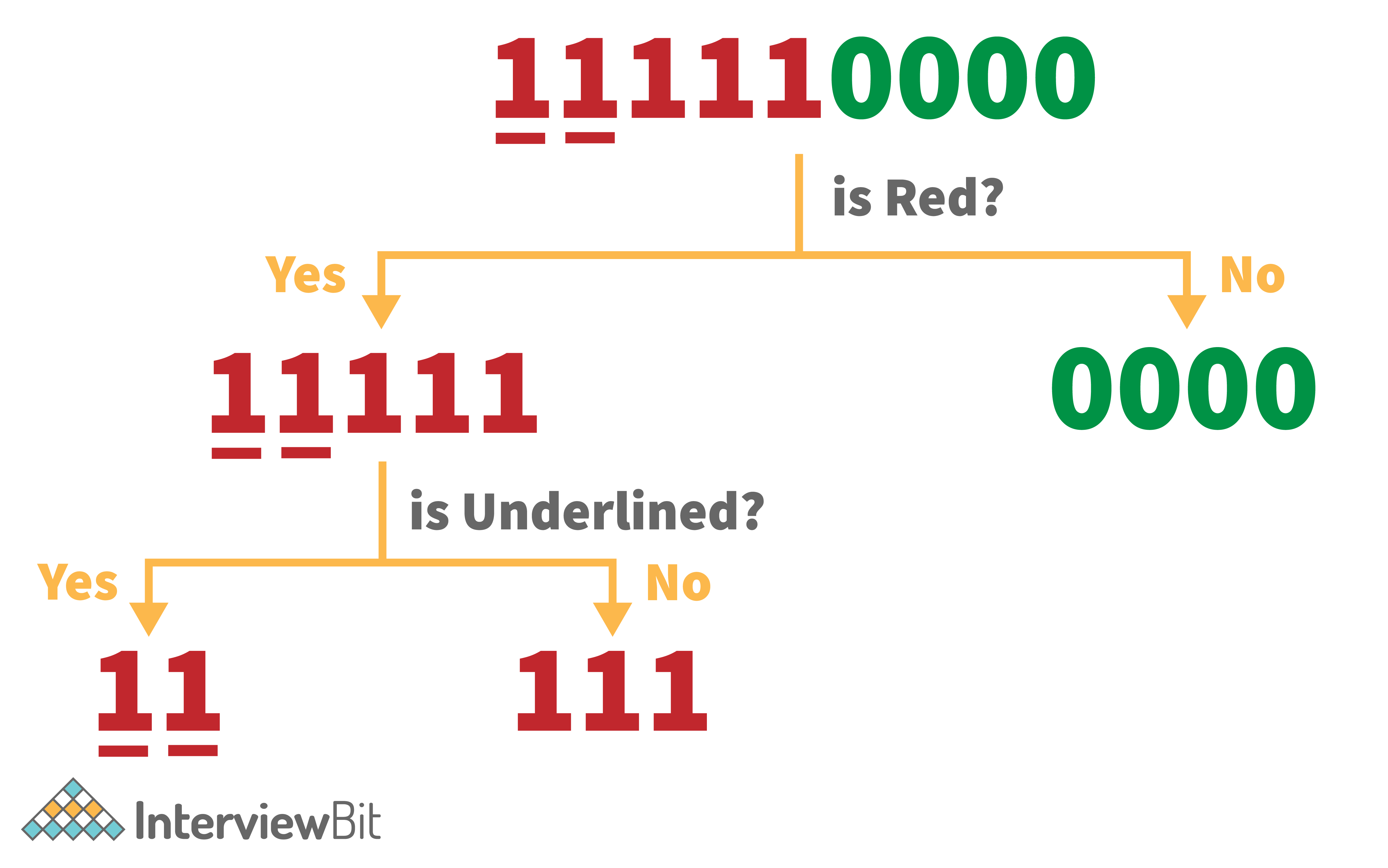

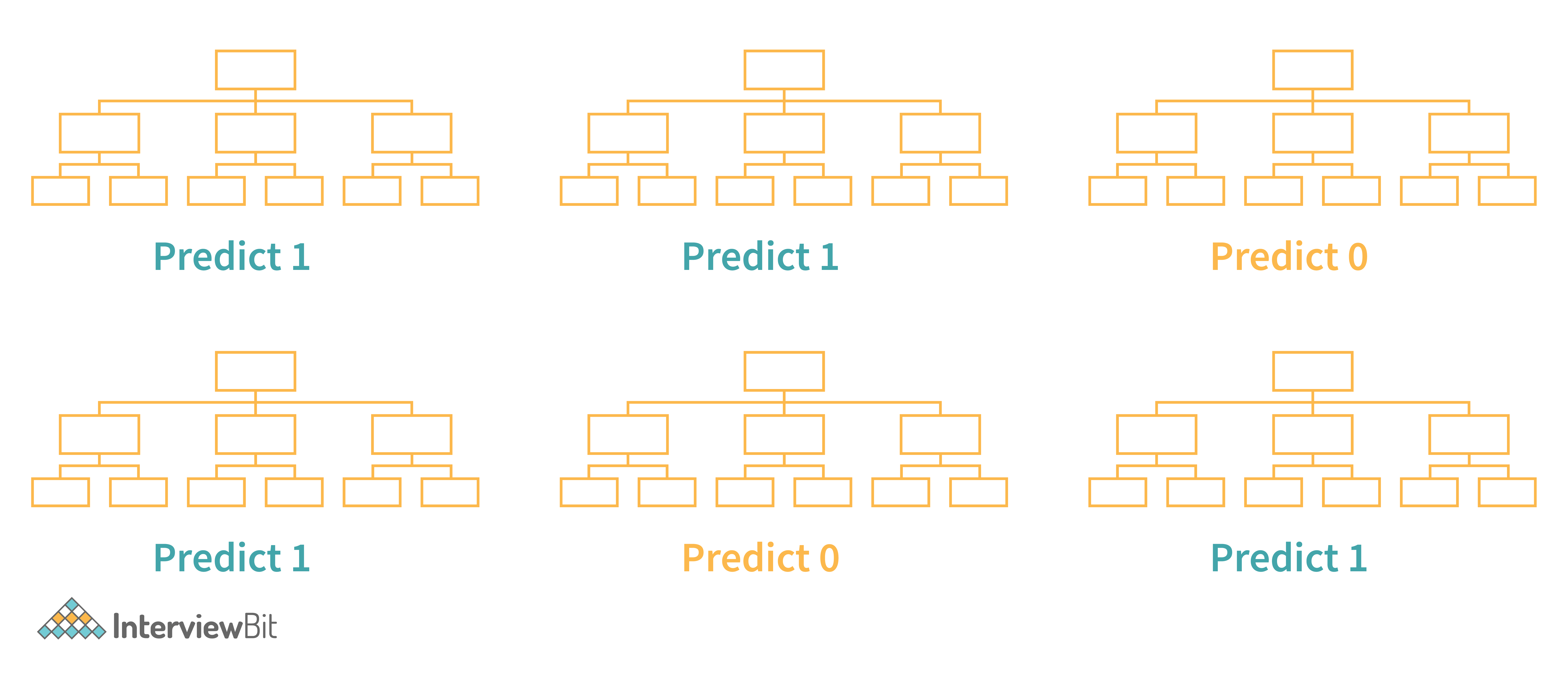

Random forest regression is based on the ensemble machine learning technique of bagging. The two key concepts of random forests are:

- Random sampling of training observations when building trees.

- Random subsets of features for splitting nodes.

Random forest regressions also discretize continuous variables, since they are based on decision trees and can split categorical and continuous variables.

Linear regression, on the other hand, is the standard regression technique in which relationships are modeled using a linear predictor function, the most common example represented as y = Ax + B.
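As a small illustration of the linear form y = Ax + B, the sketch below fits a single-feature ordinary least squares line in closed form. The bedroom counts and prices are invented, and a real pricing model would use many more features.

```python
def fit_line(xs, ys):
    """Ordinary least squares for y = A*x + B with a single feature."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    a = sxy / sxx            # slope
    b = mean_y - a * mean_x  # intercept
    return a, b

# Hypothetical listings: number of bedrooms vs. nightly price in USD.
bedrooms = [1, 2, 3, 4]
prices = [100.0, 150.0, 200.0, 250.0]

a, b = fit_line(bedrooms, prices)
print(f"price = {a:.1f} * bedrooms + {b:.1f}")
```

The fitted slope reads directly as "extra dollars per bedroom", which is the kind of interpretability a random forest does not offer as directly.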

Let’s see how each model applies to Airbnb booking prices. One thing we need to do in the interview is to understand more context around the problem of predicting prices. To do so, we need to understand which features are present in our dataset.

We can assume the dataset will have features like:

- Location features.

- Seasonality.

- Number of bedrooms and bathrooms.

- Private room, shared, entire home, etc.

- External demand (conferences, festivals, sporting events).

Which model would be the best fit for this feature set?

15. Using a binary classification model that pre-approves candidates for a loan, how would you give each rejected application a rejection reason?

More context: You do not have access to the feature weights. Start by thinking about the problem like this: How would the problem change if we had ten, one thousand, or ten thousand applicants that had gone through the loan qualification program?

Pretend that we have three people, Alice, Bob, and Candace, who have all applied for a loan. Simplifying the financial lending loan model, let us assume the only features are the total number of credit cards, the dollar amount of current debt, and credit age. Here is a scenario:

Alice: 10 credit cards, 5 years of credit age, $\$20K$ in debt

Bob: 10 credit cards, 5 years of credit age, $\$15K$ in debt

Candace: 10 credit cards, 5 years of credit age, $\$10K$ in debt

If Candace is approved while Alice and Bob are rejected, we can logically point to the fact that Candace’s $\$10K$ in debt swung the model to approve her for a loan, since debt is the only feature that differs between them. How did we reason this out?

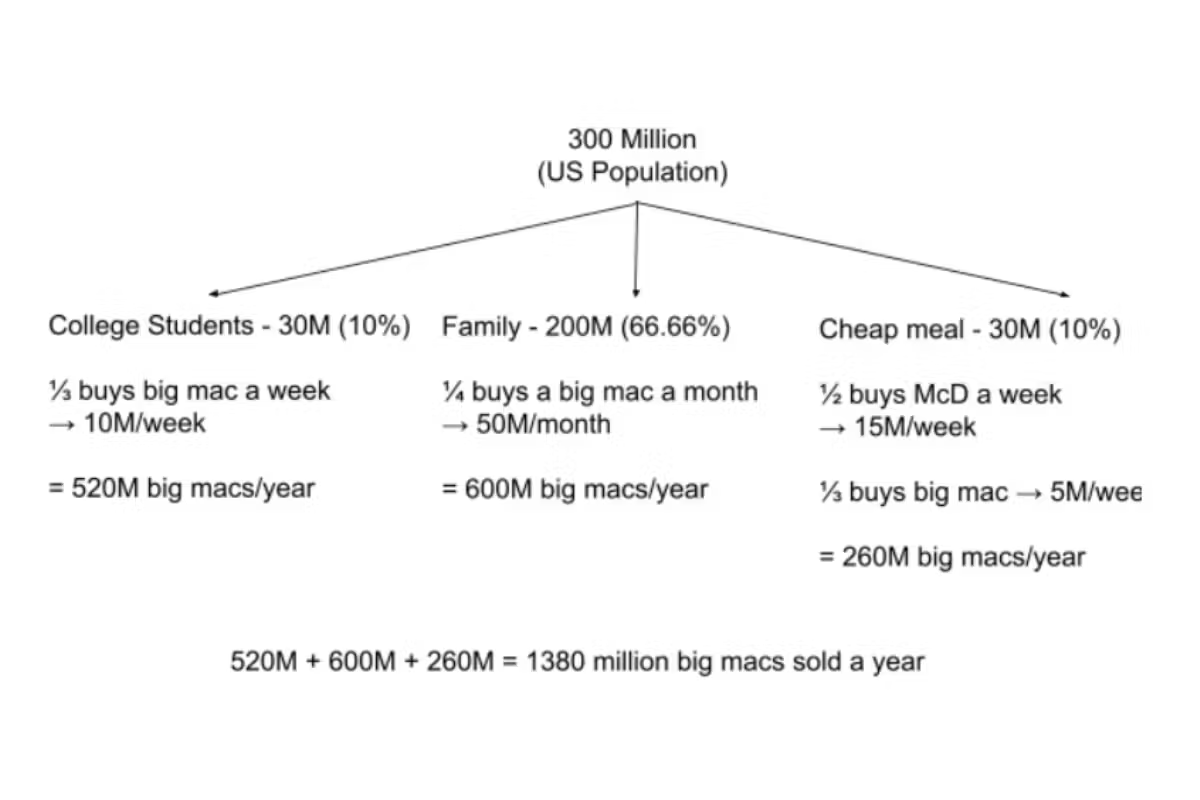

If the sample size analyzed was instead thousands of people who had the same number of credit cards and credit age with varying levels of debt, we could figure out the model’s average loan acceptance rate for each numerical amount of current debt. Then we could plot these on a graph to model the y-value (average loan acceptance) versus the x-value (dollar amount of current debt). These graphs are called partial dependence plots.
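A partial dependence curve can be sketched without access to feature weights, because it only needs the model's predictions. In the snippet below, hypothetical_model is an invented stand-in for the black-box loan model and the applicants are made up. The curve is computed the standard way: set every applicant's debt to each grid value and average the approvals.

```python
def hypothetical_model(credit_cards, credit_age_years, debt_usd):
    """Stand-in for the black-box loan model; this rule is invented for illustration."""
    return debt_usd <= 12_000 and credit_age_years >= 3

def partial_dependence_on_debt(applicants, debt_grid):
    """Average approval rate when every applicant's debt is set to each grid value."""
    curve = {}
    for debt in debt_grid:
        approvals = [hypothetical_model(cards, age, debt) for cards, age, _ in applicants]
        curve[debt] = sum(approvals) / len(approvals)
    return curve

# (credit cards, credit age in years, current debt in USD); all values hypothetical.
applicants = [(10, 5, 20_000), (10, 5, 15_000), (10, 5, 10_000), (4, 2, 8_000)]

curve = partial_dependence_on_debt(applicants, [10_000, 15_000, 20_000])
for debt, approval_rate in curve.items():
    print(debt, approval_rate)
```

A sharp drop in the curve between two debt levels suggests that debt above that threshold is a plausible rejection reason to report.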

Business Case Questions

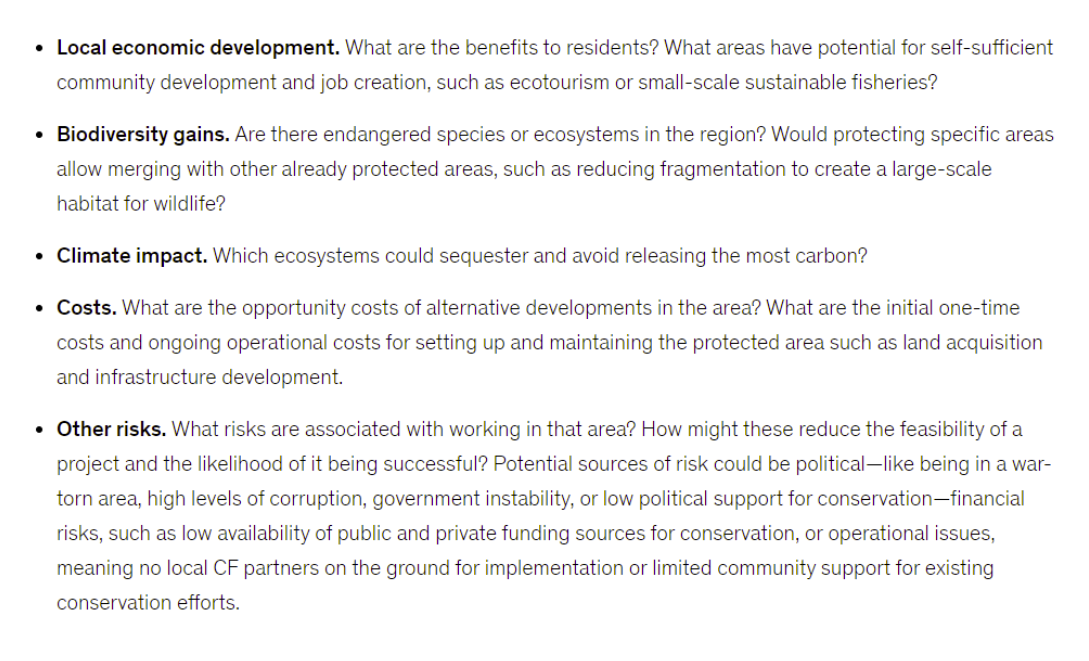

In data science interviews, business case study questions task you with addressing problems as they relate to the business. You might be asked about topics like estimation and calculation, as well as applying problem-solving to a larger case. One tip: Be sure to read up on the company’s products and ventures before your interview to expose yourself to possible topics.

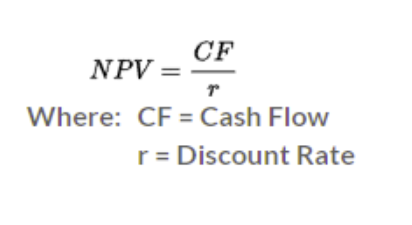

16. How would you estimate the average lifetime value of customers at a business that has existed for just over one year?

More context: You know that the product costs $\$100$ per month, averages 10% in monthly churn, and the average customer stays for 3.5 months.

Remember that lifetime value is the predicted net revenue attributed to the entire future relationship with a customer, averaged across all customers. Therefore, $\$100$ * 3.5 = $\$350$… But is it that simple?

Because this company is so new, our average customer length (3.5 months) is biased from the short possible length of time that anyone could have been a customer (one year maximum). How would you then model out LTV knowing the churn rate and product cost?
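One common way to model this, assuming churn stays constant at 10% per month, is to treat customer lifetime as geometric, so the expected lifetime is 1 / churn. The sketch below applies that assumption. It ignores discounting and costs, so it is a revenue-based estimate rather than a definitive LTV.

```python
def expected_lifetime_months(monthly_churn):
    """Mean lifetime under constant monthly churn (geometric model): 1 / churn."""
    return 1 / monthly_churn

def lifetime_value(monthly_price, monthly_churn):
    """Revenue-based LTV: monthly price times expected lifetime in months."""
    return monthly_price * expected_lifetime_months(monthly_churn)

naive_ltv = 100 * 3.5  # uses the observed (biased) 3.5-month average
ltv = lifetime_value(monthly_price=100, monthly_churn=0.10)

print("naive:", naive_ltv, "churn-based:", ltv)
```

Under this assumption the expected lifetime is 10 months, giving roughly $1,000 per customer instead of $350.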

17. How would you go about removing duplicate product names (e.g. iPhone X vs. Apple iPhone 10) in a massive database?

This is an Amazon business case question; a full video solution is available on YouTube.

18. What metrics would you monitor to know if a 50% discount promotion is a good idea for a ride-sharing company?

This question has no correct answer and is rather designed to test your reasoning and communication skills related to product/business cases. First, start by stating your assumptions. What are the goals of this promotion? It is likely that the goal of the discount is to grow revenue and increase retention. A few other assumptions you might make include:

- The promotion will be applied uniformly across all users.

- The 50% discount can only be used for a single ride.

How would we be able to evaluate this pricing strategy? An A/B test between the control group (no discount) and test group (discount) would allow us to evaluate long-term revenue vs. average cost of the promotion. Using these two metrics, how could we measure if the promotion is a good idea?
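One simple way to combine the two metrics is to compare the incremental long-term revenue per user against the average discount cost per user. The figures below are hypothetical, and revenue is assumed to be measured at list price, before the discount is subtracted.

```python
def promotion_net_value_per_user(control_revenue, test_revenue, discount_cost):
    """Incremental long-term revenue per user minus the average discount cost per user."""
    return (test_revenue - control_revenue) - discount_cost

# Hypothetical per-user averages over the measurement window, in USD.
net = promotion_net_value_per_user(
    control_revenue=120.0,  # no discount
    test_revenue=135.0,     # received the 50% discount on one ride
    discount_cost=9.0,      # average value of the discount given away
)
print("net value per user:", net)
```

A positive result suggests the promotion pays for itself, though a real test would also need a significance check on the revenue difference.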

19. A bank wants to create a new partner card (e.g., a Whole Foods Chase credit card). How would you determine what the next partner card should be?

More context: Say you have access to all customer spending data. With this question, there are several approaches you can take. As your first step, think about the business reason for credit card partnerships: they help increase acquisition and customer retention.

One of the simplest solutions would be to sum all transactions grouped by merchants. This would identify the merchants who see the highest spending amounts. However, the one issue might be that some merchants have a high-spend value but low volume. How could we counteract this potential pitfall? Is the volume of transactions even an important factor in our credit card business? The more questions you ask, the more may spring to mind.
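The aggregation described above, along with the volume check, can be sketched as follows. The merchants and amounts are invented for illustration.

```python
from collections import defaultdict

# Hypothetical card transactions: (merchant, amount in USD).
transactions = [
    ("Whole Foods", 120.0), ("Whole Foods", 80.0), ("Whole Foods", 95.0),
    ("Jeweler", 2500.0),
    ("Coffee Shop", 5.0), ("Coffee Shop", 6.0),
]

def spend_by_merchant(transactions):
    """Return {merchant: (total spend, transaction count)}."""
    totals = defaultdict(lambda: [0.0, 0])
    for merchant, amount in transactions:
        totals[merchant][0] += amount
        totals[merchant][1] += 1
    return {m: (total, count) for m, (total, count) in totals.items()}

summary = spend_by_merchant(transactions)
ranked_by_spend = sorted(summary, key=lambda m: summary[m][0], reverse=True)
ranked_by_volume = sorted(summary, key=lambda m: summary[m][1], reverse=True)

print("by spend:", ranked_by_spend)
print("by volume:", ranked_by_volume)
```

Here the jeweler tops the spend ranking on a single purchase, while the grocery store leads on volume, which illustrates the high-spend, low-volume pitfall.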

20. How would you assess the value of keeping a TV show on a streaming platform like Netflix?

Say that Netflix is working on a deal to renew the streaming rights for a show like The Office, which has been on Netflix for one year. Your job is to value the benefit of keeping the show on Netflix.

Start by trying to understand the reasons why Netflix would want to renew the show. Netflix mainly has three goals for what their content should help achieve:

- Acquisition: To increase the number of subscribers.

- Retention: To increase the retention of active subscribers and keep them on as paying members.

- Revenue: To increase overall revenue.

One solution to value the benefit would be to estimate a lower and upper bound to understand the percentage of users that would be affected by The Office being removed. You could then run these percentages against your known acquisition and retention rates.

21. How would you determine which products are to be put on sale?

Let’s say you work at Amazon. It’s nearing Black Friday, and you are tasked with determining which products should be put on sale. You have access to historical pricing and purchasing data from items that have been on sale before. How would you determine what products should go on sale to best maximize profit during Black Friday?

To start with this question, aggregate data from previous years for products that have been on sale during Black Friday or similar events. You can then compare elements such as historical sales volume, inventory levels, and profit margins.

More Data Science Interview Resources

Case studies are one of the most common types of data science interview questions. Practice with the data science course from Interview Query, which includes product and machine learning modules.

Data science case interviews (what to expect & how to prepare)

Data science case studies are tough to crack: they’re open-ended, technical, and specific to the company. Interviewers use them to test your ability to break down complex problems and your use of analytical thinking to address business concerns.

So we’ve put together this guide to help you familiarize yourself with case studies at companies like Amazon, Google, and Meta (Facebook), as well as how to prepare for them, using practice questions and a repeatable answer framework.

Here’s the first thing you need to know about tackling data science case studies: always start by asking clarifying questions before jumping into your plan.

Let’s get started.

- What to expect in data science case study interviews

- How to approach data science case studies

- Sample cases from FAANG data science interviews

- How to prepare for data science case interviews

1. What to expect in data science case study interviews

Before we get into an answer method and practice questions for data science case studies, let’s take a look at what you can expect in this type of interview.

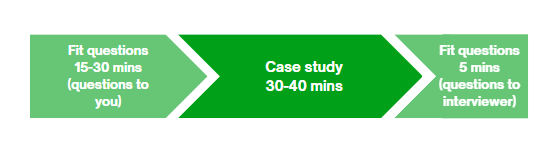

Of course, the exact interview process for data scientist candidates will depend on the company you’re applying to, but case studies generally appear in both the pre-onsite phone screens and during the final onsite or virtual loop.

These questions may take anywhere from 10 to 40 minutes to answer, depending on the depth and complexity that the interviewer is looking for. During the initial phone screens, the case studies are typically shorter and interspersed with other technical and/or behavioral questions. During the final rounds, they will likely take longer to answer and require a more detailed analysis.

While some candidates may have the opportunity to prepare in advance and present their conclusions during an interview round, most candidates work with the information the interviewer offers on the spot.

1.1 The types of data science case studies

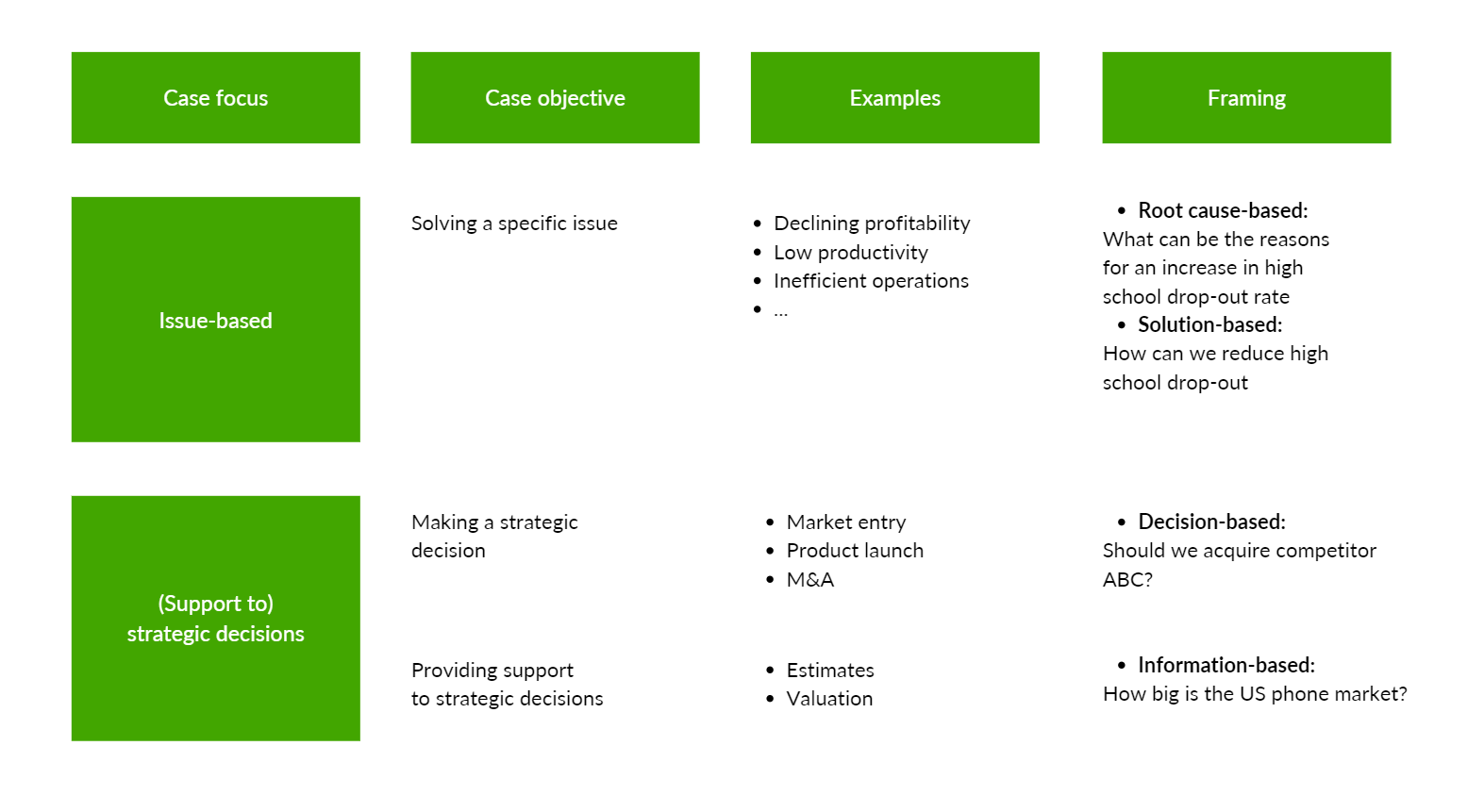

Generally, there are two types of case studies:

- Analysis cases, which focus on how you translate user behavior into ideas and insights using data. These typically center around a product, feature, or business concern that’s unique to the company you’re interviewing with.

- Modeling cases, which are more overtly technical and focus on how you build and use machine learning and statistical models to address business problems.

The number of case studies that you’ll receive in each category will depend on the company and the position that you’ve applied for. Facebook, for instance, typically doesn’t give many machine learning modeling cases, whereas Amazon does.

Also, some companies break these larger groups into smaller subcategories. For example, Facebook divides its analysis cases into two types: product interpretation and applied data.

You may also receive in-depth questions similar to case studies, which test your technical capabilities (e.g. coding, SQL).

We’ll give you a step-by-step method that can be used to answer analysis and modeling cases in section 2. But first, let’s look at how interviewers will assess your answers.

1.2 What interviewers are looking for

We’ve researched accounts from ex-interviewers and data scientists to pinpoint the main criteria that interviewers look for in your answers. While the exact grading rubric will vary per company, this list from an ex-Google data scientist is a good overview of the biggest assessment areas:

- Structure : candidate can break down an ambiguous problem into clear steps

- Completeness : candidate is able to fully answer the question

- Soundness : candidate’s solution is feasible and logical

- Clarity : candidate’s explanations and methodology are easy to understand

- Speed : candidate manages time well and is able to come up with solutions quickly

You’ll be able to improve your skills in each of these categories by practicing data science case studies on your own, and by working with an answer framework. We’ll get into that next.

2. How to approach data science case studies

Approaching data science cases with a repeatable framework will not only add structure to your answer, but also help you manage your time and think clearly under the stress of interview conditions.

Let’s go over a framework that you can use in your interviews, then break it down with an example answer.

2.1 Data science case framework: CAPER

We've researched popular frameworks used by real data scientists, and consolidated them to be as memorable and useful in an interview setting as possible.

Try using the framework below to structure your thinking during the interview.

- Clarify : Start by asking questions. Case questions are ambiguous, so you’ll need to gather more information from the interviewer, while eliminating irrelevant data. The types of questions you’ll ask will depend on the case, but consider: what is the business objective? What data can I access? Should I focus on all customers or just in X region?

- Assume : Narrow the problem down by making assumptions and stating them to the interviewer for confirmation. (E.g. the statistical significance is X%, users are segmented based on XYZ, etc.) By the end of this step you should have constrained the problem into a clear goal.

- Plan : Now, begin to craft your solution. Take time to outline a plan, breaking it into manageable tasks. Once you’ve made your plan, explain each step that you will take to the interviewer, and ask if it sounds good to them.

- Execute : Carry out your plan, walking through each step with the interviewer. Depending on the type of case, you may have to prepare and engineer data, code, apply statistical algorithms, build a model, etc. In the majority of cases, you will need to end with business analysis.

- Review : Finally, tie your final solution back to the business objectives you and the interviewer had initially identified. Evaluate your solution, and whether there are any steps you could have added or removed to improve it.

Now that you’ve seen the framework, let’s take a look at how to implement it.

2.2 Sample answer using the CAPER framework

Below you’ll find an answer to a Facebook data science interview question from the Applied Data loop. This is an example that comes from Facebook’s data science interview prep materials, which you can find here.

Try this question:

Imagine that Facebook is building a product around high schools, starting with about 300 million users who have filled out a field with the name of their current high school. How would you find out how much of this data is real?

1. Clarify

First, we need to clarify the question, eliminating irrelevant data and pinpointing what is the most important. For example:

- What exactly does “real” mean in this context?

- Should we focus on whether the high school itself is real, or whether the user actually attended the high school they’ve named?

After discussing with the interviewer, we’ve decided to focus on whether the high school itself is real first, followed by whether the user actually attended the high school they’ve named.

2. Assume

Next, we’ll narrow the problem down and state our assumptions to the interviewer for confirmation. Here are some assumptions we could make in the context of this problem:

- The 300 million users are likely teenagers, given that they’re listing their current high school

- We can assume that a high school that is listed too few times is likely fake

- We can assume that a high school that is listed too many times (e.g. 10,000+ students) is likely fake

The interviewer has agreed with each of these assumptions, so we can now move on to the plan.

3. Plan

Next, it’s time to make a list of actionable steps and lay them out for the interviewer before moving on.

First, there are two approaches that we can identify:

- A high precision approach, which provides a list of people who definitely went to a confirmed high school

- A high recall approach, more similar to market sizing, which would provide a ballpark figure of people who went to a confirmed high school

As this is for a product that Facebook is currently building, the product use case likely calls for an estimate that is as accurate as possible. So we can go for the first approach, which will provide a more precise estimate of confirmed users listing a real high school.

Now, we list the steps that make up this approach:

- To find whether a high school is real: Draw a distribution with the number of students on the X axis, and the number of high schools on the Y axis, in order to find and eliminate the lower and upper bounds

- To find whether a student really went to a high school: use a user’s friend graph and location to determine the plausibility of the high school they’ve named

The interviewer has approved the plan, which means that it’s time to execute.

4. Execute

Step 1: Determining whether a high school is real

Going off of our plan, we’ll first start with the distribution.

We can use x1 to denote the lower bound, below which the number of times a high school is listed would be too small for a plausible school. x2 then denotes the upper bound, above which the high school has been listed too many times for a plausible school.

[Figure: distribution of high schools by number of students listing them, with the lower bound x1 and the upper bound x2 marked]

Be prepared to answer follow-up questions. In this case, the interviewer may ask, “looking at this graph, what do you think x1 and x2 would be?”

Based on this distribution, we could say that x1 is approximately the 5th percentile, or somewhere around 100 students. So, out of 300 million students, if fewer than 100 students list “Applebee” high school, then this is most likely not a real high school.

x2 is likely around the 95th percentile, or potentially as high as the 99th percentile. Based on intuition, we could estimate that number around 10,000. So, if more than 10,000 students list “Applebee” high school, then this is most likely not real.

At this point, the interviewer may ask more follow-up questions, such as “how do we account for different high schools that share the same name?”

In this case, we could group by the schools’ name and location, rather than name alone. If the high school does not have a dedicated page that lists its location, we could deduce its location based on the city of the user that lists it.
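
As a rough illustration of Step 1, the sketch below counts how often each (school name, city) pair is listed and keeps only the pairs whose count falls between the two bounds. The function name `plausible_schools`, the input format, and the toy data are hypothetical; the defaults of 100 and 10,000 are the x1 and x2 estimates discussed above, and the toy call scales them down to keep the example small.

```python
from collections import Counter


def plausible_schools(listings, lower=100, upper=10_000):
    """Return {(school, city): count} for schools listed between lower and upper times.

    `listings` is an iterable of (school_name, city) pairs, one per user.
    `lower` and `upper` play the role of x1 and x2 in the text.
    """
    # Group by name AND location so that same-named schools in different
    # cities are counted separately.
    counts = Counter(
        (name.strip().lower(), city.strip().lower()) for name, city in listings
    )
    return {key: n for key, n in counts.items() if lower <= n <= upper}


# Hypothetical toy data; thresholds are scaled down for readability.
toy_listings = (
    [("Lincoln High", "Portland")] * 5
    + [("Lincoln High", "Seattle")] * 4
    + [("Applebee", "Nowhere")] * 1   # listed too few times
    + [("Hogwarts", "London")] * 50   # listed too many times
)
kept = plausible_schools(toy_listings, lower=3, upper=20)
print(sorted(kept))
```

In a real setting the bounds would be read off the observed distribution (for example, chosen percentiles) rather than hard-coded.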

Step 2: Determining whether a user went to the high school

A strong signal as to whether a user attended a specific high school would be their friend graph: a set number of friends would have to have listed the same current high school. For now, we’ll set that number at five friends.

Don’t forget to call out trade-offs and edge cases as you go. In this case, there could be a student who has recently moved, and so the high school they’ve listed does not reflect their actual current high school.

To solve this, we could rely on users to update their location to reflect the change. If users do not update their location and high school, this would present an edge case that we would need to work out later.

5. Review

To conclude, we could use the data from both the friend graph and the initial distribution to confirm the two signifiers: a high school is real, and the user really went there.

If enough users in the same location list the same high school, then it is likely that the high school is real, and that the users really attend it. If there are not enough users in the same location that list the same high school, then it is likely that the high school is not real, and the users do not actually attend it.
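
The friend-graph check from Step 2 can be sketched in a few lines. This is only an illustration under assumptions: `school_of` and `friends_of` are hypothetical dictionaries standing in for profile and friend-graph data, and the threshold of five friends is the provisional number chosen above.

```python
def attends_listed_school(user, school_of, friends_of, min_friends=5):
    """Return True if at least `min_friends` of the user's friends list the same school.

    school_of:  dict mapping user -> (school_name, city), hypothetical profile data
    friends_of: dict mapping user -> list of friend ids, hypothetical friend graph
    """
    school = school_of.get(user)
    if school is None:
        return False  # the user has not listed a school at all
    matching = sum(
        1 for friend in friends_of.get(user, ()) if school_of.get(friend) == school
    )
    return matching >= min_friends


# Hypothetical toy data.
lincoln = ("lincoln high", "portland")
garfield = ("garfield high", "seattle")
school_of = {"ana": lincoln, "bo": garfield, "g1": garfield, "g2": garfield}
school_of.update({f"f{i}": lincoln for i in range(1, 6)})
friends_of = {
    "ana": ["f1", "f2", "f3", "f4", "f5"],  # five friends at the same school
    "bo": ["g1", "g2", "f1"],               # only two friends at the same school
}

print(attends_listed_school("ana", school_of, friends_of))
print(attends_listed_school("bo", school_of, friends_of))
```

A user who recently moved, as in the edge case above, would fail this check until their friend graph catches up, which is one reason to treat the threshold as tunable.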

3. Sample cases from FAANG data science interviews

Having worked through the sample problem above, try out the different kinds of case studies that have been asked in data science interviews at FAANG companies. We’ve divided the questions into types of cases, as well as by company.

For more information about each of these companies’ data science interviews, take a look at these guides:

- Facebook data scientist interview guide

- Amazon data scientist interview guide

- Google data scientist interview guide

Now let’s get into the questions. This is a selection of real data scientist interview questions, according to data from Glassdoor.

Data science case studies

Facebook - Analysis (product interpretation)

- How would you measure the success of a product?

- What KPIs would you use to measure the success of the newsfeed?

- Friends acceptance rate decreases 15% after a new notifications system is launched - how would you investigate?

Facebook - Analysis (applied data)

- How would you evaluate the impact for teenagers when their parents join Facebook?

- How would you decide to launch or not if engagement within a specific cohort decreased while all the rest increased?

- How would you set up an experiment to understand feature change in Instagram stories?

Amazon - modeling

- How would you improve a classification model that suffers from low precision?

- When you have time series data by month, and it has large data records, how will you find significant differences between this month and previous month?

Google - Analysis

- You have a google app and you make a change. How do you test if a metric has increased or not?

- How do you detect viruses or inappropriate content on YouTube?

- How would you compare if upgrading the android system produces more searches?

4. How to prepare for data science case interviews

Understanding the process and learning a method for data science cases will go a long way in helping you prepare. But this information is not enough to land you a data science job offer.

To succeed in your data scientist case interviews, you're also going to need to practice under realistic interview conditions so that you'll be ready to perform when it counts.

For more information on how to prepare for data science interviews as a whole, take a look at our guide on data science interview prep.

4.1 Practice on your own

Start by answering practice questions alone. You can use the list in section 3, and interview yourself out loud. This may sound strange, but it will significantly improve the way you communicate your answers during an interview.

Play the role of both the candidate and the interviewer, asking questions and answering them, just like two people would in an interview. This will help you get used to the answer framework and to answering data science cases in a structured way.

4.2 Practice with peers

Once you’re used to answering questions on your own, a great next step is to do mock interviews with friends or peers. This will help you adapt your approach to accommodate follow-ups and answer questions you haven’t already worked through.

This can be especially helpful if your friend has experience with data scientist interviews, or is at least familiar with the process.

4.3 Practice with ex-interviewers

Finally, you should also try to practice data science mock interviews with expert ex-interviewers, as they’ll be able to give you much more accurate feedback than friends and peers.

If you know a data scientist or someone who has experience running interviews at a big tech company, then that's fantastic. But for most of us, it's tough to find the right connections to make this happen. And it might also be difficult to practice multiple hours with that person unless you know them really well.

Here's the good news. We've already made the connections for you. We’ve created a coaching service where you can practice 1-on-1 with ex-interviewers from leading tech companies. Learn more and start scheduling sessions today.

Data Science Case Study Interview: Your Guide to Success

by Sam McKay, CFA | Careers

Ready to crush your next data science interview? Well, you’re in the right place.

This type of interview is designed to assess your problem-solving skills, technical knowledge, and ability to apply data-driven solutions to real-world challenges.

So, how can you master these interviews and secure your next job?

To master your data science case study interview:

Practice Case Studies: Engage in mock scenarios to sharpen problem-solving skills.

Review Core Concepts: Brush up on algorithms, statistical analysis, and key programming languages.

Contextualize Solutions: Connect findings to business objectives for meaningful insights.

Clear Communication: Present results logically and effectively using visuals and simple language.

Adaptability and Clarity: Stay flexible and articulate your thought process during problem-solving.

This article will delve into each of these points and give you additional tips and practice questions to get you ready to crush your upcoming interview!

After you’ve read this article, you can enter the interview ready to showcase your expertise and win your dream role.

Let’s dive in!

What to Expect in the Interview?

Data science case study interviews are an essential part of the hiring process. They give interviewers a glimpse of how you approach real-world business problems and demonstrate your analytical thinking, problem-solving, and technical skills.

Furthermore, case study interviews are typically open-ended, which means you’ll be presented with a problem that doesn’t have a right or wrong answer.

Instead, you are expected to demonstrate your ability to:

Break down complex problems

Make assumptions

Gather context

Provide data points and analysis

This type of interview allows your potential employer to evaluate your creativity, technical knowledge, and attention to detail.

But what topics will the interview touch on?

Topics Covered in Data Science Case Study Interviews

In a case study interview, you can expect inquiries that cover a spectrum of topics crucial to evaluating your skill set:

Topic 1: Problem-Solving Scenarios

In these interviews, your ability to resolve genuine business dilemmas using data-driven methods is essential.

These scenarios reflect authentic challenges, demanding analytical insight, decision-making, and problem-solving skills.

Real-world Challenges: Expect scenarios like optimizing marketing strategies, predicting customer behavior, or enhancing operational efficiency through data-driven solutions.

Analytical Thinking: Demonstrate your capacity to break down complex problems systematically, extracting actionable insights from intricate issues.

Decision-making Skills: Showcase your ability to make informed decisions, emphasizing instances where your data-driven choices optimized processes or led to strategic recommendations.

Your adeptness at leveraging data for insights, analytical thinking, and informed decision-making defines your capability to provide practical solutions in real-world business contexts.

Topic 2: Data Handling and Analysis

Data science case studies assess your proficiency in data preprocessing, cleaning, and deriving insights from raw data.

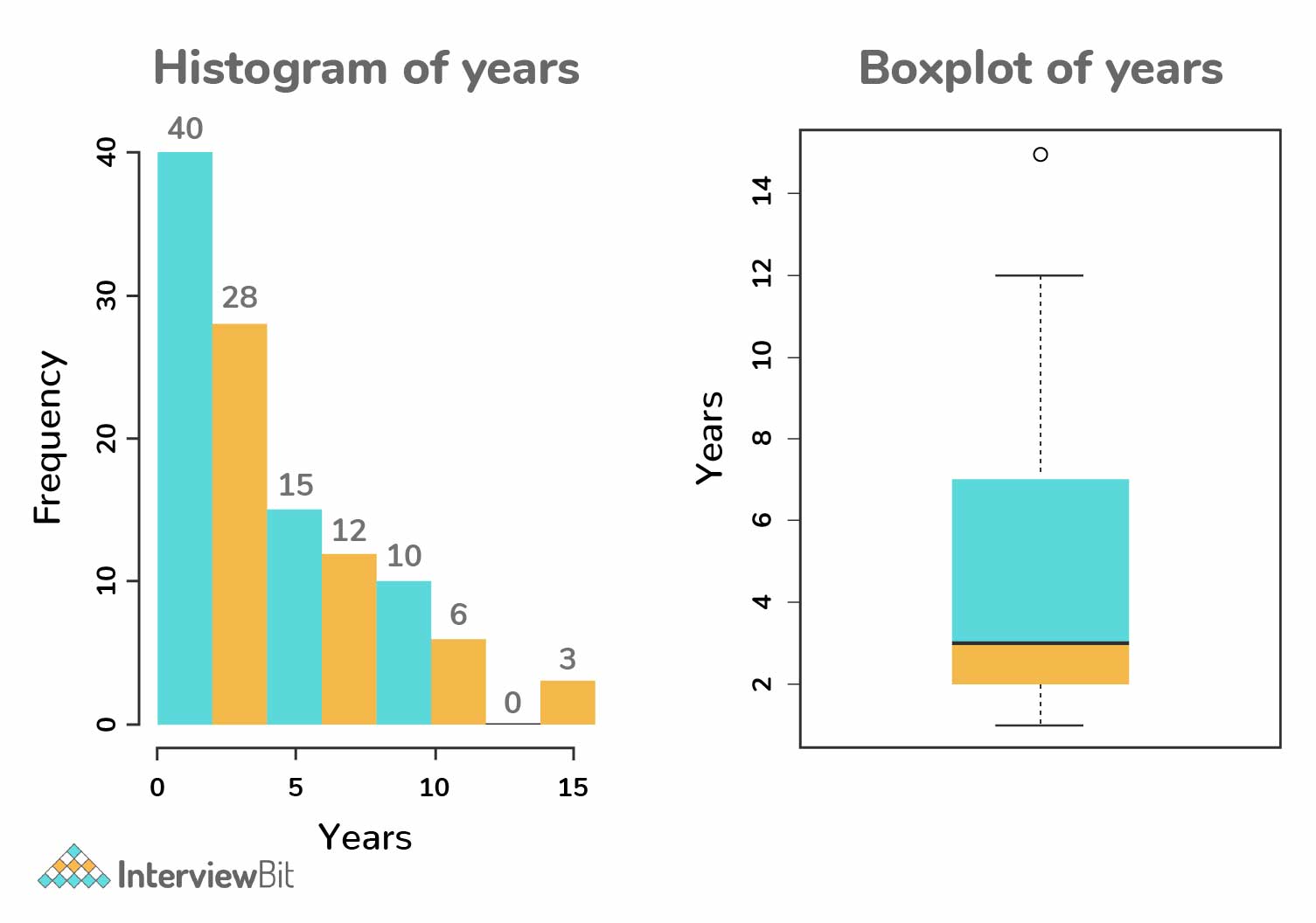

Data Collection and Manipulation: Prepare for data engineering questions involving data collection, handling missing values, cleaning inaccuracies, and transforming data for analysis.

Handling Missing Values and Cleaning Data: Showcase your skills in managing missing values and ensuring data quality through cleaning techniques.

Data Transformation and Feature Engineering: Highlight your expertise in transforming raw data into usable formats and creating meaningful features for analysis.

Mastering data preprocessing—managing, cleaning, and transforming raw data—is fundamental. Your proficiency in these techniques showcases your ability to derive valuable insights essential for data-driven solutions.

Topic 3: Modeling and Feature Selection

Data science case interviews prioritize your understanding of modeling and feature selection strategies.

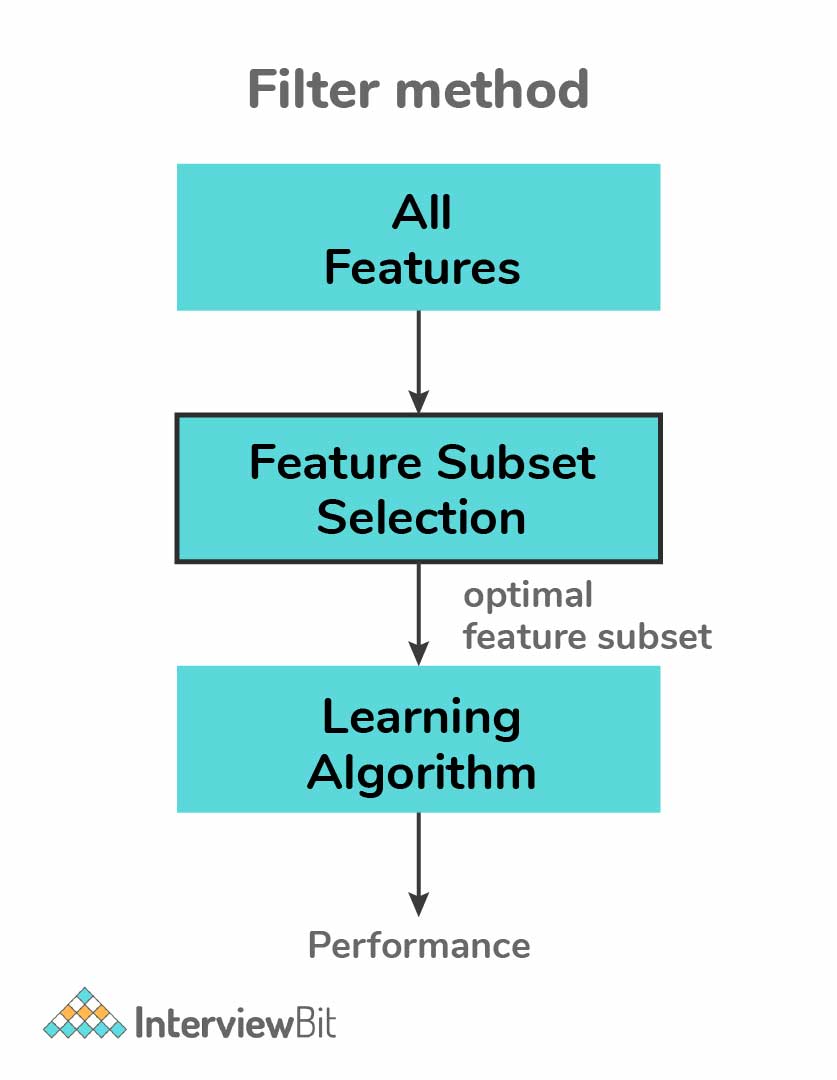

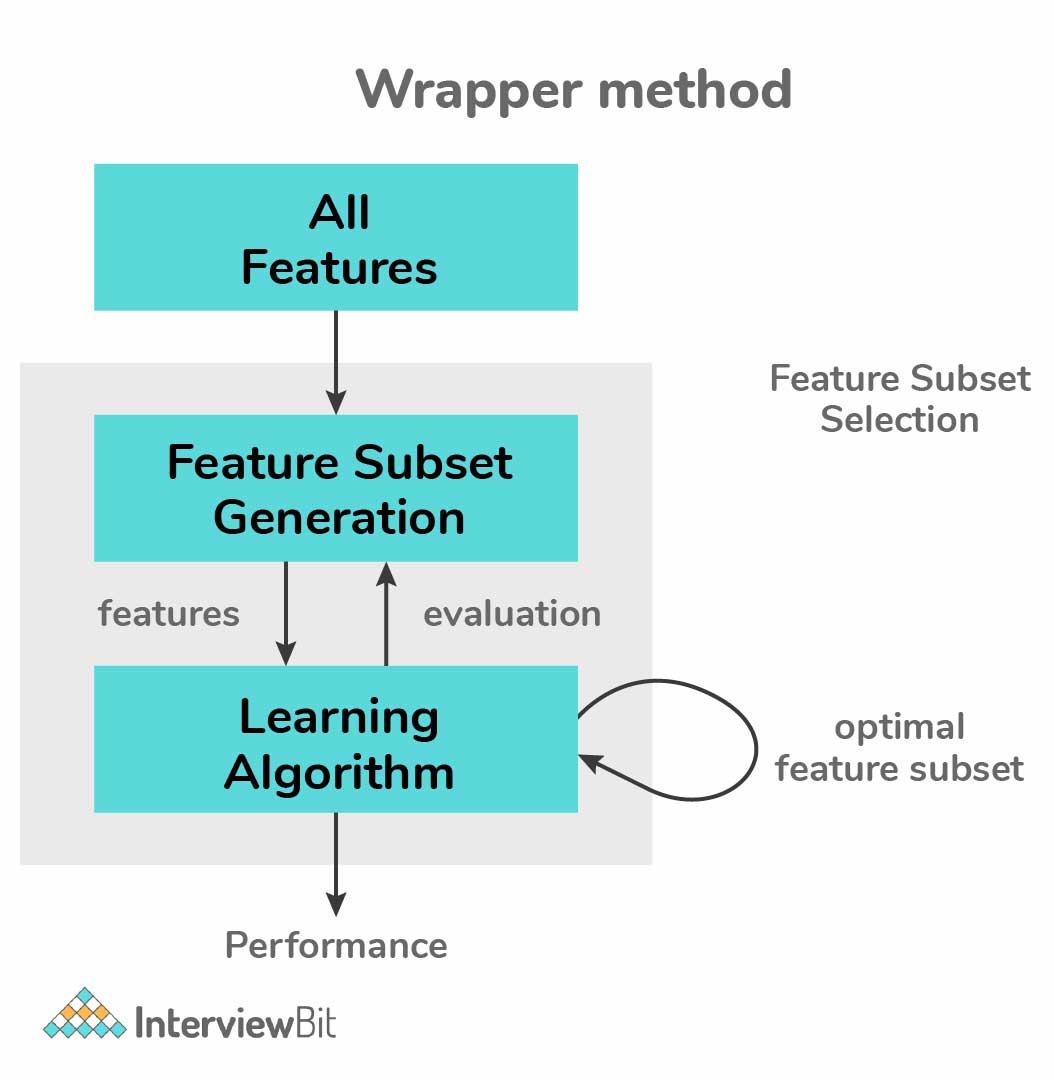

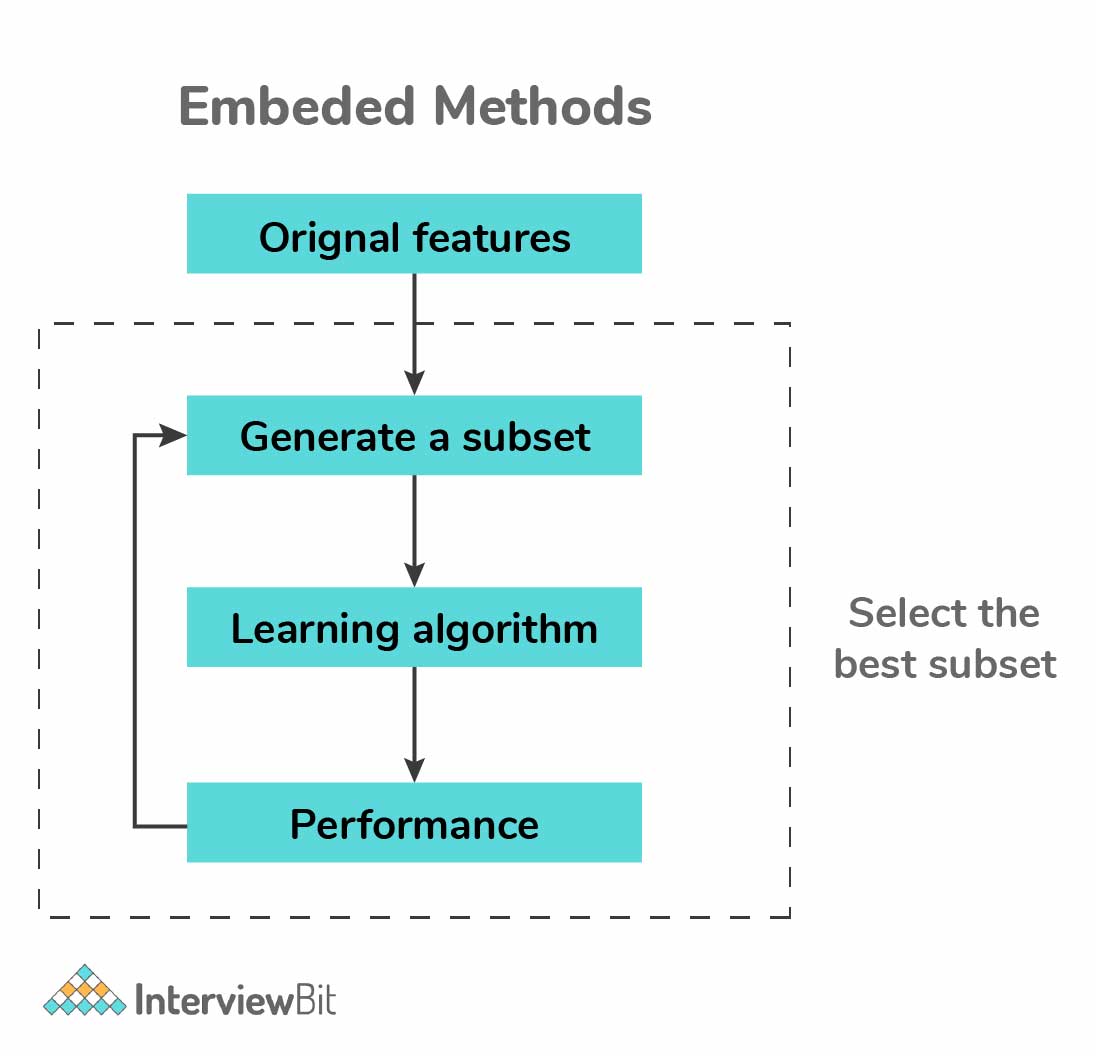

Model Selection and Application: Highlight your prowess in choosing appropriate models, explaining your rationale, and showcasing implementation skills.

Feature Selection Techniques: Understand the importance of selecting relevant variables and methods, such as correlation coefficients, to enhance model accuracy.

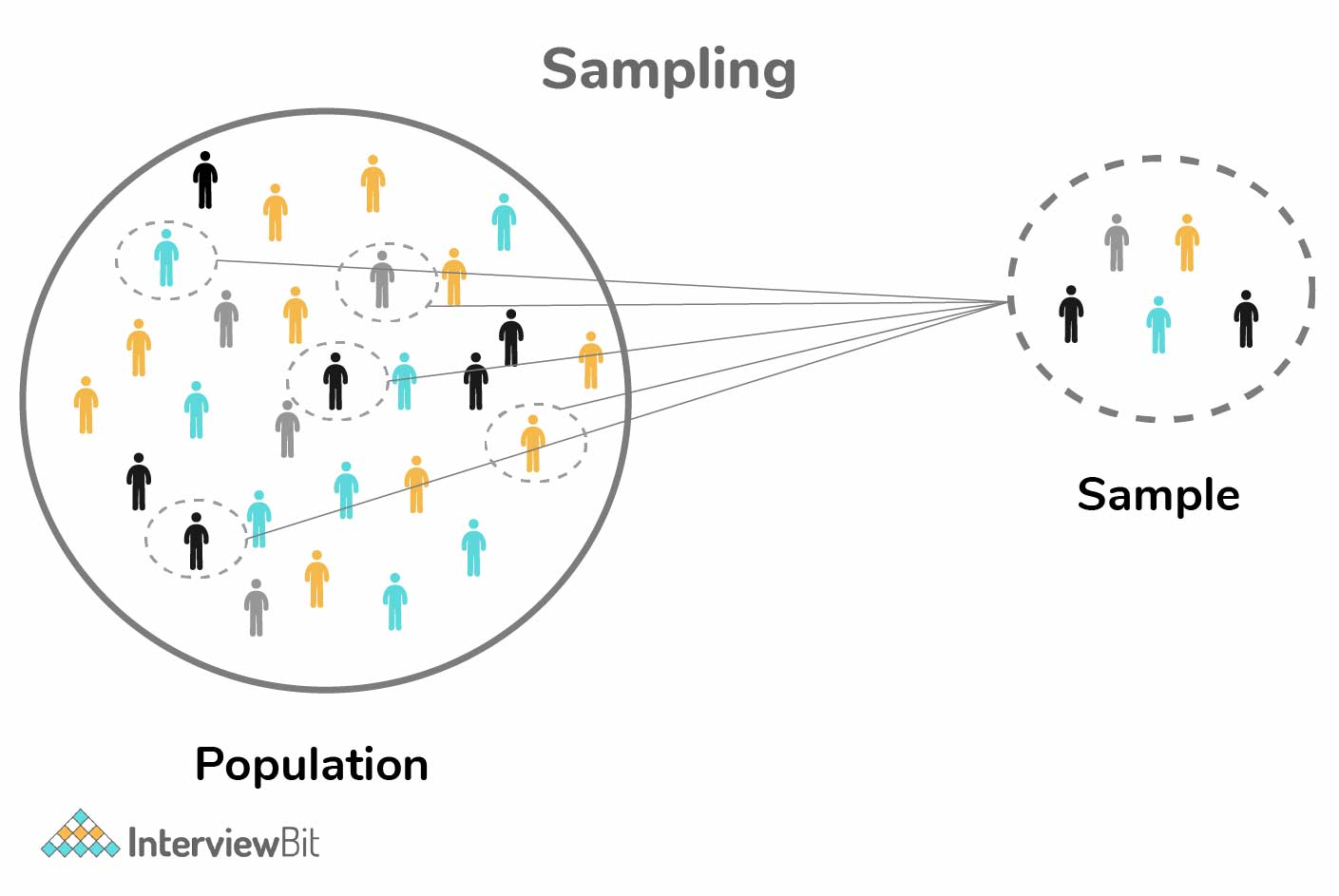

Ensuring Robustness through Random Sampling: Consider techniques like random sampling to bolster model robustness and generalization abilities.

Excel in modeling and feature selection by understanding contexts, optimizing model performance, and employing robust evaluation strategies.
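
To make the correlation-based feature selection mentioned above concrete, here is a minimal sketch that ranks candidate features by the absolute value of their Pearson correlation with the target. The function names and the toy columns are hypothetical, and Pearson correlation only captures linear association, so treat the ranking as a first screening step rather than a complete selection method.

```python
import math


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length numeric lists."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        return 0.0  # a constant column carries no linear signal
    return cov / math.sqrt(var_x * var_y)


def rank_features(features, target):
    """Sort (feature name, correlation) pairs by absolute correlation, strongest first."""
    scores = {name: pearson(column, target) for name, column in features.items()}
    return sorted(scores.items(), key=lambda item: abs(item[1]), reverse=True)


# Hypothetical toy data.
features = {
    "ad_spend": [1, 2, 3, 4, 5],
    "discount": [5, 3, 4, 1, 2],
    "store_id": [7, 7, 7, 7, 7],  # constant, so uninformative
}
sales = [2, 4, 6, 8, 10]

ranking = rank_features(features, sales)
for name, corr in ranking:
    print(f"{name}: {corr:+.2f}")
```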

Topic 4: Statistical and Machine Learning Approach

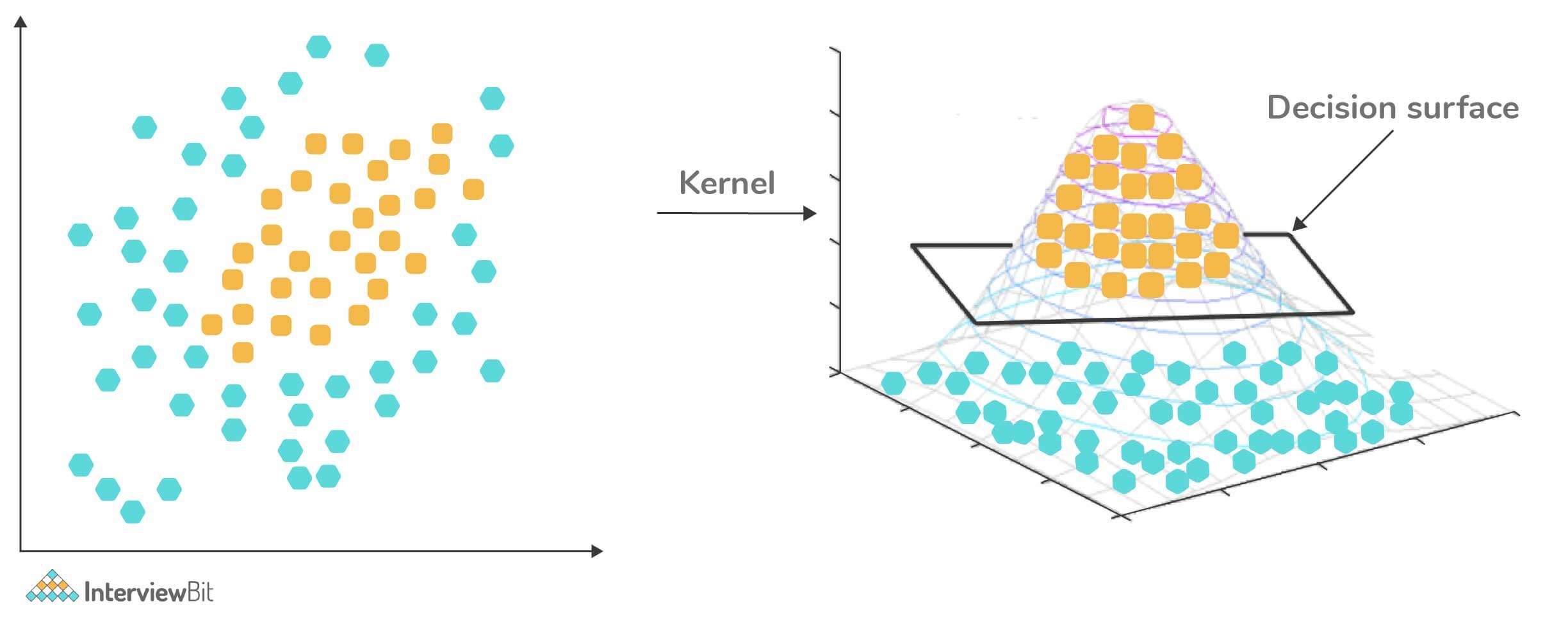

These interviews require proficiency in statistical and machine learning methods for diverse problem-solving. This topic is significant for anyone applying for a machine learning engineer position.

Using Statistical Models: Utilize logistic and linear regression models for effective classification and prediction tasks.

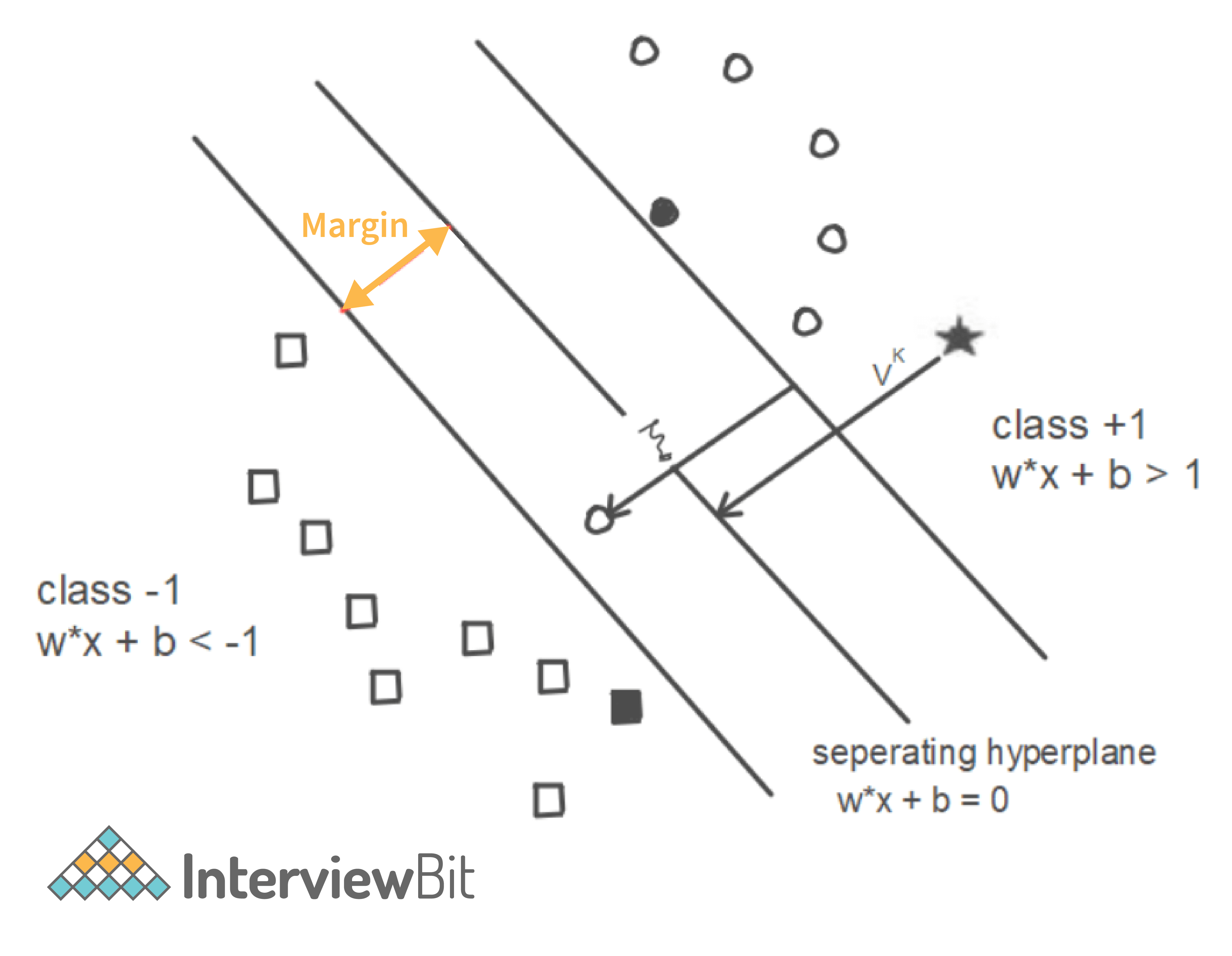

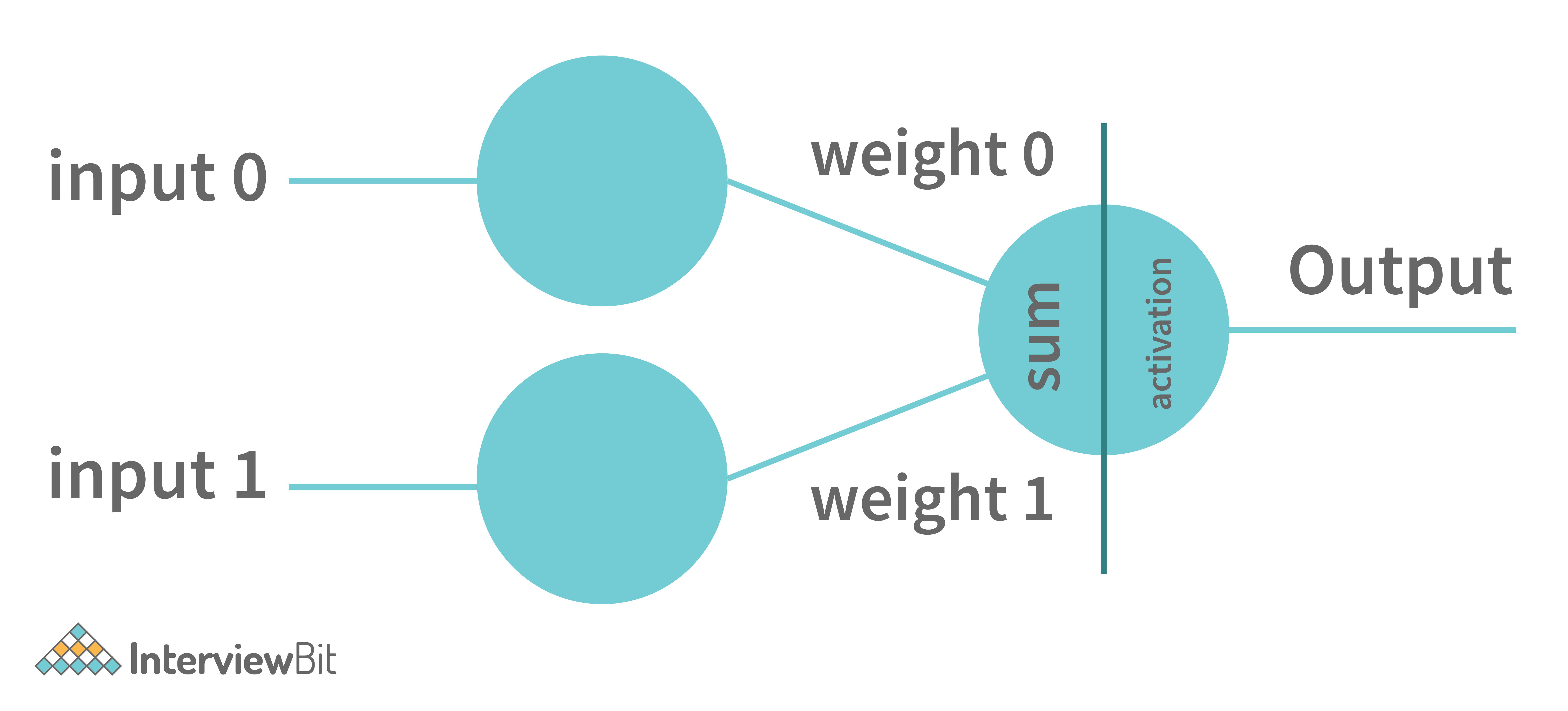

Leveraging Machine Learning Algorithms: Employ models such as support vector machines (SVM), k-nearest neighbors (k-NN), and decision trees for complex pattern recognition and classification.

Exploring Deep Learning Techniques: Consider neural networks, convolutional neural networks (CNN), and recurrent neural networks (RNN) for intricate data patterns.

Experimentation and Model Selection: Experiment with various algorithms to identify the most suitable approach for specific contexts.

Combining statistical and machine learning expertise equips you to systematically tackle varied data challenges, ensuring readiness for case studies and beyond.

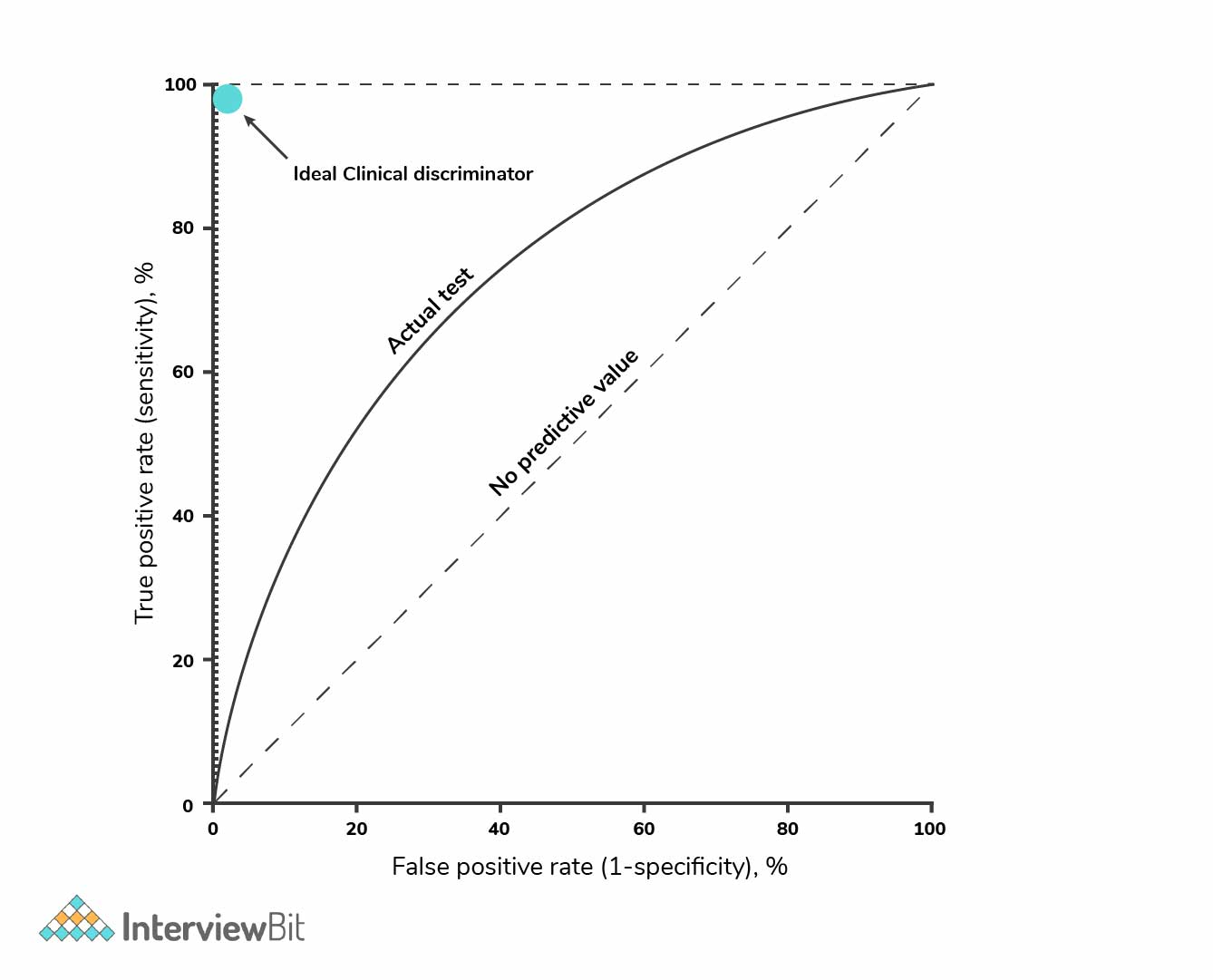

Topic 5: Evaluation Metrics and Validation

In data science interviews, understanding evaluation metrics and validation techniques is critical to measuring how well machine learning models perform.

Choosing the Right Metrics: Select metrics like precision, recall (for classification), or R² (for regression) based on the problem type. Picking the right metric defines how you interpret your model’s performance.

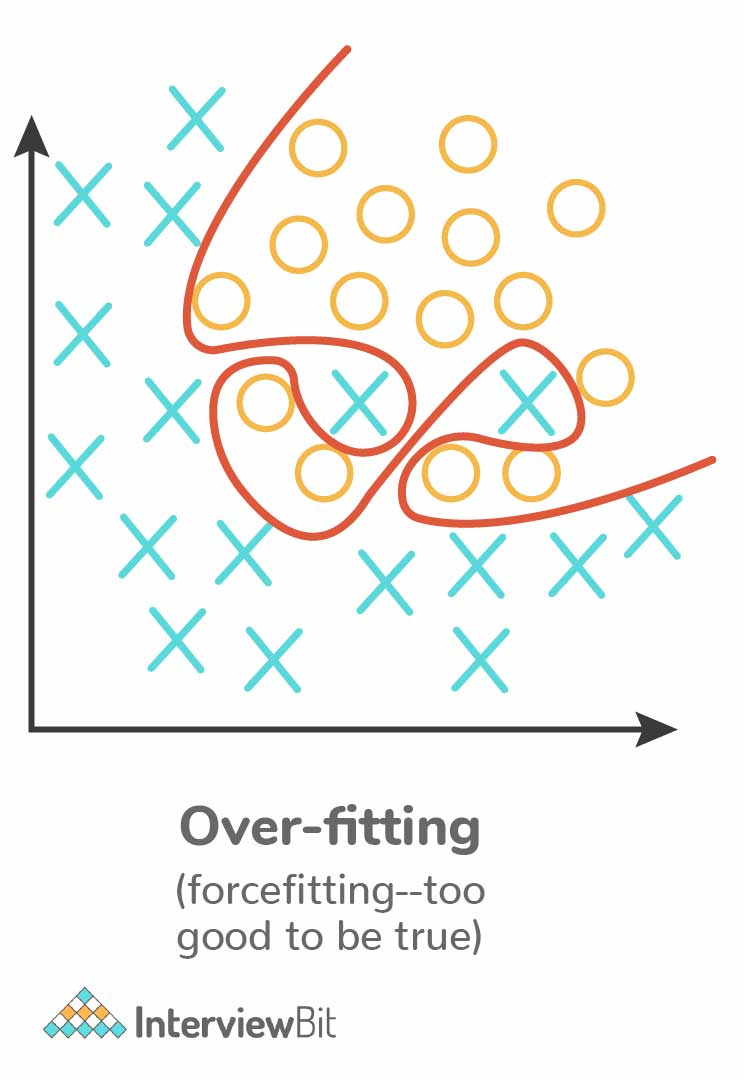

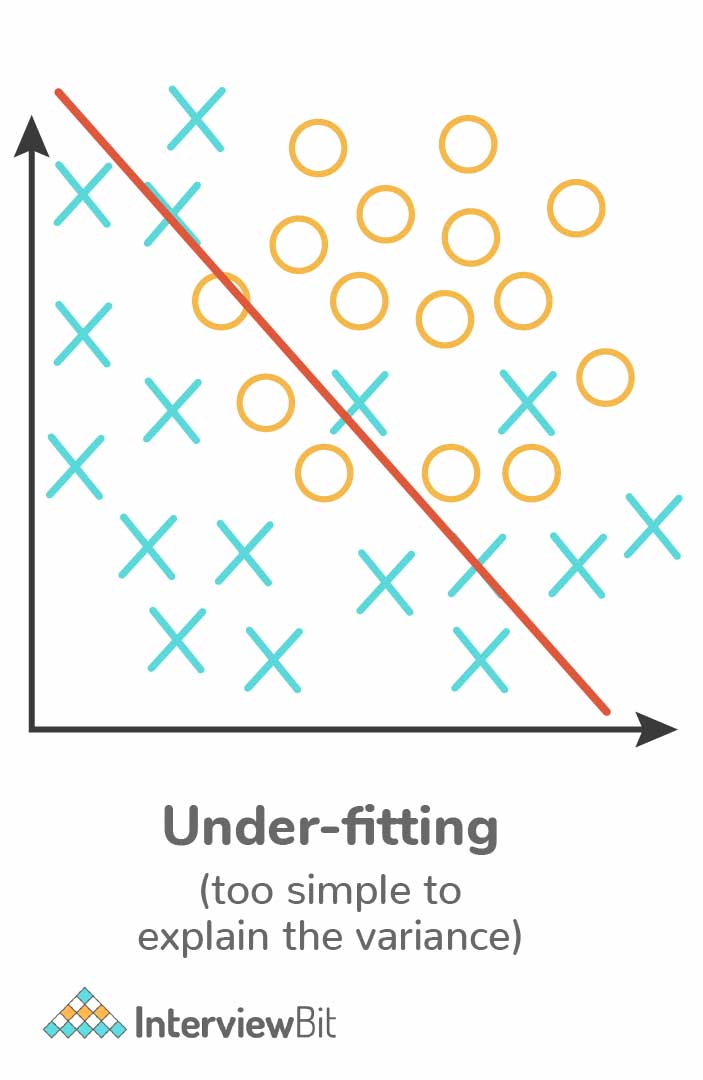

Validating Model Accuracy: Use methods like cross-validation and holdout validation to test your model across different data portions. These methods help you detect overfitting and give a more reliable estimate of performance on unseen data.

Importance of Statistical Significance: Evaluate if your model’s performance is due to actual prediction or random chance. Techniques like hypothesis testing and confidence intervals help determine this probability accurately.

Interpreting Results: Be ready to explain model outcomes, spot patterns, and suggest actions based on your analysis. Translating data insights into actionable strategies showcases your skill.

Finally, focusing on suitable metrics, using validation methods, understanding statistical significance, and deriving actionable insights from data underline your ability to evaluate model performance.
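
The metrics named above are simple enough to compute by hand, which can help when an interviewer asks you to define them. The sketch below implements precision and recall for binary labels and R² for regression; the function names and sample values are made up for illustration.

```python
def precision_recall(y_true, y_pred):
    """Precision and recall for binary labels, where 1 is the positive class."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


def r_squared(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean_y = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_y) ** 2 for t in y_true)
    if ss_tot == 0:
        raise ValueError("R^2 is undefined when the target is constant")
    return 1 - ss_res / ss_tot


# Hypothetical classification labels: 2 true positives, 1 false positive, 2 false negatives.
labels = [1, 1, 1, 0, 0, 0, 0, 1]
preds = [1, 1, 0, 0, 1, 0, 0, 0]
precision, recall = precision_recall(labels, preds)

# Hypothetical regression values.
r2 = r_squared([3, 5, 7, 9], [2, 5, 8, 9])

print(f"precision={precision:.2f} recall={recall:.2f} r2={r2:.2f}")
```

Which metric matters depends on the cost of each error type: precision penalizes false positives, recall penalizes false negatives.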

Also, being well-versed in these topics and having hands-on experience through practice scenarios can significantly enhance your performance in these case study interviews.

Prepare to demonstrate technical expertise and adaptability, problem-solving, and communication skills to excel in these assessments.

Now, let’s talk about how to navigate the interview.

Here is a step-by-step guide to get you through the process.

Step-by-Step Guide Through the Interview

This section discusses what you can expect during the interview process and how to approach case study questions.

Step 1: Problem Statement: You’ll be presented with a problem or scenario—either a hypothetical situation or a real-world challenge—emphasizing the need for data-driven solutions within data science.

Step 2: Clarification and Context: Seek more profound clarity by actively engaging with the interviewer. Ask pertinent questions to thoroughly understand the objectives, constraints, and nuanced aspects of the problem statement.

Step 3: State your Assumptions: When crucial information is lacking, make reasonable assumptions to proceed with your final solution. Explain these assumptions to your interviewer to ensure transparency in your decision-making process.

Step 4: Gather Context: Consider the broader business landscape surrounding the problem. Factor in external influences such as market trends, customer behaviors, or competitor actions that might impact your solution.

Step 5: Data Exploration: Delve into the provided datasets meticulously. Cleanse, visualize, and analyze the data to derive meaningful and actionable insights crucial for problem-solving.

Step 6: Modeling and Analysis: Leverage statistical or machine learning techniques to address the problem effectively. Implement suitable models to derive insights and solutions aligning with the identified objectives.

Step 7: Results Interpretation: Interpret your findings thoughtfully. Identify patterns, trends, or correlations within the data and present clear, data-backed recommendations relevant to the problem statement.

Step 8: Results Presentation: Effectively articulate your approach, methodologies, and choices coherently. This step is vital, especially when conveying complex technical concepts to non-technical stakeholders.

Remember to remain adaptable and flexible throughout the process and be prepared to adapt your approach to each situation.

Now that you have a guide on navigating the interview, let us give you some tips to help you stand out from the crowd.

Top 3 Tips to Master Your Data Science Case Study Interview

Approaching case study interviews in data science requires a blend of technical proficiency and a holistic understanding of business implications.

Here are practical strategies and structured approaches to prepare effectively for these interviews:

1. Comprehensive Preparation Tips

To excel in case study interviews, a blend of technical competence and strategic preparation is key.

Here are concise yet powerful tips to equip yourself for success:

Practice with Mock Case Studies: Familiarize yourself with the process through practice. Online resources offer example questions and solutions, enhancing familiarity and boosting confidence.

Review Your Data Science Toolbox: Ensure a strong foundation in fundamentals like data wrangling, visualization, and machine learning algorithms. Comfort with relevant programming languages is essential.

Simplicity in Problem-solving: Opt for clear and straightforward problem-solving approaches. While advanced techniques can be impressive, interviewers value efficiency and clarity.

Interviewers also highly value someone with great communication skills. Here are some tips to highlight your skills in this area.

2. Communication and Presentation of Results

In case study interviews, communication is vital. Present your findings in a clear, engaging way that connects with the business context. Tips include:

Contextualize results: Relate findings to the initial problem, highlighting key insights for business strategy.

Use visuals: Charts, graphs, or diagrams help convey findings more effectively.

Logical sequence: Structure your presentation for easy understanding, starting with an overview and progressing to specifics.

Simplify ideas: Break down complex concepts into simpler segments using examples or analogies.

Mastering these techniques helps you communicate insights clearly and confidently, setting you apart in interviews.

Lastly, here are some preparation strategies to employ before you walk into the interview room.

3. Structured Preparation Strategy

Prepare meticulously for data science case study interviews by following a structured strategy.

Here’s how:

Practice Regularly: Engage in mock interviews and case studies to enhance critical thinking and familiarity with the interview process. This builds confidence and sharpens problem-solving skills under pressure.

Thorough Review of Concepts: Revisit essential data science concepts and tools, focusing on machine learning algorithms, statistical analysis, and relevant programming languages (Python, R, SQL) for confident handling of technical questions.

Strategic Planning: Develop a structured framework for approaching case study problems. Outline the steps and tools/techniques to deploy, ensuring an organized and systematic interview approach.

Understanding the Context: Analyze business scenarios to identify objectives, variables, and data sources essential for insightful analysis.

Ask for Clarification: Engage with interviewers to clarify any unclear aspects of the case study questions. For example, you may ask ‘What is the business objective?’ This exhibits thoughtfulness and aids in better understanding the problem.

Transparent Problem-solving: Clearly communicate your thought process and reasoning during problem-solving. This showcases analytical skills and approaches to data-driven solutions.

Blend technical skills with business context, communicate clearly, and prepare systematically to ace your case study interviews.

Now, let’s really make this specific.

Each company is different and may need slightly different skills and specializations from data scientists.

However, here is some of what you can expect in a case study interview with some industry giants.

Case Interviews at Top Tech Companies

As you prepare for data science interviews, it’s essential to be aware of the case study interview format utilized by top tech companies.

In this section, we’ll explore case interviews at Facebook, Twitter, and Amazon, and provide insight into what they expect from their data scientists.

Facebook predominantly looks for candidates with strong analytical and problem-solving skills. The case study interviews here usually revolve around assessing the impact of a new feature, analyzing monthly active users, or measuring the effectiveness of a product change.

To excel during a Facebook case interview, you should break down complex problems, formulate a structured approach, and communicate your thought process clearly.

Twitter, similar to Facebook, evaluates your ability to analyze and interpret large datasets to solve business problems. During a Twitter case study interview, you might be asked to analyze user engagement, develop recommendations for increasing ad revenue, or identify trends in user growth.

Be prepared to work with different analytics tools and showcase your knowledge of relevant statistical concepts.

Amazon is known for its customer-centric approach and data-driven decision-making. In Amazon’s case interviews, you may be tasked with optimizing customer experience, analyzing sales trends, or improving the efficiency of a certain process.

Keep in mind Amazon’s leadership principles, especially “Customer Obsession” and “Dive Deep,” as you navigate through the case study.

Remember, practice is key. Familiarize yourself with various case study scenarios and hone your data science skills.

With all this knowledge, it’s time to practice with the following practice questions.

Mockup Case Studies and Practice Questions

To better prepare for your data science case study interviews, it’s important to practice with some mockup case studies and questions.

One way to practice is by finding typical case study questions.

Here are a few examples to help you get started:

Customer Segmentation: You have access to a dataset containing customer information, such as demographics and purchase behavior. Your task is to segment the customers into groups that share similar characteristics. How would you approach this problem, and what machine-learning techniques would you consider?

Fraud Detection: Imagine your company processes online transactions. You are asked to develop a model that can identify potentially fraudulent activities. How would you approach the problem and which features would you consider using to build your model? What are the trade-offs between false positives and false negatives?

Demand Forecasting: Your company needs to predict future demand for a particular product. What factors should be taken into account, and how would you build a model to forecast demand? How can you ensure that your model remains up-to-date and accurate as new data becomes available?

By practicing case study interview questions, you can sharpen your problem-solving skills and walk into future data science interviews more confidently.

Remember to practice consistently and stay up-to-date with relevant industry trends and techniques.

Final Thoughts

Data science case study interviews are more than just technical assessments; they’re opportunities to showcase your problem-solving skills and practical knowledge.

Furthermore, these interviews demand a blend of technical expertise, clear communication, and adaptability.

Remember, understanding the problem, exploring insights, and presenting coherent potential solutions are key.

By honing these skills, you can demonstrate your capability to solve real-world challenges using data-driven approaches. Good luck on your data science journey!

Frequently Asked Questions

How would you approach identifying and solving a specific business problem using data?

To identify and solve a business problem using data, you should start by clearly defining the problem and identifying the key metrics that will be used to evaluate success.

Next, gather relevant data from various sources and clean, preprocess, and transform it for analysis. Explore the data using descriptive statistics, visualizations, and exploratory data analysis.

Based on your understanding, build appropriate models or algorithms to address the problem, and then evaluate their performance using appropriate metrics. Iterate and refine your models as necessary, and finally, communicate your findings effectively to stakeholders.

Can you describe a time when you used data to make recommendations for optimization or improvement?

Recall a specific data-driven project you have worked on that led to optimization or improvement recommendations. Explain the problem you were trying to solve, the data you used for analysis, the methods and techniques you employed, and the conclusions you drew.

Share the results and how your recommendations were implemented, describing the impact it had on the targeted area of the business.

How would you deal with missing or inconsistent data during a case study?

When dealing with missing or inconsistent data, start by assessing the extent and nature of the problem. Consider applying imputation methods, such as mean, median, or mode imputation, or more advanced techniques like k-NN imputation or regression-based imputation, depending on the type of data and the pattern of missingness.

For inconsistent data, diagnose the issues by checking for typos, duplicates, or erroneous entries, and take appropriate corrective measures. Document your handling process so that stakeholders can understand your approach and the limitations it might impose on the analysis.
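
As a small illustration of the simple imputation strategies mentioned above, the sketch below fills missing entries (represented here as `None`) with the mean, median, or mode of the observed values. The `impute` helper and the sample column are hypothetical; the more advanced k-NN and regression-based approaches are not shown.

```python
import statistics


def impute(values, strategy="median"):
    """Replace None entries with the mean, median, or mode of the observed values."""
    observed = [v for v in values if v is not None]
    if not observed:
        raise ValueError("cannot impute a column with no observed values")
    if strategy == "mean":
        fill = statistics.mean(observed)
    elif strategy == "median":
        fill = statistics.median(observed)
    elif strategy == "mode":
        fill = statistics.mode(observed)
    else:
        raise ValueError(f"unknown strategy: {strategy!r}")
    return [fill if v is None else v for v in values]


# Hypothetical column with two missing entries.
ages = [34, None, 29, 41, None, 29]

for strategy in ("mean", "median", "mode"):
    print(strategy, impute(ages, strategy))
```

The three strategies give different fill values even on this tiny column, so it is worth stating which one you chose and why.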

What techniques would you use to validate the results and accuracy of your analysis?

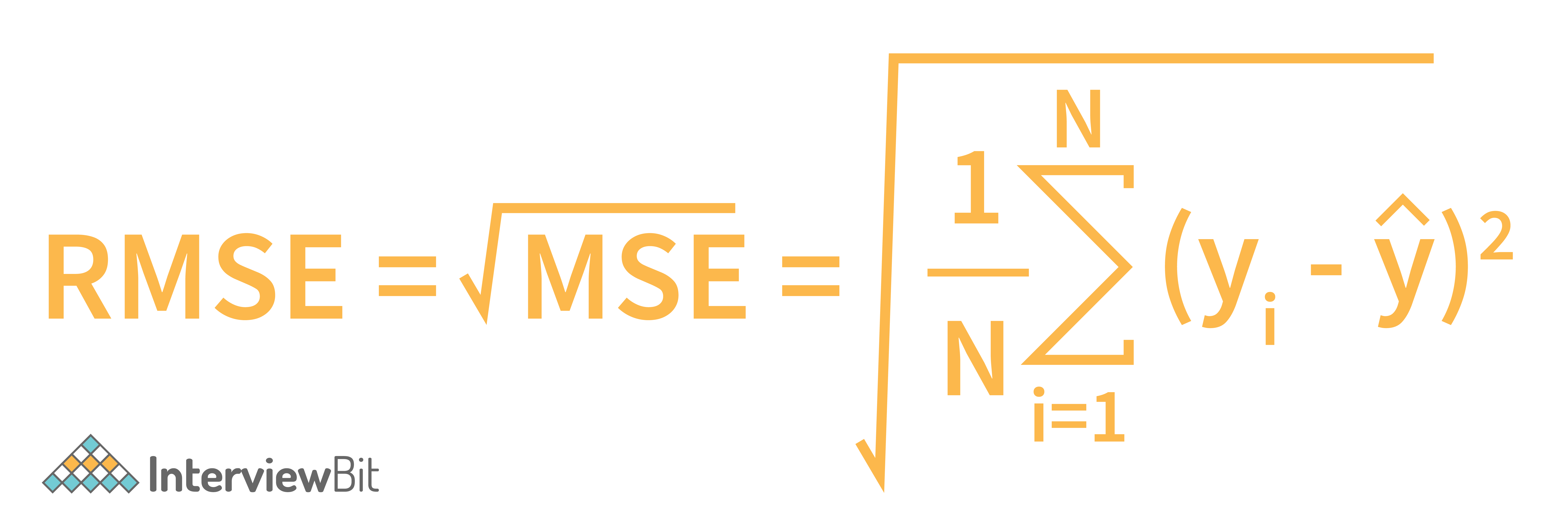

To validate the results and accuracy of your analysis, use techniques like cross-validation or bootstrapping, which can help gauge model performance on unseen data. Employ metrics relevant to your specific problem, such as accuracy, precision, recall, F1-score, or RMSE, to measure performance.

Additionally, validate your findings by conducting sensitivity analyses, sanity checks, and comparing results with existing benchmarks or domain knowledge.
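Bootstrapping, mentioned above, can be sketched in a few lines. The example below estimates a percentile confidence interval for classification accuracy by resampling prediction pairs with replacement. The labels, the function name `bootstrap_accuracy_ci`, and the number of resamples are illustrative assumptions, not values from any real project.

```python
import random

def bootstrap_accuracy_ci(y_true, y_pred, n_resamples=2000, alpha=0.05, seed=0):
    """Percentile bootstrap confidence interval for accuracy."""
    rng = random.Random(seed)  # fixed seed so the sketch is reproducible
    n = len(y_true)
    scores = []
    for _ in range(n_resamples):
        # Resample indices with replacement and recompute the metric.
        idx = [rng.randrange(n) for _ in range(n)]
        correct = sum(y_true[i] == y_pred[i] for i in idx)
        scores.append(correct / n)
    scores.sort()
    lower = scores[int((alpha / 2) * n_resamples)]
    upper = scores[int((1 - alpha / 2) * n_resamples) - 1]
    return lower, upper

# Hypothetical labels and predictions for 100 held-out examples.
y_true = [1, 0, 1, 1, 0, 1, 0, 0, 1, 1] * 10
y_pred = [1, 0, 1, 0, 0, 1, 0, 1, 1, 1] * 10

point = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
low, high = bootstrap_accuracy_ci(y_true, y_pred)
print(f"accuracy = {point:.2f}, 95% bootstrap CI = ({low:.2f}, {high:.2f})")
```

An interval like this gives stakeholders a sense of how much the reported metric could move on a different sample, which a single point estimate does not.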

How would you communicate your findings to both technical and non-technical stakeholders?

To effectively communicate your findings to technical stakeholders, focus on the methodology, algorithms, performance metrics, and potential improvements. For non-technical stakeholders, simplify complex concepts and explain the relevance of your findings, the impact on the business, and actionable insights in plain language.

Use visual aids, like charts and graphs, to illustrate your results and highlight key takeaways. Tailor your communication style to the audience, and be prepared to answer questions and address concerns that may arise.

How do you choose between different machine learning models to solve a particular problem?

When choosing between different machine learning models, first assess the nature of the problem and the data available to identify suitable candidate models. Evaluate models based on their performance, interpretability, complexity, and scalability, using relevant metrics and techniques such as cross-validation, AIC, BIC, or learning curves.

Consider the trade-offs between model accuracy, interpretability, and computation time, and choose a model that best aligns with the problem requirements, project constraints, and stakeholders’ expectations.

Keep in mind that it’s often beneficial to try several models and ensemble methods to see which one performs best for the specific problem at hand.
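To make the AIC comparison concrete, here is a minimal sketch that scores two candidate models, an intercept-only baseline and a simple linear regression, on the same toy data. It assumes Gaussian errors, so AIC can be computed from the residual sum of squares up to an additive constant. The data points and helper names are made up for the example.

```python
import math

def fit_mean(xs, ys):
    """Intercept-only baseline: always predict the mean of y."""
    mean_y = sum(ys) / len(ys)
    return lambda x: mean_y

def fit_line(xs, ys):
    """Ordinary least squares for y = intercept + slope * x."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return lambda x: intercept + slope * x

def gaussian_aic(xs, ys, predict, n_params):
    """AIC under Gaussian errors, up to a constant shared by all models."""
    n = len(ys)
    rss = sum((y - predict(x)) ** 2 for x, y in zip(xs, ys))
    return n * math.log(rss / n) + 2 * n_params

# Hypothetical data: roughly y = 2x + 1 with small deviations.
xs = list(range(10))
ys = [1.3, 2.8, 5.1, 6.6, 9.2, 11.0, 12.9, 15.3, 16.7, 19.1]

# Parameter counts exclude the noise variance; including it would add the
# same amount to both scores and leave the comparison unchanged.
aic_mean = gaussian_aic(xs, ys, fit_mean(xs, ys), n_params=1)
aic_line = gaussian_aic(xs, ys, fit_line(xs, ys), n_params=2)

best = "linear" if aic_line < aic_mean else "intercept-only"
print(f"AIC intercept-only: {aic_mean:.1f}, AIC linear: {aic_line:.1f}, preferred: {best}")
```

Lower AIC is better, and the `2 * n_params` term is what penalizes extra complexity. In an interview, it is worth saying that AIC compares models fit to the same data, and that cross-validation is a common alternative when the likelihood assumption is doubtful.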

Data Science Interview Case Studies: How to Prepare and Excel

In the realm of data science interviews, case studies play a crucial role in assessing a candidate's problem-solving skills and analytical mindset. To stand out and excel in these scenarios, thorough preparation is key. Here's a comprehensive guide on how to prepare and shine in data science interview case studies.

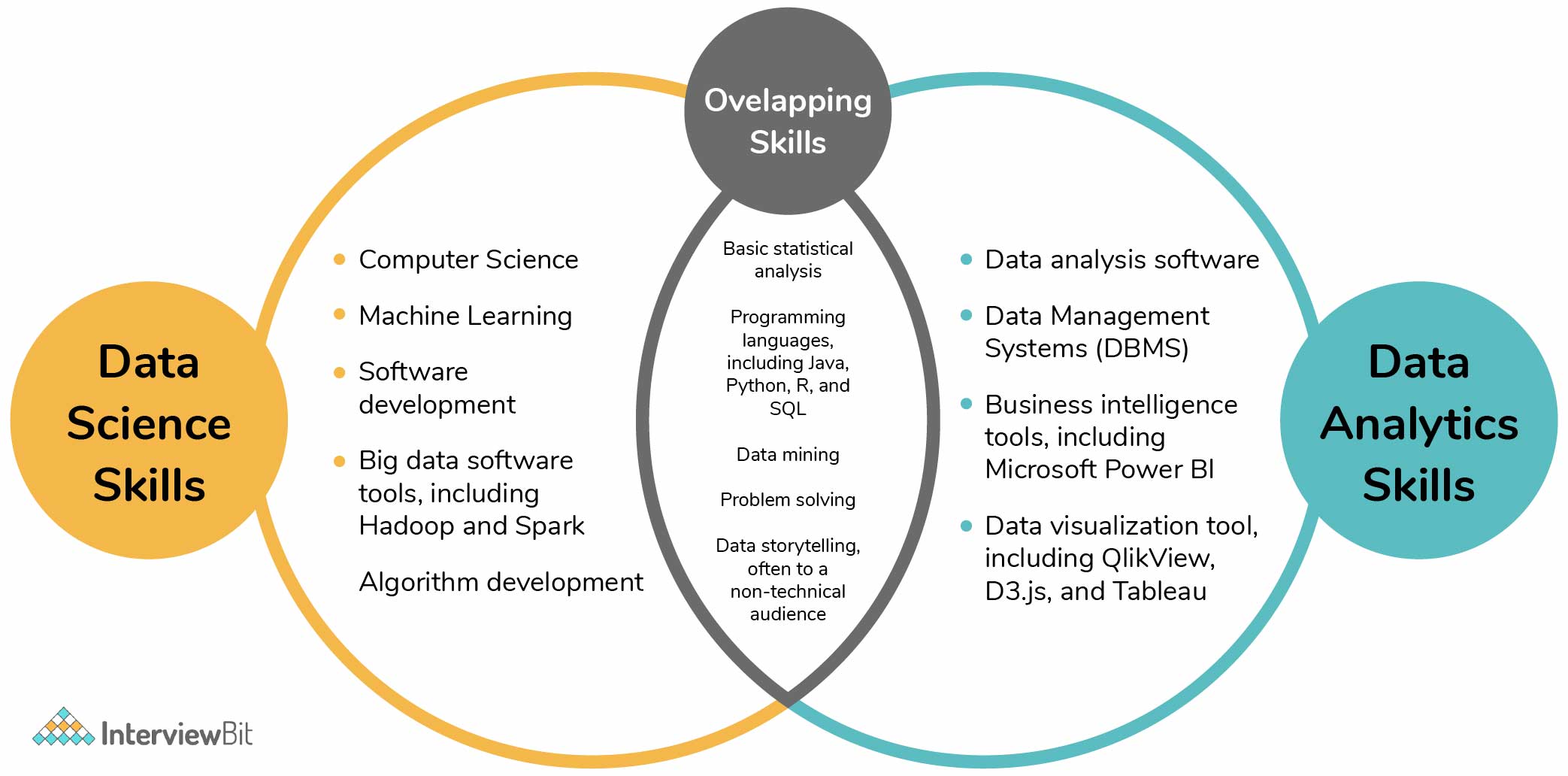

Understanding the Basics

Before delving into case studies, it's essential to have a solid grasp of fundamental data science concepts. Review key topics such as statistical analysis, machine learning algorithms, data manipulation, and data visualization. This foundational knowledge will form the basis of your approach to solving case study problems.

Deconstructing the Case Study

When presented with a case study during the interview, take a structured approach to deconstructing the problem. Begin by defining the business problem or question at hand. Break down the problem into manageable components and identify the key variables involved. This analytical framework will guide your problem-solving process.

Utilizing Data Science Techniques

Apply your data science skills to analyze the provided data and derive meaningful insights. Utilize statistical methods, predictive modeling, and data visualization techniques to explore patterns and trends within the dataset. Clearly communicate your methodology and reasoning to demonstrate your analytical capabilities.

Problem-Solving Strategy

Develop a systematic problem-solving strategy to tackle case study challenges effectively. Start by outlining your approach and assumptions before proceeding to data analysis and interpretation. Implement a logical and structured process to arrive at well-supported conclusions.

Practice Makes Perfect

Engage in regular practice sessions with mock case studies to hone your problem-solving skills. Participate in data science forums and communities to discuss case studies with peers and gain diverse perspectives. The more you practice, the more confident and proficient you will become in tackling complex data science challenges.

Communicating Your Findings

Effectively communicating your findings and insights is crucial in a data science interview case study. Present your analysis in a clear and concise manner, highlighting key takeaways and recommendations. Demonstrate your storytelling ability by structuring your presentation in a logical and engaging manner.

Excelling in data science interview case studies requires a combination of technical proficiency, analytical thinking, and effective communication. By mastering the art of case study preparation and problem-solving, you can showcase your data science skills and secure coveted job opportunities in the field.

Data science case study interview

Many accomplished students and newly minted AI professionals ask us: How can I prepare for interviews? Good recruiters try setting up job applicants for success in interviews, but it may not be obvious how to prepare for them. We interviewed over 100 leaders in machine learning and data science to understand what AI interviews are and how to prepare for them.

TABLE OF CONTENTS

- I What to expect in the data science case study interview

- II Recommended framework

- III Interview tips

- IV Resources

AI organizations divide their work into data engineering, modeling, deployment, business analysis, and AI infrastructure. The skills needed to carry out these tasks are a combination of technical, behavioral, and decision-making skills. The data science case study interview focuses on technical and decision-making skills, and you’ll encounter it during an onsite round for a Data Scientist (DS), Data Analyst (DA), Machine Learning Engineer (MLE) or Machine Learning Researcher (MLR). You can learn more about these roles in our AI Career Pathways report and about other types of interviews in The Skills Boost.

I What to expect in the data science case study interview

The interviewer is evaluating your approach to a real-world data science problem. The interview revolves around a technical question which can be open-ended. There is no exact solution to the question; it’s your thought process that the interviewer is evaluating. Here’s a list of interview questions you might be asked:

- How many cashiers should be at a Walmart store at a given time?

- You notice a spike in the number of user-uploaded videos on your platform in June. What do you think is the cause, and how would you test it?

- Your company is thinking of changing its logo. Is it a good idea? How would you test it?

- Could you tell if a coin is biased?

- In a given day, how many birthday posts occur on Facebook?

- What are the different performance metrics for evaluating ride sharing services?

- How will you test if a chosen credit scoring model works or not? What dataset(s) do you need?

- Given a user’s history of purchases, how do you predict their next purchase?
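To take one question from the list above, "Could you tell if a coin is biased?" can be framed as a hypothesis test. The sketch below computes an exact two-sided binomial p-value under the null hypothesis that the coin is fair. The flip counts are invented for illustration, and the function name `binomial_two_sided_p` is our own; it is one reasonable way to answer, not the only one.

```python
from math import comb

def binomial_two_sided_p(heads, flips, p=0.5):
    """Exact two-sided p-value for observing `heads` in `flips` tosses.

    Sums the probabilities of all outcomes that are no more likely than
    the observed one under the null hypothesis P(heads) = p.
    """
    probs = [comb(flips, k) * p**k * (1 - p) ** (flips - k) for k in range(flips + 1)]
    observed = probs[heads]
    # Small relative tolerance guards against floating-point ties.
    return sum(q for q in probs if q <= observed * (1 + 1e-9))

# Hypothetical experiments: 100 flips each, with different head counts.
for heads in (50, 60, 70):
    p_value = binomial_two_sided_p(heads, 100)
    verdict = "reject fairness" if p_value < 0.05 else "cannot reject fairness"
    print(f"{heads} heads in 100 flips: p = {p_value:.4f} ({verdict} at the 5% level)")
```

A good answer would also state the assumptions out loud (independent flips, a significance level chosen in advance) and note that failing to reject the null does not prove the coin is fair.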

II Recommended framework

All interviews are different, but the ASPER framework is applicable to a variety of case studies:

- Ask. Ask questions to uncover details that were kept hidden by the interviewer. Specifically, you want to answer the following questions: “what are the product requirements and evaluation metrics?”, “what data do I have access to?”, “how much time and computational resources do I have to run experiments?”

- Suppose. Make justified assumptions to simplify the problem. Examples of assumptions are: “we are in a small data regime”, “events are independent”, “the statistical significance level is 5%”, “the data distribution won’t change over time”, “we have three weeks”, etc.

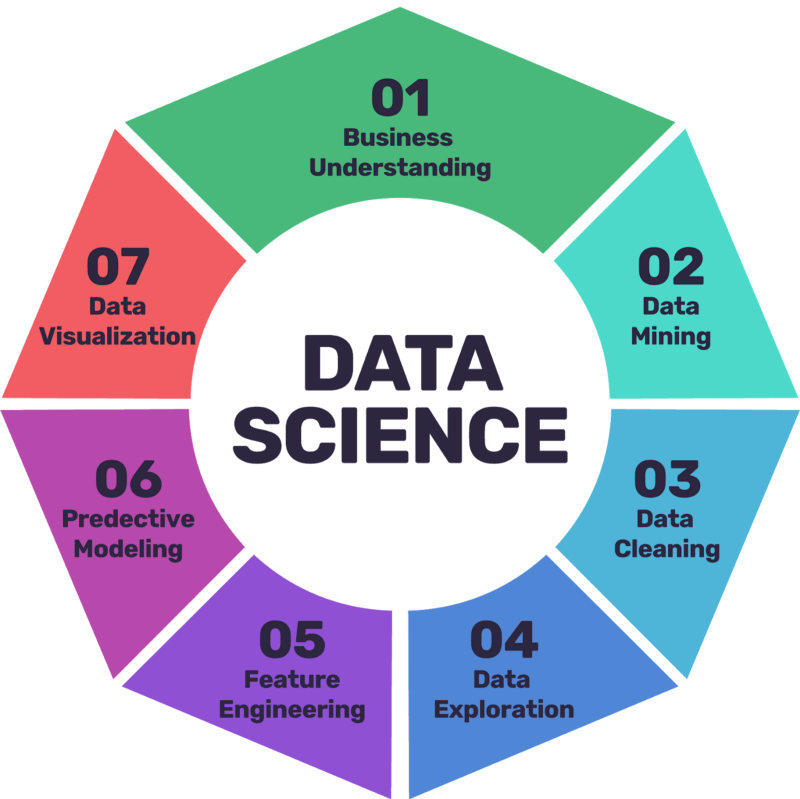

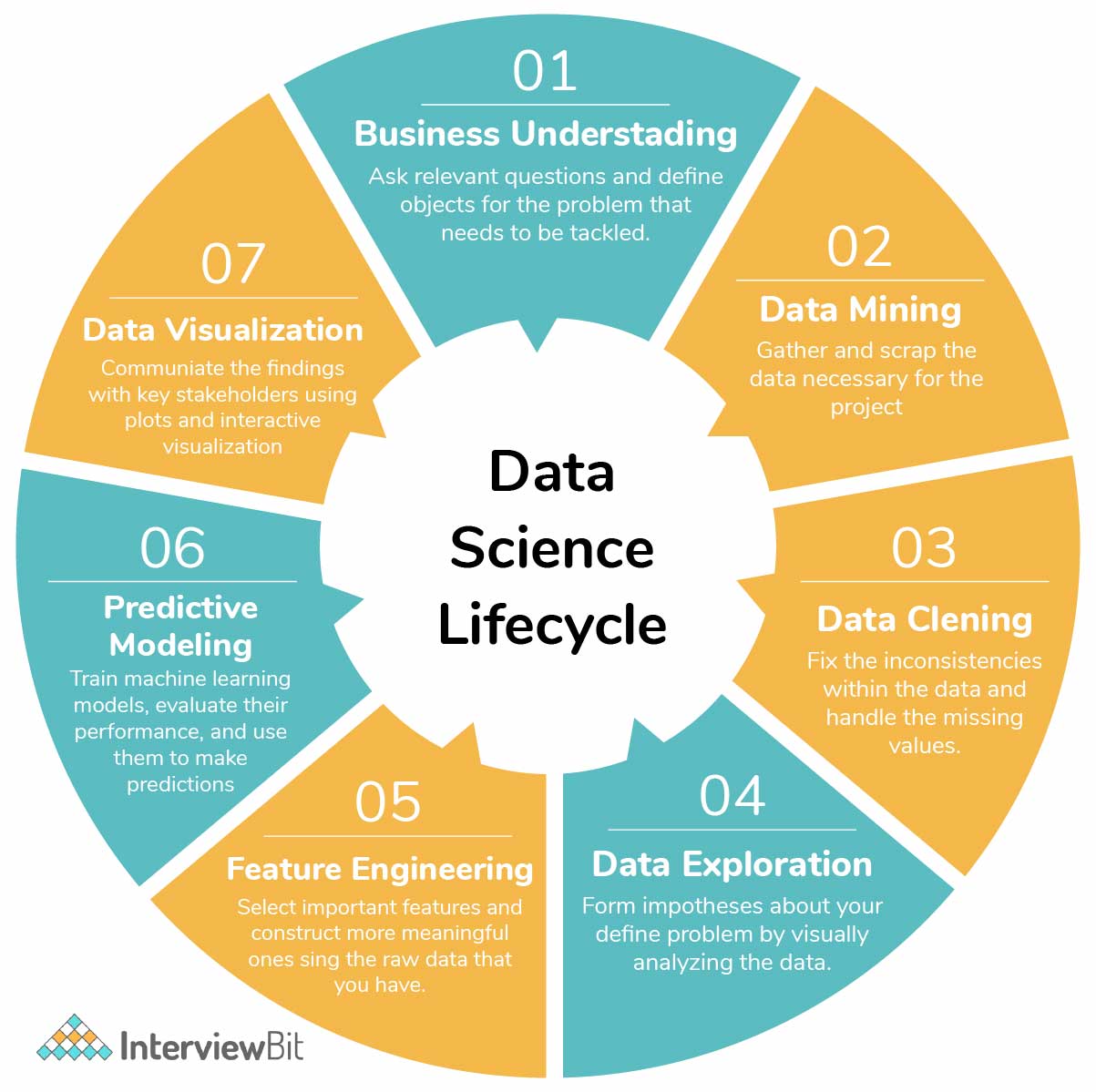

- Plan. Break down the problem into tasks. A common task sequence in the data science case study interview is: (i) data engineering, (ii) modeling, and (iii) business analysis.

- Execute. Announce your plan, and tackle the tasks one by one. In this step, the interviewer might ask you to write code or explain the maths behind your proposed method.

- Recap. At the end of the interview, summarize your answer and mention the tools and frameworks you would use to perform the work. It is also a good time to express your ideas on how the problem can be extended.

III Interview tips

Every interview is an opportunity to show your skills and motivation for the role. Thus, it is important to prepare in advance. Here are useful rules of thumb to follow:

Articulate your thoughts in a compelling narrative.

Data scientists often need to convert data into actionable business insights, create presentations, and convince business leaders. Thus, their communication skills are evaluated in interviews and can be the reason for a rejection. Your interviewer will judge the clarity of your thought process, your scientific rigor, and how comfortable you are using technical vocabulary.

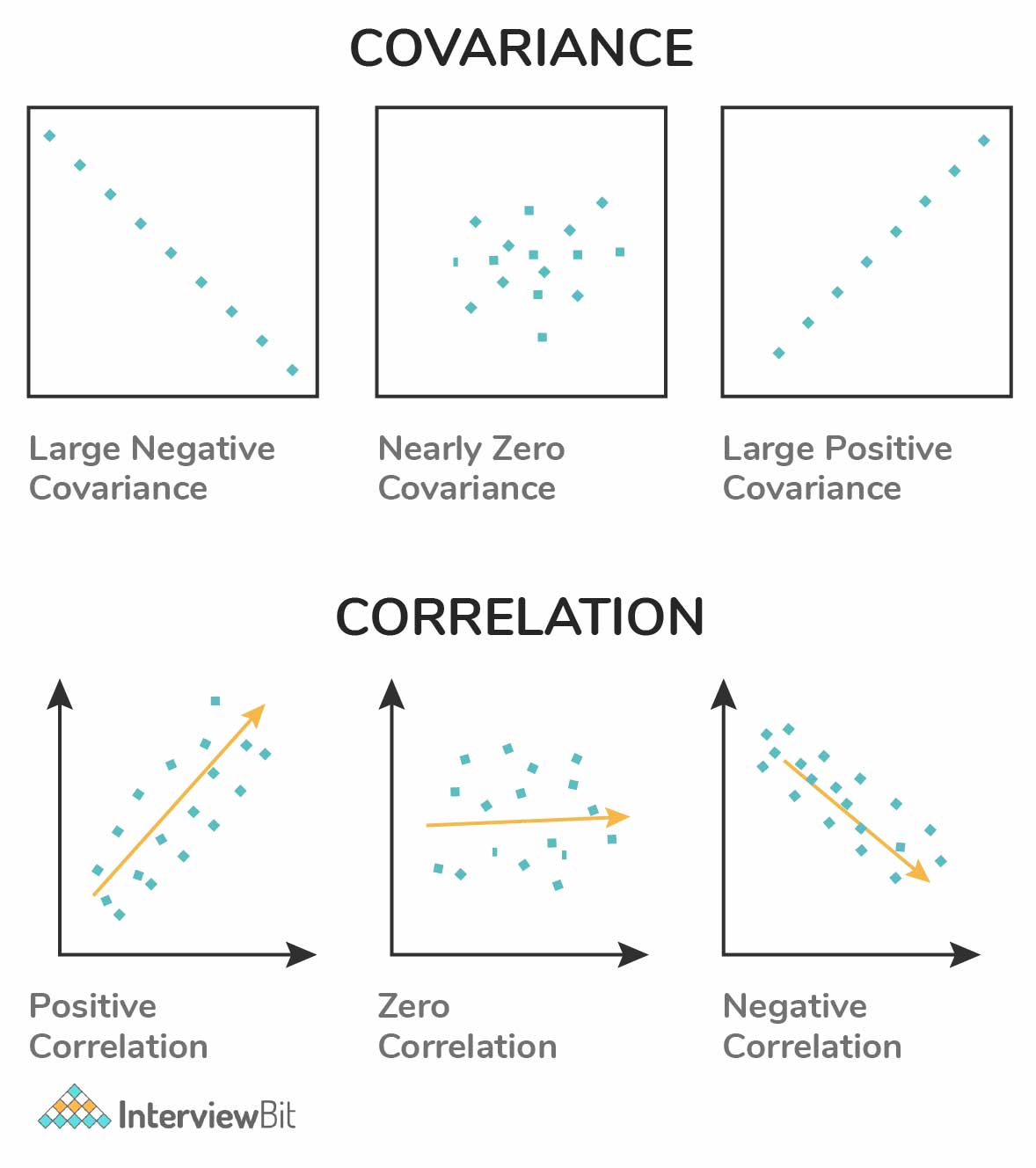

Example 1: Your interviewer will notice if you say “correlation matrix” when you actually meant “covariance matrix”.

Example 2: Mispronouncing a widely used technical word or acronym such as Poisson, ICA, or AUC can affect your credibility. For instance, ICA is pronounced aɪ-siː-eɪ (i.e., “I see A”) rather than “Ika”.

Example 3: Show your ability to strategize by drawing the AI project development life cycle on the whiteboard.

Tie your task to the business logic.

Example 1: If you are asked to improve Instagram’s news feed, identify what the goal of the product is. Is it to have users spend more time on the app, click on more ads, or interact more with each other?

Example 2: You present graphs to show the number of salespeople needed in a retail store at a given time. It is a good idea to also discuss the savings your insight can lead to.

Alternatively, your interviewer might give you the business goal, such as improving retention or engagement, or reducing employee churn, but expect you to come up with a metric to optimize.

Example: If the goal is to improve user engagement, you might use daily active users as a proxy and measure it from users’ interactions (clicks, shares, likes, etc.).
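A proxy metric like this is easy to make concrete. The following sketch counts daily active users from a small event log, treating a user as active on a day if they performed at least one engagement action. The event tuples, the set of actions that count as engagement, and the function name `daily_active_users` are all assumptions for illustration.

```python
from collections import defaultdict
from datetime import date

def daily_active_users(events, engaged_actions=frozenset({"click", "share", "like"})):
    """Count distinct users per day with at least one engagement action."""
    users_by_day = defaultdict(set)
    for day, user_id, action in events:
        if action in engaged_actions:
            users_by_day[day].add(user_id)
    # Days with no qualifying events do not appear in the result.
    return {day: len(users) for day, users in sorted(users_by_day.items())}

# Hypothetical event log: (day, user id, action).
events = [
    (date(2024, 1, 1), "u1", "like"),
    (date(2024, 1, 1), "u1", "share"),   # same user, still counted once
    (date(2024, 1, 1), "u2", "click"),
    (date(2024, 1, 1), "u3", "view"),    # passive view, not counted here
    (date(2024, 1, 2), "u1", "like"),
    (date(2024, 1, 2), "u2", "view"),
]

dau = daily_active_users(events)
for day, count in dau.items():
    print(day.isoformat(), count)
```

Which actions count as "active" is a definitional choice worth raising with the interviewer, since it changes what the metric rewards.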

Brush up your data science foundations before the interview.

You have to leverage concepts from probability and statistics such as correlation vs. causation or statistical significance. You should also be able to read a test table.

Example: You’re a professor currently evaluating students with a final exam, but considering switching to a project-based evaluation. A rumor says that the majority of your students are opposed to the switch. Before making the switch, what would you like to test? In this question, you should introduce notation to state your hypothesis and leverage tools such as confidence intervals, p-values, distributions, and tables. Your interviewer might then give you more information. For instance, you have polled a random sample of 300 students in your class and observed that 60% of them were against the switch.
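One way to work through the numbers in this example is a one-sided z-test for a proportion. Let p be the true share of students opposed to the switch, with H0: p = 0.5 against H1: p > 0.5. The sketch below assumes a simple random sample, a normal approximation to the binomial, a class large enough that no finite-population correction is needed, and a 5% significance level; these are assumptions for illustration rather than details given in the question.

```python
from math import sqrt
from statistics import NormalDist

n = 300        # students polled (from the example)
p_hat = 0.60   # observed share opposed to the switch (from the example)
p0 = 0.50      # null hypothesis: no majority is opposed
alpha = 0.05   # assumed significance level

std_normal = NormalDist()

# Test statistic uses the standard error under the null hypothesis.
se_null = sqrt(p0 * (1 - p0) / n)
z = (p_hat - p0) / se_null
p_value = 1 - std_normal.cdf(z)  # one-sided: is the share above 50%?

# Two-sided 95% confidence interval uses the estimated standard error.
se_hat = sqrt(p_hat * (1 - p_hat) / n)
z_crit = std_normal.inv_cdf(1 - alpha / 2)
ci = (p_hat - z_crit * se_hat, p_hat + z_crit * se_hat)

reject_null = p_value < alpha
print(f"z = {z:.2f}, one-sided p-value = {p_value:.5f}")
print(f"95% CI for p: ({ci[0]:.3f}, {ci[1]:.3f})")
print("Evidence that a majority is opposed" if reject_null else "No clear evidence of a majority")
```

Under these assumptions the interval lies entirely above 50%, which supports the rumor. In the interview, stating the hypotheses and assumptions before computing anything is usually worth as much as the arithmetic.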

Avoid clear-cut statements.

Because case studies are often open-ended and can have multiple valid solutions, avoid making categorical statements such as “the correct approach is …” You might offend the interviewer if the approach they are using is different from what you describe. It’s also better to show your flexibility with and understanding of the pros and cons of different approaches.

Study topics relevant to the company.

Data science case studies are often inspired by in-house projects. If the team is working on a domain-specific application, explore the literature.

Example 1: If the team is working on time series forecasting, you can expect questions about ARIMA, and follow-ups on how to test whether a coefficient of your model should be zero.