1 Hypothesis Testing

Biology is a science, but what exactly is science? What does the study of biology share with other scientific disciplines? Science (from the Latin scientia, meaning “knowledge”) can be defined as knowledge about the natural world.

Biologists study the living world by posing questions about it and seeking science-based responses. This approach is common to other sciences as well and is often referred to as the scientific method . The scientific process was used even in ancient times, but it was first documented by England’s Sir Francis Bacon (1561–1626) ( Figure 1 ), who set up inductive methods for scientific inquiry. The scientific method is not exclusively used by biologists but can be applied to almost anything as a logical problem solving method.

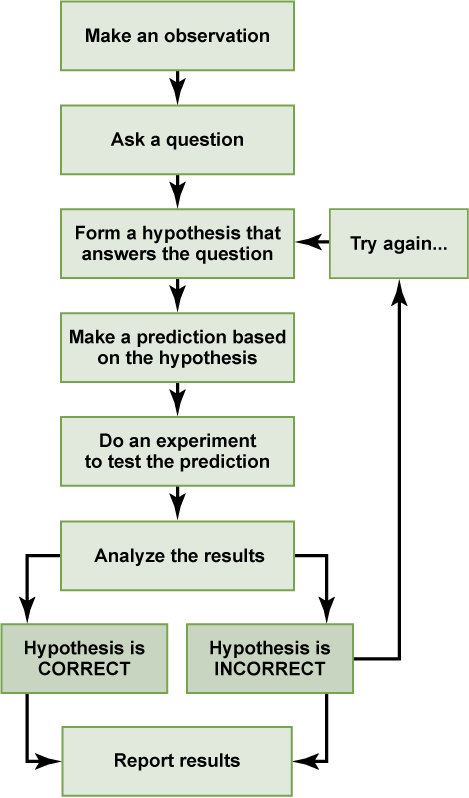

The scientific process typically starts with an observation (often a problem to be solved) that leads to a question. Science is very good at answering questions having to do with observations about the natural world, but is very bad at answering questions having to do with purely moral questions, aesthetic questions, personal opinions, or what can be generally categorized as spiritual questions. Science has cannot investigate these areas because they are outside the realm of material phenomena, the phenomena of matter and energy, and cannot be observed and measured.

Let’s think about a simple problem that starts with an observation and apply the scientific method to solve the problem. Imagine that one morning when you wake up and flip a the switch to turn on your bedside lamp, the light won’t turn on. That is an observation that also describes a problem: the lights won’t turn on. Of course, you would next ask the question: “Why won’t the light turn on?”

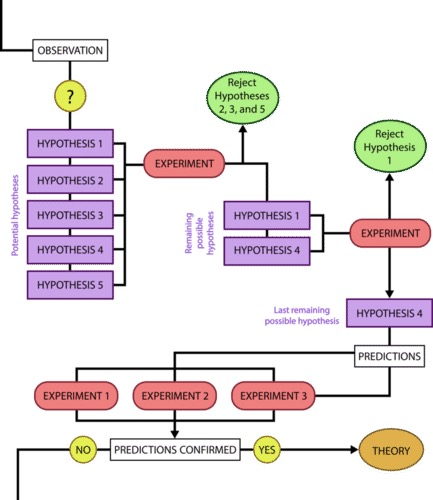

A hypothesis is a suggested explanation that can be tested. A hypothesis is NOT the question you are trying to answer – it is what you think the answer to the question will be and why . Several hypotheses may be proposed as answers to one question. For example, one hypothesis about the question “Why won’t the light turn on?” is “The light won’t turn on because the bulb is burned out.” There are also other possible answers to the question, and therefore other hypotheses may be proposed. A second hypothesis is “The light won’t turn on because the lamp is unplugged” or “The light won’t turn on because the power is out.” A hypothesis should be based on credible background information. A hypothesis is NOT just a guess (not even an educated one), although it can be based on your prior experience (such as in the example where the light won’t turn on). In general, hypotheses in biology should be based on a credible, referenced source of information.

A hypothesis must be testable to ensure that it is valid. For example, a hypothesis that depends on what a dog thinks is not testable, because we can’t tell what a dog thinks. It should also be falsifiable, meaning that it can be disproven by experimental results. An example of an unfalsifiable hypothesis is “Red is a better color than blue.” There is no experiment that might show this statement to be false. To test a hypothesis, a researcher will conduct one or more experiments designed to eliminate one or more of the hypotheses. This is important: a hypothesis can be disproven, or eliminated, but it can never be proven. If an experiment fails to disprove a hypothesis, then that explanation (the hypothesis) is supported as the answer to the question. However, that doesn’t mean that later on, we won’t find a better explanation or design a better experiment that will disprove the first hypothesis and lead to a better one.

A variable is any part of the experiment that can vary or change during the experiment. Typically, an experiment only tests one variable and all the other conditions in the experiment are held constant.

- The variable that is being changed or tested is known as the independent variable .

- The dependent variable is the thing (or things) that you are measuring as the outcome of your experiment.

- A constant is a condition that is the same between all of the tested groups.

- A confounding variable is a condition that is not held constant that could affect the experimental results.

Let’s start with the first hypothesis given above for the light bulb experiment: the bulb is burned out. When testing this hypothesis, the independent variable (the thing that you are testing) would be changing the light bulb and the dependent variable is whether or not the light turns on.

- HINT: You should be able to put your identified independent and dependent variables into the phrase “dependent depends on independent”. If you say “whether or not the light turns on depends on changing the light bulb” this makes sense and describes this experiment. In contrast, if you say “changing the light bulb depends on whether or not the light turns on” it doesn’t make sense.

It would be important to hold all the other aspects of the environment constant, for example not messing with the lamp cord or trying to turn the lamp on using a different light switch. If the entire house had lost power during the experiment because a car hit the power pole, that would be a confounding variable.

You may have learned that a hypothesis can be phrased as an “If..then…” statement. Simple hypotheses can be phrased that way (but they must always also include a “because”), but more complicated hypotheses may require several sentences. It is also very easy to get confused by trying to put your hypothesis into this format. Don’t worry about phrasing hypotheses as “if…then” statements – that is almost never done in experiments outside a classroom.

The results of your experiment are the data that you collect as the outcome. In the light experiment, your results are either that the light turns on or the light doesn’t turn on. Based on your results, you can make a conclusion. Your conclusion uses the results to answer your original question.

We can put the experiment with the light that won’t go in into the figure above:

- Observation: the light won’t turn on.

- Question: why won’t the light turn on?

- Hypothesis: the lightbulb is burned out.

- Prediction: if I change the lightbulb (independent variable), then the light will turn on (dependent variable).

- Experiment: change the lightbulb while leaving all other variables the same.

- Analyze the results: the light didn’t turn on.

- Conclusion: The lightbulb isn’t burned out. The results do not support the hypothesis, time to develop a new one!

- Hypothesis 2: the lamp is unplugged.

- Prediction 2: if I plug in the lamp, then the light will turn on.

- Experiment: plug in the lamp

- Analyze the results: the light turned on!

- Conclusion: The light wouldn’t turn on because the lamp was unplugged. The results support the hypothesis, it’s time to move on to the next experiment!

In practice, the scientific method is not as rigid and structured as it might at first appear. Sometimes an experiment leads to conclusions that favor a change in approach; often, an experiment brings entirely new scientific questions to the puzzle. Many times, science does not operate in a linear fashion; instead, scientists continually draw inferences and make generalizations, finding patterns as their research proceeds. Scientific reasoning is more complex than the scientific method alone suggests.

Control Groups

Another important aspect of designing an experiment is the presence of one or more control groups. A control group allows you to make a comparison that is important for interpreting your results. Control groups are samples that help you to determine that differences between your experimental groups are due to your treatment rather than a different variable – they eliminate alternate explanations for your results (including experimental error and experimenter bias). They increase reliability, often through the comparison of control measurements and measurements of the experimental groups. Often, the control group is a sample that is not treated with the independent variable, but is otherwise treated the same way as your experimental sample. This type of control group is treated the same way as the experimental group except it does not get treated with the independent variable. Therefore, if the results of the experimental group differ from the control group, the difference must be due to the change of the independent, rather than some outside factor. It is common in complex experiments (such as those published in scientific journals) to have more control groups than experimental groups.

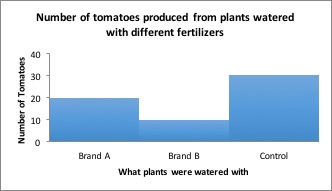

Question: Which fertilizer will produce the greatest number of tomatoes when applied to the plants?

Hypothesis : If I apply different brands of fertilizer to tomato plants, the most tomatoes will be produced from plants watered with Brand A because Brand A advertises that it produces twice as many tomatoes as other leading brands.

Experiment: Purchase 10 tomato plants of the same type from the same nursery. Pick plants that are similar in size and age. Divide the plants into two groups of 5. Apply Brand A to the first group and Brand B to the second group according to the instructions on the packages. After 10 weeks, count the number of tomatoes on each plant.

Independent Variable: Brand of fertilizer.

Dependent Variable : Number of tomatoes.

- The number of tomatoes produced depends on the brand of fertilizer applied to the plants.

Constants: amount of water, type of soil, size of pot, amount of light, type of tomato plant, length of time plants were grown.

Confounding variables : any of the above that are not held constant, plant health, diseases present in the soil or plant before it was purchased.

Results: Tomatoes fertilized with Brand A produced an average of 20 tomatoes per plant, while tomatoes fertilized with Brand B produced an average of 10 tomatoes per plant.

You’d want to use Brand A next time you grow tomatoes, right? But what if I told you that plants grown without fertilizer produced an average of 30 tomatoes per plant! Now what will you use on your tomatoes?

Results including control group : Tomatoes which received no fertilizer produced more tomatoes than either brand of fertilizer.

Conclusion: Although Brand A fertilizer produced more tomatoes than Brand B, neither fertilizer should be used because plants grown without fertilizer produced the most tomatoes!

More examples of control groups:

- You observe growth . Does this mean that your spinach is really contaminated? Consider an alternate explanation for growth: the swab, the water, or the plate is contaminated with bacteria. You could use a control group to determine which explanation is true. If you wet one of the swabs and wiped on a nutrient plate, do bacteria grow?

- You don’t observe growth. Does this mean that your spinach is really safe? Consider an alternate explanation for no growth: Salmonella isn’t able to grow on the type of nutrient you used in your plates. You could use a control group to determine which explanation is true. If you wipe a known sample of Salmonella bacteria on the plate, do bacteria grow?

- You see a reduction in disease symptoms: you might expect a reduction in disease symptoms purely because the person knows they are taking a drug so they believe should be getting better. If the group treated with the real drug does not show more a reduction in disease symptoms than the placebo group, the drug doesn’t really work. The placebo group sets a baseline against which the experimental group (treated with the drug) can be compared.

- You don’t see a reduction in disease symptoms: your drug doesn’t work. You don’t need an additional control group for comparison.

- You would want a “placebo feeder”. This would be the same type of feeder, but with no food in it. Birds might visit a feeder just because they are interested in it; an empty feeder would give a baseline level for bird visits.

- You would want a control group where you knew the enzyme would function. This would be a tube where you did not change the pH. You need this control group so you know your enzyme is working: if you didn’t see a reaction in any of the tubes with the pH adjusted, you wouldn’t know if it was because the enzyme wasn’t working at all or because the enzyme just didn’t work at any of your tested pH values.

- You would also want a control group where you knew the enzyme would not function (no enzyme added). You need the negative control group so you can ensure that there is no reaction taking place in the absence of enzyme: if the reaction proceeds without the enzyme, your results are meaningless.

Text adapted from: OpenStax , Biology. OpenStax CNX. May 27, 2016 http://cnx.org/contents/[email protected]:RD6ERYiU@5/The-Process-of-Science .

MHCC Biology 112: Biology for Health Professions Copyright © 2019 by Lisa Bartee is licensed under a Creative Commons Attribution 4.0 International License , except where otherwise noted.

Share This Book

- school Campus Bookshelves

- menu_book Bookshelves

- perm_media Learning Objects

- login Login

- how_to_reg Request Instructor Account

- hub Instructor Commons

- Download Page (PDF)

- Download Full Book (PDF)

- Periodic Table

- Physics Constants

- Scientific Calculator

- Reference & Cite

- Tools expand_more

- Readability

selected template will load here

This action is not available.

1.4: Basic Concepts of Hypothesis Testing

- Last updated

- Save as PDF

- Page ID 1715

- John H. McDonald

- University of Delaware

Learning Objectives

- One of the main goals of statistical hypothesis testing is to estimate the \(P\) value, which is the probability of obtaining the observed results, or something more extreme, if the null hypothesis were true. If the observed results are unlikely under the null hypothesis, reject the null hypothesis.

- Alternatives to this "frequentist" approach to statistics include Bayesian statistics and estimation of effect sizes and confidence intervals.

Introduction

There are different ways of doing statistics. The technique used by the vast majority of biologists, and the technique that most of this handbook describes, is sometimes called "frequentist" or "classical" statistics. It involves testing a null hypothesis by comparing the data you observe in your experiment with the predictions of a null hypothesis. You estimate what the probability would be of obtaining the observed results, or something more extreme, if the null hypothesis were true. If this estimated probability (the \(P\) value) is small enough (below the significance value), then you conclude that it is unlikely that the null hypothesis is true; you reject the null hypothesis and accept an alternative hypothesis.

Many statisticians harshly criticize frequentist statistics, but their criticisms haven't had much effect on the way most biologists do statistics. Here I will outline some of the key concepts used in frequentist statistics, then briefly describe some of the alternatives.

Null Hypothesis

The null hypothesis is a statement that you want to test. In general, the null hypothesis is that things are the same as each other, or the same as a theoretical expectation. For example, if you measure the size of the feet of male and female chickens, the null hypothesis could be that the average foot size in male chickens is the same as the average foot size in female chickens. If you count the number of male and female chickens born to a set of hens, the null hypothesis could be that the ratio of males to females is equal to a theoretical expectation of a \(1:1\) ratio.

The alternative hypothesis is that things are different from each other, or different from a theoretical expectation.

For example, one alternative hypothesis would be that male chickens have a different average foot size than female chickens; another would be that the sex ratio is different from \(1:1\).

Usually, the null hypothesis is boring and the alternative hypothesis is interesting. For example, let's say you feed chocolate to a bunch of chickens, then look at the sex ratio in their offspring. If you get more females than males, it would be a tremendously exciting discovery: it would be a fundamental discovery about the mechanism of sex determination, female chickens are more valuable than male chickens in egg-laying breeds, and you'd be able to publish your result in Science or Nature . Lots of people have spent a lot of time and money trying to change the sex ratio in chickens, and if you're successful, you'll be rich and famous. But if the chocolate doesn't change the sex ratio, it would be an extremely boring result, and you'd have a hard time getting it published in the Eastern Delaware Journal of Chickenology . It's therefore tempting to look for patterns in your data that support the exciting alternative hypothesis. For example, you might look at \(48\) offspring of chocolate-fed chickens and see \(31\) females and only \(17\) males. This looks promising, but before you get all happy and start buying formal wear for the Nobel Prize ceremony, you need to ask "What's the probability of getting a deviation from the null expectation that large, just by chance, if the boring null hypothesis is really true?" Only when that probability is low can you reject the null hypothesis. The goal of statistical hypothesis testing is to estimate the probability of getting your observed results under the null hypothesis.

Biological vs. Statistical Null Hypotheses

It is important to distinguish between biological null and alternative hypotheses and statistical null and alternative hypotheses. "Sexual selection by females has caused male chickens to evolve bigger feet than females" is a biological alternative hypothesis; it says something about biological processes, in this case sexual selection. "Male chickens have a different average foot size than females" is a statistical alternative hypothesis; it says something about the numbers, but nothing about what caused those numbers to be different. The biological null and alternative hypotheses are the first that you should think of, as they describe something interesting about biology; they are two possible answers to the biological question you are interested in ("What affects foot size in chickens?"). The statistical null and alternative hypotheses are statements about the data that should follow from the biological hypotheses: if sexual selection favors bigger feet in male chickens (a biological hypothesis), then the average foot size in male chickens should be larger than the average in females (a statistical hypothesis). If you reject the statistical null hypothesis, you then have to decide whether that's enough evidence that you can reject your biological null hypothesis. For example, if you don't find a significant difference in foot size between male and female chickens, you could conclude "There is no significant evidence that sexual selection has caused male chickens to have bigger feet." If you do find a statistically significant difference in foot size, that might not be enough for you to conclude that sexual selection caused the bigger feet; it might be that males eat more, or that the bigger feet are a developmental byproduct of the roosters' combs, or that males run around more and the exercise makes their feet bigger. When there are multiple biological interpretations of a statistical result, you need to think of additional experiments to test the different possibilities.

Testing the Null Hypothesis

The primary goal of a statistical test is to determine whether an observed data set is so different from what you would expect under the null hypothesis that you should reject the null hypothesis. For example, let's say you are studying sex determination in chickens. For breeds of chickens that are bred to lay lots of eggs, female chicks are more valuable than male chicks, so if you could figure out a way to manipulate the sex ratio, you could make a lot of chicken farmers very happy. You've fed chocolate to a bunch of female chickens (in birds, unlike mammals, the female parent determines the sex of the offspring), and you get \(25\) female chicks and \(23\) male chicks. Anyone would look at those numbers and see that they could easily result from chance; there would be no reason to reject the null hypothesis of a \(1:1\) ratio of females to males. If you got \(47\) females and \(1\) male, most people would look at those numbers and see that they would be extremely unlikely to happen due to luck, if the null hypothesis were true; you would reject the null hypothesis and conclude that chocolate really changed the sex ratio. However, what if you had \(31\) females and \(17\) males? That's definitely more females than males, but is it really so unlikely to occur due to chance that you can reject the null hypothesis? To answer that, you need more than common sense, you need to calculate the probability of getting a deviation that large due to chance.

In the figure above, I used the BINOMDIST function of Excel to calculate the probability of getting each possible number of males, from \(0\) to \(48\), under the null hypothesis that \(0.5\) are male. As you can see, the probability of getting \(17\) males out of \(48\) total chickens is about \(0.015\). That seems like a pretty small probability, doesn't it? However, that's the probability of getting exactly \(17\) males. What you want to know is the probability of getting \(17\) or fewer males. If you were going to accept \(17\) males as evidence that the sex ratio was biased, you would also have accepted \(16\), or \(15\), or \(14\),… males as evidence for a biased sex ratio. You therefore need to add together the probabilities of all these outcomes. The probability of getting \(17\) or fewer males out of \(48\), under the null hypothesis, is \(0.030\). That means that if you had an infinite number of chickens, half males and half females, and you took a bunch of random samples of \(48\) chickens, \(3.0\%\) of the samples would have \(17\) or fewer males.

This number, \(0.030\), is the \(P\) value. It is defined as the probability of getting the observed result, or a more extreme result, if the null hypothesis is true. So "\(P=0.030\)" is a shorthand way of saying "The probability of getting \(17\) or fewer male chickens out of \(48\) total chickens, IF the null hypothesis is true that \(50\%\) of chickens are male, is \(0.030\)."

False Positives vs. False Negatives

After you do a statistical test, you are either going to reject or accept the null hypothesis. Rejecting the null hypothesis means that you conclude that the null hypothesis is not true; in our chicken sex example, you would conclude that the true proportion of male chicks, if you gave chocolate to an infinite number of chicken mothers, would be less than \(50\%\).

When you reject a null hypothesis, there's a chance that you're making a mistake. The null hypothesis might really be true, and it may be that your experimental results deviate from the null hypothesis purely as a result of chance. In a sample of \(48\) chickens, it's possible to get \(17\) male chickens purely by chance; it's even possible (although extremely unlikely) to get \(0\) male and \(48\) female chickens purely by chance, even though the true proportion is \(50\%\) males. This is why we never say we "prove" something in science; there's always a chance, however miniscule, that our data are fooling us and deviate from the null hypothesis purely due to chance. When your data fool you into rejecting the null hypothesis even though it's true, it's called a "false positive," or a "Type I error." So another way of defining the \(P\) value is the probability of getting a false positive like the one you've observed, if the null hypothesis is true.

Another way your data can fool you is when you don't reject the null hypothesis, even though it's not true. If the true proportion of female chicks is \(51\%\), the null hypothesis of a \(50\%\) proportion is not true, but you're unlikely to get a significant difference from the null hypothesis unless you have a huge sample size. Failing to reject the null hypothesis, even though it's not true, is a "false negative" or "Type II error." This is why we never say that our data shows the null hypothesis to be true; all we can say is that we haven't rejected the null hypothesis.

Significance Levels

Does a probability of \(0.030\) mean that you should reject the null hypothesis, and conclude that chocolate really caused a change in the sex ratio? The convention in most biological research is to use a significance level of \(0.05\). This means that if the \(P\) value is less than \(0.05\), you reject the null hypothesis; if \(P\) is greater than or equal to \(0.05\), you don't reject the null hypothesis. There is nothing mathematically magic about \(0.05\), it was chosen rather arbitrarily during the early days of statistics; people could have agreed upon \(0.04\), or \(0.025\), or \(0.071\) as the conventional significance level.

The significance level (also known as the "critical value" or "alpha") you should use depends on the costs of different kinds of errors. With a significance level of \(0.05\), you have a \(5\%\) chance of rejecting the null hypothesis, even if it is true. If you try \(100\) different treatments on your chickens, and none of them really change the sex ratio, \(5\%\) of your experiments will give you data that are significantly different from a \(1:1\) sex ratio, just by chance. In other words, \(5\%\) of your experiments will give you a false positive. If you use a higher significance level than the conventional \(0.05\), such as \(0.10\), you will increase your chance of a false positive to \(0.10\) (therefore increasing your chance of an embarrassingly wrong conclusion), but you will also decrease your chance of a false negative (increasing your chance of detecting a subtle effect). If you use a lower significance level than the conventional \(0.05\), such as \(0.01\), you decrease your chance of an embarrassing false positive, but you also make it less likely that you'll detect a real deviation from the null hypothesis if there is one.

The relative costs of false positives and false negatives, and thus the best \(P\) value to use, will be different for different experiments. If you are screening a bunch of potential sex-ratio-changing treatments and get a false positive, it wouldn't be a big deal; you'd just run a few more tests on that treatment until you were convinced the initial result was a false positive. The cost of a false negative, however, would be that you would miss out on a tremendously valuable discovery. You might therefore set your significance value to \(0.10\) or more for your initial tests. On the other hand, once your sex-ratio-changing treatment is undergoing final trials before being sold to farmers, a false positive could be very expensive; you'd want to be very confident that it really worked. Otherwise, if you sell the chicken farmers a sex-ratio treatment that turns out to not really work (it was a false positive), they'll sue the pants off of you. Therefore, you might want to set your significance level to \(0.01\), or even lower, for your final tests.

The significance level you choose should also depend on how likely you think it is that your alternative hypothesis will be true, a prediction that you make before you do the experiment. This is the foundation of Bayesian statistics, as explained below.

You must choose your significance level before you collect the data, of course. If you choose to use a different significance level than the conventional \(0.05\), people will be skeptical; you must be able to justify your choice. Throughout this handbook, I will always use \(P< 0.05\) as the significance level. If you are doing an experiment where the cost of a false positive is a lot greater or smaller than the cost of a false negative, or an experiment where you think it is unlikely that the alternative hypothesis will be true, you should consider using a different significance level.

One-tailed vs. Two-tailed Probabilities

The probability that was calculated above, \(0.030\), is the probability of getting \(17\) or fewer males out of \(48\). It would be significant, using the conventional \(P< 0.05\)criterion. However, what about the probability of getting \(17\) or fewer females? If your null hypothesis is "The proportion of males is \(17\) or more" and your alternative hypothesis is "The proportion of males is less than \(0.5\)," then you would use the \(P=0.03\) value found by adding the probabilities of getting \(17\) or fewer males. This is called a one-tailed probability, because you are adding the probabilities in only one tail of the distribution shown in the figure. However, if your null hypothesis is "The proportion of males is \(0.5\)", then your alternative hypothesis is "The proportion of males is different from \(0.5\)." In that case, you should add the probability of getting \(17\) or fewer females to the probability of getting \(17\) or fewer males. This is called a two-tailed probability. If you do that with the chicken result, you get \(P=0.06\), which is not quite significant.

You should decide whether to use the one-tailed or two-tailed probability before you collect your data, of course. A one-tailed probability is more powerful, in the sense of having a lower chance of false negatives, but you should only use a one-tailed probability if you really, truly have a firm prediction about which direction of deviation you would consider interesting. In the chicken example, you might be tempted to use a one-tailed probability, because you're only looking for treatments that decrease the proportion of worthless male chickens. But if you accidentally found a treatment that produced \(87\%\) male chickens, would you really publish the result as "The treatment did not cause a significant decrease in the proportion of male chickens"? I hope not. You'd realize that this unexpected result, even though it wasn't what you and your farmer friends wanted, would be very interesting to other people; by leading to discoveries about the fundamental biology of sex-determination in chickens, in might even help you produce more female chickens someday. Any time a deviation in either direction would be interesting, you should use the two-tailed probability. In addition, people are skeptical of one-tailed probabilities, especially if a one-tailed probability is significant and a two-tailed probability would not be significant (as in our chocolate-eating chicken example). Unless you provide a very convincing explanation, people may think you decided to use the one-tailed probability after you saw that the two-tailed probability wasn't quite significant, which would be cheating. It may be easier to always use two-tailed probabilities. For this handbook, I will always use two-tailed probabilities, unless I make it very clear that only one direction of deviation from the null hypothesis would be interesting.

Reporting your results

In the olden days, when people looked up \(P\) values in printed tables, they would report the results of a statistical test as "\(P< 0.05\)", "\(P< 0.01\)", "\(P>0.10\)", etc. Nowadays, almost all computer statistics programs give the exact \(P\) value resulting from a statistical test, such as \(P=0.029\), and that's what you should report in your publications. You will conclude that the results are either significant or they're not significant; they either reject the null hypothesis (if \(P\) is below your pre-determined significance level) or don't reject the null hypothesis (if \(P\) is above your significance level). But other people will want to know if your results are "strongly" significant (\(P\) much less than \(0.05\)), which will give them more confidence in your results than if they were "barely" significant (\(P=0.043\), for example). In addition, other researchers will need the exact \(P\) value if they want to combine your results with others into a meta-analysis.

Computer statistics programs can give somewhat inaccurate \(P\) values when they are very small. Once your \(P\) values get very small, you can just say "\(P< 0.00001\)" or some other impressively small number. You should also give either your raw data, or the test statistic and degrees of freedom, in case anyone wants to calculate your exact \(P\) value.

Effect Sizes and Confidence Intervals

A fairly common criticism of the hypothesis-testing approach to statistics is that the null hypothesis will always be false, if you have a big enough sample size. In the chicken-feet example, critics would argue that if you had an infinite sample size, it is impossible that male chickens would have exactly the same average foot size as female chickens. Therefore, since you know before doing the experiment that the null hypothesis is false, there's no point in testing it.

This criticism only applies to two-tailed tests, where the null hypothesis is "Things are exactly the same" and the alternative is "Things are different." Presumably these critics think it would be okay to do a one-tailed test with a null hypothesis like "Foot length of male chickens is the same as, or less than, that of females," because the null hypothesis that male chickens have smaller feet than females could be true. So if you're worried about this issue, you could think of a two-tailed test, where the null hypothesis is that things are the same, as shorthand for doing two one-tailed tests. A significant rejection of the null hypothesis in a two-tailed test would then be the equivalent of rejecting one of the two one-tailed null hypotheses.

A related criticism is that a significant rejection of a null hypothesis might not be biologically meaningful, if the difference is too small to matter. For example, in the chicken-sex experiment, having a treatment that produced \(49.9\%\) male chicks might be significantly different from \(50\%\), but it wouldn't be enough to make farmers want to buy your treatment. These critics say you should estimate the effect size and put a confidence interval on it, not estimate a \(P\) value. So the goal of your chicken-sex experiment should not be to say "Chocolate gives a proportion of males that is significantly less than \(50\%\) ((\(P=0.015\))" but to say "Chocolate produced \(36.1\%\) males with a \(95\%\) confidence interval of \(25.9\%\) to \(47.4\%\)." For the chicken-feet experiment, you would say something like "The difference between males and females in mean foot size is \(2.45mm\), with a confidence interval on the difference of \(\pm 1.98mm\)."

Estimating effect sizes and confidence intervals is a useful way to summarize your results, and it should usually be part of your data analysis; you'll often want to include confidence intervals in a graph. However, there are a lot of experiments where the goal is to decide a yes/no question, not estimate a number. In the initial tests of chocolate on chicken sex ratio, the goal would be to decide between "It changed the sex ratio" and "It didn't seem to change the sex ratio." Any change in sex ratio that is large enough that you could detect it would be interesting and worth follow-up experiments. While it's true that the difference between \(49.9\%\) and \(50\%\) might not be worth pursuing, you wouldn't do an experiment on enough chickens to detect a difference that small.

Often, the people who claim to avoid hypothesis testing will say something like "the \(95\%\) confidence interval of \(25.9\%\) to \(47.4\%\) does not include \(50\%\), so we conclude that the plant extract significantly changed the sex ratio." This is a clumsy and roundabout form of hypothesis testing, and they might as well admit it and report the \(P\) value.

Bayesian statistics

Another alternative to frequentist statistics is Bayesian statistics. A key difference is that Bayesian statistics requires specifying your best guess of the probability of each possible value of the parameter to be estimated, before the experiment is done. This is known as the "prior probability." So for your chicken-sex experiment, you're trying to estimate the "true" proportion of male chickens that would be born, if you had an infinite number of chickens. You would have to specify how likely you thought it was that the true proportion of male chickens was \(50\%\), or \(51\%\), or \(52\%\), or \(47.3\%\), etc. You would then look at the results of your experiment and use the information to calculate new probabilities that the true proportion of male chickens was \(50\%\), or \(51\%\), or \(52\%\), or \(47.3\%\), etc. (the posterior distribution).

I'll confess that I don't really understand Bayesian statistics, and I apologize for not explaining it well. In particular, I don't understand how people are supposed to come up with a prior distribution for the kinds of experiments that most biologists do. With the exception of systematics, where Bayesian estimation of phylogenies is quite popular and seems to make sense, I haven't seen many research biologists using Bayesian statistics for routine data analysis of simple laboratory experiments. This means that even if the cult-like adherents of Bayesian statistics convinced you that they were right, you would have a difficult time explaining your results to your biologist peers. Statistics is a method of conveying information, and if you're speaking a different language than the people you're talking to, you won't convey much information. So I'll stick with traditional frequentist statistics for this handbook.

Having said that, there's one key concept from Bayesian statistics that is important for all users of statistics to understand. To illustrate it, imagine that you are testing extracts from \(1000\) different tropical plants, trying to find something that will kill beetle larvae. The reality (which you don't know) is that \(500\) of the extracts kill beetle larvae, and \(500\) don't. You do the \(1000\) experiments and do the \(1000\) frequentist statistical tests, and you use the traditional significance level of \(P< 0.05\). The \(500\) plant extracts that really work all give you \(P< 0.05\); these are the true positives. Of the \(500\) extracts that don't work, \(5\%\) of them give you \(P< 0.05\) by chance (this is the meaning of the \(P\) value, after all), so you have \(25\) false positives. So you end up with \(525\) plant extracts that gave you a \(P\) value less than \(0.05\). You'll have to do further experiments to figure out which are the \(25\) false positives and which are the \(500\) true positives, but that's not so bad, since you know that most of them will turn out to be true positives.

Now imagine that you are testing those extracts from \(1000\) different tropical plants to try to find one that will make hair grow. The reality (which you don't know) is that one of the extracts makes hair grow, and the other \(999\) don't. You do the \(1000\) experiments and do the \(1000\) frequentist statistical tests, and you use the traditional significance level of \(P< 0.05\). The one plant extract that really works gives you P <0.05; this is the true positive. But of the \(999\) extracts that don't work, \(5\%\) of them give you \(P< 0.05\) by chance, so you have about \(50\) false positives. You end up with \(51\) \(P\) values less than \(0.05\), but almost all of them are false positives.

Now instead of testing \(1000\) plant extracts, imagine that you are testing just one. If you are testing it to see if it kills beetle larvae, you know (based on everything you know about plant and beetle biology) there's a pretty good chance it will work, so you can be pretty sure that a \(P\) value less than \(0.05\) is a true positive. But if you are testing that one plant extract to see if it grows hair, which you know is very unlikely (based on everything you know about plants and hair), a \(P\) value less than \(0.05\) is almost certainly a false positive. In other words, if you expect that the null hypothesis is probably true, a statistically significant result is probably a false positive. This is sad; the most exciting, amazing, unexpected results in your experiments are probably just your data trying to make you jump to ridiculous conclusions. You should require a much lower \(P\) value to reject a null hypothesis that you think is probably true.

A Bayesian would insist that you put in numbers just how likely you think the null hypothesis and various values of the alternative hypothesis are, before you do the experiment, and I'm not sure how that is supposed to work in practice for most experimental biology. But the general concept is a valuable one: as Carl Sagan summarized it, "Extraordinary claims require extraordinary evidence."

Recommendations

Here are three experiments to illustrate when the different approaches to statistics are appropriate. In the first experiment, you are testing a plant extract on rabbits to see if it will lower their blood pressure. You already know that the plant extract is a diuretic (makes the rabbits pee more) and you already know that diuretics tend to lower blood pressure, so you think there's a good chance it will work. If it does work, you'll do more low-cost animal tests on it before you do expensive, potentially risky human trials. Your prior expectation is that the null hypothesis (that the plant extract has no effect) has a good chance of being false, and the cost of a false positive is fairly low. So you should do frequentist hypothesis testing, with a significance level of \(0.05\).

In the second experiment, you are going to put human volunteers with high blood pressure on a strict low-salt diet and see how much their blood pressure goes down. Everyone will be confined to a hospital for a month and fed either a normal diet, or the same foods with half as much salt. For this experiment, you wouldn't be very interested in the \(P\) value, as based on prior research in animals and humans, you are already quite certain that reducing salt intake will lower blood pressure; you're pretty sure that the null hypothesis that "Salt intake has no effect on blood pressure" is false. Instead, you are very interested to know how much the blood pressure goes down. Reducing salt intake in half is a big deal, and if it only reduces blood pressure by \(1mm\) Hg, the tiny gain in life expectancy wouldn't be worth a lifetime of bland food and obsessive label-reading. If it reduces blood pressure by \(20mm\) with a confidence interval of \(\pm 5mm\), it might be worth it. So you should estimate the effect size (the difference in blood pressure between the diets) and the confidence interval on the difference.

In the third experiment, you are going to put magnetic hats on guinea pigs and see if their blood pressure goes down (relative to guinea pigs wearing the kind of non-magnetic hats that guinea pigs usually wear). This is a really goofy experiment, and you know that it is very unlikely that the magnets will have any effect (it's not impossible—magnets affect the sense of direction of homing pigeons, and maybe guinea pigs have something similar in their brains and maybe it will somehow affect their blood pressure—it just seems really unlikely). You might analyze your results using Bayesian statistics, which will require specifying in numerical terms just how unlikely you think it is that the magnetic hats will work. Or you might use frequentist statistics, but require a \(P\) value much, much lower than \(0.05\) to convince yourself that the effect is real.

- Picture of giant concrete chicken from Sue and Tony's Photo Site.

- Picture of guinea pigs wearing hats from all over the internet; if you know the original photographer, please let me know.

6 Testing

Hypothesis testing is one of the workhorses of science. It is how we can draw conclusions or make decisions based on finite samples of data. For instance, new treatments for a disease are usually approved on the basis of clinical trials that aim to decide whether the treatment has better efficacy compared to the other available options, and an acceptable trade-off of side effects. Such trials are expensive and can take a long time. Therefore, the number of patients we can enroll is limited, and we need to base our inference on a limited sample of observed patient responses. The data are noisy, since a patient’s response depends not only on the treatment, but on many other factors outside of our control. The sample size needs to be large enough to enable us to make a reliable conclusion. On the other hand, it also must not be too large, so that we do not waste precious resources or time, e.g., by making drugs more expensive than necessary, or by denying patients that would benefit from the new drug access to it. The machinery of hypothesis testing was developed largely with such applications in mind, although today it is used much more widely.

In biological data analysis (and in many other fields 1 ) we see hypothesis testing applied to screen thousands or millions of possible hypotheses to find the ones that are worth following up. For instance, researchers screen genetic variants for associations with a phenotype, or gene expression levels for associations with disease. Here, “worthwhile” is often interpreted as “statistically significant”, although the two concepts are clearly not the same. It is probably fair to say that statistical significance is a necessary condition for making a data-driven decision to find something interesting, but it’s clearly not sufficient. In any case, such large-scale association screening is closely related to multiple hypothesis testing.

1 Detecting credit card fraud, email spam detection, \(...\)

6.1 Goals for this Chapter

In this chapter we will:

Familiarize ourselves with the statistical machinery of hypothesis testing, its vocabulary, its purpose, and its strengths and limitations.

Understand what multiple testing means.

See that multiple testing is not a problem – but rather, an opportunity, as it overcomes many of the limitations of single testing.

Understand the false discovery rate.

Learn how to make diagnostic plots.

Use hypothesis weighting to increase the power of our analyses.

6.1.1 Drinking from the firehose

If statistical testing—decision making with uncertainty—seems a hard task when making a single decision, then brace yourself: in genomics, or more generally with “big data”, we need to accomplish it not once, but thousands or millions of times. In Chapter 2 , we saw the example of epitope detection and the challenges from considering not only one, but several positions. Similarly, in whole genome sequencing, we scan every position in the genome for a difference between the DNA library at hand and a reference (or, another library): that’s in the order of six billion tests if we are looking at human data! In genetic or chemical compound screening, we test each of the reagents for an effect in the assay, compared to a control: that’s again tens of thousands, if not millions of tests. In Chapter 8 , we will analyse RNA-Seq data for differential expression by applying a hypothesis test to each of the thousands of genes assayed.

6.1.2 Testing versus classification

Suppose we measured the expression level of a marker gene to decide whether some cells we are studying are from cell type A or B. First, let’s consider that we have no prior assumption, and it’s equally important to us to get the assignment right no matter whether the true cell type is A or B. This is a classification task. We’ll cover classification in Chapter 12 . In this chapter, we consider the asymmetric case: based on what we already know (we could call this our prior knowledge), we lean towards conservatively calling any cell A, unless there is strong enough evidence for the alternative. Or maybe class B is interesting, rare, and/or worthwhile studying further, whereas A is a “catch-all” class for all the boring rest. In such cases, the machinery of hypothesis testing is for us.

Formally, there are many similarities between hypothesis testing and classification. In both cases, we aim to use data to choose between several possible decisions. It is even possible to think of hypothesis testing as a special case of classification. However, these two approaches are geared towards different objectives and underlying assumptions, and when you encounter a statistical decision problem, it is good to keep that in mind in your choice of methodology.

6.1.3 False discovery rate versus p-value: which is more intuitive?

Hypothesis testing has traditionally been taught with p-values first—introducing them as the primal, basic concept. Multiple testing and false discovery rates are then presented as derived, additional ideas. There are good mathematical and practical reasons for doing so, and the rest of this chapter follows this tradition. However, in this prefacing section we would like to point out that it can be more intuitive and more pedagogical to revert the order, and learn about false discovery rates first and think of p-values as an imperfect proxy.

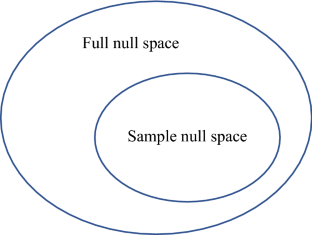

Consider Figure 6.2 , which represents a binary decision problem. Let’s say we call a discovery whenever the summary statistic \(x\) is particularly small, i.e., when it falls to the left of the vertical black bar 2 . Then the false discovery rate 3 (FDR) is simply the fraction of false discoveries among all discoveries, i.e.:

2 This is “without loss of generality”: we could also flip the \(x\) -axis and call something with a high score a discovery.

3 This is a rather informal definition. For more precise definitions, see for instance ( Storey 2003 ; Efron 2010 ) and Section 6.10 .

\[ \text{FDR}=\frac{\text{area shaded in light blue}}{\text{sum of the areas left of the vertical bar (light blue + strong red)}}. \tag{6.1}\]

The FDR depends not only on the position of the decision threshold (the vertical bar), but also on the shape and location of the two distributions, and on their relative sizes. In Figures 6.2 and 6.3 , the overall blue area is twice as big as the overall red area, reflecting the fact that the blue class is (in this example) twice as prevalent (or: a priori, twice as likely) as the red class.

Note that this definition does not require the concept or even the calculation of a p-value. It works for any arbitrarily defined score \(x\) . However, it requires knowledge of three things:

the distribution of \(x\) in the blue class (the blue curve),

the distribution of \(x\) in the red class (the red curve),

the relative sizes of the blue and the red classes.

If we know these, then we are basically done at this point; or we can move on to supervised classification in Chapter 12 , which deals with the extension of Figure 6.2 to multivariate \(x\) .

Very often, however, we do not know all of these, and this is the realm of hypothesis testing. In particular, suppose that one of the two classes (say, the blue one) is easier than the other, and we can figure out its distribution, either from first principles or simulations. We use that fact to transform our score \(x\) to a standardized range between 0 and 1 (see Figures 6.2 — 6.4 ), which we call the p-value . We give the class a fancier name: null hypothesis . This addresses Point 1 in the above list. We do not insist on knowing Point 2 (and we give another fancy name, alternative hypothesis , to the red class). As for Point 3, we can use the conservative upper limit that the null hypothesis is far more prevalent (or: likely) than the alternative and do our calculations under the condition that the null hypothesis is true. This is the traditional approach to hypothesis testing.

Thus, instead of basing our decision-making on the intuitive FDR ( Equation 6.2 ), we base it on the

\[ \text{p-value}=\frac{\text{area shaded in light blue}}{\text{overall blue area}}. \tag{6.2}\]

In other words, the p-value is the precise and often relatively easy-to-compute answer to a rather convoluted question (and perhaps the wrong question). The FDR answers the right question, but requires a lot more input, which we often do not have.

6.1.4 The multiple testing opportunity

Here is the good news about multiple testing: even if we do not know Items 2 and 3 from the bullet list above explicitly for our tests (and perhaps even if we are unsure about Point 1 ( Efron 2010 ) ), we may be able to infer this information from the multiplicity—and thus convert p-values into estimates of the FDR!

Thus, multiple testing tends to make our inference better, and our task simpler. Since we have so much data, we do not only have to rely on abstract assumptions. We can check empirically whether the requirements of the tests are actually met by the data. All this can be incredibly helpful, and we get it because of the multiplicity. So we should think about multiple testing not as a “problem” or a “burden”, but as an opportunity!

6.2 An example: coin tossing

So now let’s dive into hypothesis testing, starting with single testing. To really understand the mechanics, we use one of the simplest possible examples: suppose we are flipping a coin to see if it is fair 4 . We flip the coin 100 times and each time record whether it came up heads or tails. So, we have a record that could look something like this:

4 We don’t look at coin tossing because it’s inherently important, but because it is an easy “model system” (just as we use model systems in biology): everything can be calculated easily, and you do not need a lot of domain knowledge to understand what coin tossing is. All the important concepts come up, and we can apply them, only with more additional details, to other applications.

which we can simulate in R. Let’s assume we are flipping a biased coin, so we set probHead different from 1/2:

Now, if the coin were fair, we would expect half of the time to get heads. Let’s see.

So that is different from 50/50. Suppose we showed the data to a friend without telling them whether the coin is fair, and their prior assumption, i.e., their null hypothesis, is that coins are, by and large, fair. Would the data be strong enough to make them conclude that this coin isn’t fair? They know that random sampling differences are to be expected. To decide, let’s look at the sampling distribution of our test statistic – the total number of heads seen in 100 coin tosses – for a fair coin 5 . As we saw in Chapter 1 , the number, \(k\) , of heads, in \(n\) independent tosses of a coin is

5 We haven’t really defined what we mean be fair – a reasonable definition would be that head and tail are equally likely, and that the outcome of each coin toss does not depend on the previous ones. For more complex applications, nailing down the most suitable null hypothesis can take some thought.

\[ P(K=k\,|\,n, p) = \left(\begin{array}{c}n\\k\end{array}\right) p^k\;(1-p)^{n-k}, \tag{6.3}\]

where \(p\) is the probability of heads (0.5 if we assume a fair coin). We read the left hand side of the above equation as “the probability that the observed value for \(K\) is \(k\) , given the values of \(n\) and \(p\) ”. Statisticians like to make a difference between all the possible values of a statistic and the one that was observed 6 , and we use the upper case \(K\) for the possible values (so \(K\) can be anything between 0 and 100), and the lower case \(k\) for the observed value.

6 In other words, \(K\) is the abstract random variable in our probabilistic model, whereas \(k\) is its realization, that is, a specific data point.

We plot Equation 6.3 in Figure 6.5 ; for good measure, we also mark the observed value numHeads with a vertical blue line.

Suppose we didn’t know about Equation 6.3 . We can still use Monte Carlo simulation to give us something to compare with:

As expected, the most likely number of heads is 50, that is, half the number of coin flips. But we see that other numbers near 50 are also quite likely. How do we quantify whether the observed value, 59, is among those values that we are likely to see from a fair coin, or whether its deviation from the expected value is already large enough for us to conclude with enough confidence that the coin is biased? We divide the set of all possible \(k\) (0 to 100) in two complementary subsets, the rejection region and the region of no rejection. Our choice here 7 is to fill up the rejection region with as many \(k\) as possible while keeping their total probability, assuming the null hypothesis, below some threshold \(\alpha\) (say, 0.05).

7 More on this in Section 6.3.1 .

In the code below, we use the function arrange from the dplyr package to sort the p-values from lowest to highest, then pass the result to mutate , which adds another dataframe column reject that is defined by computing the cumulative sum ( cumsum ) of the p-values and thresholding it against alpha . The logical vector reject therefore marks with TRUE a set of k s whose total probability is less than alpha . These are marked in Figure 6.7 , and we can see that our rejection region is not contiguous – it comprises both the very large and the very small values of k .

The explicit summation over the probabilities is clumsy, we did it here for pedagogic value. For one-dimensional distributions, R provides not only functions for the densities (e.g., dbinom ) but also for the cumulative distribution functions ( pbinom ), which are more precise and faster than cumsum over the probabilities. These should be used in practice.

Do the computations for the rejection region and produce a plot like Figure 6.7 without using dbinom and cumsum , and with using pbinom instead.

We see in Figure 6.7 that the observed value, 59, lies in the grey shaded area, so we would not reject the null hypothesis of a fair coin from these data at a significance level of \(\alpha=0.05\) .

Question 6.1 Does the fact that we don’t reject the null hypothesis mean that the coin is fair?

Question 6.2 Would we have a better chance of detecting that the coin is not fair if we did more coin tosses? How many?

Question 6.3 If we repeated the whole procedure and again tossed the coin 100 times, might we then reject the null hypothesis?

Question 6.4 The rejection region in Figure 6.7 is asymmetric – its left part ends with \(k=40\) , while its right part starts with \(k=61\) . Why is that? Which other ways of defining the rejection region might be useful?

We have just gone through the steps of a binomial test. In fact, this is such a frequent activity in R that it has been wrapped into a single function, and we can compare its output to our results.

6.3 The five steps of hypothesis testing

Let’s summarise the general principles of hypothesis testing:

Decide on the effect that you are interested in, design a suitable experiment or study, pick a data summary function and test statistic .

Set up a null hypothesis , which is a simple, computationally tractable model of reality that lets you compute the null distribution , i.e., the possible outcomes of the test statistic and their probabilities under the assumption that the null hypothesis is true.

Decide on the rejection region , i.e., a subset of possible outcomes whose total probability is small 8 .

Do the experiment and collect the data 9 ; compute the test statistic.

Make a decision: reject the null hypothesis 10 if the test statistic is in the rejection region.

8 More on this in Section 6.3.1 .

9 Or if someone else has already done it, download their data.

10 That is, conclude that it is unlikely to be true.

Note how in this idealized workflow, we make all the important decisions in Steps 1–3 before we have even seen the data. As we already alluded to in the Introduction (Figures 1 and 2 ), this is often not realistic. We will also come back to this question in Section 6.6 .

There was also idealization in our null hypothesis that we used in the example above: we postulated that a fair coin should have a probability of exactly 0.5 (not, say, 0.500001) and that there should be absolutely no dependence between tosses. We did not worry about any possible effects of air drag, elasticity of the material on which the coin falls, and so on. This gave us the advantage that the null hypothesis was computationally tractable, namely, with the binomial distribution. Here, these idealizations may not seem very controversial, but in other situations the trade-off between how tractable and how realistic a null hypothesis is can be more substantial. The problem is that if a null hypothesis is too idealized to start with, rejecting it is not all that interesting. The result may be misleading, and certainly we are wasting our time.

The test statistic in our example was the total number of heads. Suppose we observed 50 tails in a row, and then 50 heads in a row. Our test statistic ignores the order of the outcomes, and we would conclude that this is a perfectly fair coin. However, if we used a different test statistic, say, the number of times we see two tails in a row, we might notice that there is something funny about this coin.

Question 6.5 What is the null distribution of this different test statistic?

Question 6.6 Would a test based on that statistic be generally preferable?

No, while it has more power to detect such correlations between coin tosses, it has less power to detect bias in the outcome.

What we have just done is look at two different classes of alternative hypotheses . The first class of alternatives was that subsequent coin tosses are still independent of each other, but that the probability of heads differed from 0.5. The second one was that the overall probability of heads may still be 0.5, but that subsequent coin tosses were correlated.

Question 6.7 Recall the concept of sufficient statistics from Chapter 1 . Is the total number of heads a sufficient statistic for the binomial distribution? Why might it be a good test statistic for our first class of alternatives, but not for the second?

So let’s remember that we typically have multiple possible choices of test statistic (in principle it could be any numerical summary of the data). Making the right choice is important for getting a test with good power 11 . What the right choice is will depend on what kind of alternatives we expect. This is not always easy to know in advance.

11 See Section 1.4.1 and 6.4 .

Once we have chosen the test statistic we need to compute its null distribution. You can do this either with pencil and paper or by computer simulations. A pencil and paper solution is parametric and leads to a closed form mathematical expression (like Equation 6.3 ), which has the advantage that it holds for a range of model parameters of the null hypothesis (such as \(n\) , \(p\) ). It can also be quickly computed for any specific set of parameters. But it is not always as easy as in the coin tossing example. Sometimes a pencil and paper solution is impossibly difficult to compute. At other times, it may require simplifying assumptions. An example is a null distribution for the \(t\) -statistic (which we will see later in this chapter). We can compute this if we assume that the data are independent and normally distributed: the result is called the \(t\) -distribution. Such modelling assumptions may be more or less realistic. Simulating the null distribution offers a potentially more accurate, more realistic and perhaps even more intuitive approach. The drawback of simulating is that it can take a rather long time, and we need extra work to get a systematic understanding of how varying parameters influence the result. Generally, it is more elegant to use the parametric theory when it applies 12 . When you are in doubt, simulate – or do both.

12 The assumptions don’t need to be exactly true – it is sufficient that the theory’s predictions are an acceptable approximation of the truth.

6.3.1 The rejection region

How to choose the right rejection region for your test? First, what should its size be? That is your choice of the significance level or false positive rate \(\alpha\) , which is the total probability of the test statistic falling into this region even if the null hypothesis is true 13 .

13 Some people at some point in time for a particular set of questions colluded on \(\alpha=0.05\) as being “small”. But there is nothing special about this number, and in any particular case the best choice for a decision threshold may very much depend on context ( Wasserstein and Lazar 2016 ; Altman and Krzywinski 2017 ) .

Given the size, the next question is about its shape. For any given size, there are usually multiple possible shapes. It makes sense to require that the probability of the test statistic falling into the rejection region is as large possible if the alternative hypothesis is true. In other words, we want our test to have high power , or true positive rate.

The criterion that we used in the code for computing the rejection region for Figure 6.7 was to make the region contain as many k as possible. That is because in absence of any information about the alternative distribution, one k is as good as any other, and we maximize their total number.

A consequence of this is that in Figure 6.7 the rejection region is split between the two tails of the distribution. This is because we anticipate that unfair coins could have a bias either towards head or toward tail; we don’t know. If we did know, we would instead concentrate our rejection region all on the appropriate side, e.g., the right tail if we think the bias would be towards head. Such choices are also referred to as two-sided and one-sided tests. More generally, if we have assumptions about the alternative distribution, this can influence our choice of the shape of the rejection region.

6.4 Types of error

Having set out the mechanics of testing, we can assess how well we are doing. Table 6.1 compares reality (whether or not the null hypothesis is in fact true) with our decision whether or not to reject the null hypothesis after we have seen the data.

It is always possible to reduce one of the two error types at the cost of increasing the other one. The real challenge is to find an acceptable trade-off between both of them. This is exemplified in Figure 6.2 . We can always decrease the false positive rate (FPR) by shifting the threshold to the right. We can become more “conservative”. But this happens at the price of higher false negative rate (FNR). Analogously, we can decrease the FNR by shifting the threshold to the left. But then again, this happens at the price of higher FPR. A bit on terminology: the FPR is the same as the probability \(\alpha\) that we mentioned above. \(1 - \alpha\) is also called the specificity of a test. The FNR is sometimes also called \(\beta\) , and \(1 - \beta\) the power , sensitivity or true positive rate of a test.

Question 6.8 At the end of Section 6.3 , we learned about one- and two-sided tests. Why does this distinction exist? Why don’t we always just use the two-sided test, which is sensitive to a larger class of alternatives?

6.5 The t-test

Many experimental measurements are reported as rational numbers, and the simplest comparison we can make is between two groups, say, cells treated with a substance compared to cells that are not. The basic test for such situations is the \(t\) -test. The test statistic is defined as

\[ t = c \; \frac{m_1-m_2}{s}, \tag{6.4}\]

where \(m_1\) and \(m_2\) are the mean of the values in the two groups, \(s\) is the pooled standard deviation and \(c\) is a constant that depends on the sample sizes, i.e., the numbers of observations \(n_1\) and \(n_2\) in the two groups. In formulas 14 ,

14 Everyone should try to remember Equation 6.4 , whereas many people get by with looking up Equation 6.5 when they need it.

\[ \begin{align} m_g &= \frac{1}{n_g} \sum_{i=1}^{n_g} x_{g, i} \quad\quad\quad g=1,2\\ s^2 &= \frac{1}{n_1+n_2-2} \left( \sum_{i=1}^{n_1} \left(x_{1,i} - m_1\right)^2 + \sum_{j=1}^{n_2} \left(x_{2,j} - m_2\right)^2 \right)\\ c &= \sqrt{\frac{n_1n_2}{n_1+n_2}} \end{align} \tag{6.5}\]

where \(x_{g, i}\) is the \(i^{\text{th}}\) data point in the \(g^{\text{th}}\) group. Let’s try this out with the PlantGrowth data from R’s datasets package.

Question 6.9 What do you get from the comparison with trt1 ? What for trt1 versus trt2 ?

Question 6.10 What is the significance of the var.equal = TRUE in the above call to t.test ?

We’ll get back to this in Section 6.5 .

Question 6.11 Rewrite the above call to t.test using the formula interface, i.e., by using the notation weight \(\sim\) group .

To compute the p-value, the t.test function uses the asymptotic theory for the \(t\) -statistic Equation 6.4 ; this theory states that under the null hypothesis of equal means in both groups, the statistic follows a known, mathematical distribution, the so-called \(t\) -distribution with \(n_1+n_2-2\) degrees of freedom. The theory uses additional technical assumptions, namely that the data are independent and come from a normal distribution with the same standard deviation. We could be worried about these assumptions. Clearly they do not hold: weights are always positive, while the normal distribution extends over the whole real axis. The question is whether this deviation from the theoretical assumption makes a real difference. We can use a permutation test to figure this out (we will discuss the idea behind permutation tests in a bit more detail in Section 6.5.1 ).

Question 6.12 Why did we use the absolute value function ( abs ) in the above code?

Plot the (parametric) \(t\) -distribution with the appropriate degrees of freedom.

The \(t\) -test comes in multiple flavors, all of which can be chosen through parameters of the t.test function. What we did above is called a two-sided two-sample unpaired test with equal variance. Two-sided refers to the fact that we were open to reject the null hypothesis if the weight of the treated plants was either larger or smaller than that of the untreated ones.

Two-sample 15 indicates that we compared the means of two groups to each other; another option is to compare the mean of one group against a given, fixed number.

15 It can be confusing that the term sample has a different meaning in statistics than in biology. In biology, a sample is a single specimen on which an assay is performed; in statistics, it is a set of measurements, e.g., the \(n_1\) -tuple \(\left(x_{1,1},...,x_{1,n_1}\right)\) in Equation 6.5 , which can comprise several biological samples. In contexts where this double meaning might create confusion, we refer to the data from a single biological sample as an observation .

Unpaired means that there was no direct 1:1 mapping between the measurements in the two groups. If, on the other hand, the data had been measured on the same plants before and after treatment, then a paired test would be more appropriate, as it looks at the change of weight within each plant, rather than their absolute weights.

Equal variance refers to the way the statistic Equation 6.4 is calculated. That expression is most appropriate if the variances within each group are about the same. If they are very different, an alternative form (Welch’s \(t\) -test) and associated asymptotic theory exist.

The independence assumption . Now let’s try something peculiar: duplicate the data.

Note how the estimates of the group means (and thus, of the difference) are unchanged, but the p-value is now much smaller! We can conclude two things from this:

The power of the \(t\) -test depends on the sample size. Even if the underlying biological differences are the same, a dataset with more observations tends to give more significant results 16 .

The assumption of independence between the measurements is really important. Blatant duplication of the same data is an extreme form of dependence, but to some extent the same thing happens if you mix up different levels of replication. For instance, suppose you had data from 8 plants, but measured the same thing twice on each plant (technical replicates), then pretending that these are now 16 independent measurements is wrong.

16 You can also see this from the way the numbers \(n_1\) and \(n_2\) appear in Equation 6.5 .

6.5.1 Permutation tests

What happened above when we contrasted the outcome of the parametric \(t\) -test with that of the permutation test applied to the \(t\) -statistic? It’s important to realize that these are two different tests, and the similarity of their outcomes is desirable, but coincidental. In the parametric test, the null distribution of the \(t\) -statistic follows from the assumed null distribution of the data, a multivariate normal distribution with unit covariance in the \((n_1+n_2)\) -dimensional space \(\mathbb{R}^{n_1+n_2}\) , and is continuous: the \(t\) -distribution. In contrast, the permutation distribution of our test statistic is discrete, as it is obtained from the finite set of \((n_1+n_2)!\) permutations 17 of the observation labels, from a single instance of the data (the \(n_1+n_2\) observations). All we assume here is that under the null hypothesis, the variables \(X_{1,1},...,X_{1,n_1},X_{2,1},...,X_{2,n_2}\) are exchangeable. Logically, this assumption is implied by that of the parametric test, but is weaker. The permutation test employs the \(t\) -statistic, but not the \(t\) -distribution (nor the normal distribution). The fact that the two tests gave us a very similar result is a consequence of the Central Limit Theorem.

17 Or a random subset, in case we want to save computation time.

6.6 P-value hacking

Let’s go back to the coin tossing example. We did not reject the null hypothesis (that the coin is fair) at a level of 5%—even though we “knew” that it is unfair. After all, probHead was chosen as 0.6 in Section 6.2 . Let’s suppose we now start looking at different test statistics. Perhaps the number of consecutive series of 3 or more heads. Or the number of heads in the first 50 coin flips. And so on. At some point we will find a test that happens to result in a small p-value, even if just by chance (after all, the probability for the p-value to be less than 0.05 under the null hypothesis—fair coin—is one in twenty). We just did what is called p-value hacking 18 ( Head et al. 2015 ) . You see what the problem is: in our zeal to prove our point we tortured the data until some statistic did what we wanted. A related tactic is hypothesis switching or HARKing – hypothesizing after the results are known: we have a dataset, maybe we have invested a lot of time and money into assembling it, so we need results. We come up with lots of different null hypotheses and test statistics, test them, and iterate, until we can report something.

18 http://fivethirtyeight.com/features/science-isnt-broken

These tactics violate the rules of hypothesis testing, as described in Section 6.3 , where we laid out one sequential procedure of choosing the hypothesis and the test, and then collecting the data. But, as we saw in Chapter 2 , such tactics can be tempting in reality. With biological data, we tend to have so many different choices for “normalising” the data, transforming the data, trying to adjust for batch effects, removing outliers, …. The topic is complex and open-ended. Wasserstein and Lazar ( 2016 ) give a readable short summary of the problems with how p-values are used in science, and of some of the misconceptions. They also highlight how p-values can be fruitfully used. The essential message is: be completely transparent about your data, what analyses were tried, and how they were done. Provide the analysis code. Only with such contextual information can a p-value be useful.

Avoid fallacy . Keep in mind that our statistical test is never attempting to prove our null hypothesis is true - we are simply saying whether or not there is evidence for it to be false. If a high p-value were indicative of the truth of the null hypothesis, we could formulate a completely crazy null hypothesis, do an utterly irrelevant experiment, collect a small amount of inconclusive data, find a p-value that would just be a random number between 0 and 1 (and so with some high probability above our threshold \(\alpha\) ) and, whoosh, our hypothesis would be demonstrated!

6.7 Multiple testing

Question 6.13 Look up xkcd cartoon 882 . Why didn’t the newspaper report the results for the other colors?

The quandary illustrated in the cartoon occurs with high-throughput data in biology. And with force! You will be dealing not only with 20 colors of jellybeans, but, say, with 20,000 genes that were tested for differential expression between two conditions, or with 6 billion positions in the genome where a DNA mutation might have happened. So how do we deal with this? Let’s look again at our table relating statistical test results with reality ( Table 6.1 ), this time framing everything in terms of many hypotheses.

\(m\) : total number of tests (and null hypotheses)

\(m_0\) : number of true null hypotheses

\(m-m_0\) : number of false null hypotheses

\(V\) : number of false positives (a measure of type I error)

\(T\) : number of false negatives (a measure of type II error)

\(S\) , \(U\) : number of true positives and true negatives

\(R\) : number of rejections

In the rest of this chapter, we look at different ways of taking care of the type I and II errors.

6.8 The family wise error rate

The family wise error rate (FWER) is the probability that \(V>0\) , i.e., that we make one or more false positive errors. We can compute it as the complement of making no false positive errors at all 19 .

19 Assuming independence.

\[ \begin{align} P(V>0) &= 1 - P(\text{no rejection of any of $m_0$ nulls}) \\ &= 1 - (1 - \alpha)^{m_0} \to 1 \quad\text{as } m_0\to\infty. \end{align} \tag{6.6}\]

For any fixed \(\alpha\) , this probability is appreciable as soon as \(m_0\) is in the order of \(1/\alpha\) , and it tends towards 1 as \(m_0\) becomes larger. This relationship can have serious consequences for experiments like DNA matching, where a large database of potential matches is searched. For example, if there is a one in a million chance that the DNA profiles of two people match by random error, and your DNA is tested against a database of 800000 profiles, then the probability of a random hit with the database (i.e., without you being in it) is: