The following tutorials have been accepted for EMNLP 2018 and will be held as half-day sessions on Wednesday, October 31, 2018 and Thursday, November 1, 2018. Exact timings will be posted later as part of the official program.

October 31, 2018

T1: Joint models for NLP (Morning) Yue Zhang

Joint models have received much research attention in NLP, allowing relevant tasks to share common information while avoiding error propagation in multi-stage pipelines. Several main approaches have been taken by statistical joint modeling, while neural models allow parameter sharing and adversarial training. This tutorial reviews main approaches to joint modeling for both statistical and neural methods.

T2: Graph Formalisms for Meaning Representations (Morning) Adam Lopez and Sorcha Gilroy

In this tutorial we will focus on Hyperedge Replacement Languages (HRL; Drewes et al. 1997), a context-free graph rewriting system. HRL are one of the most popular graph formalisms to be studied in NLP (Chiang et al., 2013; Peng et al., 2015; Bauer and Rambow, 2016). We will discuss HRL by formally defining them, studying several examples, discussing their properties, and providing exercises for the tutorial. While HRL have been used in NLP in the past, there is some speculation that they are more expressive than is necessary for graphs representing natural language (Drewes, 2017). Part of our own research has been exploring what restrictions of HRL could yield languages that are more useful for NLP and also those that have desirable properties for NLP models, such as being closed under intersection.

With that in mind, we also plan to discuss Regular Graph Languages (RGL; Courcelle 1991), a subfamily of HRL which are closed under intersection. The definition of RGL is relatively simple after being introduced to HRL. We do not plan on discussing any proofs of why RGL are also a subfamily of MSOL, as described in Gilroy et al. (2017b). We will briefly mention the other formalisms shown in Figure 1 such as MSOL and DAGAL but this will focus on their properties rather than any formal definitions.

T3: Writing Code for NLP Research (Afternoon) Matt Gardner, Mark Neumann, Joel Grus, and Nicholas Lourie

Doing modern NLP research requires writing code. Good code enables fast prototyping, easy debugging, controlled experiments, and accessible visualizations that help researchers understand what a model is doing. Bad code leads to research that is at best hard to reproduce and extend, and at worst simply incorrect. Indeed, there is a growing recognition of the importance of having good tools to assist good research in our field, as the upcoming workshop on open source software for NLP demonstrates. This tutorial aims to share best practices for writing code for NLP research, drawing on the instructors' experience designing the recently-released AllenNLP toolkit, a PyTorch-based library for deep learning NLP research. We will explain how a library with the right abstractions and components enables better code and better science, using models implemented in AllenNLP as examples. Participants will learn how to write research code in a way that facilitates good science and easy experimentation, regardless of what framework they use.

November 1, 2018

T4: Deep Latent Variable Models of Natural Language (Morning) Alexander Rush, Yoon Kim, and Sam Wiseman

The proposed tutorial will cover deep latent variable models both in the case where exact inference over the latent variables is tractable and when it is not. The former case includes neural extensions of unsupervised tagging and parsing models. Our discussion of the latter case, where inference cannot be performed tractably, will restrict itself to continuous latent variables. In particular, we will discuss recent developments both in neural variational inference (e.g., relating to Variational Auto-encoders) and in implicit density modeling (e.g., relating to Generative Adversarial Networks). We will highlight the challenges of applying these families of methods to NLP problems, and discuss recent successes and best practices.

T5: Standardized Tests as benchmarks for Artificial Intelligence (Morning) Mrinmaya Sachan, Minjoon Seo, Hannaneh Hajishirzi, and Eric Xing

Standardized tests have recently been proposed as replacements to the Turing test as a driver for progress in AI (Clark, 2015). These include tests on understanding passages and stories and answering questions about them (Richardson et al., 2013; Rajpurkar et al., 2016a, inter alia), science question answering (Schoenick et al., 2016, inter alia), algebra word problems (Kushman et al., 2014, inter alia), geometry problems (Seo et al., 2015; Sachan et al., 2016), visual question answering (Antol et al., 2015), etc. Many of these tests require sophisticated understanding of the world, aiming to push the boundaries of AI.

For this tutorial, we broadly categorize these tests into two categories: open domain tests such as reading comprehension and elementary school tests where the goal is to find the support for an answer from the student curriculum, and closed domain tests such as intermediate level math and science tests (algebra, geometry, Newtonian physics problems, etc.). Unlike open domain tests, closed domain tests require the system to have significant domain knowledge and reasoning capabilities. For example, geometry questions typically involve a number of geometry primitives (lines, quadrilaterals, circles, etc.) and require students to use axioms and theorems of geometry (the Pythagorean theorem, alternating angles, etc.) to solve them. These closed domains often have a formal logical basis and the question can be mapped to a formal language by semantic parsing. The formal question representation can then be provided as an input to an expert system to solve the question.

T6: Deep Chit-Chat: Deep Learning for ChatBots (Afternoon) Wei Wu and Rui Yan

The tutorial is based on long-term efforts to build conversational models with deep learning approaches for chatbots. We will summarize the fundamental challenges in modeling open domain dialogues, clarify the difference from modeling goal-oriented dialogues, and give an overview of state-of-the-art methods for open domain conversation, including both retrieval-based and generation-based methods. In addition, our tutorial will cover some new trends in chatbot research, such as how to design a reasonable evaluation system and how to "control" conversations from a chatbot with specific information such as personas, styles, and emotions.


A companion repository for the "Writing code for NLP Research" Tutorial at EMNLP 2018

allenai/writing-code-for-nlp-research-emnlp2018


- Python 81.6%

- Dockerfile 18.4%

Writing Code for (Machine Learning) Research

May 25, 2021

2021 · artificial-intelligence

Writing code is an essential task in much of today’s scientific research. In fields such as astronomy, chemistry, economics, and sociology, scientists are writing code in languages such as MATLAB, Python, R, and Fortran to perform simulations and analyze experimental results. These scientists, however, have minimal training as software engineers. The codebase is often nothing more than a disorganized collection of scripts, sometimes not even under source control. No doubt much contemporary research is bottlenecked by poor code quality. While scientists would be well served to read a software engineering book or two, writing code for business is not like writing code for research.

The problems, constraints, and therefore solutions are different. Business code is expected to run for years, sometimes continuously, serving up to billions of users. The code will be updated throughout its lifetime. Research code is run primarily by its author, secondarily by others who wish to build on the author’s work. Thus, the best research code can be easily adapted into unrelated projects. In contrast, many software engineering design patterns seek to hide implementations behind various interfaces. Business code is expected to run on a variety of standard hardware without change in behavior. Research code often runs in unusual specialized environments such as supercomputers or embedded devices.

I have spent the last 3 years doing machine learning research, where code is both a tool and the artifact being studied. After 3 years, you tend to notice what works and what doesn’t. Here is my advice.

1. Use Python

Python has the most extensive ecosystem of scientific packages and tools, too numerous to list here. Some are widely known, like NumPy (linear algebra) or Pandas (dataframes), but many are field specific, like Astropy (astronomy). Many libraries are Python exclusives, such as the 3 previously mentioned. Others, such as PyTorch or TensorFlow, have frontends in other languages, but these are not as complete as the Python interface. Beyond the deep pool of great packages are tools like Jupyter or the VSCode Python extension that make programming and viewing results easy.

The language itself has a concise syntax that prevents unnecessary keystrokes by the programmer. This dramatically reduces the time needed to complete first drafts of programs. Just compare the length of Hello World programs between Python, C++, and Java—the 3 most common introductory programming languages. Python’s version is only one line compared to the 5+ lines needed by C++ and Java. In C++, printing to the screen requires an include (import) statement 1 . Compared to specialized scientific languages like MATLAB or R, Python has the advantage of being a general purpose programming language. Python can be used for your machine learning or computational biology research, but it can also be used to download files off the internet or set up a website with the same ease.

When should Python not be used? Often for certain applications, certain languages must be used.

- Interactive educational web apps: JavaScript

- Utilizing Nvidia GPU kernels for machine learning: C++ and CUDA

- Mobile apps: Swift for iOS and Java/Kotlin for Android (unless you use a cross-platform UI framework like React Native or Flutter)

- Climate change simulations on supercomputers: Fortran

High performance code, in general, should not be written in Python. Python is an interpreted language: the standard interpreter executes a program step by step at run time rather than compiling it to optimized machine code ahead of time. This precludes a number of optimizations that can be made in compiled languages. Because a compiler can analyze the entire program before it runs, it can perform a number of optimizations to make it run faster or consume less memory. Furthermore, the standard Python interpreter has a Global Interpreter Lock (GIL), which prevents multiple threads from executing Python code in parallel. While there has been discussion about its removal, that seemed unlikely to happen at the time of writing.

Unfortunately, since the GIL exists, other features have grown to depend on the guarantees that it enforces. This makes it hard to remove the GIL without breaking many official and unofficial Python packages and modules.

2. Track Dependencies

Nearly every program relies on code authored by external parties. These are dependencies . Importantly, changes to dependencies can break your code. This is unlikely for many of the older, more popular packages. NumPy is not going to suddenly change how np.array works. But for younger packages, instability should be expected. A machine learning example is torchtext which migrated its datasets from subclassing torchtext.data.Dataset in version 0.8 to subclassing torch.utils.data.Dataset in version 0.9. After upgrading torchtext, much of my NLP code crashed in the process.

The solution is to specify the compatible versions of your dependencies. For Python programs, this can be done in a requirements.txt file. These packages and their versions can be installed in a new environment with a single command. An environment is an isolated set of dependencies which can be swapped in or out for different projects.
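As a rough illustration of what pinning buys you, the sketch below parses `name==version` lines and reports any package whose installed version differs from its pin. The helper names (`parse_pins`, `installed_version`, `find_mismatches`) and the example pins are hypothetical, chosen only for illustration; real requirements files support a richer syntax than this handles.

```python
from importlib import metadata


def parse_pins(requirements_text):
    """Return {package: version} for every 'name==version' line."""
    pins = {}
    for raw_line in requirements_text.splitlines():
        line = raw_line.split("#", 1)[0].strip()  # drop comments and whitespace
        if "==" not in line:
            continue  # skip blank lines and entries without an exact pin
        name, version = line.split("==", 1)
        pins[name.strip().lower()] = version.strip()
    return pins


def installed_version(package):
    """Look up the installed version, or None if the package is absent."""
    try:
        return metadata.version(package)
    except metadata.PackageNotFoundError:
        return None


def find_mismatches(pins, lookup=installed_version):
    """Return {package: (pinned, found)} wherever the two differ."""
    mismatches = {}
    for package, pinned in pins.items():
        found = lookup(package)
        if found != pinned:
            mismatches[package] = (pinned, found)
    return mismatches


# Illustrative pins only; choose the versions your own project was tested with.
EXAMPLE_REQUIREMENTS = """
numpy==1.20.3
torchtext==0.8.1  # pinned below the release that changed the dataset classes
"""

pins = parse_pins(EXAMPLE_REQUIREMENTS)
```

Calling `find_mismatches(pins)` in a fresh environment shows which pins are not yet satisfied; with pip, the pinned set is typically installed using `pip install -r requirements.txt`.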

3. Use Hydra

At least in machine learning, computational experiments may contain a number of hyperparameters 2 that influence the result. Some may be experimental hyperparameters such as the choice of model or learning algorithm which vary for the sake of comparison. Others, such as the learning rate, may need to be tuned to the correct value for the experiment to work. Researchers are interested in the result under the best-case value for these parameters. Tracking which trials used what parameter values can be tricky when launching them in batches across multiple machines. Hydra solves this problem.

Using Hydra is as simple as adding a single decorator to your main function. Hydra will create a config object from a group of YAML files and make it accessible inside main. Hyperparameters can be accessed as object properties: cfg.prop1, cfg.prop2, etc. Parameter values can be overridden on the command line using the syntax prop1=value1 prop2=value2 when the program is launched. This makes it easy to, say, start several experiments using different learning rates. The config object can be passed to different functions and classes as one argument, irrespective of the number of hyperparameters they access.
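To make the override idea concrete without depending on Hydra itself, here is a standard-library sketch of how `key=value` arguments might be merged into a nested configuration. This is not Hydra's implementation or API: `parse_value` and `apply_overrides` are hypothetical names, and Hydra's real override grammar is considerably richer.

```python
import ast
import copy


def parse_value(text):
    """Turn '0.01' into a float, '5' into an int; leave other text as a string."""
    try:
        return ast.literal_eval(text)
    except (ValueError, SyntaxError):
        return text  # e.g. "transformer" is not a Python literal


def apply_overrides(config, overrides):
    """Return a copy of `config` with 'dotted.key=value' overrides applied."""
    result = copy.deepcopy(config)  # never mutate the defaults
    for item in overrides:
        dotted_key, _, raw_value = item.partition("=")
        *parents, leaf = dotted_key.split(".")
        node = result
        for name in parents:
            node = node.setdefault(name, {})  # walk (or create) nested sections
        node[leaf] = parse_value(raw_value)
    return result


# Defaults as they might be loaded from YAML files (values are illustrative).
defaults = {"model": "lstm", "optimizer": {"name": "adam", "lr": 0.001}}

# Equivalent in spirit to launching: python main.py optimizer.lr=0.01 model=transformer
cfg = apply_overrides(defaults, ["optimizer.lr=0.01", "model=transformer"])
```

Because the defaults are copied rather than edited, each launch gets its own resolved configuration, which is what makes it practical to record exactly which values a given trial used.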

Each program run creates a new folder outputs/yyyy-MM-dd/hh-mm-ss (date and time of launch) that becomes the current working directory. Plots, tables, and data saved to relative paths (those not starting with ~, /, or C:/ on Windows) will be placed inside this new folder. Inside, Hydra will save a copy of your config object as config.yaml (within a .hydra subfolder as of Hydra 1.0) and create a log file named after your script, such as main.log. Loggers are automatically configured to write to this file.

4. Document But Don’t Test

Python and most programming languages allow explanatory comments to be placed beside confusing code. A special type of comment is a doc comment, which most languages place above a class/function definition. In Python, these are triple-quoted strings, hence the name docstring, and they go immediately below the class/function signature. Docstrings provide invaluable context to other researchers looking to build on top of your work. Others will need to understand the inner workings of your code before they can modify it. Docstrings also jog the memory when you revisit your code in the future as a stranger.

Docstrings can be formatted however you like, but I prefer to use Google’s docstring style. The first section is reserved for a description of the class/function; succeeding sections provide more information about arguments, return values, exceptions, and properties. If you use VSCode, I recommend installing the Python Docstring Generator extension, which can create a docstring template upon typing """. VSCode will display the docstring when hovering over a function call.
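For readers who have not seen the style, a short function documented in the Google format might look like the following. The function `moving_average` is a made-up example, not something taken from the article's codebase.

```python
def moving_average(values, window):
    """Compute the simple moving average of a sequence.

    Args:
        values: Sequence of numbers to smooth.
        window: Number of consecutive items averaged per output point.

    Returns:
        A list of floats with ``len(values) - window + 1`` entries.

    Raises:
        ValueError: If ``window`` is not between 1 and ``len(values)``.
    """
    if not 1 <= window <= len(values):
        raise ValueError("window must be between 1 and len(values)")
    return [sum(values[i:i + window]) / window
            for i in range(len(values) - window + 1)]
```

The summary line answers "what does this do?", while the Args, Returns, and Raises sections answer the questions a stranger to the code is most likely to ask next.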

While it is important to write docstrings, writing unit/integration tests is a waste of time. Test code calls sections of the source code to check their correctness. Usually, the author of a section also writes its tests. This doubles the effort required to implement a certain feature. That may already be a dealbreaker but, since research is a messy process of diving into the unknown, research code has a short lifetime. There is no point writing tests for code which might be abandoned or revised in a few days. Moreover, the presence of bugs is not necessarily a problem. Research code is often narrow in scope, not designed to work without flaw in every circumstance.

5. Release Your Code

Whenever possible, code should be made public. The code to generate the plots is as important as the plots themselves. If the latter can be included in a publication, the former can be distributed as well. In many cases, such as in machine learning, the code itself is the product of your research: the algorithm that does $X$ or solves $Y$. To withhold the code is like withholding the proof of a theorem. Stating that a statement is proven is not as interesting as the actual mechanics of proving it. Such a central artifact should be shared. There is also a more selfish motive for open sourcing code: other researchers who use it will cite your papers.

- https://da-data.blogspot.com/2016/04/stealing-googles-coding-practices-for.html

- https://github.com/allenai/writing-code-for-nlp-research-emnlp2018/

You can use printf to output to the console, but it is not recommended over using std::cout. ↩

Machine learning models contain a number of parameters which are adjusted during training. The hyper in hyperparameter distinguishes values, like learning rate, which do not change over the course of training from model parameters. ↩


Natural Language Processing for Non-English Text


This LibGuide contains resources for performing analysis on non-English texts using Natural Language Processing methods, including Python scripts to clean, analyze, and visualize text. It is a companion to the GitHub repository located here.

This tutorial is intentionally simple and introductory, and aims to offer users a jumping off point for further exploration of NLP and computational tools for use in text analysis and linguistic research. Due to the varied nature of NLP and text analysis work, there is no one size fits all approach to writing code for these projects; as such, you should be prepared to write your own code when performing any kind of work for your own research. Tasks such as training models for named entity recognition were omitted from this tutorial for simplicity’s sake, but should not be skipped when working on your own project. Notes accompany places in the code where such steps could be performed to help guide you in your own work.

What is Natural Language Processing?

Natural Language Processing (NLP) is a type of Artificial Intelligence. The goal of NLP is to make human, or natural, language understandable to a computer. This is achieved using syntactic and semantic analysis techniques. Though these algorithms are very sophisticated, they do not always yield perfect results. Human languages each have different conventions, and it can be difficult to make these rules machine-understandable. Despite these challenges, the use of NLP in textual analysis can uncover important and interesting information and insights.

This LibGuide was created by Madeline Goebel as part of the UT Libraries' Global Studies Digital Projects Graduate Research Assistantship.

- Last Updated: Aug 29, 2023 12:39 PM

- URL: https://guides.lib.utexas.edu/nlp_non_english

Trending Research

Assisting in Writing Wikipedia-like Articles From Scratch with Large Language Models

stanford-oval/storm • 22 Feb 2024

We study how to apply large language models to write grounded and organized long-form articles from scratch, with comparable breadth and depth to Wikipedia pages.

Mini-Gemini: Mining the Potential of Multi-modality Vision Language Models

We try to narrow the gap by mining the potential of VLMs for better performance and any-to-any workflow from three aspects, i.e., high-resolution visual tokens, high-quality data, and VLM-guided generation.

InstantMesh: Efficient 3D Mesh Generation from a Single Image with Sparse-view Large Reconstruction Models

We present InstantMesh, a feed-forward framework for instant 3D mesh generation from a single image, featuring state-of-the-art generation quality and significant training scalability.

Magic Clothing: Controllable Garment-Driven Image Synthesis

We propose Magic Clothing, a latent diffusion model (LDM)-based network architecture for an unexplored garment-driven image synthesis task.

LLaVA-UHD: an LMM Perceiving Any Aspect Ratio and High-Resolution Images

To address the challenges, we present LLaVA-UHD, a large multimodal model that can efficiently perceive images in any aspect ratio and high resolution.

Megalodon: Efficient LLM Pretraining and Inference with Unlimited Context Length

The quadratic complexity and weak length extrapolation of Transformers limits their ability to scale to long sequences, and while sub-quadratic solutions like linear attention and state space models exist, they empirically underperform Transformers in pretraining efficiency and downstream task accuracy.

Visual Autoregressive Modeling: Scalable Image Generation via Next-Scale Prediction

We present Visual AutoRegressive modeling (VAR), a new generation paradigm that redefines the autoregressive learning on images as coarse-to-fine "next-scale prediction" or "next-resolution prediction", diverging from the standard raster-scan "next-token prediction".

Actions Speak Louder than Words: Trillion-Parameter Sequential Transducers for Generative Recommendations

Large-scale recommendation systems are characterized by their reliance on high cardinality, heterogeneous features and the need to handle tens of billions of user actions on a daily basis.

MyGO: Discrete Modality Information as Fine-Grained Tokens for Multi-modal Knowledge Graph Completion

To overcome their inherent incompleteness, multi-modal knowledge graph completion (MMKGC) aims to discover unobserved knowledge from given MMKGs, leveraging both structural information from the triples and multi-modal information of the entities.

State Space Model for New-Generation Network Alternative to Transformers: A Survey

In this paper, we give the first comprehensive review of these works and also provide experimental comparisons and analysis to better demonstrate the features and advantages of SSM.


NLP Models for Writing Code: Program Synthesis

Copilot, Codex, and AlphaCode: How Good are Computer Programs that Program Computers Now?

Enabled by the rise of transformers in Natural Language Processing (NLP), we’ve seen a flurry of astounding deep learning models for writing code in recent years. Computer programs that can write computer programs, generally known as the program synthesis problem, have been of research interest since at least the late 1960s and early 1970s.

In the 2010s and 2020s, program synthesis research has been re-invigorated by the success of attention-based models in other sequence domains, namely the strategy of pre-training massive attention-based neural models (transformers) with millions or billions of parameters on hundreds of gigabytes of text.

The pre-trained models show an impressive capacity for meta-learning facilitated by their attention mechanisms, and can seemingly adapt to a textual task with only a few examples included in a prompt (referred to as zero-shot to few-shot learning in the research literature ).


Modern Program Synthesis with Deep NLP Models

NLP models can further be trained with specialized datasets to fine-tune performance on specific tasks, with writing code a particularly interesting use case.

GitHub Copilot, billed as "Your AI Pair Programmer," caused no small amount of controversy when it was introduced in June 2021. In large part, this was due to the use of all public GitHub code in the training dataset, including projects with copyleft licenses that, according to some interpretations, may disallow the use of the code for projects like Copilot unless Copilot itself were to be made open source.

Copilot is the result of the relationship between OpenAI and Microsoft, based on a version of GPT-3 trained on code. The version demonstrated by OpenAI and available via their API is called Codex. Formal experiments with Codex are described in Chen et al. 2021.

Not to be outdone, in early 2022 DeepMind upped the stakes with their own program synthesis deep NLP system: AlphaCode .

Here Comes a New Challenger: AlphaCode


Like Codex and Copilot before it, AlphaCode is a large NLP model designed and trained to write code. Rather than trying to build AlphaCode as a productivity tool for software engineers like Copilot, AlphaCode was developed to take on the challenge of human-level performance in competitive programming tasks.

The competitive coding challenges used to train and evaluate AlphaCode (making up the new CodeContests dataset) lie somewhere between the difficulty level of previous datasets and real-world software engineering.

For those unfamiliar with the competitive coding challenge websites, the task is somewhat like a simplified version of test-driven development . Based on a text description and several examples, the challenge is to write a program that passes a set of tests, most of which are hidden from the programmer.

Ideally, the hidden tests should be comprehensive, and passing all tests should be representative of solving the problem, but covering every edge case with unit tests is a difficult problem. An important contribution to the program synthesis field is actually the CodeContests dataset itself, as the DeepMind team made significant efforts to reduce the rate of false positives (tests pass but the problem is unsolved) and slow positives (tests pass but the solution is too slow) by generating additional tests through a process of mutation.

AlphaCode was evaluated on solving competitive coding challenges from the competition website CodeForces, and overall AlphaCode was estimated to perform in the “top 54.3%” of (presumably human) competitive programmers participating in contests, on average.

Note that the metric may be a bit misleading, as it actually equates to performance in the 45.7th percentile. While the ~45th percentile sounds decidedly less impressive, it’s incredible that the AlphaCode system was able to write any algorithms that passed every hidden test at all. But AlphaCode used a very different strategy to solve programming problems than a human would.

While a human competitor might write an algorithm that solves most of the examples and iteratively improve it until it passes all tests, incorporating insights from running early versions of their solution, AlphaCode takes a more broad-based approach by generating many samples per problem and then choosing ~10 to submit.

A big contributor to AlphaCode’s performance on the CodeContests dataset is post-generative filtering and clustering: after generating some 1,000,000 candidate solutions, AlphaCode filters the candidates to remove those that don’t pass the example tests in the problem statement, eliminating around 99% of the candidate population.

The authors mentioned that around 10% of problems have no candidate solutions that pass all example tests at this stage.

The remaining candidates are then winnowed down to 10 or fewer submissions by clustering. In short, they trained another model to generate additional test inputs based on the problem description (but note they do not have valid outputs for these tests).

The remaining candidate solutions, which may number in the 1000s after filtering, are clustered based on their outputs on the generated test inputs. A single candidate from each cluster is selected for submission, in order from largest to smallest clusters. Clusters are sampled more than once if there are fewer than 10 clusters.
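The filter-then-cluster procedure described above can be sketched in a few lines. This is a toy reconstruction from the prose, not DeepMind's code: candidates are modeled as plain Python callables, and the names `safe_run`, `passes_examples`, and `select_submissions` are hypothetical.

```python
from collections import defaultdict


def safe_run(candidate, test_input):
    """Run a candidate and return a hashable summary of what happened."""
    try:
        return repr(candidate(test_input))
    except Exception:
        return "<error>"


def passes_examples(candidate, examples):
    """True if the candidate reproduces every (input, expected) pair."""
    return all(safe_run(candidate, x) == repr(expected) for x, expected in examples)


def select_submissions(candidates, examples, generated_inputs, budget=10):
    """Filter on the example tests, cluster by behavior, pick from big clusters first."""
    survivors = [c for c in candidates if passes_examples(c, examples)]

    # Candidates that agree on every generated input land in the same cluster.
    clusters = defaultdict(list)
    for candidate in survivors:
        signature = tuple(safe_run(candidate, x) for x in generated_inputs)
        clusters[signature].append(candidate)

    # Visit clusters from largest to smallest, taking one candidate per visit;
    # revisit clusters in later rounds if the budget is not yet used up.
    ordered = sorted(clusters.values(), key=len, reverse=True)
    submissions = []
    round_index = 0
    while len(submissions) < budget and any(round_index < len(c) for c in ordered):
        for cluster in ordered:
            if round_index < len(cluster) and len(submissions) < budget:
                submissions.append(cluster[round_index])
        round_index += 1
    return submissions


def abs_builtin(x):
    return abs(x)


def abs_branch(x):
    return x if x >= 0 else -x


def identity(x):  # wrong in general, but agrees with the examples below
    return x


def off_by_one(x):  # fails the examples and is filtered out
    return x + 1


examples = [(1, 1), (2, 2)]      # example tests from the "problem statement"
generated_inputs = [-3, 0, 5]    # extra inputs with no known outputs
chosen = select_submissions(
    [abs_builtin, abs_branch, identity, off_by_one],
    examples, generated_inputs, budget=2)
```

The intuition behind favoring large clusters is that many independently sampled programs agreeing on unseen inputs is weak evidence that their shared behavior is the intended one.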

While the filtering/clustering step is unique and AlphaCode was fine-tuned on the new CodeContests dataset, it was initially trained in much the same way as Codex or Copilot. AlphaCode first underwent pre-training on a large dataset of publicly available code from GitHub (retrieved on July 14, 2021). They trained five variants ranging from 284 million to 41 billion parameters.

In the same spirit as the AlphaGo lineage or Starcraft II-playing AlphaStar , AlphaCode is a research project aiming to develop a system approaching human-level capabilities on a specialized task, but the threshold for valuable utility in program synthesis is somewhat lower.

Approaching the problem from the utility angle are the GPT-3 based Codex and Copilot tools. Codex is the OpenAI variant of GPT-3 trained on a corpus of publicly available code. On the HumanEval dataset released alongside the paper, OpenAI reports that Codex is able to solve just over 70% of problems by generating 100 samples in a “docstring to code” formatted task.

We’ll explore this prompt-programming approach to generating code with Codex by programming John Conway’s Game of Life in collaboration with the model below.

GitHub Copilot takes a code-completion approach and is currently packaged as an extension for Visual Studio, VSCode, Neovim, and JetBrains. According to the Copilot landing page , Copilot successfully re-wrote a respectable 57% of a set of well-tested Python functions from their descriptions in a task similar to the HumanEval datasets.

We will investigate a few realistic use cases for Copilot, such as automatically writing tests, using the private beta Copilot extension for VSCode.

Prompt Programming: Writing Conway’s Game of Life with Codex

In this section, we’ll go through the task of programming a cellular automaton simulator based on John Conway’s Game of Life. In a minor twist, the rules will not be hard-coded and our program should be able to simulate any set of Life-like cellular automata rules, if it works.

Instead of generating 100 examples and choosing the best one (either manually or by running tests), we’ll take an interactive approach. If Codex gives a bad solution, we’ll adjust the prompt to try and guide a better answer, and, if absolutely necessary, we can go in and modify the code to get a working example if Codex fails entirely.

The first step in programming a Life-like CA simulator is to come up with a function for computing neighborhoods. We wrote the following docstring prompt and gave it to code-davinci-001, the largest Codex model in the OpenAI API Playground:

Lines “# PROMPT” and “# GENERATED” are included to give a clear signifier of where the prompt ends.

Given the fairly comprehensive docstring prompt above, how did Codex do? Codex’s first attempt was the following:

That might not bode well for our little experiment. Even after adjusting the top-p hyperparameter to enable more lenient nucleus sampling (and hopefully better diversity), Codex seemed to be stuck on the above non-answer.

Luckily, with just a tiny addition to the docstring prompt, Codex produced a much more promising output.

That’s much better than the first attempt, which fell somewhere between cheeky and useless.

It’s not a great solution, as it introduces an unused variable cell_val and delegates most of its job to another function it just made up, get_neighborhood, but overall this looks like a feasible start.

Next, we wrote a docstring for the get_neighborhood function mentioned above:

This output looks feasible as well, but on inspection, it actually includes an important mistake.

In the loop over neighborhood coordinates, it uses the same coordinates to assign values to the Moore neighborhood as it does to retrieve them from the grid.

We didn’t see a clear way to prompt Codex to avoid the mistake, so we modified the code manually:

It also introduced another function, get_neighborhood_coordinates, to handle the “tricky” part.

We are starting to get the feeling that Codex loves delegating, so next, we wrote a prompt for get_neighborhood_coordinates:

That’s a little more functional depth than we expected (compute_neighborhood calls get_neighborhood, which in turn calls get_neighborhood_coordinates), but it looks like we finally have a set of functions that returns a grid of neighborhood sums.
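For comparison, the same grid of neighborhood sums can be computed without the three-level call chain. The following is a flattened alternative written for illustration, not what Codex generated; it assumes wrap-around edges and that the center cell is excluded from its own sum.

```python
import numpy as np

def compute_neighborhood(grid):
    """Return, for every cell, the sum of its eight Moore neighbors.

    Assumptions: toroidal (wrap-around) edges, center cell not counted.
    """
    total = np.zeros_like(grid)
    for d_row in (-1, 0, 1):
        for d_col in (-1, 0, 1):
            if d_row == 0 and d_col == 0:
                continue  # skip the cell itself
            # Shifting the whole grid lines each neighbor up with its cell.
            total += np.roll(grid, shift=(d_row, d_col), axis=(0, 1))
    return total

# A single live cell contributes 1 to each of its eight neighbors.
grid = np.zeros((5, 5), dtype=int)
grid[2, 2] = 1
sums = compute_neighborhood(grid)
```

Shifting the entire array eight times trades the per-cell Python loops for vectorized numpy operations, which matters once the grid gets large.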

That leaves the update function, and the docstring prompt for that is shown below:

That looks like a pretty reasonable approach to the problem, although we did explicitly suggest an exception be raised if the neighborhood grid contains erroneous values and it is nowhere to be found in the generated output.
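To make the missing check concrete, here is a hand-written sketch of an update step that does raise an exception on invalid neighborhood values. It is not the Codex output; the signature, the birth/survival defaults (Conway's Life, B3/S23) and the choice of ValueError are assumptions.

```python
import numpy as np

def update(grid, neighborhood, birth=(3,), survive=(2, 3)):
    """Apply one step of a Life-like rule given precomputed neighbor sums."""
    # A Moore neighborhood has eight cells, so valid sums lie in 0..8.
    if neighborhood.min() < 0 or neighborhood.max() > 8:
        raise ValueError("neighborhood sums must be between 0 and 8")
    born = (grid == 0) & np.isin(neighborhood, birth)
    stays = (grid == 1) & np.isin(neighborhood, survive)
    return (born | stays).astype(grid.dtype)

# Usage: a horizontal "blinker" becomes vertical after one step.
grid = np.zeros((5, 5), dtype=int)
grid[2, 1:4] = 1
offsets = [(a, b) for a in (-1, 0, 1) for b in (-1, 0, 1) if (a, b) != (0, 0)]
neighbor_sums = sum(np.roll(grid, shift=o, axis=(0, 1)) for o in offsets)
next_grid = update(grid, neighbor_sums)
```

Passing the rule as birth and survival tuples keeps the function general across Life-like automata rather than hard-coding Conway's rule.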

With just a few fixes (manual intervention in the get_neighborhood function and a few tries on some of the prompts), we managed to come up with a perfectly workable Life-like cellular automata simulator.

It’s not a particularly fast implementation, but it is roughly similar in quality to the sort of ‘Hello World’ attempt a programmer might make when getting started with a new language, many examples of which were undoubtedly included in the training dataset.

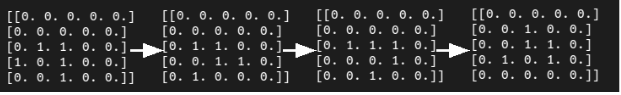

We can visualize the success of the program in the progression of the minimal glider in Conway’s Game of Life.

While we did manage to write a CA simulator as a set of functions, this approach is not a very useful or realistic use case for everyday software engineering. That doesn’t stop startups like SourceAI, essentially a wrapper for the OpenAI Codex API, from advertising their services as aiming to “...give everyone the opportunity to create valuable customized software. We build a self-contained system that can create software at the level of the world's most skilled engineers.”

Interacting with Codex is, however, a potentially useful way to learn or practice programming, especially at the level of coding problems on websites like CodeSignal, CodeForces, or HackerRank.

We’ll next try to assess Codex/Copilot for more realistic use cases of automatically writing tests and docstrings.

Task 2: Writing Tests

For this example, we’ll turn to GitHub Copilot, used via the VSCode extension.

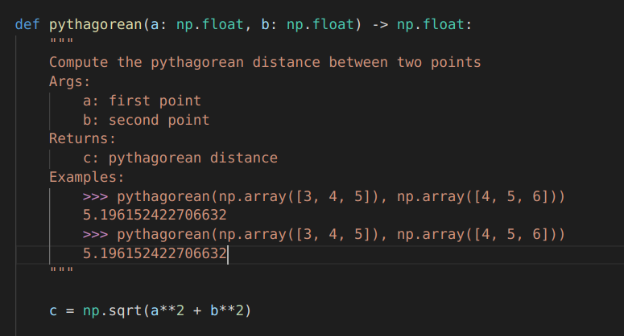

Although perhaps the Pythagorean theorem function is too simple, Copilot suggested a reasonable test and if you run it, it passes. The autocomplete suggestion was able to get both the structure and the numerical content of the test correct.
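The screenshot is not reproduced in this text, so the following is only a sketch of what such a function and test might look like. The names `pythagorean` and `test_pythagorean` and the sample values are assumptions, not Copilot's actual suggestion.

```python
import numpy as np

def pythagorean(a, b):
    """Return hypotenuse lengths c for arrays of side lengths a and b."""
    return np.sqrt(a**2 + b**2)

def test_pythagorean():
    # Three well-known Pythagorean triples: 3-4-5, 5-12-13, 8-15-17.
    a = np.array([3.0, 5.0, 8.0])
    b = np.array([4.0, 12.0, 15.0])
    expected = np.array([5.0, 13.0, 17.0])
    assert np.allclose(pythagorean(a, b), expected)
```

A plain function beginning with `test_` like this can be collected by pytest, or simply called directly.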

What if we wanted to write tests in a more systematic way, using a preferred framework? We write plenty of low-level learning models using numpy and automatic differentiation, so while the next example isn’t 100% real world it is reasonably close.

In this example, we’ll set up a simple multilayer perceptron forward pass, loss function, and gradient function using autograd and numpy, and use the TestCase class from unittest for testing.

While not perfect, Copilot’s suggestions did make a reasonable outline for a test class. If you try to run the code as is, however, none of the tests will execute, let alone pass.

There is a dimension mismatch between the input data and the first weights matrix, and the data types are wrong (all the arrays are integer dtypes) and won’t work with Autograd’s grad function.

Those problems aren’t too tricky to fix. If you replace the first entry in the list of weights matrices with a 3x2 matrix, the forward pass should be able to run. For the grad test to run, you will need to either add decimal points to the numbers in the np.array definitions or define the array data types explicitly.
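As a rough illustration of a test class with both fixes applied, here is a numpy-only sketch. It is not the code from the original post or Copilot's suggestion: the `forward` signature, the ReLU activation, and the layer sizes beyond the 3x2 first matrix are assumptions, and the autograd gradient test is left out.

```python
import unittest
import numpy as np

def forward(x, weights):
    """Forward pass of a small MLP: ReLU hidden layers, linear output layer."""
    for w in weights[:-1]:
        x = np.maximum(x @ w, 0.0)
    return x @ weights[-1]

class TestForward(unittest.TestCase):
    def setUp(self):
        # Float literals keep the arrays at a floating-point dtype.
        self.x = np.array([[1.0, 2.0, 3.0]])  # 1 sample, 3 features
        # First matrix is 3x2 so it matches the 3 input features.
        self.weights = [np.ones((3, 2)), np.ones((2, 1))]

    def test_output_shape(self):
        self.assertEqual(forward(self.x, self.weights).shape, (1, 1))

    def test_output_value(self):
        # [1, 2, 3] @ ones(3, 2) = [6, 6]; ReLU keeps it; [6, 6] @ ones(2, 1) = 12
        np.testing.assert_allclose(forward(self.x, self.weights), [[12.0]])

    def test_output_dtype(self):
        out = forward(self.x, self.weights)
        self.assertTrue(np.issubdtype(out.dtype, np.floating))
```

Working the expected value out by hand in a comment, as in `test_output_value`, is one way to catch suggested tests whose expected numbers merely look plausible.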

With those changes in place, the tests can execute and fail successfully, but the expected values aren’t numerically correct.

Task 3: Automatic Docstrings

A task where Copilot has significant potential is automatically writing documentation, specifically in the form of filling in docstrings for functions that have already been written. This almost works.

For the Pythagorean theorem example, it gets pretty close, but it describes the problem as finding the distance between two points a and b, rather than finding the side length c from side lengths a and b. Unsurprisingly, the example Copilot comes up with in the docstring also doesn’t match the actual behavior of the function, showing a scalar return value instead of an array of values for c.

Copilot’s suggestions for docstrings for the forward MLP function were also close, but not quite right.

Docstring suggested by Copilot

Can a Machine Take My Job Yet?

For software engineers, each new advance in program synthesis may elicit a mix of economic trepidation and cathartic relief.

After all, if computer programs can program computers almost as well as human programmers, doesn’t that mean machines should “take our jobs” someday soon?

By all appearances the answer seems to be “not yet,” but that doesn’t mean that the nature of software engineering is likely to remain static as these tools become more mature. In the future, reasoning successfully with sophisticated autocomplete tools may be as important as using a linter.

Copilot is still being tested in beta release, with a limited number of options on how to use it. Codex, likewise, is available in beta through the OpenAI API. The terms of use and privacy considerations of the pilot (heh) programs do limit the potential use cases of the technology.

Under current privacy policies, any code input to these systems may be used for fine-tuning models and can be reviewed by staff at GitHub/Microsoft or OpenAI. That precludes using Codex or Copilot for sensitive projects.

Copilot does add a lot of utility to the Codex model it’s based on. You can write a framework or outline for the code you want (as in the test-writing example with the unittest framework) and move the cursor to the middle of the outline to get reasonably OK autocomplete suggestions.

For anything more complicated than a simple coding practice problem, it’s unlikely to suggest a completion that’s correct, but it can often create a reasonable outline and save some typing.

It should also be noted that Copilot runs in the cloud, which means it won’t work offline and the autocomplete suggestions are somewhat slow. You can cycle through suggestions by pressing alt + ], but sometimes there are only a few suggestions, or even just one, to choose from.

When it works well, it’s actually good enough to be a little dangerous. The tests suggested in the unittest example and the docstring suggested for the Pythagorean function look correct at first glance, and might pass the scrutiny of a tired software engineer, but when they contain cryptic mistakes, this can only lead to pain later.

All things considered, while Copilot/Codex is more of a toy or a learning tool in its current state, it’s incredible that it works at all. If you come across a waltzing bear, the impressive thing isn’t that the bear dances well, and if you come across an intelligent code completion tool, the impressive thing isn’t that it writes perfect code.

It’s likely that with additional development of the technology, and a fair bit of adaptation on the side of human developers using NLP autocomplete tools, there will be significant killer applications for program synthesis models in the near future.


Title: Detecting AI Generated Text Based on NLP and Machine Learning Approaches

Abstract: Recent advances in natural language processing (NLP) may enable artificial intelligence (AI) models to generate writing that is identical to human written form in the future. This might have profound ethical, legal, and social repercussions. This study aims to address this problem by offering an accurate AI detector model that can differentiate between electronically produced text and human-written text. Our approach includes machine learning methods such as an XGB Classifier, SVM, and BERT-architecture deep learning models. Furthermore, our results show that BERT performs better than previous models in distinguishing information generated by AI from information provided by humans. We provide a comprehensive analysis of the current state of AI-generated text identification in our assessment of pertinent studies. Our testing yielded positive findings, showing that our strategy is successful, with BERT emerging as the most probable answer. We analyze the research's societal implications, highlighting the possible advantages for various industries while addressing sustainability issues pertaining to morality and the environment. The XGB classifier and SVM give 0.84 and 0.81 accuracy in this article, respectively. The greatest accuracy in this research is provided by the BERT model, which provides 0.93 accuracy.


AllenNLP: A Deep Semantic Natural Language Processing Platform. Matt Gardner | Joel Grus | Mark Neumann | Oyvind Tafjord | Pradeep Dasigi | Nelson F. Liu | Matthew Peters | Michael Schmitz | Luke Zettlemoyer. Proceedings of Workshop for NLP Open Source Software (NLP-OSS)

Reasoning about Actions and State Changes by Injecting Commonsense Knowledge. Niket Tandon | Bhavana Dalvi | Joel Grus | Wen-tau Yih | Antoine Bosselut | Peter Clark. Proceedings of the 2018 Conference on Empirical Methods in Natural Language Processing

Writing Code for NLP Research. Matt Gardner | Mark Neumann | Joel Grus | Nicholas Lourie. Proceedings of the 2018 Conference on Empirical Methods in Natural Language Processing: Tutorial Abstracts


This tutorial aims to share best practices for writing code for NLP research, drawing on the instructors' experience designing the recently-released AllenNLP toolkit, a PyTorch-based library for deep learning NLP research. We will explain how a library with the right abstractions and components enables better code and better science, using ...

A companion repository for the "Writing code for NLP Research" Tutorial at EMNLP 2018 - allenai/writing-code-for-nlp-research-emnlp2018

Modern natural language processing (NLP) research requires writing code. Ideally this code would provide a precise definition of the approach, easy repeatability of results, and a basis for extending the research. However, many research codebases bury high-level parameters under implementation details, are challenging to run and debug, and are ...

The outline of a typical NLP paper: 1. Intro: Tell the full story of your paper at a high level. 2. Prior literature: Contextualize your work and provide insights into major relevant themes of the literature as a whole. Use each paper (or theme) as a chance to articulate what is special about your paper.

Exploring Features of NLTK: a. Open the text file for processing: First, we are going to open and read the file which we want to analyze. Figure 11: Small code snippet to open and read the text file and analyze it. Figure 12: Text string file. Next, notice that the data type of the text file read is a String.

Writing Code for (Machine Learning) Research. May 25, 2021. 2021 · ai. Writing code is an essential task in much of today's scientific research. In fields such as astronomy, chemistry, economics, and sociology, scientists are writing code in languages such as MATLAB, Python, R, and Fortran to perform simulations and analyze experimental results.

This tutorial is intentionally simple and introductory, and aims to offer users a jumping off point for further exploration of NLP and computational tools for use in text analysis and linguistic research. Due to the varied nature of NLP and text analysis work, there is no one size fits all approach to writing code for these projects; as such ...

Writing code for NLP research ⚒ Slides of the tutorial on writing code for NLP research by the AllenAI team at EMNLP 2018. Transfer learning 🗣 In case you're interested in slides about transfer learning, I've put the slides of all my talks on one page.

nus-apr/auto-code-rover • 8 Apr 2024. Recent progress in Large Language Models (LLMs) has significantly impacted the development process, where developers can use LLM-based programming assistants to achieve automated coding.

Enabled by the rise of transformers in Natural Language Processing (NLP), we've seen a flurry of astounding deep learning models for writing code in recent years. Computer programs that can write computer programs, generally known as the program synthesis problem, have been of research interest since at least the late 1960s and early 1970s.

Next, call nlp by passing in a string and converting it to a spaCy Doc, a sequence of tokens (line 2 below). The syntactic structure of Doc can be constructed by accessing the following attributes of its component tokens: pos_: The coarse-grained part-of-speech tag. tag_: The fine-grained part-of-speech tag. dep_: The syntactic dependency tag, i.e. the relation between tokens.

NLP Highlights podcast. Matt was an instructor at the Neural Semantic Parsing Tutorial (Gardner et al., 2018a) at ACL 2018, and the Writing Code for NLP Research Tutorial (Gardner et al., 2018c) at EMNLP 2018. Website: https://matt-gardner.github.io/ Sameer Singh is an Assistant Professor of Computer Science at the University of California, Irvine.

The state will save more than $15 million by using technology similar to ChatGPT to give initial scores, reducing the number of human graders needed. The decision caught some educators by surprise.