Why is data validation important in research?

Data collection and analysis are among the most important aspects of conducting research. High-quality data allows researchers to interpret findings accurately, serves as a foundation for future studies, and lends credibility to their research. As such, research is often scrutinized for signs of fraud and data falsification. At times, even unintentional errors in data could be viewed as research misconduct. Hence, data integrity is essential to protect your reputation and the reliability of your study.

Owing to the very nature of research and the sheer volume of data collected in large-scale studies, errors are bound to occur. One way to avoid “bad” or erroneous data is through data validation.

What is data validation?

Data validation is the process of examining the quality and accuracy of the collected data before processing and analysing it. It not only ensures the accuracy but also confirms the completeness of your data. However, data validation is time-consuming and can delay analysis significantly. So, is this step really important?

Importance of data validation

Data validation is important for several aspects of a well-conducted study:

- To ensure a robust dataset: The primary aim of data validation is to ensure an error-free dataset for further analysis. This is especially important if you or other researchers plan to use the dataset for future studies or to train machine learning models.

- To get a clearer picture of the data: Data validation also includes ‘cleaning-up’ of data, i.e., removing inputs that are incomplete, not standardized, or not within the range specified for your study. This process could also shed light on previously unknown patterns in the data and provide additional insights regarding the findings.

- To get accurate results: If your dataset has discrepancies, it will impact the final results and lead to inaccurate interpretations. Data validation can help identify errors, thus increasing the accuracy of your results.

- To mitigate the risk of forming incorrect hypotheses: Only those inferences and hypotheses that are backed by solid data are considered valid. Thus, data validation can help you form logical and reasonable speculations.

- To ensure the legitimacy of your findings: The integrity of your study is often determined by how reproducible it is. Data validation can enhance the reproducibility of your findings.
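The clean-up step mentioned in the list above (removing inputs that are incomplete, not standardized, or out of range) can be sketched in a few lines of Python. This is only an illustration: the field names, the age limits, and the `clean_records` helper are hypothetical and would need to be replaced with the criteria defined for your own study.

```python
from typing import Any

# Hypothetical study limits and required fields; replace with your own protocol.
AGE_RANGE = (18, 65)
REQUIRED_FIELDS = ("participant_id", "age", "country")


def clean_records(records: list[dict[str, Any]]):
    """Split records into (kept, rejected), recording why each rejected row failed."""
    kept, rejected = [], []
    for record in records:
        # Incomplete: a required field is absent, None, or an empty string.
        missing = [f for f in REQUIRED_FIELDS if record.get(f) in (None, "")]
        if missing:
            rejected.append((record, f"missing: {', '.join(missing)}"))
            continue
        # Not standardized: age must be convertible to a number.
        try:
            age = float(record["age"])
        except (TypeError, ValueError):
            rejected.append((record, "age is not numeric"))
            continue
        # Out of range: age must fall within the limits set for the study.
        if not AGE_RANGE[0] <= age <= AGE_RANGE[1]:
            rejected.append((record, "age out of range"))
            continue
        # Standardize the accepted row (numeric age, trimmed upper-case country).
        country = str(record["country"]).strip().upper()
        kept.append({**record, "age": age, "country": country})
    return kept, rejected
```

Keeping the rejected rows together with a reason, rather than silently dropping them, makes it possible to report how many inputs were excluded and why.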

Data validation in research

Data validation is necessary for all types of research. For quantitative research, which utilizes measurable data points, the quality of data can be enhanced by selecting the correct methodology, avoiding biases in the study design, choosing an appropriate sample size and type, and conducting suitable statistical analyses.

In contrast, qualitative research, which includes surveys and behavioural studies, is prone to incomplete or poor-quality data. Survey participants may give inaccurate responses, and observational studies are inherently subjective. Thus, it is extremely important to validate data by incorporating a range of clear and objective questions in surveys, bullet-proofing multiple-choice questions, and setting standard parameters for data collection.

Importantly, for studies that utilize machine learning approaches or mathematical models, validating the data model is as important as validating the data inputs. Thus, automated data validation protocols must rely on appropriate data structures, content, and file types to avoid errors introduced by automation.
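As a rough illustration of an automated check on file type, structure, and content, the sketch below validates a CSV file against a small schema. The column names, the `SCHEMA` mapping, and the `validate_file` helper are assumptions made for this example and are not taken from any particular tool.

```python
import csv
from pathlib import Path

# Hypothetical schema: column name -> converter that raises on bad content.
SCHEMA = {"subject_id": int, "score": float, "group": str}
ALLOWED_SUFFIXES = {".csv"}


def validate_file(path: str) -> list[str]:
    """Return a list of readable problems; an empty list means the file passed."""
    file_path = Path(path)
    # File type: reject anything that is not one of the expected formats.
    if file_path.suffix.lower() not in ALLOWED_SUFFIXES:
        return [f"unsupported file type: {file_path.suffix or '(none)'}"]
    problems = []
    with file_path.open(newline="", encoding="utf-8") as handle:
        reader = csv.DictReader(handle)
        # Structure: every column named in the schema must be present.
        header = reader.fieldnames or []
        missing = [column for column in SCHEMA if column not in header]
        if missing:
            return [f"missing columns: {', '.join(missing)}"]
        # Content: each value must convert to the type the schema expects.
        for line_no, row in enumerate(reader, start=2):  # line 1 is the header
            for column, convert in SCHEMA.items():
                try:
                    convert(row[column])
                except (TypeError, ValueError):
                    problems.append(
                        f"line {line_no}: bad {column!r} value {row[column]!r}"
                    )
    return problems
```

Returning a list of problems instead of raising on the first one lets a pipeline log every issue in a single pass before deciding whether to proceed.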

Although data validation may seem like an unnecessary or time-consuming step, it is critical to the integrity of your study and well worth the effort. To learn more about how to validate data effectively, head over to Elsevier Author Services!

1 Validity and Validation in Research and Assessment

- Published: October 2013

This chapter first sets out the book's purpose, namely to further define validity and to explore the factors that should be considered when evaluating claims from research and assessment. It then discusses validity theory and its philosophical foundations, with connections between the philosophical foundations and specific ways validation is considered in research and measurement. An overview of the subsequent chapters is also presented.

How to Improve Data Validation in Five Steps

Mercatus Working Paper Series

18 Pages Posted: 26 Mar 2021

Danilo Freire

Independent Researcher

Date Written: March 25, 2021

Social scientists are awash with new data sources. Though data preprocessing and storage methods have developed considerably over the past few years, there is little agreement on what constitutes the best set of data validation practices in the social sciences. In this paper I provide five simple steps that can help students and practitioners improve their data validation processes. I discuss how to create testable validation functions, how to increase construct validity, and how to incorporate qualitative knowledge in statistical measurements. I present the concepts according to their level of abstraction, and I provide practical examples on how scholars can add my suggestions to their work.

Keywords: computational social science, computer programming, content validity, data validation, tidy data

JEL Classification: A20, C80, C88
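The abstract above mentions testable validation functions without showing one. The following is a minimal sketch of what such a function might look like in Python, written for illustration only: it is not taken from the paper, and the `validate_percentage` function and its rules are hypothetical.

```python
import unittest


def validate_percentage(value):
    """Return value unchanged if it is a valid percentage, else raise ValueError."""
    # Booleans are a subclass of int in Python, so exclude them explicitly.
    if isinstance(value, bool) or not isinstance(value, (int, float)):
        raise ValueError(f"expected a number, got {type(value).__name__}")
    if value != value:  # NaN is the only float that is not equal to itself
        raise ValueError("value is NaN")
    if not 0 <= value <= 100:
        raise ValueError(f"{value} is outside 0-100")
    return value


class ValidatePercentageTest(unittest.TestCase):
    def test_accepts_boundaries(self):
        self.assertEqual(validate_percentage(0), 0)
        self.assertEqual(validate_percentage(100), 100)

    def test_rejects_out_of_range(self):
        with self.assertRaises(ValueError):
            validate_percentage(100.1)

    def test_rejects_non_numbers(self):
        for bad in ("50", None, True, float("nan")):
            with self.assertRaises(ValueError):
                validate_percentage(bad)
```

Saved in a module, the tests can be run with `python -m unittest`. Writing the rule as a small function with its own tests means the rule itself is checked before it is applied to real data.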


- Open access

- Published: 22 April 2024

Practical approaches in evaluating validation and biases of machine learning applied to mobile health studies

- Johannes Allgaier ORCID: orcid.org/0000-0002-9051-2004 1 &

- Rüdiger Pryss ORCID: orcid.org/0000-0003-1522-785X 1

Communications Medicine volume 4, Article number: 76 (2024)


- Health services

- Medical research

Machine learning (ML) models are evaluated in a test set to estimate model performance after deployment. The design of the test set is therefore of importance because if the data distribution after deployment differs too much, the model performance decreases. At the same time, the data often contains undetected groups. For example, multiple assessments from one user may constitute a group, which is usually the case in mHealth scenarios.

In this work, we evaluate a model’s performance using several cross-validation train-test-split approaches, in some cases deliberately ignoring the groups. By sorting the groups (in our case: Users) by time, we additionally simulate a concept drift scenario for better external validity. For this evaluation, we use 7 longitudinal mHealth datasets, all containing Ecological Momentary Assessments (EMA). Further, we compared the model performance with baseline heuristics, questioning the essential utility of a complex ML model.

Hidden groups in the dataset lead to overestimation of ML performance after deployment. For prediction, a user’s last completed questionnaire is a reasonable heuristic for the next response, and potentially outperforms a complex ML model. Because we included 7 studies, low variance appears to be a more fundamental phenomenon of mHealth datasets.

Conclusions

The way mHealth-based data are generated by EMA leads to questions of user and assessment level and appropriate validation of ML models. Our analysis shows that further research needs to follow to obtain robust ML models. In addition, simple heuristics can be considered as an alternative for ML. Domain experts should be consulted to find potentially hidden groups in the data.

Plain Language Summary

Computational approaches can be used to analyse health-related data collected using mobile applications from thousands of participants. We tested the impact of some participants being represented multiple times or some not being counted properly within the analysis. In this context, we label a multi-represented participant a group. We find that ignoring such groups can lead to false estimation of health-related predictions. In some cases, simpler quantitative methods can outperform complex computational models. This highlights the importance of monitoring and validating results produced by complex computational models and supports the use of simpler analytical methods in their place.


Introduction

When machine learning models are applied to medical data, an important question is whether the model learns subject-specific characteristics (undesired effect) or disease-related characteristics (desired effect) between an input and output. A recent paper by Kunjan et al. 1 describes this very well using the example of classification and EEG disease diagnosis. In the Kunjan et al. paper, this is discussed using different variants of cross-validation, and it is shown that the type of validation can cause extreme differences. Older work has evaluated different cross-validation techniques on datasets, with different recommendations for the optimal number of folds 2 , 3 . We transfer and adapt this idea to mHealth data and the application of machine-learning-based classification, and we raise new questions about it. To this end, we will briefly explain the background. Rudin et al. call for using simple, understandable models rather than complex black box models, which motivates us to evaluate simple heuristics against complex models 4 . The Cross-Industry Standard Process for Data Mining (CRISP-DM) highlights the importance of subject matter experts for becoming familiar with a dataset 5 . In turn, familiarity with the dataset is necessary to detect hidden groups in the dataset. In our mHealth use cases, one app user who fills out several questionnaires constitutes a group.

We have developed numerous applications in mobile health in recent years (e.g. 6 , 7 ) and the issue of disease-related or subject-specific characteristics is particularly pronounced in these applications. mHealth applications very often use the principles of Patient-reported Outcome Measures (PROMs) and/or Ecological Momentary Assessments (EMAs) 8 . The major goal of EMAs is that users record symptoms several times a day over a longer period. As a result, users of an mHealth solution generate longitudinal data with many assessments. Since not all users respond equally frequently in the applications (as shown by many applications that have been in operation for a long time 9 ), the number of assessments per user differs widely. Therefore, when machine learning is applied, the question arises of how the actual learning takes place. In learning, should we group the ratings per user so that a user only appears in either the training set or the test set, which is correct by design? Or can we accept that a user’s ratings appear in both the training and test sets, since users with many ratings have such a high variance in ratings? Finally, individual users may undergo concept drift in the way they answer questions in many assessments over a long period of time. In such a case, the question also arises as to whether it makes sense to use an individual’s ratings separately in the training and test sets.

In this context, we also see another question as relevant that is not given enough attention: What is an appropriate baseline for a machine learning outcome in studies? As mentioned earlier, some mHealth users fill out thousands of assessments, and do so for years. In this case, there may be questions about whether a previous assessment can reliably predict the next one, and the use of machine learning may be poorly targeted.

With respect to the above research questions, we use another component to further promote the results. We selected seven studies from the pool of developed apps that we will use for the analysis of this paper. Since a total of 7 studies are used, a more representative picture should emerge. However, since the studies do not all have the same research goals, classification tasks need to be found per app to make the overall results comparable. The studies also do not all have the same duration. Even though the studies are not always directly comparable, the setting is very promising as the results will show in the end. Before deriving specific research questions against this background, related work and technical background information will be briefly discussed.

This section surveys relevant literature to contextualize our contributions within the broader field of study. Cawley et al. address the question of how to minimize the error in the estimator of performance in ground truth. Using synthetic data sets, they argue that overfitting a model is as problematic as selection bias in the training data 10 . However, they do not address the phenomenon of groups in the data. Refaeilzadeh et al. give an overview of common cross-validation techniques such as leave-one-out, repeated k-fold, or hold-out validation 11 . They discuss pros and cons of each kind and mention an underestimated performance variance for repeated k-fold cross-validation, but they also do not address the problem with (unknown) groups in the dataset 11 . Schratz et al. focus on spatial auto-correlation and spatial cross-validation rather than on groups and splitting approaches 12 . Spatial cross-validation is sometimes also referred to as block cross-validation 13 . They observe large performance differences between the use and non-use of spatial cross-validation. With random sampling of train and test samples, a train and a test sample might be too close to each other in geographical space, which induces a selection bias and thus an overoptimistic estimate of the generalization error. They therefore use spatial cross-validation. We would like to briefly differentiate between space and group . Two samples belong to the same space if they are geographically close to each other 13 . They belong to the same group if a domain expert assigns them to a group. In our work, multiple assessments belonging to one user form a group. Meyer et al. also evaluate using a spatial cross-validation approach, but add a time dimension using Leave-Time-Out cross-validation, where samples belong to one fold if they fall into a specific time range 14 . This leave-time-out approach is like our time-cut approach, which will be introduced in the methods section. Yet, we are not aware of any related approach on mHealth data like the one we are pursuing in this work.

As written at the beginning of the introduction, we want to evaluate how much the model’s performance depends on specific users (syn. subjects, patients, persons ) that are represented several times within our dataset, but with a varying number of assessments per user. From previous work, we already know that so-called power-users, who have many more assessments than most of the other users, have a high impact on the model’s training procedure 15 . We would further like to investigate whether a simple heuristic can outperform complex ensemble methods. Simple heuristics are interesting because they are easy to understand, have a low maintenance requirement, and have low variance, but also generate high bias.

Technically, across the seven studies, we investigate simple heuristics at the user and assessment level and compare them to tree-based non-tuned ML ensembles. Tree-based methods have already proven effective in the literature on the specific mHealth data used, which is why we use only tree-based methods. The reason for not tuning these models is that we want the results to be more comparable across the studies used. With these levels of consideration, we would like to elaborate on the following two research questions: First, what is the variance in performance when using different splitting methods for the train and test set of mHealth data (RQ1)? Second, in which cases is the development, deployment and maintenance of an ML model worthwhile compared to a simple baseline heuristic when used on mHealth data (RQ2)?

The present work compares the performance of a tree-based ensemble method when the data is split on two different levels: user and assessment. It further compares this performance to non-ML approaches that use simple heuristics to predict the target on a user or assessment level. To summarize the major findings: First, ignoring users in datasets during cross-validation leads to an overestimation of the model’s performance and robustness. Second, for some use cases, simple heuristics are as good as complicated tree-based ensemble methods. Within this domain, heuristics are more advantageous if they are trained or applied at the user level. ML models also work at the assessment level. And third, sorting users can simulate concept drift in training if the time span of data collection is large enough. The results in the test set change due to the shuffling of users.

In this section, we first describe how Ecological Momentary Assessments work and how they differentiate from assessments that are collected within a clinical environment. Second, we present the studies and ML use cases for each dataset. Next, we introduce the non-ML baseline heuristics and explain the ML preprocessing steps. Finally, we describe existing train-test-split approaches (cross-validation) and the splitting approaches at the user- and assessment levels.

Ecological momentary assessments

Within this context, ecological means “within the subject’s natural environment”, and momentary means “within this moment” and, ideally, in real time 16 . Assessments collected in research or clinical environments may cause recall bias in the subject’s answers and are not primarily designed to track changes in mood or behavior longitudinally. Ecological Momentary Assessments (EMA) thus increase validity and decrease recall bias. They are suitable for asking users in their daily environment about their state of being, which can change over time, by random or interval time sampling. Combining EMAs and mobile crowdsensing sensor measurements allows for multimodal analyses, which can yield new insights into, e.g., chronic diseases 8 , 15 . The datasets used within this work have EMA in common and are described in the following subsection.

The ML use cases

From ongoing projects of our team, we are constantly collecting mHealth data as well as Ecological Momentary Assessments 6 , 17 , 18 , 19 . To investigate how the machine learning performance varies based on the splits, we wanted different datasets with different use cases. However, to increase comparability between the use cases, we created multi-class classification tasks.

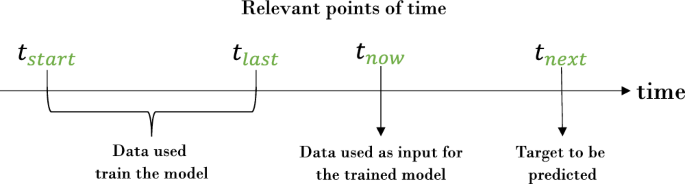

We train each model using historical assessments. The oldest assessment was collected at time \(t_{start}\), the latest historical assessment at time \(t_{last}\). A current assessment is created and collected at time \(t_{now}\), a future assessment at time \(t_{next}\). Depending on the study design, the actual point in time \(t_{next}\) may be a few hours or a few weeks after \(t_{now}\). For each dataset and for each user, we want to predict a feature (synonym: a question of an assessment) at time \(t_{next}\) using the features at time \(t_{now}\). This feature at time \(t_{next}\) is then called the target. For each use case, a model is trained using data between \(t_{start}\) and \(t_{last}\), and given the input data from \(t_{now}\), it predicts the target at \(t_{next}\). Figure 1 gives a schematic representation of the relevant points in time \(t_{start}\), \(t_{last}\), \(t_{now}\), and \(t_{next}\).

At time \(t_{start}\), the first assessment is given; \(t_{last}\) is the last known assessment used for training, whereas \(t_{now}\) is the currently available assessment as input for the classifier, and the target is predicted at time \(t_{next}\).
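The pairing of features at the current assessment with the target at the next assessment can be sketched with pandas. This is a minimal illustration under assumptions: the column names `user_id`, `timestamp` and `distress`, the toy values, and the helper `add_next_target` are hypothetical and not taken from the study's code.

```python
import pandas as pd

# Hypothetical toy data: column names are assumptions, not the study's schema.
df = pd.DataFrame({
    "user_id":   [1, 1, 1, 2, 2],
    "timestamp": pd.to_datetime(
        ["2021-01-01", "2021-01-02", "2021-01-03", "2021-01-01", "2021-01-05"]),
    "distress":  [0.2, 0.4, 0.6, 0.9, 0.7],
})

def add_next_target(frame, value_col, user_col="user_id", time_col="timestamp"):
    """Attach each user's next answer (t_next) to the current row (t_now)."""
    out = frame.sort_values([user_col, time_col]).copy()
    out["target_next"] = out.groupby(user_col)[value_col].shift(-1)
    # A user's last assessment has no successor, so it cannot be a training row.
    return out.dropna(subset=["target_next"]).reset_index(drop=True)

pairs = add_next_target(df, "distress")
```

Shifting within each user keeps one user's answers from leaking into another user's target, which a plain global shift would not guarantee.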

To increase comparability between the approaches, we used the same model architecture with the same pseudo-random initialisation. The model is a Random Forest classifier with 100 trees and the Gini impurity as the splitting criterion. The whole coding was in Python 3.9, using mostly scikit-learn , pandas and Jupyter Notebooks . Details can be found on GitHub in the supplementary material.
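As a rough sketch of the model configuration described above, the following uses scikit-learn's `RandomForestClassifier`. The synthetic data and the seed value 42 are assumptions for illustration; the study's datasets are not public and its actual seed is not stated here.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier

# Synthetic stand-in data with five classes; not the study's data.
X, y = make_classification(n_samples=200, n_features=8, n_informative=5,
                           n_classes=5, n_clusters_per_class=1, random_state=0)

model = RandomForestClassifier(
    n_estimators=100,   # 100 trees, as stated in the text
    criterion="gini",   # Gini impurity as the splitting criterion
    random_state=42,    # fixed seed for reproducibility; the value is an assumption
)
model.fit(X, y)
```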

The included apps and studies in more detail

For all datasets that we used in this study, we have ethical approvals (UNITI No. 20-1936-101, TYT No. 15-101-0204, Corona Check No. 71/20-me, and Corona Health No. 130/20-me). The following section provides an overview of the studies, the available datasets with characteristics, and then describes each use case in more detail. A brief overview is given in Table 1 with baseline statistics for each dataset in Table 2 .

To provide some more background info about the studies: The analyses happen with all apps on the so-called EMA questionnaires (synonym: assessment), i.e., the questionnaires that are filled out multiple times in all apps and the respective studies. This can happen several times a day (e.g., for the tinnitus study TrackYourTinnitus (TYT)) or at weekly intervals (e.g., studies in the Corona Health (CH) app). Nevertheless, the analysis happens on the recurring questionnaires, which collect symptoms over time and in the real environment through unforeseen (i.e., random) notifications.

The TrackYourTinnitus (TYT) dataset has the most filled-out assessments with more than 110,000 questionnaires as of 2022-10-24. The Corona Check (CC) study has the most users. This is because each time an assessment is filled out, a new user can optionally be created. Notably, this app has the largest ratio of non-German users and the youngest user group with the largest standard deviation. The Corona Health (CH) app with its studies Mental health for adults, Mental health for adolescents and Physical health for adults has the highest proportion of German users because it was developed in collaboration with the Robert Koch Institute and was primarily promoted in Germany. Unification of Treatments and Interventions for Tinnitus Patients (UNITI) is a European Union-wide project whose overall aim is to deliver a predictive computational model based on existing and longitudinal data 19 . The dataset from the UNITI randomized controlled trial is described by Simoes et al. 20 .

TrackYourTinnitus (TYT)

With this app, it is possible to record the individual fluctuations in tinnitus perception. With the help of a mobile device, users can systematically measure the fluctuations of their tinnitus. Via the TYT website or the app, users can also view the progress of their own data and, if necessary, discuss it with their physician.

The ML task at hand is a classification task with target variable Tinnitus distress at time \(t_{now}\) and the questions from the daily questionnaire as the features of the problem. The target’s values range in [0, 1] on a continuous scale. To make it a classification task, we created bins with a step size of 0.2, resulting in 5 classes. The features are perception , loudness , and stressfulness of tinnitus, as well as the current mood , arousal and stress level of a user, the concentration level while filling out the questionnaire, and perception of the worst tinnitus symptom . A detailed description of the features is given in previous work 21 . Of note, the time delta between two assessments of one user at \(t_{next}\) and \(t_{now}\) varies between users. Its median value is 11 hours.
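The binning of a continuous target in [0, 1] into five classes with step size 0.2 might look as follows. This is a sketch, not the authors' code: the helper name `bin_target` is hypothetical, and the boundary convention (a value of exactly 0.2 falls into the first class) is an assumption, since the text does not say on which side the bin edges are closed.

```python
import numpy as np
import pandas as pd

def bin_target(values, n_classes=5):
    """Map continuous answers in [0, 1] to integer classes 0..n_classes-1."""
    edges = np.linspace(0.0, 1.0, n_classes + 1)   # 0.0, 0.2, ..., 1.0
    # include_lowest keeps 0.0 in the first bin; labels=False returns bin indices.
    return pd.cut(pd.Series(values), bins=edges, labels=False, include_lowest=True)

classes = bin_target([0.0, 0.1, 0.25, 0.5, 0.99, 1.0])
```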

Unification of Treatments and Interventions for Tinnitus Patients (UNITI)

The overall goal of UNITI is to treat the heterogeneity of tinnitus patients on an individual basis. This requires understanding more about the patient-specific symptoms that are captured by EMA in real time.

The use case we created at UNITI is like that of TYT. The target variable encumbrance, coded as cumberness , which was also continuously recorded, was divided into an ordinal scale from 0 to 1 in 5 steps. Features also include momentary assessments of the user during completion, such as jawbone, loudness, movement, stress, emotion , and questions about momentary tinnitus. The data was collected using our mobile apps 7 . Of note, the median time gap between two assessments of a user is 24 hours on average.

Corona Check (CC)

At the beginning of the COVID-19 pandemic, it was not easy to get initial feedback about an infection, given the lack of knowledge about the novel virus and the absence of widely available tests. To assist all citizens in this regard, we launched the mobile health app Corona Check together with the Bavarian State Office for Health and Food Safety 22 .

The Corona Check app predicts whether a user has a Covid infection based on a list of given symptoms 23 . It was developed early in the pandemic in 2020 and helped people get a quick estimate of an infection without an antigen test. The target variable has four classes: First, “suspected coronavirus (COVID-19) case”, second, “symptoms, but no known contact with confirmed corona case”, third, “contact with confirmed corona case, but currently no symptoms”, and last, “neither symptoms nor contact”.

The features are a list of Boolean variables, which were known at this time to be typically related to a Covid infection, such as fever, a sore throat, a runny nose, cough, loss of smell, loss of taste, shortness of breath, headache, muscle pain, diarrhea, and general weakness. Depending on the answers given by a user, the application programming interface returned one of the classes. The median time gap between two assessments of the same user is 8 hours on average, with a much larger standard deviation of 24.6 days.

Corona Health ∣ Mental health for adults (CHA)

The last four use cases are all derived from a bigger Covid-related mHealth project called Corona Health 6 , 24 . The app was developed in collaboration with the Robert Koch Institute and was primarily promoted in Germany. It includes several studies about the mental or physical health, or the stress level, of a user. A user can download the app and then sign up for a study. He or she will then receive a one-time baseline questionnaire, followed by recurring follow-ups with time gaps that vary between studies. The follow-up assessment of CHA has a total of 159 questions including a full PHQ9 questionnaire 25 . We then used the nine questions of PHQ9 as features at \(t_{now}\) to predict the level of depression for this user at \(t_{next}\). Depression levels are ordinally scaled from None to Severe in a total of 5 classes. The median time gap between two assessments of the same user is 7.5 days. That is, the models predict the future in this time interval.

Corona Health ∣ Mental health for adolescents (CHY)

Similar to the adult cohort, the mental health of adolescents during the pandemic and its lock-downs is also captured by our app using EMA.

A lightweight version of the mental health questionnaire for adults was also offered to adolescents. However, this did not include a full PHQ9 questionnaire, so we created a different use case. The target variable to be classified on a 4-level ordinal scale is perceived dejection coming from the PHQ instruments; features are a subset of quality of life assessments and PHQ questions, such as concernment, tremor, comfort, leisure quality, lethargy, prostration, and irregular sleep. For this study, the median time gap between two follow-up assessments is 7.3 days.

Corona Health ∣ Physical health for adults (CHP)

Analogous to the mental health of adults, this study aims to track how the physical health of adults changes during the pandemic period.

Adults had the option to sign up for a study with recurring assessments asking about their physical health. The target variable to be classified asks about the constraints in everyday life that arise due to physical pain at \(t_{next}\). The features for this use case include aspects like sport, nutrition, and pain at \(t_{now}\). The median time gap between two assessments of the same user is 14.0 days.

Corona Health ∣ Stress (CHS)

This additional study within the Corona Health app asks users about their stress level on a weekly basis. Both features and target are assessed on a five-level ordinal scale from never to very often . The target asks for the ability to manage stress; features include the first nine questions of the perceived stress scale instrument 26 . The median time gap between two assessments of the same user is 7.0 days on average.

Baseline heuristics instead of complex ML models?

We also want to compare the ML approaches with a baseline heuristic ( synonym: Baseline model ). A baseline heuristic can be a simple ML model like a linear regression or a small Decision Tree, or alternatively, depending on the use case, it could also be a simple statement like “The next value equals the last one”. The typical approach for improving ML models is to estimate the generalization error of the model on a benchmark data set and compare it to a baseline heuristic. However, it is often not clear which baseline heuristic to consider: The same model architecture as the benchmark model, but without tuned hyperparameters? A simple, intrinsically explainable model with or without hyperparameter tuning? A random guess? A naive guess, in which the majority class is predicted? Since we have approaches on a user level (i.e., we consider users when splitting) and on an assessment level (i.e., we ignore users when splitting), we should also create baseline heuristics on both levels. We additionally account for within-user variance in Ecological Momentary Assessments by averaging a user’s previously known assessments. Previously known here means that we calculate the mode or median of all assessments of a user that are older than the given timestamp. In total, this leads to four baseline heuristics (user-level latest, user-level average, assessment-level latest, assessment-level average) that do not use any machine learning but simple heuristics. On the assessment level, the latest known target or the mean of all known targets so far is taken to predict the next target, regardless of the user id of this assessment. On the user level, either the last known, or median, or mode value of this user is taken to predict the target. This, in turn, leads to a cold-start problem for users that appear for the first time in a dataset. In this case, either the last known, or mode, or median of all assessments that are known so far is taken to predict the target.
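Two of the four heuristics, assessment-level latest and user-level latest with the cold-start fallback, can be sketched in a few lines of pandas. The toy data, the column names and both function names are hypothetical; the average variants would replace the last value with a running median or mode of the earlier targets.

```python
import pandas as pd

# Hypothetical toy data, already sorted by time; column names are assumptions.
df = pd.DataFrame({
    "user_id": ["a", "b", "a", "b", "c"],
    "target":  [1, 3, 2, 3, 0],
})

def assessment_level_latest(frame):
    """Predict each target with the previous row's target, ignoring user ids."""
    return frame["target"].shift(1)

def user_level_latest(frame):
    """Predict each target with the same user's previous target.

    Cold start: a user's first assessment falls back to the latest target
    known from any user.
    """
    own_last = frame.groupby("user_id")["target"].shift(1)
    return own_last.fillna(assessment_level_latest(frame))

df["pred_assessment"] = assessment_level_latest(df)
df["pred_user"] = user_level_latest(df)
```

The very first row of the dataset has no history at all, so both heuristics leave it without a prediction.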

ML preprocessing

Before the data and approaches could be compared, it was necessary to homogenize them. For all approaches to work on all data sets, at least the following information is necessary: assessment_id, user_id, timestamp, features, and the target. We did not include any other information, such as GPS data or additional answers to questions of the assessment, in the ML pipeline. Additionally, targets that were collected on a continuous scale had to be binned into an ordinal scale of five classes. For easier interpretation and readability of the outputs, we also created label encodings for each target. To keep the pre-processing consistent, we created helper utilities in Python so that the same function was applied to each dataset. For missing values, we created a user-wise missing value treatment. More precisely, if a user skipped a question in an assessment, we filled the missing value with the mean or mode ( mode = most common value) of all other answers of this user to this question. If a user had only one assessment, we filled it with the overall mean for this question.
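A minimal sketch of such a user-wise missing value treatment is shown below for a numeric question, using the mean. It assumes that a gap is filled from the same user's other answers to the same question; the column name `mood` and the helper `impute_user_wise` are hypothetical.

```python
import numpy as np
import pandas as pd

# Hypothetical toy data; "mood" stands in for any numeric question.
df = pd.DataFrame({
    "user_id": [1, 1, 1, 2, 3, 3],
    "mood":    [0.2, np.nan, 0.6, np.nan, 0.8, 1.0],
})

def impute_user_wise(frame, col, user_col="user_id"):
    """Fill gaps with the user's own mean, then with the overall mean."""
    out = frame.copy()
    user_mean = out.groupby(user_col)[col].transform("mean")
    out[col] = out[col].fillna(user_mean)
    # Users without any other answer (e.g. a single assessment) are still NaN here.
    out[col] = out[col].fillna(frame[col].mean())
    return out

filled = impute_user_wise(df, "mood")
```

For categorical questions, the same pattern would use the most common value instead of the mean.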

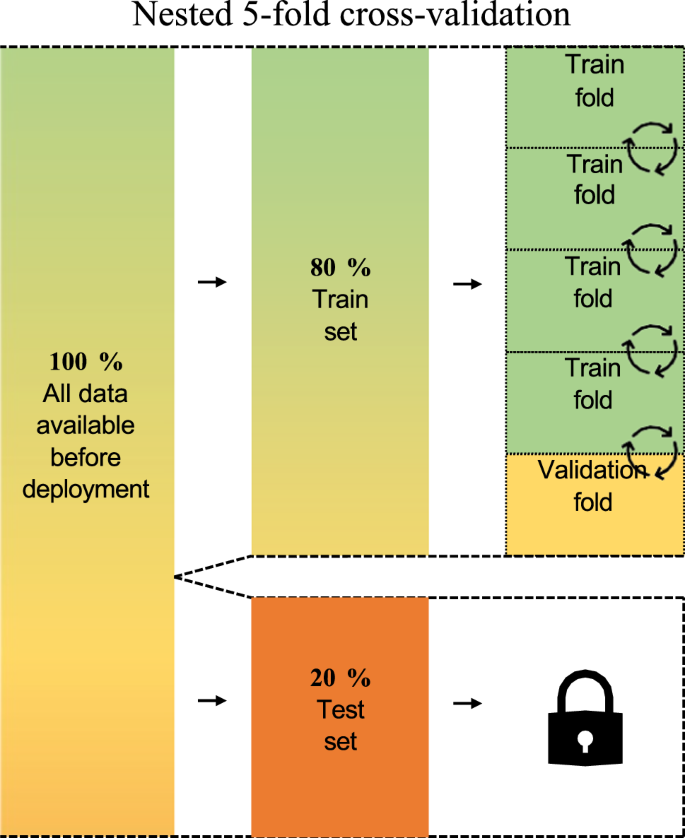

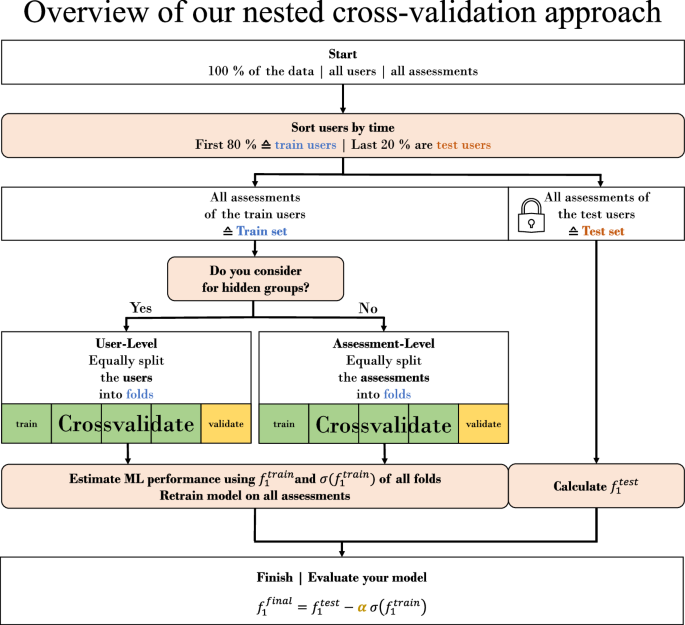

For each dataset and for each script, we set random states and seeds to enhance reproducibility. For the outer validation set, we assigned the first 80 % of all users that signed up for a study to the train set and the latest 20 % to the test set. To ensure comparability, the test users were the same for all approaches. To simulate a deployment scenario where new users join the study, we did not shuffle the users. This also adds potential concept drift from the train to the test set and thus improves the simulation quality.
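The outer split by sign-up order could be sketched as follows. As an assumption, a user's first recorded assessment is used as a proxy for the sign-up time; the helper `split_users_by_signup` and the column names are hypothetical.

```python
import pandas as pd

def split_users_by_signup(frame, train_share=0.8,
                          user_col="user_id", time_col="timestamp"):
    """Assign the earliest users to train and the latest users to test."""
    first_seen = frame.groupby(user_col)[time_col].min().sort_values()
    n_train = int(len(first_seen) * train_share)
    train_users = set(first_seen.index[:n_train])
    is_train = frame[user_col].isin(train_users)
    return frame[is_train], frame[~is_train]

# Hypothetical toy data: ten users, two assessments each.
df = pd.DataFrame({
    "user_id":   [u for u in range(10) for _ in range(2)],
    "timestamp": pd.date_range("2021-01-01", periods=20, freq="D"),
})
train, test = split_users_by_signup(df)
```

Because the split is made on users rather than rows, no user contributes assessments to both sets.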

For the cross-validation within the training set, which we call internal validation, we chose a total of 5 folds with 1 validation fold. We then applied the four baseline heuristics (on user level and assessment level with either latest target or average target as prediction) to calculate the within-train-set performance standard deviation and the mean of the weighted F1 scores for each train fold. The mean and standard deviation of the weighted F1 score are then the estimator of the performance of our model in the test set.

We call one approach superior to another if the final score is higher. The final score to evaluate an approach is calculated as:

\({f}_{1}^{final}={f}_{1}^{test}-\alpha \,\sigma ({f}_{1}^{train})\)

If the standard deviation between the folds during training is large, the final score is lower. The test set must not contain any selection bias against the underlying population. The pre-factor α of the standard deviation is another hyperparameter. The more important model robustness is for the use case, the higher α should be set.
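The effect of the robustness penalty can be illustrated with a small function. It assumes the final score is the test score minus α times the standard deviation of the training-fold scores, which is how the surrounding text describes it; the default `alpha=0.5` and the example scores are assumptions chosen for illustration only.

```python
import numpy as np

def final_score(f1_test, f1_train_folds, alpha=0.5):
    """Penalise the test score by the spread of the training-fold scores."""
    return f1_test - alpha * np.std(f1_train_folds)

# Same test score, but different stability across the five folds.
stable = final_score(0.70, [0.70, 0.71, 0.69, 0.70, 0.70])
unstable = final_score(0.70, [0.50, 0.90, 0.60, 0.80, 0.70])
```

With identical test scores, the approach whose fold scores fluctuate more ends up with the lower final score.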

Existing train-test-split approaches

Within cross-validation, there exist several approaches on how to split up the data into folds and validate them, such as the k -fold approach with k as the number of folds in the training set. Here, k − 1 folds form the training folds and one fold is the validation fold 27 . One can then calculate k performance scores and their standard deviation to get an estimator for the performance of the model in the test set, which itself is an estimator for the model’s performance after deployment (see also Fig. 2 ).

Schematic visualisation of the steps required to perform a k -fold cross-validation, here with k = 5.

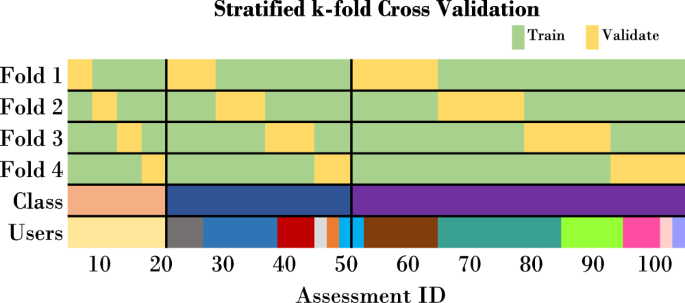

In addition, there exist the following strategies: First, (repeated) stratified k-fold, in which the target distribution is retained in each fold, which can also be seen in Fig. 3 . After shuffling the samples, the stratified split can be repeated 3 . Second, leave-one-out cross-validation 28 , in which the validation fold contains only one sample while the model has been trained on all other samples. And third, leave-p-out cross-validation, in which \(\binom{n}{p}\) train-test pairs are created, where n is the number of assessments (synonym: samples) 29 .
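To give a feel for how quickly the number of leave-p-out pairs grows, the count can be checked against scikit-learn's `LeavePOut` splitter. The six dummy samples are an assumption for illustration.

```python
from math import comb

import numpy as np
from sklearn.model_selection import LeavePOut

X = np.zeros((6, 1))                       # six dummy samples (assessments)
n_pairs = LeavePOut(p=2).get_n_splits(X)   # number of train-test pairs
expected = comb(6, 2)                      # binomial coefficient "6 choose 2"
```

For n = 6 and p = 2 this gives 15 pairs; for datasets with tens of thousands of assessments the count becomes impractically large.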

While this approach retains the class distribution in each fold, it still ignores user groups. Each color represents a different class or user id.

These approaches, however, do not always focus on samples that might belong to our mHealth data peculiarities. To be more specific, they do not account for users (syn. groups, subjects) that generate daily assessments (syn. samples) with a high variance.
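The difference between a stratified split that ignores users and a grouped split can be made visible by counting users that end up on both sides of a fold. This sketch uses scikit-learn's `StratifiedKFold` and `GroupKFold` on synthetic data; the data sizes and the helper `shared_users` are assumptions for illustration.

```python
import numpy as np
from sklearn.model_selection import GroupKFold, StratifiedKFold

rng = np.random.default_rng(0)
n_users, per_user = 20, 10
groups = np.repeat(np.arange(n_users), per_user)   # user id per assessment
X = rng.normal(size=(n_users * per_user, 3))
y = rng.integers(0, 5, size=n_users * per_user)

def shared_users(splitter, **split_kwargs):
    """Count users that occur in both train and validation fold, per split."""
    counts = []
    for train_idx, val_idx in splitter.split(X, y, **split_kwargs):
        overlap = set(groups[train_idx]) & set(groups[val_idx])
        counts.append(len(overlap))
    return counts

leaky = shared_users(StratifiedKFold(n_splits=5, shuffle=True, random_state=0))
grouped = shared_users(GroupKFold(n_splits=5), groups=groups)
```

In the grouped variant every count is zero, whereas the stratified variant places the same users in training and validation folds.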

Splitting approaches related to EMA

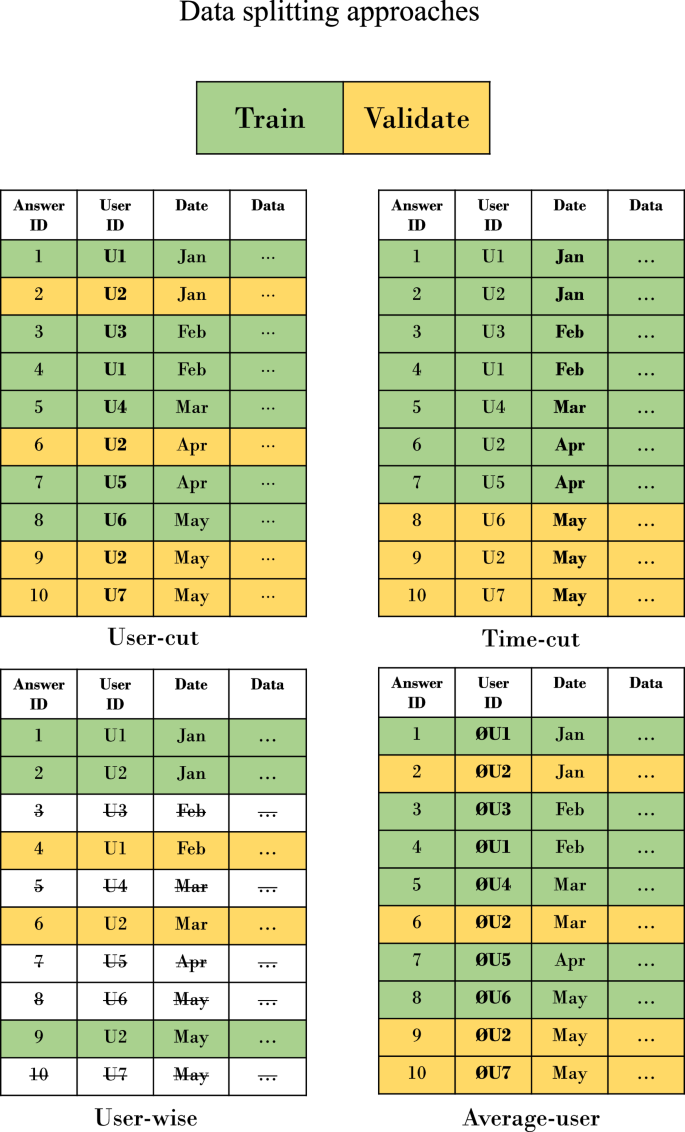

To precisely explain the splitting approaches, we would like to differentiate between the terms folds and sets . We call a chunk of samples (synonym: assessments, filled-out questionnaires) a set on the outer split of the data, for which we cut-off the final test set . However, within the training set, we then split further to create training and validation folds . That is, using the term fold , we are in the context of cross validation. When we use the term set , then we are in the outer split of the ML pipeline. Figure 4 visualizes this approach. Following this, we define 4 different approaches to split the data. For one of them we ignore the fact that there are users, for the other three we do not. We call these approaches user-cut, average-user, user-wise and time-cut . All approaches have in common that the first 80 % of all users are always in the training set and the remaining 20 % are in the test set. A schematic visualization of the splitting approaches is shown in Fig. 5 . Within the training set, we then split on user-level for the approaches user-cut, average-user and user-wise , and on assessment-level for the approach time-cut .

In the second step, users are ordered by their study registration time, with the initial 80 % designated as training users and the remaining 20 % as test users. Subsequently, assessments by training users are allocated to the training set, and those by test users to the test set. Within the training set, user grouping dictates the validation approach: group-cross-validation is applied if users are declared as a group; otherwise, standard cross-validation is used. We compute the average \(f_{1}\) score, \({f}_{1}^{train}\), from the training folds and the \(f_{1}\) score on the test set, \({f}_{1}^{test}\). The standard deviation of \({f}_{1}^{train}\), \(\sigma ({f}_{1}^{train})\), indicates model robustness. The hyperparameter α adjusts the emphasis on robustness, with higher α values prioritizing it. Ultimately, \({f}_{1}^{final}\), which is a more precise estimate if group-cross-validation is applied, offers a refined measure of model performance in real-world scenarios.

Yellow means that this sample is part of the validation fold, green means it is part of a training fold. Crossed out means that the sample has been dropped in that approach because it does not meet the requirements. Users can be sorted by time to accommodate any concept drift.

In the following, we explain the splitting approaches in more detail. The time-cut approach ignores the user groups in the dataset and simply creates validation folds based on the time at which the assessments arrive in the database. In this example, the month in which a sample was collected is known. More precisely, all samples from January until April are in the training set, while those from May are in the test set. The user-cut approach shuffles all user ids and creates five data folds with distinct user groups. It ignores the time dimension of the data but provides user-distinct training and validation folds, similar to the GroupKFold cross-validation approach as implemented in scikit-learn 30. The average-user approach is very similar to the user-cut approach. However, each answer of a user is replaced by the median or mode answer of this user up to the point in question to reduce within-user variance. While all the above-mentioned approaches require only a single model to be trained, the user-wise approach requires as many models as there are distinct users in the dataset. For each user, the first 80 % of his or her time-sorted assessments are used to train a user-specific model, and the remaining 20 % are used to test the model. This means that for this approach, we can evaluate directly on the test set, as each model is user-specific and the cold-start problem is solved by training the model on the first assessments of this user. If a user has fewer than 10 assessments, he or she is not evaluated in this approach.
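Two of these approaches, user-cut and user-wise, can be illustrated with a small standard-library sketch. It is a simplified stand-in under stated assumptions, not scikit-learn's GroupKFold and not the authors' code: the record fields (`user`, `time`), the round-robin assignment of shuffled users to folds, and the rounding of the 80 % cut are all hypothetical choices.

```python
import random
from collections import defaultdict


def user_cut_folds(assessments, n_folds=5, seed=0):
    """Shuffle user ids and deal them round-robin into user-distinct folds."""
    users = sorted({a["user"] for a in assessments})
    random.Random(seed).shuffle(users)
    fold_of_user = {user: i % n_folds for i, user in enumerate(users)}
    folds = [[] for _ in range(n_folds)]
    for a in assessments:
        folds[fold_of_user[a["user"]]].append(a)
    return folds


def user_wise_split(assessments, train_share=0.8, min_assessments=10):
    """Per user: the first 80 % of time-sorted assessments train, the rest test."""
    by_user = defaultdict(list)
    for a in assessments:
        by_user[a["user"]].append(a)
    splits = {}
    for user, rows in by_user.items():
        if len(rows) < min_assessments:
            continue  # users with too few assessments are not evaluated
        rows = sorted(rows, key=lambda a: a["time"])
        cut = int(len(rows) * train_share)  # rounding down is an assumption
        splits[user] = (rows[:cut], rows[cut:])
    return splits


# Hypothetical toy data: user "a" has 10 assessments, the others fewer.
assessments = [
    {"user": user, "time": t}
    for user, count in [("a", 10), ("b", 3), ("c", 2), ("d", 2), ("e", 2)]
    for t in range(count)
]

folds = user_cut_folds(assessments)
splits = user_wise_split(assessments)
```

In the toy data only user `a` reaches the threshold of 10 assessments, so the user-wise split would yield a single user-specific train and test pair, while the user-cut folds still cover all five users.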

Approval for the UNITI randomized controlled trial and the UNITI app was obtained from the Ethics Committee of the University Clinic of Regensburg (ethical approval No. 20-1936-101). All users read and approved the informed consent before participating in the study. The study was carried out in accordance with relevant guidelines and regulations. The procedures used in this study adhere to the tenets of the Declaration of Helsinki. The Track Your Tinnitus (TYT) study was approved by the Ethics Committee of the University Clinic of Regensburg (ethical approval No. 15-101-0204). The Corona Check (CC) study was approved by the Ethics Committee of the University of Würzburg (ethical approval No. 71/20-me) and the university’s data protection officer and was carried out in accordance with the General Data Protection Regulation of the European Union. The procedures used in the Corona Health (CH) study were in accordance with the 1964 Helsinki declaration and its later amendments and were approved by the Ethics Committee of the University of Würzburg, Germany (No. 130/20-me). Ethical approvals include secondary use. The data from this study are available on request from the corresponding author. The data are not publicly available, as the informed consent of the participants did not provide for public publication of the data.

Reporting summary

Further information on research design is available in the Nature Portfolio Reporting Summary linked to this article.

We will see in this results section that ignoring users in training leads to an underestimation of the generalization error of the model, because the standard deviation is then too small. To explain further, a model is ranked first in the comparison of all computations if it has the highest final score, and last if it has the lowest final score. We recall the formula of the final score from the methods section: \({f}_{1}^{final}={f}_{1}^{test}-\alpha \,\sigma \left({f}_{1}^{train}\right)\). For these use cases, we set α = 0.5, which gives \({f}_{1}^{final}={f}_{1}^{test}-0.5\,\sigma \left({f}_{1}^{train}\right)\). The greater the emphasis on model robustness and the greater the concerns regarding concept drift, the larger α should be set.
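As a worked illustration of the final score and the resulting ranking, the following sketch subtracts α times the standard deviation of the cross-validation fold scores from the test score. The function names and the example numbers are hypothetical, and the use of the population standard deviation is an assumption, since the text does not state which estimator is used.

```python
from statistics import pstdev


def final_score(f1_test, fold_scores, alpha=0.5):
    """f1_final = f1_test - alpha * sigma(fold scores).

    pstdev (population standard deviation) is an assumption of this sketch.
    """
    return f1_test - alpha * pstdev(fold_scores)


def rank_approaches(final_scores):
    """Rank 1 goes to the highest final score; ties are not handled here."""
    ordered = sorted(final_scores, key=final_scores.get, reverse=True)
    return {name: rank for rank, name in enumerate(ordered, start=1)}


# Hypothetical fold scores for two approaches with the same test score.
stable = final_score(0.57, [0.60, 0.62, 0.58, 0.61, 0.59])
unstable = final_score(0.57, [0.50, 0.70, 0.55, 0.65, 0.60])
ranks = rank_approaches({"stable": stable, "unstable": unstable})
```

With identical test scores, the approach whose fold scores fluctuate less receives the higher final score, and a larger α widens that gap.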

RQ1: What is the variance in performance when using different splitting methods for train and test set?

Considering performance aspects and ignoring the user groups in the data, the time-cut approach has on average the best performance on assessment-level. As an additional variant, we sorted users once by time and once randomly. When sorting by time, the baseline heuristic with the last known assessment of a user follows at rank 2, whereas with randomly sorted users, the user-cut approach takes rank 2. The baseline heuristic with all known assessments on the user-level has the highest standard deviation in ranks, which means that this approach is highly dependent on the use case: for some datasets it works better, for others it does not. The user-wise model approach also has a higher standard deviation in the ranking score, which means that the success of this approach is more use-case specific. As we set the threshold for users to be included in this approach to a minimum of 10 assessments, there is a high chance of a selection bias in the train-test split for users with only a few assessments, which could be a reason for the larger variance in performance. Details of the results are given in Table 3.

Could there be a selection bias of users that are sorted and split by time? To answer this, we randomly drew 5 different user test sets for the whole pipeline and compared the approaches’ rankings with the variation in which users were sorted by time. The approaches’ ranking changes by 0.44, which is less than one rank and can be calculated from Table 3. This shows that there is no easily classifiable group of test users.

Cross-validation within the training set helps to estimate the generalization error of the model for unseen data. On assessment-level, the standard deviation of the weighted F1 score within the training set varies across datasets between 0.25 % for TrackYourTinnitus and 1.29 % for Corona Health Stress. On user-level, depending on the splitting approach, the standard deviation varies from 1.42 % to 4.69 %. However, on the test set, the estimator of the generalization error (i.e., the standard deviation of the F1 scores of the validation folds within the training set) is too low for all 7 datasets on assessment-level. On user-level, the estimator of the generalization error is too low for 4 out of 7 datasets. We define the estimator of the generalization error as in range if it is smaller than or equal to the performance drop between validation and test set. Details of the results are given in Table 4.

Both approaches, user-level and assessment-level, overestimate the performance of the model during training. However, the quality of the estimator of the generalization error increases if the data are split on user-level.

RQ2: In which cases is the development, deployment and maintenance of an ML model compared to a simple baseline heuristic worthwhile?

For our 7 datasets, the baseline heuristics on a user-level perform better than those on assessment-level. For the datasets Corona Check (CC), Corona Health Stress (CH), TrackYourTinnitus (TYT) and UNITI, the last known user assessment is the best predictor within the baseline heuristics. For the psychological Corona Health study with adolescents (CHY) and adults (CHA), and physical health for adults (CHP), the average of the historic assessments is the best baseline predictor. The last known assessment on assessment-level as a baseline heuristic performs worse for each dataset compared to the user-level. The average of all assessments known so far as a predictor for the next assessment, independent of the user, has the worst performance within the baseline heuristics for all datasets except CHA. Notably, the larger the number of assessments, the more the all-instances approach on assessment-level converges to the mean of the target, which has high bias and minimum variance.
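The four baseline heuristics compared here (last known versus average, each on user-level or assessment-level) can be sketched as a single pass over a time-sorted stream. This is an illustrative assumption of how such heuristics might be computed, not the authors' code: the stream format, the function name, and the rounding of the mean to an integer class label are hypothetical.

```python
from collections import defaultdict
from statistics import mean


def baseline_predictions(stream, per_user=True, use_average=False):
    """Predict each target in a time-sorted stream of (user, target) pairs.

    per_user=True keeps one history per user (user-level); per_user=False
    keeps one shared history (assessment-level). None marks a cold start.
    """
    history = defaultdict(list)
    predictions = []
    for user, target in stream:
        past = history[user if per_user else "all"]
        if not past:
            predictions.append(None)  # no earlier assessment to rely on
        elif use_average:
            # Rounding the mean to a class label is an assumption; Python's
            # round() sends exact .5 ties to the nearest even integer.
            predictions.append(round(mean(past)))
        else:
            predictions.append(past[-1])  # last known assessment
        past.append(target)
    return predictions


# Hypothetical stream of integer class labels from two users.
stream = [("a", 1), ("b", 3), ("a", 1), ("b", 3), ("a", 2)]

last_user = baseline_predictions(stream, per_user=True, use_average=False)
last_all = baseline_predictions(stream, per_user=False, use_average=False)
mean_all = baseline_predictions(stream, per_user=False, use_average=True)
```

In this toy stream the user-level heuristic recovers each user's stable answer, whereas the assessment-level variants mix the two users and drift toward the overall mean of the target.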

These results lead us to conclude that recognizing user groups in datasets leads to an improved baseline when trying to predict future assessments from historical ones. When these non-machine-learning baseline heuristics are then compared to machine learning models without hyperparameter tuning, they sometimes outperform the machine learning model or perform similarly.

The approaches’ ranking in Table 5 shows the general overestimation of the performance of the time-cut approach, as this approach is ranked best on average. It can also be seen that the approaches are ranked closely to each other. We chose α, our hyperparameter that controls the importance of model robustness, to be 0.5. Because we subtract only 0.5 times the standard deviation of the f1 scores of the validation folds, approaches with a higher standard deviation are punished less. This means, in turn, that the overestimation of the performance of the splits on assessment-level would be higher if α was higher. Another reason for the similarity of the approaches is that the same model architecture was finally trained on all assessments of all training users to be evaluated on the test set. Thus, the only difference in the rankings results from the standard deviation of the f1 scores of the validation folds.

To answer the question of whether it is worthwhile to turn a prediction task into an ML project, further constraints should be considered. The above analysis shows that the baseline heuristics, with much lower complexity, are competitive with the non-tuned random forest. At the same time, the overall results are an f1 score between 55 and 65 for a multi-class classification, with potential for improvement. Thus, the additional question is from which f1 score onwards a model can be deployed, which depends on the use case. In addition, it is not clear whether the ML approach can be significantly improved by a different model or the right tuning.