Type I & Type II Errors | Differences, Examples, Visualizations

Published on January 18, 2021 by Pritha Bhandari. Revised on June 22, 2023.

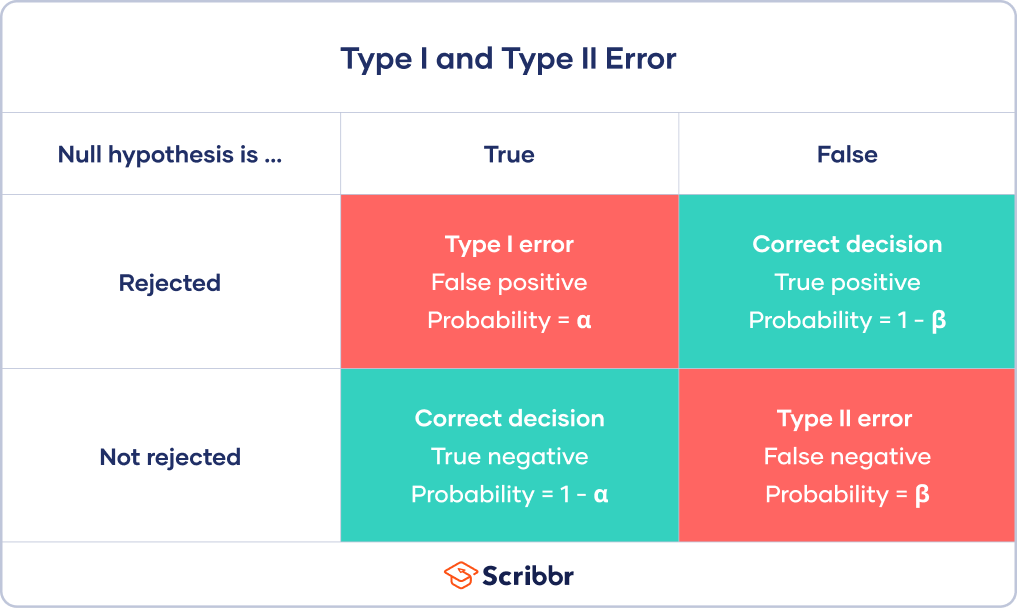

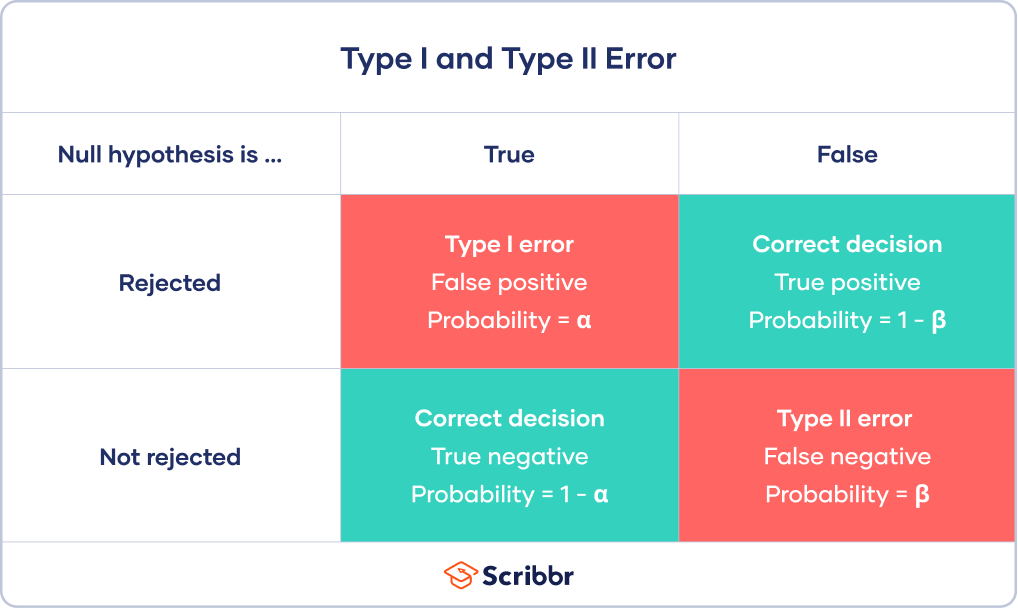

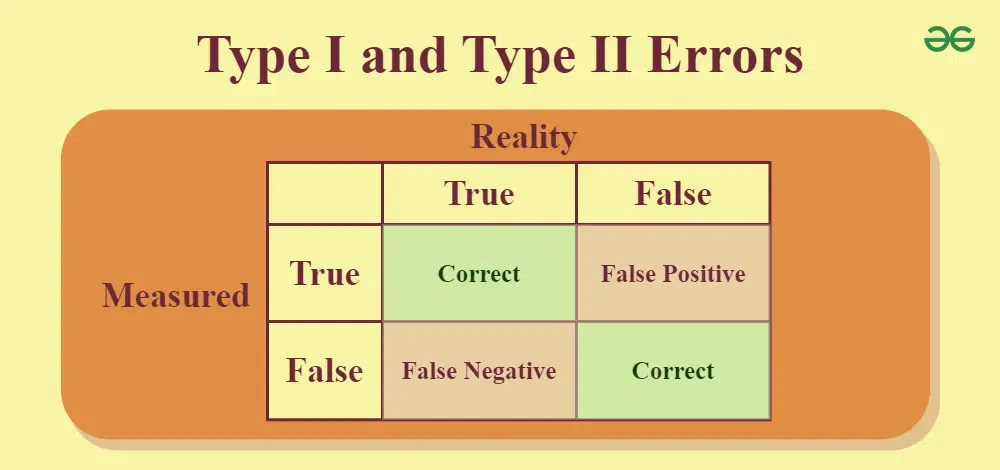

In statistics, a Type I error is a false positive conclusion, while a Type II error is a false negative conclusion.

Making a statistical decision always involves uncertainty, so the risks of making these errors are unavoidable in hypothesis testing.

The probability of making a Type I error is the significance level, or alpha (α), while the probability of making a Type II error is beta (β). These risks can be minimized through careful planning in your study design.

- Type I error (false positive): the test result says you have coronavirus, but you actually don’t.

- Type II error (false negative): the test result says you don’t have coronavirus, but you actually do.

Using hypothesis testing, you can decide whether your data support or refute your research predictions, which are stated as null and alternative hypotheses.

Hypothesis testing starts with the assumption of no difference between groups or no relationship between variables in the population. This is the null hypothesis. It’s always paired with an alternative hypothesis, which is your research prediction of an actual difference between groups or a true relationship between variables.

For example, suppose you test whether a new drug relieves the symptoms of a disease. In this case:

- The null hypothesis (H0) is that the new drug has no effect on symptoms of the disease.

- The alternative hypothesis (H1) is that the drug is effective for alleviating symptoms of the disease.

Then, you decide whether the null hypothesis can be rejected based on your data and the results of a statistical test. Since these decisions are based on probabilities, there is always a risk of drawing the wrong conclusion.

- If your results show statistical significance, that means they are very unlikely to occur if the null hypothesis is true. In this case, you would reject your null hypothesis. But sometimes, this may actually be a Type I error.

- If your findings do not show statistical significance, they are not unlikely enough under the null hypothesis to rule it out. Therefore, you fail to reject your null hypothesis. But sometimes, this may be a Type II error.
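The decision rule described above can be sketched in a few lines of Python. This is only an illustration: the function name `decide` and the example p values are assumptions made for this sketch, not part of any statistics library.

```python
def decide(p_value: float, alpha: float = 0.05) -> str:
    """Return the test decision for a given p value and significance level."""
    if not 0.0 <= p_value <= 1.0:
        raise ValueError("p_value must be between 0 and 1")
    # Reject H0 only when the p value falls below the chosen threshold.
    if p_value < alpha:
        return "reject H0"  # a Type I error if H0 is actually true
    return "fail to reject H0"  # a Type II error if H0 is actually false


print(decide(0.03))  # below alpha = 0.05
print(decide(0.20))  # above alpha = 0.05
```

The function cannot tell you whether the decision is an error. That depends on whether the null hypothesis is true in the population, which the data alone never reveal.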

A Type I error means rejecting the null hypothesis when it’s actually true. It means concluding that results are statistically significant when, in reality, they came about purely by chance or because of unrelated factors.

The risk of committing this error is the significance level (alpha or α) you choose. That’s a threshold that you set at the beginning of your study and later compare with the p value, the probability of obtaining results at least as extreme as yours if the null hypothesis is true.

The significance level is usually set at 0.05 or 5%. This means that you call your results significant only if results this extreme would have a 5% chance of occurring, or less, if the null hypothesis is actually true.

If the p value of your test is lower than the significance level, it means your results are statistically significant and consistent with the alternative hypothesis. If your p value is higher than the significance level, then your results are considered statistically non-significant.

To reduce the Type I error probability, you can simply set a lower significance level.

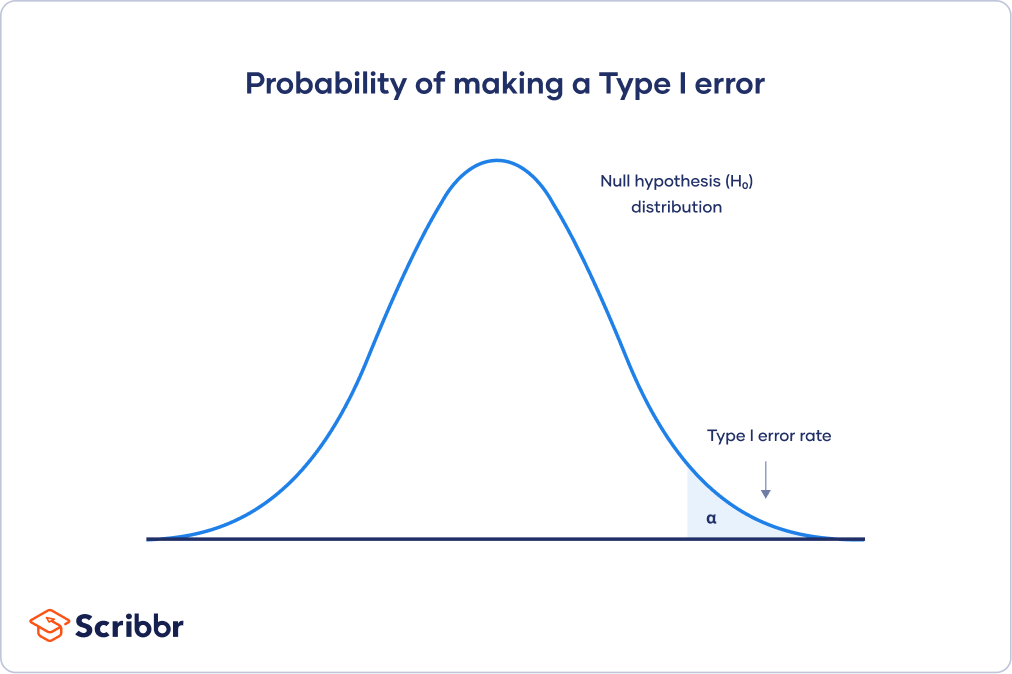

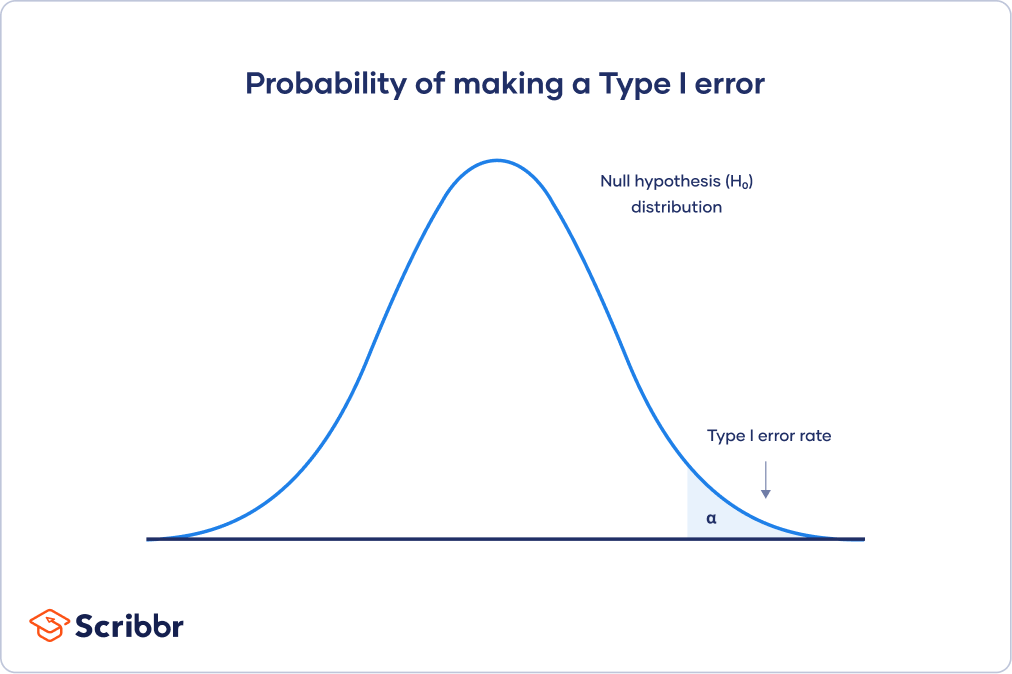

Type I error rate

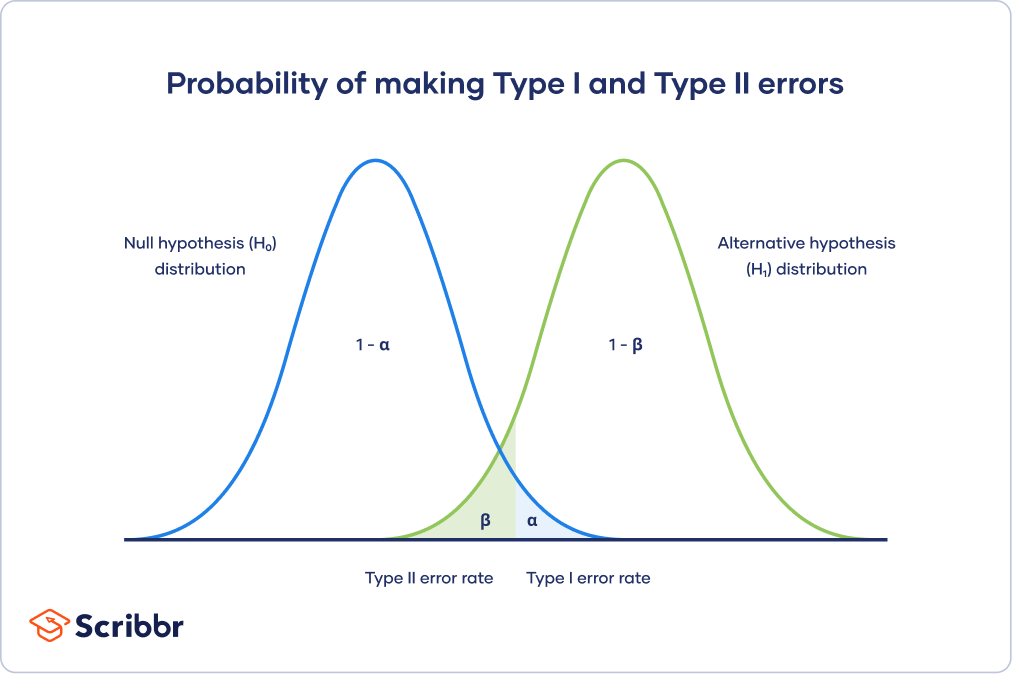

The null hypothesis distribution curve below shows the probabilities of obtaining all possible results if the study were repeated with new samples and the null hypothesis were true in the population.

At the tail end, the shaded area represents alpha. It’s also called a critical region in statistics.

If your results fall in the critical region of this curve, they are considered statistically significant and the null hypothesis is rejected. However, this is a false positive conclusion, because the null hypothesis is actually true in this case!
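A small simulation can make the critical region concrete. The sketch below assumes a one-sided z-test with a known standard deviation of 1, which the article does not specify. The sample size, number of trials, and seed are arbitrary choices. Every simulated study draws its data from a population where the null hypothesis is true, so each rejection is a false positive.

```python
import random
from statistics import NormalDist, mean


def one_sided_p_value(sample, mu0=0.0, sigma=1.0):
    """p value of a one-sided z-test of H0: mean = mu0 against H1: mean > mu0."""
    z = (mean(sample) - mu0) / (sigma / len(sample) ** 0.5)
    return 1.0 - NormalDist().cdf(z)


def simulate_type_i_rate(alpha=0.05, n=30, trials=20_000, seed=1):
    """Share of simulated studies that reject H0 even though H0 is true."""
    rng = random.Random(seed)
    rejections = 0
    for _ in range(trials):
        sample = [rng.gauss(0.0, 1.0) for _ in range(n)]  # data generated under H0
        if one_sided_p_value(sample) < alpha:
            rejections += 1
    return rejections / trials


rate = simulate_type_i_rate()
print(f"Observed Type I error rate: {rate:.3f}")
```

Under these assumptions the observed rate should land close to the chosen alpha of 0.05, which is the area of the shaded critical region.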

A Type II error means not rejecting the null hypothesis when it’s actually false. This is not quite the same as “accepting” the null hypothesis, because hypothesis testing can only tell you whether to reject the null hypothesis.

Instead, a Type II error means failing to conclude there was an effect when there actually was. In reality, your study may not have had enough statistical power to detect an effect of a certain size.

Power is the extent to which a test can correctly detect a real effect when there is one. A power level of 80% or higher is usually considered acceptable.

The risk of a Type II error is inversely related to the statistical power of a study. The higher the statistical power, the lower the probability of making a Type II error.

Statistical power is determined by:

- Size of the effect: Larger effects are more easily detected.

- Measurement error: Systematic and random errors in recorded data reduce power.

- Sample size: Larger samples reduce sampling error and increase power.

- Significance level: Increasing the significance level increases power.

To (indirectly) reduce the risk of a Type II error, you can increase the sample size or the significance level.

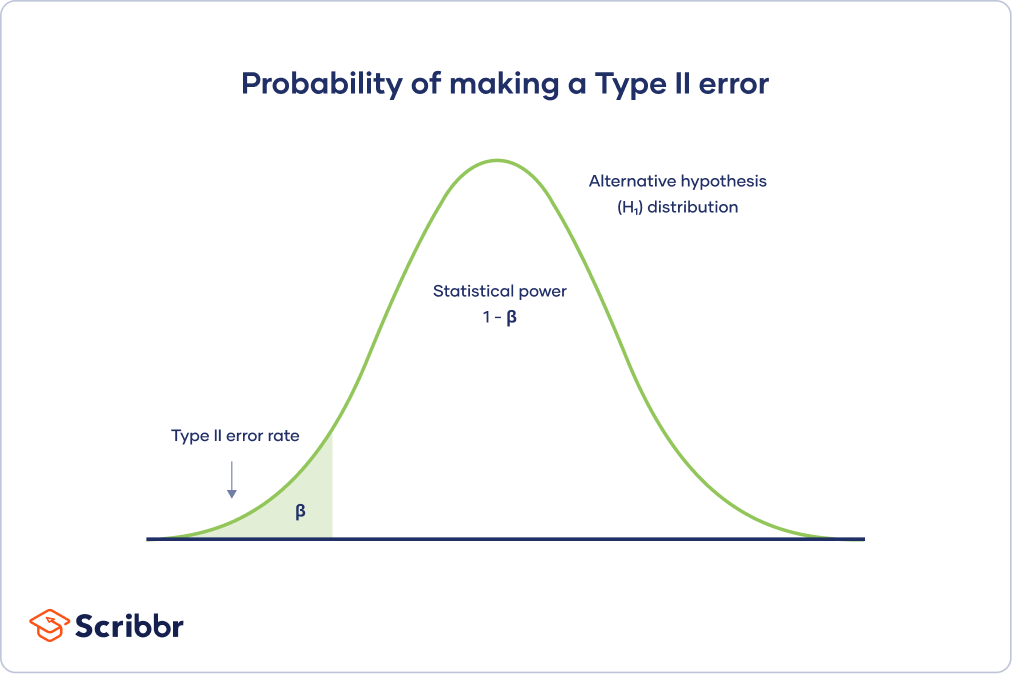

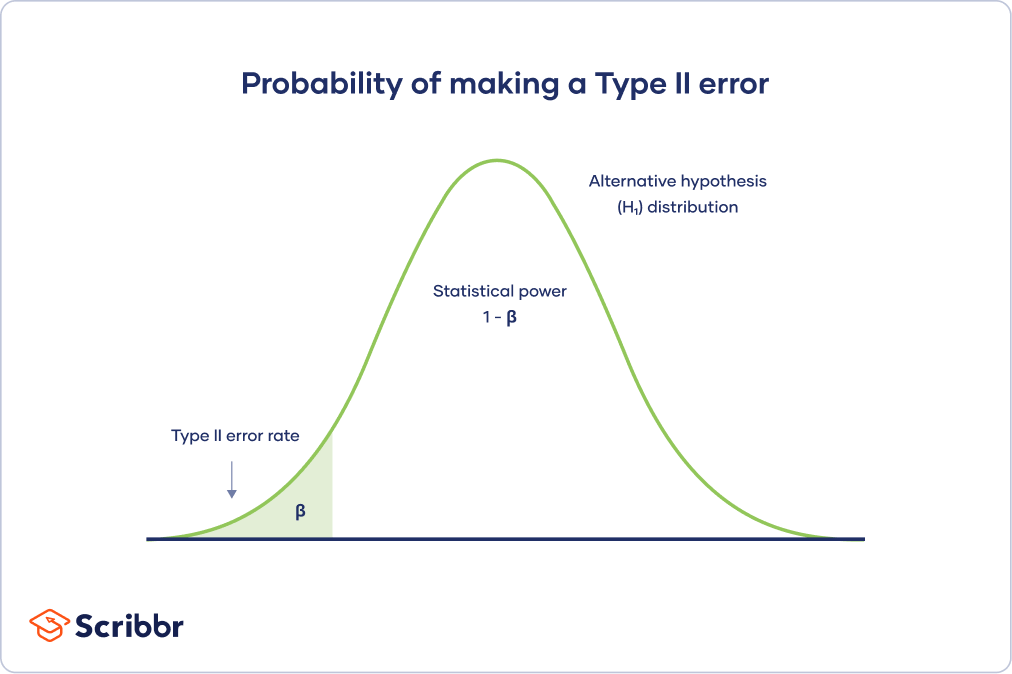

Type II error rate

The alternative hypothesis distribution curve below shows the probabilities of obtaining all possible results if the study were repeated with new samples and the alternative hypothesis were true in the population.

The Type II error rate is beta (β), represented by the shaded area on the left side. The remaining area under the curve represents statistical power, which is 1 – β.

Increasing the statistical power of your test directly decreases the risk of making a Type II error.
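As a worked illustration, beta can be written in closed form for one simple case. The setup here is an assumption, not something the article specifies: a one-sided z-test of a mean with known standard deviation $\sigma$, sample size $n$, null value $\mu_0$, and a true mean of $\mu_0 + \delta$ with $\delta > 0$. $\Phi$ denotes the standard normal cumulative distribution function and $z_{1-\alpha}$ its $(1-\alpha)$ quantile.

$$
Z = \frac{\bar{X} - \mu_0}{\sigma / \sqrt{n}}, \qquad \text{reject } H_0 \text{ if } Z > z_{1-\alpha}
$$

$$
\beta = P(Z \le z_{1-\alpha} \mid H_1) = \Phi\!\left(z_{1-\alpha} - \frac{\delta \sqrt{n}}{\sigma}\right), \qquad \text{power} = 1 - \beta
$$

Because $\Phi$ is increasing, a larger effect $\delta$, a larger sample size $n$, or a larger $\alpha$ (which lowers $z_{1-\alpha}$) each shrink $\beta$ and raise power in this setup.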

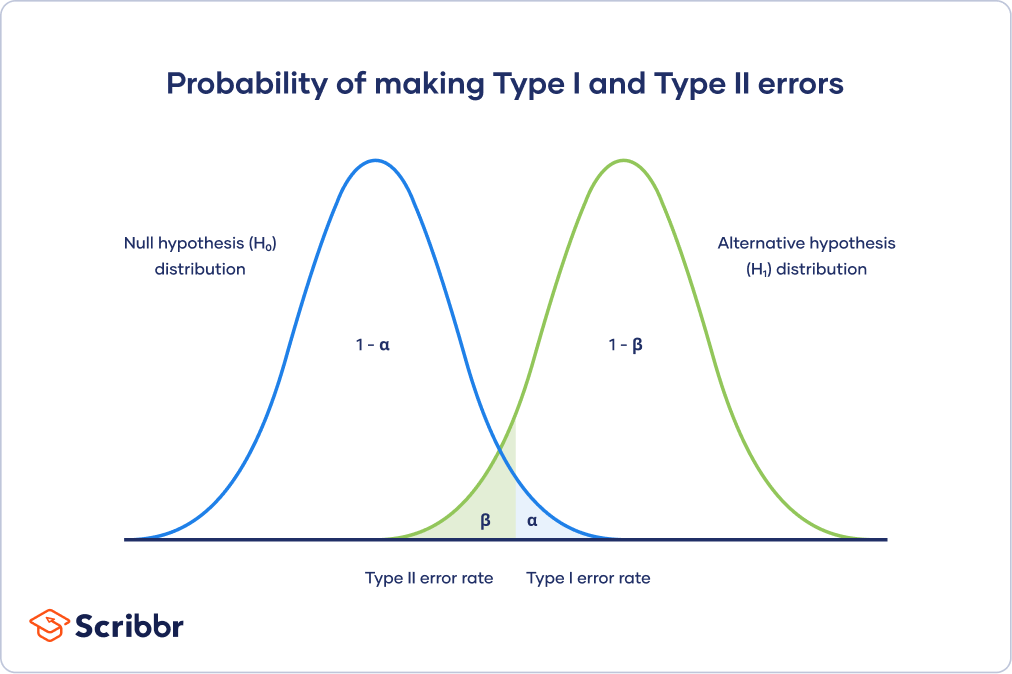

The Type I and Type II error rates influence each other. That’s because the significance level (the Type I error rate) affects statistical power, which is inversely related to the Type II error rate.

This means there’s an important trade-off between Type I and Type II errors:

- Setting a lower significance level decreases the Type I error risk, but increases the Type II error risk.

- Increasing the power of a test by raising the significance level decreases the Type II error risk, but increases the Type I error risk. (Increasing power through a larger sample size does not raise the Type I error risk.)

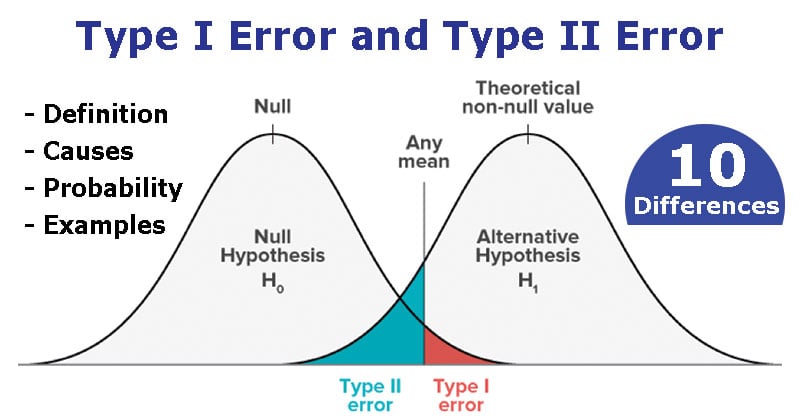

This trade-off is visualized in the graph below. It shows two curves:

- The null hypothesis distribution shows all possible results you’d obtain if the null hypothesis is true. The correct conclusion for any point on this distribution means not rejecting the null hypothesis.

- The alternative hypothesis distribution shows all possible results you’d obtain if the alternative hypothesis is true. The correct conclusion for any point on this distribution means rejecting the null hypothesis.

Type I and Type II errors occur where these two distributions overlap. The blue shaded area represents alpha, the Type I error rate, and the green shaded area represents beta, the Type II error rate.

By setting the Type I error rate, you indirectly influence the size of the Type II error rate as well.

It’s important to strike a balance between the risks of making Type I and Type II errors. With the sample size and effect size held constant, reducing alpha comes at the cost of increasing beta, and vice versa.
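To put rough numbers on this trade-off, the sketch below computes beta at several alpha levels for one fixed design. It assumes a one-sided z-test with known standard deviation. The effect size of 0.5, standard deviation of 1, and sample size of 20 are hypothetical values chosen only for illustration, and `beta_one_sided_z` is a helper written for this sketch.

```python
from statistics import NormalDist


def beta_one_sided_z(alpha, effect, sigma, n):
    """Type II error rate of a one-sided z-test when the true mean exceeds the null value by `effect`."""
    std_normal = NormalDist()
    z_crit = std_normal.inv_cdf(1.0 - alpha)  # critical value set by alpha
    shift = effect * n ** 0.5 / sigma         # how far H1 moves the test statistic
    return std_normal.cdf(z_crit - shift)


# Same effect, variability, and sample size; only alpha changes.
for alpha in (0.10, 0.05, 0.01):
    beta = beta_one_sided_z(alpha, effect=0.5, sigma=1.0, n=20)
    print(f"alpha = {alpha:.2f}  beta = {beta:.3f}  power = {1 - beta:.3f}")
```

With everything else fixed, each step down in alpha moves the critical value further into the alternative distribution, so beta rises.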

For statisticians, a Type I error is usually worse. In practical terms, however, either type of error could be worse depending on your research context.

A Type I error means mistakenly going against the main statistical assumption of a null hypothesis. This may lead to new policies, practices or treatments that are inadequate or a waste of resources.

In contrast, a Type II error means failing to reject a null hypothesis that is actually false. It may only result in missed opportunities to innovate, but these can also have important practical consequences.

In statistics, a Type I error means rejecting the null hypothesis when it’s actually true, while a Type II error means failing to reject the null hypothesis when it’s actually false.

The risk of making a Type I error is the significance level (or alpha) that you choose. That’s a threshold that you set at the beginning of your study and later compare with the p value, the probability of obtaining results at least as extreme as yours if the null hypothesis is true.

To reduce the Type I error probability, you can set a lower significance level.

The risk of making a Type II error is inversely related to the statistical power of a test. Power is the extent to which a test can correctly detect a real effect when there is one.

To (indirectly) reduce the risk of a Type II error, you can increase the sample size or the significance level to increase statistical power.

Statistical significance is a term used by researchers to state that it is unlikely their observations could have occurred under the null hypothesis of a statistical test. Significance is usually denoted by a p-value, or probability value.

Statistical significance is arbitrary: it depends on the threshold, or alpha value, chosen by the researcher. The most common threshold is p < 0.05, which means that data at least this extreme would be expected less than 5% of the time under the null hypothesis.

When the p-value falls below the chosen alpha value, we say the result of the test is statistically significant.

In statistics, power refers to the likelihood of a hypothesis test detecting a true effect if there is one. A statistically powerful test is less likely to produce a false negative (a Type II error).

If you don’t ensure enough power in your study, you may not be able to detect a statistically significant result even when it has practical significance. Your study might not have the ability to answer your research question.

Bhandari, P. (2023, June 22). Type I & Type II Errors | Differences, Examples, Visualizations. Scribbr. Retrieved July 27, 2024, from https://www.scribbr.com/statistics/type-i-and-type-ii-errors/

Type 1 and Type 2 Errors in Statistics

Saul Mcleod, PhD

Editor-in-Chief for Simply Psychology

BSc (Hons) Psychology, MRes, PhD, University of Manchester

Saul Mcleod, PhD., is a qualified psychology teacher with over 18 years of experience in further and higher education. He has been published in peer-reviewed journals, including the Journal of Clinical Psychology.

A statistically significant result cannot prove that a research hypothesis is correct (which implies 100% certainty). Because a p-value is based on probabilities, there is always a chance of making an incorrect conclusion regarding accepting or rejecting the null hypothesis (H0).

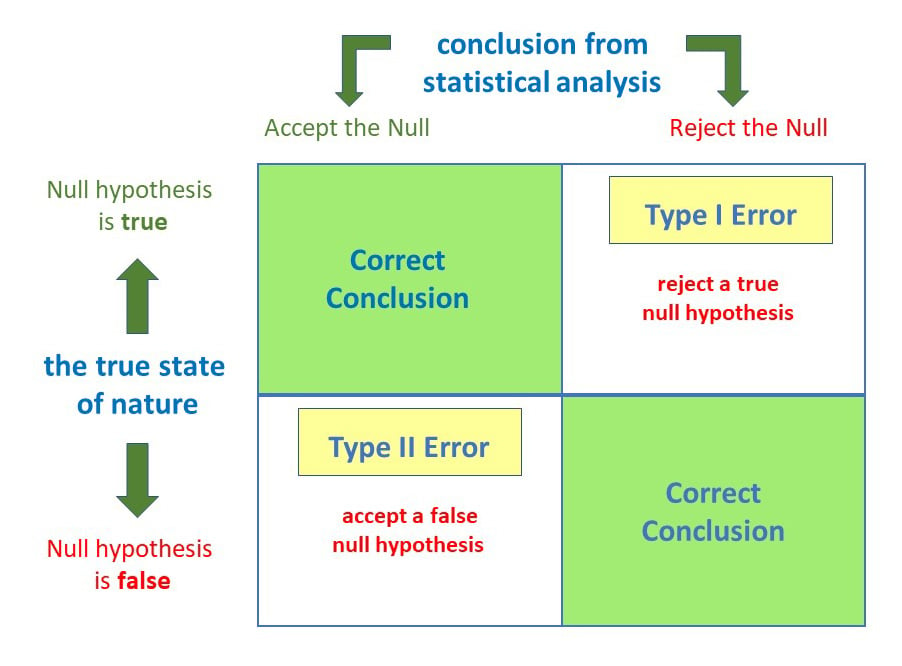

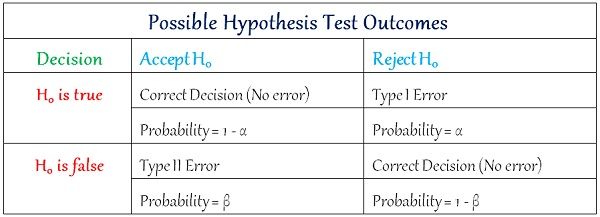

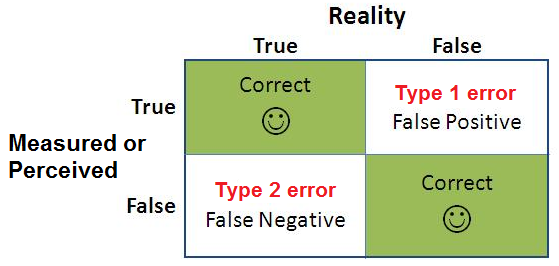

Anytime we make a decision using statistics, there are four possible outcomes, with two representing correct decisions and two representing errors.

The chances of committing these two types of errors are inversely related: with everything else held constant, decreasing the Type I error rate increases the Type II error rate, and vice versa.

As the significance level (α) increases, it becomes easier to reject the null hypothesis, decreasing the chance of missing a real effect (Type II error, β). If the significance level (α) goes down, it becomes harder to reject the null hypothesis, increasing the chance of missing an effect while reducing the risk of falsely finding one (Type I error).

Type I error

A type 1 error is also known as a false positive and occurs when a researcher incorrectly rejects a true null hypothesis. Simply put, it’s a false alarm.

This means that you report that your findings are significant when they have occurred by chance.

The probability of making a type 1 error is represented by your alpha level (α), the threshold below which a p-value leads you to reject the null hypothesis.

An alpha level of 0.05 means that you reject the null hypothesis only when the observed data (or something more extreme) would occur no more than 5% of the time if the null hypothesis were true, so you accept a 5% chance of a false positive.

You can reduce your risk of committing a type 1 error by setting a lower alpha level (like α = 0.01). For example, an alpha level of 0.01 would mean there is a 1% chance of committing a Type I error when the null hypothesis is true.

However, using a lower value for alpha means that you will be less likely to detect a true difference if one really exists (thus risking a type II error).

Scenario: Drug Efficacy Study

Imagine a pharmaceutical company is testing a new drug, named “MediCure”, to determine if it’s more effective than a placebo at reducing fever. They run an experiment with two groups: one receives MediCure, and the other receives a placebo.

- Null Hypothesis (H0) : MediCure is no more effective at reducing fever than the placebo.

- Alternative Hypothesis (H1) : MediCure is more effective at reducing fever than the placebo.

After conducting the study and analyzing the results, the researchers found a p-value of 0.04.

If they use an alpha (α) level of 0.05, this p-value is considered statistically significant, leading them to reject the null hypothesis and conclude that MediCure is more effective than the placebo.

However, suppose that MediCure has no actual effect, and the observed difference was due to random variation or some other confounding factor. In this case, the researchers have incorrectly rejected a true null hypothesis.

Error : The researchers have made a Type 1 error by concluding that MediCure is more effective when it isn’t.

Implications

Resource Allocation : Making a Type I error can lead to wastage of resources. If a business believes a new strategy is effective when it’s not (based on a Type I error), they might allocate significant financial and human resources toward that ineffective strategy.

Unnecessary Interventions : In medical trials, a Type I error might lead to the belief that a new treatment is effective when it isn’t. As a result, patients might undergo unnecessary treatments, risking potential side effects without any benefit.

Reputation and Credibility : For researchers, making repeated Type I errors can harm their professional reputation. If they frequently claim groundbreaking results that are later refuted, their credibility in the scientific community might diminish.

Type II error

A type 2 error (or false negative) happens when you fail to reject the null hypothesis when it is actually false.

Here, a researcher concludes there is no significant effect when there actually is one.

The probability of making a type II error is called beta (β), which is related to the power of the statistical test (power = 1 – β). You can decrease your risk of committing a type II error by ensuring your test has enough power.

You can do this by ensuring your sample size is large enough to detect a practical difference when one truly exists.

Scenario: Efficacy of a New Teaching Method

Educational psychologists are investigating the potential benefits of a new interactive teaching method, named “EduInteract”, which utilizes virtual reality (VR) technology to teach history to middle school students.

They hypothesize that this method will lead to better retention and understanding compared to the traditional textbook-based approach.

- Null Hypothesis (H0) : The EduInteract VR teaching method does not result in significantly better retention and understanding of history content than the traditional textbook method.

- Alternative Hypothesis (H1) : The EduInteract VR teaching method results in significantly better retention and understanding of history content than the traditional textbook method.

The researchers designed an experiment where one group of students learns a history module using the EduInteract VR method, while a control group learns the same module using a traditional textbook.

After a week, the students’ retention and understanding are tested using a standardized assessment.

Upon analyzing the results, the psychologists found a p-value of 0.06. Using an alpha (α) level of 0.05, this p-value isn’t statistically significant.

Therefore, they fail to reject the null hypothesis and conclude that the EduInteract VR method isn’t more effective than the traditional textbook approach.

However, let’s assume that in the real world, the EduInteract VR truly enhances retention and understanding, but the study failed to detect this benefit due to reasons like small sample size, variability in students’ prior knowledge, or perhaps the assessment wasn’t sensitive enough to detect the nuances of VR-based learning.

Error : By concluding that the EduInteract VR method isn’t more effective than the traditional method when it is, the researchers have made a Type 2 error.

This could prevent schools from adopting a potentially superior teaching method that might benefit students’ learning experiences.

Missed Opportunities : A Type II error can lead to missed opportunities for improvement or innovation. For example, in education, if a more effective teaching method is overlooked because of a Type II error, students might miss out on a better learning experience.

Potential Risks : In healthcare, a Type II error might mean overlooking a harmful side effect of a medication because the research didn’t detect its harmful impacts. As a result, patients might continue using a harmful treatment.

Stagnation : In the business world, making a Type II error can result in continued investment in outdated or less efficient methods. This can lead to stagnation and the inability to compete effectively in the marketplace.

How do Type I and Type II errors relate to psychological research and experiments?

Type I errors are like false alarms, while Type II errors are like missed opportunities. Both errors can impact the validity and reliability of psychological findings, so researchers strive to minimize them to draw accurate conclusions from their studies.

How does sample size influence the likelihood of Type I and Type II errors in psychological research?

Sample size in psychological research mainly influences the likelihood of Type II errors. The Type I error rate is set by the significance level (α) that the researcher chooses, so a larger sample size does not by itself make researchers less likely to mistakenly find a significant effect when there isn’t one.

A larger sample size does increase the chances of detecting true effects, reducing the likelihood of Type II errors.
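How sample size relates to each error rate can be checked with a short simulation. The sketch below assumes a one-sided z-test at α = 0.05 with a known standard deviation of 1. The true effect of 0.3, the two sample sizes, the trial count, and the seed are hypothetical values picked for illustration.

```python
import random
from statistics import NormalDist, mean


def rejection_rate(true_mean, n, alpha=0.05, trials=5_000, seed=7):
    """Share of simulated studies in which a one-sided z-test rejects H0: mean = 0."""
    rng = random.Random(seed)
    z_crit = NormalDist().inv_cdf(1.0 - alpha)
    rejections = 0
    for _ in range(trials):
        sample = [rng.gauss(true_mean, 1.0) for _ in range(n)]
        z = mean(sample) * n ** 0.5  # null mean 0 and known sigma = 1
        if z > z_crit:
            rejections += 1
    return rejections / trials


for n in (10, 100):
    type_i = rejection_rate(true_mean=0.0, n=n)          # H0 true: rejections are false positives
    type_ii = 1.0 - rejection_rate(true_mean=0.3, n=n)   # H1 true: non-rejections are false negatives
    print(f"n = {n:3d}  Type I rate = {type_i:.3f}  Type II rate = {type_ii:.3f}")
```

In this setup the Type I rate stays near the chosen alpha at both sample sizes, while the Type II rate drops sharply as the sample grows.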

Are there any ethical implications associated with Type I and Type II errors in psychological research?

Yes, there are ethical implications associated with Type I and Type II errors in psychological research.

Type I errors may lead to false positive findings, resulting in misleading conclusions and potentially wasting resources on ineffective interventions. This can harm individuals who are falsely diagnosed or receive unnecessary treatments.

Type II errors, on the other hand, may result in missed opportunities to identify important effects or relationships, leading to a lack of appropriate interventions or support. This can also have negative consequences for individuals who genuinely require assistance.

Therefore, minimizing these errors is crucial for ethical research and ensuring the well-being of participants.

Statistics By Jim

Making statistics intuitive

Type I & Type II Errors in Hypothesis Testing

By Jim Frost

In hypothesis testing, a Type I error is a false positive while a Type II error is a false negative. In this blog post, you will learn about these two types of errors, their causes, and how to manage them.

Hypothesis tests use sample data to make inferences about the properties of a population. You gain tremendous benefits by working with random samples because it is usually impossible to measure the entire population.

However, there are tradeoffs when you use samples. The samples we use are typically a minuscule percentage of the entire population. Consequently, they occasionally misrepresent the population severely enough to cause hypothesis tests to make Type I and Type II errors.

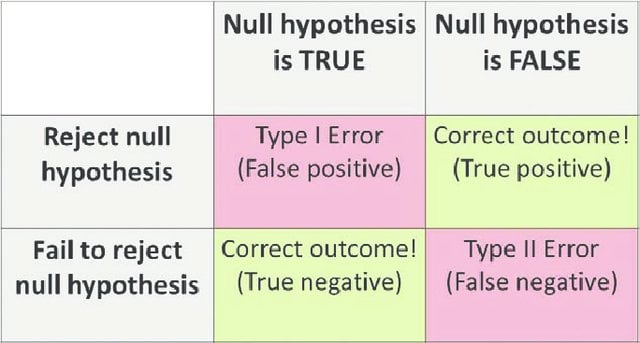

Potential Outcomes in Hypothesis Testing

Hypothesis testing is a procedure in inferential statistics that assesses two mutually exclusive theories about the properties of a population. For a generic hypothesis test, the two hypotheses are as follows:

- Null hypothesis : There is no effect

- Alternative hypothesis : There is an effect.

The sample data must provide sufficient evidence to reject the null hypothesis and conclude that the effect exists in the population. Ideally, a hypothesis test fails to reject the null hypothesis when the effect is not present in the population, and it rejects the null hypothesis when the effect exists.

Statisticians define two types of errors in hypothesis testing. Creatively, they call these errors Type I and Type II errors. Both types of error relate to incorrect conclusions about the null hypothesis.

The table summarizes the four possible outcomes for a hypothesis test.

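| | Null hypothesis is true (no effect) | Null hypothesis is false (effect exists) |
|---|---|---|
| Reject the null hypothesis | Type I error (false positive) | Correct decision (effect detected) |
| Fail to reject the null hypothesis | Correct decision (no effect detected) | Type II error (false negative) |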

Fire alarm analogy for the types of errors

Using hypothesis tests correctly improves your chances of drawing trustworthy conclusions. However, errors are bound to occur.

Unlike the fire alarm analogy, there is no sure way to determine whether an error occurred after you perform a hypothesis test. Typically, a clearer picture develops over time as other researchers conduct similar studies and an overall pattern of results appears. Seeing how your results fit in with similar studies is a crucial step in assessing your study’s findings.

Now, let’s take a look at each type of error in more depth.

Type I Error: False Positives

When you see a p-value that is less than your significance level, you get excited because your results are statistically significant. However, it could be a Type I error. The supposed effect might not exist in the population. Again, there is usually no warning when this occurs.

Why do these errors occur? It comes down to sampling error. Your random sample has overestimated the effect by chance. It was the luck of the draw. This type of error doesn’t indicate that the researchers did anything wrong. The experimental design, data collection, data validity, and statistical analysis can all be correct, and yet this type of error still occurs.

Even though we don’t know for sure which studies have false positive results, we do know their rate of occurrence. The rate of occurrence for Type I errors equals the significance level of the hypothesis test, which is also known as alpha (α).

The significance level is an evidentiary standard that you set to determine whether your sample data are strong enough to reject the null hypothesis. Hypothesis tests define that standard using the probability of rejecting a null hypothesis that is actually true. You set this value based on your willingness to risk a false positive.

Using the significance level to set the Type I error rate

When the significance level is 0.05 and the null hypothesis is true, there is a 5% chance that the test will reject the null hypothesis incorrectly. If you set alpha to 0.01, there is a 1% chance of a false positive. If 5% is good, then 1% seems even better, right? As you’ll see, there is a tradeoff between Type I and Type II errors. If you hold everything else constant, as you reduce the chance for a false positive, you increase the opportunity for a false negative.

Type I errors are relatively straightforward. The math is beyond the scope of this article, but statisticians designed hypothesis tests to incorporate everything that affects this error rate so that you can specify it for your studies. As long as your experimental design is sound, you collect valid data, and the data satisfy the assumptions of the hypothesis test, the Type I error rate equals the significance level that you specify. However, if there is a problem in one of those areas, it can affect the false positive rate.

Warning about a potential misinterpretation of Type I errors and the Significance Level

When the null hypothesis is correct for the population, the probability that a test produces a false positive equals the significance level. However, when you look at a statistically significant test result, you cannot state that there is a 5% chance that it represents a false positive.

Why is that the case? Imagine that we perform 100 studies on a population where the null hypothesis is true. If we use a significance level of 0.05, we’d expect that five of the studies will produce statistically significant results—false positives. Afterward, when we go to look at those significant studies, what is the probability that each one is a false positive? Not 5 percent but 100%!

That scenario also illustrates a point that I made earlier. The true picture becomes more evident after repeated experimentation. Given the pattern of results that are predominantly not significant, it is unlikely that an effect exists in the population.
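The 100-study scenario above comes down to simple arithmetic, sketched below. The function `false_positive_share` is a hypothetical helper written for this illustration. The second call uses an assumed mix of 50 true-null studies and 50 real-effect studies with 80% power, numbers that are not from the scenario itself.

```python
def false_positive_share(n_null, n_effect, alpha=0.05, power=0.80):
    """Expected share of statistically significant studies that are false positives."""
    false_positives = alpha * n_null   # true nulls rejected by chance
    true_positives = power * n_effect  # real effects that are detected
    significant = false_positives + true_positives
    if significant == 0:
        raise ValueError("no significant results are expected")
    return false_positives / significant


# 100 studies, null hypothesis true in all of them: about 5 significant results,
# and every one of them is a false positive.
print(false_positive_share(n_null=100, n_effect=0))

# Assumed mix: half the studies examine a real effect, tested with 80% power.
print(false_positive_share(n_null=50, n_effect=50))
```

The share of false positives among significant results depends on how often the null hypothesis is true among the studies being run, which is why it cannot be read off the significance level alone.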

Type II Error: False Negatives

When you perform a hypothesis test and your p-value is greater than your significance level, your results are not statistically significant. That’s disappointing because your sample provides insufficient evidence for concluding that the effect you’re studying exists in the population. However, there is a chance that the effect is present in the population even though the test results don’t support it. If that’s the case, you’ve just experienced a Type II error. The probability of making a Type II error is known as beta (β).

What causes Type II errors? Whereas Type I errors are caused by one thing, sample error, there are a host of possible reasons for Type II errors—small effect sizes, small sample sizes, and high data variability. Furthermore, unlike Type I errors, you can’t set the Type II error rate for your analysis. Instead, the best that you can do is estimate it before you begin your study by approximating properties of the alternative hypothesis that you’re studying. When you do this type of estimation, it’s called power analysis.

To estimate the Type II error rate, you create a hypothetical probability distribution that represents the properties of a true alternative hypothesis. However, when you’re performing a hypothesis test, you typically don’t know which hypothesis is true, much less the specific properties of the distribution for the alternative hypothesis. Consequently, the true Type II error rate is usually unknown!

Type II errors and the power of the analysis

The Type II error rate (beta) is the probability of a false negative. Therefore, the complement of the Type II error rate is the probability of correctly detecting an effect. Statisticians refer to this concept as the power of a hypothesis test. Consequently, 1 – β = the statistical power. Analysts typically estimate power rather than beta directly.

If you read my post about power and sample size analysis, you know that, for a given significance level, the three factors that affect power are sample size, variability in the population, and the effect size. As you design your experiment, you can enter estimates of these three factors into statistical software and it calculates the estimated power for your test.

Suppose you perform a power analysis for an upcoming study and calculate an estimated power of 90%. For this study, the estimated Type II error rate is 10% (1 – 0.9). Keep in mind that variability and effect size are based on estimates and guesses. Consequently, power and the Type II error rate are just estimates rather than something you set directly. These estimates are only as good as the inputs into your power analysis.
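As a rough sketch of what such a power analysis does, the code below finds the smallest sample size that reaches a target power. It assumes a one-sided z-test with known standard deviation, which is simpler than what statistical software typically handles. The effect size of 0.5 and standard deviation of 1 are hypothetical inputs, and both function names are made up for this example.

```python
from math import ceil
from statistics import NormalDist


def power_one_sided_z(effect, sigma, n, alpha=0.05):
    """Estimated power of a one-sided z-test for a true shift of `effect`."""
    std_normal = NormalDist()
    z_crit = std_normal.inv_cdf(1.0 - alpha)
    return 1.0 - std_normal.cdf(z_crit - effect * n ** 0.5 / sigma)


def required_n(effect, sigma, alpha=0.05, power=0.90):
    """Smallest sample size whose estimated power reaches the target."""
    std_normal = NormalDist()
    z_alpha = std_normal.inv_cdf(1.0 - alpha)
    z_power = std_normal.inv_cdf(power)
    return ceil(((z_alpha + z_power) * sigma / effect) ** 2)


n = required_n(effect=0.5, sigma=1.0)
estimated_power = power_one_sided_z(effect=0.5, sigma=1.0, n=n)
print(f"n = {n}, estimated power = {estimated_power:.3f}, "
      f"estimated Type II error rate = {1 - estimated_power:.3f}")
```

If the guessed effect size or variability is off, the real power will differ from this estimate, which is the sense in which the result is only as good as its inputs.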

Low variability and larger effect sizes decrease the Type II error rate, which increases the statistical power. However, researchers usually have less control over those aspects of a hypothesis test. Typically, researchers have the most control over sample size, making it the critical way to manage your Type II error rate. Holding everything else constant, increasing the sample size reduces the Type II error rate and increases power.

Graphing Type I and Type II Errors

The graph below illustrates the two types of errors using two sampling distributions. The critical region line represents the point at which you reject or fail to reject the null hypothesis. Of course, when you perform the hypothesis test, you don’t know which hypothesis is correct. And, the properties of the distribution for the alternative hypothesis are usually unknown. However, use this graph to understand the general nature of these errors and how they are related.

The distribution on the left represents the null hypothesis. If the null hypothesis is true, you only need to worry about Type I errors, which is the shaded portion of the null hypothesis distribution. The rest of the null distribution represents the correct decision of failing to reject the null.

On the other hand, if the alternative hypothesis is true, you need to worry about Type II errors. The shaded region on the alternative hypothesis distribution represents the Type II error rate. The rest of the alternative distribution represents the probability of correctly detecting an effect—power.

Moving the critical value line is equivalent to changing the significance level. If you move the line to the left, you’re increasing the significance level (e.g., from α = 0.05 to 0.10). Holding everything else constant, this adjustment increases the Type I error rate while reducing the Type II error rate. Moving the line to the right reduces the significance level (e.g., from α = 0.05 to 0.01), which decreases the Type I error rate but increases the Type II error rate.

Is One Error Worse Than the Other?

As you’ve seen, the nature of the two types of error, their causes, and the certainty of their rates of occurrence are all very different.

A common question is whether one type of error is worse than the other. Statisticians designed hypothesis tests to control Type I errors while Type II errors are much less defined. Consequently, many statisticians state that it is better to fail to detect an effect when it exists than it is to conclude an effect exists when it doesn’t. That is to say, there is a tendency to assume that Type I errors are worse.

However, reality is more complex than that. You should carefully consider the consequences of each type of error for your specific test.

Suppose you are assessing the strength of a new jet engine part that is under consideration. People’s lives are riding on the part’s strength. A false negative in this scenario merely means that the part is strong enough but the test fails to detect it. This situation does not put anyone’s life at risk. On the other hand, Type I errors are worse in this situation because they indicate the part is strong enough when it is not.

Now suppose that the jet engine part is already in use but there are concerns about it failing. In this case, you want the test to be more sensitive to detecting problems even at the risk of false positives. Type II errors are worse in this scenario because the test fails to recognize the problem and leaves these problematic parts in use for longer.

Using hypothesis tests effectively requires that you understand their error rates. By setting the significance level and estimating your test’s power, you can manage both error rates so they meet your requirements.

The error rates in this post are all for individual tests. If you need to perform multiple comparisons, such as comparing group means in ANOVA, you’ll need to use post hoc tests to control the experiment-wise error rate or use the Bonferroni correction.
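As a rough illustration of the Bonferroni correction mentioned above, the sketch below divides the overall significance level by the number of tests and compares each p-value to that stricter threshold. The function name `bonferroni_reject` and the p-values are made up for the example.

```python
def bonferroni_reject(p_values, alpha=0.05):
    """Flag which tests stay significant after a Bonferroni correction.

    Each p-value is compared against alpha divided by the number of tests.
    """
    threshold = alpha / len(p_values)
    return [p < threshold for p in p_values]

# Hypothetical p-values from three comparisons (not from any real study).
p_values = [0.001, 0.02, 0.04]
print(bonferroni_reject(p_values))  # [True, False, False]
```

With three tests the per-test threshold is about 0.0167, so only the first comparison remains significant even though all three fall below 0.05.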


Reader Interactions

June 4, 2024 at 2:04 pm

Very informative.

June 9, 2023 at 9:54 am

Hi Jim- I just signed up for your newsletter and this is my first question to you. I am not a statistician but work with them in my professional life as a QC consultant in biopharmaceutical development. I have a question about Type I and Type II errors in the realm of equivalence testing using two one sided difference testing (TOST). In a recent 2020 publication that I co-authored with a statistician, we stated that the probability of concluding non-equivalence when that is the truth, (which is the opposite of power, the probability of concluding equivalence when it is correct) is 1-2*alpha. This made sense to me because one uses a 90% confidence interval on a mean to evaluate whether the result is within established equivalence bounds with an alpha set to 0.05. However, it appears that specificity (1-alpha) is always the case as is power always being 1-beta. For equivalence testing the latter is 1-2*beta/2 but for specificity it stays as 1-alpha because only one of the null hypotheses in a two-sided test can fail at one time. I still see 1-2*alpha as making more sense as we show in Figure 3 of our paper which shows the white space under the distribution of the alternative hypothesis as 1-2 alpha. The paper can be downloaded as open access here if that would make my question more clear. https://bioprocessingjournal.com/index.php/article-downloads/890-vol-19-open-access-2020-defining-therapeutic-window-for-viral-vectors-a-statistical-framework-to-improve-consistency-in-assigning-product-dose-values I have consulted with other statistical colleagues and cannot get consensus so I would love your opinion and explanation! Thanks in advance!

June 10, 2023 at 1:00 am

Let me preface my response by saying that I’m not an expert in equivalence testing. But here’s my best guess about your question.

The alpha is for each of the hypothesis tests. Each one has a type I error rate of 0.05. Or, as you say, a specificity of 1-alpha. However, there are two tests so we need to consider the family-wise error rate. The formula is the following:

FWER = 1 – (1 – α)^N

Where N is the number of hypothesis tests.

For two tests, there’s a family-wise error rate of 0.0975. Or a family-wise specificity of 0.9025.
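The arithmetic behind those two figures can be checked with a few lines of Python. This is only a sketch of the formula quoted above, which assumes the tests are independent; the function name is an illustrative choice.

```python
def family_wise_error_rate(alpha, n_tests):
    """FWER = 1 - (1 - alpha)^N, assuming the N tests are independent."""
    return 1 - (1 - alpha) ** n_tests

fwer = family_wise_error_rate(0.05, 2)
print(round(fwer, 4))      # 0.0975
print(round(1 - fwer, 4))  # 0.9025
```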

However, I believe they use 90% CI for a different reason (although it’s a very close match to the family-wise error rate). The 90% CI provides consistent results with the two one-side 95% tests. In other words, if the 90% CI is within the equivalency bounds, then the two tests will be significant. If the CI extends above the upper bound, the corresponding test won’t be significant. Etc.

However, using either rationale, I’d say the overall type I error rate is about 0.1.

I hope that answers your question. And, again, I’m not an expert in this particular test.

July 18, 2022 at 5:15 am

Thank you for your valuable content. I have a question regarding correcting for multiple tests. My question is: for exactly how many tests should I correct in the scenario below?

Background: I’m testing for differences between groups A (patient group) and B (control group) in variable X. Variable X is a biological variable present in the body’s left and right side. Variable Y is a questionnaire for group A.

Step 1. Is there a significant difference within groups in the weight of left and right variable X? (I will conduct two paired sample t-tests)

If I find a significant difference in step 1, then I will conduct steps 2A and 2B. However, if I don’t find a significant difference in step 1, then I will only conduct step 2C.

Step 2A. Is there a significant difference between groups in left variable X? (I will conduct one independent sample t-test) Step 2B. Is there a significant difference between groups in right variable X? (I will conduct one independent sample t-test)

Step 2C. Is there a significant difference between groups in total variable X (left + right variable X)? (I will conduct one independent sample t-test)

If I find a significant difference in step 1, then I will conduct steps 3A and 3B. However, if I don’t find a significant difference in step 1, then I will only conduct step 3C.

Step 3A. Is there a significant correlation between left variable X in group A and variable Y? (I will conduct Pearson correlation) Step 3B. Is there a significant correlation between right variable X in group A and variable Y? (I will conduct Pearson correlation)

Step 3C. Is there a significant correlation between total variable X in group A and variable Y? (I will conduct a Pearson correlation)

Regards, De

January 2, 2021 at 1:57 pm

I should say that being a budding statistician, this site seems to be pretty reliable. I have few doubts in here. It would be great if you can clarify it:

“A significance level of 0.05 indicates a 5% risk of concluding that a difference exists when there is no actual difference. ”

My understanding: When we say that the significance level is 0.05, it means we are taking a 5% risk of supporting the alternative hypothesis even though there is no difference? (I think I am not allowed to say the null is true, because the null is only assumed to be true. Right?)

January 2, 2021 at 6:48 pm

The sentence as I write it is correct. Here’s a simple way to understand it. Imagine you’re conducting a computer simulation where you control the population parameters and have the computer draw random samples from the populations that you define. Now, imagine you draw samples from two populations where the means and standard deviations are equal. You know this for a fact because you set the parameters yourself. Then you conduct a series of 2-sample t-tests.

In this example, you know the null hypothesis is correct. However, thanks to random sampling error, some proportion of the t-tests will have statistically significant results (i.e., false positives or Type I errors). The proportion of false positives will equal your significance level over the long run.
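The simulation described here can be sketched in a few lines of Python. To stay within the standard library, this version swaps the 2-sample t-test for a two-sample z-test with a known standard deviation. The sample size, number of simulations, and seed are arbitrary choices for illustration.

```python
import random
from statistics import NormalDist

def false_positive_rate(n_sims=2000, n=30, sigma=1.0, alpha=0.05, seed=1):
    """Share of simulated tests that reject a null that is true by construction.

    Uses a two-sample z-test with known sigma as a stand-in for the
    2-sample t-test, so that only the standard library is needed.
    """
    rng = random.Random(seed)
    z_crit = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided critical value
    se = sigma * (2 / n) ** 0.5                   # std. error of the mean difference
    rejections = 0
    for _ in range(n_sims):
        # Both samples come from the same population, so the null is true.
        a = [rng.gauss(0, sigma) for _ in range(n)]
        b = [rng.gauss(0, sigma) for _ in range(n)]
        z = (sum(a) / n - sum(b) / n) / se
        if abs(z) > z_crit:
            rejections += 1
    return rejections / n_sims

print(false_positive_rate())  # should land near alpha = 0.05
```

Every rejection in this run is a Type I error, because the two populations are identical by design.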

Of course, in real-world experiments, you never know for sure whether the null is true or not. However, given the properties of the hypothesis, you do know what proportion of tests will give you a false positive IF the null is true–and that’s the significance level.

I’m thinking through the wording of how you wrote it and I believe it is equivalent to what I wrote. If there is no difference (the null is true), then you have a 5% chance of incorrectly supporting the alternative. And, again, you’re correct that in the real world you don’t know for sure whether the null is true. But, you can still know the false positive (Type I) error rate. For more information about that property, read my post about how hypothesis tests work .

July 9, 2018 at 11:43 am

I like to use the analogy of a trial. The null hypothesis is that the defendant is innocent. A type I error would be convicting an innocent person and a type II error would be acquitting a guilty one. I like to think that our system makes a type I error very unlikely with the trade off being that a type II error is greater.

July 9, 2018 at 12:03 pm

Hi Doug, I think that is an excellent analogy on multiple levels. As you mention, a trial would set a high bar for the significance level by choosing a very low value for alpha. This helps prevent innocent people from being convicted (Type I error) but does increase the probability of allowing the guilty to go free (Type II error). I often refer to the significance level as an evidentiary standard with this legalistic analogy in mind.

Additionally, in the justice system in the U.S., there is a presumption of innocence and the prosecutor must present sufficient evidence to prove that the defendant is guilty. That’s just like in a hypothesis test where the assumption is that the null hypothesis is true and your sample must contain sufficient evidence to be able to reject the null hypothesis and suggest that the effect exists in the population.

This analogy even works for the similarities behind the phrases “Not guilty” and “Fail to reject the null hypothesis.” In both cases, you aren’t proving innocence or that the null hypothesis is true. When a defendant is “not guilty” it might be that the evidence was insufficient to convince the jury. In a hypothesis test, when you fail to reject the null hypothesis, it’s possible that an effect exists in the population but you have insufficient evidence to detect it. Perhaps the effect exists but the sample size or effect size is too small, or the variability might be too high.


Type I & Type II Errors | Differences, Examples, Visualizations

Published on 18 January 2021 by Pritha Bhandari . Revised on 2 February 2023.

In statistics, a Type I error is a false positive conclusion, while a Type II error is a false negative conclusion.

Making a statistical decision always involves uncertainties, so the risks of making these errors are unavoidable in hypothesis testing.

The probability of making a Type I error is the significance level, or alpha (α), while the probability of making a Type II error is beta (β). These risks can be minimized through careful planning in your study design.

- Type I error (false positive): the test result says you have coronavirus, but you actually don’t.

- Type II error (false negative): the test result says you don’t have coronavirus, but you actually do.

Table of contents

- Error in statistical decision-making

- Type I error

- Type II error

- Trade-off between Type I and Type II errors

- Is a Type I or Type II error worse?

- Frequently asked questions about Type I and II errors

Using hypothesis testing, you can make decisions about whether your data support or refute your research predictions with null and alternative hypotheses.

Hypothesis testing starts with the assumption of no difference between groups or no relationship between variables in the population; this is the null hypothesis. It’s always paired with an alternative hypothesis, which is your research prediction of an actual difference between groups or a true relationship between variables.

In this case:

- The null hypothesis (H0) is that the new drug has no effect on symptoms of the disease.

- The alternative hypothesis (H1) is that the drug is effective for alleviating symptoms of the disease.

Then, you decide whether the null hypothesis can be rejected based on your data and the results of a statistical test. Since these decisions are based on probabilities, there is always a risk of making the wrong conclusion.

- If your results show statistical significance, that means they are very unlikely to occur if the null hypothesis is true. In this case, you would reject your null hypothesis. But sometimes, this may actually be a Type I error.

- If your findings do not show statistical significance, they have a high chance of occurring if the null hypothesis is true. Therefore, you fail to reject your null hypothesis. But sometimes, this may be a Type II error.

A Type I error means rejecting the null hypothesis when it’s actually true. It means concluding that results are statistically significant when, in reality, they came about purely by chance or because of unrelated factors.

The risk of committing this error is the significance level (alpha or α) you choose. That’s a value that you set at the beginning of your study to assess the statistical probability of obtaining your results (p value).

The significance level is usually set at 0.05 or 5%. This means that your results only have a 5% chance of occurring, or less, if the null hypothesis is actually true.

If the p value of your test is lower than the significance level, it means your results are statistically significant and consistent with the alternative hypothesis. If your p value is higher than the significance level, then your results are considered statistically non-significant.

To reduce the Type I error probability, you can simply set a lower significance level.

Type I error rate

The null hypothesis distribution curve below shows the probabilities of obtaining all possible results if the study were repeated with new samples and the null hypothesis were true in the population.

At the tail end, the shaded area represents alpha. It’s also called a critical region in statistics.

If your results fall in the critical region of this curve, they are considered statistically significant and the null hypothesis is rejected. However, this is a false positive conclusion, because the null hypothesis is actually true in this case!

A Type II error means not rejecting the null hypothesis when it’s actually false. This is not quite the same as “accepting” the null hypothesis, because hypothesis testing can only tell you whether to reject the null hypothesis.

Instead, a Type II error means failing to conclude there was an effect when there actually was. In reality, your study may not have had enough statistical power to detect an effect of a certain size.

Power is the extent to which a test can correctly detect a real effect when there is one. A power level of 80% or higher is usually considered acceptable.

The risk of a Type II error is inversely related to the statistical power of a study. The higher the statistical power, the lower the probability of making a Type II error.

Statistical power is determined by:

- Size of the effect : Larger effects are more easily detected.

- Measurement error : Systematic and random errors in recorded data reduce power.

- Sample size : Larger samples reduce sampling error and increase power.

- Significance level : Increasing the significance level increases power.

To (indirectly) reduce the risk of a Type II error, you can increase the sample size or the significance level.
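The influence of effect size, sample size, and significance level on power can be sketched numerically. The example below assumes a two-sided one-sample z-test with the effect size expressed in standard-deviation units. The function name `z_test_power` and the input values are illustrative assumptions, not figures from this article.

```python
from statistics import NormalDist

def z_test_power(effect_size, n, alpha=0.05):
    """Power of a two-sided one-sample z-test.

    effect_size is the true mean shift in standard-deviation units
    (a hypothetical input); n is the sample size.
    """
    std_normal = NormalDist()
    z_crit = std_normal.inv_cdf(1 - alpha / 2)
    shift = effect_size * n ** 0.5
    # Probability that the statistic falls in either rejection tail
    return std_normal.cdf(shift - z_crit) + std_normal.cdf(-shift - z_crit)

# Power grows with sample size for a fixed effect and alpha.
for n in (20, 50, 100):
    print(f"n={n:<4} power={z_test_power(0.3, n):.3f}")

# Raising alpha from 0.05 to 0.10 also raises power (and the Type I risk).
print(f"alpha=0.10 power={z_test_power(0.3, 50, alpha=0.10):.3f}")
```

The Type II error rate for any of these settings is simply 1 minus the returned power.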

Type II error rate

The alternative hypothesis distribution curve below shows the probabilities of obtaining all possible results if the study were repeated with new samples and the alternative hypothesis were true in the population.

The Type II error rate is beta (β), represented by the shaded area on the left side. The remaining area under the curve represents statistical power, which is 1 – β.

Increasing the statistical power of your test directly decreases the risk of making a Type II error.

The Type I and Type II error rates influence each other. That’s because the significance level (the Type I error rate) affects statistical power, which is inversely related to the Type II error rate.

This means there’s an important tradeoff between Type I and Type II errors:

- Setting a lower significance level decreases the Type I error risk, but increases the Type II error risk.

- Increasing the power of a test by raising the significance level decreases the Type II error risk, but increases the Type I error risk.

This trade-off is visualized in the graph below. It shows two curves:

- The null hypothesis distribution shows all possible results you’d obtain if the null hypothesis is true. The correct conclusion for any point on this distribution means not rejecting the null hypothesis.

- The alternative hypothesis distribution shows all possible results you’d obtain if the alternative hypothesis is true. The correct conclusion for any point on this distribution means rejecting the null hypothesis.

Type I and Type II errors occur where these two distributions overlap. The blue shaded area represents alpha, the Type I error rate, and the green shaded area represents beta, the Type II error rate.

By setting the Type I error rate, you indirectly influence the size of the Type II error rate as well.

It’s important to strike a balance between the risks of making Type I and Type II errors. With the sample size and effect size held fixed, reducing alpha comes at the cost of increasing beta, and vice versa.

For statisticians, a Type I error is usually worse. In practical terms, however, either type of error could be worse depending on your research context.

A Type I error means mistakenly going against the main statistical assumption of a null hypothesis. This may lead to new policies, practices or treatments that are inadequate or a waste of resources.

In contrast, a Type II error means failing to reject a null hypothesis. It may only result in missed opportunities to innovate, but these can also have important practical consequences.

In statistics, a Type I error means rejecting the null hypothesis when it’s actually true, while a Type II error means failing to reject the null hypothesis when it’s actually false.

The risk of making a Type I error is the significance level (or alpha) that you choose. That’s a value that you set at the beginning of your study to assess the statistical probability of obtaining your results (p value).

To reduce the Type I error probability, you can set a lower significance level.

The risk of making a Type II error is inversely related to the statistical power of a test. Power is the extent to which a test can correctly detect a real effect when there is one.

To (indirectly) reduce the risk of a Type II error, you can increase the sample size or the significance level to increase statistical power.

Statistical significance is a term used by researchers to state that it is unlikely their observations could have occurred under the null hypothesis of a statistical test. Significance is usually denoted by a p-value, or probability value.

Statistical significance is arbitrary: it depends on the threshold, or alpha value, chosen by the researcher. The most common threshold is p < 0.05, which means that the data is likely to occur less than 5% of the time under the null hypothesis.

When the p-value falls below the chosen alpha value, then we say the result of the test is statistically significant.

In statistics, power refers to the likelihood of a hypothesis test detecting a true effect if there is one. A statistically powerful test is less likely to produce a false negative (a Type II error).

If you don’t ensure enough power in your study, you may not be able to detect a statistically significant result even when it has practical significance. Your study might not have the ability to answer your research question.

Cite this Scribbr article


Bhandari, P. (2023, February 02). Type I & Type II Errors | Differences, Examples, Visualizations. Scribbr. Retrieved 27 July 2024, from https://www.scribbr.co.uk/stats/type-i-and-type-ii-error/


Pritha Bhandari

- Type I vs Type II Errors: Causes, Examples & Prevention

There are two common types of errors, type I and type II, that you’ll likely encounter when testing a statistical hypothesis. The mistaken rejection of a true null hypothesis is known as a type I error. In other words, a type I error is a false-positive finding in hypothesis testing. A type II error, on the other hand, is a false-negative finding: failing to reject a null hypothesis that is actually false.

To better understand the two types of errors, here’s an example:

Let’s assume you notice some flu-like symptoms and decide to go to a hospital to get tested for the presence of malaria. There is a possibility of two errors occurring:

- Type I error (false positive): The test result shows you have malaria, but you actually don’t have it.

- Type II error (false negative): The test result indicates that you don’t have malaria when you in fact do.

The concepts of Type I and Type II errors are used extensively in areas such as computer science, engineering, statistics, and many more.

The chance of committing a type I error is known as alpha (α), while the chance of committing a type II error is known as beta (β). If you carefully plan your study design, you can minimize the probability of committing either of the errors.


What are Type I Errors?

A Type I error occurs when a null hypothesis is rejected during hypothesis testing even though it is actually true and should not have been rejected. In short, if a true null hypothesis is erroneously rejected, it is called a Type I error.

What this means is that results are concluded to be significant when, in actual fact, they were obtained by chance.

When conducting hypothesis testing, a null hypothesis is stated before carrying out the actual test. The null hypothesis typically presumes that there is no relationship between the items being tested that could cause an outcome for the test.

When a null hypothesis is rejected, the researcher concludes that a relationship exists between the items being tested. If that relationship is not real, the conclusion is a false alarm or false positive, known as a Type I error.

It is worth noting that the statistical outcome of every test involves uncertainty, so the risk of making errors in hypothesis testing is unavoidable. A Type I error may be considered an error of commission, in the sense that the producer or researcher mistakenly decides on a false outcome.


Causes of Type I Error

- When a factor other than the variable of interest affects the variables being tested. This factor produces a result that supports the decision to reject the null hypothesis.

- When the result of a hypothesis test arises purely by chance and the null hypothesis is rejected as a consequence.

- Lastly, because the null hypothesis and the significance level are fixed before the test is conducted, a true null hypothesis will still be rejected by chance at a rate equal to the significance level, regardless of the sample size.


Risk Factor and Probability of Type I Error

- The probability of a Type I error is set in advance, because the level of significance of the hypothesis test is chosen before the test is run.

- The level of significance of a test is represented by α, and it is the probability of a Type I error.

- It is possible to reduce the rate of Type I error by choosing a lower significance level. The consequence of this, however, is that for a given sample size the possibility of a Type II error occurring in the test will increase.

- In a case where the Type I error rate is set at 5 percent, it means that about 5 out of every 100 true null hypotheses (H0) will be rejected.

- Another risk factor is that the rates of Type I and Type II errors cannot both be reduced by adjusting the significance level alone. Reducing the possibility of one error increases the possibility of the other.


Consequences of a Type I Error

A type I error will result in a false alarm. The outcome of the hypothesis test will be a false positive. This implies that the researcher concluded that an effect exists when, in fact, it does not.

For a sales group, a type I error may result in losing potential market share and missing out on probable sales because the findings of a test are faulty.

What are Type II Errors?

A Type II error means a researcher or producer did not reject the null hypothesis when it is in fact false. This does not mean the null hypothesis is accepted as true, as hypothesis testing only indicates whether a null hypothesis should be rejected.

A Type II error means the test failed to recognize an effect when an effect truly existed. A test is conventionally considered adequately powered to detect a real effect when its power level is 80% or more.

This implies that the statistical power of a test determines the risk of a type II error. The higher the statistical power, the lower the probability of a type II error occurring.

Note: Null hypothesis is represented as (H0) and alternative hypothesis is represented as (H1)

Causes of Type II Error

- Type II error is mainly caused by the statistical power of a test being low. A Type II error is more likely to occur if the statistical test is not powerful enough.

- The size of the sample can also lead to a Type II error because the outcome of the test will be affected. A small sample size might hide the significance of a real effect in the items being tested.

- Another cause of Type II error is the possibility that a researcher may fail to reject the null hypothesis even when the alternative hypothesis is correct.

Probability of Type II Error

- To arrive at the probability of a Type II error occurring, subtract the power of the test from 1.

- The probability of a Type II error is represented by β.

- It is possible to reduce the rate of Type II error if the significance level of the test is increased.

- In a case where the Type II error rate is 5 percent, it means that about 5 out of every 100 false null hypotheses (H0) will fail to be rejected.

- Type I error and Type II error are connected. Hence, reducing the possibility of one type of error means the possibility of the other error will increase.

- It is important to decide which error has the less serious consequences for the test.

Consequences of a Type II Error

Type II errors can also result in a wrong decision that will affect the outcomes of a test and have real-life consequences.

Note that even if a test appears to confirm your hypothesis, your actual conversion results can later contradict that outcome. This turn of events can be discouraging, hence the need to be extra careful when conducting hypothesis testing.

How to Avoid Type I and II errors

Type I error and type II errors can not be entirely avoided in hypothesis testing, but the researcher can reduce the probability of them occurring.

For Type I error, choose a low significance level to reduce the chance of making the error. This level is determined by the researcher.

To avoid type II errors, ensure the test has high statistical power. The higher the statistical power, the higher the chance of avoiding an error. Aim for a statistical power of 80% or above when you design your test.

Increase the sample size of the hypothesis test.

The risk of a Type II error can also be reduced if a higher significance level is chosen for the test.

How to Detect Type I and Type II Errors in Data

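After completing a study, the researcher can conduct any of the available statistical tests to reject the default (null) hypothesis in favor of its alternative. If the study is free of bias, there are four possible outcomes: correctly rejecting a false null hypothesis, correctly failing to reject a true null hypothesis, a type I error, and a type II error. See the image below.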

Image source: IPJ

If the findings in the sample and reality in the population match, the researchers’ inferences will be correct. However, if in any of the situations a type I or II error has been made, the inference will be incorrect.

Key Differences between Type I & II Errors

- In statistical hypothesis testing, a type I error is caused by rejecting a null hypothesis that is actually correct, while a Type II error occurs when the null hypothesis is not rejected even though it is not true.

- Type I error is the same as a false alarm or false positive, while Type II error is also referred to as a false negative.

- The probability of a Type I error is represented by α, while the probability of a Type II error is represented by β.

- The level of significance determines the probability of a type I error, while the probability of a type II error is found by subtracting the power of the test from 1.

- You can decrease the possibility of Type I error by reducing the level of significance. In the same way, you can reduce the probability of a Type II error by increasing the significance level of the test.

- A Type I error occurs when you reject a true null hypothesis; in contrast, a Type II error occurs when you fail to reject a false null hypothesis.

Examples of Type I & II errors

Type I error examples

To understand the statistical significance of Type I error, let us look at this example.

In this hypothesis, a driver wants to determine the relationship between getting a new driving wheel and the number of passengers he carries in a week.

Now, if the number of passengers he carries in a week after getting the new driving wheel is higher than the number he carried in a week with the old driving wheel, this driver might assume that there is a relationship between the new wheel and the increase in the number of passengers and support the alternative hypothesis.

However, the increase in the number of passengers he carried in a week might have been due to chance and not to the new wheel, in which case rejecting the null hypothesis is a type I error.

In that case, the driver should not have rejected the null hypothesis, because the increase in his passengers might have been due to chance rather than a real effect.

Type II error examples

For Type II error and statistical power, let us assume a hypothesis where a farmer who rears birds assumes none of his birds have bird flu. He observes his birds for four days to find out if there are symptoms of the flu.

If, after four days, the farmer sees no symptoms of the flu in his birds, he might assume his birds are indeed free from bird flu, whereas the bird flu might have affected his birds and the symptoms might only become obvious on the sixth day.

On this basis, the farmer accepts that no flu exists in his birds. This leads to a type II error, where he fails to reject the null hypothesis when it is in fact false.

Frequently Asked Questions about Type I and II Errors

- Is a Type I or Type II error worse?

Either a Type I or a type II error could be worse, depending on the type of research being conducted.

A Type I error means concluding that an effect exists when in reality it does not. The consequence of this is that other alternatives are rejected in favor of this conclusion. A type II error means that a false null hypothesis was not rejected. This means that a real effect goes undetected and its benefits are missed.

Statisticians, however, usually regard a Type I error as the more serious one. It is difficult to decide which of the errors is worse than the other, but both types of errors could do enough damage to your research.

- Does sample size affect Type I error?

The sample size, small or large, does not affect the Type I error rate, which is fixed by the significance level you choose. A larger sample therefore does not make a Type I error more likely.

The main requirement is that your sample is adequately sized. Sample size does matter for Type II error: if the sample is small, the power of the test decreases and a Type II error becomes more likely.

The researcher may then wrongly conclude that there is no effect, which undermines the outcome of the hypothesis test.
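The claim that sample size does not change the Type I error rate can be checked with a small simulation. The sketch below is illustrative only: it assumes a two-sided z-test with known standard deviation 1, draws samples for which the null hypothesis is true by construction, and counts how often the test rejects. The function name, trial count, and seed are hypothetical choices, not part of the article.

```python
import random
from statistics import NormalDist


def type_i_error_rate(n, alpha=0.05, trials=2000, seed=0):
    """Estimate how often a two-sided z-test rejects a true null hypothesis.

    Samples come from N(0, 1), so H0: mean = 0 is true by construction
    and every rejection counted here is a Type I error.
    """
    rng = random.Random(seed)
    z_crit = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided critical value
    rejections = 0
    for _ in range(trials):
        sample = [rng.gauss(0.0, 1.0) for _ in range(n)]
        z = (sum(sample) / n) * n ** 0.5  # z statistic with known sigma = 1
        if abs(z) > z_crit:
            rejections += 1
    return rejections / trials


# The estimated rate should stay near alpha for every sample size.
for n in (10, 50, 200):
    print(f"n={n:>3}  estimated Type I error rate={type_i_error_rate(n):.3f}")
```

Under these assumptions the estimated rate should hover around the chosen significance level for every sample size, up to simulation noise.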

- What is statistical power as it relates to Type I or Type II errors?

Statistical power is the probability of correctly rejecting a false null hypothesis, that is, 1 - β, where β is the probability of a Type II error. Random measurement error reduces the statistical power of a hypothesis test. In addition, the larger the effect size, the easier the effect is to detect.

The statistical power of a test increases when the significance level is raised, and also when a larger sample is tested, so both reduce the risk of a Type II error. Raising the significance level, however, also raises the risk of a Type I error.
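The way power depends on sample size and significance level can be sketched for one simple case. The example below assumes a one-sided z-test of H0: mean = 0 with known standard deviation; the function name and the effect size of 0.3 are hypothetical values chosen only for illustration.

```python
from statistics import NormalDist


def power_one_sided_z(effect, sigma, n, alpha=0.05):
    """Power of a one-sided z-test of H0: mu = 0 when the true mean is `effect`.

    Power is 1 - beta, the probability of rejecting H0 when it is false.
    """
    std_normal = NormalDist()
    z_crit = std_normal.inv_cdf(1 - alpha)   # rejection threshold under H0
    shift = effect * n ** 0.5 / sigma        # how far the true mean moves the z statistic
    return 1 - std_normal.cdf(z_crit - shift)


# Hypothetical effect of 0.3 standard deviations.
for alpha in (0.01, 0.05):
    for n in (10, 40, 160):
        print(f"alpha={alpha}  n={n:>3}  power={power_one_sided_z(0.3, 1.0, n, alpha):.3f}")
```

In this setting power rises with both the sample size and the significance level, and it equals the significance level itself when the true effect is zero.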

- What is statistical significance as it relates to Type I or Type II errors?

Statistical significance relates to Type I error. A researcher may find that the outcome of a test is statistically significant and reject the null hypothesis even though the null hypothesis is true. In fact, the outcome may have happened by chance.

The risk of a Type I error decreases when a lower significance level is set.

If the p-value of your test is lower than the significance level, the outcome is statistically significant and supports the alternative hypothesis.

In this article, we have extensively discussed Type I error and Type II error. We have also discussed their causes, the probabilities of their occurrence, and how to avoid them. We have seen that both types of error carry costs. The best approach as a researcher is to know which risk matters more in your study and to plan accordingly.


- busayo.longe



6.1 - Type I and Type II Errors

When conducting a hypothesis test there are two possible decisions: reject the null hypothesis or fail to reject the null hypothesis. You should remember though, hypothesis testing uses data from a sample to make an inference about a population. When conducting a hypothesis test we do not know the population parameters. In most cases, we don't know if our inference is correct or incorrect.

When we reject the null hypothesis there are two possibilities. There could really be a difference in the population, in which case we made a correct decision. Or, it is possible that there is not a difference in the population (i.e., \(H_0\) is true) but our sample was different from the hypothesized value due to random sampling variation. In that case we made an error. This is known as a Type I error.

When we fail to reject the null hypothesis there are also two possibilities. If the null hypothesis is really true, and there is not a difference in the population, then we made the correct decision. If there is a difference in the population, and we failed to reject it, then we made a Type II error.

Type I error: Rejecting \(H_0\) when \(H_0\) is really true. Its probability is denoted by \(\alpha\) ("alpha") and is commonly set at .05.

\(\alpha=P(\text{Type I error})\)

Type II error: Failing to reject \(H_0\) when \(H_0\) is really false. Its probability is denoted by \(\beta\) ("beta").

\(\beta=P(\text{Type II error})\)

| Decision | Reality: \(H_0\) is true | Reality: \(H_0\) is false |
|---|---|---|
| Reject \(H_0\) (conclude \(H_a\)) | Type I error | Correct decision |
| Fail to reject \(H_0\) | Correct decision | Type II error |
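The four cells of this decision table can also be written as a small lookup. This is only an illustrative sketch; the function name is hypothetical, and in practice the true state of \(H_0\) is unknown, so the function is useful for reasoning and simulation rather than for real data.

```python
def classify_outcome(reject_h0: bool, h0_is_true: bool) -> str:
    """Name the cell of the decision table for a given decision and reality."""
    if reject_h0 and h0_is_true:
        return "Type I error"       # rejected a true null hypothesis
    if not reject_h0 and not h0_is_true:
        return "Type II error"      # failed to reject a false null hypothesis
    return "Correct decision"


# Walk through all four combinations of decision and reality.
for reject in (True, False):
    for truth in (True, False):
        print(f"reject_h0={reject!s:<5}  h0_is_true={truth!s:<5}  ->  {classify_outcome(reject, truth)}")
```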

Example: Trial

A man goes to trial where he is being tried for the murder of his wife.

We can put it in a hypothesis testing framework. The hypotheses being tested are:

- \(H_0\) : Not Guilty

- \(H_a\) : Guilty

Type I error is committed if we reject \(H_0\) when it is true. In other words, the man did not kill his wife but was found guilty and is punished for a crime he did not really commit.

Type II error is committed if we fail to reject \(H_0\) when it is false. In other words, the man did kill his wife but was found not guilty and was not punished.

Example: Culinary Arts Study

A group of culinary arts students is comparing two methods for preparing asparagus: traditional steaming and a new frying method. They want to know if patrons of their school restaurant prefer their new frying method over the traditional steaming method. A sample of patrons are given asparagus prepared using each method and asked to select their preference. A statistical analysis is performed to determine if more than 50% of participants prefer the new frying method:

- \(H_{0}: p = .50\)

- \(H_{a}: p>.50\)

Type I error occurs if they reject the null hypothesis and conclude that their new frying method is preferred when in reality it is not. This may occur if, by random sampling error, they happen to get a sample that prefers the new frying method more than the overall population does. If this does occur, the consequence is that the students will have an incorrect belief that their new method of frying asparagus is superior to the traditional method of steaming.

Type II error occurs if they fail to reject the null hypothesis and conclude that their new method is not superior when in reality it is. If this does occur, the consequence is that the students will have an incorrect belief that their new method is not superior to the traditional method when in reality it is.
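The test in this example can be sketched as an exact one-sided binomial test of \(H_0: p = .50\) against \(H_a: p > .50\). The counts below (60 of 100 patrons preferring the fried asparagus) and the .05 significance level are hypothetical, since the example does not report any data, and the function name is likewise an assumption.

```python
from math import comb


def binomial_p_value_greater(successes, n, p0=0.5):
    """Exact one-sided p-value: P(X >= successes) for X ~ Binomial(n, p0)."""
    return sum(
        comb(n, k) * p0 ** k * (1 - p0) ** (n - k)
        for k in range(successes, n + 1)
    )


# Hypothetical data: 60 of 100 patrons prefer the new frying method.
alpha = 0.05
p_value = binomial_p_value_greater(60, 100)
reject_h0 = p_value < alpha
print(f"p-value = {p_value:.4f}, reject H0: {reject_h0}")
```

If the students reject \(H_0\) on data like these but the true proportion is .50, they have made a Type I error; if they fail to reject while the true proportion is above .50, they have made a Type II error.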


What are Type I and Type II Errors?

Posted on 21st April 2017 by Priscilla Wittkopf

When conducting a hypothesis test, we could:

- Reject the null hypothesis when there is a genuine effect in the population;

- Fail to reject the null hypothesis when there isn’t a genuine effect in the population.

However, as we are inferring results from samples and using probabilities to do so, we are never working with 100% certainty of the presence or absence of an effect. There are two other possible outcomes of a hypothesis test.

- Reject the null hypothesis when there isn’t a genuine effect – we have a false positive result and this is called Type I error.

- Fail to reject the null hypothesis when there is a genuine effect – we have a false negative result and this is called Type II error.

So in simple terms, a type I error is erroneously detecting an effect that is not present, while a type II error is the failure to detect an effect that is present.

Type I error

This error occurs when we reject the null hypothesis when we should have retained it. That means that we believe we found a genuine effect when in reality there isn’t one. The probability of a type I error occurring is represented by α and as a convention the threshold is set at 0.05 (also known as significance level). When setting a threshold at 0.05 we are accepting that there is a 5% probability of identifying an effect when actually there isn’t one.

Type II error

This error occurs when we fail to reject the null hypothesis when we should have rejected it. In other words, we believe that there isn’t a genuine effect when actually there is one. The probability of a Type II error is represented as β and this is related to the power of the test (power = 1 - β). Cohen (1998) proposed that the maximum accepted probability of a Type II error should be 20% (β = 0.2).

When designing and planning a study the researcher should decide the values of α and β, bearing in mind that inferential statistics involve a balance between Type I and Type II errors. If α is set at a very small value the researcher is more rigorous with the standards of rejection of the null hypothesis. For example, if α = 0.01 the researcher is accepting a probability of 1% of erroneously rejecting the null hypothesis, but there is an increase in the probability of a Type II error.
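To make this trade-off concrete, here is a rough worked example under assumptions that are not in the original post: a one-sided z-test with known standard deviation \(\sigma\), true effect \(\delta\), and sample size \(n\), where \(\Phi\) is the standard normal distribution function and \(z_{1-\alpha}\) is its \(1-\alpha\) quantile. In that setting,

\[
\beta = \Phi\!\left(z_{1-\alpha} - \frac{\delta\sqrt{n}}{\sigma}\right).
\]

Taking \(\delta\sqrt{n}/\sigma = 2.5\) as an illustrative value:

\[
\alpha = 0.05:\quad \beta = \Phi(1.645 - 2.5) = \Phi(-0.855) \approx 0.20,
\qquad
\alpha = 0.01:\quad \beta = \Phi(2.326 - 2.5) = \Phi(-0.174) \approx 0.43.
\]

With the study design held fixed, tightening α from 0.05 to 0.01 roughly doubles the probability of a Type II error in this example.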

In summary, we can see on the table the possible outcomes of a hypothesis test:

Keep this table in mind when designing, analysing and reading studies; it will help when interpreting findings.

COHEN, J. 1990. Things I have learned (so far). American Psychologist, 45, 1304.

COHEN, J. 1998. Statistical Power Analysis for the Behavioral Sciences, Lawrence Erlbaum Associates.

FIELD, A. 2013. Discovering statistics using IBM SPSS statistics, Sage.


The Difference Between Type I and Type II Errors in Hypothesis Testing



The statistical practice of hypothesis testing is widespread not only in statistics but also throughout the natural and social sciences. When we conduct a hypothesis test there are a couple of things that could go wrong. There are two kinds of errors, which by design cannot be avoided, and we must be aware that these errors exist. The errors are given the quite pedestrian names of type I and type II errors. What are type I and type II errors, and how do we distinguish between them? Briefly:

- Type I errors happen when we reject a true null hypothesis

- Type II errors happen when we fail to reject a false null hypothesis

We will explore more background behind these types of errors with the goal of understanding these statements.

Hypothesis Testing

The process of hypothesis testing can seem to be quite varied with a multitude of test statistics. But the general process is the same. Hypothesis testing involves the statement of a null hypothesis and the selection of a level of significance. The null hypothesis is either true or false and represents the default claim for a treatment or procedure. For example, when examining the effectiveness of a drug, the null hypothesis would be that the drug has no effect on a disease.

After formulating the null hypothesis and choosing a level of significance, we acquire data through observation. Statistical calculations tell us whether or not we should reject the null hypothesis.

In an ideal world, we would always reject the null hypothesis when it is false, and we would not reject the null hypothesis when it is indeed true. But there are two other scenarios that are possible, each of which will result in an error.

Type I Error

The first kind of error that is possible involves the rejection of a null hypothesis that is actually true. This kind of error is called a type I error and is sometimes called an error of the first kind.

Type I errors are equivalent to false positives. Let’s go back to the example of a drug being used to treat a disease. If we reject the null hypothesis in this situation, then our claim is that the drug does, in fact, have some effect on a disease. But if the null hypothesis is true, then, in reality, the drug does not combat the disease at all. The drug is falsely claimed to have a positive effect on a disease.