P-Value in Statistical Hypothesis Tests: What is it?

P value definition.

A p value is used in hypothesis testing to help you support or reject the null hypothesis . The p value is the evidence against a null hypothesis . The smaller the p-value, the stronger the evidence that you should reject the null hypothesis.

P values are expressed as decimals although it may be easier to understand what they are if you convert them to a percentage . For example, a p value of 0.0254 is 2.54%. This means there is a 2.54% chance your results could be random (i.e. happened by chance). That’s pretty tiny. On the other hand, a large p-value of .9(90%) means your results have a 90% probability of being completely random and not due to anything in your experiment. Therefore, the smaller the p-value, the more important (“ significant “) your results.

When you run a hypothesis test , you compare the p value from your test to the alpha level you selected when you ran the test. Alpha levels can also be written as percentages.

P Value vs Alpha level

Alpha levels are controlled by the researcher and are related to confidence levels . You get an alpha level by subtracting your confidence level from 100%. For example, if you want to be 98 percent confident in your research, the alpha level would be 2% (100% – 98%). When you run the hypothesis test, the test will give you a value for p. Compare that value to your chosen alpha level. For example, let’s say you chose an alpha level of 5% (0.05). If the results from the test give you:

- A small p (≤ 0.05), reject the null hypothesis . This is strong evidence that the null hypothesis is invalid.

- A large p (> 0.05) means the alternate hypothesis is weak, so you do not reject the null.

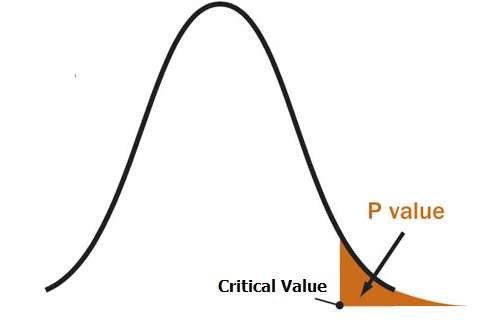

P Values and Critical Values

What if I Don’t Have an Alpha Level?

In an ideal world, you’ll have an alpha level. But if you do not, you can still use the following rough guidelines in deciding whether to support or reject the null hypothesis:

- If p > .10 → “not significant”

- If p ≤ .10 → “marginally significant”

- If p ≤ .05 → “significant”

- If p ≤ .01 → “highly significant.”

How to Calculate a P Value on the TI 83

Example question: The average wait time to see an E.R. doctor is said to be 150 minutes. You think the wait time is actually less. You take a random sample of 30 people and find their average wait is 148 minutes with a standard deviation of 5 minutes. Assume the distribution is normal. Find the p value for this test.

- Press STAT then arrow over to TESTS.

- Press ENTER for Z-Test .

- Arrow over to Stats. Press ENTER.

- Arrow down to μ0 and type 150. This is our null hypothesis mean.

- Arrow down to σ. Type in your std dev: 5.

- Arrow down to xbar. Type in your sample mean : 148.

- Arrow down to n. Type in your sample size : 30.

- Arrow to <μ0 for a left tail test . Press ENTER.

- Arrow down to Calculate. Press ENTER. P is given as .014, or about 1%.

The probability that you would get a sample mean of 148 minutes is tiny, so you should reject the null hypothesis.

Note : If you don’t want to run a test, you could also use the TI 83 NormCDF function to get the area (which is the same thing as the probability value).

Dodge, Y. (2008). The Concise Encyclopedia of Statistics . Springer. Gonick, L. (1993). The Cartoon Guide to Statistics . HarperPerennial.

Warning: The NCBI web site requires JavaScript to function. more...

An official website of the United States government

The .gov means it's official. Federal government websites often end in .gov or .mil. Before sharing sensitive information, make sure you're on a federal government site.

The site is secure. The https:// ensures that you are connecting to the official website and that any information you provide is encrypted and transmitted securely.

- Publications

- Account settings

- Browse Titles

NCBI Bookshelf. A service of the National Library of Medicine, National Institutes of Health.

StatPearls [Internet]. Treasure Island (FL): StatPearls Publishing; 2024 Jan-.

StatPearls [Internet].

Hypothesis testing, p values, confidence intervals, and significance.

Jacob Shreffler ; Martin R. Huecker .

Affiliations

Last Update: March 13, 2023 .

- Definition/Introduction

Medical providers often rely on evidence-based medicine to guide decision-making in practice. Often a research hypothesis is tested with results provided, typically with p values, confidence intervals, or both. Additionally, statistical or research significance is estimated or determined by the investigators. Unfortunately, healthcare providers may have different comfort levels in interpreting these findings, which may affect the adequate application of the data.

- Issues of Concern

Without a foundational understanding of hypothesis testing, p values, confidence intervals, and the difference between statistical and clinical significance, it may affect healthcare providers' ability to make clinical decisions without relying purely on the research investigators deemed level of significance. Therefore, an overview of these concepts is provided to allow medical professionals to use their expertise to determine if results are reported sufficiently and if the study outcomes are clinically appropriate to be applied in healthcare practice.

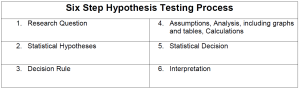

Hypothesis Testing

Investigators conducting studies need research questions and hypotheses to guide analyses. Starting with broad research questions (RQs), investigators then identify a gap in current clinical practice or research. Any research problem or statement is grounded in a better understanding of relationships between two or more variables. For this article, we will use the following research question example:

Research Question: Is Drug 23 an effective treatment for Disease A?

Research questions do not directly imply specific guesses or predictions; we must formulate research hypotheses. A hypothesis is a predetermined declaration regarding the research question in which the investigator(s) makes a precise, educated guess about a study outcome. This is sometimes called the alternative hypothesis and ultimately allows the researcher to take a stance based on experience or insight from medical literature. An example of a hypothesis is below.

Research Hypothesis: Drug 23 will significantly reduce symptoms associated with Disease A compared to Drug 22.

The null hypothesis states that there is no statistical difference between groups based on the stated research hypothesis.

Researchers should be aware of journal recommendations when considering how to report p values, and manuscripts should remain internally consistent.

Regarding p values, as the number of individuals enrolled in a study (the sample size) increases, the likelihood of finding a statistically significant effect increases. With very large sample sizes, the p-value can be very low significant differences in the reduction of symptoms for Disease A between Drug 23 and Drug 22. The null hypothesis is deemed true until a study presents significant data to support rejecting the null hypothesis. Based on the results, the investigators will either reject the null hypothesis (if they found significant differences or associations) or fail to reject the null hypothesis (they could not provide proof that there were significant differences or associations).

To test a hypothesis, researchers obtain data on a representative sample to determine whether to reject or fail to reject a null hypothesis. In most research studies, it is not feasible to obtain data for an entire population. Using a sampling procedure allows for statistical inference, though this involves a certain possibility of error. [1] When determining whether to reject or fail to reject the null hypothesis, mistakes can be made: Type I and Type II errors. Though it is impossible to ensure that these errors have not occurred, researchers should limit the possibilities of these faults. [2]

Significance

Significance is a term to describe the substantive importance of medical research. Statistical significance is the likelihood of results due to chance. [3] Healthcare providers should always delineate statistical significance from clinical significance, a common error when reviewing biomedical research. [4] When conceptualizing findings reported as either significant or not significant, healthcare providers should not simply accept researchers' results or conclusions without considering the clinical significance. Healthcare professionals should consider the clinical importance of findings and understand both p values and confidence intervals so they do not have to rely on the researchers to determine the level of significance. [5] One criterion often used to determine statistical significance is the utilization of p values.

P values are used in research to determine whether the sample estimate is significantly different from a hypothesized value. The p-value is the probability that the observed effect within the study would have occurred by chance if, in reality, there was no true effect. Conventionally, data yielding a p<0.05 or p<0.01 is considered statistically significant. While some have debated that the 0.05 level should be lowered, it is still universally practiced. [6] Hypothesis testing allows us to determine the size of the effect.

An example of findings reported with p values are below:

Statement: Drug 23 reduced patients' symptoms compared to Drug 22. Patients who received Drug 23 (n=100) were 2.1 times less likely than patients who received Drug 22 (n = 100) to experience symptoms of Disease A, p<0.05.

Statement:Individuals who were prescribed Drug 23 experienced fewer symptoms (M = 1.3, SD = 0.7) compared to individuals who were prescribed Drug 22 (M = 5.3, SD = 1.9). This finding was statistically significant, p= 0.02.

For either statement, if the threshold had been set at 0.05, the null hypothesis (that there was no relationship) should be rejected, and we should conclude significant differences. Noticeably, as can be seen in the two statements above, some researchers will report findings with < or > and others will provide an exact p-value (0.000001) but never zero [6] . When examining research, readers should understand how p values are reported. The best practice is to report all p values for all variables within a study design, rather than only providing p values for variables with significant findings. [7] The inclusion of all p values provides evidence for study validity and limits suspicion for selective reporting/data mining.

While researchers have historically used p values, experts who find p values problematic encourage the use of confidence intervals. [8] . P-values alone do not allow us to understand the size or the extent of the differences or associations. [3] In March 2016, the American Statistical Association (ASA) released a statement on p values, noting that scientific decision-making and conclusions should not be based on a fixed p-value threshold (e.g., 0.05). They recommend focusing on the significance of results in the context of study design, quality of measurements, and validity of data. Ultimately, the ASA statement noted that in isolation, a p-value does not provide strong evidence. [9]

When conceptualizing clinical work, healthcare professionals should consider p values with a concurrent appraisal study design validity. For example, a p-value from a double-blinded randomized clinical trial (designed to minimize bias) should be weighted higher than one from a retrospective observational study [7] . The p-value debate has smoldered since the 1950s [10] , and replacement with confidence intervals has been suggested since the 1980s. [11]

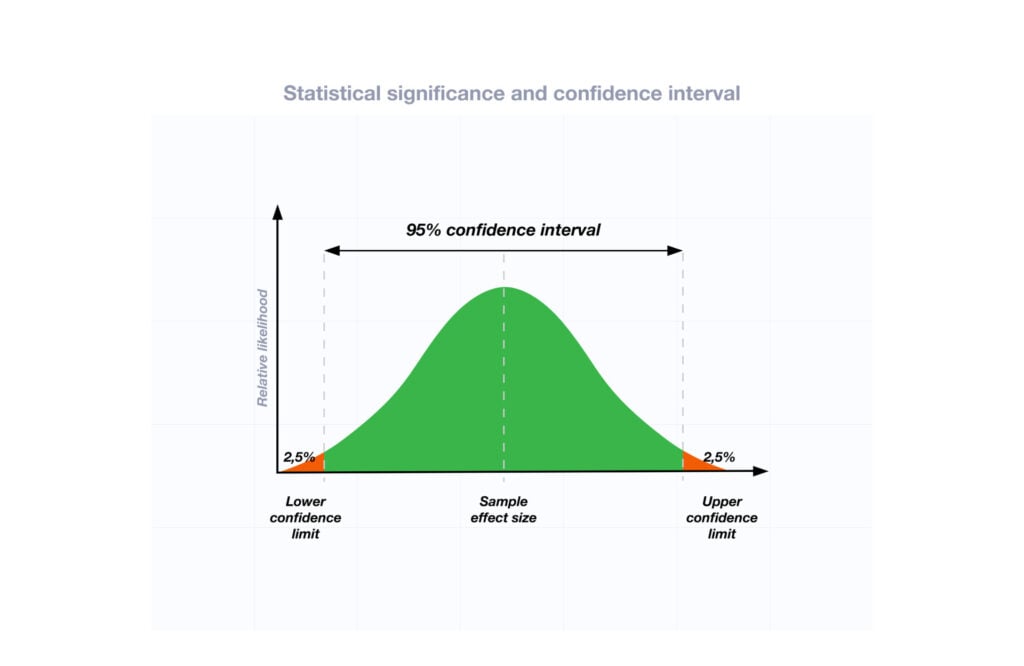

Confidence Intervals

A confidence interval provides a range of values within given confidence (e.g., 95%), including the accurate value of the statistical constraint within a targeted population. [12] Most research uses a 95% CI, but investigators can set any level (e.g., 90% CI, 99% CI). [13] A CI provides a range with the lower bound and upper bound limits of a difference or association that would be plausible for a population. [14] Therefore, a CI of 95% indicates that if a study were to be carried out 100 times, the range would contain the true value in 95, [15] confidence intervals provide more evidence regarding the precision of an estimate compared to p-values. [6]

In consideration of the similar research example provided above, one could make the following statement with 95% CI:

Statement: Individuals who were prescribed Drug 23 had no symptoms after three days, which was significantly faster than those prescribed Drug 22; there was a mean difference between the two groups of days to the recovery of 4.2 days (95% CI: 1.9 – 7.8).

It is important to note that the width of the CI is affected by the standard error and the sample size; reducing a study sample number will result in less precision of the CI (increase the width). [14] A larger width indicates a smaller sample size or a larger variability. [16] A researcher would want to increase the precision of the CI. For example, a 95% CI of 1.43 – 1.47 is much more precise than the one provided in the example above. In research and clinical practice, CIs provide valuable information on whether the interval includes or excludes any clinically significant values. [14]

Null values are sometimes used for differences with CI (zero for differential comparisons and 1 for ratios). However, CIs provide more information than that. [15] Consider this example: A hospital implements a new protocol that reduced wait time for patients in the emergency department by an average of 25 minutes (95% CI: -2.5 – 41 minutes). Because the range crosses zero, implementing this protocol in different populations could result in longer wait times; however, the range is much higher on the positive side. Thus, while the p-value used to detect statistical significance for this may result in "not significant" findings, individuals should examine this range, consider the study design, and weigh whether or not it is still worth piloting in their workplace.

Similarly to p-values, 95% CIs cannot control for researchers' errors (e.g., study bias or improper data analysis). [14] In consideration of whether to report p-values or CIs, researchers should examine journal preferences. When in doubt, reporting both may be beneficial. [13] An example is below:

Reporting both: Individuals who were prescribed Drug 23 had no symptoms after three days, which was significantly faster than those prescribed Drug 22, p = 0.009. There was a mean difference between the two groups of days to the recovery of 4.2 days (95% CI: 1.9 – 7.8).

- Clinical Significance

Recall that clinical significance and statistical significance are two different concepts. Healthcare providers should remember that a study with statistically significant differences and large sample size may be of no interest to clinicians, whereas a study with smaller sample size and statistically non-significant results could impact clinical practice. [14] Additionally, as previously mentioned, a non-significant finding may reflect the study design itself rather than relationships between variables.

Healthcare providers using evidence-based medicine to inform practice should use clinical judgment to determine the practical importance of studies through careful evaluation of the design, sample size, power, likelihood of type I and type II errors, data analysis, and reporting of statistical findings (p values, 95% CI or both). [4] Interestingly, some experts have called for "statistically significant" or "not significant" to be excluded from work as statistical significance never has and will never be equivalent to clinical significance. [17]

The decision on what is clinically significant can be challenging, depending on the providers' experience and especially the severity of the disease. Providers should use their knowledge and experiences to determine the meaningfulness of study results and make inferences based not only on significant or insignificant results by researchers but through their understanding of study limitations and practical implications.

- Nursing, Allied Health, and Interprofessional Team Interventions

All physicians, nurses, pharmacists, and other healthcare professionals should strive to understand the concepts in this chapter. These individuals should maintain the ability to review and incorporate new literature for evidence-based and safe care.

- Review Questions

- Access free multiple choice questions on this topic.

- Comment on this article.

Disclosure: Jacob Shreffler declares no relevant financial relationships with ineligible companies.

Disclosure: Martin Huecker declares no relevant financial relationships with ineligible companies.

This book is distributed under the terms of the Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International (CC BY-NC-ND 4.0) ( http://creativecommons.org/licenses/by-nc-nd/4.0/ ), which permits others to distribute the work, provided that the article is not altered or used commercially. You are not required to obtain permission to distribute this article, provided that you credit the author and journal.

- Cite this Page Shreffler J, Huecker MR. Hypothesis Testing, P Values, Confidence Intervals, and Significance. [Updated 2023 Mar 13]. In: StatPearls [Internet]. Treasure Island (FL): StatPearls Publishing; 2024 Jan-.

In this Page

Bulk download.

- Bulk download StatPearls data from FTP

Related information

- PMC PubMed Central citations

- PubMed Links to PubMed

Similar articles in PubMed

- The reporting of p values, confidence intervals and statistical significance in Preventive Veterinary Medicine (1997-2017). [PeerJ. 2021] The reporting of p values, confidence intervals and statistical significance in Preventive Veterinary Medicine (1997-2017). Messam LLM, Weng HY, Rosenberger NWY, Tan ZH, Payet SDM, Santbakshsing M. PeerJ. 2021; 9:e12453. Epub 2021 Nov 24.

- Review Clinical versus statistical significance: interpreting P values and confidence intervals related to measures of association to guide decision making. [J Pharm Pract. 2010] Review Clinical versus statistical significance: interpreting P values and confidence intervals related to measures of association to guide decision making. Ferrill MJ, Brown DA, Kyle JA. J Pharm Pract. 2010 Aug; 23(4):344-51. Epub 2010 Apr 13.

- Interpreting "statistical hypothesis testing" results in clinical research. [J Ayurveda Integr Med. 2012] Interpreting "statistical hypothesis testing" results in clinical research. Sarmukaddam SB. J Ayurveda Integr Med. 2012 Apr; 3(2):65-9.

- Confidence intervals in procedural dermatology: an intuitive approach to interpreting data. [Dermatol Surg. 2005] Confidence intervals in procedural dermatology: an intuitive approach to interpreting data. Alam M, Barzilai DA, Wrone DA. Dermatol Surg. 2005 Apr; 31(4):462-6.

- Review Is statistical significance testing useful in interpreting data? [Reprod Toxicol. 1993] Review Is statistical significance testing useful in interpreting data? Savitz DA. Reprod Toxicol. 1993; 7(2):95-100.

Recent Activity

- Hypothesis Testing, P Values, Confidence Intervals, and Significance - StatPearl... Hypothesis Testing, P Values, Confidence Intervals, and Significance - StatPearls

Your browsing activity is empty.

Activity recording is turned off.

Turn recording back on

Connect with NLM

National Library of Medicine 8600 Rockville Pike Bethesda, MD 20894

Web Policies FOIA HHS Vulnerability Disclosure

Help Accessibility Careers

User Preferences

Content preview.

Arcu felis bibendum ut tristique et egestas quis:

- Ut enim ad minim veniam, quis nostrud exercitation ullamco laboris

- Duis aute irure dolor in reprehenderit in voluptate

- Excepteur sint occaecat cupidatat non proident

Keyboard Shortcuts

S.3.2 hypothesis testing (p-value approach).

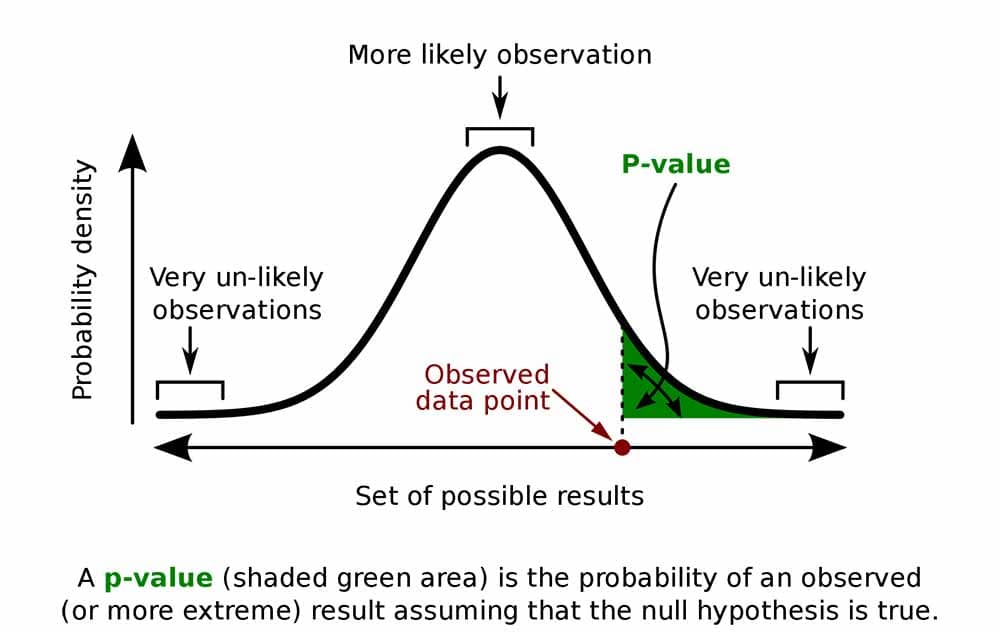

The P -value approach involves determining "likely" or "unlikely" by determining the probability — assuming the null hypothesis was true — of observing a more extreme test statistic in the direction of the alternative hypothesis than the one observed. If the P -value is small, say less than (or equal to) \(\alpha\), then it is "unlikely." And, if the P -value is large, say more than \(\alpha\), then it is "likely."

If the P -value is less than (or equal to) \(\alpha\), then the null hypothesis is rejected in favor of the alternative hypothesis. And, if the P -value is greater than \(\alpha\), then the null hypothesis is not rejected.

Specifically, the four steps involved in using the P -value approach to conducting any hypothesis test are:

- Specify the null and alternative hypotheses.

- Using the sample data and assuming the null hypothesis is true, calculate the value of the test statistic. Again, to conduct the hypothesis test for the population mean μ , we use the t -statistic \(t^*=\frac{\bar{x}-\mu}{s/\sqrt{n}}\) which follows a t -distribution with n - 1 degrees of freedom.

- Using the known distribution of the test statistic, calculate the P -value : "If the null hypothesis is true, what is the probability that we'd observe a more extreme test statistic in the direction of the alternative hypothesis than we did?" (Note how this question is equivalent to the question answered in criminal trials: "If the defendant is innocent, what is the chance that we'd observe such extreme criminal evidence?")

- Set the significance level, \(\alpha\), the probability of making a Type I error to be small — 0.01, 0.05, or 0.10. Compare the P -value to \(\alpha\). If the P -value is less than (or equal to) \(\alpha\), reject the null hypothesis in favor of the alternative hypothesis. If the P -value is greater than \(\alpha\), do not reject the null hypothesis.

Example S.3.2.1

Mean gpa section .

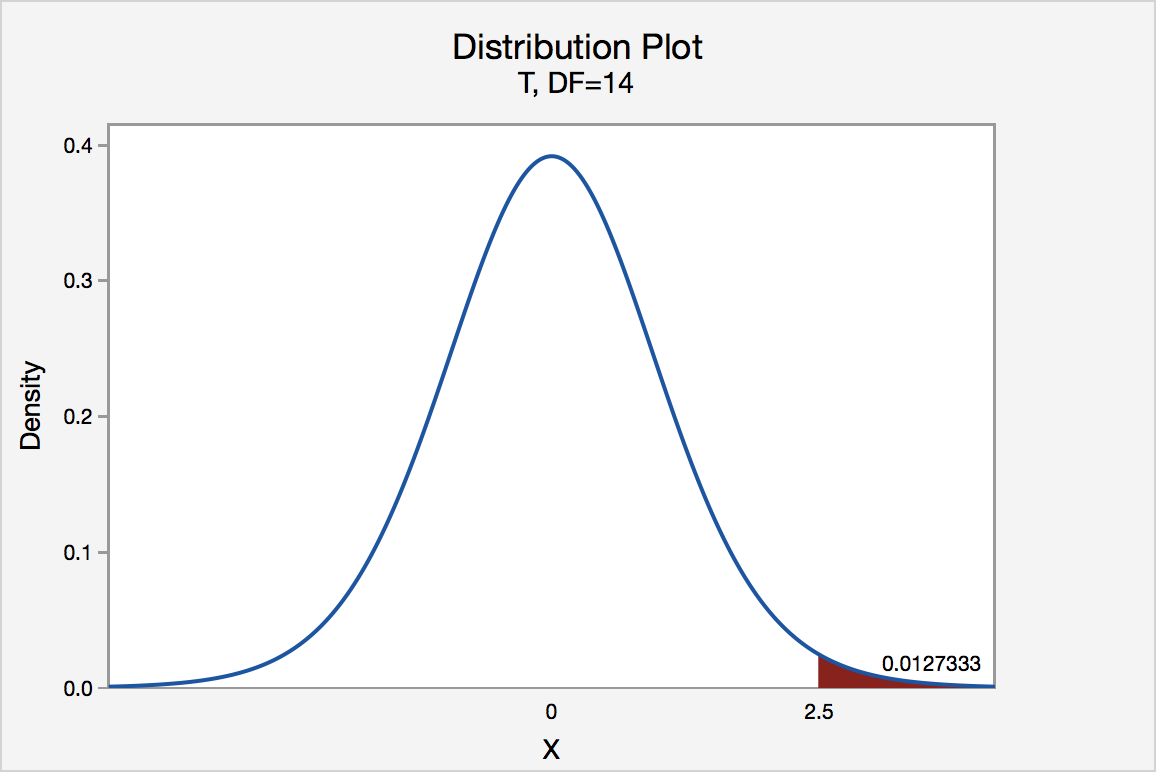

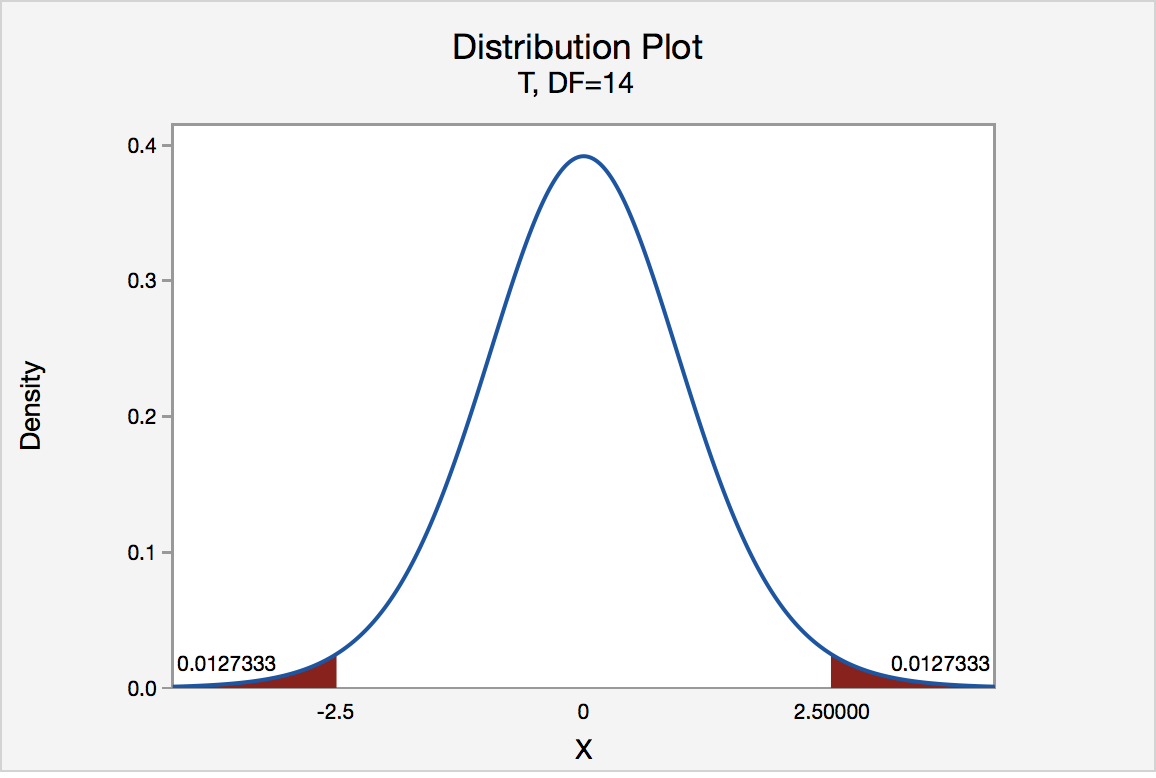

In our example concerning the mean grade point average, suppose that our random sample of n = 15 students majoring in mathematics yields a test statistic t * equaling 2.5. Since n = 15, our test statistic t * has n - 1 = 14 degrees of freedom. Also, suppose we set our significance level α at 0.05 so that we have only a 5% chance of making a Type I error.

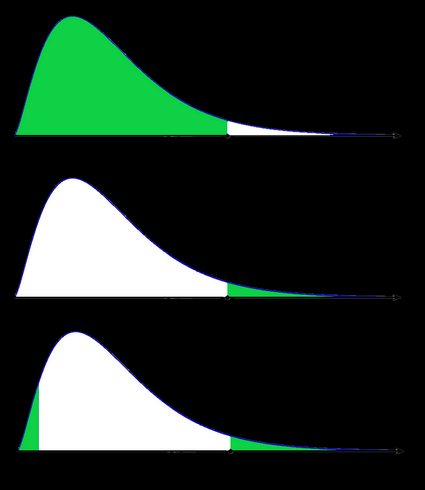

Right Tailed

The P -value for conducting the right-tailed test H 0 : μ = 3 versus H A : μ > 3 is the probability that we would observe a test statistic greater than t * = 2.5 if the population mean \(\mu\) really were 3. Recall that probability equals the area under the probability curve. The P -value is therefore the area under a t n - 1 = t 14 curve and to the right of the test statistic t * = 2.5. It can be shown using statistical software that the P -value is 0.0127. The graph depicts this visually.

The P -value, 0.0127, tells us it is "unlikely" that we would observe such an extreme test statistic t * in the direction of H A if the null hypothesis were true. Therefore, our initial assumption that the null hypothesis is true must be incorrect. That is, since the P -value, 0.0127, is less than \(\alpha\) = 0.05, we reject the null hypothesis H 0 : μ = 3 in favor of the alternative hypothesis H A : μ > 3.

Note that we would not reject H 0 : μ = 3 in favor of H A : μ > 3 if we lowered our willingness to make a Type I error to \(\alpha\) = 0.01 instead, as the P -value, 0.0127, is then greater than \(\alpha\) = 0.01.

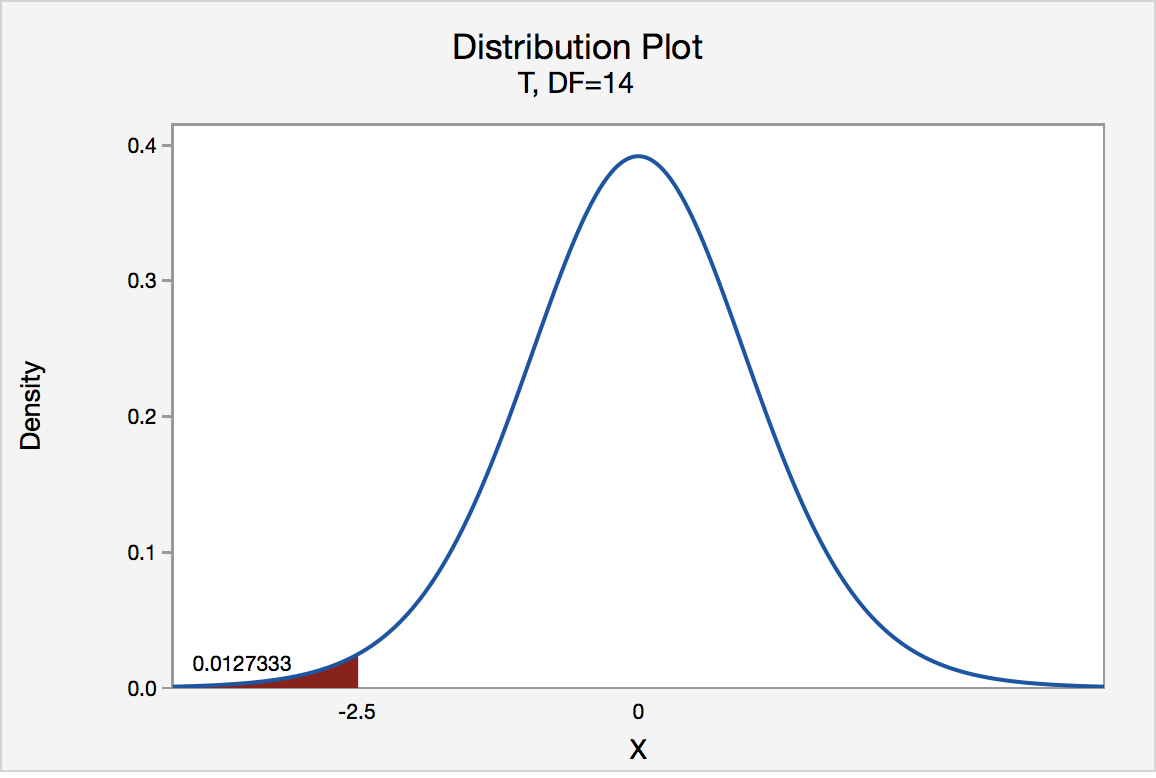

Left Tailed

In our example concerning the mean grade point average, suppose that our random sample of n = 15 students majoring in mathematics yields a test statistic t * instead of equaling -2.5. The P -value for conducting the left-tailed test H 0 : μ = 3 versus H A : μ < 3 is the probability that we would observe a test statistic less than t * = -2.5 if the population mean μ really were 3. The P -value is therefore the area under a t n - 1 = t 14 curve and to the left of the test statistic t* = -2.5. It can be shown using statistical software that the P -value is 0.0127. The graph depicts this visually.

The P -value, 0.0127, tells us it is "unlikely" that we would observe such an extreme test statistic t * in the direction of H A if the null hypothesis were true. Therefore, our initial assumption that the null hypothesis is true must be incorrect. That is, since the P -value, 0.0127, is less than α = 0.05, we reject the null hypothesis H 0 : μ = 3 in favor of the alternative hypothesis H A : μ < 3.

Note that we would not reject H 0 : μ = 3 in favor of H A : μ < 3 if we lowered our willingness to make a Type I error to α = 0.01 instead, as the P -value, 0.0127, is then greater than \(\alpha\) = 0.01.

In our example concerning the mean grade point average, suppose again that our random sample of n = 15 students majoring in mathematics yields a test statistic t * instead of equaling -2.5. The P -value for conducting the two-tailed test H 0 : μ = 3 versus H A : μ ≠ 3 is the probability that we would observe a test statistic less than -2.5 or greater than 2.5 if the population mean μ really was 3. That is, the two-tailed test requires taking into account the possibility that the test statistic could fall into either tail (hence the name "two-tailed" test). The P -value is, therefore, the area under a t n - 1 = t 14 curve to the left of -2.5 and to the right of 2.5. It can be shown using statistical software that the P -value is 0.0127 + 0.0127, or 0.0254. The graph depicts this visually.

Note that the P -value for a two-tailed test is always two times the P -value for either of the one-tailed tests. The P -value, 0.0254, tells us it is "unlikely" that we would observe such an extreme test statistic t * in the direction of H A if the null hypothesis were true. Therefore, our initial assumption that the null hypothesis is true must be incorrect. That is, since the P -value, 0.0254, is less than α = 0.05, we reject the null hypothesis H 0 : μ = 3 in favor of the alternative hypothesis H A : μ ≠ 3.

Note that we would not reject H 0 : μ = 3 in favor of H A : μ ≠ 3 if we lowered our willingness to make a Type I error to α = 0.01 instead, as the P -value, 0.0254, is then greater than \(\alpha\) = 0.01.

Now that we have reviewed the critical value and P -value approach procedures for each of the three possible hypotheses, let's look at three new examples — one of a right-tailed test, one of a left-tailed test, and one of a two-tailed test.

The good news is that, whenever possible, we will take advantage of the test statistics and P -values reported in statistical software, such as Minitab, to conduct our hypothesis tests in this course.

P-Value And Statistical Significance: What It Is & Why It Matters

Saul McLeod, PhD

Editor-in-Chief for Simply Psychology

BSc (Hons) Psychology, MRes, PhD, University of Manchester

Saul McLeod, PhD., is a qualified psychology teacher with over 18 years of experience in further and higher education. He has been published in peer-reviewed journals, including the Journal of Clinical Psychology.

Learn about our Editorial Process

Olivia Guy-Evans, MSc

Associate Editor for Simply Psychology

BSc (Hons) Psychology, MSc Psychology of Education

Olivia Guy-Evans is a writer and associate editor for Simply Psychology. She has previously worked in healthcare and educational sectors.

On This Page:

The p-value in statistics quantifies the evidence against a null hypothesis. A low p-value suggests data is inconsistent with the null, potentially favoring an alternative hypothesis. Common significance thresholds are 0.05 or 0.01.

Hypothesis testing

When you perform a statistical test, a p-value helps you determine the significance of your results in relation to the null hypothesis.

The null hypothesis (H0) states no relationship exists between the two variables being studied (one variable does not affect the other). It states the results are due to chance and are not significant in supporting the idea being investigated. Thus, the null hypothesis assumes that whatever you try to prove did not happen.

The alternative hypothesis (Ha or H1) is the one you would believe if the null hypothesis is concluded to be untrue.

The alternative hypothesis states that the independent variable affected the dependent variable, and the results are significant in supporting the theory being investigated (i.e., the results are not due to random chance).

What a p-value tells you

A p-value, or probability value, is a number describing how likely it is that your data would have occurred by random chance (i.e., that the null hypothesis is true).

The level of statistical significance is often expressed as a p-value between 0 and 1.

The smaller the p -value, the less likely the results occurred by random chance, and the stronger the evidence that you should reject the null hypothesis.

Remember, a p-value doesn’t tell you if the null hypothesis is true or false. It just tells you how likely you’d see the data you observed (or more extreme data) if the null hypothesis was true. It’s a piece of evidence, not a definitive proof.

Example: Test Statistic and p-Value

Suppose you’re conducting a study to determine whether a new drug has an effect on pain relief compared to a placebo. If the new drug has no impact, your test statistic will be close to the one predicted by the null hypothesis (no difference between the drug and placebo groups), and the resulting p-value will be close to 1. It may not be precisely 1 because real-world variations may exist. Conversely, if the new drug indeed reduces pain significantly, your test statistic will diverge further from what’s expected under the null hypothesis, and the p-value will decrease. The p-value will never reach zero because there’s always a slim possibility, though highly improbable, that the observed results occurred by random chance.

P-value interpretation

The significance level (alpha) is a set probability threshold (often 0.05), while the p-value is the probability you calculate based on your study or analysis.

A p-value less than or equal to your significance level (typically ≤ 0.05) is statistically significant.

A p-value less than or equal to a predetermined significance level (often 0.05 or 0.01) indicates a statistically significant result, meaning the observed data provide strong evidence against the null hypothesis.

This suggests the effect under study likely represents a real relationship rather than just random chance.

For instance, if you set α = 0.05, you would reject the null hypothesis if your p -value ≤ 0.05.

It indicates strong evidence against the null hypothesis, as there is less than a 5% probability the null is correct (and the results are random).

Therefore, we reject the null hypothesis and accept the alternative hypothesis.

Example: Statistical Significance

Upon analyzing the pain relief effects of the new drug compared to the placebo, the computed p-value is less than 0.01, which falls well below the predetermined alpha value of 0.05. Consequently, you conclude that there is a statistically significant difference in pain relief between the new drug and the placebo.

What does a p-value of 0.001 mean?

A p-value of 0.001 is highly statistically significant beyond the commonly used 0.05 threshold. It indicates strong evidence of a real effect or difference, rather than just random variation.

Specifically, a p-value of 0.001 means there is only a 0.1% chance of obtaining a result at least as extreme as the one observed, assuming the null hypothesis is correct.

Such a small p-value provides strong evidence against the null hypothesis, leading to rejecting the null in favor of the alternative hypothesis.

A p-value more than the significance level (typically p > 0.05) is not statistically significant and indicates strong evidence for the null hypothesis.

This means we retain the null hypothesis and reject the alternative hypothesis. You should note that you cannot accept the null hypothesis; we can only reject it or fail to reject it.

Note : when the p-value is above your threshold of significance, it does not mean that there is a 95% probability that the alternative hypothesis is true.

One-Tailed Test

Two-Tailed Test

How do you calculate the p-value ?

Most statistical software packages like R, SPSS, and others automatically calculate your p-value. This is the easiest and most common way.

Online resources and tables are available to estimate the p-value based on your test statistic and degrees of freedom.

These tables help you understand how often you would expect to see your test statistic under the null hypothesis.

Understanding the Statistical Test:

Different statistical tests are designed to answer specific research questions or hypotheses. Each test has its own underlying assumptions and characteristics.

For example, you might use a t-test to compare means, a chi-squared test for categorical data, or a correlation test to measure the strength of a relationship between variables.

Be aware that the number of independent variables you include in your analysis can influence the magnitude of the test statistic needed to produce the same p-value.

This factor is particularly important to consider when comparing results across different analyses.

Example: Choosing a Statistical Test

If you’re comparing the effectiveness of just two different drugs in pain relief, a two-sample t-test is a suitable choice for comparing these two groups. However, when you’re examining the impact of three or more drugs, it’s more appropriate to employ an Analysis of Variance ( ANOVA) . Utilizing multiple pairwise comparisons in such cases can lead to artificially low p-values and an overestimation of the significance of differences between the drug groups.

How to report

A statistically significant result cannot prove that a research hypothesis is correct (which implies 100% certainty).

Instead, we may state our results “provide support for” or “give evidence for” our research hypothesis (as there is still a slight probability that the results occurred by chance and the null hypothesis was correct – e.g., less than 5%).

Example: Reporting the results

In our comparison of the pain relief effects of the new drug and the placebo, we observed that participants in the drug group experienced a significant reduction in pain ( M = 3.5; SD = 0.8) compared to those in the placebo group ( M = 5.2; SD = 0.7), resulting in an average difference of 1.7 points on the pain scale (t(98) = -9.36; p < 0.001).

The 6th edition of the APA style manual (American Psychological Association, 2010) states the following on the topic of reporting p-values:

“When reporting p values, report exact p values (e.g., p = .031) to two or three decimal places. However, report p values less than .001 as p < .001.

The tradition of reporting p values in the form p < .10, p < .05, p < .01, and so forth, was appropriate in a time when only limited tables of critical values were available.” (p. 114)

- Do not use 0 before the decimal point for the statistical value p as it cannot equal 1. In other words, write p = .001 instead of p = 0.001.

- Please pay attention to issues of italics ( p is always italicized) and spacing (either side of the = sign).

- p = .000 (as outputted by some statistical packages such as SPSS) is impossible and should be written as p < .001.

- The opposite of significant is “nonsignificant,” not “insignificant.”

Why is the p -value not enough?

A lower p-value is sometimes interpreted as meaning there is a stronger relationship between two variables.

However, statistical significance means that it is unlikely that the null hypothesis is true (less than 5%).

To understand the strength of the difference between the two groups (control vs. experimental) a researcher needs to calculate the effect size .

When do you reject the null hypothesis?

In statistical hypothesis testing, you reject the null hypothesis when the p-value is less than or equal to the significance level (α) you set before conducting your test. The significance level is the probability of rejecting the null hypothesis when it is true. Commonly used significance levels are 0.01, 0.05, and 0.10.

Remember, rejecting the null hypothesis doesn’t prove the alternative hypothesis; it just suggests that the alternative hypothesis may be plausible given the observed data.

The p -value is conditional upon the null hypothesis being true but is unrelated to the truth or falsity of the alternative hypothesis.

What does p-value of 0.05 mean?

If your p-value is less than or equal to 0.05 (the significance level), you would conclude that your result is statistically significant. This means the evidence is strong enough to reject the null hypothesis in favor of the alternative hypothesis.

Are all p-values below 0.05 considered statistically significant?

No, not all p-values below 0.05 are considered statistically significant. The threshold of 0.05 is commonly used, but it’s just a convention. Statistical significance depends on factors like the study design, sample size, and the magnitude of the observed effect.

A p-value below 0.05 means there is evidence against the null hypothesis, suggesting a real effect. However, it’s essential to consider the context and other factors when interpreting results.

Researchers also look at effect size and confidence intervals to determine the practical significance and reliability of findings.

How does sample size affect the interpretation of p-values?

Sample size can impact the interpretation of p-values. A larger sample size provides more reliable and precise estimates of the population, leading to narrower confidence intervals.

With a larger sample, even small differences between groups or effects can become statistically significant, yielding lower p-values. In contrast, smaller sample sizes may not have enough statistical power to detect smaller effects, resulting in higher p-values.

Therefore, a larger sample size increases the chances of finding statistically significant results when there is a genuine effect, making the findings more trustworthy and robust.

Can a non-significant p-value indicate that there is no effect or difference in the data?

No, a non-significant p-value does not necessarily indicate that there is no effect or difference in the data. It means that the observed data do not provide strong enough evidence to reject the null hypothesis.

There could still be a real effect or difference, but it might be smaller or more variable than the study was able to detect.

Other factors like sample size, study design, and measurement precision can influence the p-value. It’s important to consider the entire body of evidence and not rely solely on p-values when interpreting research findings.

Can P values be exactly zero?

While a p-value can be extremely small, it cannot technically be absolute zero. When a p-value is reported as p = 0.000, the actual p-value is too small for the software to display. This is often interpreted as strong evidence against the null hypothesis. For p values less than 0.001, report as p < .001

Further Information

- P Value Calculator From T Score

- P-Value Calculator For Chi-Square

- P-values and significance tests (Kahn Academy)

- Hypothesis testing and p-values (Kahn Academy)

- Wasserstein, R. L., Schirm, A. L., & Lazar, N. A. (2019). Moving to a world beyond “ p “< 0.05”.

- Criticism of using the “ p “< 0.05”.

- Publication manual of the American Psychological Association

- Statistics for Psychology Book Download

Bland, J. M., & Altman, D. G. (1994). One and two sided tests of significance: Authors’ reply. BMJ: British Medical Journal , 309 (6958), 874.

Goodman, S. N., & Royall, R. (1988). Evidence and scientific research. American Journal of Public Health , 78 (12), 1568-1574.

Goodman, S. (2008, July). A dirty dozen: twelve p-value misconceptions . In Seminars in hematology (Vol. 45, No. 3, pp. 135-140). WB Saunders.

Lang, J. M., Rothman, K. J., & Cann, C. I. (1998). That confounded P-value. Epidemiology (Cambridge, Mass.) , 9 (1), 7-8.

p-value Calculator

What is p-value, how do i calculate p-value from test statistic, how to interpret p-value, how to use the p-value calculator to find p-value from test statistic, how do i find p-value from z-score, how do i find p-value from t, p-value from chi-square score (χ² score), p-value from f-score.

Welcome to our p-value calculator! You will never again have to wonder how to find the p-value, as here you can determine the one-sided and two-sided p-values from test statistics, following all the most popular distributions: normal, t-Student, chi-squared, and Snedecor's F.

P-values appear all over science, yet many people find the concept a bit intimidating. Don't worry – in this article, we will explain not only what the p-value is but also how to interpret p-values correctly . Have you ever been curious about how to calculate the p-value by hand? We provide you with all the necessary formulae as well!

🙋 If you want to revise some basics from statistics, our normal distribution calculator is an excellent place to start.

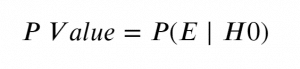

Formally, the p-value is the probability that the test statistic will produce values at least as extreme as the value it produced for your sample . It is crucial to remember that this probability is calculated under the assumption that the null hypothesis H 0 is true !

More intuitively, p-value answers the question:

Assuming that I live in a world where the null hypothesis holds, how probable is it that, for another sample, the test I'm performing will generate a value at least as extreme as the one I observed for the sample I already have?

It is the alternative hypothesis that determines what "extreme" actually means , so the p-value depends on the alternative hypothesis that you state: left-tailed, right-tailed, or two-tailed. In the formulas below, S stands for a test statistic, x for the value it produced for a given sample, and Pr(event | H 0 ) is the probability of an event, calculated under the assumption that H 0 is true:

Left-tailed test: p-value = Pr(S ≤ x | H 0 )

Right-tailed test: p-value = Pr(S ≥ x | H 0 )

Two-tailed test:

p-value = 2 × min{Pr(S ≤ x | H 0 ), Pr(S ≥ x | H 0 )}

(By min{a,b} , we denote the smaller number out of a and b .)

If the distribution of the test statistic under H 0 is symmetric about 0 , then: p-value = 2 × Pr(S ≥ |x| | H 0 )

or, equivalently: p-value = 2 × Pr(S ≤ -|x| | H 0 )

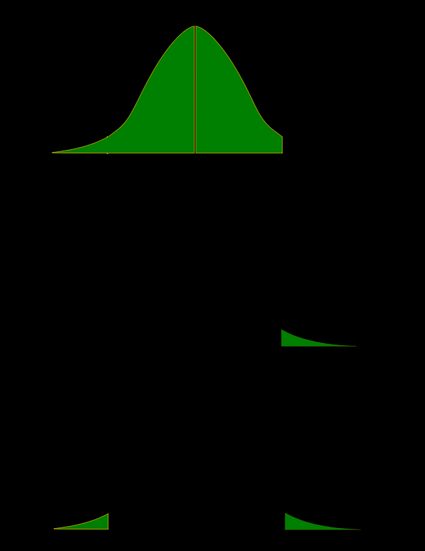

As a picture is worth a thousand words, let us illustrate these definitions. Here, we use the fact that the probability can be neatly depicted as the area under the density curve for a given distribution. We give two sets of pictures: one for a symmetric distribution and the other for a skewed (non-symmetric) distribution.

- Symmetric case: normal distribution:

- Non-symmetric case: chi-squared distribution:

In the last picture (two-tailed p-value for skewed distribution), the area of the left-hand side is equal to the area of the right-hand side.

To determine the p-value, you need to know the distribution of your test statistic under the assumption that the null hypothesis is true . Then, with the help of the cumulative distribution function ( cdf ) of this distribution, we can express the probability of the test statistics being at least as extreme as its value x for the sample:

Left-tailed test:

p-value = cdf(x) .

Right-tailed test:

p-value = 1 - cdf(x) .

p-value = 2 × min{cdf(x) , 1 - cdf(x)} .

If the distribution of the test statistic under H 0 is symmetric about 0 , then a two-sided p-value can be simplified to p-value = 2 × cdf(-|x|) , or, equivalently, as p-value = 2 - 2 × cdf(|x|) .

The probability distributions that are most widespread in hypothesis testing tend to have complicated cdf formulae, and finding the p-value by hand may not be possible. You'll likely need to resort to a computer or to a statistical table, where people have gathered approximate cdf values.

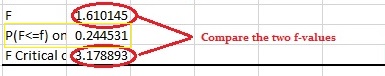

Well, you now know how to calculate the p-value, but… why do you need to calculate this number in the first place? In hypothesis testing, the p-value approach is an alternative to the critical value approach . Recall that the latter requires researchers to pre-set the significance level, α, which is the probability of rejecting the null hypothesis when it is true (so of type I error ). Once you have your p-value, you just need to compare it with any given α to quickly decide whether or not to reject the null hypothesis at that significance level, α. For details, check the next section, where we explain how to interpret p-values.

As we have mentioned above, the p-value is the answer to the following question:

What does that mean for you? Well, you've got two options:

- A high p-value means that your data is highly compatible with the null hypothesis; and

- A small p-value provides evidence against the null hypothesis , as it means that your result would be very improbable if the null hypothesis were true.

However, it may happen that the null hypothesis is true, but your sample is highly unusual! For example, imagine we studied the effect of a new drug and got a p-value of 0.03 . This means that in 3% of similar studies, random chance alone would still be able to produce the value of the test statistic that we obtained, or a value even more extreme, even if the drug had no effect at all!

The question "what is p-value" can also be answered as follows: p-value is the smallest level of significance at which the null hypothesis would be rejected. So, if you now want to make a decision on the null hypothesis at some significance level α , just compare your p-value with α :

- If p-value ≤ α , then you reject the null hypothesis and accept the alternative hypothesis; and

- If p-value ≥ α , then you don't have enough evidence to reject the null hypothesis.

Obviously, the fate of the null hypothesis depends on α . For instance, if the p-value was 0.03 , we would reject the null hypothesis at a significance level of 0.05 , but not at a level of 0.01 . That's why the significance level should be stated in advance and not adapted conveniently after the p-value has been established! A significance level of 0.05 is the most common value, but there's nothing magical about it. Here, you can see what too strong a faith in the 0.05 threshold can lead to. It's always best to report the p-value, and allow the reader to make their own conclusions.

Also, bear in mind that subject area expertise (and common reason) is crucial. Otherwise, mindlessly applying statistical principles, you can easily arrive at statistically significant, despite the conclusion being 100% untrue.

As our p-value calculator is here at your service, you no longer need to wonder how to find p-value from all those complicated test statistics! Here are the steps you need to follow:

Pick the alternative hypothesis : two-tailed, right-tailed, or left-tailed.

Tell us the distribution of your test statistic under the null hypothesis: is it N(0,1), t-Student, chi-squared, or Snedecor's F? If you are unsure, check the sections below, as they are devoted to these distributions.

If needed, specify the degrees of freedom of the test statistic's distribution.

Enter the value of test statistic computed for your data sample.

Our calculator determines the p-value from the test statistic and provides the decision to be made about the null hypothesis. The standard significance level is 0.05 by default.

Go to the advanced mode if you need to increase the precision with which the calculations are performed or change the significance level .

In terms of the cumulative distribution function (cdf) of the standard normal distribution, which is traditionally denoted by Φ , the p-value is given by the following formulae:

Left-tailed z-test:

p-value = Φ(Z score )

Right-tailed z-test:

p-value = 1 - Φ(Z score )

Two-tailed z-test:

p-value = 2 × Φ(−|Z score |)

p-value = 2 - 2 × Φ(|Z score |)

🙋 To learn more about Z-tests, head to Omni's Z-test calculator .

We use the Z-score if the test statistic approximately follows the standard normal distribution N(0,1) . Thanks to the central limit theorem, you can count on the approximation if you have a large sample (say at least 50 data points) and treat your distribution as normal.

A Z-test most often refers to testing the population mean , or the difference between two population means, in particular between two proportions. You can also find Z-tests in maximum likelihood estimations.

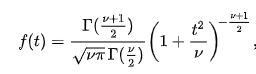

The p-value from the t-score is given by the following formulae, in which cdf t,d stands for the cumulative distribution function of the t-Student distribution with d degrees of freedom:

Left-tailed t-test:

p-value = cdf t,d (t score )

Right-tailed t-test:

p-value = 1 - cdf t,d (t score )

Two-tailed t-test:

p-value = 2 × cdf t,d (−|t score |)

p-value = 2 - 2 × cdf t,d (|t score |)

Use the t-score option if your test statistic follows the t-Student distribution . This distribution has a shape similar to N(0,1) (bell-shaped and symmetric) but has heavier tails – the exact shape depends on the parameter called the degrees of freedom . If the number of degrees of freedom is large (>30), which generically happens for large samples, the t-Student distribution is practically indistinguishable from the normal distribution N(0,1).

The most common t-tests are those for population means with an unknown population standard deviation, or for the difference between means of two populations , with either equal or unequal yet unknown population standard deviations. There's also a t-test for paired (dependent) samples .

🙋 To get more insights into t-statistics, we recommend using our t-test calculator .

Use the χ²-score option when performing a test in which the test statistic follows the χ²-distribution .

This distribution arises if, for example, you take the sum of squared variables, each following the normal distribution N(0,1). Remember to check the number of degrees of freedom of the χ²-distribution of your test statistic!

How to find the p-value from chi-square-score ? You can do it with the help of the following formulae, in which cdf χ²,d denotes the cumulative distribution function of the χ²-distribution with d degrees of freedom:

Left-tailed χ²-test:

p-value = cdf χ²,d (χ² score )

Right-tailed χ²-test:

p-value = 1 - cdf χ²,d (χ² score )

Remember that χ²-tests for goodness-of-fit and independence are right-tailed tests! (see below)

Two-tailed χ²-test:

p-value = 2 × min{cdf χ²,d (χ² score ), 1 - cdf χ²,d (χ² score )}

(By min{a,b} , we denote the smaller of the numbers a and b .)

The most popular tests which lead to a χ²-score are the following:

Testing whether the variance of normally distributed data has some pre-determined value. In this case, the test statistic has the χ²-distribution with n - 1 degrees of freedom, where n is the sample size. This can be a one-tailed or two-tailed test .

Goodness-of-fit test checks whether the empirical (sample) distribution agrees with some expected probability distribution. In this case, the test statistic follows the χ²-distribution with k - 1 degrees of freedom, where k is the number of classes into which the sample is divided. This is a right-tailed test .

Independence test is used to determine if there is a statistically significant relationship between two variables. In this case, its test statistic is based on the contingency table and follows the χ²-distribution with (r - 1)(c - 1) degrees of freedom, where r is the number of rows, and c is the number of columns in this contingency table. This also is a right-tailed test .

Finally, the F-score option should be used when you perform a test in which the test statistic follows the F-distribution , also known as the Fisher–Snedecor distribution. The exact shape of an F-distribution depends on two degrees of freedom .

To see where those degrees of freedom come from, consider the independent random variables X and Y , which both follow the χ²-distributions with d 1 and d 2 degrees of freedom, respectively. In that case, the ratio (X/d 1 )/(Y/d 2 ) follows the F-distribution, with (d 1 , d 2 ) -degrees of freedom. For this reason, the two parameters d 1 and d 2 are also called the numerator and denominator degrees of freedom .

The p-value from F-score is given by the following formulae, where we let cdf F,d1,d2 denote the cumulative distribution function of the F-distribution, with (d 1 , d 2 ) -degrees of freedom:

Left-tailed F-test:

p-value = cdf F,d1,d2 (F score )

Right-tailed F-test:

p-value = 1 - cdf F,d1,d2 (F score )

Two-tailed F-test:

p-value = 2 × min{cdf F,d1,d2 (F score ), 1 - cdf F,d1,d2 (F score )}

Below we list the most important tests that produce F-scores. All of them are right-tailed tests .

A test for the equality of variances in two normally distributed populations . Its test statistic follows the F-distribution with (n - 1, m - 1) -degrees of freedom, where n and m are the respective sample sizes.

ANOVA is used to test the equality of means in three or more groups that come from normally distributed populations with equal variances. We arrive at the F-distribution with (k - 1, n - k) -degrees of freedom, where k is the number of groups, and n is the total sample size (in all groups together).

A test for overall significance of regression analysis . The test statistic has an F-distribution with (k - 1, n - k) -degrees of freedom, where n is the sample size, and k is the number of variables (including the intercept).

With the presence of the linear relationship having been established in your data sample with the above test, you can calculate the coefficient of determination, R 2 , which indicates the strength of this relationship . You can do it by hand or use our coefficient of determination calculator .

A test to compare two nested regression models . The test statistic follows the F-distribution with (k 2 - k 1 , n - k 2 ) -degrees of freedom, where k 1 and k 2 are the numbers of variables in the smaller and bigger models, respectively, and n is the sample size.

You may notice that the F-test of an overall significance is a particular form of the F-test for comparing two nested models: it tests whether our model does significantly better than the model with no predictors (i.e., the intercept-only model).

Can p-value be negative?

No, the p-value cannot be negative. This is because probabilities cannot be negative, and the p-value is the probability of the test statistic satisfying certain conditions.

What does a high p-value mean?

A high p-value means that under the null hypothesis, there's a high probability that for another sample, the test statistic will generate a value at least as extreme as the one observed in the sample you already have. A high p-value doesn't allow you to reject the null hypothesis.

What does a low p-value mean?

A low p-value means that under the null hypothesis, there's little probability that for another sample, the test statistic will generate a value at least as extreme as the one observed for the sample you already have. A low p-value is evidence in favor of the alternative hypothesis – it allows you to reject the null hypothesis.

Index of qualitative variation

Social media time alternatives.

- Biology (103)

- Chemistry (101)

- Construction (148)

- Conversion (304)

- Ecology (32)

- Everyday life (263)

- Finance (596)

- Health (443)

- Physics (513)

- Sports (108)

- Statistics (184)

- Other (186)

- Discover Omni (40)

- Skip to secondary menu

- Skip to main content

- Skip to primary sidebar

Statistics By Jim

Making statistics intuitive

How Hypothesis Tests Work: Significance Levels (Alpha) and P values

By Jim Frost 45 Comments

Hypothesis testing is a vital process in inferential statistics where the goal is to use sample data to draw conclusions about an entire population . In the testing process, you use significance levels and p-values to determine whether the test results are statistically significant.

You hear about results being statistically significant all of the time. But, what do significance levels, P values, and statistical significance actually represent? Why do we even need to use hypothesis tests in statistics?

In this post, I answer all of these questions. I use graphs and concepts to explain how hypothesis tests function in order to provide a more intuitive explanation. This helps you move on to understanding your statistical results.

Hypothesis Test Example Scenario

To start, I’ll demonstrate why we need to use hypothesis tests using an example.

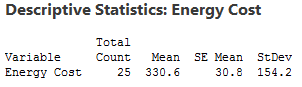

A researcher is studying fuel expenditures for families and wants to determine if the monthly cost has changed since last year when the average was $260 per month. The researcher draws a random sample of 25 families and enters their monthly costs for this year into statistical software. You can download the CSV data file: FuelsCosts . Below are the descriptive statistics for this year.

We’ll build on this example to answer the research question and show how hypothesis tests work.

Descriptive Statistics Alone Won’t Answer the Question

The researcher collected a random sample and found that this year’s sample mean (330.6) is greater than last year’s mean (260). Why perform a hypothesis test at all? We can see that this year’s mean is higher by $70! Isn’t that different?

Regrettably, the situation isn’t as clear as you might think because we’re analyzing a sample instead of the full population. There are huge benefits when working with samples because it is usually impossible to collect data from an entire population. However, the tradeoff for working with a manageable sample is that we need to account for sample error.

The sampling error is the gap between the sample statistic and the population parameter. For our example, the sample statistic is the sample mean, which is 330.6. The population parameter is μ, or mu, which is the average of the entire population. Unfortunately, the value of the population parameter is not only unknown but usually unknowable. Learn more about Sampling Error .

We obtained a sample mean of 330.6. However, it’s conceivable that, due to sampling error, the mean of the population might be only 260. If the researcher drew another random sample, the next sample mean might be closer to 260. It’s impossible to assess this possibility by looking at only the sample mean. Hypothesis testing is a form of inferential statistics that allows us to draw conclusions about an entire population based on a representative sample. We need to use a hypothesis test to determine the likelihood of obtaining our sample mean if the population mean is 260.

Background information : The Difference between Descriptive and Inferential Statistics and Populations, Parameters, and Samples in Inferential Statistics

A Sampling Distribution Determines Whether Our Sample Mean is Unlikely

It is very unlikely for any sample mean to equal the population mean because of sample error. In our case, the sample mean of 330.6 is almost definitely not equal to the population mean for fuel expenditures.

If we could obtain a substantial number of random samples and calculate the sample mean for each sample, we’d observe a broad spectrum of sample means. We’d even be able to graph the distribution of sample means from this process.

This type of distribution is called a sampling distribution. You obtain a sampling distribution by drawing many random samples of the same size from the same population. Why the heck would we do this?

Because sampling distributions allow you to determine the likelihood of obtaining your sample statistic and they’re crucial for performing hypothesis tests.

Luckily, we don’t need to go to the trouble of collecting numerous random samples! We can estimate the sampling distribution using the t-distribution, our sample size, and the variability in our sample.

We want to find out if the average fuel expenditure this year (330.6) is different from last year (260). To answer this question, we’ll graph the sampling distribution based on the assumption that the mean fuel cost for the entire population has not changed and is still 260. In statistics, we call this lack of effect, or no change, the null hypothesis . We use the null hypothesis value as the basis of comparison for our observed sample value.

Sampling distributions and t-distributions are types of probability distributions.

Related posts : Sampling Distributions and Understanding Probability Distributions

Graphing our Sample Mean in the Context of the Sampling Distribution

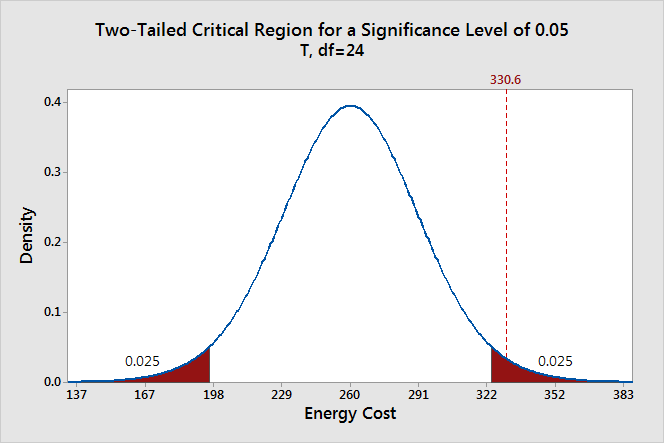

The graph below shows which sample means are more likely and less likely if the population mean is 260. We can place our sample mean in this distribution. This larger context helps us see how unlikely our sample mean is if the null hypothesis is true (μ = 260).

The graph displays the estimated distribution of sample means. The most likely values are near 260 because the plot assumes that this is the true population mean. However, given random sampling error, it would not be surprising to observe sample means ranging from 167 to 352. If the population mean is still 260, our observed sample mean (330.6) isn’t the most likely value, but it’s not completely implausible either.

The Role of Hypothesis Tests

The sampling distribution shows us that we are relatively unlikely to obtain a sample of 330.6 if the population mean is 260. Is our sample mean so unlikely that we can reject the notion that the population mean is 260?

In statistics, we call this rejecting the null hypothesis. If we reject the null for our example, the difference between the sample mean (330.6) and 260 is statistically significant. In other words, the sample data favor the hypothesis that the population average does not equal 260.

However, look at the sampling distribution chart again. Notice that there is no special location on the curve where you can definitively draw this conclusion. There is only a consistent decrease in the likelihood of observing sample means that are farther from the null hypothesis value. Where do we decide a sample mean is far away enough?

To answer this question, we’ll need more tools—hypothesis tests! The hypothesis testing procedure quantifies the unusualness of our sample with a probability and then compares it to an evidentiary standard. This process allows you to make an objective decision about the strength of the evidence.

We’re going to add the tools we need to make this decision to the graph—significance levels and p-values!

These tools allow us to test these two hypotheses:

- Null hypothesis: The population mean equals the null hypothesis mean (260).

- Alternative hypothesis: The population mean does not equal the null hypothesis mean (260).

Related post : Hypothesis Testing Overview

What are Significance Levels (Alpha)?

A significance level, also known as alpha or α, is an evidentiary standard that a researcher sets before the study. It defines how strongly the sample evidence must contradict the null hypothesis before you can reject the null hypothesis for the entire population. The strength of the evidence is defined by the probability of rejecting a null hypothesis that is true. In other words, it is the probability that you say there is an effect when there is no effect.

For instance, a significance level of 0.05 signifies a 5% risk of deciding that an effect exists when it does not exist.

Lower significance levels require stronger sample evidence to be able to reject the null hypothesis. For example, to be statistically significant at the 0.01 significance level requires more substantial evidence than the 0.05 significance level. However, there is a tradeoff in hypothesis tests. Lower significance levels also reduce the power of a hypothesis test to detect a difference that does exist.

The technical nature of these types of questions can make your head spin. A picture can bring these ideas to life!

To learn a more conceptual approach to significance levels, see my post about Understanding Significance Levels .

Graphing Significance Levels as Critical Regions

On the probability distribution plot, the significance level defines how far the sample value must be from the null value before we can reject the null. The percentage of the area under the curve that is shaded equals the probability that the sample value will fall in those regions if the null hypothesis is correct.

To represent a significance level of 0.05, I’ll shade 5% of the distribution furthest from the null value.

The two shaded regions in the graph are equidistant from the central value of the null hypothesis. Each region has a probability of 0.025, which sums to our desired total of 0.05. These shaded areas are called the critical region for a two-tailed hypothesis test.

The critical region defines sample values that are improbable enough to warrant rejecting the null hypothesis. If the null hypothesis is correct and the population mean is 260, random samples (n=25) from this population have means that fall in the critical region 5% of the time.

Our sample mean is statistically significant at the 0.05 level because it falls in the critical region.

Related posts : One-Tailed and Two-Tailed Tests Explained , What Are Critical Values? , and T-distribution Table of Critical Values

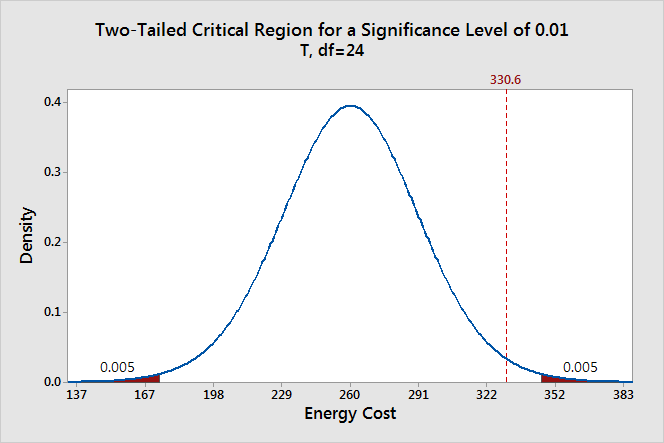

Comparing Significance Levels

Let’s redo this hypothesis test using the other common significance level of 0.01 to see how it compares.

This time the sum of the two shaded regions equals our new significance level of 0.01. The mean of our sample does not fall within with the critical region. Consequently, we fail to reject the null hypothesis. We have the same exact sample data, the same difference between the sample mean and the null hypothesis value, but a different test result.

What happened? By specifying a lower significance level, we set a higher bar for the sample evidence. As the graph shows, lower significance levels move the critical regions further away from the null value. Consequently, lower significance levels require more extreme sample means to be statistically significant.

You must set the significance level before conducting a study. You don’t want the temptation of choosing a level after the study that yields significant results. The only reason I compared the two significance levels was to illustrate the effects and explain the differing results.

The graphical version of the 1-sample t-test we created allows us to determine statistical significance without assessing the P value. Typically, you need to compare the P value to the significance level to make this determination.

Related post : Step-by-Step Instructions for How to Do t-Tests in Excel

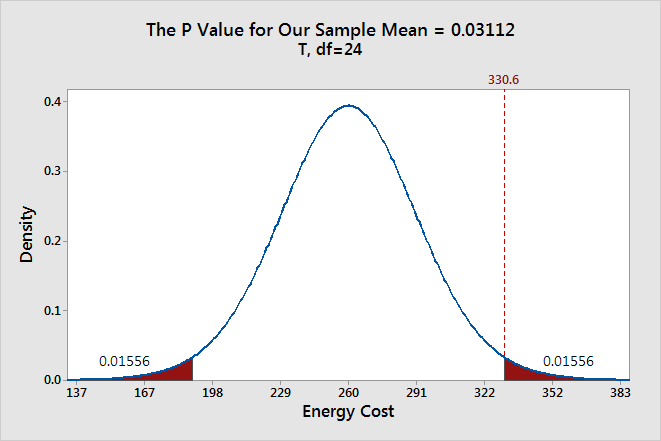

What Are P values?

P values are the probability that a sample will have an effect at least as extreme as the effect observed in your sample if the null hypothesis is correct.

This tortuous, technical definition for P values can make your head spin. Let’s graph it!

First, we need to calculate the effect that is present in our sample. The effect is the distance between the sample value and null value: 330.6 – 260 = 70.6. Next, I’ll shade the regions on both sides of the distribution that are at least as far away as 70.6 from the null (260 +/- 70.6). This process graphs the probability of observing a sample mean at least as extreme as our sample mean.

The total probability of the two shaded regions is 0.03112. If the null hypothesis value (260) is true and you drew many random samples, you’d expect sample means to fall in the shaded regions about 3.1% of the time. In other words, you will observe sample effects at least as large as 70.6 about 3.1% of the time if the null is true. That’s the P value!

Learn more about How to Find the P Value .

Using P values and Significance Levels Together

If your P value is less than or equal to your alpha level, reject the null hypothesis.

The P value results are consistent with our graphical representation. The P value of 0.03112 is significant at the alpha level of 0.05 but not 0.01. Again, in practice, you pick one significance level before the experiment and stick with it!

Using the significance level of 0.05, the sample effect is statistically significant. Our data support the alternative hypothesis, which states that the population mean doesn’t equal 260. We can conclude that mean fuel expenditures have increased since last year.

P values are very frequently misinterpreted as the probability of rejecting a null hypothesis that is actually true. This interpretation is wrong! To understand why, please read my post: How to Interpret P-values Correctly .

Discussion about Statistically Significant Results

Hypothesis tests determine whether your sample data provide sufficient evidence to reject the null hypothesis for the entire population. To perform this test, the procedure compares your sample statistic to the null value and determines whether it is sufficiently rare. “Sufficiently rare” is defined in a hypothesis test by:

- Assuming that the null hypothesis is true—the graphs center on the null value.

- The significance (alpha) level—how far out from the null value is the critical region?

- The sample statistic—is it within the critical region?

There is no special significance level that correctly determines which studies have real population effects 100% of the time. The traditional significance levels of 0.05 and 0.01 are attempts to manage the tradeoff between having a low probability of rejecting a true null hypothesis and having adequate power to detect an effect if one actually exists.

The significance level is the rate at which you incorrectly reject null hypotheses that are actually true ( type I error ). For example, for all studies that use a significance level of 0.05 and the null hypothesis is correct, you can expect 5% of them to have sample statistics that fall in the critical region. When this error occurs, you aren’t aware that the null hypothesis is correct, but you’ll reject it because the p-value is less than 0.05.

This error does not indicate that the researcher made a mistake. As the graphs show, you can observe extreme sample statistics due to sample error alone. It’s the luck of the draw!

Related posts : Statistical Significance: Definition & Meaning and Types of Errors in Hypothesis Testing

Hypothesis tests are crucial when you want to use sample data to make conclusions about a population because these tests account for sample error. Using significance levels and P values to determine when to reject the null hypothesis improves the probability that you will draw the correct conclusion.

Keep in mind that statistical significance doesn’t necessarily mean that the effect is important in a practical, real-world sense. For more information, read my post about Practical vs. Statistical Significance .

If you like this post, read the companion post: How Hypothesis Tests Work: Confidence Intervals and Confidence Levels .

You can also read my other posts that describe how other tests work:

- How t-Tests Work

- How the F-test works in ANOVA

- How Chi-Squared Tests of Independence Work

To see an alternative approach to traditional hypothesis testing that does not use probability distributions and test statistics, learn about bootstrapping in statistics !

Share this:

Reader Interactions

December 11, 2022 at 10:56 am

For very easy concept about level of significance & p-value 1.Teacher has given a one assignment to student & asked how many error you have doing this assignment? Student reply, he can has error ≤ 5% (it is level of significance). After completion of assignment, teacher checked his error which is ≤ 5% (may be 4% or 3% or 2% even less, it is p-value) it means his results are significant. Otherwise he has error > 5% (may be 6% or 7% or 8% even more, it is p-value) it means his results are non-significant. 2. Teacher has given a one assignment to student & asked how many error you have doing this assignment? Student reply, he can has error ≤ 1% (it is level of significance). After completion of assignment, teacher checked his error which is ≤ 1% (may be 0.9% or 0.8% or 0.7% even less, it is p-value) it means his results are significant. Otherwise he has error > 1% (may be 1.1% or 1.5% or 2% even more, it is p-value) it means his results are non-significant. p-value is significant or not mainly dependent upon the level of significance.

December 11, 2022 at 7:50 pm

I think that approach helps explain how to determine statistical significance–is the p-value less than or equal to the significance level. However, it doesn’t really explain what statistical significance means. I find that comparing the p-value to the significance level is the easy part. Knowing what it means and how to choose your significance level is the harder part!

December 3, 2022 at 5:54 pm

What would you say to someone who believes that a p-value higher than the level of significance (alpha) means the null hypothesis has been proven? Should you support that statement or deny it?

December 3, 2022 at 10:18 pm

Hi Emmanuel,

When the p-value is greater than the significance level, you fail to reject the null hypothesis . That is different than proving it. To learn why and what it means, click the link to read a post that I’ve written that will answer your question!

April 19, 2021 at 12:27 am

Thank you so much Sir

April 18, 2021 at 2:37 pm

Hi sir, your blogs are much more helpful for clearing the concepts of statistics, as a researcher I find them much more useful. I have some quarries:

1. In many research papers I have seen authors using the statement ” means or values are statically at par at p = 0.05″ when they do some pair wise comparison between the treatments (a kind of post hoc) using some value of CD (critical difference) or we can say LSD which is calculated using alpha not using p. So with this article I think this should be alpha =0.05 or 5%, not p = 0.05 earlier I thought p and alpha are same. p it self is compared with alpha 0.05. Correct me if I am wrong.

2. When we can draw a conclusion using critical value based on critical values (CV) which is based on alpha values in different tests (e.g. in F test CV is at F (0.05, t-1, error df) when alpha is 0.05 which is table value of F and is compared with F calculated for drawing the conclusion); then why we go for p values, and draw a conclusion based on p values, even many online software do not give p value, they just mention CD (LSD)

3. can you please help me in interpreting interaction in two factor analysis (Factor A X Factor b) in Anova.

Thank You so much!

(Commenting again as I have not seen my comment in comment list; don’t know why)

April 18, 2021 at 10:57 pm

Hi Himanshu,

I manually approve comments so there will be some time lag involved before they show up.

Regarding your first question, yes, you’re correct. Test results are significant at particular significance levels or alpha. They should not use p to define the significance level. You’re also correct in that you compare p to alpha.

Critical values are a different (but related) approach for determining significance. It was more common before computer analysis took off because it reduced the calculations. Using this approach in its simplest form, you only know whether a result is significant or not at the given alpha. You just determine whether the test statistic falls within a critical region to determine statistical significance or not significant. However, it is ok to supplement this type of result with the actual p-value. Knowing the precise p-value provides additional information that significant/not significant does not provide. The critical value and p-value approaches will always agree too. For more information about why the exact p-value is useful, read my post about Five Tips for Interpreting P-values .

Finally, I’ve written about two-way ANOVA in my post, How to do Two-Way ANOVA in Excel . Additionally, I write about it in my Hypothesis Testing ebook .

January 28, 2021 at 3:12 pm

Thank you for your answer, Jim, I really appreciate it. I’m taking a Coursera stats course and online learning without being able to ask questions of a real teacher is not my forte!

You’re right, I don’t think I’m ready for that calculation! However, I think I’m struggling with something far more basic, perhaps even the interpretation of the t-table? I’m just not sure how you came up with the p-value as .03112, with the 24 degrees of freedom. When I pull up a t-table and look at the 24-degrees of freedom row, I’m not sure how any of those numbers correspond with your answer? Either the single tail of 0.01556 or the combined of 0.03112. What am I not getting? (which, frankly, could be a lot!!) Again, thank you SO much for your time.

January 28, 2021 at 11:19 pm

Ah ok, I see! First, let me point you to several posts I’ve written about t-values and the t-distribution. I don’t cover those in this post because I wanted to present a simplified version that just uses the data in its regular units. The basic idea is that the hypothesis tests actually convert all your raw data down into one value for a test statistic, such as the t-value. And then it uses that test statistic to determine whether your results are statistically significant. To be significant, the t-value must exceed a critical value, which is what you lookup in the table. Although, nowadays you’d typically let your software just tell you.