Research Design | Step-by-Step Guide with Examples

Published on 5 May 2022 by Shona McCombes. Revised on 20 March 2023.

A research design is a strategy for answering your research question using empirical data. Creating a research design means making decisions about:

- Your overall aims and approach

- The type of research design you’ll use

- Your sampling methods or criteria for selecting subjects

- Your data collection methods

- The procedures you’ll follow to collect data

- Your data analysis methods

A well-planned research design helps ensure that your methods match your research aims and that you use the right kind of analysis for your data.

Table of contents

- Step 1: Consider your aims and approach

- Step 2: Choose a type of research design

- Step 3: Identify your population and sampling method

- Step 4: Choose your data collection methods

- Step 5: Plan your data collection procedures

- Step 6: Decide on your data analysis strategies

- Frequently asked questions

- Introduction

Before you can start designing your research, you should already have a clear idea of the research question you want to investigate.

There are many different ways you could go about answering this question. Your research design choices should be driven by your aims and priorities – start by thinking carefully about what you want to achieve.

The first choice you need to make is whether you’ll take a qualitative or quantitative approach.

| Qualitative approach | Quantitative approach |

|---|---|

Qualitative research designs tend to be more flexible and inductive, allowing you to adjust your approach based on what you find throughout the research process.

Quantitative research designs tend to be more fixed and deductive, with variables and hypotheses clearly defined in advance of data collection.

It’s also possible to use a mixed methods design that integrates aspects of both approaches. By combining qualitative and quantitative insights, you can gain a more complete picture of the problem you’re studying and strengthen the credibility of your conclusions.

Practical and ethical considerations when designing research

As well as scientific considerations, you need to think practically when designing your research. If your research involves people or animals, you also need to consider research ethics.

- How much time do you have to collect data and write up the research?

- Will you be able to gain access to the data you need (e.g., by travelling to a specific location or contacting specific people)?

- Do you have the necessary research skills (e.g., statistical analysis or interview techniques)?

- Will you need ethical approval?

At each stage of the research design process, make sure that your choices are practically feasible.

Within both qualitative and quantitative approaches, there are several types of research design to choose from. Each type provides a framework for the overall shape of your research.

Types of quantitative research designs

Quantitative designs can be split into four main types. Experimental and quasi-experimental designs allow you to test cause-and-effect relationships, while descriptive and correlational designs allow you to measure variables and describe relationships between them.

| Type of design | Purpose and characteristics |

|---|---|

| Experimental | |

| Quasi-experimental | |

| Correlational | |

| Descriptive |

With descriptive and correlational designs, you can get a clear picture of characteristics, trends, and relationships as they exist in the real world. However, you can’t draw conclusions about cause and effect (because correlation doesn’t imply causation).

Experiments are the strongest way to test cause-and-effect relationships without the risk of other variables influencing the results. However, their controlled conditions may not always reflect how things work in the real world. They’re often also more difficult and expensive to implement.

Types of qualitative research designs

Qualitative designs are less strictly defined. This approach is about gaining a rich, detailed understanding of a specific context or phenomenon, and you can often be more creative and flexible in designing your research.

The table below shows some common types of qualitative design. They often have similar approaches in terms of data collection, but focus on different aspects when analysing the data.

| Type of design | Purpose and characteristics |

|---|---|

| Grounded theory | |

| Phenomenology |

Your research design should clearly define who or what your research will focus on, and how you’ll go about choosing your participants or subjects.

In research, a population is the entire group that you want to draw conclusions about, while a sample is the smaller group of individuals you’ll actually collect data from.

Defining the population

A population can be made up of anything you want to study – plants, animals, organisations, texts, countries, etc. In the social sciences, it most often refers to a group of people.

For example, will you focus on people from a specific demographic, region, or background? Are you interested in people with a certain job or medical condition, or users of a particular product?

The more precisely you define your population, the easier it will be to gather a representative sample.

Sampling methods

Even with a narrowly defined population, it’s rarely possible to collect data from every individual. Instead, you’ll collect data from a sample.

To select a sample, there are two main approaches: probability sampling and non-probability sampling. The sampling method you use affects how confidently you can generalise your results to the population as a whole.

| Probability sampling | Non-probability sampling |

|---|---|

Probability sampling is the most statistically valid option, but it’s often difficult to achieve unless you’re dealing with a very small and accessible population.

For practical reasons, many studies use non-probability sampling, but it’s important to be aware of the limitations and carefully consider potential biases. You should always make an effort to gather a sample that’s as representative as possible of the population.
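As a rough illustration of probability sampling, the sketch below draws a simple random sample and a proportionate stratified sample from a made-up sampling frame. The student list, the `level` field, and the function names are hypothetical and exist only for this example; a real study would start from an actual list of the population.

```python
import random
from collections import defaultdict


def simple_random_sample(population, n, seed=None):
    """Draw n units so that every unit has the same chance of selection."""
    rng = random.Random(seed)
    return rng.sample(population, n)


def stratified_sample(population, stratum_of, fraction, seed=None):
    """Draw the same fraction from every stratum (proportionate stratified sampling)."""
    rng = random.Random(seed)
    strata = defaultdict(list)
    for unit in population:
        strata[stratum_of(unit)].append(unit)
    sample = []
    for members in strata.values():
        k = max(1, round(len(members) * fraction))  # at least one unit per stratum
        sample.extend(rng.sample(members, k))
    return sample


# Hypothetical sampling frame: 1,000 students, 700 undergraduate and 300 postgraduate.
students = [
    {"id": i, "level": "undergraduate" if i < 700 else "postgraduate"}
    for i in range(1000)
]

srs = simple_random_sample(students, 100, seed=1)
strat = stratified_sample(students, lambda s: s["level"], 0.10, seed=1)
```

The stratified version guarantees that each group appears in the sample in the same proportion as in the frame, whereas the simple random sample only achieves this on average.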

Case selection in qualitative research

In some types of qualitative designs, sampling may not be relevant.

For example, in an ethnography or a case study, your aim is to deeply understand a specific context, not to generalise to a population. Instead of sampling, you may simply aim to collect as much data as possible about the context you are studying.

In these types of design, you still have to carefully consider your choice of case or community. You should have a clear rationale for why this particular case is suitable for answering your research question.

For example, you might choose a case study that reveals an unusual or neglected aspect of your research problem, or you might choose several very similar or very different cases in order to compare them.

Data collection methods are ways of directly measuring variables and gathering information. They allow you to gain first-hand knowledge and original insights into your research problem.

You can choose just one data collection method, or use several methods in the same study.

Survey methods

Surveys allow you to collect data about opinions, behaviours, experiences, and characteristics by asking people directly. There are two main survey methods to choose from: questionnaires and interviews.

| Questionnaires | Interviews |

|---|---|

Observation methods

Observations allow you to collect data unobtrusively, observing characteristics, behaviours, or social interactions without relying on self-reporting.

Observations may be conducted in real time, taking notes as you observe, or you might make audiovisual recordings for later analysis. They can be qualitative or quantitative.

| Quantitative observation | |

|---|---|

Other methods of data collection

There are many other ways you might collect data depending on your field and topic.

| Field | Examples of data collection methods |
|---|---|
| Media & communication | Collecting a sample of texts (e.g., speeches, articles, or social media posts) for data on cultural norms and narratives |
| Psychology | Using technologies like neuroimaging, eye-tracking, or computer-based tasks to collect data on things like attention, emotional response, or reaction time |
| Education | Using tests or assignments to collect data on knowledge and skills |
| Physical sciences | Using scientific instruments to collect data on things like weight, blood pressure, or chemical composition |

If you’re not sure which methods will work best for your research design, try reading some papers in your field to see what data collection methods they used.

Secondary data

If you don’t have the time or resources to collect data from the population you’re interested in, you can also choose to use secondary data that other researchers already collected – for example, datasets from government surveys or previous studies on your topic.

With this raw data, you can do your own analysis to answer new research questions that weren’t addressed by the original study.

Using secondary data can expand the scope of your research, as you may be able to access much larger and more varied samples than you could collect yourself.

However, it also means you don’t have any control over which variables to measure or how to measure them, so the conclusions you can draw may be limited.

As well as deciding on your methods, you need to plan exactly how you’ll use these methods to collect data that’s consistent, accurate, and unbiased.

Planning systematic procedures is especially important in quantitative research, where you need to precisely define your variables and ensure your measurements are reliable and valid.

Operationalisation

Some variables, like height or age, are easily measured. But often you’ll be dealing with more abstract concepts, like satisfaction, anxiety, or competence. Operationalisation means turning these fuzzy ideas into measurable indicators.

If you’re using observations, which events or actions will you count?

If you’re using surveys, which questions will you ask and what range of responses will be offered?

You may also choose to use or adapt existing materials designed to measure the concept you’re interested in – for example, questionnaires or inventories whose reliability and validity have already been established.
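To make operationalisation concrete, here is a minimal sketch of how an abstract concept such as satisfaction might be turned into a number using a short rating-scale questionnaire. The item names, the 1 to 5 response range, and the choice of which item is reverse-coded are all assumptions made for illustration, not part of any established instrument.

```python
def scale_score(responses, reverse_items=(), low=1, high=5):
    """Return the mean item score after reverse-coding negatively worded items."""
    scored = []
    for item, value in responses.items():
        if not low <= value <= high:
            raise ValueError(f"{item}: response {value} is outside {low}-{high}")
        # A reverse-coded item maps low <-> high so that a higher score always
        # means more of the concept being measured.
        scored.append(low + high - value if item in reverse_items else value)
    return sum(scored) / len(scored)


# Hypothetical answers from one participant; "q3" is worded negatively.
answers = {"q1": 4, "q2": 5, "q3": 2, "q4": 4}
satisfaction = scale_score(answers, reverse_items={"q3"})
```

Deciding the response range and the coding rule before data collection is part of the operational definition, so every participant is scored in the same way.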

Reliability and validity

Reliability means your results can be consistently reproduced, while validity means that you’re actually measuring the concept you’re interested in.

| Reliability | Validity |

|---|---|

For valid and reliable results, your measurement materials should be thoroughly researched and carefully designed. Plan your procedures to make sure you carry out the same steps in the same way for each participant.

If you’re developing a new questionnaire or other instrument to measure a specific concept, running a pilot study allows you to check its validity and reliability in advance.

Sampling procedures

As well as choosing an appropriate sampling method, you need a concrete plan for how you’ll actually contact and recruit your selected sample.

That means making decisions about things like:

- How many participants do you need for an adequate sample size?

- What inclusion and exclusion criteria will you use to identify eligible participants?

- How will you contact your sample – by mail, online, by phone, or in person?

If you’re using a probability sampling method, it’s important that everyone who is randomly selected actually participates in the study. How will you ensure a high response rate?

If you’re using a non-probability method, how will you avoid bias and ensure a representative sample?
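For the sample-size question above, one common rule of thumb for surveys that estimate a proportion is n = z² · p(1 − p) / e², where e is the margin of error. The sketch below applies that formula. The function names, the default of p = 0.5 (the most conservative choice), and the 40% response rate are assumptions for illustration; other designs need other calculations.

```python
import math
from statistics import NormalDist


def sample_size_for_proportion(margin, confidence=0.95, p=0.5, population=None):
    """Approximate sample size needed to estimate a proportion within +/- margin."""
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    n = z ** 2 * p * (1 - p) / margin ** 2
    if population is not None:
        # Finite population correction: small populations need fewer respondents.
        n = n / (1 + (n - 1) / population)
    return math.ceil(n)


def invitations_needed(target_n, expected_response_rate):
    """How many people to contact if only a fraction of them will respond."""
    return math.ceil(target_n / expected_response_rate)


n_required = sample_size_for_proportion(margin=0.05)   # 95% confidence, +/- 5 points
n_small_pop = sample_size_for_proportion(margin=0.05, population=2000)
n_to_invite = invitations_needed(n_required, expected_response_rate=0.40)
```

Planning for non-response up front makes it easier to reach the target sample size without changing the sampling method midway through the study.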

Data management

It’s also important to create a data management plan for organising and storing your data.

Will you need to transcribe interviews or perform data entry for observations? You should anonymise and safeguard any sensitive data, and make sure it’s backed up regularly.

Keeping your data well organised will save time when it comes to analysing them. It can also help other researchers validate and add to your findings.

On their own, raw data can’t answer your research question. The last step of designing your research is planning how you’ll analyse the data.

Quantitative data analysis

In quantitative research, you’ll most likely use some form of statistical analysis. With statistics, you can summarise your sample data, make estimates, and test hypotheses.

Using descriptive statistics, you can summarise your sample data in terms of:

- The distribution of the data (e.g., the frequency of each score on a test)

- The central tendency of the data (e.g., the mean to describe the average score)

- The variability of the data (e.g., the standard deviation to describe how spread out the scores are)

The specific calculations you can do depend on the level of measurement of your variables.
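The three kinds of summary listed above can be computed with Python's standard library. This is only a sketch on invented test scores; the variable names are hypothetical, and the mean and standard deviation are meaningful only if the scores are measured at interval or ratio level.

```python
from collections import Counter
import statistics

scores = [4, 5, 5, 6, 7, 7, 7, 8, 9, 10]  # hypothetical test scores

# Distribution: how often each score occurs.
distribution = Counter(scores)

# Central tendency: the mean and the median.
mean_score = statistics.mean(scores)
median_score = statistics.median(scores)

# Variability: the sample standard deviation (n - 1 in the denominator).
sd_score = statistics.stdev(scores)

for score in sorted(distribution):
    print(score, "#" * distribution[score])  # a quick text histogram
print(f"mean={mean_score:.2f} median={median_score} sd={sd_score:.2f}")
```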

Using inferential statistics, you can:

- Make estimates about the population based on your sample data.

- Test hypotheses about a relationship between variables.

Regression and correlation tests look for associations between two or more variables, while comparison tests (such as t tests and ANOVAs) look for differences in the outcomes of different groups.

Your choice of statistical test depends on various aspects of your research design, including the types of variables you’re dealing with and the distribution of your data.
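As one example of a comparison test, the sketch below computes Welch's t statistic for two independent groups, a variant of the t test that does not assume equal variances. The data are invented and the function name is hypothetical. Turning the statistic into a p value requires the t distribution, which is normally taken from a statistics package or a table, so it is left out here.

```python
import math
import statistics


def welch_t(group_a, group_b):
    """Return Welch's t statistic and its approximate degrees of freedom."""
    mean_a, mean_b = statistics.mean(group_a), statistics.mean(group_b)
    var_a, var_b = statistics.variance(group_a), statistics.variance(group_b)
    n_a, n_b = len(group_a), len(group_b)

    # Squared standard error of the difference between the two means.
    se2 = var_a / n_a + var_b / n_b
    t = (mean_a - mean_b) / math.sqrt(se2)

    # Welch-Satterthwaite approximation for the degrees of freedom.
    df = se2 ** 2 / (
        (var_a / n_a) ** 2 / (n_a - 1) + (var_b / n_b) ** 2 / (n_b - 1)
    )
    return t, df


# Hypothetical hours of sleep per night in two groups.
no_phone = [7.5, 8.0, 7.0, 7.8, 8.2, 7.4]
phone = [6.5, 7.0, 6.8, 6.0, 7.2, 6.4]

t_stat, dof = welch_t(no_phone, phone)
print(f"t = {t_stat:.2f}, df = {dof:.1f}")
```

A large absolute t value relative to the degrees of freedom suggests that the difference between group means is unlikely to be due to sampling variation alone.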

Qualitative data analysis

In qualitative research, your data will usually be very dense with information and ideas. Instead of summing it up in numbers, you’ll need to comb through the data in detail, interpret its meanings, identify patterns, and extract the parts that are most relevant to your research question.

Two of the most common approaches to doing this are thematic analysis and discourse analysis.

| Approach | Characteristics |

|---|---|

| Thematic analysis | |

| Discourse analysis |

There are many other ways of analysing qualitative data depending on the aims of your research. To get a sense of potential approaches, try reading some qualitative research papers in your field.

A sample is a subset of individuals from a larger population. Sampling means selecting the group that you will actually collect data from in your research.

For example, if you are researching the opinions of students in your university, you could survey a sample of 100 students.

Statistical sampling allows you to test a hypothesis about the characteristics of a population. There are various sampling methods you can use to ensure that your sample is representative of the population as a whole.

Operationalisation means turning abstract conceptual ideas into measurable observations.

For example, the concept of social anxiety isn’t directly observable, but it can be operationally defined in terms of self-rating scores, behavioural avoidance of crowded places, or physical anxiety symptoms in social situations.

Before collecting data, it’s important to consider how you will operationalise the variables that you want to measure.

The research methods you use depend on the type of data you need to answer your research question.

- If you want to measure something or test a hypothesis, use quantitative methods. If you want to explore ideas, thoughts, and meanings, use qualitative methods.

- If you want to analyse a large amount of readily available data, use secondary data. If you want data specific to your purposes with control over how they are generated, collect primary data.

- If you want to establish cause-and-effect relationships between variables, use experimental methods. If you want to understand the characteristics of a research subject, use descriptive methods.

McCombes, S. (2023, March 20). Research Design | Step-by-Step Guide with Examples. Scribbr. Retrieved 16 July 2024, from https://www.scribbr.co.uk/research-methods/research-design/

Guide to Experimental Design | Overview, 5 steps & Examples

Published on December 3, 2019 by Rebecca Bevans. Revised on June 21, 2023.

Experiments are used to study causal relationships. You manipulate one or more independent variables and measure their effect on one or more dependent variables.

Experimental design means creating a set of procedures to systematically test a hypothesis. A good experimental design requires a strong understanding of the system you are studying.

There are five key steps in designing an experiment:

- Consider your variables and how they are related

- Write a specific, testable hypothesis

- Design experimental treatments to manipulate your independent variable

- Assign subjects to groups, either between-subjects or within-subjects

- Plan how you will measure your dependent variable

For valid conclusions, you also need to select a representative sample and control any extraneous variables that might influence your results. Doing so minimizes several types of research bias, particularly sampling bias, survivorship bias, and attrition bias as time passes. If random assignment of participants to control and treatment groups is impossible, unethical, or highly difficult, consider an observational study instead.

Table of contents

- Step 1: Define your variables

- Step 2: Write your hypothesis

- Step 3: Design your experimental treatments

- Step 4: Assign your subjects to treatment groups

- Step 5: Measure your dependent variable

- Other interesting articles

- Frequently asked questions about experiments

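You should begin with a specific research question. We will work with two research question examples, one from health sciences (how phone use before sleep affects the amount of sleep a person gets) and one from ecology (how air temperature affects soil respiration).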

To translate your research question into an experimental hypothesis, you need to define the main variables and make predictions about how they are related.

Start by simply listing the independent and dependent variables.

| Research question | Independent variable | Dependent variable |
|---|---|---|
| Phone use and sleep | Minutes of phone use before sleep | Hours of sleep per night |
| Temperature and soil respiration | Air temperature just above the soil surface | CO2 respired from soil |

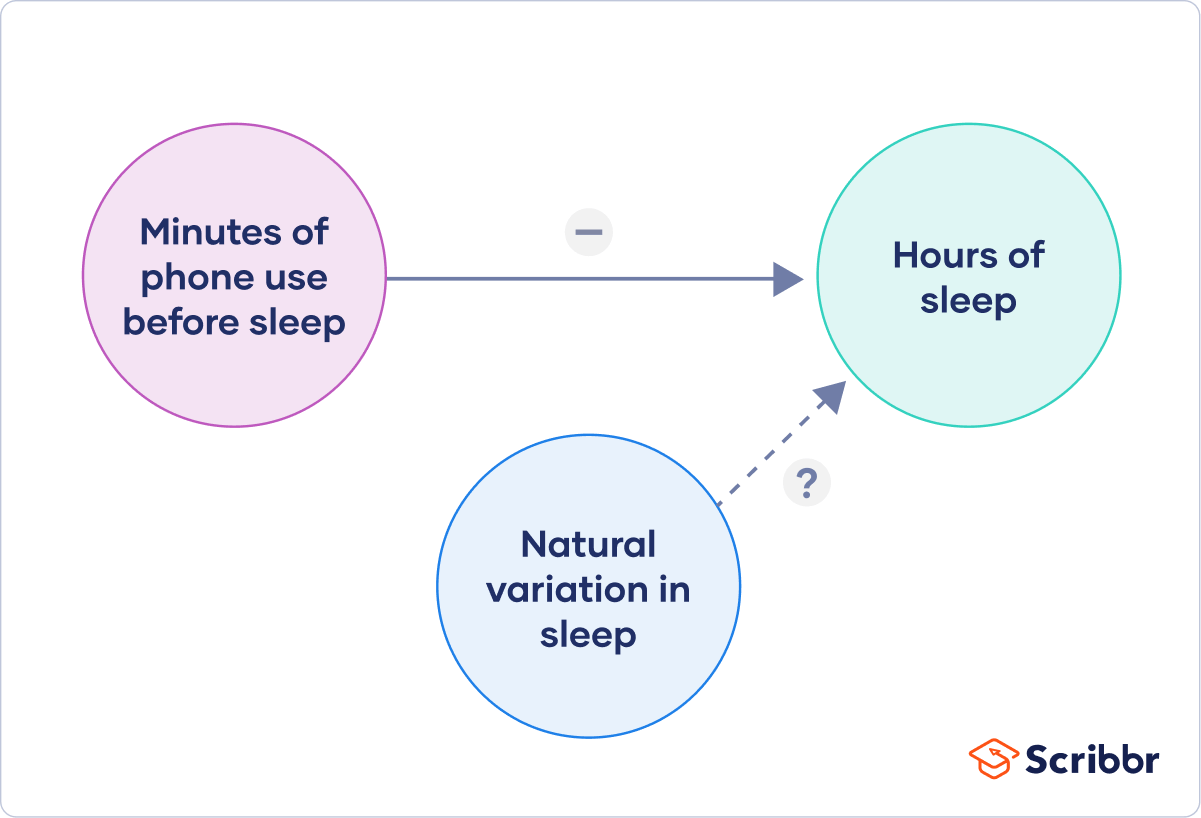

Then you need to think about possible extraneous and confounding variables and consider how you might control them in your experiment.

| Research question | Extraneous variable | How to control |
|---|---|---|
| Phone use and sleep | Variation in sleep patterns among individuals. | Measure the average difference between sleep with phone use and sleep without phone use rather than the average amount of sleep per treatment group. |
| Temperature and soil respiration | Soil moisture also affects respiration, and moisture can decrease with increasing temperature. | Monitor soil moisture and add water to make sure that soil moisture is consistent across all treatment plots. |

Finally, you can put these variables together into a diagram. Use arrows to show the possible relationships between variables and include signs to show the expected direction of the relationships.

Here we predict that increasing temperature will increase soil respiration and decrease soil moisture, while decreasing soil moisture will lead to decreased soil respiration.

Now that you have a strong conceptual understanding of the system you are studying, you should be able to write a specific, testable hypothesis that addresses your research question.

| Research question | Null hypothesis (H0) | Alternate hypothesis (Ha) |
|---|---|---|
| Phone use and sleep | Phone use before sleep does not correlate with the amount of sleep a person gets. | Increasing phone use before sleep leads to a decrease in sleep. |
| Temperature and soil respiration | Air temperature does not correlate with soil respiration. | Increased air temperature leads to increased soil respiration. |

The next steps will describe how to design a controlled experiment. In a controlled experiment, you must be able to:

- Systematically and precisely manipulate the independent variable(s).

- Precisely measure the dependent variable(s).

- Control any potential confounding variables.

If your study system doesn’t match these criteria, there are other types of research you can use to answer your research question.

How you manipulate the independent variable can affect the experiment’s external validity – that is, the extent to which the results can be generalized and applied to the broader world.

First, you may need to decide how widely to vary your independent variable. In the temperature and soil respiration experiment, for example, you could raise air temperature:

- just slightly above the natural range for your study region.

- over a wider range of temperatures to mimic future warming.

- over an extreme range that is beyond any possible natural variation.

Second, you may need to choose how finely to vary your independent variable. Sometimes this choice is made for you by your experimental system, but often you will need to decide, and this will affect how much you can infer from your results. In the phone use and sleep experiment, for example, you could treat phone use as:

- a categorical variable: either as binary (yes/no) or as levels of a factor (no phone use, low phone use, high phone use).

- a continuous variable (minutes of phone use measured every night).

How you apply your experimental treatments to your test subjects is crucial for obtaining valid and reliable results.

First, you need to consider the study size: how many individuals will be included in the experiment? In general, the more subjects you include, the greater your experiment’s statistical power, which determines how much confidence you can have in your results.
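The link between study size and statistical power can be sketched with a standard normal-approximation formula for comparing two group means: n per group ≈ 2 · (z₁₋α/₂ + z_power)² · (σ / δ)², where δ is the smallest difference you want to detect and σ is the standard deviation of the outcome. The function name and the example numbers are assumptions for illustration. The formula assumes two equally sized groups, equal variances, and a two-sided test, and an exact calculation based on the t distribution gives slightly larger numbers.

```python
import math
from statistics import NormalDist


def n_per_group(effect, sd, alpha=0.05, power=0.80):
    """Approximate subjects needed per group to detect a mean difference of `effect`."""
    z = NormalDist()
    z_alpha = z.inv_cdf(1 - alpha / 2)  # critical value for a two-sided test
    z_power = z.inv_cdf(power)          # quantile matching the desired power
    return math.ceil(2 * ((z_alpha + z_power) * sd / effect) ** 2)


# Hypothetical planning values: detect a 0.5-hour difference in sleep,
# assuming a standard deviation of 1 hour.
needed = n_per_group(effect=0.5, sd=1.0)
print(needed)
```

Halving the difference you want to detect roughly quadruples the required sample, which is why the smallest effect of interest should be decided before recruiting.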

Then you need to randomly assign your subjects to treatment groups. Each group receives a different level of the treatment (e.g. no phone use, low phone use, high phone use).

You should also include a control group, which receives no treatment. The control group tells us what would have happened to your test subjects without any experimental intervention.

When assigning your subjects to groups, there are two main choices you need to make:

- A completely randomized design vs a randomized block design.

- A between-subjects design vs a within-subjects design.

Randomization

An experiment can be completely randomized or randomized within blocks (aka strata):

- In a completely randomized design, every subject is assigned to a treatment group at random.

- In a randomized block design (aka stratified random design), subjects are first grouped according to a characteristic they share, and then randomly assigned to treatments within those groups.

| Research question | Completely randomized design | Randomized block design |
|---|---|---|
| Phone use and sleep | Subjects are all randomly assigned a level of phone use using a random number generator. | Subjects are first grouped by age, and then phone use treatments are randomly assigned within these groups. |
| Temperature and soil respiration | Warming treatments are assigned to soil plots at random by using a number generator to generate map coordinates within the study area. | Soils are first grouped by average rainfall, and then treatment plots are randomly assigned within these groups. |
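The two assignment schemes in the table can be sketched in a few lines of Python. The participant IDs, age bands, and function names below are hypothetical. The sketch also deals treatments out in rotation after shuffling, which is one way (not the only way) to keep group sizes equal.

```python
import random
from collections import defaultdict

TREATMENTS = ["none", "low", "high"]  # levels of phone use


def completely_randomized(subjects, treatments, seed=None):
    """Shuffle all subjects, then deal treatments out in rotation."""
    rng = random.Random(seed)
    shuffled = list(subjects)
    rng.shuffle(shuffled)
    return {s: treatments[i % len(treatments)] for i, s in enumerate(shuffled)}


def randomized_block(subjects, block_of, treatments, seed=None):
    """Group subjects into blocks first, then randomize within each block."""
    rng = random.Random(seed)
    blocks = defaultdict(list)
    for s in subjects:
        blocks[block_of(s)].append(s)
    assignment = {}
    for members in blocks.values():
        rng.shuffle(members)
        for i, s in enumerate(members):
            assignment[s] = treatments[i % len(treatments)]
    return assignment


# Hypothetical subjects: (participant ID, age band).
subjects = [(f"P{i:02d}", "18-29" if i < 6 else "30-49") for i in range(12)]

crd = completely_randomized(subjects, TREATMENTS, seed=42)
rbd = randomized_block(subjects, lambda s: s[1], TREATMENTS, seed=42)
```

In the blocked version, every age band contains each treatment level equally often, so age cannot be confounded with the treatment.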

Sometimes randomization isn’t practical or ethical, so researchers create partially-random or even non-random designs. An experimental design where treatments aren’t randomly assigned is called a quasi-experimental design.

Between-subjects vs. within-subjects

In a between-subjects design (also known as an independent measures design or classic ANOVA design), individuals receive only one of the possible levels of an experimental treatment.

In medical or social research, you might also use matched pairs within your between-subjects design to make sure that each treatment group contains the same variety of test subjects in the same proportions.

In a within-subjects design (also known as a repeated measures design), every individual receives each of the experimental treatments consecutively, and their responses to each treatment are measured.

Within-subjects or repeated measures can also refer to an experimental design where an effect emerges over time, and individual responses are measured over time in order to measure this effect as it emerges.

Counterbalancing (randomizing or reversing the order of treatments among subjects) is often used in within-subjects designs to ensure that the order of treatment application doesn’t influence the results of the experiment.

| Research question | Between-subjects (independent measures) design | Within-subjects (repeated measures) design |
|---|---|---|
| Phone use and sleep | Subjects are randomly assigned a level of phone use (none, low, or high) and follow that level of phone use throughout the experiment. | Subjects are assigned consecutively to zero, low, and high levels of phone use throughout the experiment, and the order in which they follow these treatments is randomized. |
| Temperature and soil respiration | Warming treatments are assigned to soil plots at random and the soils are kept at this temperature throughout the experiment. | Every plot receives each warming treatment (1, 3, 5, 8, and 10 °C above ambient temperatures) consecutively over the course of the experiment, and the order in which they receive these treatments is randomized. |
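For a within-subjects design, counterbalancing can be sketched as follows. This version uses full counterbalancing, where every possible treatment order is used equally often. That is an assumption that only works when the number of treatments is small, since the number of orders grows factorially. The participant IDs and the function name are hypothetical.

```python
import itertools
import random

TREATMENTS = ["none", "low", "high"]  # levels of phone use


def counterbalanced_orders(subjects, treatments, seed=None):
    """Give each subject a treatment order, cycling through all permutations."""
    rng = random.Random(seed)
    orders = list(itertools.permutations(treatments))  # 3 treatments -> 6 orders
    rng.shuffle(orders)
    shuffled = list(subjects)
    rng.shuffle(shuffled)  # which subject gets which order is random
    return {s: orders[i % len(orders)] for i, s in enumerate(shuffled)}


participants = [f"P{i:02d}" for i in range(12)]
plan = counterbalanced_orders(participants, TREATMENTS, seed=7)

for participant in sorted(plan):
    print(participant, " -> ".join(plan[participant]))
```

With 12 participants and 6 possible orders, each order is followed by exactly two participants, so practice or fatigue effects are spread evenly across treatments.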

Finally, you need to decide how you’ll collect data on your dependent variable outcomes. You should aim for reliable and valid measurements that minimize research bias or error.

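Some variables, like temperature, can be objectively measured with scientific instruments. Others may need to be operationalized to turn them into measurable observations. In the phone use and sleep experiment, for example, you could measure hours of sleep in one of two ways: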

- Ask participants to record what time they go to sleep and get up each day.

- Ask participants to wear a sleep tracker.

How precisely you measure your dependent variable also affects the kinds of statistical analysis you can use on your data.

Experiments are always context-dependent, and a good experimental design will take into account all of the unique considerations of your study system to produce information that is both valid and relevant to your research question.

Experimental design means planning a set of procedures to investigate a relationship between variables. To design a controlled experiment, you need:

- A testable hypothesis

- At least one independent variable that can be precisely manipulated

- At least one dependent variable that can be precisely measured

When designing the experiment, you decide:

- How you will manipulate the variable(s)

- How you will control for any potential confounding variables

- How many subjects or samples will be included in the study

- How subjects will be assigned to treatment levels

Experimental design is essential to the internal and external validity of your experiment.

The key difference between observational studies and experimental designs is that a well-done observational study does not influence the responses of participants, while experiments do have some sort of treatment condition applied to at least some participants by random assignment.

A confounding variable, also called a confounder or confounding factor, is a third variable in a study examining a potential cause-and-effect relationship.

A confounding variable is related to both the supposed cause and the supposed effect of the study. It can be difficult to separate the true effect of the independent variable from the effect of the confounding variable.

In your research design, it’s important to identify potential confounding variables and plan how you will reduce their impact.

In a between-subjects design, every participant experiences only one condition, and researchers assess group differences between participants in various conditions.

In a within-subjects design, each participant experiences all conditions, and researchers test the same participants repeatedly for differences between conditions.

The word “between” means that you’re comparing different conditions between groups, while the word “within” means you’re comparing different conditions within the same group.

An experimental group, also known as a treatment group, receives the treatment whose effect researchers wish to study, whereas a control group does not. They should be identical in all other ways.

Bevans, R. (2023, June 21). Guide to Experimental Design | Overview, 5 steps & Examples. Scribbr. Retrieved July 16, 2024, from https://www.scribbr.com/methodology/experimental-design/

Experimental Design: Types, Examples & Methods

Saul Mcleod, PhD

Editor-in-Chief for Simply Psychology

BSc (Hons) Psychology, MRes, PhD, University of Manchester

Saul Mcleod, PhD., is a qualified psychology teacher with over 18 years of experience in further and higher education. He has been published in peer-reviewed journals, including the Journal of Clinical Psychology.

Olivia Guy-Evans, MSc

Associate Editor for Simply Psychology

BSc (Hons) Psychology, MSc Psychology of Education

Olivia Guy-Evans is a writer and associate editor for Simply Psychology. She has previously worked in healthcare and educational sectors.

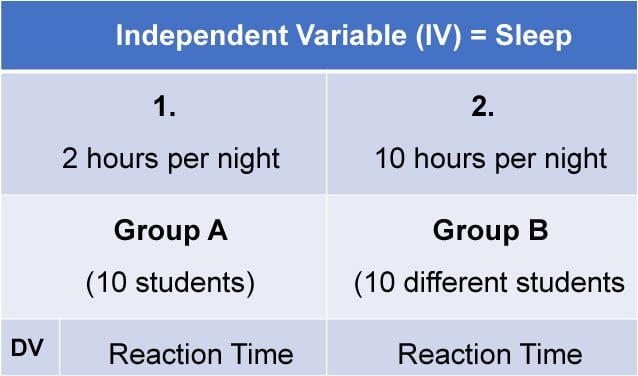

Experimental design refers to how participants are allocated to different groups in an experiment. Types of design include repeated measures, independent groups, and matched pairs designs.

Probably the most common way to design an experiment in psychology is to divide the participants into two groups, the experimental group and the control group, and then introduce a change to the experimental group, not the control group.

The researcher must decide how they will allocate their sample to the different experimental groups. For example, if there are 10 participants, will all 10 participants participate in both groups (e.g., repeated measures), or will the participants be split in half and take part in only one group each?

Three types of experimental designs are commonly used:

1. Independent Measures

Independent measures design, also known as between-groups, is an experimental design where different participants are used in each condition of the independent variable. This means that each condition of the experiment includes a different group of participants.

This should be done by random allocation, ensuring that each participant has an equal chance of being assigned to each group.

Independent measures involve using two separate groups of participants, one in each condition.

- Con : More people are needed than with the repeated measures design (i.e., more time-consuming).

- Pro : Avoids order effects (such as practice or fatigue) as people participate in one condition only. If a person is involved in several conditions, they may become bored, tired, and fed up by the time they come to the second condition or become wise to the requirements of the experiment!

- Con : Differences between participants in the groups may affect results, for example, variations in age, gender, or social background. These differences are known as participant variables (i.e., a type of extraneous variable).

- Control : After the participants have been recruited, they should be randomly assigned to their groups. This should ensure the groups are similar, on average (reducing participant variables).
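The random allocation step described above can be sketched in a few lines of Python. This is a minimal illustration, not a prescribed procedure: the function name `randomly_allocate`, the participant labels, and the `seed` parameter (used only to make the demo repeatable) are all assumptions.

```python
import random


def randomly_allocate(participants, conditions=("experimental", "control"), seed=None):
    """Shuffle the participants, then deal them round-robin into the conditions."""
    rng = random.Random(seed)  # a seed is only useful for reproducible demos
    shuffled = list(participants)
    rng.shuffle(shuffled)  # every ordering of participants is equally likely
    groups = {condition: [] for condition in conditions}
    for index, participant in enumerate(shuffled):
        groups[conditions[index % len(conditions)]].append(participant)
    return groups


# Hypothetical sample of 10 participants, split into two groups of 5.
participants = [f"P{n:02d}" for n in range(1, 11)]
for condition, members in randomly_allocate(participants, seed=42).items():
    print(condition, members)
```

Shuffling first and dealing afterwards keeps the group sizes equal while still giving each participant the same chance of ending up in either group.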

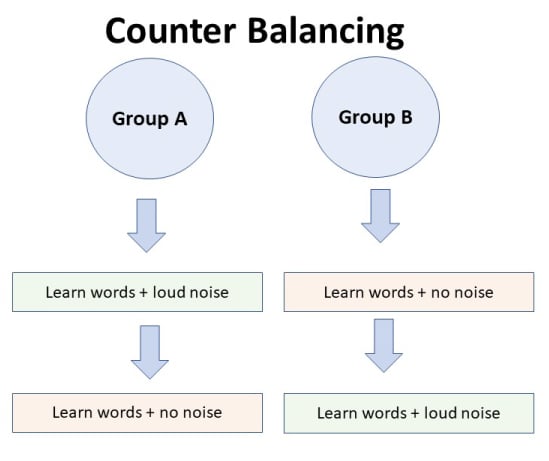

2. Repeated Measures Design

A repeated measures design is an experimental design in which the same participants take part in each condition of the independent variable. This means that each condition of the experiment includes the same group of participants.

Repeated measures design is also known as within-groups or within-subjects design.

- Pro : As the same participants are used in each condition, participant variables (i.e., individual differences) are reduced.

- Con : There may be order effects. Order effects refer to the order of the conditions affecting the participants’ behavior. Performance in the second condition may be better because the participants know what to do (i.e., practice effect). Or their performance might be worse in the second condition because they are tired (i.e., fatigue effect). This limitation can be controlled using counterbalancing.

- Pro : Fewer people are needed as they participate in all conditions (i.e., saves time).

- Control : To combat order effects, the researcher counterbalances the order of the conditions, that is, alternates the order in which participants perform the different conditions of an experiment.

Counterbalancing

Suppose we used a repeated measures design in which all of the participants first learned words in “loud noise” and then learned them in “no noise.”

Any improvement in “no noise” could then be due to order effects, such as practice, rather than to the noise level itself. However, a researcher can control for order effects using counterbalancing.

The sample would be split into two groups, and the two conditions labeled A (“loud noise”) and B (“no noise”). Group 1 does A then B, and group 2 does B then A. This balances order effects across the two conditions.

Although order effects occur for each participant, they balance each other out in the results because they occur equally in both groups.
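As a rough sketch of the counterbalancing scheme just described, the snippet below assigns half of a sample to the order A then B and the other half to B then A. The function name `counterbalance` and the participant labels are hypothetical, and the labels A and B simply stand for the two conditions.

```python
import random


def counterbalance(participants, orders=(("A", "B"), ("B", "A")), seed=None):
    """Randomly split participants evenly across the possible condition orders."""
    rng = random.Random(seed)
    shuffled = list(participants)
    rng.shuffle(shuffled)
    # Dealing the shuffled list round-robin keeps the orders equally represented.
    return {p: orders[i % len(orders)] for i, p in enumerate(shuffled)}


schedule = counterbalance([f"P{n}" for n in range(1, 9)], seed=7)
for participant, order in sorted(schedule.items()):
    print(participant, " then ".join(order))
```

With more than two conditions, the `orders` argument could list every permutation of the conditions, although the number of orders grows quickly.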

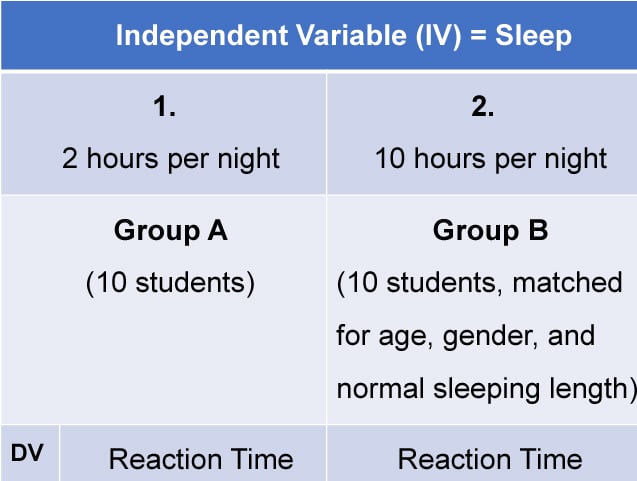

3. Matched Pairs Design

A matched pairs design is an experimental design where pairs of participants are matched in terms of key variables, such as age or socioeconomic status. One member of each pair is then randomly assigned to the experimental group and the other member to the control group.

- Con : If one participant drops out, you lose the data of both members of the pair.

- Pro : Reduces participant variables because the researcher has tried to pair up the participants so that each condition has people with similar abilities and characteristics.

- Con : Very time-consuming trying to find closely matched pairs.

- Pro : It avoids order effects, so counterbalancing is not necessary.

- Con : Impossible to match people exactly unless they are identical twins!

- Control : Members of each pair should be randomly assigned to conditions. However, this does not solve all these problems.
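One simple way to form matched pairs is to rank participants on the matching variable, pair neighbours in that ranking, and then randomize within each pair. The sketch below assumes a single numeric matching variable; the function name `matched_pairs` and the scores are made up for illustration.

```python
import random


def matched_pairs(scores, seed=None):
    """Pair participants with adjacent scores, then randomize within each pair.

    scores maps a participant label to the value of the matching variable.
    With an odd number of participants, the highest scorer is left unpaired.
    """
    rng = random.Random(seed)
    ranked = sorted(scores, key=scores.get)  # order participants by score
    pairs = []
    for i in range(0, len(ranked) - 1, 2):
        pair = [ranked[i], ranked[i + 1]]
        rng.shuffle(pair)  # random assignment within the matched pair
        pairs.append({"experimental": pair[0], "control": pair[1]})
    return pairs


# Hypothetical pre-test scores used as the matching variable.
pretest = {"P1": 12, "P2": 30, "P3": 14, "P4": 29, "P5": 21, "P6": 22}
for pair in matched_pairs(pretest, seed=1):
    print(pair)
```

Matching on several variables at once would need a distance measure between participants, which this sketch does not attempt.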

Experimental design refers to how participants are allocated to an experiment’s different conditions (or IV levels). There are three types:

1. Independent measures / between-groups : Different participants are used in each condition of the independent variable.

2. Repeated measures / within-groups : The same participants take part in each condition of the independent variable.

3. Matched pairs : Each condition uses different participants, but they are matched in terms of important characteristics, e.g., gender, age, intelligence, etc.

Learning Check

Read about each of the experiments below. For each experiment, identify (1) which experimental design was used; and (2) why the researcher might have used that design.

1. To compare the effectiveness of two different types of therapy for depression, depressed patients were assigned to receive either cognitive therapy or behavior therapy for a 12-week period.

The researchers attempted to ensure that the patients in the two groups had similar severity of depressed symptoms by administering a standardized test of depression to each participant, then pairing them according to the severity of their symptoms.

2. To assess the difference in reading comprehension between 7- and 9-year-olds, a researcher recruited each group from a local primary school. They were given the same passage of text to read and then asked a series of questions to assess their understanding.

3. To assess the effectiveness of two different ways of teaching reading, a group of 5-year-olds was recruited from a primary school. Their level of reading ability was assessed, and then they were taught using scheme one for 20 weeks.

At the end of this period, their reading was reassessed, and a reading improvement score was calculated. They were then taught using scheme two for a further 20 weeks, and another reading improvement score for this period was calculated. The reading improvement scores for each child were then compared.

4. To assess the effect of organization on recall, a researcher randomly assigned student volunteers to two conditions.

Condition one attempted to recall a list of words that were organized into meaningful categories; condition two attempted to recall the same words, randomly grouped on the page.

Experiment Terminology

Ecological validity

The degree to which an investigation represents real-life experiences.

Experimenter effects

These are the ways that the experimenter can accidentally influence the participant through their appearance or behavior.

Demand characteristics

The clues in an experiment that lead participants to think they know what the researcher is looking for (e.g., the experimenter’s body language).

Independent variable (IV)

The variable the experimenter manipulates (i.e., changes), which is assumed to have a direct effect on the dependent variable.

Dependent variable (DV)

The variable the experimenter measures. This is the outcome (i.e., the result) of a study.

Extraneous variables (EV)

All variables which are not independent variables but could affect the results (DV) of the experiment. Extraneous variables should be controlled where possible.

Confounding variables

Variable(s) that have affected the results (DV), apart from the IV. A confounding variable could be an extraneous variable that has not been controlled.

Random Allocation

Randomly allocating participants to independent variable conditions means that all participants should have an equal chance of taking part in each condition.

The principle of random allocation is to avoid bias in how the experiment is carried out and limit the effects of participant variables.

Order effects

Changes in participants’ performance due to their repeating the same or similar test more than once. Examples of order effects include:

(i) practice effect: an improvement in performance on a task due to repetition, for example, because of familiarity with the task;

(ii) fatigue effect: a decrease in performance of a task due to repetition, for example, because of boredom or tiredness.


Experimental Design – Types, Methods, Guide


Experimental Design

Experimental design is a process of planning and conducting scientific experiments to investigate a hypothesis or research question. It involves carefully designing an experiment that can test the hypothesis, and controlling for other variables that may influence the results.

Experimental design typically includes identifying the variables that will be manipulated or measured, defining the sample or population to be studied, selecting an appropriate method of sampling, choosing a method for data collection and analysis, and determining the appropriate statistical tests to use.

Types of Experimental Design

Here are the different types of experimental design:

Completely Randomized Design

In this design, participants are randomly assigned to one of two or more groups, and each group is exposed to a different treatment or condition.

Randomized Block Design

This design involves dividing participants into blocks based on a specific characteristic, such as age or gender, and then randomly assigning participants within each block to one of two or more treatment groups.
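A randomized block assignment can be sketched as follows: participants are first grouped by the blocking characteristic, and the random assignment to treatments then happens separately inside each block. The function name, the `block_of` callback, and the example age bands are assumptions made for this illustration.

```python
import random
from collections import defaultdict


def randomized_block_assignment(participants, block_of, treatments, seed=None):
    """Group participants into blocks, then randomize to treatments within each block."""
    rng = random.Random(seed)
    blocks = defaultdict(list)
    for participant in participants:
        blocks[block_of(participant)].append(participant)
    assignment = {}
    for members in blocks.values():
        rng.shuffle(members)  # randomization is restricted to this block
        for i, participant in enumerate(members):
            assignment[participant] = treatments[i % len(treatments)]
    return assignment


# Hypothetical sample: (label, age band) tuples, blocked on age band.
sample = [(f"P{i}", "young" if i <= 4 else "older") for i in range(1, 9)]
plan = randomized_block_assignment(sample, lambda p: p[1], ("drug", "placebo"), seed=3)
for participant, treatment in sorted(plan.items()):
    print(participant, treatment)
```

Because each block is split evenly, the blocking characteristic cannot end up unevenly distributed across treatment groups by chance.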

Factorial Design

In a factorial design, participants are randomly assigned to one of several groups, each of which receives a different combination of two or more independent variables.
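To see what "a different combination of two or more independent variables" means in practice, the cells of a factorial design can be enumerated with `itertools.product`. The factor names and levels below are hypothetical; the sketch only lists the conditions and does not assign participants.

```python
from itertools import product


def factorial_cells(factors):
    """Return every combination of factor levels as a list of dicts."""
    names = list(factors)
    return [dict(zip(names, levels)) for levels in product(*factors.values())]


# A hypothetical 2 x 2 design: two independent variables with two levels each.
factors = {"noise": ["quiet", "loud"], "list_type": ["organized", "random"]}
for cell in factorial_cells(factors):
    print(cell)
```

The number of cells is the product of the number of levels per factor, so a 2 x 2 design has four conditions and a 2 x 3 design has six.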

Repeated Measures Design

In this design, each participant is exposed to all of the different treatments or conditions, either in a random order or in a predetermined order.

Crossover Design

This design involves randomly assigning participants to one of two or more treatment groups, with each group receiving one treatment during the first phase of the study and then switching to a different treatment during the second phase.

Split-plot Design

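In this design, one factor is applied to larger units (whole plots) and a second factor is applied to smaller units (subplots) within each whole plot. It is typically used when the levels of one factor are harder or more costly to change than the levels of the other.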

Nested Design

This design involves grouping participants within larger units, such as schools or households, and then randomly assigning these units to different treatment groups.

Laboratory Experiment

Laboratory experiments are conducted under controlled conditions, which allows for greater precision and accuracy. However, because laboratory conditions are not always representative of real-world conditions, the results of these experiments may not be generalizable to the population at large.

Field Experiment

Field experiments are conducted in naturalistic settings and allow for more realistic observations. However, because field experiments are not as controlled as laboratory experiments, they may be subject to more sources of error.

Experimental Design Methods

Experimental design methods refer to the techniques and procedures used to design and conduct experiments in scientific research. Here are some common experimental design methods:

Randomization

This involves randomly assigning participants to different groups or treatments to ensure that any observed differences between groups are due to the treatment and not to other factors.

Control Group

The use of a control group is an important experimental design method that involves having a group of participants that do not receive the treatment or intervention being studied. The control group is used as a baseline to compare the effects of the treatment group.

Blinding

Blinding involves keeping participants, researchers, or both unaware of which treatment group participants are in, in order to reduce the risk of bias in the results.

Counterbalancing

This involves systematically varying the order in which participants receive treatments or interventions in order to control for order effects.

Replication

Replication involves conducting the same experiment with different samples or under different conditions to increase the reliability and validity of the results.

Factorial Design

This experimental design method involves manipulating multiple independent variables simultaneously to investigate their separate and combined effects on the dependent variable.

Blocking

This involves dividing participants into subgroups or blocks based on specific characteristics, such as age or gender, in order to reduce the risk of confounding variables.

Data Collection Method

Experimental design data collection methods are techniques and procedures used to collect data in experimental research. Here are some common experimental design data collection methods:

Direct Observation

This method involves observing and recording the behavior or phenomenon of interest in real time. It may involve the use of structured or unstructured observation, and may be conducted in a laboratory or naturalistic setting.

Self-report Measures

Self-report measures involve asking participants to report their thoughts, feelings, or behaviors using questionnaires, surveys, or interviews. These measures may be administered in person or online.

Behavioral Measures

Behavioral measures involve measuring participants’ behavior directly, such as through reaction time tasks or performance tests. These measures may be administered using specialized equipment or software.

Physiological Measures

Physiological measures involve measuring participants’ physiological responses, such as heart rate, blood pressure, or brain activity, using specialized equipment. These measures may be invasive or non-invasive, and may be administered in a laboratory or clinical setting.

Archival Data

Archival data involves using existing records or data, such as medical records, administrative records, or historical documents, as a source of information. These data may be collected from public or private sources.

Computerized Measures

Computerized measures involve using software or computer programs to collect data on participants’ behavior or responses. These measures may include reaction time tasks, cognitive tests, or other types of computer-based assessments.

Video Recording

Video recording involves recording participants’ behavior or interactions using cameras or other recording equipment. This method can be used to capture detailed information about participants’ behavior or to analyze social interactions.

Data Analysis Method

Experimental design data analysis methods refer to the statistical techniques and procedures used to analyze data collected in experimental research. Here are some common experimental design data analysis methods:

Descriptive Statistics

Descriptive statistics are used to summarize and describe the data collected in the study. This includes measures such as mean, median, mode, range, and standard deviation.
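The measures listed above can be computed with Python's standard `statistics` module. The scores are invented for the example, and the standard deviation shown is the sample version (dividing by n - 1), which is one common choice rather than the only one.

```python
import statistics

# Hypothetical test scores from one condition of an experiment.
scores = [12, 15, 15, 18, 20, 22, 24]

summary = {
    "mean": statistics.mean(scores),
    "median": statistics.median(scores),
    "mode": statistics.mode(scores),
    "range": max(scores) - min(scores),
    "sample_sd": statistics.stdev(scores),  # sample standard deviation (n - 1)
}

for name, value in summary.items():
    print(f"{name}: {value:.2f}")
```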

Inferential Statistics

Inferential statistics are used to make inferences or generalizations about a larger population based on the data collected in the study. This includes hypothesis testing and estimation.
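One way to illustrate hypothesis testing without any distributional assumptions is an exact permutation test on the difference between two group means. This is a sketch of one technique among many, chosen here because it needs only the standard library; the function name and the data are hypothetical, and the approach is only practical for small samples because it enumerates every possible split.

```python
from itertools import combinations
from statistics import mean


def exact_permutation_p_value(treatment, control):
    """Two-sided exact permutation test on the difference in group means."""
    pooled = list(treatment) + list(control)
    observed = abs(mean(treatment) - mean(control))
    extreme = total = 0
    # Enumerate every way the pooled scores could have been split into groups.
    for chosen in combinations(range(len(pooled)), len(treatment)):
        chosen = set(chosen)
        group_a = [pooled[i] for i in chosen]
        group_b = [pooled[i] for i in range(len(pooled)) if i not in chosen]
        if abs(mean(group_a) - mean(group_b)) >= observed - 1e-12:
            extreme += 1
        total += 1
    return extreme / total


# Hypothetical scores: only 2 of the 20 possible splits are this extreme.
print(exact_permutation_p_value([8, 9, 10], [1, 2, 3]))
```

The returned value is the proportion of possible random assignments that would produce a difference at least as large as the one observed, which is what a p-value expresses under the null hypothesis of no treatment effect.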

Analysis of Variance (ANOVA)

ANOVA is a statistical technique used to compare means across two or more groups in order to determine whether there are significant differences between the groups. There are several types of ANOVA, including one-way ANOVA, two-way ANOVA, and repeated measures ANOVA.
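The core of a one-way ANOVA is the F ratio: variation between group means divided by variation within groups. The sketch below computes only the F statistic and its degrees of freedom from first principles; it does not compute a p-value, which in practice would come from a statistics package. The function name and data are illustrative assumptions.

```python
def one_way_anova_f(groups):
    """Return (F, df_between, df_within) for a one-way between-groups ANOVA."""
    all_values = [value for group in groups for value in group]
    grand_mean = sum(all_values) / len(all_values)
    group_means = [sum(group) / len(group) for group in groups]

    # Between-groups sum of squares: how far each group mean is from the grand mean.
    ss_between = sum(
        len(group) * (m - grand_mean) ** 2 for group, m in zip(groups, group_means)
    )
    # Within-groups sum of squares: spread of scores around their own group mean.
    ss_within = sum(
        (value - m) ** 2 for group, m in zip(groups, group_means) for value in group
    )

    df_between = len(groups) - 1
    df_within = len(all_values) - len(groups)
    f_ratio = (ss_between / df_between) / (ss_within / df_within)
    return f_ratio, df_between, df_within


# Hypothetical scores for three conditions.
print(one_way_anova_f([[4, 5, 6], [6, 7, 8], [8, 9, 10]]))
```

For these made-up scores the between-groups mean square is 12 and the within-groups mean square is 1, so F is 12 with 2 and 6 degrees of freedom.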

Regression Analysis

Regression analysis is used to model the relationship between two or more variables in order to determine the strength and direction of the relationship. There are several types of regression analysis, including linear regression, logistic regression, and multiple regression.
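For the simplest case, linear regression with one predictor, the slope and intercept can be computed directly from the least-squares formulas. This is a minimal sketch with invented data; the function name `fit_line` is an assumption, and multiple or logistic regression would normally be done with a dedicated library.

```python
def fit_line(x, y):
    """Ordinary least-squares fit of y = intercept + slope * x."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx  # direction and steepness of the relationship
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Hypothetical data: hours of practice (x) and test score (y).
hours = [1, 2, 3, 4, 5]
score = [3, 5, 7, 9, 11]
slope, intercept = fit_line(hours, score)
print(f"score = {intercept:.1f} + {slope:.1f} * hours")
```

The sign of the slope gives the direction of the relationship, and its size gives the expected change in the outcome for a one-unit change in the predictor.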

Factor Analysis

Factor analysis is used to identify underlying factors or dimensions in a set of variables. This can be used to reduce the complexity of the data and identify patterns in the data.

Structural Equation Modeling (SEM)

SEM is a statistical technique used to model complex relationships between variables. It can be used to test complex theories and models of causality.

Cluster Analysis

Cluster analysis is used to group similar cases or observations together based on similarities or differences in their characteristics.

Time Series Analysis

Time series analysis is used to analyze data collected over time in order to identify trends, patterns, or changes in the data.

Multilevel Modeling

Multilevel modeling is used to analyze data that is nested within multiple levels, such as students nested within schools or employees nested within companies.

Applications of Experimental Design

Experimental design is a versatile research methodology that can be applied in many fields. Here are some applications of experimental design:

- Medical research: Experimental design is commonly used to test new treatments or medications for various medical conditions. This includes clinical trials to evaluate the safety and effectiveness of new drugs or medical devices.

- Agriculture: Experimental design is used to test new crop varieties, fertilizers, and other agricultural practices. This includes randomized field trials to evaluate the effects of different treatments on crop yield, quality, and pest resistance.

- Environmental science: Experimental design is used to study the effects of environmental factors, such as pollution or climate change, on ecosystems and wildlife. This includes controlled experiments to study the effects of pollutants on plant growth or animal behavior.

- Psychology: Experimental design is used to study human behavior and cognitive processes. This includes experiments to test the effects of different interventions, such as therapy or medication, on mental health outcomes.

- Engineering: Experimental design is used to test new materials, designs, and manufacturing processes in engineering applications. This includes laboratory experiments to test the strength and durability of new materials, or field experiments to test the performance of new technologies.

- Education: Experimental design is used to evaluate the effectiveness of teaching methods, educational interventions, and programs. This includes randomized controlled trials to compare different teaching methods or evaluate the impact of educational programs on student outcomes.

- Marketing: Experimental design is used to test the effectiveness of marketing campaigns, pricing strategies, and product designs. This includes experiments to test the impact of different marketing messages or pricing schemes on consumer behavior.

Examples of Experimental Design

Here are some examples of experimental design in different fields:

- Example in Medical research : A study that investigates the effectiveness of a new drug treatment for a particular condition. Patients are randomly assigned to either a treatment group or a control group, with the treatment group receiving the new drug and the control group receiving a placebo. The outcomes, such as improvement in symptoms or side effects, are measured and compared between the two groups.

- Example in Education research: A study that examines the impact of a new teaching method on student learning outcomes. Students are randomly assigned to either a group that receives the new teaching method or a group that receives the traditional teaching method. Student achievement is measured before and after the intervention, and the results are compared between the two groups.

- Example in Environmental science: A study that tests the effectiveness of a new method for reducing pollution in a river. Two sections of the river are selected, with one section treated with the new method and the other section left untreated. The water quality is measured before and after the intervention, and the results are compared between the two sections.

- Example in Marketing research: A study that investigates the impact of a new advertising campaign on consumer behavior. Participants are randomly assigned to either a group that is exposed to the new campaign or a group that is not. Their behavior, such as purchasing or product awareness, is measured and compared between the two groups.

- Example in Social psychology: A study that examines the effect of a new social intervention on reducing prejudice towards a marginalized group. Participants are randomly assigned to either a group that receives the intervention or a control group that does not. Their attitudes and behavior towards the marginalized group are measured before and after the intervention, and the results are compared between the two groups.

When to use Experimental Research Design

Experimental research design should be used when a researcher wants to establish a cause-and-effect relationship between variables. It is particularly useful when studying the impact of an intervention or treatment on a particular outcome.

Here are some situations where experimental research design may be appropriate:

- When studying the effects of a new drug or medical treatment: Experimental research design is commonly used in medical research to test the effectiveness and safety of new drugs or medical treatments. By randomly assigning patients to treatment and control groups, researchers can determine whether the treatment is effective in improving health outcomes.

- When evaluating the effectiveness of an educational intervention: An experimental research design can be used to evaluate the impact of a new teaching method or educational program on student learning outcomes. By randomly assigning students to treatment and control groups, researchers can determine whether the intervention is effective in improving academic performance.

- When testing the effectiveness of a marketing campaign: An experimental research design can be used to test the effectiveness of different marketing messages or strategies. By randomly assigning participants to treatment and control groups, researchers can determine whether the marketing campaign is effective in changing consumer behavior.

- When studying the effects of an environmental intervention: Experimental research design can be used to study the impact of environmental interventions, such as pollution reduction programs or conservation efforts. By randomly assigning locations or areas to treatment and control groups, researchers can determine whether the intervention is effective in improving environmental outcomes.

- When testing the effects of a new technology: An experimental research design can be used to test the effectiveness and safety of new technologies or engineering designs. By randomly assigning participants or locations to treatment and control groups, researchers can determine whether the new technology is effective in achieving its intended purpose.

How to Conduct Experimental Research

Here are the steps to conduct Experimental Research:

- Identify a Research Question : Start by identifying a research question that you want to answer through the experiment. The question should be clear, specific, and testable.

- Develop a Hypothesis: Based on your research question, develop a hypothesis that predicts the relationship between the independent and dependent variables. The hypothesis should be clear and testable.

- Design the Experiment : Determine the type of experimental design you will use, such as a between-subjects design or a within-subjects design. Also, decide on the experimental conditions, such as the number of independent variables, the levels of the independent variable, and the dependent variable to be measured.

- Select Participants: Select the participants who will take part in the experiment. They should be representative of the population you are interested in studying.

- Randomly Assign Participants to Groups: If you are using a between-subjects design, randomly assign participants to groups to control for individual differences.

- Conduct the Experiment : Conduct the experiment by manipulating the independent variable(s) and measuring the dependent variable(s) across the different conditions.

- Analyze the Data: Analyze the data using appropriate statistical methods to determine if there is a significant effect of the independent variable(s) on the dependent variable(s).

- Draw Conclusions: Based on the data analysis, draw conclusions about the relationship between the independent and dependent variables. If the results support the hypothesis, treat it as supported rather than proven. If the results do not support the hypothesis, reject or revise it.

- Communicate the Results: Finally, communicate the results of the experiment through a research report or presentation. Include the purpose of the study, the methods used, the results obtained, and the conclusions drawn.

Purpose of Experimental Design

The purpose of experimental design is to control and manipulate one or more independent variables to determine their effect on a dependent variable. Experimental design allows researchers to systematically investigate causal relationships between variables, and to establish cause-and-effect relationships between the independent and dependent variables. Through experimental design, researchers can test hypotheses and make inferences about the population from which the sample was drawn.

Experimental design provides a structured approach to designing and conducting experiments, ensuring that the results are reliable and valid. By carefully controlling for extraneous variables that may affect the outcome of the study, experimental design allows researchers to isolate the effect of the independent variable(s) on the dependent variable(s), and to minimize the influence of other factors that may confound the results.

Experimental design also allows researchers to generalize their findings to the larger population from which the sample was drawn. By randomly selecting participants and using statistical techniques to analyze the data, researchers can make inferences about the larger population with a high degree of confidence.

Overall, the purpose of experimental design is to provide a rigorous, systematic, and scientific method for testing hypotheses and establishing cause-and-effect relationships between variables. Experimental design is a powerful tool for advancing scientific knowledge and informing evidence-based practice in various fields, including psychology, biology, medicine, engineering, and social sciences.

Advantages of Experimental Design

Experimental design offers several advantages in research. Here are some of the main advantages:

- Control over extraneous variables: Experimental design allows researchers to control for extraneous variables that may affect the outcome of the study. By manipulating the independent variable and holding all other variables constant, researchers can isolate the effect of the independent variable on the dependent variable.

- Establishing causality: Experimental design allows researchers to establish causality by manipulating the independent variable and observing its effect on the dependent variable. This allows researchers to determine whether changes in the independent variable cause changes in the dependent variable.

- Replication : Experimental design allows researchers to replicate their experiments to ensure that the findings are consistent and reliable. Replication is important for establishing the validity and generalizability of the findings.

- Random assignment: Experimental design often involves randomly assigning participants to conditions. This helps to ensure that individual differences between participants are evenly distributed across conditions, which increases the internal validity of the study.

- Precision : Experimental design allows researchers to measure variables with precision, which can increase the accuracy and reliability of the data.

- Generalizability : A well-designed experiment can increase the generalizability of its findings, provided the sample is representative of the target population. Controlling for extraneous variables and using random assignment mainly strengthen internal validity, while representative sampling and replication in other settings increase the likelihood that the findings will apply to other populations and contexts.

Limitations of Experimental Design

Experimental design has some limitations that researchers should be aware of. Here are some of the main limitations:

- Artificiality : Experimental design often involves creating artificial situations that may not reflect real-world situations. This can limit the external validity of the findings, or the extent to which the findings can be generalized to real-world settings.

- Ethical concerns: Some experimental designs may raise ethical concerns, particularly if they involve manipulating variables that could cause harm to participants or if they involve deception.

- Participant bias : Participants in experimental studies may modify their behavior in response to the experiment, which can lead to participant bias.

- Limited generalizability: The conditions of the experiment may not reflect the complexities of real-world situations. As a result, the findings may not be applicable to all populations and contexts.

- Cost and time : Experimental design can be expensive and time-consuming, particularly if the experiment requires specialized equipment or if the sample size is large.

- Researcher bias : Researchers may unintentionally bias the results of the experiment if they have expectations or preferences for certain outcomes.

- Lack of feasibility : Experimental design may not be feasible in some cases, particularly if the research question involves variables that cannot be manipulated or controlled.

About the author

Muhammad Hassan

Researcher, Academic Writer, Web developer


5 Research design

Research design is a comprehensive plan for data collection in an empirical research project. It is a ‘blueprint’ for empirical research aimed at answering specific research questions or testing specific hypotheses, and must specify at least three processes: the data collection process, the instrument development process, and the sampling process. The instrument development and sampling processes are described in the next two chapters, and the data collection process—which is often loosely called ‘research design’—is introduced in this chapter and is described in further detail in Chapters 9–12.

Broadly speaking, data collection methods can be grouped into two categories: positivist and interpretive. Positivist methods, such as laboratory experiments and survey research, are aimed at theory (or hypotheses) testing, while interpretive methods, such as action research and ethnography, are aimed at theory building. Positivist methods employ a deductive approach to research, starting with a theory and testing theoretical postulates using empirical data. In contrast, interpretive methods employ an inductive approach that starts with data and tries to derive a theory about the phenomenon of interest from the observed data. Often, these methods are incorrectly equated with quantitative and qualitative research. Quantitative and qualitative methods refer to the type of data being collected (quantitative data involve numeric scores, metrics, and so on, while qualitative data include interviews, observations, and so forth) and analysed (i.e., using quantitative techniques such as regression or qualitative techniques such as coding). Positivist research uses predominantly quantitative data, but can also use qualitative data. Interpretive research relies heavily on qualitative data, but can sometimes benefit from including quantitative data as well. Sometimes, joint use of qualitative and quantitative data may help generate unique insight into a complex social phenomenon that is not available from either type of data alone, and hence, mixed-mode designs that combine qualitative and quantitative data are often highly desirable.

Key attributes of a research design

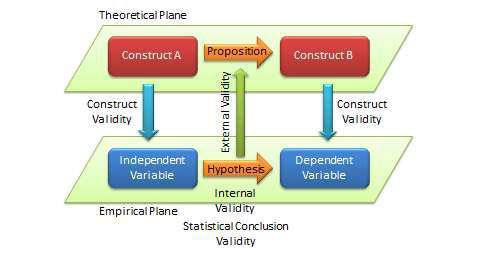

The quality of research designs can be defined in terms of four key design attributes: internal validity, external validity, construct validity, and statistical conclusion validity.

Internal validity, also called causality, examines whether the observed change in a dependent variable is indeed caused by a corresponding change in a hypothesised independent variable, and not by variables extraneous to the research context. Causality requires three conditions: covariation of cause and effect (i.e., if cause happens, then effect also happens; if cause does not happen, effect does not happen), temporal precedence (cause must precede effect in time), and non-spuriousness (there is no plausible alternative explanation for the change, i.e., the observed association is not a spurious correlation). Certain research designs, such as laboratory experiments, are strong in internal validity by virtue of their ability to manipulate the independent variable (cause) via a treatment and observe the effect (dependent variable) of that treatment after a certain point in time, while controlling for the effects of extraneous variables. Other designs, such as field surveys, are poor in internal validity because they cannot manipulate the independent variable (cause), and because cause and effect are measured at the same point in time. Simultaneous measurement defeats temporal precedence, making it equally likely that the expected effect influenced the expected cause rather than the reverse. Although higher in internal validity compared to other methods, laboratory experiments are by no means immune to threats to internal validity, and are susceptible to history, testing, instrumentation, regression, and other threats that are discussed later in the chapter on experimental designs. Nonetheless, different research designs vary considerably in their respective level of internal validity.
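The third condition for causality can be illustrated with a small simulation. The sketch below is only an illustration under stated assumptions: the variable names (`z`, `x`, `y`), the noise levels, and the sample size are all hypothetical choices, not values from any study. An extraneous variable `z` drives both `x` and `y`, while `x` has no effect on `y` at all, yet the two still covary strongly.

```python
import math
import random


def pearson(a, b):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((u - mean_a) * (v - mean_b) for u, v in zip(a, b))
    var_a = sum((u - mean_a) ** 2 for u in a)
    var_b = sum((v - mean_b) ** 2 for v in b)
    return cov / math.sqrt(var_a * var_b)


rng = random.Random(42)  # fixed seed so the illustration is repeatable
n = 5000

# Hypothetical extraneous variable that influences both measures.
z = [rng.gauss(0, 2) for _ in range(n)]
# x and y each depend on z plus independent noise; x never enters y.
x = [zi + rng.gauss(0, 1) for zi in z]
y = [zi + rng.gauss(0, 1) for zi in z]

r_xy = pearson(x, y)
print(f"correlation between x and y: {r_xy:.2f}")
```

Covariation alone would suggest that `x` causes `y`, but the association here is produced entirely by `z`. A design that cannot rule out such a variable fails the non-spuriousness condition.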

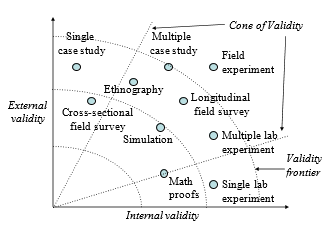

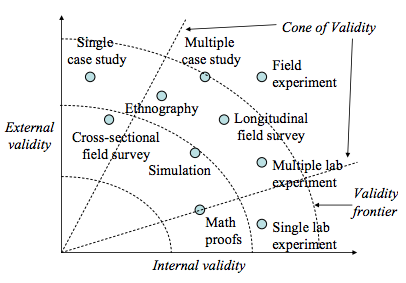

External validity or generalisability refers to whether the observed associations can be generalised from the sample to the population (population validity), or to other people, organisations, contexts, or time (ecological validity). For instance, can results drawn from a sample of financial firms in the United States be generalised to the population of financial firms (population validity) or to other firms within the United States (ecological validity)? Survey research, where data is sourced from a wide variety of individuals, firms, or other units of analysis, tends to have broader generalisability than laboratory experiments where treatments and extraneous variables are more controlled. The variation in internal and external validity for a wide range of research designs is shown in Figure 5.1.

Some researchers claim that there is a trade-off between internal and external validity: higher external validity can come only at the cost of internal validity and vice versa. But this is not always the case. Research designs such as field experiments, longitudinal field surveys, and multiple case studies have higher degrees of both internal and external validity. Personally, I prefer research designs that have reasonable degrees of both internal and external validity, i.e., those that fall within the cone of validity shown in Figure 5.1. But this should not suggest that designs outside this cone are any less useful or valuable. Researchers’ choice of design is ultimately a matter of their personal preference and competence, and the level of internal and external validity they desire.

Construct validity examines how well a given measurement scale is measuring the theoretical construct that it is expected to measure. Many constructs used in social science research such as empathy, resistance to change, and organisational learning are difficult to define, much less measure. For instance, construct validity must ensure that a measure of empathy is indeed measuring empathy and not compassion, which may be difficult since these constructs are somewhat similar in meaning. Construct validity is assessed in positivist research based on correlational or factor analysis of pilot test data, as described in the next chapter.

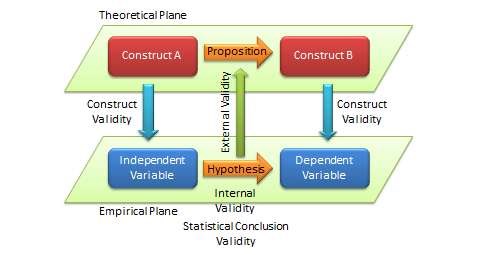

Statistical conclusion validity examines the extent to which conclusions derived using a statistical procedure are valid. For example, it examines whether the right statistical method was used for hypotheses testing, whether the variables used meet the assumptions of that statistical test (such as sample size or distributional requirements), and so forth. Because interpretive research designs do not employ statistical tests, statistical conclusion validity is not applicable for such analysis. The different kinds of validity and where they exist at the theoretical/empirical levels are illustrated in Figure 5.2.

Improving internal and external validity

The best research designs are those that can ensure high levels of internal and external validity. Such designs would guard against spurious correlations, inspire greater faith in the hypotheses testing, and ensure that the results drawn from a small sample are generalisable to the population at large. Controls are required to ensure internal validity (causality) of research designs, and can be accomplished in five ways: manipulation, elimination, inclusion, statistical control, and randomisation.

In manipulation, the researcher manipulates the independent variables in one or more levels (called ‘treatments’), and compares the effects of the treatments against a control group where subjects do not receive the treatment. Treatments may include a new drug or different dosage of drug (for treating a medical condition), a teaching style (for students), and so forth. This type of control is achieved in experimental or quasi-experimental designs, but not in non-experimental designs such as surveys. Note that if subjects cannot distinguish adequately between different levels of treatment manipulations, their responses across treatments may not be different, and manipulation would fail.

The elimination technique relies on eliminating extraneous variables by holding them constant across treatments, such as by restricting the study to a single gender or a single socioeconomic status. In the inclusion technique, the role of extraneous variables is considered by including them in the research design and separately estimating their effects on the dependent variable, such as via factorial designs where one factor is gender (male versus female). This technique allows for greater generalisability, but also requires substantially larger samples. In statistical control, extraneous variables are measured and used as covariates during the statistical testing process.
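Statistical control can be sketched in code. The example below is a minimal illustration, assuming a single measured covariate and a simple linear relationship; the data are simulated, and the names `covariate`, `cause`, and `effect` are hypothetical. Each variable is regressed on the covariate, and the leftover residuals are then correlated, which yields the partial correlation.

```python
import math
import random


def pearson(a, b):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((u - mean_a) * (v - mean_b) for u, v in zip(a, b))
    var_a = sum((u - mean_a) ** 2 for u in a)
    var_b = sum((v - mean_b) ** 2 for v in b)
    return cov / math.sqrt(var_a * var_b)


def residuals(target, control):
    """Residuals of target after a least-squares fit on one covariate."""
    n = len(target)
    mean_t, mean_c = sum(target) / n, sum(control) / n
    slope = (sum((c - mean_c) * (t - mean_t) for c, t in zip(control, target))
             / sum((c - mean_c) ** 2 for c in control))
    intercept = mean_t - slope * mean_c
    return [t - (intercept + slope * c) for c, t in zip(control, target)]


rng = random.Random(1)  # fixed seed so the illustration is repeatable
n = 5000

# Simulated data: the covariate drives both variables; cause has no
# direct effect on effect.
covariate = [rng.gauss(0, 2) for _ in range(n)]
cause = [c + rng.gauss(0, 1) for c in covariate]
effect = [c + rng.gauss(0, 1) for c in covariate]

raw_r = pearson(cause, effect)
partial_r = pearson(residuals(cause, covariate), residuals(effect, covariate))
print(f"raw correlation: {raw_r:.2f}, controlling for covariate: {partial_r:.2f}")
```

In this simulated case the raw correlation is large while the partial correlation is close to zero, because the covariate accounts for the whole association. With real data, this kind of control only works for extraneous variables that were actually measured.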

Finally, the randomisation technique is aimed at cancelling out the effects of extraneous variables through a process of random sampling, if it can be assured that these effects are of a random (non-systematic) nature. Two types of randomisation are: random selection, where a sample is selected randomly from a population, and random assignment, where subjects selected in a non-random manner are randomly assigned to treatment groups.
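The difference between the two types of randomisation is easy to see in code. The following is a minimal sketch using Python's standard `random` module; the function names, the population of 200 labelled people, and the sample size of 40 are hypothetical choices made for illustration.

```python
import random


def random_selection(population, sample_size, rng):
    """Draw a simple random sample, without replacement, from the population."""
    return rng.sample(population, sample_size)


def random_assignment(subjects, group_names, rng):
    """Shuffle the subjects, then deal them out to the groups in turn."""
    shuffled = list(subjects)
    rng.shuffle(shuffled)
    groups = {name: [] for name in group_names}
    for i, subject in enumerate(shuffled):
        groups[group_names[i % len(group_names)]].append(subject)
    return groups


rng = random.Random(7)  # fixed seed so the illustration is repeatable

# Hypothetical sampling frame of 200 people.
population = [f"person_{i:03d}" for i in range(200)]

# Random selection: every member of the population has an equal chance
# of entering the sample.
sample = random_selection(population, 40, rng)

# Random assignment: every sampled subject has an equal chance of
# landing in either group.
groups = random_assignment(sample, ["treatment", "control"], rng)
print(len(groups["treatment"]), len(groups["control"]))
```

Random assignment can be applied to any list of subjects, including a convenience sample that was not randomly selected. In that case only the second function would be used.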

Randomisation also ensures external validity, allowing inferences drawn from the sample to be generalised to the population from which the sample is drawn. Note that random assignment is mandatory when random selection is not possible because of resource or access constraints. However, generalisability across populations is harder to ascertain since populations may differ on multiple dimensions and you can only control for a few of those dimensions.

Popular research designs

As noted earlier, research designs can be classified into two categories—positivist and interpretive—depending on the goal of the research. Positivist designs are meant for theory testing, while interpretive designs are meant for theory building. Positivist designs seek generalised patterns based on an objective view of reality, while interpretive designs seek subjective interpretations of social phenomena from the perspectives of the subjects involved. Some popular examples of positivist designs include laboratory experiments, field experiments, field surveys, secondary data analysis, and case research, while examples of interpretive designs include case research, phenomenology, and ethnography. Note that case research can be used for theory building or theory testing, though not at the same time. Not all techniques are suited for all kinds of scientific research. Some techniques such as focus groups are best suited for exploratory research, others such as ethnography are best for descriptive research, and still others such as laboratory experiments are ideal for explanatory research. Following are brief descriptions of some of these designs. Additional details are provided in Chapters 9–12.

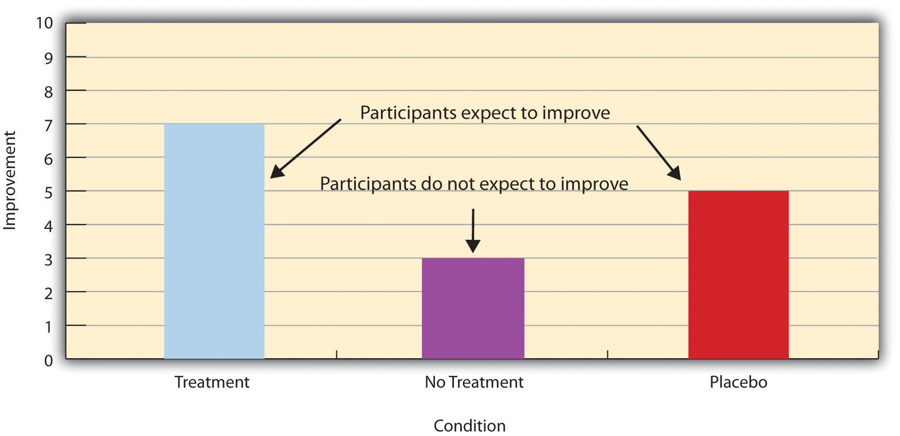

Experimental studies are those that are intended to test cause-effect relationships (hypotheses) in a tightly controlled setting by separating the cause from the effect in time, administering the cause to one group of subjects (the ‘treatment group’) but not to another group (the ‘control group’), and observing how the mean effects vary between subjects in these two groups. For instance, if we design a laboratory experiment to test the efficacy of a new drug in treating a certain ailment, we can get a random sample of people afflicted with that ailment, randomly assign them to one of two groups (treatment and control groups), and administer the drug to subjects in the treatment group while giving only a placebo (e.g., a sugar pill with no medicinal value) to subjects in the control group. More complex designs may include multiple treatment groups, such as low versus high dosage of the drug or combining drug administration with dietary interventions. In a true experimental design, subjects must be randomly assigned to each group. If random assignment is not followed, then the design becomes quasi-experimental. Experiments can be conducted in an artificial or laboratory setting such as at a university (laboratory experiments) or in field settings such as in an organisation where the phenomenon of interest is actually occurring (field experiments). Laboratory experiments allow the researcher to isolate the variables of interest and control for extraneous variables, which may not be possible in field experiments. Hence, inferences drawn from laboratory experiments tend to be stronger in internal validity, but those from field experiments tend to be stronger in external validity. Experimental data is analysed using quantitative statistical techniques. The primary strength of the experimental design is its strong internal validity due to its ability to isolate, control, and intensively examine a small number of variables, while its primary weakness is limited external generalisability since real life is often more complex (i.e., involving more extraneous variables) than contrived lab settings. Furthermore, if the researcher does not identify relevant extraneous variables ex ante and control for them, the lack of controls may hurt internal validity and may lead to spurious correlations.
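The comparison of mean effects between the two groups can be sketched as follows. This is only an illustration: the outcome scores are made-up numbers, and Welch's t statistic is one common choice for comparing two group means, not a method prescribed by this chapter.

```python
import math
import statistics


def welch_t(treatment, control):
    """Return the difference in group means and Welch's t statistic."""
    difference = statistics.mean(treatment) - statistics.mean(control)
    # Standard error built from each group's own sample variance, so the
    # two groups are not assumed to have equal variances.
    standard_error = math.sqrt(
        statistics.variance(treatment) / len(treatment)
        + statistics.variance(control) / len(control)
    )
    return difference, difference / standard_error


# Made-up outcome scores for five subjects in each group.
treatment_scores = [5, 6, 7, 8, 9]
control_scores = [1, 2, 3, 4, 5]

mean_difference, t_statistic = welch_t(treatment_scores, control_scores)
print(f"mean difference: {mean_difference}, t statistic: {t_statistic:.2f}")
```

A large t statistic indicates that the difference between the group means is large relative to the variability within the groups. Whether that difference can be attributed to the treatment still depends on the design, in particular on whether subjects were randomly assigned.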

Field surveys are non-experimental designs that do not control for or manipulate independent variables or treatments, but measure these variables and test their effects using statistical methods. Field surveys capture snapshots of practices, beliefs, or situations from a random sample of subjects in field settings through a survey questionnaire or, less frequently, through a structured interview. In cross-sectional field surveys, independent and dependent variables are measured at the same point in time (e.g., using a single questionnaire), while in longitudinal field surveys, dependent variables are measured at a later point in time than the independent variables. The strengths of field surveys are their external validity (since data is collected in field settings), their ability to capture and control for a large number of variables, and their ability to study a problem from multiple perspectives or using multiple theories. However, because of their non-temporal nature, internal validity (cause-effect relationships) is difficult to infer, and surveys may be subject to respondent biases (e.g., subjects may provide a ‘socially desirable’ response rather than their true response), which further hurt internal validity.