Enterprise Risk Management Case Studies: Heroes and Zeros

By Andy Marker | April 7, 2021

We’ve compiled more than 20 case studies of enterprise risk management programs that illustrate how companies can prevent significant losses and take risks with more confidence.

Included on this page, you’ll find case studies and examples by industry, case studies of major risk scenarios (and company responses), and examples of ERM successes and failures.

Enterprise Risk Management Examples and Case Studies

With enterprise risk management (ERM), companies assess potential risks that could derail strategic objectives and implement measures to minimize or avoid those risks. You can analyze examples (or case studies) of enterprise risk management to better understand the concept and how to properly execute it.

The collection of examples and case studies on this page illustrates common risk management scenarios by industry, principle, and degree of success. For a basic overview of enterprise risk management, including major types of risks, how to develop policies, and how to identify key risk indicators (KRIs), read “Enterprise Risk Management 101: Programs, Frameworks, and Advice from Experts.”

Enterprise Risk Management Framework Examples

An enterprise risk management framework is a system by which you assess and mitigate potential risks. Frameworks vary by industry, but most include roles and responsibilities, a methodology for risk identification, a risk appetite statement, risk prioritization, mitigation strategies, and monitoring and reporting.

To learn more about enterprise risk management and find examples of different frameworks, read our “Ultimate Guide to Enterprise Risk Management.”

Enterprise Risk Management Examples and Case Studies by Industry

Though every firm faces unique risks, those in the same industry often share similar risks. By understanding industry-wide common risks, you can create and implement response plans that offer your firm a competitive advantage.

Enterprise Risk Management Example in Banking

Toronto-headquartered TD Bank organizes its risk management around two pillars: a risk management framework and risk appetite statement. The enterprise risk framework defines the risks the bank faces and lays out risk management practices to identify, assess, and control risk. The risk appetite statement outlines the bank’s willingness to take on risk to achieve its growth objectives. Both pillars are overseen by the risk committee of the company’s board of directors.

Risk management frameworks were an important part of the International Organization for Standardization’s 31000 standard when it was first published in 2009, and the standard has been updated since then. It provides universal guidelines for risk management programs.

Risk management frameworks also resulted from the efforts of the Committee of Sponsoring Organizations of the Treadway Commission (COSO). The group was formed to fight corporate fraud and included risk management as a dimension.

Once TD completes the ERM framework, the bank moves on to the risk appetite statement.

The bank, which built a large U.S. presence through major acquisitions, determined that it will only take on risks that meet the following three criteria:

- The risk fits the company’s strategy, and TD can understand and manage those risks.

- The risk does not render the bank vulnerable to significant loss from a single risk.

- The risk does not expose the company to potential harm to its brand and reputation.
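TD's description stops at the three criteria, so the following Python sketch only illustrates the logic they imply. A proposed risk is within appetite only if every criterion passes. The `ProposedRisk` fields, the `within_appetite` function, and the idea of expressing "significant loss from a single risk" as a numeric limit are all hypothetical simplifications, not TD's actual method.

```python
from dataclasses import dataclass


@dataclass
class ProposedRisk:
    """Hypothetical summary of a risk the bank is considering taking on."""
    name: str
    fits_strategy: bool              # criterion 1: aligned with strategy
    understood_and_manageable: bool  # criterion 1: the bank can understand and manage it
    worst_case_loss: float           # criterion 2: estimated loss from this single risk
    threatens_brand: bool            # criterion 3: potential harm to brand and reputation


def within_appetite(risk: ProposedRisk, single_risk_loss_limit: float) -> bool:
    """Return True only if the risk passes all three screening criteria."""
    return (
        risk.fits_strategy
        and risk.understood_and_manageable
        and risk.worst_case_loss <= single_risk_loss_limit
        and not risk.threatens_brand
    )


if __name__ == "__main__":
    limit = 500.0  # hypothetical single-risk loss limit, in $ millions
    candidates = [
        ProposedRisk("regional mortgage portfolio", True, True, 120.0, False),
        ProposedRisk("concentrated commodity bet", True, True, 900.0, False),
    ]
    for risk in candidates:
        print(risk.name, within_appetite(risk, limit))
```

The second candidate fails only the single-risk test. That is the point of an all-must-pass screen: strong results on the other criteria cannot offset a failure on one of them.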

Some of the major risks the bank faces include strategic risk, credit risk, market risk, liquidity risk, operational risk, insurance risk, capital adequacy risk, regulatory risk, and reputation risk. Managers detail these categories in a risk inventory.

The risk framework and appetite statement, which are tracked on a dashboard against metrics such as capital adequacy and credit risk, are reviewed annually.

TD uses a three lines of defense (3LOD) strategy, an approach widely favored by ERM experts, to guard against risk. The three lines are as follows:

- Business units and corporate policies that create controls, as well as manage and monitor risk

- Standards and governance that provide oversight and review of risks and compliance with the risk appetite and framework

- Internal audits that provide independent checks and verification that risk-management procedures are effective

Enterprise Risk Management Example in Pharmaceuticals

Drug companies’ risks include threats around product quality and safety, regulatory action, and consumer trust. To avoid these risks, ERM experts emphasize the importance of making sure that strategic goals do not conflict.

For Britain’s GlaxoSmithKline, such a conflict led to a breakdown in risk management, among other issues. In the early 2000s, the company was striving to increase sales and profitability while also ensuring safe and effective medicines. One risk the company faced was a failure to meet current good manufacturing practices (CGMP) at its plant in Cidra, Puerto Rico.

CGMP includes implementing oversight and controls of manufacturing, as well as managing the risk and confirming the safety of raw materials and finished drug products. Noncompliance with CGMP can result in escalating consequences, ranging from warnings to recalls to criminal prosecution.

A GSK subsidiary pleaded guilty and paid $750 million in 2010 to resolve U.S. charges related to drugs made at the Cidra plant, which the company later closed. A fired GSK quality manager alerted regulators and filed a whistleblower lawsuit in 2004. In announcing the settlement, the U.S. Department of Justice said the plant had a history of bacterial contamination and that multiple drugs made there in the early 2000s violated safety standards.

According to the whistleblower, GSK’s ERM process failed in several respects to act on signs of noncompliance with CGMP. The company received warning letters from the U.S. Food and Drug Administration in 2001 about the plant’s practices but did not resolve the issues.

Additionally, the company didn’t act on the quality manager’s compliance report, which advised GSK to close the plant for two weeks to fix the problems and notify the FDA. According to court filings, plant staff merely skimmed rejected products and sold them on the black market. They also scraped by hand the inside of an antibiotic tank to get more product and, in so doing, introduced bacteria into the product.

Enterprise Risk Management Example in Consumer Packaged Goods

Mars Inc., an international candy and food company, developed an ERM process. The company piloted and deployed the initiative through workshops with geographic, product, and functional teams from 2003 to 2012.

Driven by a desire to frame risk as an opportunity and to work within the company’s decentralized structure, Mars created a process that asked participants to identify potential risks and vote on which had the highest probability. The teams listed risk mitigation steps, then ranked and color-coded them according to probability of success.

Larry Warner, a Mars risk officer at the time, illustrated this process in a case study. An initiative to increase direct-to-consumer shipments by 12 percent was colored green, indicating a 75 percent or greater probability of achievement. The initiative to bring a new plant online by the end of Q3 was coded red, meaning less than a 50 percent probability of success.

The company’s results were hurt by a surprise at one operating unit that stemmed from a risk coded red in a unit workshop. Executives had accepted that some red risks were to be expected, but they decided that when a unit encountered a red issue, it must be communicated upward as soon as it was identified. This became a rule.
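The Mars coding rules can be expressed as a small Python sketch. The green threshold (75 percent or greater) and the red threshold (below 50 percent) come from Warner's example. Treating everything in between as yellow is an assumption, and the function names and sample probabilities are hypothetical. The `to_escalate` helper mirrors the rule that red issues go upward as soon as they are identified.

```python
def risk_color(probability_of_success: float) -> str:
    """Map an estimated probability of success (0 to 1) to a traffic-light color."""
    if not 0.0 <= probability_of_success <= 1.0:
        raise ValueError("probability must be between 0 and 1")
    if probability_of_success >= 0.75:
        return "green"   # 75 percent or greater, per the Mars example
    if probability_of_success >= 0.50:
        return "yellow"  # assumed middle band; not stated in the case study
    return "red"         # below 50 percent


def to_escalate(initiatives: dict) -> list:
    """Return initiatives coded red, which must be communicated upward immediately."""
    return [name for name, p in initiatives.items() if risk_color(p) == "red"]


if __name__ == "__main__":
    # Hypothetical probability estimates for the two initiatives in the example
    workshop = {
        "grow direct-to-consumer shipments 12%": 0.80,
        "bring new plant online by end of Q3": 0.40,
    }
    for name, p in workshop.items():
        print(f"{name}: {risk_color(p)}")
    print("escalate now:", to_escalate(workshop))
```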

This process led to the creation of an ERM dashboard that listed initiatives in priority order, with the profile of each risk faced in the quarter, the risk profile trend, and a comment column for a year-end view.

According to Warner, the key factors of success for ERM at Mars are as follows:

- The initiative focused on achieving operational and strategic objectives rather than compliance, which refers to adhering to established rules and regulations.

- The program evolved, often based on requests from business units, and incorporated continuous improvement.

- The ERM team did not overpromise. It set realistic objectives.

- The ERM team periodically surveyed business units, management teams, and board advisers.

Enterprise Risk Management Example in Retail

Walmart is the world’s biggest retailer. As such, the company understands that its risk makeup is complex, given the geographic spread of its operations and its large number of stores, vast supply chain, and high profile as an employer and buyer of goods.

In the 1990s, the company sought a simplified strategy for assessing risk and created an enterprise risk management plan with five steps founded on these four questions:

- What are the risks?

- What are we going to do about them?

- How will we know if we are raising or decreasing risk?

- How will we show shareholder value?

The process follows these five steps:

- Risk Identification: Senior Walmart leaders meet in workshops to identify risks, which are then plotted on a graph of probability vs. impact. Doing so helps to prioritize the biggest risks. The executives then look at seven risk categories (both internal and external): legal/regulatory, political, business environment, strategic, operational, financial, and integrity. Many ERM pros use risk registers to evaluate and determine the priority of risks. You can download templates that help correlate risk probability and potential impact in “Free Risk Register Templates.”

- Risk Mitigation: Teams that include operational staff in the relevant area meet. They inventory the existing procedures for addressing the risks and determine whether those procedures are effective.

- Action Planning: A project team identifies and implements next steps over the following several months.

- Performance Metrics: The group develops metrics to measure the impact of the changes. They also track actual performance against goals over time.

- Return on Investment and Shareholder Value: In this step, the group assesses the changes’ impact on sales and expenses to determine if the moves improved shareholder value and ROI.
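To illustrate the prioritization in the first step, the Python sketch below ranks risks by the product of probability and impact. This is one common way to read a probability-vs.-impact plot. The scoring rule, the 1-to-5 impact scale, and the sample risks are assumptions for illustration, since the case study does not say how Walmart combined the two dimensions. Only the seven category names come from the text.

```python
from typing import List, NamedTuple

# The seven risk categories named in the Walmart example
CATEGORIES = {
    "legal/regulatory", "political", "business environment",
    "strategic", "operational", "financial", "integrity",
}


class Risk(NamedTuple):
    name: str
    category: str
    probability: float  # estimated likelihood, 0 to 1
    impact: int         # hypothetical scale: 1 (minor) to 5 (severe)


def prioritize(risks: List[Risk]) -> List[Risk]:
    """Sort risks from highest to lowest probability-times-impact score."""
    for risk in risks:
        if risk.category not in CATEGORIES:
            raise ValueError(f"unknown category: {risk.category}")
    return sorted(risks, key=lambda r: r.probability * r.impact, reverse=True)


if __name__ == "__main__":
    register = [
        Risk("new labeling rule", "legal/regulatory", 0.7, 2),
        Risk("key supplier disruption", "operational", 0.4, 5),
        Risk("currency swing", "financial", 0.5, 3),
    ]
    for risk in prioritize(register):
        print(f"{risk.probability * risk.impact:.1f}  {risk.name} ({risk.category})")
```

With these sample figures, the supplier disruption ranks first even though the labeling rule is more likely, because its impact is much larger.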

To develop your own risk management planning, you can download a customizable template in “Risk Management Plan Templates.”

Enterprise Risk Management Example in Agriculture

United Grain Growers (UGG), a Canadian grain distributor that is now part of Glencore Ltd., was hailed as an ERM innovator and became the subject of business school case studies for its enterprise risk management program. The program addressed the weather risks facing the business, which were significant because crop volume drove UGG’s revenue and profits.

In the late 1990s, UGG identified its major unaddressed risks. Using almost a century of data, risk analysts found that extreme weather events occurred 10 times as frequently as previously believed. The company worked with its insurance broker and the Swiss Re Group on a solution that added grain-volume risk (resulting from weather fluctuations) to its other insured risks, such as property and liability, in an integrated program.

The result was insurance that protected grain-handling earnings, which made up half of UGG’s gross profits. The greater financial stability significantly enhanced the firm’s ability to achieve its strategic objectives.

Since then, the number and types of instruments to manage weather-related risks have multiplied rapidly. For example, weather derivatives began trading over the counter in 1997, and exchange-traded weather futures and options followed. The Chicago Mercantile Exchange now offers weather futures contracts on 12 U.S. and international cities.

Weather derivatives are linked to climate factors such as rainfall or temperature, and they hedge different kinds of risks than do insurance. These risks are much more common (e.g., a cooler-than-normal summer) than the earthquakes and floods that insurance typically covers. And the holders of derivatives do not have to incur any damage to collect on them.
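The following Python sketch shows how an index-based weather payout works. It uses cooling degree days (CDD), the conventional U.S. measure of how far each day's average temperature exceeds 65°F, and a put option that pays when a summer turns out cooler than a strike level. The strike, tick value, and temperatures are hypothetical, and real exchange contracts have their own specifications. The payout depends only on the temperature record, not on any loss the holder suffers.

```python
BASE_TEMP_F = 65.0  # conventional U.S. base temperature for degree days


def cooling_degree_days(daily_avg_temps_f):
    """Sum each day's excess of average temperature over 65 F (cooler days count as 0)."""
    return sum(max(0.0, t - BASE_TEMP_F) for t in daily_avg_temps_f)


def cdd_put_payout(daily_avg_temps_f, strike, tick_value):
    """Pay tick_value per degree day by which the CDD index falls short of the strike.

    No proof of damage is needed: only the temperature record enters the calculation.
    """
    index = cooling_degree_days(daily_avg_temps_f)
    return tick_value * max(0.0, strike - index)


if __name__ == "__main__":
    # Hypothetical four-day window; real contracts cover a month or a season
    temps = [70.0, 72.0, 64.0, 80.0]
    print(cooling_degree_days(temps))  # 5 + 7 + 0 + 15 = 27 degree days
    print(cdd_put_payout(temps, strike=40, tick_value=1000))
```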

These weather-linked instruments have found a wider audience than anticipated, including retailers that worry about freak storms decimating Christmas sales, amusement park operators fearing rainy summers will keep crowds away, and energy companies needing to hedge demand for heating and cooling.

This area of ERM continues to evolve because weather and crop insurance are not enough to address all the risks that agriculture faces. Arbol, Inc. estimates that more than $1 trillion of agricultural risk is uninsured. As such, it is launching a blockchain-based platform that offers contracts (customized by location and risk parameters) with payouts based on weather data. These contracts can cover risks associated with niche crops and small growing areas.
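The article does not give Arbol's contract terms, so the following is only a generic Python sketch of a parametric payout of the kind described. There is no claims process; the payout is computed directly from weather data. The trigger-and-exit structure used here is an assumption: the payout begins when rainfall drops below a trigger level and reaches the full limit at an exit level. This is a common parametric design, and every name and number below is hypothetical.

```python
def rainfall_payout(season_rain_mm: float, trigger_mm: float,
                    exit_mm: float, limit: float) -> float:
    """Linear drought payout based only on measured seasonal rainfall.

    Pays nothing at or above trigger_mm, the full limit at or below exit_mm,
    and a proportional amount in between.
    """
    if exit_mm >= trigger_mm:
        raise ValueError("exit level must be below trigger level")
    if season_rain_mm >= trigger_mm:
        return 0.0
    if season_rain_mm <= exit_mm:
        return float(limit)
    shortfall = (trigger_mm - season_rain_mm) / (trigger_mm - exit_mm)
    return limit * shortfall


if __name__ == "__main__":
    # Hypothetical contract for a small growing area
    for rain in (320.0, 250.0, 150.0):
        print(rain, rainfall_payout(rain, trigger_mm=300.0, exit_mm=200.0, limit=50_000))
```

Because the payout is a formula applied to measured data, a contract like this can be parameterized for a single location or crop. That flexibility is what lets such products reach niche crops and small growing areas.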

Enterprise Risk Management Example in Insurance

Switzerland’s Zurich Insurance Group understands that risk is inherent for insurers and seeks to practice disciplined risk-taking, within a predetermined risk tolerance.

The global insurer’s enterprise risk management framework aims to protect capital, liquidity, earnings, and reputation. Governance serves as the basis for risk management, and the framework lays out responsibilities for taking, managing, monitoring, and reporting risks.

The company uses a proprietary process called Total Risk Profiling (TRP) to monitor internal and external risks to its strategy and financial plan. TRP assesses risk on the basis of severity and probability, and helps define and implement mitigating moves.

Zurich’s risk appetite defines its tolerance in terms of holding enough capital to achieve an AA rating from rating agencies. To measure this, the company uses its own Zurich economic capital model, referred to as Z-ECM. The model compares the company’s risk profile against that tolerance by expressing available capital as a percentage of capital at risk.

To maintain the AA rating, the company aims to hold capital between 100 and 120 percent of capital at risk. Above 140 percent is considered overcapitalized (therefore at risk of throttling growth), and under 90 percent is below risk tolerance (meaning the risk is too high). On either side of 100 to 120 percent (90 to 100 percent and 120 to 140 percent), the insurer considers taking mitigating action.
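The Z-ECM bands above translate directly into a lookup. In this Python sketch, the ratio is available capital divided by capital at risk, expressed as a percentage. The classification of the exact boundary values (90, 100, 120, and 140 percent) is an assumption, as are the function names and sample figures.

```python
def zecm_ratio_pct(available_capital: float, capital_at_risk: float) -> float:
    """Capital held as a percentage of capital at risk."""
    if capital_at_risk <= 0:
        raise ValueError("capital at risk must be positive")
    return 100.0 * available_capital / capital_at_risk


def capital_zone(ratio_pct: float) -> str:
    """Classify a Z-ECM-style ratio into the bands described for Zurich."""
    if ratio_pct < 90:
        return "below risk tolerance"        # risk too high
    if ratio_pct < 100:
        return "consider mitigating action"  # 90 to 100 percent
    if ratio_pct <= 120:
        return "target range"                # 100 to 120 percent
    if ratio_pct <= 140:
        return "consider mitigating action"  # 120 to 140 percent
    return "overcapitalized"                 # above 140 percent: may throttle growth


if __name__ == "__main__":
    # Hypothetical figures, e.g., in $ billions
    ratio = zecm_ratio_pct(available_capital=30.0, capital_at_risk=25.0)
    print(f"{ratio:.0f}% -> {capital_zone(ratio)}")
```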

Zurich’s assessment of risk and the nature of those risks play a major role in determining how much capital regulators require the business to hold. A popular tool to assess risk is the risk matrix, and you can find a variety of templates in “Free, Customizable Risk Matrix Templates.”

In 2020, Zurich found that its biggest exposures were market risk, such as falling asset valuations and interest-rate risk; insurance risk, such as big payouts for covered customer losses, which it hedges through diversification and reinsurance; credit risk in assets it holds and receivables; and operational risks, such as internal process failures and external fraud.

Enterprise Risk Management Example in Technology

Financial software maker Intuit strengthened its enterprise risk management program in successive phases, according to a case study by former Chief Risk Officer Janet Nasburg.

The program is founded on the following five core principles:

- Use a common risk framework across the enterprise.

- Assess risks on an ongoing basis.

- Focus on the most important risks.

- Clearly define accountability for risk management.

- Commit to continuous improvement of performance measurement and monitoring.

ERM programs grow according to a maturity model, and as capability rises, the shareholder value from risk management becomes more visible and important.

The maturity phases include the following:

- Ad hoc risk management addresses a specific problem when it arises.

- Targeted or initial risk management occurs in silos, with different parts of the organization holding different understandings of what constitutes risk.

- Integrated or repeatable risk management puts in place an organization-wide framework for risk assessment and response.

- Intelligent or managed risk management coordinates risk management across the business, using common tools.

- Risk leadership incorporates risk management into strategic decision-making.

Intuit emphasizes using key risk indicators (KRIs) to understand risks, along with key performance indicators (KPIs) to gauge the effectiveness of risk management.

Early in its ERM journey, Intuit measured performance on risk management process participation and risk assessment impact. For participation, the targeted rate was 80 percent of executive management and business-line leaders. This helped benchmark risk awareness and current risk management, at a time when ERM at the company was not mature.

For risk assessment impact, Intuit conducted an annual risk assessment at the corporate and business-line levels, plotting risks so that the most likely and most impactful ones appeared in the upper-right quadrant. Doing so focused attention on these risks and helped business leaders understand their impact on performance toward strategic objectives.
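The following is a minimal Python sketch of the quadrant plot. It assumes likelihood on the horizontal axis and impact on the vertical axis, both scored from 1 to 5, with scores above 3 treated as high. The scales, cutoff, and sample risks are hypothetical; the case study does not specify how Intuit scored risks.

```python
def quadrant(likelihood: int, impact: int, cutoff: int = 3) -> str:
    """Place a risk on a likelihood (x) vs. impact (y) grid; scores above cutoff are high."""
    for score in (likelihood, impact):
        if not 1 <= score <= 5:
            raise ValueError("scores must be between 1 and 5")
    vertical = "upper" if impact > cutoff else "lower"
    horizontal = "right" if likelihood > cutoff else "left"
    return f"{vertical}-{horizontal}"


def focus_risks(assessed):
    """Names of risks in the upper-right (most likely, most impactful) quadrant."""
    return [name for name, likelihood, impact in assessed
            if quadrant(likelihood, impact) == "upper-right"]


if __name__ == "__main__":
    # Hypothetical (name, likelihood, impact) scores
    assessment = [
        ("peak-season outage", 4, 5),
        ("data center flood", 1, 5),
        ("minor UI defects", 5, 1),
    ]
    print(focus_risks(assessment))
```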

In the company’s second phase of ERM, Intuit turned its attention to building risk management capacity and sought to ensure that risk management activities addressed the most important risks. The company evaluated performance using color-coded status symbols (red, yellow, green) to indicate risk trend and progress on risk mitigation measures.

In its third phase, Intuit moved to actively monitoring the most important risks and ensuring that leaders modified their strategies to manage risks and take advantage of opportunities. An executive dashboard uses KRIs, KPIs, an overall risk rating, and red-yellow-green coding. The board of directors regularly reviews this dashboard.
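Red-yellow-green coding on a KRI dashboard usually comes from thresholds set for each indicator. The Python sketch below assumes that higher KRI values are worse. It rolls the indicators up into an overall rating by taking the worst individual status, which is one simple convention among several. The thresholds, KRI names, and roll-up rule are hypothetical, not Intuit's.

```python
from enum import IntEnum


class Status(IntEnum):
    GREEN = 0
    YELLOW = 1
    RED = 2


def kri_status(value: float, yellow_at: float, red_at: float) -> Status:
    """Rate a KRI where higher values mean more risk."""
    if red_at < yellow_at:
        raise ValueError("red threshold must not be below yellow threshold")
    if value >= red_at:
        return Status.RED
    if value >= yellow_at:
        return Status.YELLOW
    return Status.GREEN


def overall_rating(statuses) -> Status:
    """Roll up the dashboard by reporting the worst individual status."""
    return max(statuses, default=Status.GREEN)


if __name__ == "__main__":
    # Hypothetical KRIs: (current value, yellow threshold, red threshold)
    kris = {
        "critical vulnerabilities past due": (3, 5, 10),
        "key-staff attrition rate (%)": (12, 10, 15),
    }
    statuses = {name: kri_status(*vals) for name, vals in kris.items()}
    for name, status in statuses.items():
        print(f"{name}: {status.name}")
    print("overall:", overall_rating(statuses.values()).name)
```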

Over this evolution, the company has moved from narrow, tactical risk management to holistic, strategic, and long-term ERM.

Enterprise Risk Management Case Studies by Principle

ERM veterans agree that in addition to KPIs and KRIs, other principles are equally important to follow. Below, you’ll find examples of enterprise risk management programs by principle.

ERM Principle #1: Make Sure Your Program Aligns with Your Values

Raytheon Case Study

U.S. defense contractor Raytheon states that its highest priority is delivering on its commitment to provide ethical business practices and abide by anti-corruption laws.

Raytheon backs up this statement through its ERM program. Among other measures, the company performs an annual risk assessment for each function, including the anti-corruption group under the Chief Ethics and Compliance Officer. In addition, Raytheon asks 70 of its sites to perform an anti-corruption self-assessment each year to identify gaps and risks. From there, a compliance team tracks improvement actions.

Every quarter, the company surveys 600 staff members who may face higher anti-corruption risks, such as the potential for bribes. The survey asks them to report any potential issues in the past quarter.

Also on a quarterly basis, the finance and internal controls teams review higher-risk payments, such as donations and gratuities, to confirm accuracy and compliance. Oversight and compliance teams add other checks, and they continuously update a risk-based audit plan.

ERM Principle #2: Embrace Diversity to Reduce Risk

State Street Global Advisors Case Study

In 2016, the asset management firm State Street Global Advisors introduced measures to increase gender diversity in its leadership as a way of reducing portfolio risk, among other goals.

The company relied on research that showed that companies with more women senior managers had a better return on equity, reduced volatility, and fewer governance problems such as corruption and fraud.

Among the initiatives was a campaign to influence companies where State Street had invested, in order to increase female membership on their boards. State Street also developed an investment product that tracks the performance of companies with the highest level of senior female leadership relative to peers in their sector.

In 2020, the company announced some of the results of its effort: of the 1,384 companies it had targeted, 681 (about 49 percent) added at least one female director.

ERM Principle #3: Do Not Overlook Resource Risks

Infosys Case Study

India-based technology consulting company Infosys, which employs more than 240,000 people, has long recognized the risk that water shortages pose to its operations.

India’s rapidly growing population and development have increased the risk of water scarcity. A 2020 report by the World Wide Fund for Nature said 30 cities in India faced the risk of severe water scarcity over the next three decades.

Infosys has dozens of facilities in India and considers water to be a significant short-term risk. At its campuses, the company uses the water for cooking, drinking, cleaning, restrooms, landscaping, and cooling. Water shortages could halt Infosys operations and prevent it from completing customer projects and reaching its performance objectives.

In an enterprise risk assessment example, Infosys’ ERM team conducts corporate water-risk assessments while sustainability teams produce detailed water-risk assessments for individual locations, according to a report by the World Business Council for Sustainable Development.

The company uses the COSO ERM framework to respond to the risks and decide whether to accept, avoid, reduce, or share these risks. The company uses root-cause analysis (which focuses on identifying underlying causes rather than symptoms) and the site assessments to plan steps to reduce risks.

Infosys has implemented various water conservation measures, such as water-efficient fixtures and water recycling, rainwater collection and use, recharging aquifers, underground reservoirs to hold five days of water supply at locations, and smart-meter usage monitoring. Infosys’ ERM team tracks metrics for per-capita water consumption, along with rainfall data, availability and cost of water by tanker trucks, and water usage from external suppliers.

In the 2020 fiscal year, the company reported a nearly 64 percent drop in per-capita water consumption by its workforce from the 2008 fiscal year.

The business advantages of this risk management include an ability to open locations where water shortages may preclude competitors, and being able to maintain operations during water scarcity, protecting profitability.

ERM Principle #4: Fight Silos for Stronger Enterprise Risk Management

U.S. Government Case Study

The terrorist attacks of September 11, 2001, revealed that the U.S. government’s then-current approach to managing intelligence was not adequate to address the threats, and, by extension, neither was the government’s risk management procedure. Since the Cold War, sensitive information had been managed on a “need to know” basis that resulted in data silos.

In the case of 9/11, this meant that different parts of the government knew some relevant intelligence that could have helped prevent the attacks. But no one had the opportunity to put the information together and see the whole picture. A congressional commission determined there were 10 lost operational opportunities to derail the plot. Silos existed between law enforcement and intelligence, as well as between and within agencies.

After the attacks, the government moved toward greater information sharing and collaboration. Based on a task force’s recommendations, data moved from a centralized network to a distributed model, and social networking tools now allow colleagues throughout the government to connect. Staff began working across agency lines more often.

Enterprise Risk Management Examples by Scenario

While some scenarios are too unlikely to receive high-priority status, low-probability risks are still worth running through the ERM process. Robust risk management creates a culture and response capacity that better positions a company to deal with a crisis.

In the following enterprise risk examples, you will find scenarios and details of how organizations manage the risks they face.

Scenario: ERM and the Global Pandemic

While most businesses do not have the resources to do in-depth ERM planning for the rare occurrence of a global pandemic, companies with a risk-aware culture will be at an advantage if a pandemic does hit.

These businesses already have processes in place to escalate trouble signs for immediate attention and an ERM team or leader monitoring the threat environment. A strong ERM function gives clear and effective guidance that helps the company respond.

A report by Vodafone found that companies identified as “future ready” fared better in the COVID-19 pandemic. The attributes of future-ready businesses have a lot in common with those of companies that excel at ERM. These include viewing change as an opportunity; having detailed business strategies that are documented, funded, and measured; working to understand the forces that shape their environments; having roadmaps in place for technological transformation; and being able to react more quickly than competitors.

Only about 20 percent of companies in the Vodafone study met the definition of “future ready.” But 54 percent of these firms had a fully developed and tested business continuity plan, compared to 30 percent of all businesses. And 82 percent felt their continuity plans worked well during the COVID-19 crisis. Nearly 50 percent of all businesses reported decreased profits, while 30 percent of future-ready organizations saw profits rise.

Scenario: ERM and the Economic Crisis

The 2008 economic crisis in the United States resulted from the domino effect of rising interest rates, a collapse in housing prices, and a dramatic increase in foreclosures among mortgage borrowers with poor creditworthiness. This led to bank failures, a credit crunch, and layoffs, and the U.S. government had to rescue banks and other financial institutions to stabilize the financial system.

Some commentators said these events revealed the shortcomings of ERM because it did not prevent the banks’ mistakes or collapse. But Sim Segal, an ERM consultant and director of Columbia University’s ERM master’s degree program, analyzed how banks performed on 10 key ERM criteria.

Segal says a risk-management program that incorporates all 10 criteria has these characteristics:

- Risk management has an enterprise-wide scope.

- The program includes all risk categories: financial, operational, and strategic.

- The focus is on the most important risks, not all possible risks.

- Risk management is integrated across risk types.

- Aggregated metrics show risk exposure and appetite across the enterprise.

- Risk management incorporates decision-making, not just reporting.

- The effort balances risk and return management.

- There is a process for disclosure of risk.

- The program measures risk in terms of potential impact on company value.

- The focus of risk management is on the primary stakeholder, such as shareholders, rather than regulators or rating agencies.

In his book Corporate Value of Enterprise Risk Management, Segal concluded that most banks did not actually use ERM practices, which contributed to the financial crisis. He scored banks as failing on nine of the 10 criteria, giving them a passing grade only for focusing on the most important risks.
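Segal's assessment works like a pass/fail scorecard. The Python sketch below encodes the 10 criteria listed above, using shortened labels, and reproduces the tally he reported for banks: they passed only the criterion of focusing on the most important risks. Treating each criterion as a simple yes/no is a simplification for illustration, and the `score` function is hypothetical.

```python
from typing import Iterable, List, Tuple

# Short labels for Segal's 10 ERM criteria, in the order listed above
SEGAL_CRITERIA = (
    "enterprise-wide scope",
    "all risk categories",
    "focus on most important risks",
    "integrated across risk types",
    "aggregated exposure and appetite metrics",
    "used in decision-making",
    "balances risk and return",
    "risk disclosure process",
    "measured by impact on company value",
    "focus on primary stakeholder",
)


def score(passed: Iterable[str]) -> Tuple[int, List[str]]:
    """Return the number of criteria passed and the list of criteria failed."""
    passed_set = set(passed)
    unknown = passed_set - set(SEGAL_CRITERIA)
    if unknown:
        raise ValueError(f"unknown criteria: {sorted(unknown)}")
    failed = [c for c in SEGAL_CRITERIA if c not in passed_set]
    return len(passed_set), failed


if __name__ == "__main__":
    passed_count, failed = score({"focus on most important risks"})
    print(f"banks passed {passed_count} of {len(SEGAL_CRITERIA)}; failed {len(failed)}")
```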

Scenario: ERM and Technology Risk

The story of retailer Target’s failed expansion to Canada, where it shut down 133 loss-making stores in 2015, has been well documented. But one dimension that analysts have sometimes overlooked was Target’s handling of technology risk.

A case study by Canadian Business magazine traced some of the biggest issues to software and data-quality problems that dramatically undermined the Canadian launch.

As with other forms of ERM, technology risk management requires companies to ask what could go wrong, what the consequences would be, how they might prevent the risks, and how they should deal with the consequences.

But with its technology plan for Canada, Target did not heed risk warning signs.

In the United States, Target had custom systems for ordering products from vendors, processing items at warehouses, and distributing merchandise to stores quickly. But that software would need customization to work with the Canadian dollar, metric system, and French-language characters.

Target decided to go with new ERP software on an aggressive two-year timeline. As Target began ordering products for the Canadian stores in 2012, problems arose. Some items did not fit into shipping containers or on store shelves, and information needed for customs agents to clear imported items was not correct in Target's system.

Target found that its supply chain software data was full of errors. Product dimensions were in inches, not centimeters; height and width measurements were mixed up. An internal investigation showed that only about 30 percent of the data was accurate.

In an attempt to fix these errors, Target merchandisers spent a week double-checking with vendors up to 80 data points for each of the retailer’s 75,000 products. They discovered that the dummy data entered into the software during setup had not been altered. To make any corrections, employees had to send the new information to an office in India where staff would enter it into the system.

As the launch approached, the technology errors left the company vulnerable to stockouts. Few people understood how the system worked, and the point-of-sale checkout system did not function correctly. Soon after stores opened in 2013, consumers began complaining about empty shelves. Meanwhile, Target Canada distribution centers overflowed because forecasting software fed with poor data triggered excess orders.

The rushed launch compounded problems because it did not allow the company enough time to find solutions or alternative technology. While the retailer fixed some issues by the end of 2014, it was too late. Target Canada filed for bankruptcy protection in early 2015.

Scenario: ERM and Cybersecurity

System hacks and data theft are major worries for companies. But as a relatively new field, cyber-risk management faces unique hurdles.

For example, risk managers and information security officers have difficulty quantifying the likelihood and business impact of a cybersecurity attack. The rise of cloud-based software exposes companies to third-party risks that make these projections even more difficult to calculate.

As the field evolves, risk managers say it’s important for IT security officers to look beyond technical issues, such as the need to patch a vulnerability, and consider business impacts more broadly so they can perform a cost-benefit analysis of risk mitigation. Frameworks such as the Risk Management Framework for Information Systems and Organizations by the National Institute of Standards and Technology can help.

Health insurer Aetna treats cybersecurity threats as part of operational risk within its ERM framework and calculates a daily risk score that it adjusts as the cyberthreat landscape changes.

Aetna studies threats from external actors through information sharing and analysis centers for the financial services and health industries. Aetna staff reverse-engineer malware to determine controls. The company says this type of activity helps ensure the resiliency of its business processes and greatly improves its ability to help protect member information.

For internal threats, Aetna uses models that compare current user behavior to past behavior and identify anomalies. (The company says it was the first organization to do this at scale across the enterprise.) Aetna gives staff permissions to networks and data based on what they need to perform their job. This segmentation restricts access to raw data and strengthens governance.
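The article does not describe how Aetna's models work, so the Python sketch below substitutes the simplest baseline technique. It compares a user's activity today with that user's own history and flags values more than three standard deviations above the mean (a one-sided z-score test). The metric (records accessed per day), the threshold, and the function name are hypothetical.

```python
from statistics import mean, stdev


def is_anomalous(history, today, threshold=3.0):
    """Flag today's value if it sits more than `threshold` standard deviations
    above this user's historical mean (one-sided z-score test)."""
    if len(history) < 2:
        return False  # too little history to establish a baseline
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        return today > mu  # perfectly steady history: any increase stands out
    return (today - mu) / sigma > threshold


if __name__ == "__main__":
    # Hypothetical records accessed per day by one employee over a week
    past_week = [20, 22, 19, 21, 23, 20, 22]
    print(is_anomalous(past_week, 23))   # within normal variation
    print(is_anomalous(past_week, 400))  # bulk access worth reviewing
```

Using each user's own baseline, rather than one company-wide limit, lets the same rule fit both heavy and light users of a system.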

Another risk initiative scans outgoing employee emails for data patterns, such as credit card or Social Security numbers. The system flags the email, and a security officer assesses it before the email is released.
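The source does not describe how Aetna's scanner works. As an illustration only, here is a minimal sketch of pattern-based scanning, assuming regular expressions for Social Security-style and card-like numbers plus a Luhn checksum to cut false positives on card matches. The patterns and the names `flag_email` and `luhn_valid` are hypothetical; commercial data-loss-prevention tools are far more thorough.

```python
import re

# Hypothetical patterns: SSN-style "123-45-6789" and 13-16 digits with optional spaces/dashes
SSN_PATTERN = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")
CARD_PATTERN = re.compile(r"\b(?:\d[ -]?){13,16}\b")


def luhn_valid(number: str) -> bool:
    """Return True if the digit string passes the Luhn checksum used by payment cards."""
    total = 0
    for i, ch in enumerate(reversed(number)):
        d = int(ch)
        if i % 2 == 1:  # double every second digit from the right
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def flag_email(body: str) -> list[str]:
    """Return the reasons, if any, to hold an outgoing email for a security officer."""
    reasons = []
    if SSN_PATTERN.search(body):
        reasons.append("possible SSN")
    for match in CARD_PATTERN.finditer(body):
        digits = re.sub(r"\D", "", match.group())  # strip spaces and dashes
        if 13 <= len(digits) <= 16 and luhn_valid(digits):
            reasons.append("possible card number")
            break
    return reasons


print(flag_email("Card: 4111 1111 1111 1111"))  # ['possible card number']
```

An empty list means the email can be released; a non-empty list corresponds to the "flag and hold for review" step described above.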

Examples of Poor Enterprise Risk Management

Case studies of failed enterprise risk management often highlight mistakes that managers could and should have spotted — and corrected — before a full-blown crisis erupted. The focus of these examples is often on determining why that did not happen.

ERM Case Study: General Motors

In 2014, General Motors recalled the first of what would become 29 million cars due to faulty ignition switches and paid compensation for 124 related deaths. GM knew of the problem for at least 10 years but did not act, the automaker later acknowledged. The company entered a deferred prosecution agreement and paid a $900 million penalty.

Pointing to the length of time the company failed to disclose the safety problem, ERM specialists say it shows the problem did not reside with a single department. “Rather, it reflects a failure to properly manage risk,” wrote Steve Minsky, a writer on ERM and CEO of an ERM software company, in Risk Management magazine.

“ERM is designed to keep all parties across the organization, from the front lines to the board to regulators, apprised of these kinds of problems as they become evident. Unfortunately, GM failed to implement such a program, ultimately leading to a tragic and costly scandal,” Minsky said.

Also in the auto sector, an enterprise risk management case study of Toyota looked at its problems with unintended acceleration of vehicles from 2002 to 2009. Several studies, including a case study by Carnegie Mellon University Professor Phil Koopman, blamed poor software design and company culture. A whistleblower later revealed a coverup by Toyota. The company paid more than $2.5 billion in fines and settlements.

ERM Case Study: Lululemon

In 2013, following customer complaints that its black yoga pants were too sheer, the athletic apparel maker recalled 17 percent of its inventory at a cost of $67 million. The company had previously identified risks related to fabric supply and quality. The CEO said the issue was inadequate testing.

Analysts raised concerns about the company’s controls, including oversight of factories and product quality. A case study by Stanford University professors noted that Lululemon’s episode illustrated a common disconnect between identifying risks and being prepared to manage them when they materialize. Lululemon’s reporting and analysis of risks was also inadequate, especially as related to social media. In addition, the case study highlighted the need for a system to escalate risk-related issues to the board.

ERM Case Study: Kodak

Once an iconic brand, the photo film company failed for decades to act on the threat that digital photography posed to its business and eventually filed for bankruptcy in 2012. The company’s own research in 1981 found that digital photos could ultimately replace Kodak’s film technology and estimated it had 10 years to prepare.

Unfortunately, Kodak did not prepare and stayed locked into the film paradigm. The board reinforced this course when in 1989 it chose as CEO a candidate who came from the film business over an executive interested in digital technology.

Had the company acknowledged the risks and employed ERM strategies, it might have pursued a variety of strategies to remain successful. The company’s rival, Fuji Film, took the money it made from film and invested in new initiatives, some of which paid off. Kodak, on the other hand, kept investing in the old core business.

Case Studies of Successful Enterprise Risk Management

Successful enterprise risk management usually requires strong performance in multiple dimensions, and is therefore more likely to occur in organizations where ERM has matured. The following examples of enterprise risk management can be considered success stories.

ERM Case Study: Statoil

A major global oil producer, Statoil of Norway stands out for the way it practices ERM by looking at both downside risk and upside potential. Taking risks is vital in a business that depends on finding new oil reserves.

According to a case study, the company developed its own framework founded on two basic goals: creating value and avoiding accidents.

The company aims to understand risks thoroughly, and unlike many ERM programs, Statoil maps risks on both the downside and upside. It graphs risk on probability vs. impact on pre-tax earnings, and it examines each risk from both positive and negative perspectives.

For example, the case study cites a risk that the company assessed as having a 5 percent probability of a somewhat better-than-expected outcome but a 10 percent probability of a significant loss relative to forecast. In this case, the downside risk was greater than the upside potential.

ERM Case Study: Lego

The Danish toy maker’s ERM evolved over the following four phases, according to a case study by one of the chief architects of its program:

- Traditional management of financial, operational, and other risks. Strategic risk management joined the ERM program in 2006.

- The company added Monte Carlo simulations in 2008 to model financial performance volatility so that budgeting and financial processes could incorporate risk management. The technique is used in budget simulations, to assess risk in its credit portfolio, and to consolidate risk exposure.

- Active risk and opportunity planning is part of making a business case for new projects before final decisions.

- The company prepares for uncertainty so that long-term strategies remain relevant and resilient under different scenarios.
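The case study does not publish Lego's models. As a rough illustration of the Monte Carlo technique named in the second phase, here is a minimal sketch that simulates the volatility of operating profit; the revenue, cost-ratio, and currency distributions are invented placeholders, not Lego figures, and `simulate_operating_profit` is a hypothetical name.

```python
import random
import statistics


def simulate_operating_profit(n_trials: int = 10_000, seed: int = 42) -> list[float]:
    """Simulate annual operating profit (in $M) under uncertain drivers.

    All distributions below are hypothetical placeholders.
    """
    rng = random.Random(seed)  # seeded so runs are reproducible
    results = []
    for _ in range(n_trials):
        revenue = rng.gauss(1_000.0, 80.0)             # revenue: mean 1,000, sd 80
        cost_ratio = rng.triangular(0.70, 0.85, 0.76)  # costs as share of revenue (low, high, mode)
        fx_effect = rng.uniform(-30.0, 10.0)           # currency effect
        results.append(revenue * (1 - cost_ratio) + fx_effect)
    return results


profits = simulate_operating_profit()
cuts = statistics.quantiles(profits, n=20)  # 19 cut points at 5% steps
print(f"mean profit:     {statistics.mean(profits):7.1f} $M")
print(f"5th percentile:  {cuts[0]:7.1f} $M")
print(f"95th percentile: {cuts[-1]:7.1f} $M")
```

The spread between the 5th and 95th percentiles is the kind of volatility figure a budgeting process could carry alongside the point forecast.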

As part of its scenario modeling, Lego developed its PAPA (park, adapt, prepare, act) model.

- Park: The company parks risks that occur slowly and have a low probability of happening, meaning it neither forgets them nor actively deals with them.

- Adapt: This response is for risks that evolve slowly and are certain or highly probable to occur. For example, a risk in this category is the changing nature of play and the evolution of buying power in different parts of the world. In this phase, the company adjusts, monitors the trend, and follows developments.

- Prepare: This category includes risks that have a low probability of occurring — but when they do, they emerge rapidly. These risks go into the ERM risk database with contingency plans, early warning indicators, and mitigation measures in place.

- Act: These are high-probability, fast-moving risks that must be acted upon to maintain strategy. For example, developments around connectivity, mobile devices, and online activity are in this category because of the rapid pace of change and the influence on the way children play.
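The four PAPA responses amount to a two-by-two grid of probability and speed. A minimal sketch of that mapping, assuming each risk has already been judged as high or low probability and fast or slow moving (the enum and function names are hypothetical, not Lego's):

```python
from enum import Enum


class Response(Enum):
    PARK = "park"
    ADAPT = "adapt"
    PREPARE = "prepare"
    ACT = "act"


def papa_response(high_probability: bool, fast_moving: bool) -> Response:
    """Map a risk's probability and speed onto the four PAPA responses."""
    if high_probability:
        return Response.ACT if fast_moving else Response.ADAPT
    return Response.PREPARE if fast_moving else Response.PARK


# The two examples named in the case study: (high probability?, fast moving?)
examples = {
    "changing nature of play": (True, False),
    "connectivity and mobile devices": (True, True),
}
for name, (probable, fast) in examples.items():
    print(f"{name}: {papa_response(probable, fast).value}")
```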

Lego views risk management as a way to better equip itself to take risks than its competitors. In the case study, the writer likens this approach to the need for the fastest race cars to have the best brakes and steering to achieve top speeds.

ERM Case Study: University of California

The University of California, one of the biggest U.S. public university systems, introduced a new view of risk to its workforce when it implemented enterprise risk management in 2005. Previously, the function was merely seen as a compliance requirement.

ERM became a way to support the university’s mission of education and research, drawing on collaboration of the system’s employees across departments. “Our philosophy is, ‘Everyone is a risk manager,’” Erike Young, deputy director of ERM, told Treasury and Risk magazine. “Anyone who’s in a management position technically manages some type of risk.”

The university faces a diverse set of risks, including cybersecurity, hospital liability, reduced government financial support, and earthquakes.

The ERM department had to overhaul systems to create a unified view of risk because its information and processes were not linked. Software enabled both an organizational picture of risk and highly detailed drilldowns on individual risks. Risk managers also developed tools for risk assessment, risk ranking, and risk modeling.

Better risk management has provided more than $100 million in annual cost savings and nearly $500 million in cost avoidance, according to UC officials.

UC drives ERM with risk management departments at each of its 10 locations and leverages university subject matter experts to form multidisciplinary workgroups that develop process improvements.

APQC, a standards quality organization, recognized UC as a top global ERM practice organization, and the university system has won other awards. The university says in 2010 it was the first nonfinancial organization to win credit-rating agency recognition of its ERM program.

Examples of How Technology Is Transforming Enterprise Risk Management

Business intelligence software has propelled major progress in enterprise risk management because the technology enables risk managers to bring their information together, analyze it, and forecast how risk scenarios would impact their business.

ERM organizations are using advances in computing and data handling, such as blockchain, to strengthen risk management. Following are a few case studies.

ERM Case Study: Bank of New York Mellon

In 2021, the bank joined with Google Cloud to use machine learning and artificial intelligence to predict and reduce the risk that transactions in the $22 trillion U.S. Treasury market will fail to settle. Settlement failure means a buyer and seller do not exchange cash and securities by the close of business on the scheduled date.

The party that fails to settle is assessed a daily financial penalty, and a high level of settlement failures can indicate market liquidity problems and rising risk. BNY says that, on average, about 2 percent of transactions fail to settle.

The bank trained models with millions of trades to consider every factor that could result in settlement failure. The service uses market-wide intraday trading metrics, trading velocity, scarcity indicators, volume, the number of trades settled per hour, seasonality, issuance patterns, and other signals.

The bank said it predicts about 40 percent of settlement failures with 90 percent accuracy. But it also cautioned against overconfidence in the technology as the model continues to improve.

AI-driven forecasting reduces risk for BNY clients in the Treasury market and saves costs. For example, a predictive view of settlement risks helps bond dealers more accurately manage their liquidity buffers, avoid penalties, optimize their funding sources, and offset the risks of failed settlements. In the long run, such forecasting tools could improve the health of the financial market.

ERM Case Study: PwC

Consulting company PwC has leveraged a vast information storehouse known as a data lake to help its customers manage risk from suppliers.

A data lake stores both structured and unstructured information, meaning data in highly organized, standardized formats as well as unstandardized data. This means that everything from raw audio to credit card numbers can live in a data lake.

Using techniques pioneered in national security, PwC built a risk data lake that integrates information from client companies, public databases, user devices, and industry sources. Algorithms find patterns that can signify unidentified risks.

One of PwC’s first uses of this data lake was a program to help companies uncover risks from their vendors and suppliers. Companies can violate laws, harm their reputations, suffer fraud, and risk their proprietary information by doing business with the wrong vendor.

Today’s complex global supply chains mean companies may be several degrees removed from the source of this risk, which makes it hard to spot and mitigate. For example, a product made with outlawed child labor could be traded through several intermediaries before it reaches a retailer.

PwC’s service helps companies recognize risk beyond their primary vendors and continue to monitor that risk over time as more information enters the data lake.
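PwC's algorithms are not described in the source. The sketch below only illustrates why risk that sits several degrees removed calls for traversing supplier relationships rather than checking direct vendors: a breadth-first search over a hypothetical supplier graph, reporting each flagged supplier and the tier at which it appears. All company names and the function `find_indirect_risks` are hypothetical.

```python
from collections import deque


def find_indirect_risks(
    supply_graph: dict[str, list[str]], flagged: set[str], company: str
) -> dict[str, int]:
    """Breadth-first search from `company` through its suppliers.

    Returns each flagged supplier reached, mapped to its tier
    (1 = direct supplier, 2 = supplier's supplier, and so on).
    """
    seen = {company}
    queue = deque([(company, 0)])
    hits: dict[str, int] = {}
    while queue:
        node, tier = queue.popleft()
        for supplier in supply_graph.get(node, []):
            if supplier in seen:
                continue  # avoid revisiting suppliers shared by several buyers
            seen.add(supplier)
            if supplier in flagged:
                hits[supplier] = tier + 1
            queue.append((supplier, tier + 1))
    return hits


supply_graph = {
    "retailer": ["distributor_a", "distributor_b"],
    "distributor_a": ["factory_x"],
    "distributor_b": ["factory_y"],
    "factory_x": ["subcontractor_z"],
}
flagged = {"subcontractor_z"}  # e.g., linked to outlawed child labor
print(find_indirect_risks(supply_graph, flagged, "retailer"))  # {'subcontractor_z': 3}
```

Re-running the search as new relationships or flags arrive corresponds to the ongoing monitoring described above.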

ERM Case Study: Financial Services

As analytics have become a pillar of forecasting and risk management for banks and other financial institutions, a new risk has emerged: model risk. This refers to the risk that machine-learning models will lead users to an unreliable understanding of risk or have unintended consequences.

For example, a 6 percent drop in the value of the British pound over the course of a few minutes in 2016 stemmed from currency trading algorithms that spiralled into a negative loop. A Twitter-reading program began an automated selling of the pound after comments by a French official, and other selling algorithms kicked in once the currency dropped below a certain level.

U.S. banking regulators are so concerned about model risk that the Federal Reserve set up a model validation council in 2012 to assess the models that banks use in running risk simulations for capital adequacy requirements. Regulators in Europe and elsewhere also require model validation.

A form of managing risk from a risk-management tool, model validation is an effort to reduce risk from machine learning. The technology-driven rise in modeling capacity has caused such models to proliferate, and banks can use hundreds of models to assess different risks.

Model risk management can reduce rising costs for modeling by an estimated 20 to 30 percent by building a validation workflow, prioritizing models that are most important to business decisions, and implementing automation for testing and other tasks, according to McKinsey.

Module 1: Case Studies & Examples

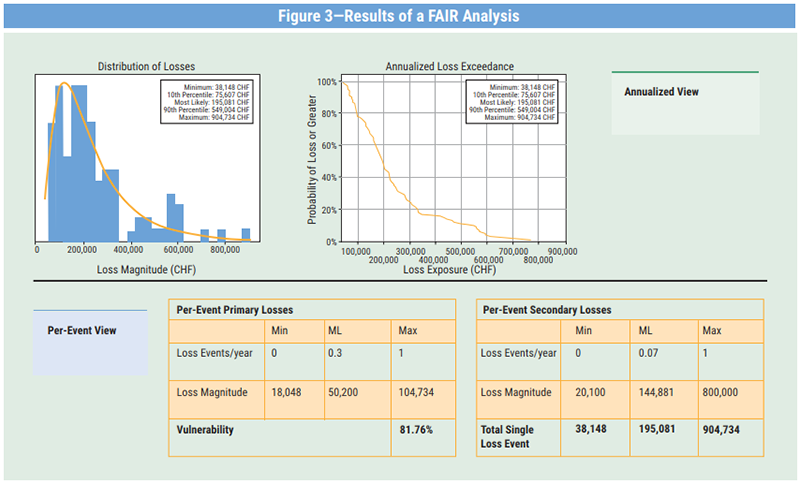

In this section, we will review some examples of how to generate an initial estimate using two very basic methods. Then, we are going to walk through some case studies so that you can put what you’ve learned into the context of a cyber risk scenario.

The Value of the Initial Analysis

In any organization, decision-making is a crucial process that can significantly impact the success or failure of the organization. Making informed decisions requires access to accurate and relevant information. It does not, however, require in-depth, time-consuming, and expensive research and analysis. The initial analysis provides a quick, cost-effective analysis of risk. It allows decision-makers to have a timely analysis based on readily available data. If decision-makers determine that a more in-depth analysis is warranted, this gives them the opportunity to clearly scope the effort and provide their authorization for the expenditure of additional funds and resources.

What is an Initial Analysis?

An initial analysis is a preliminary assessment of a situation or problem. It involves gathering and analyzing information to understand the situation comprehensively. An initial analysis is typically conducted before making any significant decisions or taking any action. Its purpose is to provide decision-makers with the information they need to make informed decisions. In the case of quantifying risk, you are making estimates with fairly broad ranges (such as 20% or more). This provides an accurate, if broad, estimate. With more detail, the estimate becomes more precise.

Benefits of an Initial Analysis for Decision Support

An initial analysis is valuable for decision support because it gives decision-makers a comprehensive overview of the situation. It allows decision-makers to make informed decisions based on accurate and relevant information. There are several benefits of conducting an initial analysis.

Benefits of Conducting an Initial Analysis

- Provides a Comprehensive Overview: An initial analysis gives decision-makers a comprehensive overview of the situation. It helps decision-makers to understand the situation, including the challenges, risks, and opportunities. This comprehensive overview allows decision-makers to make informed decisions based on accurate and relevant information.

- Identifies Risks and Opportunities: An initial analysis helps to identify risks and opportunities associated with the situation. It allows decision-makers to assess the potential impact of these risks and opportunities on the organization. This information is critical to making informed decisions that take potential risks and opportunities into account.

- Helps to Identify and Prioritize Options: An initial analysis helps to identify and prioritize options for addressing the situation. It provides decision-makers with a range of options and the potential benefits and risks associated with each option. This information is critical to making informed decisions that consider all available options.

- Facilitates Consensus-Building: An initial analysis helps to facilitate consensus-building among decision-makers. It provides decision-makers with a shared understanding of the situation, which can help to build consensus around the best course of action. This consensus-building is critical to ensuring that decisions are made with the support of all decision-makers.

- Reduces the Risk of Making Poor Decisions: An initial analysis helps to reduce the risk of making poor decisions. It provides decision-makers with accurate and relevant information, which can help to reduce the risk of making decisions based on incomplete or inaccurate information. This can help avoid costly mistakes and ensure that decisions are made in the organization’s best interests.

- Approval for Additional Time and Resources: In some cases, decision-makers may require more information before deciding. An initial analysis can then serve as the basis for approving additional time and resources to produce a more in-depth analysis. Because the initial analysis has already identified the most critical issues, it helps ensure that the additional analysis focuses on them and provides the information decision-makers need.

Figure 6 NOTE: Always begin with an initial analysis

General Guidelines for Developing Estimates

- Internet-facing assets generally represent a very high likelihood of compromise if there is an exploitable vulnerability. Any asset with a directly accessible interface to the internet could be considered to meet this criterion if it has an exploitable vulnerability.

- Vulnerabilities in perimeter defenses generally represent a very high likelihood of compromise.

- Vulnerabilities in high-value assets generally represent a very high risk.

- Vulnerabilities on web-based servers and applications represent a very high likelihood of compromise.

- Vulnerabilities on workstations generally represent a high likelihood of compromise.

- Vulnerabilities in databases represent a high likelihood of compromise.

- Vulnerabilities on unsupported systems or products may be considered a higher likelihood of compromise.

- Vulnerabilities that could cause extreme outages generally represent a very high risk.

- Vulnerabilities that could lead to initial access or privilege escalation generally represent a very high risk.

- Vulnerabilities that could lead to system compromise generally represent a higher risk.

- If you know what percentage of systems have a particular vulnerability, you can use this as the basis for a threat estimate.

- Zero-day vulnerabilities generally represent a very high risk.

- Zero-day vulnerabilities in perimeter defenses generally represent a very high risk.

- Web servers with zero-day vulnerabilities generally represent a very high risk.

- Web server and application exploits such as SQL injection and cross-site scripting generally represent a very high risk.

- Unsupported operating systems and applications generally represent a very high risk as these are frequently targets of attack.

- Remote code execution vulnerabilities generally represent a higher risk.

- Named exploits such as man-in-the-middle attacks generally represent a higher risk.

- Vulnerabilities for which there are known or ongoing exploits generally represent a higher risk.

- Vulnerabilities with a public proof-of-concept generally represent a higher risk.

- Any vulnerability that can lead to initial access or privilege escalation generally represents a higher risk.

- Internal exploitable vulnerabilities generally represent an elevated risk.

- Strong perimeter defense can be a mitigating factor.

- Security by obscurity is not considered a mitigating factor.

- Policies or procedures may be considered a mitigating factor.

- Mitigating factors generally can reduce an estimate by a single 20% range. A very strong mitigation generally can reduce an estimate by two 20% ranges.

- Financially motivated cyber-criminals are generally very successful. You may want to specify the targeted system or data to refine the scope of your estimate.

- Insider threats are generally very successful.

- APTs or nation-states are generally very successful. You may want to specify a particular APT or nation-state to refine your estimate.

- An accidental misconfiguration is as dangerous as an intentional act.

- Poor processes and procedures can represent a risk, especially if they are undocumented or inconsistently applied.

- It is useful to stipulate the time period for your estimate and whether it is a factor in the likelihood of compromise. In some cases, this may be the time period until a patch or remediation is in place. In some cases, the longer the time period, the higher the likelihood of compromise. Similarly, in some cases, a shorter period of exposure may indicate a slightly lower likelihood of compromise.
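The mitigation guideline above is a rule of thumb, not a formula. One way to apply it mechanically, assuming that "reducing by one 20% range" means subtracting 0.20 from each value of a (minimum, most likely, maximum) estimate and flooring at zero, is sketched below. That interpretation and the name `apply_mitigation` are assumptions, not part of the guidance.

```python
def apply_mitigation(estimate, bands):
    """Lower a (minimum, most_likely, maximum) estimate by `bands` 20% ranges.

    Assumption: bands=1 models an ordinary mitigating factor and bands=2 a
    very strong one. Values are floored at 0.0 so they stay valid probabilities.
    """
    if bands < 0:
        raise ValueError("bands must be zero or positive")
    shift = 0.20 * bands
    return tuple(round(max(0.0, value - shift), 4) for value in estimate)


web_server_likelihood = (0.80, 0.90, 1.00)  # hypothetical very high likelihood
print(apply_mitigation(web_server_likelihood, 1))  # (0.6, 0.7, 0.8)
print(apply_mitigation(web_server_likelihood, 2))  # (0.4, 0.5, 0.6)
```

When you communicate a mitigated estimate, it is worth stating which mitigating factor justified the shift.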

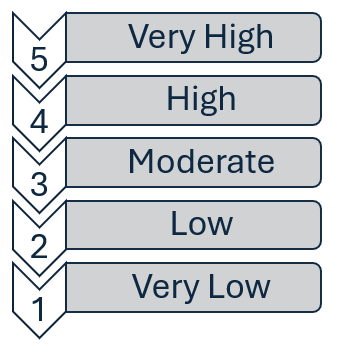

Using a 1-5 Scale

Risk is an inherent part of any business or organizational activity. It is the possibility of an event occurring that could adversely impact the organization’s objectives. Risk can be expressed in various ways, including verbally, numerically, or graphically. One commonly used method of verbally expressing risk is a 1-5 scale using the labels very low, low, moderate, high, and very high.

The Five-Point Scale

The five-point scale is a simple and effective way to express risk verbally. It uses five categories to describe the level of risk associated with an event or activity. The categories are very low, low, moderate, high, and very high. Each category represents a different level of risk, with very low representing the lowest level of risk and very high representing the highest level of risk.

Figure 7 The 5-Point Scale Labels

This scale is beneficial because it allows for quick and easy understanding and consensus-building among different organizational groups. It is a simple and intuitive way to express risk that people with different levels of expertise in risk management can easily understand.

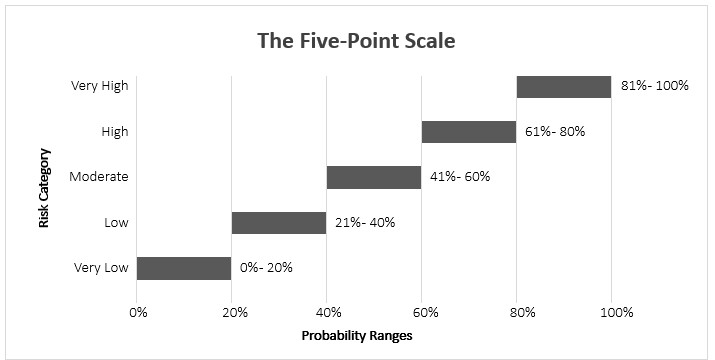

Converting the Scale to 20% Ranges

While the five-point scale is a useful way to express risk qualitatively, it can also be adapted into numerical form, represented by 20% ranges, to quantify the risk. This allows for a more precise and objective assessment of risk that can be used to make informed decisions about risk management.

To convert the five-point scale to 20% ranges, each category is assigned a range of probabilities. The ranges are as follows:

- Very Low: 0% – 20%

- Low: 21% – 40%

- Moderate: 41% – 60%

- High: 61% – 80%

- Very High: 81% – 100%

Figure 8 The 5-Point Scale Range Values
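Converting a probability back to its label is a simple lookup against these ranges. A minimal sketch, assuming probabilities are expressed as decimals between 0 and 1 and that each range includes its upper bound (so 0.20 is Very Low and 0.21 is Low); the function name is hypothetical.

```python
def scale_label(p: float) -> str:
    """Map a probability (0.0 to 1.0) to its five-point scale label."""
    if not 0.0 <= p <= 1.0:
        raise ValueError("probability must be between 0 and 1")
    if p <= 0.20:
        return "Very Low"
    if p <= 0.40:
        return "Low"
    if p <= 0.60:
        return "Moderate"
    if p <= 0.80:
        return "High"
    return "Very High"


print(scale_label(0.28))  # Low
```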

By assigning each category a range of probabilities, the level of risk associated with an event or activity can be quantified. When communicating this, you should note that this estimate is based on an initial range of 20% for each.

Benefits of Using the Scale

Using the five-point scale with values of very low, low, moderate, high, and very high is a good way to begin thinking, speaking, and quantifying risk. It provides a simple and intuitive way to express risk that people with different levels of expertise in risk management can easily understand. It also allows for quick and easy consensus-building among different organizational groups.

One of the benefits of using the 1-5 scale is the one identified by L. Hoffman and D. Clement (1970) [19]: the value of using “intuitive linguistic variables” for range variables. Another benefit is that a five-point scale avoids the issues found in a three-point scale by allowing wider dispersion among the mid-range values. A simple three-point scale is susceptible to bias: most people are averse to using either the lowest or the highest extreme and tend to default to the mid-range value.

The conversion of the scale to 20% ranges provides a more precise and objective assessment of risk that can be used to make informed decisions about risk management. This allows for a more systematic and consistent approach to risk management that can help organizations identify, assess, and manage risk.

In addition, using the five-point scale can help promote a risk management culture within an organization. Providing a simple and intuitive way to express risk can encourage employees to think more proactively about risk and take appropriate steps to manage risk in their daily activities.

A five-point scale provides a simple and intuitive way to express risk that people with different levels of expertise in risk management can easily understand. Translating the qualitative descriptors of the five-point scale into corresponding 20% probability ranges enhances the precision of risk evaluations, allowing for a more quantifiable and objective approach to risk assessment. Using this scale can help promote a risk management culture within an organization and aid in consensus-building among different organizational groups.

Back-of-the-Napkin Math

This method is an easy way to quantify risk without advanced tools or models. It approximates a more advanced method, Monte Carlo simulation, using the ranges described in the 5-point scale method. This method produces a usable approximation but lacks the level of detail, and the ability to generate meaningful probability distribution charts, that Monte Carlo simulation provides. You only need a sheet of paper and a pen or pencil to use this method, which is why I call it the “back-of-the-napkin” method.
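For contrast, here is a minimal sketch of the Monte Carlo simulation that the napkin method approximates. It reuses the threat (.10, .20, .30) and likelihood (.20, .60, .80) values from the worked example in this section, and it assumes each input follows an independent triangular distribution defined by its minimum, most likely, and maximum values. Those distributional choices and the function name are assumptions, not part of the method described here.

```python
import random
import statistics


def monte_carlo_risk(threat, likelihood, n_trials=100_000, seed=1):
    """Sample threat and likelihood from triangular distributions given as
    (minimum, most_likely, maximum) tuples; return the simulated risk values."""
    rng = random.Random(seed)
    t_min, t_mode, t_max = threat
    l_min, l_mode, l_max = likelihood
    # random.triangular takes (low, high, mode), in that order
    return [
        rng.triangular(t_min, t_max, t_mode) * rng.triangular(l_min, l_max, l_mode)
        for _ in range(n_trials)
    ]


samples = monte_carlo_risk((0.10, 0.20, 0.30), (0.20, 0.60, 0.80))
cuts = statistics.quantiles(samples, n=20)  # cut points at 5%, 10%, ..., 95%
p5, p50, p95 = cuts[0], cuts[9], cuts[18]
print(f"5th: {p5:.3f}  median: {p50:.3f}  95th: {p95:.3f}")
```

Unlike the napkin result, the simulation yields a full distribution of risk values that can be plotted or summarized by percentile.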

The Three-Point Range Values

Using three-point values is a simple and effective way to express a range, such as the level of threat and likelihood associated with an event or activity. The three values are minimum, most likely, and maximum.

When we quantify risk, we use the formula Threat x Likelihood = Risk. Each of these (threat, likelihood, and risk) is expressed as a range.

To this equation, we can add the impact as a way to rate the risk: Risk x Impact = Rating.

The impact can be financial or operational, and the organization always establishes what counts as a Very High or a Very Low impact. If the impact is financial, it is expressed as a dollar value.

Let’s look at how the three-point values are used to quantify risk.

Assume threat values of .10, .20, and .30. Then assume likelihood values of .20, .60, and .80. How do we multiply ranges?

Follow these steps to multiply two 3-value ranges:

- Multiply the first value of the first range by the first value of the second range.

- Multiply the second value of the first range by the second value of the second range.

- Multiply the third value of the first range by the third value of the second range.

[.10 .20 .30] x [.20 .60 .80] = [.10 x .20] [.20 x .60] [.30 x .80]

Now, calculate each of the three products:

.10 x .20 = .02

.20 x .60 = .12

.30 x .80 = .24

You get the following range [.02 .12 .24].
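The three multiplication steps above can be sketched in a few lines. The helper name `multiply_ranges` is hypothetical; rounding to four decimal places only hides floating-point noise such as 0.020000000000000004.

```python
def multiply_ranges(a, b):
    """Multiply two (minimum, most_likely, maximum) ranges position by position."""
    if len(a) != 3 or len(b) != 3:
        raise ValueError("both ranges need exactly three values")
    return tuple(round(x * y, 4) for x, y in zip(a, b))


threat = (0.10, 0.20, 0.30)
likelihood = (0.20, 0.60, 0.80)
risk = multiply_ranges(threat, likelihood)
print(risk)  # (0.02, 0.12, 0.24)
```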

Now, let’s estimate the range for impact. Assume $10K, $20K, and $50K as the values, and multiply them by the risk range we just calculated.

[.02 .12 .24] x [$10K $20K $50K] = [$200 $2,400 $12,000]

.02 x $10,000 = $200

.12 x $20,000 = $2,400

.24 x $50,000 = $12,000
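The rating step uses the same position-by-position multiplication, applied to the risk range [.02 .12 .24] and the impact values $10K, $20K, and $50K. A minimal sketch (variable names are illustrative):

```python
risk = (0.02, 0.12, 0.24)             # minimum, most likely, maximum
impact = (10_000, 20_000, 50_000)     # dollar impact for the same three points

# Multiply position by position; round to cents to hide floating-point noise
rating = tuple(round(r * i, 2) for r, i in zip(risk, impact))
print(", ".join(f"${value:,.0f}" for value in rating))  # $200, $2,400, $12,000
```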

Developing a Range Estimate from a Single Point Value

In many instances, you will only have a single-point value, such as the percentage of assets missing a patch. In this case, you can use the single point value as your most likely value and add +/- 10% to get a 20% range.

Example: If 20% of workstations are missing a patch, you could apply +/- 10% to produce the range .10-.20-.30. When using this method, you should note in your communications that this is a +/- 10% estimate based on the initial value of the weakness finding (20% of workstations with a missing patch).
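The +/- 10% rule can be sketched as a small helper. Clamping the result to the 0 to 1 probability interval (so that, for example, 95% becomes .85-.95-1.0) is an added assumption the text does not state, and the function name is hypothetical.

```python
def range_from_point(value, spread=0.10):
    """Build a (minimum, most_likely, maximum) range around a single point value.

    Assumption: results are clamped to the 0-1 probability interval.
    """
    low = max(0.0, value - spread)
    high = min(1.0, value + spread)
    return (round(low, 4), round(value, 4), round(high, 4))


print(range_from_point(0.20))  # (0.1, 0.2, 0.3)
```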

Developing a Range from Multiple Variables

When you have multiple variables, one approach to establishing your range is to use the lowest and highest values in the set as your minimum and maximum. To find the midpoint, subtract the lowest value from the highest, divide the result by 2, and add that to the lowest value. BYJUS.com, a global EdTech firm, has a basic explainer on ranges: “Range,” https://byjus.com/maths/range/.

Example: 20% of servers are missing a patch and 45% of servers have a weak configuration that leaves them open to compromise. We can use 20% as the low value and 45% as the high value. To calculate the mid-range value, we subtract the lower value from the higher value (45 - 20 = 25), divide that by 2 (25 / 2 = 12.5), and add the result to the lower value (20 + 12.5 = 32.5). That gives us .20-.325-.45.
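The midpoint procedure above can be sketched as follows (the function name is hypothetical):

```python
def range_from_values(values):
    """Return (lowest, midpoint, highest) for a set of values, where the
    midpoint is lowest + (highest - lowest) / 2."""
    if not values:
        raise ValueError("need at least one value")
    low, high = min(values), max(values)
    mid = low + (high - low) / 2
    return (round(low, 4), round(mid, 4), round(high, 4))


print(range_from_values([0.20, 0.45]))  # (0.2, 0.325, 0.45)
```

Note that this midpoint is simply the average of the two extremes; any values between them do not affect it.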

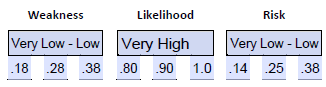

Figure 9 Back-of-the-Napkin Worksheet

Case Studies

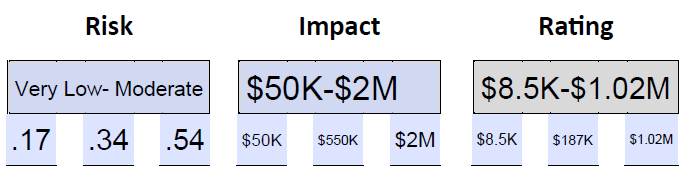

For each of the scenarios provided, use the five-point scale to convert estimates of threat (weakness), likelihood (the likelihood that the weakness will be leveraged against the organization), risk, impact (a range of financial cost), and score. Reading and understanding the examples will guide your evaluation process and prepare you for the module quiz and final project.

The Branch Manager

As the branch manager sat in her office, she received an urgent message from the corporate security team about a newly released patch that addressed a critical vulnerability in the company’s network. Concerned about the potential risk to her branch, she immediately contacted the network operations group to inquire about the patch.

The network administrator reviewed the vulnerability data and determined that 28% of their web servers required the patch. She knew that this was a significant share of the web servers, and that a critical vulnerability on web-facing servers posed a high risk to the organization.

However, the operations group could not apply the patch for a week due to other scheduled maintenance. The network administrator explained to the branch manager that the patch required significant testing and validation before being deployed to the production environment. She assured the branch manager that the operations group was working diligently to ensure the patch would be deployed as soon as possible.

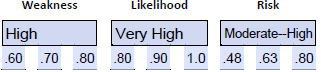

- Assign a range to weakness. In this example, we know the percentage of the threat landscape that is missing a required patch. We can use this as the basis for our initial range for threat (weakness). At 28%, the value falls within the low range, which justifies a low rating for weakness. Using 28% as the midpoint and adding ±10%, we get a range of .18-.28-.38 for threat.

- Assign a range to likelihood. In this example, we are told that the missing patch addresses a critical-severity vulnerability on web servers. We can review our guidance for establishing an initial estimate and consider the criticality of the vulnerability and its location (web servers). Together, these justify a very high likelihood range of .80-.90-1.0.

- Set the time period for the estimate. We will use the time period “until patches are applied.” We could also note that the longer this takes, the more likely a compromise becomes.

- Calculate the initial estimate.

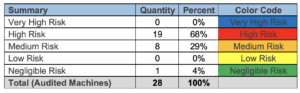
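The weakness step in this case widens a single observed percentage into a range by adding and subtracting 10 percentage points. The university case that follows does the same. Below is a minimal sketch using a hypothetical `spread_range` helper. The clamping to the 0-1 interval is an addition of this sketch and is not a rule stated in the text.

```python
def spread_range(midpoint, spread=0.10):
    """Turn one observed proportion into a (low, mid, high) range.

    Subtracts and adds a fixed spread (10 percentage points by default).
    Clamping to 0..1 is an assumption of this sketch; rounding to two
    decimals only hides floating-point noise.
    """
    low = max(0.0, midpoint - spread)
    high = min(1.0, midpoint + spread)
    return (round(low, 2), midpoint, round(high, 2))


print(spread_range(0.28))  # branch manager weakness: (0.18, 0.28, 0.38)
print(spread_range(0.70))  # university weakness: (0.6, 0.7, 0.8)
```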

University Case Study

The college has always prided itself on its commitment to technology and innovation. With a sprawling campus and a diverse student population, the college relies heavily on its network infrastructure to provide critical services to its students, faculty, and staff.

However, in recent months, the college has experienced several issues with its network infrastructure. Users across the campus had reported slow performance, intermittent outages, and other issues. Concerned about the potential impact of these issues, the college decided to perform an internal audit of its network infrastructure.

The audit revealed a number of significant issues with the college’s network infrastructure. The most pressing issue was that 70% of the college’s workstations required system upgrades due to recent end-of-life notices that hadn’t been tracked. The previous network administrator had recently left, and it had taken some time for the new administrator to come up to speed. As a result, critical updates and patches had been missed, leaving the college’s network vulnerable to potential cyber-attacks.

The new administrator found that there was little network documentation and, in fact, little segmentation across the campus. This meant that if a cyber-attacker gained access to one part of the network, they would have access to the entire network.

The new administrator was alarmed by the audit’s findings. She knew that the college’s network was vulnerable to potential cyber-attacks and that urgent action was needed to address the issues.

As she continued to review the network infrastructure, the new administrator read about a recent cyber-attack at another university. In that attack, the threat actor had moved laterally across the network and was able to compromise and exfiltrate sensitive data from the administration office. The attack had caused significant damage to the university’s reputation and resulted in a loss of trust among students, faculty, and staff.

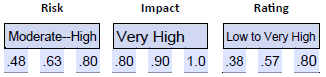

- Assign a range to weakness. In this example, we are given the statistic that 70% of workstations are running an unsupported operating system version. We can use this percentage of the threat landscape (workstations) as the basis for an initial estimate. Using 70% as our midpoint and adding ±10%, we get .60-.70-.80, which is moderate to high.

- Assign a range to likelihood. For likelihood, we consider the network’s lack of segmentation and documentation. We also consider the recent attack on another university, in which this weakness was leveraged to exfiltrate sensitive data. That attack raises the likelihood that this university will be targeted. We can use a very high range, giving us .80-.90-1.0.

- Assign a range to impact . We can consider the impact experienced by the recent attack at another university as a potential impact on this university, given the lack of segmentation and documentation. We also know that 70% of workstations (including administrative) use an unsupported operating system. Combined, we can justify a very high impact range of .80-.90-1.0.

- Indicate the applicable time period. We considered two key variables: vulnerable workstations and lack of network segmentation. Both would need to be addressed to change the risk, impact, or rating. When we indicate the applicable time period, we need to note this and state that the estimate applies until these weaknesses are sufficiently addressed.

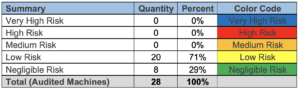

Health Care Facility Case Study

As the HIPAA compliance auditor arrived at the healthcare provider, she was ready to conduct a thorough audit of its HIPAA compliance measures. The healthcare provider had hired her to identify any system vulnerabilities and provide recommendations for improvement.

As the auditor began her assessment, she quickly identified several areas of concern. She discovered that over 60% of the staff had not received HIPAA compliance training. The auditor found that the healthcare provider had not implemented a comprehensive training program to educate its staff on HIPAA compliance policies and procedures. This presented a significant risk, as the staff might unknowingly violate HIPAA regulations, leading to potential legal and financial liabilities.

In addition, the auditor found that 12% of the staff did not have dedicated laptops. This created a risk of unauthorized access to patient information. Staff members with varying degrees of “need to know” shared laptops, which potentially allowed staff without the “need to know” to access patient records.

The auditor also discovered that 48% of the logging system was missing or inoperable because some network configurations had been only partially implemented. As a result, the healthcare provider could not track and monitor access to patient records. A privacy violation or loss of sensitive information could therefore go unnoticed, exposing the provider to civil penalties or even criminal charges.

The auditor also found that patient data was not partitioned from other data on the network. This presented a significant risk, as the healthcare provider’s network could be compromised by external threat actors, and the lack of data partitioning could allow lateral movement, resulting in sensitive data being stolen or ransomed.

After compiling her assessment, the auditor concluded that the healthcare provider’s HIPAA compliance posture had significant weaknesses, including a significant risk of unauthorized internal access. She noted that the lack of HIPAA compliance training, the shortage of dedicated laptops, the missing logging system, and the lack of data partitioning together presented a significant risk of HIPAA violations and data breaches. She estimated that the provider’s legal liability from the identified weaknesses could be significant, as the provider could be held responsible for any financial losses or damages that patients suffered due to a breach.

The auditor’s report included detailed recommendations for the healthcare provider to improve its HIPAA compliance measures. She advised the provider to implement a comprehensive HIPAA compliance training program to educate its staff on HIPAA regulations and procedures. She also recommended that the provider increase the number of laptops from 132 to 150 to ensure that patient records were not unintentionally exposed to staff who lacked the “need to know.”

To address the missing logging system, the auditor recommended that the healthcare provider implement a comprehensive system that tracks and monitors access to patient records. She advised the provider to implement least privilege role-based access controls and appropriate network segmentation to separate patient data from other network data.

The estimated cost to implement the auditor’s recommendations was significant: the healthcare provider would need to invest between $50,000 and $100,000.

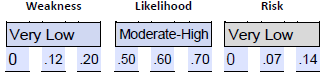

- Estimate the weakness. We can use the 12% of staff without dedicated laptops as the basis for estimating the weakness as a percentage of the threat landscape. This gives a very low estimate of 0-.12-.22. The lack of sufficient data separation was linked to the risk of external threat actors moving laterally and potentially stealing or ransoming sensitive data. The lack of logging is a concern, but it is not a weakness that can be leveraged to carry out an attack. Rather, it results in a lack of visibility and awareness.

- Estimate the likelihood. We can use the 60% of staff lacking training to estimate the likelihood of inadvertent unauthorized access to sensitive patient data. We could use a range of .50-.60-.70, or moderate to high. We have insufficient data to estimate the likelihood of an external attack because no relevant weaknesses were identified in the audit.
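The audit's figures can be cross-checked with the same arithmetic used elsewhere in the chapter. This sketch assumes the 150 recommended laptops correspond to one dedicated laptop per staff member. The text implies this but does not state it. Under that assumption, the 18-laptop shortfall matches the 12% weakness figure, and the mid-point method places the remediation cost at $75,000.

```python
# Figures from the audit narrative above.
current_laptops = 132
recommended_laptops = 150  # ASSUMPTION: one dedicated laptop per staff member

shortfall = recommended_laptops - current_laptops  # laptops still needed
shortfall_share = shortfall / recommended_laptops  # share of staff without a laptop

# Remediation cost range, with the midpoint found by the mid-point method.
low_cost, high_cost = 50_000, 100_000
mid_cost = low_cost + (high_cost - low_cost) / 2

print(f"Shortfall: {shortfall} laptops ({shortfall_share:.0%} of staff)")
print(f"Cost range: ${low_cost:,}-${mid_cost:,.0f}-${high_cost:,}")
```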

Accounting Firm Case Study

The cybersecurity auditor arrived at the accounting firm of Smith and Associates, ready to conduct a thorough audit of its cybersecurity measures. The firm had hired the auditor to identify any system vulnerabilities and provide recommendations for improvement.

As the auditor began his assessment, he quickly identified several areas of concern. He discovered that 67% of the firm’s workstations had outdated software, including operating systems and applications. This presented a significant risk, as obsolete software can contain known vulnerabilities that cyber-attackers can exploit.

In addition, the auditor found that 29% of the workstations had outdated anti-virus software. This was a significant concern, as anti-virus software is the first line of defense against malware and other cyber threats. Outdated anti-virus software can be ineffective against new and emerging threats, leaving the firm’s systems vulnerable to attack.

The auditor also discovered that the firm’s public-facing web server had multiple SQL vulnerabilities. SQL vulnerabilities are a common target for cyber-attackers, as they can be exploited to gain unauthorized access to databases and steal sensitive data. The auditor was particularly concerned about this vulnerability, as it posed a significant risk to the firm’s clients and their confidential financial information.

After completing his assessment, the auditor stated that the firm’s cybersecurity posture had several significant weaknesses that could likely be leveraged in an attack. He noted that the outdated software and anti-virus, combined with the SQL vulnerabilities on the public-facing web server, created a significant risk of cyber-attack. He recommended that the firm immediately address these vulnerabilities and improve its cybersecurity posture.

According to a recent report by IBM, the average cost of a data breach is $3.86 million. This includes the costs of detecting and containing the breach, notifying affected individuals, and providing identity theft protection services. The report also found that the cost per lost or stolen record containing sensitive information was $180.

If the accounting firm suffered a data breach, the financial impact could be substantial. For example, if attackers stole 10,000 client records, the breach could cost $1.8 million (10,000 records × $180 per record).
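The $1.8 million figure is a per-record estimate: the number of records exposed multiplied by the cost per record. Below is a minimal sketch using a hypothetical `estimate_breach_cost` helper, with the $180 figure cited above as the default. A linear per-record model is a simplification. Costs such as detection and notification, which the IBM average also covers, may not scale with the number of records.

```python
COST_PER_RECORD = 180  # USD per lost or stolen record, the IBM figure cited above


def estimate_breach_cost(records, cost_per_record=COST_PER_RECORD):
    """Linear per-record estimate: records exposed times cost per record."""
    return records * cost_per_record


cost = estimate_breach_cost(10_000)
print(f"${cost:,}")  # prints $1,800,000
```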

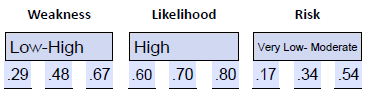

- Estimate the weakness. We have two weaknesses related to the workstations: 67% are running outdated operating systems and applications, and 29% have outdated anti-virus. We subtract the lowest value from the highest value (67-29=38), divide that by 2 (38/2=19), and add that to the lowest value (29+19=48). That gives us a range of .29-.48-.67, which spans low to high. We have one web server with an SQL vulnerability, which we consider very high by default. That range is .80-.90-1.0.

- Estimate the likelihood. For the workstations we will estimate the likelihood as high or .60-.70-.80. We will estimate the likelihood of compromise for the web server as very high or .80-.90-1.0.

- Estimate the risk rating for the workstations and the web server, each based on a $50,000, $550,000, and $2,00,000 cost range. Compare the two to determine which source is more likely to result in a higher financial impact. In this example, we are not splitting the financial cost between two probable risk sources. Rather, we are comparing two potential sources of a data breach against a single potential financial impact and comparing the resulting ratings, which are given in financial terms.
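The comparison in the last step can be sketched by combining each source's weakness and likelihood ranges. The worksheet's actual formula appears only in the figure. This sketch therefore ASSUMES a simple point-by-point product (low × low, mid × mid, high × high) as a stand-in. The helper name `combine_ranges` is hypothetical, and the sketch stops before applying the cost range.

```python
def combine_ranges(a, b):
    """ASSUMPTION: combine two (low, mid, high) ranges point by point.

    This is a stand-in for the worksheet's formula, which is not given in
    the text. Results are rounded to three decimals for readability.
    """
    return tuple(round(x * y, 3) for x, y in zip(a, b))


workstation_weakness = (0.29, 0.48, 0.67)    # mid-point method on 29% and 67%
workstation_likelihood = (0.60, 0.70, 0.80)  # high
web_weakness = (0.80, 0.90, 1.0)             # SQL vulnerability, very high by default
web_likelihood = (0.80, 0.90, 1.0)           # very high

workstations = combine_ranges(workstation_weakness, workstation_likelihood)
web_server = combine_ranges(web_weakness, web_likelihood)

# Compare the two sources at each point of the range.
for label, ws, web in zip(("low", "mid", "high"), workstations, web_server):
    higher = "web server" if web > ws else "workstations"
    print(f"{label}: workstations {ws}, web server {web} -> {higher}")
```

Under this assumption, the web server's combined range exceeds the workstations' range at every point. That fits the text's treatment of the public-facing SQL vulnerability as very high by default.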

Cybersecurity Risk Quantification Copyright © by Charlene Deaver-Vazquez. All Rights Reserved.

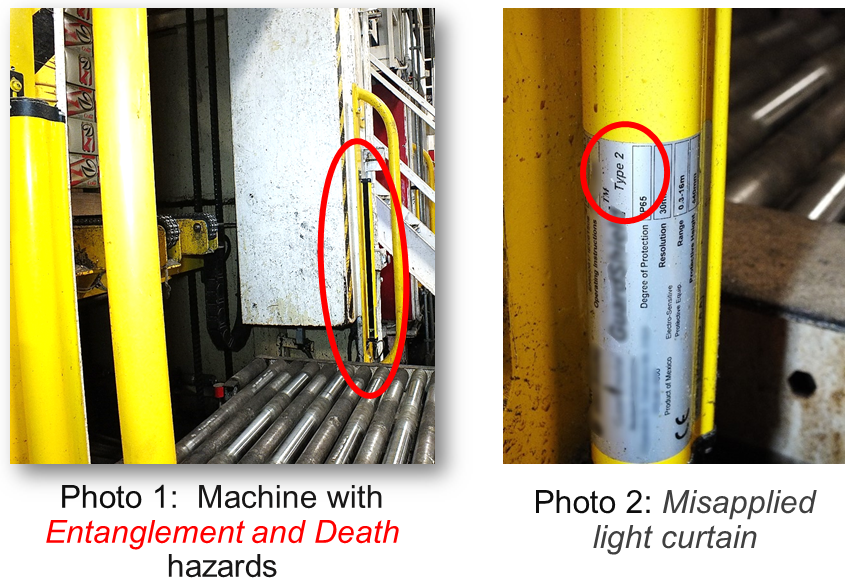

Risk Assessment Case Studies | Machine Safety Specialists

Case studies.

What are “Unbiased Risk Assessments”?

Unbiased Risk Assessments are guided by safety experts who have your best interests in mind. Product companies, integrators, and solution providers may steer you toward expensive, overly complex technical solutions. Machine Safety Specialists provides unbiased Risk Assessments. See examples below.

Biased risk assessments can happen when a safety products company, integrator, or solution provider participates in the risk assessment. The participant has a conflict of interest and may steer you toward overly expensive or complex solutions that it wants to sell you. Some safety product companies will do anything to get involved in the risk assessment, knowing they will “make up for it” by selling you overly expensive solutions. Safety product companies have sales targets, and you could be one of them.