
How to Write a Research Design – Guide with Examples

Published by Alaxendra Bets on August 14, 2021. Revised on October 3, 2023.

A research design is a structure that combines the different components of research. It applies data collection and data analysis techniques in a logical way to answer the research questions.

Before starting the research process, you should decide how to address the research questions adequately. The research design is the tool for making these decisions.

Below are the key aspects of the decision-making process:

- Data type required for research

- Research resources

- Participants required for research

- Hypothesis based upon research question(s)

- Data analysis methodologies

- Variables (Independent, dependent, and confounding)

- The location and timescale for collecting the data

- The time period required for research

The research design provides the strategy of investigation for your project. It also defines the parameters and criteria used to compile the data, evaluate the results, and draw conclusions.

Your project’s validity depends on the data collection and interpretation techniques. A strong research design is the basis of a strong dissertation, scientific paper, or research proposal.

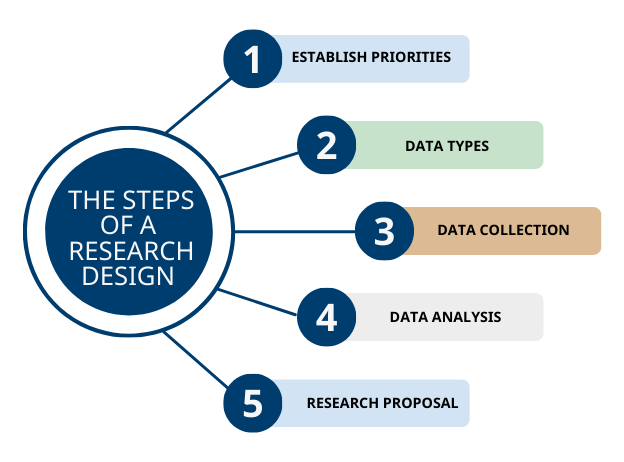

Step 1: Establish Priorities for Research Design

Before conducting any research study, you must address an important question: “how to create a research design.”

The research design depends on the researcher’s priorities and choices, because every research study has different priorities. For a complex research study involving multiple methods, you may choose to have more than one research design.

Multimethodology, or multimethod research, uses more than one data collection method or research method within a single study or a set of related studies.

If one research design is weak in one area, then another research design can cover that weakness. For instance, a dissertation analyzing different situations or cases will have more than one research design.

For example:

- Experimental research involves experimental investigation and laboratory work, but it may not accurately reflect real-world conditions.

- Quantitative research is good for the statistical part of the project, but it may not provide an in-depth understanding of the topic.

- Also, correlational research will not provide experimental results because it is a technique that assesses the statistical relationship between two variables.
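To make the last point concrete, here is a minimal sketch of how the statistical relationship between two variables can be quantified with the Pearson correlation coefficient. The function name `pearson_r` and the data (study hours and exam scores) are hypothetical and chosen only for illustration. A coefficient near 1 or -1 indicates a strong linear relationship, but it does not show that one variable causes the other.

```python
import math


def pearson_r(xs, ys):
    """Return the Pearson correlation coefficient of two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length (at least 2 values)")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    # Sum of products of deviations (covariance numerator).
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    # Sums of squared deviations for each variable.
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined when a variable is constant")
    return cov / math.sqrt(var_x * var_y)


# Hypothetical data: weekly study hours and exam scores for five students.
study_hours = [2, 4, 6, 8, 10]
exam_scores = [55, 60, 70, 72, 85]

r = pearson_r(study_hours, exam_scores)
print(f"r = {r:.3f}")
```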

While scientific considerations are a fundamental aspect of the research design, it is equally important that the researcher think practically before deciding on its structure. Here are some questions to consider:

- Do you have enough time to gather data and complete the write-up?

- Will you be able to collect the necessary data by interviewing a specific person or visiting a specific location?

- Do you have in-depth knowledge of the different statistical analysis and data collection techniques needed to address the research questions or test the hypothesis?

If you think that the chosen research design cannot answer the research questions properly, you can refine your research questions to gain better insight.

Step 2: Data Type you Need for Research

Decide on the type of data you need for your research. The type of data you need to collect depends on your research questions or research hypothesis. The data used to answer the research questions can be classified in two ways:

Primary vs. secondary data

Qualitative vs. quantitative data

See also: Research methods, design, and analysis.


Step 3: Data Collection Techniques

Once you have selected the type of data needed to answer your research question, you need to decide where and how to collect it.

It is time to determine the research method you will use to address the research problem. Research methods comprise the procedures, techniques, materials, and tools used for the study.

For instance, a dissertation research design specifies the resources and data collection techniques and helps establish your dissertation’s structure.

The following table shows the characteristics of the most popularly employed research methods.

Research Methods

Step 4: Procedure of Data Analysis

Using the correct data and statistical analysis technique is necessary for the validity of your research. Therefore, you need to be certain about the data type that would best address the research problem. Choosing an appropriate analysis method is the final step of the research design. Analysis methods fall into two main categories:

Quantitative Data Analysis

Quantitative data analysis involves analyzing numerical data with the help of applications such as SPSS, Stata, Excel, and OriginLab.

This data analysis strategy examines measures such as spectrum, frequencies, and averages. The research question and the hypothesis must be established first in order to identify the variables to test.
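As a small illustration of the frequencies and averages mentioned above, the following sketch summarizes a set of numerical responses using only the Python standard library. The response values are hypothetical. The same summary could equally be produced in SPSS, Stata, or Excel.

```python
from collections import Counter
from statistics import mean, median

# Hypothetical survey responses on a 1-5 satisfaction scale.
responses = [4, 5, 3, 4, 2, 5, 4, 3, 4, 1]

frequencies = Counter(responses)  # how often each value occurs
average = mean(responses)         # arithmetic mean of all responses
middle = median(responses)        # middle value of the sorted responses

# Print the frequency table in ascending order of the response value.
for value in sorted(frequencies):
    share = frequencies[value] / len(responses)
    print(f"{value}: {frequencies[value]} responses ({share:.0%})")

print(f"mean = {average:.2f}, median = {middle}")
```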

Qualitative Data Analysis

Qualitative data analysis of figures, themes, and words allows for flexibility and the researcher’s subjective opinions. This means that the researcher’s primary focus will be interpreting patterns, tendencies, and accounts and understanding the implications and social framework.

You should be clear about your research objectives before starting to analyze the data. For example, ask yourself whether you only need to explain respondents’ experiences and insights, or whether you also need to evaluate their responses with reference to a certain social framework.

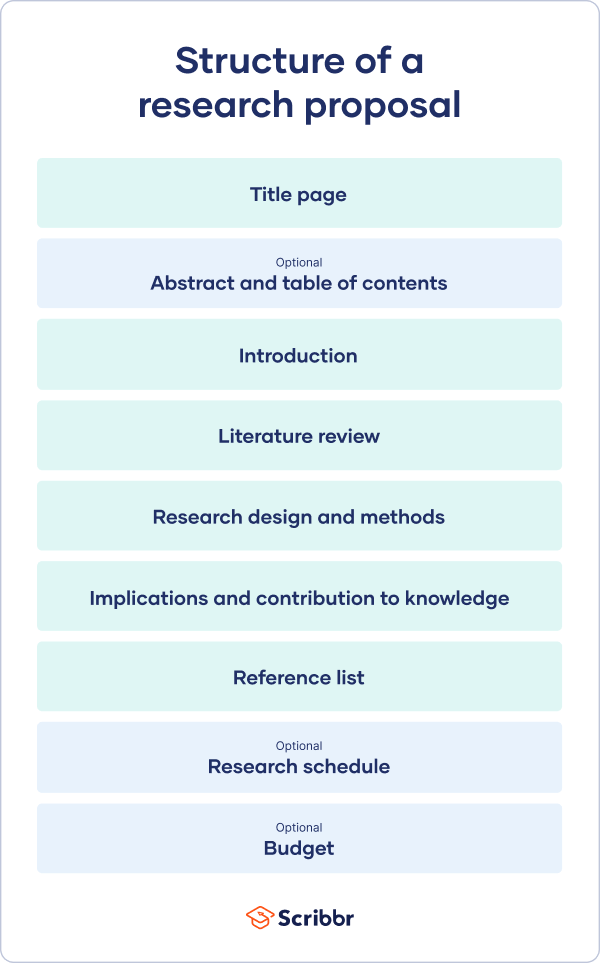

Step 5: Write your Research Proposal

The research design is an important component of a research proposal because it plans the project’s execution. You can share it with your supervisor, who will evaluate the feasibility of the project and of its expected results and conclusion.

Read our guidelines to write a research proposal if you have already formulated your research design. The research proposal is written in the future tense because you are writing your proposal before conducting research.

The research methodology or research design, on the other hand, is generally written in the past tense.

How to Write a Research Design – Conclusion

A research design is the plan, structure, and strategy of investigation conceived to answer the research question and test the hypothesis. A dissertation research design can be classified by the type of data and the type of analysis.

The five steps described above explain how to write a research design. Follow them to formulate a sound research design for your dissertation.


Frequently Asked Questions

What is research design?

Research design is a systematic plan that guides the research process, outlining the methodology and procedures for collecting and analysing data. It determines the structure of the study, ensuring the research question is answered effectively, reliably, and validly. It serves as the blueprint for the entire research project.

How to write a research design?

To write a research design, define your research question, identify the research method (qualitative, quantitative, or mixed), choose data collection techniques (e.g., surveys, interviews), determine the sample size and sampling method, outline data analysis procedures, and highlight potential limitations and ethical considerations for the study.

How to write the design section of a research paper?

In the design section of a research paper, describe the research methodology chosen and justify its selection. Outline the data collection methods, participants or samples, instruments used, and procedures followed. Detail any experimental controls, if applicable. Ensure clarity and precision to enable replication of the study by other researchers.

How to write a research design in methodology?

To write a research design in methodology, clearly outline the research strategy (e.g., experimental, survey, case study). Describe the sampling technique, participants, and data collection methods. Detail the procedures for data collection and analysis. Justify choices by linking them to research objectives, addressing reliability and validity.


Research Methods Guide: Research Design & Method


FAQ: Research Design & Method

What is the difference between Research Design and Research Method?

Research design is a plan to answer your research question. A research method is a strategy used to implement that plan. Research design and methods are different but closely related, because good research design ensures that the data you obtain will help you answer your research question more effectively.

Which research method should I choose?

It depends on your research goal and on what (and whom) you want to study. Let's say you are interested in studying what makes people happy, or why some students are more conscious about recycling on campus. To answer these questions, you need to decide how to collect your data. The most frequently used methods include:

- Observation / Participant Observation

- Focus Groups

- Experiments

- Secondary Data Analysis / Archival Study

- Mixed Methods (combination of some of the above)

One particular method could be better suited to your research goal than others, because the data you collect from different methods will be different in quality and quantity. For instance, surveys are usually designed to produce relatively short answers, rather than the extensive responses expected in qualitative interviews.

What other factors should I consider when choosing one method over another?

The time needed for data collection and analysis is one factor to consider. Observation and interviews, the so-called qualitative approaches, help you collect richer information, but they take time. A survey helps you collect more data quickly, yet it may lack detail. So you will need to weigh the time you have for research against the strengths and weaknesses of each method (e.g., qualitative vs. quantitative).


- Last Updated: Aug 21, 2023 10:42 AM


Research Design and Methodology

Submitted: 23 January 2019 Reviewed: 08 March 2019 Published: 07 August 2019

DOI: 10.5772/intechopen.85731


Edited by Evon Abu-Taieh, Abdelkrim El Mouatasim and Issam H. Al Hadid


Several approaches are used in this research design. The purpose of this chapter is to design the methodology of the research approach using mixed research techniques. The research approach also guides the researcher in arriving at the research findings. In this chapter, the general design of the research and the methods used for data collection are explained in detail. The chapter has three main parts. The first part outlines the dissertation design. The second part discusses qualitative and quantitative data collection methods. The last part illustrates the general research framework. The purpose of this section is to indicate how the research was conducted throughout the study period.

- research design

- methodology

- data sources

Author Information

Kassu Jilcha Sileyew*

- School of Mechanical and Industrial Engineering, Addis Ababa Institute of Technology, Addis Ababa University, Addis Ababa, Ethiopia

*Address all correspondence to: [email protected]

1. Introduction

Research methodology is the path along which researchers conduct their research. It shows how they formulate the problem and objective and how they present the results obtained from the data collected during the study period. This research design and methodology chapter also shows how the research outcome will be obtained in line with the objective of the study. The chapter therefore discusses the research methods used during the research process, from the research strategy to the dissemination of results.

Specifically, the author outlines the research strategy, research design, and research methodology; the study area; the data sources (primary and secondary); population considerations and sample size determination (for the questionnaires and for the workplace site exposure measurements); the primary data collection methods (workplace site observation, desk review, questionnaires, expert opinion, workplace site exposure measurement, and the pretest of the data collection tools); the secondary data collection methods; the methods of data analysis (quantitative and qualitative) and the data analysis software; the reliability and validity analysis of the quantitative data; data quality management; inclusion criteria; ethical considerations; and the dissemination and utilization of the results.

To satisfy the objectives of the study, both qualitative and quantitative research methods were adopted. The study used this mixed strategy because data were obtained from all aspects of the data sources during the study period. The purpose of this methodology is therefore to satisfy the research plan and target devised by the researcher.

2. Research design

The research design is intended to provide an appropriate framework for a study. A very significant decision in the research design process is the choice of research approach, since it determines how relevant information for the study will be obtained; however, the research design process involves many interrelated decisions [1].

This study employed mixed methods. The first part of the study consisted of a series of well-structured questionnaires (for management, employees’ representatives, and industry technicians) and semi-structured interviews with key stakeholders (government bodies, ministries, and industries) in participating organizations. In addition, employees were interviewed to learn how they feel about the safety and health of their workplace, and field observation was undertaken at the selected industrial sites.

Hence, this study employs a descriptive research design to establish the effects of the occupational safety and health management system on employee health, safety, and property damage in selected manufacturing industries. Saunders et al. [2] and Miller [3] state that descriptive research portrays an accurate profile of persons, events, or situations. This design offers the researchers a profile of the relevant aspects of the phenomena of interest from an individual, organizational, and industry-oriented perspective. It therefore enabled the researchers to gather data from a wide range of respondents on the impact of safety and health on manufacturing industries in Ethiopia, which helped in analyzing the responses on how it affects workplace safety and health in those industries. The overall research design and flow process are depicted in Figure 1.

Figure 1. Research methods and processes (author design).

3. Research methodology

To address the key research objectives, this research used both qualitative and quantitative methods and a combination of primary and secondary sources. The qualitative data support the quantitative data analysis and results. The results are triangulated, since the researcher used both qualitative and quantitative data in the analysis. The study area, data sources, and sampling techniques are discussed in this section.

3.1 The study area

According to Fraenkel and Warren [4], a population is the complete set of individuals (subjects or events) with common characteristics in which the researcher is interested. The study population was determined by random sampling. Data were collected from March 7, 2015 to December 10, 2016 from selected manufacturing industries in and around Addis Ababa city. The manufacturing companies were selected based on their number of employees, year of establishment, prevailing potential for accidents, and type of manufacturing industry, although it was difficult to satisfy all criteria.

3.2 Data sources

3.2.1 Primary data sources

Primary data were obtained from the original source of information. Primary data are more reliable and give greater confidence in decision-making, because the analysis is in direct contact with the occurrence of the events. The primary data sources were the industries’ working environment (through observation, pictures, and photographs) and industry employees, both management and lower-level workers (through interviews, questionnaires, and discussions).

3.2.2 Secondary data

A desk review was conducted to collect data from various secondary sources. These include reports and project documents from each manufacturing sector (mainly at the medium and large levels). Secondary data were obtained from the literature on OSH, and the remaining data came from the companies’ manuals, reports, and management documents, which were included in the desk review. Reputable journals, books, articles, periodicals, proceedings, magazines, newsletters, newspapers, websites, and other sources on the manufacturing industrial sectors were considered. Data from existing working documents, manuals, procedures, reports, statistical data, policies, regulations, and standards were also taken into account in the review.

In general, the desk review for this study was completed and then refined and modified based on the manuals and documents obtained from the selected companies.

4. Population and sample size

4.1 Population

The study population consisted of employees of manufacturing industries in and around Addis Ababa city, where the most representative manufacturing industrial clusters are found. To select a representative population of the manufacturing sector, industry types with a higher potential for accidents were considered, using random and purposive sampling. The population was drawn from the textile, leather, metal, chemical, and food manufacturing industries. A total of 189 industries from the government’s priority areas responded to the questionnaire survey. Random sampling and disproportionate methods were used: 80 respondents came from wood, metal, and iron works; 30 from food, beverage, and tobacco products; 50 from leather, textile, and garments; 20 from chemicals and chemical products; and 9 from the remaining 9 clusters of manufacturing industries.

4.2 Questionnaire sample size determination

Simple random sampling and purposive sampling methods were used to select the representative manufacturing industries and respondents for the study. Simple random sampling ensures that each member of the population has an equal chance of being selected, although the chance of obtaining a response may differ from this depending on the data analysis justification. A sample size determination procedure was used to obtain optimum and reasonable information. Both probability (simple random sampling) and nonprobability (convenience, quota, purposive, and judgmental) sampling methods were used, because the nature of the industries varies and the characteristics of the data sources permitted the researchers to follow multiple methods. This helps the analysis to triangulate the data obtained and increases the reliability of the research outcome and the decisions based on it. The selection criteria included the year the company was established and its time in operation, the number and proportion of employees, the type of ownership (government or private), the type of manufacturing industry or production, the types of resources used at work, and the company’s location in and around the city.
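The probability part of this procedure can be sketched in a few lines. The example below draws a simple random sample without replacement, so that every unit in the sampling frame has the same chance of selection. The sampling frame, the function name `simple_random_sample`, and the sample size of 10 are hypothetical; the study does not publish its sampling frame or the tool it used for the draw.

```python
import random


def simple_random_sample(population, k, seed=None):
    """Draw k distinct units from population, each with equal selection probability."""
    if k > len(population):
        raise ValueError("sample size cannot exceed the population size")
    rng = random.Random(seed)  # a fixed seed makes the draw reproducible
    return rng.sample(population, k)


# Hypothetical sampling frame of 500 registered companies.
sampling_frame = [f"company_{i:03d}" for i in range(1, 501)]

selected = simple_random_sample(sampling_frame, k=10, seed=42)
print(selected)
```

Recording the seed alongside the results lets another researcher reproduce the same draw, which supports the replication goal of a methodology chapter.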

The sample size was determined using the formula of Daniel [5] and Cochran [6] for an unknown population size, given in Eq. (1):

$$n = \frac{Z^{2} P (1 - P)}{d^{2}} \qquad (1)$$

where n = sample size, Z = statistic for a level of confidence, P = expected prevalence or proportion (as a fraction of one; if 50%, P = 0.5), and d = precision (as a fraction of one; if 6%, d = 0.06). For the conventional 95% level of confidence, the Z value is 1.96. In this study, the investigators present their results with 95% confidence intervals (CI).

The expected sample size was 267 at a margin of error of 6% and a 95% confidence level. However, only 189 responses were used for the analysis, after rejecting responses with many missing values. The actual data collection therefore achieved a response rate of 71%. The sample of 267 was assumed to be satisfactory and representative for the data analysis.
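The figures in this section can be checked with a short calculation. The sketch below assumes the standard Cochran form for an unknown population size, n = Z²P(1 − P)/d², with the values stated in the text (Z = 1.96, P = 0.5, d = 0.06). The function name `cochran_sample_size` is hypothetical. Rounding up is used because a sample cannot contain a fraction of a respondent.

```python
import math


def cochran_sample_size(z, p, d):
    """Sample size for an unknown population size: n = z^2 * p * (1 - p) / d^2."""
    if not 0 < p < 1:
        raise ValueError("p must be a proportion strictly between 0 and 1")
    if d <= 0:
        raise ValueError("d (precision) must be positive")
    return math.ceil(z ** 2 * p * (1 - p) / d ** 2)


# Values stated in the text: 95% confidence, 50% expected proportion, 6% precision.
expected_n = cochran_sample_size(z=1.96, p=0.5, d=0.06)

# Usable responses reported in the text.
usable_responses = 189
response_rate = usable_responses / expected_n

print(f"expected sample size: {expected_n}")  # 267
print(f"response rate: {response_rate:.0%}")  # 71%
```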

4.3 Workplace site exposure measurement sample determination

The sample size for the experimental exposure measurements of the physical work environment was based on the physical data prepared for the questionnaires and respondents. Factors with positive responses were considered for exposure measurement as physical environment factors that affect health and cause disease, such as noise intensity, light intensity, pressure/stress, vibration, temperature (coldness or hotness), and dust particles, at 20 workplace sites. The sites were selected using random sampling in combination with the purposive method. The exposure factors were measured in collaboration with the Addis Ababa City Administration and Oromia Bureau of Labour and Social Affair (AACBOLSA), which also provided some of the measuring instruments.

5. Data collection methods

Data collection focused on the following basic techniques. These included secondary and primary data collection covering both qualitative and quantitative data, as defined in the previous section. The data collection mechanisms were devised and prepared with their proper procedures.

5.1 Primary data collection methods

Primary data sources are qualitative and quantitative. The qualitative sources are field observation, interviews, and informal discussions, while the quantitative sources are survey questionnaires and interview questions. The next sections explain how the data were obtained from the primary sources.

5.1.1 Workplace site observation data collection

Observation is an important aspect of science. It is tightly connected to data collection, for which there are different sources: documentation, archival records, interviews, direct observations, and participant observations. Observational research findings are considered strong in validity because the researcher is able to collect in-depth information about a particular behavior. In this dissertation, the researcher used observation as one tool for collecting information and data, both before the questionnaire design and after the start of the research. The researcher made more than 20 specific observations of manufacturing industries in the study areas. These observations gave a deeper understanding of the working environment, the different sections of the production system, and OSH practices.

5.1.2 Data collection through interview

An interview here is a loosely structured, qualitative, in-depth conversation with people who are considered to be particularly knowledgeable about the topic of interest. A semi-structured interview is usually conducted face to face, which permits the researcher to seek new insights, ask questions, and assess phenomena from different perspectives. The interviews let the researcher understand in depth the factors influencing the present working environment and their consequences. They also provided opportunities to refine the data collection effort and to examine specialized systems or processes. Interviews were used when written records or published documents were limited, or when the researcher wanted to triangulate the data obtained from other primary and secondary sources.

This dissertation therefore also takes a qualitative approach through interviews. The advantage of interviews as a method is that they allow respondents to raise issues that the interviewer may not have expected. All interviews with employees, management, and technicians were conducted face to face at the workplace by the corresponding researcher. All interviews were recorded and transcribed.

5.1.3 Data collection through questionnaires

The main tool for gaining primary information in practical research is questionnaires, due to the fact that the researcher can decide on the sample and the types of questions to be asked [ 2 ].

In this dissertation, each respondent was asked to reply to an identical list of questions, presented in mixed order to prevent bias. Initially, the questionnaire items were coded and mixed across specific topics using a uniform structure. The questionnaire consequently produced the valuable data required to achieve the dissertation objectives.

The questionnaires were based on a five-point Likert-type scale. Respondents rated each statement from 1 = “strongly disagree” to 5 = “strongly agree.” The responses were summed to produce a score for each measure.
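The scoring step described here can be sketched as follows. Each respondent's answers to the statements of one measure are validated against the 1 to 5 range and then summed. The respondent labels, the four-item measure, and the function name `likert_score` are hypothetical; the study does not list its items.

```python
LIKERT_MIN, LIKERT_MAX = 1, 5  # 1 = "strongly disagree", 5 = "strongly agree"


def likert_score(item_responses):
    """Sum the responses to the items of one measure after validating each value."""
    for response in item_responses:
        if not (isinstance(response, int) and LIKERT_MIN <= response <= LIKERT_MAX):
            raise ValueError(f"invalid Likert response: {response!r}")
    return sum(item_responses)


# Hypothetical answers of two respondents to a four-item measure.
answers = {
    "respondent_a": [4, 5, 3, 4],
    "respondent_b": [2, 1, 3, 2],
}

scores = {name: likert_score(items) for name, items in answers.items()}
print(scores)
```

Rejecting out-of-range values before summing mirrors the data cleaning step in which responses with missing or invalid values are set aside.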

5.1.4 Data obtained from experts’ opinion

Data were also obtained from experts’ opinions on the comparison of knowledge, management, collaboration, and technology utilization, including their sub-factors. These data were used for prioritization and decision-making on the factors that improve OSH. The factors were prioritized using the Saaty scale (1–9), and the ratings were then converted to fuzzy set values taken from previous research using triangular fuzzy sets [7].
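The text does not reproduce the conversion table taken from [7], so the following is only an illustrative sketch under one common assumption: a crisp Saaty rating a is mapped to the triangular fuzzy number (a − 1, a, a + 1), clipped to the 1–9 range, and an inverse judgment is represented by the reciprocal (1/u, 1/m, 1/l). The function names are hypothetical, and the study's actual values may differ.

```python
def to_triangular_fuzzy(saaty_value):
    """Map a crisp Saaty rating (1-9) to a triangular fuzzy number (lower, middle, upper).

    Assumed convention: (a - 1, a, a + 1), clipped to the 1-9 scale.
    """
    if not (isinstance(saaty_value, int) and 1 <= saaty_value <= 9):
        raise ValueError("Saaty ratings are integers from 1 to 9")
    lower = max(1, saaty_value - 1)
    upper = min(9, saaty_value + 1)
    return (lower, saaty_value, upper)


def reciprocal(tfn):
    """Return the reciprocal of a triangular fuzzy number, used for inverse judgments."""
    lower, middle, upper = tfn
    return (1 / upper, 1 / middle, 1 / lower)


# Hypothetical expert judgment: factor A is moderately more important than factor B.
a_over_b = to_triangular_fuzzy(3)
b_over_a = reciprocal(a_over_b)
print(a_over_b, b_over_a)
```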

5.1.5 Workplace site exposure measurement

The researcher measured the workplace environment for dust, vibration, heat, pressure, light, and noise to determine the level of each variable. The planned and actual coverage of the primary data sources are compared in Table 1.

Table 1. Planned versus actual coverage of the survey.

The response rate for the proposed data source was good, and the pilot test also proved the reliability of questionnaires. Interview/discussion resulted in 87% of responses among the respondents; the survey questionnaire response rate obtained was 71%, and the field observation response rate was 90% for the whole data analysis process. Hence, the data organization quality level has not been compromised.

This response rate is considered representative for studies of organizations. A response rate of 30% is considered acceptable [8], and Saunders et al. [2] argued that a response rate of 20% is acceptable for a scale-based questionnaire. A low response rate should not discourage researchers, because a great deal of published research also achieves low response rates. Hence, the response rate of this study is acceptable and very good for the purpose of meeting the study objectives.

5.1.6 Data collection tool pretest

The questionnaires, interviews, and tools were pretested to check whether their content was valid in terms of the respondents’ understanding. The pretest addressed content validity (whether the questions cover the target without excluding important points), internal validity (whether the questions raised answer the outcomes the researchers are targeting), and external validity (whether the result can be generalized from the survey sample to the whole population). These were confirmed by the pilot test before the main data collection began. Following the feedback process, a few minor changes were made to the originally designed data collection tools. The pilot test of the questionnaire was carried out on a sample of 10 selected randomly from the target sectors and experts.

5.2 Secondary data collection methods

The secondary data refers to data that was collected by someone other than the user. This data source gives insights of the research area of the current state-of-the-art method. It also makes some sort of research gap that needs to be filled by the researcher. This secondary data sources could be internal and external data sources of information that may cover a wide range of areas.

Literature/desk review and industry documents and reports: To achieve the dissertation’s objectives, the researcher conducted an extensive review of documents and company reports, both online and offline. From a methodological point of view, literature reviews can be comprehended as content analysis, where quantitative and qualitative aspects are mixed to assess structural (descriptive) as well as content criteria.

A literature search was conducted using database sources such as MEDLINE; Emerald; Taylor and Francis publications; EMBASE (medical literature); PsycINFO (psychological literature); Sociological Abstracts (sociological literature); accident prevention journals; US Statistics of Labor, European Safety and Health database; ABI Inform; Business Source Premier (business/management literature); EconLit (economic literature); Social Service Abstracts (social work and social service literature); and other related materials. The search strategy focused on articles or reports that measure one or more of the dimensions within the research OSH model framework. This search strategy was based on a framework and measurement filter strategy developed by the Consensus-Based Standards for the Selection of Health Measurement Instruments (COSMIN) group. Based on screening, articles unrelated to the research model and objectives were excluded. Prior to screening, the researcher (principal investigator) reviewed a sample of more than 2000 articles, websites, reports, and guidelines to determine whether they should be included for further review or rejected. Discrepancies were thoroughly identified and resolved before the review of the main group of more than 300 articles commenced. After excluding articles based on the title, keywords, and abstract, the remaining articles were reviewed in detail, and information was extracted on the instrument that was used to assess the dimension of research interest. A complete list of items was then collated for each research target or objective and reviewed to identify any missing elements.

6. Methods of data analysis

The data analysis follows the procedures listed in the following sections. The data analysis part answered the basic questions raised in the problem statement. The experiences of developed and developing countries on OSH in manufacturing industries were analyzed, discussed, compared and contrasted, and synthesized.

6.1 Quantitative data analysis

Quantitative data were obtained from the primary and secondary data sources discussed above in this chapter. The data were analyzed according to their type using Excel, SPSS 20.0, Office Word, and other tools. This analysis focuses on numerical/quantitative data.

Before analysis, the responses were coded. To make the questionnaire data easy to analyze, they were coded for entry into SPSS 20.0. This task involved identifying, classifying, and assigning a numeric or character symbol to the data, which was done in only one way, pre-coding [ 9 , 10 ]. In this study, all of the responses were pre-coded: each response was taken from a list of possible responses, and the number corresponding to the particular selection was recorded. This process was applied to every question that needed this treatment. Upon completion, the data were entered into a statistical analysis software package, SPSS version 20.0 on Windows 10, for the next steps.
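The pre-coding step described above can be illustrated with a minimal Python sketch. The study itself used SPSS 20.0; the codebook below (a five-point Likert scale with codes 1 to 5), the item labels K1, K2 and M1, and the function names are assumptions made only for illustration and do not come from the study.

```python
# Hypothetical codebook: every answer option is given a numeric code
# before data collection (pre-coding). The labels and codes are assumed.
LIKERT_CODES = {
    "strongly disagree": 1,
    "disagree": 2,
    "neutral": 3,
    "agree": 4,
    "strongly agree": 5,
}

MISSING = None  # stands in for blank or unrecognized answers


def precode(response):
    """Return the numeric code for one raw questionnaire answer."""
    key = response.strip().lower()
    return LIKERT_CODES.get(key, MISSING)


def precode_sheet(answers):
    """Code every answer on one completed questionnaire, keyed by item label."""
    return {item: precode(text) for item, text in answers.items()}


# One hypothetical completed questionnaire with three items.
sheet = {"K1": "Agree", "K2": " strongly disagree ", "M1": ""}
coded = precode_sheet(sheet)
print(coded)
```

Keeping the codebook in one place means the same mapping is applied to every questionnaire, and blank answers are flagged rather than silently given a value.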

In the data analysis, the data were explored with descriptive statistics and graphical analysis. The analysis included exploring the relationships between variables and comparing groups to see how they affect each other. This was done using cross tabulation/chi-square tests, correlation, factor analysis, and nonparametric statistics.
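As a rough illustration of the cross tabulation/chi-square step, the sketch below builds a contingency table from two categorical variables and computes the Pearson chi-square statistic using only the Python standard library. It is not the SPSS procedure used in the study, and the variables (`training`, `injury`) and their values are invented for the example.

```python
from collections import Counter


def crosstab(rows, cols):
    """Count how often each (row category, column category) pair occurs."""
    counts = Counter(zip(rows, cols))
    row_levels = sorted(set(rows))
    col_levels = sorted(set(cols))
    table = [[counts[(r, c)] for c in col_levels] for r in row_levels]
    return row_levels, col_levels, table


def chi_square(table):
    """Pearson chi-square statistic and degrees of freedom for a contingency table."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)
    stat = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            # Expected count under independence of the two variables.
            expected = row_totals[i] * col_totals[j] / n
            stat += (observed - expected) ** 2 / expected
    dof = (len(table) - 1) * (len(table[0]) - 1)
    return stat, dof


# Hypothetical data: safety training received vs. injury reported.
training = ["yes"] * 30 + ["no"] * 30
injury = ["no"] * 20 + ["yes"] * 10 + ["no"] * 10 + ["yes"] * 20

row_levels, col_levels, table = crosstab(training, injury)
stat, dof = chi_square(table)
print(row_levels, col_levels, table)
print(round(stat, 3), dof)
```

The statistic is then compared with the chi-square critical value for the given degrees of freedom (3.841 for one degree of freedom at the 5% level) to judge whether the two variables appear to be associated.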

6.2 Qualitative data analysis

Qualitative data analysis was used for triangulation of the quantitative data analysis. The interview, observation, and report records were used to support the findings. The analysis has been incorporated into the discussion of the quantitative results in the data analysis parts.

6.3 Data analysis software

The data were entered and analyzed using SPSS 20.0 on Windows 10. The SPSS-supported analysis contributed much to the findings, as well as to the validation of the data and the correctness of the results. The software was used to analyze and compare the results of the different variables used in the research questionnaires. Excel was also used to draw figures and perform some calculations.

7. The reliability and validity analysis of the quantitative data

7.1 Reliability of data

The reliability of a measurement indicates the extent to which it is without bias (error free) and hence ensures consistent measurement across time and across the various items in the instrument [ 8 ]. In the reliability analysis, the stability and consistency of the data were checked, and the researcher checked the accuracy and precision of the measurement procedure. Reliability has numerous definitions and approaches, but in most settings the concept comes down to consistency [ 8 ]. A measurement fulfills the requirements of reliability when it produces consistent results during the data analysis procedure. Reliability was determined through Cronbach’s alpha, as shown in Table 2 .

Table 2. Internal consistency and reliability test of questionnaire items.

K stands for knowledge; M, management; T, technology; C, collaboration; P, policy, standards, and regulation; H, hazards and accident conditions; PPE, personal protective equipment.

7.2 Reliability analysis

Cronbach’s alpha is a measure of internal consistency, i.e., how closely related a set of items are as a group [ 11 ]. It is considered to be a measure of scale reliability, and internal consistency is most often assessed using its value. A reliability coefficient of 0.70 or above is considered “acceptable” in most research situations [ 12 ]. In this study, the internal consistency of the Likert-scale measurements was found to be similar after deleting 13 items; the reliability coefficient for the 76 items was 0.964, and the coefficients for the individual groupings are shown in Table 2 . Table 2 shows that the reliability of the seven major instruments falls in the acceptable range for this research.
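To make the coefficient concrete, the sketch below computes Cronbach’s alpha from raw item scores using the standard formula, alpha = k/(k − 1) × (1 − sum of item variances / variance of total scores), where k is the number of items. The study obtained its values from SPSS; the five respondents and three items here are invented data, and the function name is an assumption.

```python
from statistics import variance


def cronbach_alpha(scores):
    """Cronbach's alpha for a list of respondents, each a list of item scores."""
    k = len(scores[0])                       # number of items
    items = list(zip(*scores))               # one tuple of scores per item
    item_vars = sum(variance(item) for item in items)
    total_var = variance([sum(resp) for resp in scores])
    return (k / (k - 1)) * (1 - item_vars / total_var)


# Hypothetical Likert-scale answers: 5 respondents x 3 items.
scores = [
    [4, 5, 4],
    [3, 3, 3],
    [5, 5, 4],
    [2, 3, 2],
    [4, 4, 5],
]
alpha = cronbach_alpha(scores)
print(round(alpha, 3))
```

With these invented scores the coefficient is about 0.91, which would fall above the 0.70 threshold cited above.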

7.3 Validity

Face validity, as defined by Babbie [ 13 ], is an indicator that makes a measure seem a reasonable measure of some variable; it is the subjective judgment that the instrument measures what it intends to measure in terms of relevance [ 14 ]. Thus, when developing the instruments in this study, the researcher ensured that uncertainties were eliminated by using appropriate words and concepts in order to enhance clarity and general suitability [ 14 ]. Furthermore, the researcher submitted the instruments to the research supervisor and the joint supervisor, who are both occupational health experts, to ensure the validity of the measuring instruments and determine whether the instruments could be considered valid at face value.

In this study, the researcher was guided by reviewed literature related to compliance with the occupational health and safety conditions and data collection methods before he could develop the measuring instruments. In addition, the pretest study that was conducted prior to the main study assisted the researcher to avoid uncertainties of the contents in the data collection measuring instruments. A thorough inspection of the measuring instruments by the statistician and the researcher’s supervisor and joint experts, to ensure that all concepts pertaining to the study were included, ensured that the instruments were enriched.

8. Data quality management

Guidance was given to the data collectors on how to approach companies, and many of the questionnaires were distributed through MSc students at Addis Ababa Institute of Technology (AAiT) and experts with experience in the manufacturing industries. This made the data quality reliable, as it was continually discussed with them. The questionnaire was pretested on 10 workers to assure the quality of the data and to improve the data collection tools. Data collection was supervised to understand how the data collectors were handling the questionnaire, and each completed questionnaire was checked for completeness, accuracy, clarity, and consistency on a daily basis, either face-to-face or by phone/email. Data judged to be of poor quality were rejected during screening. Of the 267 questionnaires planned, 189 were returned. Finally, the data were analyzed by the principal investigator.

9. Inclusion criteria

The data were collected from company representatives with knowledge of OSH. Articles written in English and Amharic were included in this study. Articles retrieved from the databases were included if they addressed OSH topics such as intervention methods, methods of accident identification, types of occupational injuries/diseases, and the impact of occupational accidents and diseases on company productivity and costs, and if they used at least one form of feedback mechanism. No specific time period was chosen, in order to access all available published papers. Questionnaire statements that duplicated one another were excluded from the data analysis.

10. Ethical consideration

Ethical clearance was obtained from the School of Mechanical and Industrial Engineering, Institute of Technology, Addis Ababa University. Official letters were written from the School of Mechanical and Industrial Engineering to the respective manufacturing industries. The purpose of the study was explained to the study subjects. The study subjects were told that the information they provided would be kept confidential and that their identities would not be revealed in association with the information they provided. Informed consent was secured from each participant. Where the assessment finds a bad working environment, feedback will be given to all manufacturing industries involved in the study. There is a plan to give a copy of the result to the respective manufacturing industries’ and ministries’ offices. To protect the respondents’ privacy, their responses were not individually analyzed or included in the report.

11. Dissemination and utilization of the result

The result of this study will be presented to the Addis Ababa University, AAiT, School of Mechanical and Industrial Engineering. It will also be communicated to the Ethiopian manufacturing industries, Ministry of Labor and Social Affairs, Ministry of Industry, and Ministry of Health, from where the data was collected. The result will also be made available through publication and online presentation in Google Scholar. To this end, about five articles have been published and disseminated worldwide.

12. Conclusion

The research methodology and design described the overall flow of the research process for the given study, including the data sources and data collection methods used. The overall research strategies and framework are indicated in this research process, from problem formulation to problem validation, including all the parameters. The chapter lays a foundation for how a research methodology is devised and framed. It can therefore serve researchers as a sample or model for the research data collection and process, from the problem statement to the research findings. In particular, this research flow helps researchers who are new to the research environment and methodology.

Conflict of interest

There is no “conflict of interest.”

- 1. Aaker A, Kumar VD, George S. Marketing Research. New York: John Wiley & Sons Inc; 2000

- 2. Saunders M, Lewis P, Thornhill A. Research Methods for Business Students. 5th ed. Edinburgh Gate: Pearson Education Limited; 2009

- 3. Miller P. Motivation in the Workplace. Work and Organizational Psychology. Oxford: Blackwell Publishers; 1991

- 4. Fraenkel FJ, Warren NE. How to Design and Evaluate Research in Education. 4th ed. New York: McGraw-Hill; 2002

- 5. Daniel WW. Biostatistics: A Foundation for Analysis in the Health Sciences. 7th ed. New York: John Wiley & Sons; 1999

- 6. Cochran WG. Sampling Techniques. 3rd ed. New York: John Wiley & Sons; 1977

- 7. Saaty TL. The Analytical Hierarchy Process. Pittsburg: PWS Publications; 1990

- 8. Sekaran U, Bougie R. Research Methods for Business: A Skill Building Approach. 5th ed. New Delhi: John Wiley & Sons, Ltd; 2010. pp. 1-468

- 9. Luck DJ, Rubin RS. Marketing Research. 7th ed. New Jersey: Prentice-Hall International; 1987

- 10. Wong TC. Marketing Research. Oxford, UK: Butterworth-Heinemann; 1999

- 11. Cronbach LJ. Coefficient alpha and the internal structure of tests. Psychometrika. 1951; 16 :297-334

- 12. Tavakol M, Dennick R. Making sense of Cronbach’s alpha. International Journal of Medical Education. 2011; 2 :53-55. DOI: 10.5116/ijme.4dfb.8dfd

- 13. Babbie E. The Practice of Social Research. 12th ed. Belmont, CA: Wadsworth; 2010

- 14. Polit DF, Beck CT. Generating and Assessing Evidence for Nursing Practice. 8th ed. Williams and Wilkins: Lippincott; 2008

© 2019 The Author(s). Licensee IntechOpen. This chapter is distributed under the terms of the Creative Commons Attribution 3.0 License , which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.


- Open access

- Published: 07 September 2020

A tutorial on methodological studies: the what, when, how and why

- Lawrence Mbuagbaw ORCID: orcid.org/0000-0001-5855-5461 1 , 2 , 3 ,

- Daeria O. Lawson 1 ,

- Livia Puljak 4 ,

- David B. Allison 5 &

- Lehana Thabane 1 , 2 , 6 , 7 , 8

BMC Medical Research Methodology volume 20, Article number: 226 (2020)


Methodological studies – studies that evaluate the design, analysis or reporting of other research-related reports – play an important role in health research. They help to highlight issues in the conduct of research with the aim of improving health research methodology, and ultimately reducing research waste.

We provide an overview of some of the key aspects of methodological studies such as what they are, and when, how and why they are done. We adopt a “frequently asked questions” format to facilitate reading this paper and provide multiple examples to help guide researchers interested in conducting methodological studies. Some of the topics addressed include: is it necessary to publish a study protocol? How to select relevant research reports and databases for a methodological study? What approaches to data extraction and statistical analysis should be considered when conducting a methodological study? What are potential threats to validity and is there a way to appraise the quality of methodological studies?

Appropriate reflection and application of basic principles of epidemiology and biostatistics are required in the design and analysis of methodological studies. This paper provides an introduction for further discussion about the conduct of methodological studies.


The field of meta-research (or research-on-research) has proliferated in recent years in response to issues with research quality and conduct [ 1 , 2 , 3 ]. As the name suggests, this field targets issues with research design, conduct, analysis and reporting. Various types of research reports are often examined as the unit of analysis in these studies (e.g. abstracts, full manuscripts, trial registry entries). Like many other novel fields of research, meta-research has seen a proliferation of use before the development of reporting guidance. For example, this was the case with randomized trials for which risk of bias tools and reporting guidelines were only developed much later – after many trials had been published and noted to have limitations [ 4 , 5 ]; and for systematic reviews as well [ 6 , 7 , 8 ]. However, in the absence of formal guidance, studies that report on research differ substantially in how they are named, conducted and reported [ 9 , 10 ]. This creates challenges in identifying, summarizing and comparing them. In this tutorial paper, we will use the term methodological study to refer to any study that reports on the design, conduct, analysis or reporting of primary or secondary research-related reports (such as trial registry entries and conference abstracts).

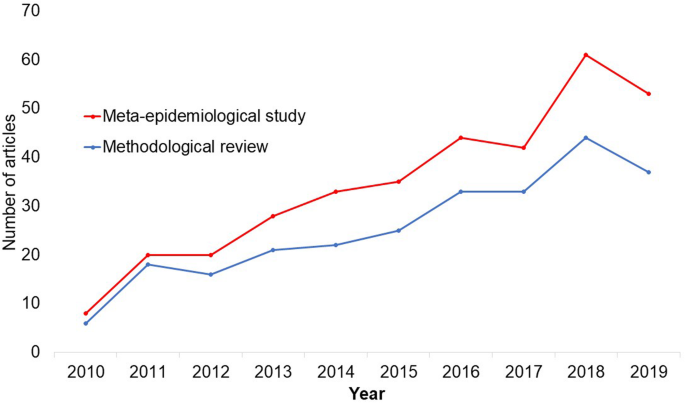

In the past 10 years, there has been an increase in the use of terms related to methodological studies (based on records retrieved with a keyword search [in the title and abstract] for “methodological review” and “meta-epidemiological study” in PubMed up to December 2019), suggesting that these studies may be appearing more frequently in the literature. See Fig. 1 .

Trends in the number of studies that mention “methodological review” or “meta-epidemiological study” in PubMed.

The methods used in many methodological studies have been borrowed from systematic and scoping reviews. This practice has influenced the direction of the field, with many methodological studies including searches of electronic databases, screening of records, duplicate data extraction and assessments of risk of bias in the included studies. However, the research questions posed in methodological studies do not always require the approaches listed above, and guidance is needed on when and how to apply these methods to a methodological study. Even though methodological studies can be conducted on qualitative or mixed methods research, this paper focuses on and draws examples exclusively from quantitative research.

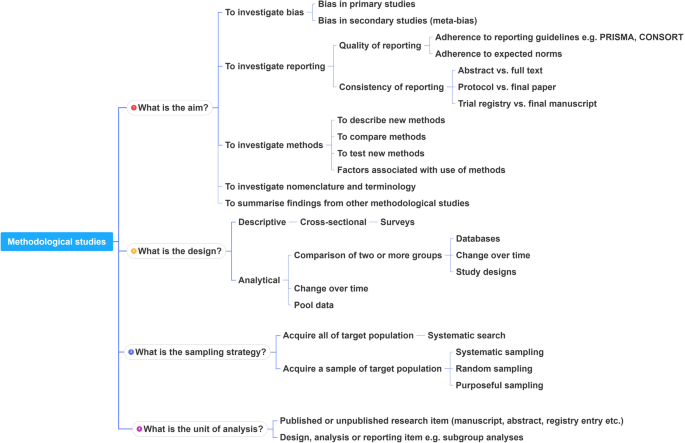

The objectives of this paper are to provide some insights on how to conduct methodological studies so that there is greater consistency between the research questions posed, and the design, analysis and reporting of findings. We provide multiple examples to illustrate concepts and a proposed framework for categorizing methodological studies in quantitative research.

What is a methodological study?

Any study that describes or analyzes methods (design, conduct, analysis or reporting) in published (or unpublished) literature is a methodological study. Consequently, the scope of methodological studies is quite extensive and includes, but is not limited to, topics as diverse as: research question formulation [ 11 ]; adherence to reporting guidelines [ 12 , 13 , 14 ] and consistency in reporting [ 15 ]; approaches to study analysis [ 16 ]; investigating the credibility of analyses [ 17 ]; and studies that synthesize these methodological studies [ 18 ]. While the nomenclature of methodological studies is not uniform, the intents and purposes of these studies remain fairly consistent – to describe or analyze methods in primary or secondary studies. As such, methodological studies may also be classified as a subtype of observational studies.

Parallel to this are experimental studies that compare different methods. Even though they play an important role in informing optimal research methods, experimental methodological studies are beyond the scope of this paper. Examples of such studies include the randomized trials by Buscemi et al., comparing single data extraction to double data extraction [ 19 ], and Carrasco-Labra et al., comparing approaches to presenting findings in Grading of Recommendations, Assessment, Development and Evaluations (GRADE) summary of findings tables [ 20 ]. In these studies, the unit of analysis is the person or groups of individuals applying the methods. We also direct readers to the Studies Within a Trial (SWAT) and Studies Within a Review (SWAR) programme operated through the Hub for Trials Methodology Research, for further reading as a potential useful resource for these types of experimental studies [ 21 ]. Lastly, this paper is not meant to inform the conduct of research using computational simulation and mathematical modeling for which some guidance already exists [ 22 ], or studies on the development of methods using consensus-based approaches.

When should we conduct a methodological study?

Methodological studies occupy a unique niche in health research that allows them to inform methodological advances. Methodological studies should also be conducted as precursors to reporting guideline development, as they provide an opportunity to understand current practices, and help to identify the need for guidance and gaps in methodological or reporting quality. For example, the development of the popular Preferred Reporting Items of Systematic reviews and Meta-Analyses (PRISMA) guidelines was preceded by methodological studies identifying poor reporting practices [ 23 , 24 ]. In these instances, after the reporting guidelines are published, methodological studies can also be used to monitor uptake of the guidelines.

These studies can also be conducted to inform the state of the art for design, analysis and reporting practices across different types of health research fields, with the aim of improving research practices, and preventing or reducing research waste. For example, Samaan et al. conducted a scoping review of adherence to different reporting guidelines in health care literature [ 18 ]. Methodological studies can also be used to determine the factors associated with reporting practices. For example, Abbade et al. investigated journal characteristics associated with the use of the Participants, Intervention, Comparison, Outcome, Timeframe (PICOT) format in framing research questions in trials of venous ulcer disease [ 11 ].

How often are methodological studies conducted?

There is no clear answer to this question. Based on a search of PubMed, the use of related terms (“methodological review” and “meta-epidemiological study”) – and therefore, the number of methodological studies – is on the rise. However, many other terms are used to describe methodological studies. There are also many studies that explore design, conduct, analysis or reporting of research reports, but that do not use any specific terms to describe or label their study design in terms of “methodology”. This diversity in nomenclature makes a census of methodological studies elusive. Appropriate terminology and key words for methodological studies are needed to facilitate improved accessibility for end-users.

Why do we conduct methodological studies?

Methodological studies provide information on the design, conduct, analysis or reporting of primary and secondary research and can be used to appraise quality, quantity, completeness, accuracy and consistency of health research. These issues can be explored in specific fields, journals, databases, geographical regions and time periods. For example, Areia et al. explored the quality of reporting of endoscopic diagnostic studies in gastroenterology [ 25 ]; Knol et al. investigated the reporting of p -values in baseline tables in randomized trials published in high impact journals [ 26 ]; Chen et al. describe adherence to the Consolidated Standards of Reporting Trials (CONSORT) statement in Chinese Journals [ 27 ]; and Hopewell et al. describe the effect of editors’ implementation of CONSORT guidelines on reporting of abstracts over time [ 28 ]. Methodological studies provide useful information to researchers, clinicians, editors, publishers and users of health literature. As a result, these studies have been a cornerstone of important methodological developments in the past two decades and have informed the development of many health research guidelines including the highly cited CONSORT statement [ 5 ].

Where can we find methodological studies?

Methodological studies can be found in most common biomedical bibliographic databases (e.g. Embase, MEDLINE, PubMed, Web of Science). However, the biggest caveat is that methodological studies are hard to identify in the literature due to the wide variety of names used and the lack of comprehensive databases dedicated to them. A handful can be found in the Cochrane Library as “Cochrane Methodology Reviews”, but these studies only cover methodological issues related to systematic reviews. Previous attempts to catalogue all empirical studies of methods used in reviews were abandoned 10 years ago [ 29 ]. In other databases, a variety of search terms may be applied with different levels of sensitivity and specificity.

Some frequently asked questions about methodological studies

In this section, we have outlined responses to questions that might help inform the conduct of methodological studies.

Q: How should I select research reports for my methodological study?

A: Selection of research reports for a methodological study depends on the research question and eligibility criteria. Once a clear research question is set and the nature of literature one desires to review is known, one can then begin the selection process. Selection may begin with a broad search, especially if the eligibility criteria are not apparent. For example, a methodological study of Cochrane Reviews of HIV would not require a complex search as all eligible studies can easily be retrieved from the Cochrane Library after checking a few boxes [ 30 ]. On the other hand, a methodological study of subgroup analyses in trials of gastrointestinal oncology would require a search to find such trials, and further screening to identify trials that conducted a subgroup analysis [ 31 ].

The strategies used for identifying participants in observational studies can apply here. One may use a systematic search to identify all eligible studies. If the number of eligible studies is unmanageable, a random sample of articles can be expected to provide comparable results if it is sufficiently large [ 32 ]. For example, Wilson et al. used a random sample of trials from the Cochrane Stroke Group’s Trial Register to investigate completeness of reporting [ 33 ]. It is possible that a simple random sample would lead to underrepresentation of units (i.e. research reports) that are smaller in number. This is relevant if the investigators wish to compare multiple groups but have too few units in one group. In this case a stratified sample would help to create equal groups. For example, in a methodological study comparing Cochrane and non-Cochrane reviews, Kahale et al. drew random samples from both groups [ 34 ]. Alternatively, systematic or purposeful sampling strategies can be used and we encourage researchers to justify their selected approaches based on the study objective.
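The stratified approach described above can be sketched in a few lines of Python. This is a minimal illustration, not the procedure used by Kahale et al.: the report records, the `type` field, the stratum sizes and the seed value are all hypothetical.

```python
import random


def stratified_sample(reports, stratum_of, per_stratum, seed=2020):
    """Draw an equal-sized simple random sample from every stratum."""
    rng = random.Random(seed)                # fixed seed keeps the draw reproducible
    strata = {}
    for report in reports:
        strata.setdefault(stratum_of(report), []).append(report)
    sample = {}
    for name, members in strata.items():
        size = min(per_stratum, len(members))    # small strata are taken whole
        sample[name] = rng.sample(members, size)
    return sample


# Hypothetical sampling frame: 20 Cochrane and 180 non-Cochrane reviews.
reports = [
    {"id": i, "type": "Cochrane" if i % 10 == 0 else "non-Cochrane"}
    for i in range(200)
]
sample = stratified_sample(reports, lambda r: r["type"], per_stratum=15)
print({name: len(members) for name, members in sample.items()})
```

A simple random sample of 30 reports from this frame would be expected to contain only about three Cochrane reviews, whereas the stratified draw yields 15 from each group. Recording the seed alongside the search date makes the selection reproducible.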

Q: How many databases should I search?

A: The number of databases one should search would depend on the approach to sampling, which can include targeting the entire “population” of interest or a sample of that population. If you are interested in including the entire target population for your research question, or drawing a random or systematic sample from it, then a comprehensive and exhaustive search for relevant articles is required. In this case, we recommend using systematic approaches for searching electronic databases (i.e. at least 2 databases with a replicable and time stamped search strategy). The results of your search will constitute a sampling frame from which eligible studies can be drawn.

Alternatively, if your approach to sampling is purposeful, then we recommend targeting the database(s) or data sources (e.g. journals, registries) that include the information you need. For example, if you are conducting a methodological study of high impact journals in plastic surgery and they are all indexed in PubMed, you likely do not need to search any other databases. You may also have a comprehensive list of all journals of interest and can approach your search using the journal names in your database search (or by accessing the journal archives directly from the journal’s website). Even though one could also search journals’ web pages directly, using a database such as PubMed has multiple advantages, such as the use of filters, so the search can be narrowed down to a certain period, or study types of interest. Furthermore, individual journals’ web sites may have different search functionalities, which do not necessarily yield a consistent output.

Q: Should I publish a protocol for my methodological study?

A: A protocol is a description of intended research methods. Currently, only protocols for clinical trials require registration [ 35 ]. Protocols for systematic reviews are encouraged but no formal recommendation exists. The scientific community welcomes the publication of protocols because they help protect against selective outcome reporting and the use of post hoc methodologies to embellish results, and help avoid duplication of efforts [ 36 ]. While the latter two risks exist in methodological research, the negative consequences may be substantially less than for clinical outcomes. In a sample of 31 methodological studies, 7 (22.6%) referenced a published protocol [ 9 ]. In the Cochrane Library, there are 15 protocols for methodological reviews (as of 21 July 2020). This suggests that publishing protocols for methodological studies is not uncommon.

Authors can consider publishing their study protocol in a scholarly journal as a manuscript. Advantages of such publication include obtaining peer-review feedback about the planned study, and easy retrieval by searching databases such as PubMed. The disadvantages of trying to publish protocols include delays associated with manuscript handling and peer review, as well as costs, as few journals publish study protocols, and those journals mostly charge article-processing fees [ 37 ]. Authors who would like to make their protocol publicly available without publishing it in a scholarly journal could deposit it in a publicly available repository, such as the Open Science Framework ( https://osf.io/ ).

Q: How to appraise the quality of a methodological study?

A: To date, there is no published tool for appraising the risk of bias in a methodological study, but in principle, a methodological study could be considered as a type of observational study. Therefore, during conduct or appraisal, care should be taken to avoid the biases common in observational studies [ 38 ]. These biases include selection bias, comparability of groups, and ascertainment of exposure or outcome. In other words, to generate a representative sample, a comprehensive reproducible search may be necessary to build a sampling frame. Additionally, random sampling may be necessary to ensure that all the included research reports have the same probability of being selected, and the screening and selection processes should be transparent and reproducible. To ensure that the groups compared are similar in all characteristics, matching, random sampling or stratified sampling can be used. Statistical adjustments for between-group differences can also be applied at the analysis stage. Finally, duplicate data extraction can reduce errors in assessment of exposures or outcomes.

Q: Should I justify a sample size?

A: In all instances where one is not using the target population (i.e. the group to which inferences from the research report are directed) [ 39 ], a sample size justification is good practice. The sample size justification may take the form of a description of what is expected to be achieved with the number of articles selected, or a formal sample size estimation that outlines the number of articles required to answer the research question with a certain precision and power. Sample size justifications in methodological studies are reasonable in the following instances:

Comparing two groups

Determining a proportion, mean or another quantifier

Determining factors associated with an outcome using regression-based analyses

For example, El Dib et al. computed a sample size requirement for a methodological study of diagnostic strategies in randomized trials, based on a confidence interval approach [ 40 ].
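To make the confidence interval approach concrete, the sketch below estimates how many articles are needed to determine a proportion with a given margin of error. It uses the standard normal approximation with an optional finite population correction; this is a generic textbook calculation and is not claimed to be the method used by El Dib et al. The function name and the input values are assumptions made for illustration.

```python
import math
from statistics import NormalDist


def sample_size_for_proportion(expected_proportion, margin_of_error,
                               confidence=0.95, population_size=None):
    """Number of articles needed to estimate a proportion to a given precision."""
    # Two-sided critical value of the standard normal distribution.
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    p = expected_proportion
    n = (z ** 2) * p * (1 - p) / margin_of_error ** 2
    # Optional finite population correction when the sampling frame is small.
    if population_size is not None:
        n = n / (1 + (n - 1) / population_size)
    return math.ceil(n)


# Hypothetical inputs: expected proportion 50%, margin of error of 5
# percentage points, 95% confidence.
n_unlimited = sample_size_for_proportion(0.5, 0.05)                    # 385
n_frame_1000 = sample_size_for_proportion(0.5, 0.05, population_size=1000)  # 278
```

An expected proportion of 0.5 is the most conservative choice, because it maximises the required sample size.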

Q: What should I call my study?

A: Other terms which have been used to describe/label methodological studies include “methodological review”, “methodological survey”, “meta-epidemiological study”, “systematic review”, “systematic survey”, “meta-research”, “research-on-research” and many others. We recommend that the study nomenclature be clear, unambiguous, informative and allow for appropriate indexing. Methodological study nomenclature that should be avoided includes “systematic review”, as this will likely be confused with a systematic review of a clinical question. “Systematic survey” may also lead to confusion about whether the survey was systematic (i.e. using a preplanned methodology) or a survey using “systematic” sampling (i.e. a sampling approach using specific intervals to determine who is selected) [ 32 ]. Any of the above meanings of the word “systematic” may be true for methodological studies and could be potentially misleading. “Meta-epidemiological study” is ideal for indexing, but not very informative as it describes an entire field. The term “review” may point towards an appraisal or “review” of the design, conduct, analysis or reporting (or methodological components) of the targeted research reports, yet it has also been used to describe narrative reviews [ 41 , 42 ]. The term “survey” is also in line with the approaches used in many methodological studies [ 9 ], and would be indicative of the sampling procedures of this study design. However, in the absence of guidelines on nomenclature, the term “methodological study” is broad enough to capture most of the scenarios of such studies.

Q: Should I account for clustering in my methodological study?

A: Data from methodological studies are often clustered. For example, articles coming from a specific source may have different reporting standards (e.g. the Cochrane Library). Articles within the same journal may be similar due to editorial practices and policies, reporting requirements and endorsement of guidelines. There is emerging evidence that these are real concerns that should be accounted for in analyses [ 43 ]. Some cluster variables are described in the section: “ What variables are relevant to methodological studies?”

A variety of modelling approaches can be used to account for correlated data, including the use of marginal, fixed or mixed effects regression models with appropriate computation of standard errors [ 44 ]. For example, Kosa et al. used generalized estimating equations to account for correlation of articles within journals [ 15 ]. Not accounting for clustering could lead to incorrect p-values, unduly narrow confidence intervals, and biased estimates [ 45 ].
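A rough sense of why ignoring clustering produces unduly narrow confidence intervals can be obtained from the design effect, a standard approximation that assumes clusters of equal size. The sketch below is not one of the regression approaches named above; it is a simpler back-of-the-envelope illustration. The function names, the intracluster correlation coefficient (ICC) and the article counts are hypothetical.

```python
import math


def design_effect(mean_cluster_size, icc):
    """Variance inflation caused by clustering, assuming equal cluster sizes."""
    return 1 + (mean_cluster_size - 1) * icc


def effective_sample_size(n_articles, mean_cluster_size, icc):
    """Number of independent articles that carry the same information."""
    return n_articles / design_effect(mean_cluster_size, icc)


# Hypothetical study: 200 articles, about 20 per journal, ICC of 0.05.
deff = design_effect(mean_cluster_size=20, icc=0.05)
n_effective = effective_sample_size(200, mean_cluster_size=20, icc=0.05)
# Standard errors that ignore clustering are too small by roughly this factor.
se_inflation = math.sqrt(deff)
```

Under these assumptions the design effect is about 1.95, so the 200 clustered articles are worth roughly 103 independent ones, and naive standard errors would need to be multiplied by about 1.4.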

Q: Should I extract data in duplicate?

A: Yes. Duplicate data extraction takes more time but results in fewer errors [ 19 ]. Data extraction errors in turn affect the effect estimate [ 46 ], and therefore should be mitigated. Duplicate data extraction should be considered in the absence of other approaches to minimize extraction errors. Much like systematic reviews, this area will likely see rapid new advances with machine learning and natural language processing technologies to support researchers with screening and data extraction [ 47 , 48 ]. Even so, experience plays an important role in the quality of extracted data, and inexperienced extractors should be paired with experienced extractors [ 46 , 49 ].
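In practice, duplicate extraction ends with a comparison of the two extractors' records so that disagreements can be resolved. The following minimal sketch shows one way this comparison could be automated. The function name, the record layout (a dictionary keyed by report identifier) and the example values are all hypothetical.

```python
def find_discrepancies(extraction_a, extraction_b):
    """Return (report_id, field, value_a, value_b) for every disagreement."""
    discrepancies = []
    for report_id in sorted(set(extraction_a) | set(extraction_b)):
        record_a = extraction_a.get(report_id, {})
        record_b = extraction_b.get(report_id, {})
        for field in sorted(set(record_a) | set(record_b)):
            value_a = record_a.get(field)
            value_b = record_b.get(field)
            # A missing value shows up as None and counts as a disagreement.
            if value_a != value_b:
                discrepancies.append((report_id, field, value_a, value_b))
    return discrepancies


# Hypothetical records from two independent extractors.
extractor_1 = {
    "report-0001": {"funding": "industry", "sample_size": 120},
    "report-0002": {"funding": "none", "sample_size": 45},
}
extractor_2 = {
    "report-0001": {"funding": "industry", "sample_size": 210},
    "report-0002": {"funding": "none", "sample_size": 45},
}
to_resolve = find_discrepancies(extractor_1, extractor_2)
```

Here the only item flagged for resolution is the sample size of `report-0001`, where the two extractors recorded 120 and 210.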

Q: Should I assess the risk of bias of research reports included in my methodological study?

A: Risk of bias is most useful in determining the certainty that can be placed in the effect measure from a study. In methodological studies, risk of bias may not serve the purpose of determining the trustworthiness of results, as effect measures are often not the primary goal of methodological studies. Determining risk of bias in methodological studies is likely a practice borrowed from systematic review methodology, but whose intrinsic value is not obvious in methodological studies. When it is part of the research question, investigators often focus on one aspect of risk of bias. For example, Speich investigated how blinding was reported in surgical trials [ 50 ], and Abraha et al. investigated the application of intention-to-treat analyses in systematic reviews and trials [ 51 ].

Q: What variables are relevant to methodological studies?

A: There is empirical evidence that certain variables may inform the findings in a methodological study. We outline some of these and provide a brief overview below:

Country: Countries and regions differ in their research cultures, and the resources available to conduct research. Therefore, it is reasonable to believe that there may be differences in methodological features across countries. Methodological studies have reported loco-regional differences in reporting quality [ 52 , 53 ]. This may also be related to challenges non-English speakers face in publishing papers in English.

Authors’ expertise: The inclusion of authors with expertise in research methodology, biostatistics, and scientific writing is likely to influence the end-product. Oltean et al. found that among randomized trials in orthopaedic surgery, the use of analyses that accounted for clustering was more likely when specialists (e.g. statistician, epidemiologist or clinical trials methodologist) were included on the study team [ 54 ]. Fleming et al. found that including methodologists in the review team was associated with appropriate use of reporting guidelines [ 55 ].

Source of funding and conflicts of interest: Some studies have found that funded studies report better [ 56 , 57 ], while others do not [ 53 , 58 ]. The presence of funding would indicate the availability of resources deployed to ensure optimal design, conduct, analysis and reporting. However, the source of funding may introduce conflicts of interest and warrant assessment. For example, Kaiser et al. investigated the effect of industry funding on obesity or nutrition randomized trials and found that reporting quality was similar [ 59 ]. Thomas et al. looked at reporting quality of long-term weight loss trials and found that industry funded studies were better [ 60 ]. Kan et al. examined the association between industry funding and “positive trials” (trials reporting a significant intervention effect) and found that industry funding was highly predictive of a positive trial [ 61 ]. This finding is similar to that of a recent Cochrane Methodology Review by Hansen et al. [ 62 ].

Journal characteristics: Certain journal characteristics may influence the study design, analysis or reporting. Characteristics such as journal endorsement of guidelines [ 63 , 64 ], and Journal Impact Factor (JIF) have been shown to be associated with reporting [ 63 , 65 , 66 , 67 ].

Study size (sample size/number of sites): Some studies have shown that reporting is better in larger studies [ 53 , 56 , 58 ].

Year of publication: It is reasonable to assume that design, conduct, analysis and reporting of research will change over time. Many studies have demonstrated improvements in reporting over time or after the publication of reporting guidelines [ 68 , 69 ].

Type of intervention: In a methodological study of reporting quality of weight loss intervention studies, Thabane et al. found that trials of pharmacologic interventions were reported better than trials of non-pharmacologic interventions [ 70 ].

Interactions between variables: Complex interactions between the previously listed variables are possible. High income countries with more resources may be more likely to conduct larger studies and incorporate a variety of experts. Authors in certain countries may prefer certain journals, and journal endorsement of guidelines and editorial policies may change over time.

Q: Should I focus only on high impact journals?

A: Investigators may choose to investigate only high impact journals because they are more likely to influence practice and policy, or because they assume that methodological standards would be higher. However, the JIF may severely limit the scope of articles included and may skew the sample towards articles with positive findings. The generalizability and applicability of findings from a handful of journals must be examined carefully, especially since the JIF varies over time. Even among journals that are all “high impact”, variations exist in methodological standards.

Q: Can I conduct a methodological study of qualitative research?

A: Yes. Even though a lot of methodological research has been conducted in the quantitative research field, methodological studies of qualitative studies are feasible. Certain databases that catalogue qualitative research, including the Cumulative Index to Nursing & Allied Health Literature (CINAHL), have defined subject headings that are specific to methodological research (e.g. “research methodology”). Alternatively, one could also conduct a qualitative methodological review; that is, use qualitative approaches to synthesize methodological issues in qualitative studies.

Q: What reporting guidelines should I use for my methodological study?

A: There is no guideline that covers the entire scope of methodological studies. One adaptation of the PRISMA guidelines has been published, which works well for studies that aim to use the entire target population of research reports [ 71 ]. However, it is not widely used (40 citations in 2 years as of 09 December 2019), and methodological studies that are designed as cross-sectional or before-after studies require a more fit-for-purpose guideline. A more encompassing reporting guideline for a broad range of methodological studies is currently under development [ 72 ]. However, in the absence of formal guidance, the requirements for scientific reporting should be respected, and authors of methodological studies should focus on transparency and reproducibility.

Q: What are the potential threats to validity and how can I avoid them?

A: Methodological studies may be compromised by a lack of internal or external validity. The main threats to internal validity in methodological studies are selection and confounding bias. Investigators must ensure that the methods used to select articles do not make them differ systematically from the set of articles to which they would like to make inferences. For example, attempting to make extrapolations to all journals after analyzing high-impact journals would be misleading.

Many factors (confounders) may distort the association between the exposure and outcome if the included research reports differ with respect to these factors [ 73 ]. For example, when examining the association between source of funding and completeness of reporting, it may be necessary to account for journals that endorse the guidelines. Confounding bias can be addressed by restriction, matching and statistical adjustment [ 73 ]. Restriction appears to be the method of choice for many investigators who choose to include only high impact journals or articles in a specific field. For example, Knol et al. examined the reporting of p-values in baseline tables of high impact journals [ 26 ]. Matching is also sometimes used. In the methodological study of non-randomized interventional studies of elective ventral hernia repair, Parker et al. matched prospective studies with retrospective studies and compared reporting standards [ 74 ]. Some other methodological studies use statistical adjustments. For example, Zhang et al. used regression techniques to determine the factors associated with missing participant data in trials [ 16 ].
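The funding example above can be illustrated with a stratified analysis. The sketch below compares a crude odds ratio with a Mantel-Haenszel odds ratio that adjusts for journal endorsement of guidelines. It is a simple alternative to the regression techniques mentioned above, not a reproduction of any cited study, and every count in it is invented purely to show how a confounder can create an apparent association.

```python
def odds_ratio(a, b, c, d):
    """Odds ratio for a 2x2 table: exposed (a, b) versus unexposed (c, d)."""
    return (a * d) / (b * c)


def mantel_haenszel_odds_ratio(strata):
    """Pooled odds ratio across strata of a confounder."""
    numerator = 0.0
    denominator = 0.0
    for a, b, c, d in strata:
        n = a + b + c + d
        numerator += a * d / n
        denominator += b * c / n
    return numerator / denominator


# Hypothetical counts per stratum, in the order:
# funded & complete, funded & incomplete, unfunded & complete, unfunded & incomplete.
strata = [
    (80, 20, 16, 4),   # journals that endorse the reporting guideline
    (4, 16, 20, 80),   # journals that do not endorse it
]

# Collapsing the strata ignores endorsement and gives the crude estimate.
crude = odds_ratio(*(sum(column) for column in zip(*strata)))  # about 5.44
adjusted = mantel_haenszel_odds_ratio(strata)                  # 1.0
```

In this invented data set, funded studies cluster in endorsing journals, so the crude odds ratio suggests a strong effect of funding, while the adjusted estimate shows no association within either type of journal.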

With regard to external validity, researchers interested in conducting methodological studies must consider how generalizable or applicable their findings are. This should tie in closely with the research question and should be explicit. For example, findings from methodological studies on trials published in high impact cardiology journals cannot be assumed to be applicable to trials in other fields. However, investigators must ensure that their sample truly represents the target sample either by a) conducting a comprehensive and exhaustive search, or b) using an appropriate and justified, randomly selected sample of research reports.

Even applicability to high impact journals may vary based on the investigators’ definition, and over time. For example, for high impact journals in the field of general medicine, Bouwmeester et al. included the Annals of Internal Medicine (AIM), BMJ, the Journal of the American Medical Association (JAMA), Lancet, the New England Journal of Medicine (NEJM), and PLoS Medicine ( n = 6) [ 75 ]. In contrast, the high impact journals selected in the methodological study by Schiller et al. were BMJ, JAMA, Lancet, and NEJM ( n = 4) [ 76 ]. Another methodological study by Kosa et al. included AIM, BMJ, JAMA, Lancet and NEJM ( n = 5). In the methodological study by Thabut et al., journals with a JIF greater than 5 were considered to be high impact. Riado Minguez et al. used first quartile journals in the Journal Citation Reports (JCR) for a specific year to determine “high impact” [ 77 ]. Ultimately, the definition of high impact will be based on the number of journals the investigators are willing to include, the year of impact and the JIF cut-off [ 78 ]. We acknowledge that the term “generalizability” may apply differently for methodological studies, especially when in many instances it is possible to include the entire target population in the sample studied.