Importance of Data Analysis Essay

Data analysis begins once all the necessary information has been obtained and structured appropriately. That structured material is the basis for the first stage of the process: primary data processing. It is important to analyze the results of each study as soon as possible after its completion, while the researcher's memory can still supply details that were not recorded but matter for understanding the essence of the matter. When processing the collected data, it may turn out that they are insufficient or contradictory and therefore give no grounds for final conclusions.

In this case, the study must be continued with the required additions. After collecting information from various sources, it is necessary to determine exactly what is needed for the initial analysis, in accordance with the task at hand. In most cases, it is advisable to start processing by compiling summary tables (pivot tables) of the data obtained (Simplilearn, 2021). For both manual and computer processing, the initial data are most often entered into an original summary table first. Computer processing has become the predominant form of mathematical and statistical processing.
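As a rough illustration of the summary-table step, the sketch below cross-tabulates a handful of survey records by group and answer. The records, the group names, and the `pivot_counts` helper are all hypothetical; a spreadsheet or statistical package would normally do this work.

```python
from collections import Counter

# Hypothetical survey records: (respondent group, answer to one question).
records = [
    ("urban", "yes"), ("urban", "no"), ("rural", "yes"),
    ("urban", "yes"), ("rural", "no"), ("rural", "yes"),
]

def pivot_counts(rows):
    """Cross-tabulate (group, answer) pairs into {group: {answer: count}}."""
    counts = Counter(rows)
    table = {}
    for (group, answer), n in counts.items():
        table.setdefault(group, {})[answer] = n
    return table

table = pivot_counts(records)
for group in sorted(table):
    print(group, dict(sorted(table[group].items())))
```

Each row of the printed table is one group, and each column is one possible answer, which is the shape most later processing steps expect.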

The second stage is mathematical data processing, which requires careful preparation. To choose the methods of mathematical and statistical processing, it is first important to assess the nature of the distribution of every parameter used. For parameters that are normally distributed or close to normal, parametric statistical methods can be used, which in many cases are more powerful than nonparametric methods (Ali & Bhaskar, 2016). The advantage of nonparametric methods is that they allow statistical hypotheses to be tested regardless of the shape of the distribution.
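One informal way to get a first impression of a distribution's shape is to look at its skewness and excess kurtosis, both of which are zero for a normal distribution. The sketch below is only a crude screen, not a formal normality test, and the cut-off of 1.0 is an assumed rule of thumb chosen for illustration.

```python
import statistics

def skewness_and_excess_kurtosis(values):
    """Return (skewness, excess kurtosis) computed from population moments."""
    n = len(values)
    mean = statistics.fmean(values)
    m2 = sum((x - mean) ** 2 for x in values) / n
    m3 = sum((x - mean) ** 3 for x in values) / n
    m4 = sum((x - mean) ** 4 for x in values) / n
    return m3 / m2 ** 1.5, m4 / m2 ** 2 - 3.0

def looks_roughly_normal(values, limit=1.0):
    """Crude screen: both shape measures stay within +/- limit (assumed threshold)."""
    skew, kurt = skewness_and_excess_kurtosis(values)
    return abs(skew) <= limit and abs(kurt) <= limit

symmetric = [2, 3, 3, 4, 4, 4, 5, 5, 6]   # hypothetical, bell-like scores
skewed = [1, 1, 1, 1, 1, 1, 1, 1, 20]     # hypothetical, one extreme value

print(looks_roughly_normal(symmetric))  # parametric methods may be reasonable
print(looks_roughly_normal(skewed))     # a nonparametric method is the safer choice
```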

One of the most common tasks in data processing is assessing the reliability of differences between two or more series of values. Mathematical statistics offers a number of ways to do this, and computer-based processing is now the most widespread. Many statistical applications have procedures for evaluating the differences between parameters of the same sample or of different samples (Tyagi, 2020). With fully computerized processing of the material, it is not difficult to apply the appropriate procedure at the right time and assess the differences of interest.
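To make the idea of "reliability of differences" concrete, here is a minimal sketch of an exact permutation test on the difference in means, one distribution-free way to compare two small series. It is an illustration under stated assumptions, not the procedure any particular statistical package uses; the function name and the sample values are hypothetical, and the approach is only practical for small samples because it enumerates every possible regrouping.

```python
from itertools import combinations
from statistics import fmean

def exact_permutation_p_value(a, b):
    """Two-sided exact permutation test on the difference in means."""
    pooled = list(a) + list(b)
    total = sum(pooled)
    observed = abs(fmean(a) - fmean(b))
    extreme = 0
    count = 0
    # Try every way of assigning len(a) of the pooled values to the first group.
    for idx in combinations(range(len(pooled)), len(a)):
        sum_a = sum(pooled[i] for i in idx)
        diff = abs(sum_a / len(a) - (total - sum_a) / len(b))
        count += 1
        if diff >= observed - 1e-12:  # small tolerance for floating-point noise
            extreme += 1
    return extreme / count

p = exact_permutation_p_value([1, 2, 3], [10, 11, 12])
print(p)
```

The returned value is the share of regroupings that produce a difference at least as large as the observed one; a small share suggests the difference is unlikely to be a chance fluctuation.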

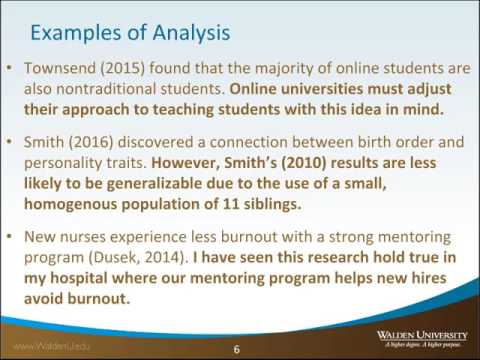

The next stage is the formulation of conclusions. Conclusions are statements that express the meaningful results of the study in concise form. In thesis form, they reflect the new findings obtained by the author. A common mistake is to include in the conclusions provisions that are generally accepted in science and no longer need proof. The conclusions should respond to each of the objectives listed in the introduction.

The format for presenting the results of the analysis also matters (Tyagi, 2020). The main content needs to be translated into an easy-to-read format based on the audience's requirements. At the same time, you should provide easy access to additional background data for those who are interested and want to understand the topic more thoroughly. These basic rules apply regardless of the presentation format.

In order to successfully solve this problem, special methods of analysis and information processing are required. Classical information technologies make it possible to efficiently store, structure, and quickly retrieve information in a user-friendly form. The main strength of SPSS Statistics is that it provides a vast range of tools for statistical work (Allen et al., 2014). For all the complexity of modern methods of statistical analysis, which use the latest achievements of mathematical science, SPSS allows one to focus on the peculiarities of their application in each specific case. Its capabilities significantly exceed the functions provided by standard business programs such as Excel.

SPSS gives the user ample opportunities for statistical processing of experimental data and for creating and modifying databases (SPSS data files). It may be considered a complex and flexible statistical analysis tool (Allen et al., 2014). SPSS can take data from virtually any file type and use it to create tabular reports, graphs and distribution maps, descriptive statistics, and sophisticated statistical analyses.

At this point, it seems reasonable to define the sequence of the analysis using the SPSS tools. First, a questionnaire is drawn up with the questions the researcher needs. Next, the survey is carried out. To process the received data, a coding table is drawn up. The coding table establishes the correspondence between individual questions of the questionnaire and the variables used in the computer data processing (Allen et al., 2014). It solves two tasks: first, it maps each question of the questionnaire to a variable; second, it maps each possible value of a variable to a code number.
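The two mappings that a coding table establishes can be sketched as a small lookup structure. The questions, variable names, and code numbers below are hypothetical and only illustrate the idea; in SPSS itself this information is defined through variable names and value labels rather than in code.

```python
# Hypothetical coding table: question text -> variable name and answer codes.
CODING_TABLE = {
    "Q1. What is your gender?": {
        "variable": "gender",
        "codes": {"female": 1, "male": 2, "no answer": 9},
    },
    "Q2. How satisfied are you with the service?": {
        "variable": "satisf",
        "codes": {"dissatisfied": 1, "neutral": 2, "satisfied": 3, "no answer": 9},
    },
}

def encode_response(answers):
    """Convert {question text: answer text} into {variable name: code number}."""
    row = {}
    for question, answer in answers.items():
        entry = CODING_TABLE[question]
        row[entry["variable"]] = entry["codes"][answer]
    return row

row = encode_response({
    "Q1. What is your gender?": "female",
    "Q2. How satisfied are you with the service?": "satisfied",
})
print(row)
```

Each encoded row corresponds to one completed questionnaire and is what would be typed into the data editor.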

Next, the data are entered into the data editor according to the defined variables. After that, depending on the task, the desired function and chart are selected, and the resulting tabular output is analyzed. The statistical functions used directly in exploring and analyzing data are located in the Analyze menu. Questions with multiple responses can also be analyzed, using what is called the dichotomous method. This approach is used when the questionnaire invites the respondent to mark several answer options for a single question (Allen et al., 2014).
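The dichotomous method can be pictured as turning one multiple-response question into a set of 0/1 variables, one per answer option. The sketch below assumes a hypothetical question about information sources; the option names and responses are invented for illustration.

```python
# Hypothetical answer options for one multiple-response question.
OPTIONS = ["newspaper", "radio", "television", "internet"]

def to_dichotomies(selected):
    """Return one 0/1 variable per option for a single respondent."""
    chosen = set(selected)
    return {option: int(option in chosen) for option in OPTIONS}

# Hypothetical responses: each inner list holds the options one respondent marked.
responses = [
    ["radio", "internet"],
    ["internet"],
    [],
    ["newspaper", "television", "internet"],
]

rows = [to_dichotomies(r) for r in responses]
totals = {option: sum(row[option] for row in rows) for option in OPTIONS}
print(totals)
```

Summing each 0/1 variable gives the number of respondents who marked that option, which is the basic frequency table for a multiple-response question.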

Comparison of the means of different samples is one of the most commonly used methods of statistical analysis. The question that must always be answered is whether the observed difference in mean values can be explained by statistical fluctuations. This method seems appropriate because the study will involve participants from all over the state, and their responses will need to be compared.
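As a hedged illustration of comparing two sample means, the sketch below computes Welch's t statistic and its approximate degrees of freedom with the standard library only. Welch's variant is an assumption made here because it does not require equal variances; the essay does not say which test will be used, and the sample values are hypothetical.

```python
import math
from statistics import fmean, variance

def welch_t(a, b):
    """Return (t statistic, approximate degrees of freedom) for Welch's t-test."""
    va = variance(a) / len(a)  # squared standard error of the first mean
    vb = variance(b) / len(b)  # squared standard error of the second mean
    t = (fmean(a) - fmean(b)) / math.sqrt(va + vb)
    df = (va + vb) ** 2 / (va ** 2 / (len(a) - 1) + vb ** 2 / (len(b) - 1))
    return t, df

# Hypothetical scores from two groups of respondents.
t, df = welch_t([1, 2, 3, 4, 5], [2, 3, 4, 5, 6])
print(t, df)
```

The statistic is then compared with a t distribution with `df` degrees of freedom to obtain a p-value; a statistical package performs that last step automatically.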

SPSS is among the most widely used statistical software packages. Its main advantage, as one of the most advanced tools for automated data analysis, is its broad coverage of modern statistical approaches, combined with a large number of convenient visualization tools for presenting results (Allen et al., 2014). The latest version offers notable possibilities not only in psychology, sociology, and biology but also in medicine, which is crucial for the aims of future research. This greatly expands the applicability of the package, which will serve as a significant basis for ensuring the validity of the study.

Ali, Z., & Bhaskar, S. B. (2016). Basic statistical tools in research and data analysis. Indian Journal of Anaesthesia, 60(9), 662–669.

Allen, P., Bennett, K., & Heritage, B. (2014). SPSS Statistics version 22: A practical guide. Cengage.

Simplilearn. (2021). What is data analysis: Methods, process and types explained. Web.

Tyagi, N. (2020). Introduction to statistical data analysis. Analytic Steps. Web.

IvyPanda. (2022, November 30). Importance of Data Analysis. https://ivypanda.com/essays/importance-of-data-analysis/

by CData Software | February 09, 2024

The Importance of Data Analysis: An Overview of Data Analytics

Organizations today need to navigate vast oceans of data to get the information they need in order to grow their business. Data analysis serves as the compass to help them reach destinations that lead to success. In an environment where businesses are in a constant race for competitive advantage, effective data analysis helps uncover critical information, drive strategic decisions, and foster innovation. Data analysis illuminates the path to make operations more efficient, expand to other markets, and innovate new services and features for customers. By transforming raw data into actionable insights, data analysis steers organizations through the uncertainties of the business world, ensuring they stay on course toward their objectives.

By uncovering patterns, trends, and anomalies within extensive datasets, businesses gain the foresight to anticipate market shifts, tailor customer experiences, and streamline operations with precision. This enables organizations to swiftly adapt to changes in the market and make timely, informed decisions to move their business forward.

Translating data into action requires an understanding of what the data is saying. Data literacy – knowing the ‘language’ of data – is critical in today’s data-centric world. It’s the very skill that empowers professionals across all sectors to apply data analytics in a way that promotes and supports effective business decisions. There’s nothing secretive or exclusive about this language; everyone, from C-suite and management to individual contributors, should learn it.

In this blog post, we’ll describe what data analysis is and its importance in the data-heavy world we live in. We’ll also get into some details about how data analysis works, the different types, and some tools and techniques you can use to help you move forward.

What is data analysis?

Data analysis is the practice of working with data to glean informed, actionable insights from the information generated across your business. This distilled definition belies the technical processes that turn raw data into something that can be useful, however. There’s a lot that happens in those processes, but that’s not the focus of this post. If you’d like more information on those processes, check out this blog post.

Analyzing data is a universal skill. We actually do it every day: at work, at home—really anywhere we make decisions based on information. For example, if you’re shopping for groceries, chances are that you evaluate the prices of the items you want to buy. You know the usual price for a favorite brand of bread. That’s data. You notice that the price has gone up, and you make a decision whether to buy it or not. That’s data analysis.

For businesses, it’s on a much bigger scale. It’s much more complex and requires additional, more comprehensive skills and tools to analyze the data that comes in.

Why is data analysis important?

The ability to sift through, process, and interpret vast amounts of data is a core function of business operations today. Accurate, well-considered, and efficiently implemented data analysis can lead to significant benefits throughout the entire organizational structure, including:

- Reducing inefficiencies and streamlining operations: Data analysis identifies inefficiencies and bottlenecks in business processes, providing opportunities to mitigate them. By analyzing resource and process data, organizations can find ways to reduce costs, boost productivity, and save time.

- Driving revenue growth: Data analysis promotes revenue growth by optimizing marketing efforts, product development, and customer retention strategies. It enables a focused approach to maximizing returns on investment (ROI).

- Mitigating risk: Forecasting potential issues and identifying risk factors before they become problematic is invaluable for all kinds of organizations. Risk analysis provides the foresight that enables businesses to implement preventative measures and avoid potential pitfalls.

- Enhancing decision-making: Insights from analyzing data empower informed, evidence-based choices. This shifts decision-making from a reliance on intuition to a strategic, data-informed approach.

- Lowering operational expenses: Data analysis helps identify unnecessary spending and underperforming assets, facilitating more efficient resource allocation. Organizations can reduce costs and reallocate budgets to improve productivity and efficiency.

- Identifying and capitalizing on new opportunities: By revealing trends and patterns, data analysis uncovers new market opportunities and avenues for expansion. This insight allows businesses to innovate and enter new markets with a solid foundation of data.

- Improving customer experience: Analyzing customer data helps organizations identify where to tailor their products, services, and interactions to meet customer needs, enhance satisfaction, and foster loyalty.

Data analysis is the foundation of strategic planning and operational efficiency, enabling organizations to navigate and swiftly adapt to market changes and evolving customer demands. It’s a critical element for gaining a competitive advantage and fostering long-lasting success in today's data-centric business environment.

4 types of data analysis

Analyzing data isn’t a single approach; it encompasses multiple approaches, each tailored to achieve specific insights. Understanding the differences can help identify the distinct elements of the type (or types) of data analysis an organization employs. While they have different names and are approached in different ways, the core objective is the same: Extract actionable insights from data. We can also identify the different types as a way of answering a question, as you’ll see below. Here are the four most common types of data analysis, each serving a special purpose:

- Descriptive analysis: Descriptive analysis focuses on summarizing and understanding historical data. It answers the question, "What happened?" and aims to provide a clear overview of past behaviors and outcomes. Common tools for descriptive analysis include data aggregation and data mining techniques, which help identify patterns and trends.

- Diagnostic analysis: Diagnostic analysis determines the cause behind a particular data point. Beyond identifying what happened, it answers the question, “Why did it happen?” and digs deeper into the data to understand the reasons behind past performance. Diagnostic analysis uses techniques like drill-down, data discovery, and correlations to get to the answer.

- Predictive analysis: Predictive analysis answers the question, “What is likely to happen or not happen?” It employs statistical models and other techniques to forecast likely future outcomes based on historical data. It’s invaluable for planning and risk management, helping to prepare for potential future scenarios.

- Prescriptive analysis: This advanced form of data analysis answers the question, “What should we do?” It predicts future trends and suggests how to act on them, using optimization and simulation algorithms to recommend specific courses of action.

Together, these four types of data analysis play a critical role in organizational strategy, from understanding the past to evaluating the present and informing future decisions. The skillful execution of these methods helps organizations craft a holistic data strategy that anticipates, adapts to, and shapes the future with the vital information they need to navigate the complexities of today's digital-centric world with greater insight and agility.

Data analysis process: How does it work?

The journey from collecting raw data to deriving actionable insights encompasses a structured process, ensuring accuracy, relevance, and value in the findings.

Here are the six essential steps of the data analysis process:

- Identify requirements: This first step is identifying the specific data required to address the business need. This phase sets the direction for the entire data analysis process, focusing efforts on gathering relevant and actionable data. CData offers connectivity solutions for hundreds of data sources, SaaS applications, and databases, simplifying the process of identifying and integrating the necessary data for analysis.

- Collect data: Once we know what data we need, the next step is to start collecting it. CData makes it easy to pull together data from all kinds of sources, whether they're structured databases or unstructured data streams. This ensures you get a complete dataset quickly and without hassle, ready for the next stages of analysis.

- Clean the data: This important step involves removing inaccuracies, duplicates, or irrelevant data to ensure the analysis is based on clean, high-quality data. CData can automate many data-cleaning tasks, reducing the time and effort required while increasing data accuracy.

- Analyze the data: With clean data in hand, the actual analysis can begin. This step might involve statistical analysis, machine learning, or other data analysis methods. CData enhances this process by offering easy integration with popular analytics platforms and tools, allowing businesses to apply the most suitable analysis techniques effectively.

- Interpret the data: Interpreting the results correctly is key to making informed decisions. CData's tools enhance this critical step by facilitating the integration of data with analytical models, helping teams draw precise conclusions and make informed decisions.

- Create reporting dashboards to visualize the data: This last step is about turning data into a clear format that stakeholders can understand. CData connectivity solutions let you use the visualization tools you already know, making it easier to create compelling reports and dashboards that clearly communicate the findings.
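To give a feel for what the "clean the data" step involves, here is a minimal sketch in plain Python that drops records with missing required fields and repeated identifiers. It is not CData's tooling; the record layout, the field names `id` and `amount`, and the `clean_records` helper are assumptions made for illustration.

```python
def clean_records(records, required=("id", "amount")):
    """Drop rows with missing required fields and rows whose id was already seen."""
    seen_ids = set()
    cleaned = []
    for rec in records:
        # Skip rows where any required field is absent, None, or empty.
        if any(rec.get(field) in (None, "") for field in required):
            continue
        # Skip duplicates, keeping the first occurrence of each id.
        if rec["id"] in seen_ids:
            continue
        seen_ids.add(rec["id"])
        cleaned.append(rec)
    return cleaned

# Hypothetical raw rows with one duplicate and one missing value.
raw = [
    {"id": 1, "amount": 10.0},
    {"id": 1, "amount": 10.0},
    {"id": 2, "amount": None},
    {"id": 3, "amount": 7.5},
]
cleaned = clean_records(raw)
print([rec["id"] for rec in cleaned])
```

Real pipelines usually add further rules, such as range checks or type conversions, but the pattern of filtering before analysis stays the same.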

Data analysis techniques

Data analysis encompasses various techniques that allow organizations to extract valuable insights from their data, enabling informed decision-making. Each technique offers unique capabilities for exploring, clustering, predicting, analyzing time-based data, and understanding sentiment.

Here are the five essential data analysis techniques that enable organizations to turn data into actions:

- Exploratory data analysis (EDA) involves analyzing datasets to summarize their main characteristics, often through visual methods like histograms, scatter plots, and box plots. It helps in understanding the structure of the data, identifying patterns, detecting outliers, and laying the groundwork for further analysis.

- Clustering and segmentation techniques group similar data points together based on certain features or attributes. This helps in identifying meaningful patterns within the data and segmenting the data into distinct groups or clusters. Businesses use clustering to understand customer segments, market segments, or product categories, aiding in targeted marketing and product customization.

- Machine learning algorithms enable computers to learn from data and make predictions or decisions without being explicitly programmed. Businesses utilize various machine learning algorithms such as linear regression, decision trees, random forests, and neural networks to analyze data, predict outcomes, classify data points, and identify trends. These algorithms are applied in various domains, including sales forecasting, customer churn prediction, sentiment analysis, and fraud detection.

- Time series analysis examines data collected over time to understand patterns, trends, and seasonal variations. It is commonly used in forecasting future values based on historical data, identifying underlying patterns, and making informed decisions. Businesses employ time series analysis in financial forecasting, demand forecasting, inventory management, and trend analysis to predict future outcomes and plan accordingly.

- Sentiment analysis involves analyzing textual data, such as customer reviews, social media posts, and survey responses, to determine the sentiment or opinion expressed within the text. Businesses use sentiment analysis to gauge customer satisfaction, brand sentiment, and public opinion regarding products or services. By understanding sentiment trends, businesses can make strategic decisions, improve customer experiences, and manage their reputation effectively.
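As a small example of one of these techniques, the sketch below smooths a time series with a simple moving average, a common first step before looking for trends. The sales figures and the window size are hypothetical, and real forecasting work would typically use more capable models.

```python
def moving_average(series, window):
    """Return the simple moving average of series over a sliding window."""
    if window < 1 or window > len(series):
        raise ValueError("window must be between 1 and len(series)")
    return [
        sum(series[i:i + window]) / window
        for i in range(len(series) - window + 1)
    ]

# Hypothetical monthly sales figures.
monthly_sales = [10, 12, 14, 16, 18]
smoothed = moving_average(monthly_sales, 3)
print(smoothed)
```

Each output value averages three consecutive months, so short-term noise is damped and the underlying direction of the series is easier to see.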

Data analysis tools

From powerful analytics platforms to robust database management systems, a diverse array of tools exists to meet the needs of organizations across various industries.

Here is a list of some of the most popular data analysis tools available:

- Alteryx (requirements, cleaning, analysis)

- Apache Kafka (collection, requirements)

- Google Analytics (collection, analysis)

- Google Looker (interpretation, visualization)

- Informatica (requirements, cleaning)

- Microsoft Power BI (analysis, interpretation, visualization)

- PostgreSQL (analysis)

- QlikView (analysis)

- Tableau (analysis, interpretation, visualization)

- Talend (collection, requirements)

For modern organizations, the right tools are critical to streamline processes, uncover insights, and drive strategic decisions. From data collection to visualization, these tools empower businesses to stay agile and competitive in an ever-evolving digital world.

Smooth sailing with CData

Navigating the waters of data analysis requires clear direction and reliable tools. CData's comprehensive connectivity solutions act as a compass through each stage of the data analysis process. From collecting and cleaning data to interpreting and visualizing insights, CData empowers businesses to confidently chart their course, make informed decisions, and stay competitive in today's modern business climate.


Colorado State University Global

Why is Data Analytics Important?

- August 17, 2021

Recently, we explained what data analytics is, and here we’ll review why it’s so important to modern business practices.

As part of this discussion, we’ll cover what data analytics entails, what data analysts actually do, and why you should consider getting into the field.

Virtually everyone has heard of data analytics, business analytics, big data analytics, or the many other terms used to refer to this discipline, but few people seem to understand why it’s so important to modern organizations.

Simply put, data analytics is critical to the success of modern businesses because data analysts themselves are the individuals responsible for reviewing key performance metrics, interpreting that data, and using it to determine an effective strategy for driving organizational performance.

After you’ve learned everything you need to know about what makes data analytics such a critical discipline, fill out our information request form to receive additional details about CSU Global’s 100% online Master’s Degree Program in Data Analytics, or if you’re ready to get started, submit your application today.

What is Data Analytics?

Data analytics is the process of storing, organizing, and analyzing data for business purposes.

This process is used to inform key decision-makers and allows them to make important strategic decisions based on data, rather than hunches.

At modern organizations, data analysts are responsible for helping guide core business units and practices, including extremely important processes like:

- Information Technology

- Human Resources

- Business Development

As you may imagine, this critical role has made professional data analysts incredibly important members of virtually every organization operating in any sector of the economy.

What Do Data Analysts Actually Do?

Data analysts are responsible for handling a variety of important responsibilities, including tasks like:

- Data warehousing

- Data mining and visualization

- Business analytics

- Predictive analytics

- Enterprise performance management

Accordingly, data analysts play an important role in helping senior leadership teams make difficult decisions about driving organizational effectiveness, efficiency, and profitability.

Some of the key skills data analysts need to perform these complicated tasks include:

- Analyzing large and complex sets of data.

- Applying policies and procedures to protect the privacy and security of the data that they analyze.

- Articulating analytical conclusions and strategy suggestions in writing, verbally, and visually via multimedia presentations.

- Employing data analytics solutions for business intelligence and forecasting purposes.

- Practicing ethical standards when handling data and analytics.

- Utilizing predictive analytics to address core business challenges.

Clearly then, data analyst jobs require excellent attention to detail, expert-level statistical and mathematical skills, and a deep understanding of predictive analytics.

Should I Pursue a Career in Data Analytics?

Knowing that data analytics is a difficult discipline and a challenging role, why would you want to pursue a career in the field?

First, the field is growing rapidly, and demand for skilled data analysts is projected to keep rising over the next decade.

In fact, the U.S. Bureau of Labor Statistics (BLS) predicts that employment for roles related to data analytics will rise considerably faster than the average rate of growth for all occupations between 2021 and 2031.

These roles include:

- Mathematicians and Statisticians - 31% projected growth.

- Computer and Information Research Scientists - 21% projected growth.

- Database Administrators - 9% projected growth.

Because the industry is growing so quickly, and demand for related roles is predicted to continue rising at such a fast pace, it’s a great time to consider entering the industry.

Next, anyone interested in playing a critical strategic role in a modern business or other organization should think about developing analytics skills.

As we mentioned earlier, data analysts are the professionals responsible for helping senior leadership make important strategic decisions about core business units, making those people trained in data analytics an essential asset for modern organizations.

Accordingly, top positions in this field command excellent salaries, with some of the top jobs for Master’s program graduates earning a considerable average annual income:

- Computer and Information Research Scientist / 2021 Median Pay: $131,490

- Top Executive / 2021 Median Pay: $98,980

- Database Administrator / 2021 Median Pay: $101,000

- Statistician / 2021 Median Pay: $96,280

Even roles for Bachelor’s program graduates pay quite well, as some of the best jobs for MIS and Business Analytics alumni include:

- Computer and Information Systems Managers / 2021 Median Pay: $159,010

- Computer Programmers / 2021 Median Pay: $93,000

- Computer Systems Analysts / 2021 Median Pay: $99,270

- Operations Research Analysts / 2021 Median Pay: $82,360

If you’re looking to play a central role as a strategic business advisor, and you want to earn an excellent income, then you’d be hard-pressed to find a better field than data analytics.

How To Launch a Career in Data Analytics

While you might be able to get an entry-level role in the industry without first completing a Bachelor’s or Master’s program, it may be far easier to break into the field if you’ve finished a degree program before you apply for related roles.

Why? Because the best way to develop the knowledge, skills, and abilities you’ll need to be successful as a professional data analyst is to study data analytics in an academic setting, like CSU Global’s online Bachelor’s Degree in MIS and Business Analytics, or our online Master’s Degree in Data Analytics.

These programs will provide you with the experience and knowledge you need to launch a career in the analytics industry, while also giving you the academic credentials needed to catch the eye of hiring managers looking to fill related positions.

Earning a degree in analytics will increase the chances that you’re capable of bringing value to an organization from day one, and choosing to study at CSU Global will ensure that your degree is respected by the hiring managers you’ll be looking to impress when it comes time to pursue your first industry role.

Should I Get a Bachelor’s or a Master’s Degree in Analytics?

We suggest enrolling in the degree program that best meets your professional needs.

To determine your needs, review your current education credentials, your knowledge, and experience in data analytics, and weigh those against your long-term career goals.

If you’re new to analytics and just looking to get your foot in the door, or if you lack an undergraduate degree, then you may want to consider our Bachelor’s Degree in Management Information Systems and Business Analytics.

However, if you already have some experience in the industry, if you’ve already earned a bachelor’s degree, or if you want to pursue leadership or managerial-level roles, then you’ll want to consider our Master’s Degree in Data Analytics instead.

If you’re having trouble choosing between a bachelor’s or master’s degree, consider contacting an Enrollment Counselor by calling 1-800-462-7845, or by emailing enroll [at] csuglobal.edu.

The good news is that whichever program you choose, getting your degree will help develop your skills and abilities, while choosing to get your degree from CSU Global will ensure that you’ll graduate prepared to launch a lifelong career in the industry.

Can I Get My Degree in Data Analytics Online?

Yes, you can get a regionally accredited online Bachelor’s or Master’s Degree in Analytics from CSU Global.

Our accelerated online degree programs were designed entirely for online students, and they offer far more flexibility and freedom than traditional in-person programs.

We make it easy to juggle your educational pursuits with existing work and family responsibilities, as our programs offer:

- No requirements to attend classes at set times or locations.

- Monthly class starts.

- Accelerated, eight-week courses.

If you’re looking for a degree program with the flexibility to fit into your already busy life, then there may be no better option than choosing to study with us.

Why Should You Choose to Study Analytics at CSU Global?

Our Bachelor’s Degree program in Business Analytics and our Master’s Degree program in Data Analytics are both regionally accredited by the Higher Learning Commission.

These programs were designed to provide you with the foundational knowledge and skills needed to succeed as a professional analyst, featuring a curriculum designed specifically for the workplace.

Both programs are also taught exclusively by educators who have relevant and recent industry experience, so you can rest assured that you’ll be learning modern solutions to real-world business problems and that you’ll graduate prepared to provide value to any organization.

Our programs also rank exceptionally well, with each of them earning top 5 rankings in their respective fields, including:

- A #1 ranking for Best Online Bachelor’s in Management Information Systems Programs from Best Colleges for our Bachelor’s in MIS and Business Analytics.

- A #3 ranking for Best Online Master’s Degree in Data Analytics by Best Masters Programs for our Master’s in Data Analytics.

Furthermore, CSU Global itself has also recently received several distinguished rankings, including:

- A #1 ranking for Best Online Colleges & Schools in Colorado from Best Accredited Colleges.

- A #1 ranking for Best Online Colleges in Colorado from Best Colleges.

- A #10 ranking for Best Online Colleges for ROI from OnlineU.

Finally, CSU Global offers competitive tuition rates and a Tuition Guarantee to ensure your rate won’t increase as you're getting a degree in the desired field, thus saving your money and time.

To get additional details about our fully accredited, 100% online analytics degree programs, please give us a call at 800-462-7845, or fill out our Information Request Form.

Ready to get started today? Apply now!



Data Science and Analytics: An Overview from Data-Driven Smart Computing, Decision-Making and Applications Perspective

Iqbal H. Sarker

1 Swinburne University of Technology, Melbourne, VIC 3122 Australia

2 Department of Computer Science and Engineering, Chittagong University of Engineering & Technology, Chittagong, 4349 Bangladesh

The digital world has a wealth of data, such as internet of things (IoT) data, business data, health data, mobile data, urban data, security data, and many more, in the current age of the Fourth Industrial Revolution (Industry 4.0 or 4IR). Extracting knowledge or useful insights from these data can be used for smart decision-making in various applications domains. In the area of data science, advanced analytics methods including machine learning modeling can provide actionable insights or deeper knowledge about data, which makes the computing process automatic and smart. In this paper, we present a comprehensive view on “Data Science” including various types of advanced analytics methods that can be applied to enhance the intelligence and capabilities of an application through smart decision-making in different scenarios. We also discuss and summarize ten potential real-world application domains including business, healthcare, cybersecurity, urban and rural data science, and so on by taking into account data-driven smart computing and decision making. Based on this, we finally highlight the challenges and potential research directions within the scope of our study. Overall, this paper aims to serve as a reference point on data science and advanced analytics to the researchers and decision-makers as well as application developers, particularly from the data-driven solution point of view for real-world problems.

Introduction

We are living in the age of “data science and advanced analytics”, where almost everything in our daily lives is digitally recorded as data [ 17 ]. Thus the current electronic world is a wealth of various kinds of data, such as business data, financial data, healthcare data, multimedia data, internet of things (IoT) data, cybersecurity data, social media data, etc. [ 112 ]. The data can be structured, semi-structured, or unstructured, and its volume increases day by day [ 105 ]. Data science is typically a “concept to unify statistics, data analysis, and their related methods” to understand and analyze the actual phenomena with data. According to Cao et al. [ 17 ], “data science is the science of data” or “data science is the study of data”, where a data product is a data deliverable, or data-enabled or guided, which can be a discovery, prediction, service, suggestion, insight into decision-making, thought, model, paradigm, tool, or system. The popularity of “Data science” is increasing day by day, as shown in Fig. 1 according to Google Trends data over the last 5 years [ 36 ]. In addition to data science, we have also shown the popularity trends of relevant areas such as “Data analytics”, “Data mining”, “Big data”, and “Machine learning” in the figure. According to Fig. 1, the popularity indication values for these data-driven domains, particularly “Data science” and “Machine learning”, are increasing day by day. This statistical information and the applicability of data-driven smart decision-making in various real-world application areas motivate us to briefly study “Data science” and machine-learning-based “Advanced analytics” in this paper.

Fig. 1 The worldwide popularity score of data science compared with relevant areas on a scale of 0 (min) to 100 (max) over time, where the x-axis represents the timestamp information and the y-axis represents the corresponding score

Usually, data science is the field of applying advanced analytics methods and scientific concepts to derive useful business information from data. The emphasis of advanced analytics is more on anticipating the use of data to detect patterns to determine what is likely to occur in the future. Basic analytics offer a description of data in general, while advanced analytics is a step forward in offering a deeper understanding of data and helping to analyze granular data, which we are interested in. In the field of data science, several types of analytics are popular, such as “Descriptive analytics”, which answers the question of what happened; “Diagnostic analytics”, which answers the question of why it happened; “Predictive analytics”, which predicts what will happen in the future; and “Prescriptive analytics”, which prescribes what action should be taken, discussed briefly in “Advanced analytics methods and smart computing”. Such advanced analytics and decision-making based on machine learning techniques [ 105 ], a major part of artificial intelligence (AI) [ 102 ], can also play a significant role in the Fourth Industrial Revolution (Industry 4.0) due to their learning capability for smart computing as well as automation [ 121 ].

Although the area of “data science” is huge, we mainly focus on deriving useful insights through advanced analytics, where the results are used to make smart decisions in various real-world application areas. For this, various advanced analytics methods such as machine learning modeling, natural language processing, sentiment analysis, neural network, or deep learning analysis can provide deeper knowledge about data, and thus can be used to develop data-driven intelligent applications. More specifically, regression analysis, classification, clustering analysis, association rules, time-series analysis, sentiment analysis, behavioral patterns, anomaly detection, factor analysis, log analysis, and deep learning, which originated from the artificial neural network, are taken into account in our study. These machine learning-based advanced analytics methods are discussed briefly in “Advanced analytics methods and smart computing”. Thus, it’s important to understand the principles of the various advanced analytics methods mentioned above and their applicability in various real-world application areas. For instance, in our earlier paper Sarker et al. [ 114 ], we have discussed how data science and machine learning modeling can play a significant role in the domain of cybersecurity for making smart decisions and providing data-driven intelligent security services. In this paper, we broadly take into account the data science application areas and real-world problems in ten potential domains including the area of business data science, health data science, IoT data science, behavioral data science, urban data science, and so on, discussed briefly in “Real-world application domains”.

Based on the importance of machine learning modeling for extracting useful insights from the data mentioned above and for data-driven smart decision-making, in this paper, we present a comprehensive view on “Data Science” including various types of advanced analytics methods that can be applied to enhance the intelligence and the capabilities of an application. The key contribution of this study is thus understanding data science modeling and explaining different analytic methods from a solution perspective, along with their applicability in the various real-world data-driven application areas mentioned earlier. Overall, the purpose of this paper is, therefore, to provide a basic guide or reference for people in academia and industry who want to study, research, and develop automated and intelligent applications or systems based on smart computing and decision making within the area of data science.

The main contributions of this paper are summarized as follows:

- To define the scope of our study towards data-driven smart computing and decision-making in our real-world life. We also make a brief discussion on the concept of data science modeling from business problems to data product and automation, to understand its applicability and provide intelligent services in real-world scenarios.

- To provide a comprehensive view on data science including advanced analytics methods that can be applied to enhance the intelligence and the capabilities of an application.

- To discuss the applicability and significance of machine learning-based analytics methods in various real-world application areas. We also summarize ten potential real-world application areas, from business to personalized applications in our daily life, where advanced analytics with machine learning modeling can be used to achieve the expected outcome.

- To highlight and summarize the challenges and potential research directions within the scope of our study.

The rest of the paper is organized as follows. The next section provides the background and related work and defines the scope of our study. The following section presents the concepts of data science modeling for building a data-driven application. After that, we briefly discuss and explain different advanced analytics methods and smart computing. Various real-world application areas are discussed and summarized in the next section. We then highlight and summarize several research issues and potential future directions, and finally, the last section concludes this paper.

Background and Related Work

In this section, we first discuss various data terms and works related to data science and highlight the scope of our study.

Data Terms and Definitions

There is a range of key terms in the field, such as data analysis, data mining, data analytics, big data, data science, advanced analytics, machine learning, and deep learning, which are highly related and easily confused. In the following, we define these terms and differentiate them from the term “Data Science” according to our goal.

The term “Data analysis” refers to the processing of data by conventional (e.g., classic statistical, empirical, or logical) theories, technologies, and tools for extracting useful information and for practical purposes [ 17 ]. The term “Data analytics”, on the other hand, refers to the theories, technologies, instruments, and processes that allow for an in-depth understanding and exploration of actionable data insight [ 17 ]. Statistical and mathematical analysis of the data is the major concern in this process. “Data mining” is another popular term over the last decade, which has a similar meaning with several other terms such as knowledge mining from data, knowledge extraction, knowledge discovery from data (KDD), data/pattern analysis, data archaeology, and data dredging. According to Han et al. [ 38 ], it should have been more appropriately named “knowledge mining from data”. Overall, data mining is defined as the process of discovering interesting patterns and knowledge from large amounts of data [ 38 ]. Data sources may include databases, data centers, the Internet or Web, other repositories of data, or data dynamically streamed through the system. “Big data” is another popular term nowadays, which may change the statistical and data analysis approaches as it has the unique features of “massive, high dimensional, heterogeneous, complex, unstructured, incomplete, noisy, and erroneous” [ 74 ]. Big data can be generated by mobile devices, social networks, the Internet of Things, multimedia, and many other new applications [ 129 ]. Several unique features including volume, velocity, variety, veracity, value (5Vs), and complexity are used to understand and describe big data [ 69 ].

In terms of analytics, basic analytics provides a summary of data whereas the term “Advanced Analytics” takes a step forward in offering a deeper understanding of data and helps to analyze granular data. Advanced analytics is characterized or defined as autonomous or semi-autonomous data or content analysis using advanced techniques and methods to discover deeper insights, predict or generate recommendations, typically beyond traditional business intelligence or analytics. “Machine learning”, a branch of artificial intelligence (AI), is one of the major techniques used in advanced analytics which can automate analytical model building [ 112 ]. This is focused on the premise that systems can learn from data, recognize trends, and make decisions, with minimal human involvement [ 38 , 115 ]. “Deep Learning” is a subfield of machine learning that discusses algorithms inspired by the human brain’s structure and the function called artificial neural networks [ 38 , 139 ].

Unlike the above data-related terms, “Data science” is an umbrella term that encompasses advanced data analytics, data mining, machine and deep learning modeling, and several other related disciplines like statistics, to extract insights or useful knowledge from the datasets and transform them into actionable business strategies. In [ 17 ], Cao et al. defined data science from the disciplinary perspective as “data science is a new interdisciplinary field that synthesizes and builds on statistics, informatics, computing, communication, management, and sociology to study data and its environments (including domains and other contextual aspects, such as organizational and social aspects) to transform data to insights and decisions by following a data-to-knowledge-to-wisdom thinking and methodology”. In “Understanding data science modeling”, we briefly discuss data science modeling from a practical perspective, starting from business problems to data products, which can assist data scientists to think and work in a particular real-world problem domain within the area of data science and analytics.

Related Work

In the area, several papers have been reviewed by the researchers based on data science and its significance. For example, the authors in [ 19 ] identify the evolving field of data science and its importance in the broader knowledge environment and some issues that differentiate data science and informatics issues from conventional approaches in information sciences. Donoho et al. [ 27 ] present 50 years of data science including recent commentary on data science in mass media, and on how/whether data science varies from statistics. The authors formally conceptualize the theory-guided data science (TGDS) model in [ 53 ] and present a taxonomy of research themes in TGDS. Cao et al. include a detailed survey and tutorial on the fundamental aspects of data science in [ 17 ], which considers the transition from data analysis to data science, the principles of data science, as well as the discipline and competence of data education.

Besides, the authors include a data science analysis in [ 20 ], which aims to provide a realistic overview of the use of statistical features and related data science methods in bioimage informatics. The authors in [ 61 ] study the key streams of data science algorithm use at central banks and show how their popularity has risen over time. This research contributes to the creation of a research vector on the role of data science in central banking. In [ 62 ], the authors provide an overview and tutorial on the data-driven design of intelligent wireless networks. The authors in [ 87 ] provide a thorough understanding of computational optimal transport with application to data science. In [ 97 ], the authors present data science as theoretical contributions in information systems via text analytics.

Unlike the above recent studies, in this paper, we concentrate on the knowledge of data science including advanced analytics methods, machine learning modeling, real-world application domains, and potential research directions within the scope of our study. The advanced analytics methods based on machine learning techniques discussed in this paper can be applied to enhance the capabilities of an application in terms of data-driven intelligent decision making and automation in the final data product or systems.

Understanding Data Science Modeling

In this section, we briefly discuss how data science can play a significant role in the real-world business process. For this, we first categorize various types of data and then discuss the major steps of data science modeling starting from business problems to data product and automation.

Types of Real-World Data

Typically, to build a data-driven real-world system in a particular domain, the availability of data is the key [ 17 , 112 , 114 ]. The data can be in different types such as (i) Structured—that has a well-defined data structure and follows a standard order, examples are names, dates, addresses, credit card numbers, stock information, geolocation, etc.; (ii) Unstructured—has no pre-defined format or organization, examples are sensor data, emails, blog entries, wikis, and word processing documents, PDF files, audio files, videos, images, presentations, web pages, etc.; (iii) Semi-structured—has elements of both the structured and unstructured data containing certain organizational properties, examples are HTML, XML, JSON documents, NoSQL databases, etc.; and (iv) Metadata—that represents data about the data, examples are author, file type, file size, creation date and time, last modification date and time, etc. [ 38 , 105 ].
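As a rough illustration of these four categories, the following Python sketch parses a small structured table (CSV), a semi-structured JSON record, and a piece of unstructured text, and then builds a metadata record describing the text. All sample values and the helper name `load_examples` are hypothetical and chosen only for illustration; they do not come from any of the datasets cited in this paper.

```python
import csv
import io
import json

# Hypothetical sample values; none of them come from a real dataset.
STRUCTURED_CSV = "name,date,city\nAlice,2021-03-01,Melbourne\nBob,2021-03-02,Chittagong\n"
SEMI_STRUCTURED_JSON = '{"user": "alice", "tags": ["iot", "health"], "profile": {"age": 30}}'
UNSTRUCTURED_TEXT = "Meeting notes: the sensor on floor 2 reported unusual readings again."


def load_examples():
    """Return one parsed example for each of the four data categories."""
    # (i) Structured: fixed columns, every row follows the same schema.
    structured = list(csv.DictReader(io.StringIO(STRUCTURED_CSV)))

    # (iii) Semi-structured: self-describing keys; nesting and optional fields are allowed.
    semi_structured = json.loads(SEMI_STRUCTURED_JSON)

    # (ii) Unstructured: free text with no predefined fields, kept as a raw string.
    unstructured = UNSTRUCTURED_TEXT

    # (iv) Metadata: data about the data, here describing the unstructured note.
    metadata = {
        "file_type": "text/plain",
        "size_bytes": len(unstructured.encode("utf-8")),
        "author": "unknown",
    }
    return structured, semi_structured, unstructured, metadata


if __name__ == "__main__":
    structured, semi, text, meta = load_examples()
    print(structured[0]["city"])
    print(semi["profile"]["age"])
    print(meta["size_bytes"])
```

The structured rows can be addressed by column name directly, whereas the JSON record has to be navigated key by key and the free text would need further processing (for example, text analytics) before any fields could be extracted from it.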

In the area of data science, researchers use various widely-used datasets for different purposes. These are, for example, cybersecurity datasets such as NSL-KDD [ 127 ], UNSW-NB15 [ 79 ], Bot-IoT [ 59 ], ISCX’12 [ 15 ], CIC-DDoS2019 [ 22 ], etc., smartphone datasets such as phone call logs [ 88 , 110 ], mobile application usages logs [ 124 , 149 ], SMS Log [ 28 ], mobile phone notification logs [ 77 ] etc., IoT data [ 56 , 11 , 64 ], health data such as heart disease [ 99 ], diabetes mellitus [ 86 , 147 ], COVID-19 [ 41 , 78 ], etc., agriculture and e-commerce data [ 128 , 150 ], and many more in various application domains. In “ Real-world application domains ”, we discuss ten potential real-world application domains of data science and analytics by taking into account data-driven smart computing and decision making, which can help the data scientists and application developers to explore more in various real-world issues.

Overall, the data used in data-driven applications can be any of the types mentioned above, and they can differ from one application to another in the real world. Data science modeling, which is briefly discussed below, can be used to analyze such data in a specific problem domain and derive insights or useful information from the data to build a data-driven model or data product.

Steps of Data Science Modeling

Data science is typically an umbrella term that encompasses advanced data analytics, data mining, machine and deep learning modeling, and several other related disciplines like statistics, to extract insights or useful knowledge from the datasets and transform them into actionable business strategies, as mentioned earlier in “Background and related work”. In this section, we briefly discuss how data science can play a significant role in the real-world business process. Figure 2 shows an example of data science modeling starting from real-world data to data-driven product and automation. In the following, we briefly discuss each module of the data science process.

- Understanding business problems: This involves getting a clear understanding of the problem that needs to be solved, how it impacts the relevant organization or individuals, the ultimate goals for addressing it, and the relevant project plan. Thus, to understand and identify the business problems, data scientists formulate relevant questions while working with the end-users and other stakeholders. For instance, how much/many, which category/group, is the behavior unrealistic/abnormal, which option should be taken, what action, etc. could be relevant questions depending on the nature of the problems. This helps to get a better idea of what the business needs and what should be extracted from the data. Such business knowledge, which can enable organizations to enhance their decision-making process, is known as “Business Intelligence” [ 65 ]. Identifying the relevant data sources that can help to answer the formulated questions, and what kinds of actions should be taken based on the trends that the data shows, is another important task associated with this stage. Once the business problem has been clearly stated, the data scientist can define the analytic approach to solve the problem.

- Understanding data: Data science is largely driven by the availability of data [ 114 ]. Thus a sound understanding of the data is needed to build a data-driven model or system. The reason is that real-world data sets are often noisy, contain missing values, or have inconsistencies or other data issues, which need to be handled effectively [ 101 ]. To gain actionable insights, appropriate data of sufficient quality must be sourced and cleansed, which is fundamental to any data science engagement. For this, data assessment that evaluates what data is available and how it aligns to the business problem could be the first step in data understanding. Several aspects such as data type/format, whether the quantity of data is sufficient to extract useful knowledge, data relevance, authorized access to data, feature or attribute importance, combining multiple data sources, important metrics to report the data, etc. need to be taken into account to clearly understand the data for a particular business problem. Overall, the data understanding module involves figuring out what data is needed and the best ways to acquire it.

- Data pre-processing and exploration: Exploratory data analysis is defined in data science as an approach to analyzing datasets to summarize their key characteristics, often with visual methods [ 135 ]. This examines a broad data collection to discover initial trends, attributes, points of interest, etc. in an unstructured manner to construct meaningful summaries of the data. Thus data exploration is typically used to figure out the gist of data and to develop a first-step assessment of its quality, quantity, and characteristics. A statistical model can be used or not, but primarily it offers tools for creating hypotheses by visualizing and interpreting the data through graphical representations such as a chart, plot, histogram, etc. [ 72 , 91 ]. Before the data is ready for modeling, it’s necessary to use data summarization and visualization to audit the quality of the data and provide the information needed to process it. To ensure the quality of the data, data pre-processing, which is typically the process of cleaning and transforming raw data [ 107 ] before processing and analysis, is important. It also involves reformatting information, making data corrections, and merging data sets to enrich data. Thus, several aspects such as expected data, data cleaning, formatting or transforming data, dealing with missing values, handling data imbalance and bias issues, data distribution, searching for outliers or anomalies in data and dealing with them, ensuring data quality, etc. could be the key considerations in this step.

- Machine learning modeling and evaluation: Once the data is prepared for building the model, data scientists design a model, algorithm, or set of models, to address the business problem. Model building is dependent on what type of analytics, e.g., predictive analytics, is needed to solve the particular problem, which is discussed briefly in “Advanced analytics methods and smart computing”. To best fit the data according to the type of analytics, different types of data-driven or machine learning models, which have been summarized in our earlier paper Sarker et al. [ 105 ], can be built to achieve the goal. Data scientists typically separate the given dataset into training and test subsets, usually dividing it in the ratio of 80:20, or split the data using the popular k-fold method [ 38 ]. This is to observe whether the model performs well or not on the data, and to maximize the model performance. Various model validation and assessment metrics, such as error rate, accuracy, true positive, false positive, true negative, false negative, precision, recall, f-score, ROC (receiver operating characteristic curve) analysis, applicability analysis, etc. [ 38 , 115 ] are used to measure the model performance, which can guide the data scientists to choose or design the learning method or model. Besides, machine learning experts or data scientists can take into account several advanced techniques such as feature engineering, feature selection or extraction methods, algorithm tuning, ensemble methods, modifying existing algorithms, or designing new algorithms, etc. to improve the ultimate data-driven model to solve a particular business problem through smart decision making.

- Data product and automation: A data product is typically the output of any data science activity [ 17 ]. A data product, in general terms, is a data deliverable, or data-enabled or guided, which can be a discovery, prediction, service, suggestion, insight into decision-making, thought, model, paradigm, tool, application, or system that processes data and generates results. Businesses can use the results of such data analysis to obtain useful information like churn (a measure of how many customers stop using a product) prediction and customer segmentation, and use these results to make smarter business decisions and to automate processes. Thus, to make better decisions in various business problems, various machine learning pipelines and data products can be developed. To highlight this, we summarize several potential real-world data science application areas in “Real-world application domains”, where various data products can play a significant role in relevant business problems to make them smart and automated.
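The pre-processing and exploration step in the list above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the procedure used in the paper: missing values are filled with the median (one common choice among many), outliers are flagged with Tukey's 1.5 × IQR rule, and the function names `impute_missing`, `find_outliers`, and `summarize` as well as the sample readings are hypothetical.

```python
import statistics


def impute_missing(values):
    """Replace None entries with the median of the observed values."""
    observed = [v for v in values if v is not None]
    median = statistics.median(observed)
    return [median if v is None else v for v in values]


def find_outliers(values, k=1.5):
    """Return values outside [Q1 - k*IQR, Q3 + k*IQR] (Tukey's rule)."""
    q1, _, q3 = statistics.quantiles(values, n=4)
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if v < low or v > high]


def summarize(values):
    """Basic descriptive summary used for a first look at the data."""
    return {
        "count": len(values),
        "mean": statistics.mean(values),
        "median": statistics.median(values),
        "stdev": statistics.stdev(values),
    }


if __name__ == "__main__":
    # Hypothetical sensor readings with two missing values and one suspicious spike.
    raw = [12.0, 15.0, None, 14.0, 13.0, 120.0, 16.0, None, 11.0]
    cleaned = impute_missing(raw)
    print(summarize(cleaned))
    print(find_outliers(cleaned))
```

Whether a flagged value should be removed, capped, or kept is a domain decision; the sketch only surfaces candidates for inspection.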

Overall, we can conclude that data science modeling can be used to help drive changes and improvements in business practices. The interesting part of the data science process is gaining a deeper understanding of the business problem to be solved. Without that, it would be much harder to gather the right data and extract the most useful information from the data for making decisions to solve the problem. In terms of role, “Data Scientists” typically interpret and manage data to uncover the answers to major questions that help organizations to make objective decisions and solve complex problems. In summary, a data scientist proactively gathers and analyzes information from multiple sources to better understand how the business performs, and designs machine learning or data-driven tools, methods, or algorithms focused on advanced analytics, which can make today’s computing process smarter and more intelligent, as discussed briefly in the following section.
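The 80:20 split, k-fold splitting, and confusion-matrix metrics mentioned in the modeling and evaluation step can be sketched with the Python standard library alone. This is a simplified illustration, not a definitive implementation: the helper names are hypothetical, the k-fold variant assigns samples to folds by simple interleaving without shuffling, and the labels in the usage example are made up.

```python
import random


def train_test_split(rows, test_ratio=0.2, seed=0):
    """Shuffle a copy of rows and return (train, test), 80:20 by default."""
    rng = random.Random(seed)
    shuffled = rows[:]
    rng.shuffle(shuffled)
    n_test = int(round(len(shuffled) * test_ratio))
    return shuffled[n_test:], shuffled[:n_test]


def k_fold_indices(n_samples, k=5):
    """Yield (train_idx, test_idx); each sample lands in exactly one test fold."""
    indices = list(range(n_samples))
    for fold in range(k):
        test_idx = indices[fold::k]  # every k-th sample, starting at `fold`
        test_set = set(test_idx)
        train_idx = [i for i in indices if i not in test_set]
        yield train_idx, test_idx


def classification_metrics(y_true, y_pred, positive=1):
    """Compute confusion-matrix counts and the metrics derived from them."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    tn = sum(1 for t, p in pairs if t != positive and p != positive)
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if (precision + recall) else 0.0)
    return {
        "tp": tp, "fp": fp, "fn": fn, "tn": tn,
        "accuracy": (tp + tn) / len(pairs),
        "precision": precision,
        "recall": recall,
        "f1": f1,
    }


if __name__ == "__main__":
    train, test = train_test_split(list(range(10)))
    print(len(train), len(test))
    # Made-up labels: 1 = positive class, 0 = negative class.
    y_true = [1, 1, 1, 0, 0, 0, 1, 0]
    y_pred = [1, 0, 1, 0, 1, 0, 1, 0]
    print(classification_metrics(y_true, y_pred))
```

In practice, the test subset (or each held-out fold) is used only for measuring performance, so that the reported metrics reflect how the model behaves on data it has not seen during training.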

Fig. 2 An example of data science modeling from real-world data to data-driven system and decision making

Advanced Analytics Methods and Smart Computing

As mentioned earlier in “Background and related work”, basic analytics provides a summary of data whereas advanced analytics takes a step forward in offering a deeper understanding of data and helps in granular data analysis. For instance, the predictive capabilities of advanced analytics can be used to forecast trends, events, and behaviors. Thus, “advanced analytics” can be defined as the autonomous or semi-autonomous analysis of data or content using advanced techniques and methods to discover deeper insights, make predictions, or produce recommendations, where machine learning-based analytical modeling is considered the key technology in the area. In the following section, we first summarize the various types of analytics and outcomes that are needed to solve the associated business problems, and then we briefly discuss machine learning-based analytical modeling.

Types of Analytics and Outcome

In real-world business processes, several key questions such as “What happened?”, “Why did it happen?”, “What will happen in the future?”, and “What action should be taken?” are common and important. Based on these questions, in this paper, we categorize analytics into four types: descriptive, diagnostic, predictive, and prescriptive, which are discussed below.

- Descriptive analytics: It is the interpretation of historical data to better understand the changes that have occurred in a business. Thus, descriptive analytics answers the question, “what happened in the past?” by summarizing past data such as statistics on sales and operations, marketing strategies, use of social media, and engagement with Twitter, LinkedIn, or Facebook. For instance, by analyzing trends, patterns, and anomalies with descriptive analytics, customers’ historical shopping data can be used to predict the probability of a customer purchasing a product. Thus, descriptive analytics can play a significant role in providing an accurate picture of what has occurred in a business and how it relates to previous periods, utilizing a broad range of relevant business data. As a result, managers and decision-makers can pinpoint areas of strength and weakness in their business, and eventually adopt more effective management strategies and make better business decisions.

- Diagnostic analytics: It is a form of advanced analytics that examines data or content to answer the question, “why did it happen?” The goal of diagnostic analytics is to help find the root cause of a problem. For example, the human resource management department of a business organization may use diagnostic analytics to find the best applicant for a position, select them, and compare them to others in similar positions to see how well they perform. In a healthcare example, it might help to figure out whether a patient’s symptoms, such as high fever, dry cough, headache, and fatigue, are all caused by the same infectious agent. Overall, diagnostic analytics enables one to extract value from the data by posing the right questions and conducting in-depth investigations into the answers. It is characterized by techniques such as drill-down, data discovery, data mining, and correlations.

- Predictive analytics: Predictive analytics is an important analytical technique used by many organizations to enhance their business, for purposes such as assessing business risks, anticipating potential market patterns, and deciding when maintenance is needed. It is a form of advanced analytics that examines data or content to answer the question, “what will happen in the future?” Thus, the primary goal of predictive analytics is to answer this question with a high degree of probability. Data scientists can use historical data as a source of insights for building predictive models using various regression analyses and machine learning techniques, which can be used in various application domains for a better outcome. For example, companies can use predictive analytics to minimize costs by better anticipating future demand and adjusting output and inventory; banks and other financial institutions to reduce fraud and risks by predicting suspicious activity; medical specialists to make effective decisions by predicting which patients are at risk of diseases; retailers to increase sales and customer satisfaction by understanding and predicting customer preferences; and manufacturers to optimize production capacity by predicting maintenance requirements. Thus, predictive analytics can be considered the core analytical method within the area of data science.

- Prescriptive analytics: Prescriptive analytics focuses on recommending the best way forward with actionable information to maximize overall returns and profitability, and typically answers the question, “what action should be taken?” In business analytics, prescriptive analytics is considered the final step. For its models, prescriptive analytics collects data from several descriptive and predictive sources and applies it to the decision-making process. Thus, we can say that it is related to both descriptive analytics and predictive analytics, but it emphasizes actionable insights instead of data monitoring. In other words, it can be considered the opposite of descriptive analytics, which examines decisions and outcomes after the fact. By integrating big data, machine learning, and business rules, prescriptive analytics helps organizations make more informed decisions and produce results that drive the most successful business outcomes.

In summary, to clarify what happened and why it happened, both descriptive analytics and diagnostic analytics look at the past. Predictive analytics and prescriptive analytics use historical data to forecast what will happen in the future and what steps should be taken to influence those outcomes. In Table 1, we have summarized these analytics methods with examples. Forward-thinking organizations in the real world can jointly use these analytical methods to make smart decisions that help drive changes and improvements in business processes. In the following, we discuss how machine learning techniques can play a big role in these analytical methods through their capability to learn from data.
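The four questions above can be illustrated with a minimal sketch on a toy series. All figures, variable names, and the stock threshold below are hypothetical and chosen only for illustration; each step is the simplest possible stand-in for its type of analytics, not a recommended method.

```python
from statistics import mean

# Hypothetical monthly figures, invented purely for illustration.
ad_spend = [10.0, 12.0, 14.0, 16.0, 18.0, 20.0]        # thousands
sales = [100.0, 110.0, 120.0, 130.0, 140.0, 150.0]     # units sold


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length series."""
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    var_x = sum((x - mx) ** 2 for x in xs)
    var_y = sum((y - my) ** 2 for y in ys)
    return cov / (var_x * var_y) ** 0.5


def linear_trend(ys):
    """Least-squares line through (0, y0), (1, y1), ...; returns (intercept, slope)."""
    xs = list(range(len(ys)))
    mx, my = mean(xs), mean(ys)
    slope = (sum((x - mx) * (y - my) for x, y in zip(xs, ys))
             / sum((x - mx) ** 2 for x in xs))
    return my - slope * mx, slope


# Descriptive: what happened?
average_sales = mean(sales)

# Diagnostic: why did it happen? (a correlation is a hint, not proof of cause)
spend_sales_corr = pearson(ad_spend, sales)

# Predictive: what will happen next month?
intercept, slope = linear_trend(sales)
forecast = intercept + slope * len(sales)

# Prescriptive: what action should be taken? (toy business rule)
stock_capacity = 155.0
action = "increase stock" if forecast > stock_capacity else "keep stock"
```

On this perfectly linear toy series the forecast for the next month is 160 units, which exceeds the assumed capacity and triggers the "increase stock" rule.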

Various types of analytical methods with examples

Machine Learning Based Analytical Modeling

In this section, we briefly discuss various advanced analytics methods based on machine learning modeling, which can make the computing process smart through intelligent decision-making in a business process. Figure 3 shows the general structure of a machine learning-based predictive model, considering both the training and testing phases. In the following, we discuss a wide range of methods such as regression and classification analysis, association rule analysis, time-series analysis, behavioral analysis, log analysis, and so on within the scope of our study.

A general structure of a machine learning based predictive model considering both the training and testing phase

Regression Analysis

In data science, regression is one of the most common statistical approaches used for predictive modeling and data mining tasks [ 38 ]. Regression analysis is a form of supervised machine learning that examines the relationship between a dependent variable (target) and one or more independent variables (predictors) to predict a continuous-valued output [ 105 , 117 ]. Equations 1, 2, and 3 [ 85 , 105 ] represent the simple, multiple or multivariate, and polynomial regressions, respectively, where x represents the independent variable and y is the predicted/target output mentioned above:
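In their standard textbook forms, these three models can be written as follows. The symbols a (intercept), b and b_i (regression coefficients), and e (error term) are assumed notation here, since only x and y are defined in the text.

```latex
\begin{align}
y &= a + b x + e \tag{1} \\
y &= a + b_1 x_1 + b_2 x_2 + \cdots + b_n x_n + e \tag{2} \\
y &= a + b_1 x + b_2 x^2 + \cdots + b_n x^n + e \tag{3}
\end{align}
```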

Regression analysis is typically conducted for one of two purposes: to predict the value of the dependent variable for individuals for whom some knowledge of the explanatory variables is available, or to estimate the effect of some explanatory variable on the dependent variable, i.e., to find the relationship of causal influence between the variables. Linear regression cannot fit non-linear data well and may cause an underfitting problem. In that case, polynomial regression performs better, although it increases the model complexity. Regularization techniques such as Ridge, Lasso, and Elastic-Net [ 85 , 105 ] can be used to reduce overfitting in the linear regression model. In addition, support vector regression, decision tree regression, and random forest regression techniques [ 85 , 105 ] can be used for building effective regression models depending on the problem type, e.g., non-linear tasks. Financial forecasting or prediction, cost estimation, trend analysis, marketing, time-series estimation, and drug response modeling are some examples where regression models can be used to solve real-world problems in the domain of data science and analytics.
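A minimal sketch of simple linear regression with an optional Ridge-style penalty may make these points concrete. The function names, the `ridge` parameter, and the toy data are hypothetical; the penalty is applied to the slope only, which is one common convention rather than the only one.

```python
from statistics import mean


def fit_line(xs, ys, ridge=0.0):
    """Fit y = a + b*x by least squares.

    ridge > 0 adds an L2 penalty on the slope b only (the intercept a is
    left unpenalized), which shrinks b toward zero.
    """
    mx, my = mean(xs), mean(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    b = sxy / (sxx + ridge)
    a = my - b * mx
    return a, b


def mse(xs, ys, a, b):
    """Mean squared error of the line y = a + b*x on the given data."""
    return mean((y - (a + b * x)) ** 2 for x, y in zip(xs, ys))


# Exactly linear toy data: y = 1 + 2x.
lin_x, lin_y = [0.0, 1.0, 2.0, 3.0], [1.0, 3.0, 5.0, 7.0]
a_plain, b_plain = fit_line(lin_x, lin_y)             # recovers a = 1, b = 2
a_ridge, b_ridge = fit_line(lin_x, lin_y, ridge=5.0)  # slope shrunk toward 0

# Non-linear toy data: y = x^2. A straight line underfits it.
quad_x = [-2.0, -1.0, 0.0, 1.0, 2.0]
quad_y = [x ** 2 for x in quad_x]
a_q, b_q = fit_line(quad_x, quad_y)
underfit_error = mse(quad_x, quad_y, a_q, b_q)
```

On the quadratic data the best straight line is flat (slope 0), so its error stays large no matter how the line is fitted. This is the underfitting case in which a polynomial term would help, at the cost of a more complex model.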

Classification Analysis

Classification is one of the most widely used and best-known data science processes. It is a form of supervised machine learning that refers to a predictive modeling problem in which a class label is predicted for a given example [ 38 ]. Spam identification, with classes such as ‘spam’ and ‘not spam’ in email services, is an example of a classification problem. There are several forms of classification analysis in the area: binary classification, which refers to the prediction of one of two classes; multi-class classification, which involves the prediction of one of more than two classes; and multi-label classification, a generalization of multi-class classification in which each example may be assigned several class labels at once [ 105 ].

Several popular classification techniques, such as k-nearest neighbors [ 5 ], support vector machines [ 55 ], naive Bayes [ 49 ], adaptive boosting [ 32 ], extreme gradient boosting [ 85 ], logistic regression [ 66 ], decision trees ID3 [ 92 ] and C4.5 [ 93 ], and random forests [ 13 ], exist to solve classification problems. The tree-based classification technique, e.g., random forest considering multiple decision trees, performs better than others in solving real-world problems in many cases, owing to its capability of producing logic rules [ 103 , 115 ]. Figure 4 shows an example of a random forest structure considering multiple decision trees. In addition, BehavDT, recently proposed by Sarker et al. [ 109 ], and IntrudTree [ 106 ] can be used for building effective classification or prediction models in the relevant tasks within the domain of data science and analytics.

An example of a random forest structure considering multiple decision trees
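Of the techniques listed above, k-nearest neighbors is the simplest to sketch in a few lines. The example below is a minimal illustration applied to the spam example; the two features (number of links and number of exclamation marks) and the toy training set are hypothetical.

```python
from collections import Counter
from math import dist


def knn_predict(train, query, k=3):
    """Predict a class label for `query` by majority vote among its
    k nearest training points (Euclidean distance).

    `train` is a list of (feature_tuple, label) pairs.
    """
    neighbors = sorted(train, key=lambda item: dist(item[0], query))[:k]
    votes = Counter(label for _, label in neighbors)
    return votes.most_common(1)[0][0]


# Hypothetical features per email: (number of links, number of "!").
training_emails = [
    ((0, 0), "not spam"),
    ((1, 0), "not spam"),
    ((0, 1), "not spam"),
    ((5, 6), "spam"),
    ((6, 5), "spam"),
    ((7, 7), "spam"),
]

label_a = knn_predict(training_emails, (6, 6))  # lands among the spam points
label_b = knn_predict(training_emails, (1, 1))  # lands among the non-spam points
```

The same function handles multi-class problems unchanged, since the vote is taken over whatever labels appear among the neighbors.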

Cluster Analysis

Clustering is a form of unsupervised machine learning and is well-known in many data science application areas for statistical data analysis [ 38 ]. Usually, clustering techniques search for structure inside a dataset and, if the classes are not previously known, group the cases into homogeneous clusters. This means that data points within a cluster are similar to each other and different from data points in other clusters. Overall, the purpose of cluster analysis is to sort data points into groups (or clusters) that are homogeneous internally and heterogeneous externally [ 105 ]. Clustering is often used to gain insight into how data is distributed in a given dataset, or as a preprocessing phase for other algorithms. Data clustering, for example, assists with analyzing customer shopping behavior, sales campaigns, and consumer retention for retail businesses, as well as with anomaly detection.

Many clustering algorithms with the ability to group data have been proposed in the machine learning and data science literature [ 98 , 138 , 141 ]. In our earlier paper, Sarker et al. [ 105 ], we summarized these from several perspectives, such as partitioning methods, density-based methods, hierarchical methods, and model-based methods. In the literature, the popular K-means [ 75 ], K-Medoids [ 84 ], CLARA [ 54 ], etc. are known as partitioning methods; DBSCAN [ 30 ], OPTICS [ 8 ], etc. are known as density-based methods; and single linkage [ 122 ], complete linkage [ 123 ], etc. are known as hierarchical methods. In addition, grid-based clustering methods, such as STING [ 134 ] and CLIQUE [ 2 ]; model-based clustering methods, such as neural network learning [ 141 ], GMM [ 94 ], and SOM [ 18 , 104 ]; and constraint-based methods, such as COP K-means [ 131 ] and CMWK-Means [ 25 ], are used in the area. Recently, Sarker et al. [ 111 ] proposed a hierarchical clustering method, BOTS, based on a bottom-up agglomerative technique for capturing users’ similar behavioral characteristics over time. The key benefit of agglomerative hierarchical clustering is that the tree-structured hierarchy it creates is more informative than an unstructured set of flat clusters, which can assist in better decision-making in relevant application areas in data science.
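As an illustration of the partitioning family, the following is a minimal sketch of K-means using Lloyd's iteration of alternating assignment and update steps. The function signature and the toy points are hypothetical, and the initial centroids are passed in by the caller so that the run is deterministic; practical implementations usually choose them randomly or with a seeding heuristic.

```python
from math import dist


def kmeans(points, centroids, max_iter=100):
    """Lloyd's algorithm for K-means.

    `centroids` holds the initial cluster centers (chosen by the caller so
    the run is deterministic). Returns (final_centroids, labels).
    """
    centroids = [tuple(c) for c in centroids]
    labels = []
    for _ in range(max_iter):
        # Assignment step: attach each point to its nearest centroid.
        labels = [
            min(range(len(centroids)), key=lambda j: dist(p, centroids[j]))
            for p in points
        ]
        # Update step: move each centroid to the mean of its members.
        new_centroids = []
        for j, old in enumerate(centroids):
            members = [p for p, lab in zip(points, labels) if lab == j]
            if members:
                new_centroids.append(
                    tuple(sum(coord) / len(members) for coord in zip(*members))
                )
            else:
                new_centroids.append(old)  # keep the center of an empty cluster
        if new_centroids == centroids:
            break  # converged: no centroid moved
        centroids = new_centroids
    return centroids, labels


# Two well-separated toy groups of 2-D points.
toy_points = [(1.0, 1.0), (1.0, 2.0), (2.0, 1.0),
              (8.0, 8.0), (9.0, 8.0), (8.0, 9.0)]
final_centroids, cluster_labels = kmeans(toy_points, [toy_points[0], toy_points[3]])
```

Here the first three points end up in one cluster and the last three in the other, matching the description of clusters as homogeneous internally and heterogeneous externally.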

Association Rule Analysis

Association rule learning is a rule-based, unsupervised machine learning method that is typically used to establish relationships among variables. It is a descriptive technique often used to analyze large datasets to discover interesting relationships or patterns. The main strength of the association learning technique is its comprehensiveness, as it produces all associations that meet user-specified constraints, including minimum support and confidence values [ 138 ].

Association rules allow a data scientist to identify trends, associations, and co-occurrences between items inside large data collections. In a supermarket, for example, associations reveal knowledge about consumers’ buying behavior for different items, which helps to adjust the marketing and sales plan. In healthcare, physicians may use association rules to better diagnose patients. Using association rules and machine learning-based data analysis, doctors can assess the conditional likelihood of a given illness by comparing symptom associations in the data from previous cases. Similarly, association rules are useful for consumer behavior analysis and prediction, customer market analysis, bioinformatics, weblog mining, recommendation systems, etc.
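The support and confidence constraints mentioned above can be sketched for the supermarket example as follows. This is a minimal brute-force illustration limited to rules with one item on each side; the function names, thresholds, and baskets are hypothetical, and it is not an implementation of any particular mining algorithm such as Apriori.

```python
from itertools import combinations


def support(itemset, transactions):
    """Fraction of transactions that contain every item in `itemset`."""
    itemset = set(itemset)
    return sum(itemset <= t for t in transactions) / len(transactions)


def mine_rules(transactions, min_support, min_confidence):
    """Return single-item rules (A -> B) meeting both thresholds.

    Each rule is a tuple (antecedent, consequent, support, confidence).
    """
    items = sorted(set().union(*transactions))
    rules = []
    for a, b in combinations(items, 2):
        pair_support = support({a, b}, transactions)
        if pair_support < min_support:
            continue  # the pair is too rare to be of interest
        for lhs, rhs in ((a, b), (b, a)):
            # confidence(lhs -> rhs) = support(lhs and rhs) / support(lhs)
            confidence = pair_support / support({lhs}, transactions)
            if confidence >= min_confidence:
                rules.append((lhs, rhs, pair_support, confidence))
    return rules


# Hypothetical supermarket baskets.
baskets = [
    {"bread", "milk"},
    {"bread", "butter"},
    {"bread", "milk", "butter"},
    {"milk"},
    {"bread", "milk"},
]
found_rules = mine_rules(baskets, min_support=0.3, min_confidence=0.7)
```

With these thresholds the sketch keeps butter -> bread, bread -> milk, and milk -> bread. It drops bread -> butter because only half of the bread baskets also contain butter, which shows that confidence depends on the direction of the rule.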