Thank you for visiting nature.com. You are using a browser version with limited support for CSS. To obtain the best experience, we recommend you use a more up to date browser (or turn off compatibility mode in Internet Explorer). In the meantime, to ensure continued support, we are displaying the site without styles and JavaScript.

- View all journals

- Explore content

- About the journal

- Publish with us

- Sign up for alerts

- Open access

- Published: 09 October 2023

Sign language recognition using the fusion of image and hand landmarks through multi-headed convolutional neural network

- Refat Khan Pathan 1 ,

- Munmun Biswas 2 ,

- Suraiya Yasmin 3 ,

- Mayeen Uddin Khandaker ORCID: orcid.org/0000-0003-3772-294X 4 , 5 ,

- Mohammad Salman 6 &

- Ahmed A. F. Youssef 6

Scientific Reports volume 13 , Article number: 16975 ( 2023 ) Cite this article

18k Accesses

14 Citations

Metrics details

- Computational science

- Image processing

Sign Language Recognition is a breakthrough for communication among deaf-mute society and has been a critical research topic for years. Although some of the previous studies have successfully recognized sign language, it requires many costly instruments including sensors, devices, and high-end processing power. However, such drawbacks can be easily overcome by employing artificial intelligence-based techniques. Since, in this modern era of advanced mobile technology, using a camera to take video or images is much easier, this study demonstrates a cost-effective technique to detect American Sign Language (ASL) using an image dataset. Here, “Finger Spelling, A” dataset has been used, with 24 letters (except j and z as they contain motion). The main reason for using this dataset is that these images have a complex background with different environments and scene colors. Two layers of image processing have been used: in the first layer, images are processed as a whole for training, and in the second layer, the hand landmarks are extracted. A multi-headed convolutional neural network (CNN) model has been proposed and tested with 30% of the dataset to train these two layers. To avoid the overfitting problem, data augmentation and dynamic learning rate reduction have been used. With the proposed model, 98.981% test accuracy has been achieved. It is expected that this study may help to develop an efficient human–machine communication system for a deaf-mute society.

Similar content being viewed by others

AI enabled sign language recognition and VR space bidirectional communication using triboelectric smart glove

Sign language recognition based on dual-path background erasure convolutional neural network

Improved 3D-ResNet sign language recognition algorithm with enhanced hand features

Introduction.

Spoken language is the medium of communication between a majority of the population. With spoken language, it would be workable for a massive extent of the population to impart. Nonetheless, despite spoken language, a section of the population cannot speak with most of the other population. Mute people cannot convey a proper meaning using spoken language. Hard of hearing is a handicap that weakens their hearing and makes them unfit to hear, while quiet is an incapacity that impedes their talking and makes them incapable of talking. Both are just handicapped in their hearing or potentially, therefore, cannot still do many other things. Communication is the only thing that isolates them from ordinary people 1 . As there are so many languages in the world, a unique language is needed to express their thoughts and opinions, which will be understandable to ordinary people, and such a language is named sign language. Understanding sign language is an arduous task, an ability that must be educated with training.

Many methods are available that use different things/tools like images (2D, 3D), sensor data (hand globe 2 , Kinect sensor 3 , neuromorphic sensor 4 ), videos, etc. All things are considered due to the fact that the captured images are excessively noisy. Therefore an elevated level of pre-processing is required. The available online datasets are already processed or taken in a lab environment where it becomes easy for recent advanced AI models to train and evaluate, causing prone to errors in real-life applications with different kinds of noises. Accordingly, it is a basic need to make a model that can deal with noisy images and also be able to deliver positive results. Different sorts of methods can be utilized to execute the classification and recognition of images using machine learning. Apart from recognizing static images, work has been done in depth-camera detecting and video processing 5 , 6 , 7 . Various cycles inserted in the system were created utilizing other programming languages to execute the procedural strategies for the final system's maximum adequacy. The issue can be addressed and deliberately coordinated into three comparable methodologies: initially using static image recognition techniques and pre-processing procedures, secondly by using deep learning models, and thirdly by using Hidden Markov Models.

Sign language guides this part of the community and empowers smooth communication in the community of people with trouble talking and hearing (deaf and dumb). They use hand signals along with facial expressions and body activities to cooperate. Yet, as a global language, not many people become familiar with communication via sign language gestures 8 . Hand motions comprise a significant part of communication through signing vocabulary. At the same time, facial expressions and body activities assume the jobs of underlining the words and phrases communicated by hand motions. Hand motions can be static or dynamic 9 , 10 . There are methodologies for motion discovery utilizing the dynamic vision sensor (DVS), a similar technique used in the framework introduced in this composition. For example, Arnon et al. 11 have presented an event-based gesture recognition system, which measures the event stream utilizing a natively event-based processor from International Business Machines called TrueNorth. They use a temporal filter cascade to create Spatio-temporal frames that CNN executes in the event-based processor, and they reported an accuracy of 96.46%. But in a real-life scenario, corresponding background situations are not static. Therefore the stated power saving process might not work properly. Jun Haeng Lee et al. 12 proposed a motion classification method with two DVSs to get a stereo-vision system. They used spike neurons to handle the approaching occasions with the same real-life issue. Static hand signals are also called hand acts and are framed in different shapes and directions of hands without speaking to any movement data. Dynamic hand motions comprise a sequence of hand stances with related movement information 13 . Using facial expressions, static hand images, and hand signals, communication through signing gives instruments to convey similarly as if communicated in dialects; there are different kinds of communication via gestures as well 14 .

In this work, we have applied a fusion of traditional image processing with extracted hand landmarks and trained on a multi-headed CNN so that it could complement each other’s weights on the concatenation layer. The main objective is to achieve a better detection rate without relying on a traditional single-channel CNN. This method has been proven to work well with less computational power and fewer epochs on medical image datasets 15 . The rest of the paper is divided into multiple sections as literature review in " Literature review " section, materials and methods in " Materials and methods " section with three subsections: dataset description in Dataset description , image pre-processing in " Pre-processing of image dataset " and working procedure in " Working procedure ", result analysis in " Result analysis " section, and conclusion in " Conclusion " section.

Literature review

State-of-the-art techniques centered after utilizing deep learning models to improve good accuracy and less execution time. CNNs have indicated huge improvements in visual object recognition 16 , natural language processing 17 , scene labeling 18 , medical image processing 15 , and so on. Despite these accomplishments, there is little work on applying CNNs to video classification. This is halfway because of the trouble in adjusting the CNNs to join both spatial and fleeting data. Model using exceptional hardware components such as a depth camera has been used to get the data on the depth variation in the image to locate an extra component for correlation, and then built up a CNN for getting the results 19 , still has low accuracy. An innovative technique that does not need a pre-trained model for executing the system was created using a capsule network and versatile pooling 11 .

Furthermore, it was revealed that lowering the layers of CNN, which employs a greedy way to do so, and developing a deep belief network produced superior outcomes compared to other fundamental methodologies 20 . Feature extraction using scale-invariant feature transform (SIFT) and classification using Neural Networks were developed to obtain the ideal results 21 . In one of the methods, the images were changed into an RGB conspire, the data was developed utilizing the movement depth channel lastly using 3D recurrent convolutional neural networks (3DRCNN) to build up a working system 5 , 22 where Canny edge detection oriented FAST and Rotated BRIEF (ORB) has been used. ORB feature detection technique and K-means clustering algorithm used to create the bag of feature model for all descriptors is described, but the plain background, easy to detect edges are totally dependent on edges; if the edges give wrong info, the model may fall accuracy and become the main problem to solve.

In recent years, utilizing deep learning approaches has become standard for improving the recognition accuracy of sign language models. Using Faster Region-based Convolutional Neural Network (Faster-RCNN) 23 , a CNN model is applied for hand recognition in the data image. Rastgoo et al. 24 proposed a method where they cropped an image properly, used fusion between RGB and depth image (RBM), added two noise types (Gaussian noise + salt n paper noise), and prepared the data for training. As a naturally propelled deep learning model, CNNs achieve every one of the three phases with a single framework that is prepared from crude pixel esteems to classifier yields, but extreme computation power was needed. Authors in ref. 25 proposed 3D CNNs where the third dimension joins both spatial and fleeting stamps. It accepts a few neighboring edges as input and performs 3D convolution in the convolutional layers. Along with them, the study reported in 26 followed similar thoughts and proposed regularizing the yields with high-level features, joining the expectations of a wide range of models. They applied the developed models to perceive human activities and accomplished better execution in examination than benchmark methods. But it is not sure it works with hand gestures as they detected face first and thenody movement 27 .

On the other hand, the Microsoft and Leap Motion companies have developed unmistakable approaches to identify and track a user’s hand and body movement by presenting Kinect and the leap motion controller (LMC) separately. Kinect recognizes the body skeleton and tracks the hands, whereas the LMC distinguishes and tracks hands with its underlying cameras and infrared sensors 3 , 28 . Using the provided framework, Sykora et al. 7 utilized the Kinect system to catch the depth data of 10 hand motions to classify them using a speeded-up robust features (SURF) technique that came up to an 82.8% accuracy, but it cannot test on more extensive database and modified feature extraction methods (SIFT, SURF) so it can be caused non-invariant to the orientation of gestures. Likewise, Huang et al. 29 proposed a 10-word-based ASL recognition system utilizing Kinect by tenfold cross-validation with an SVM that accomplished a precision pace of 97% using a set of frame-independent features, but the most significant problem in this method is segmentation.

The literature summarizes that most of the models used in this application either depend on a single variable or require high computational power. Also, their dataset choice for training and validating the model is in plain background, which is easier to detect. Our main aim is to show how to reduce the computational power for training and the dependency of model training on one layer.

Materials and methods

Dataset description.

Using a generalized single-color background to classify sign language is very common. We intended to avoid that single color background and use a complex background with many users’ hand images to increase the detection complexity. That’s why we have used the “ASL Finger Spelling” dataset 30 , which has images of different sizes, orientations, and complex backgrounds of over 500 images per sign (24 sign total) of 4 users (non-native to sign language). This dataset contains separate RGB and depth images; we have worked with the RGB images in this research. The photos were taken in 5 sessions with the same background and lighting. The dataset details are shown in Table 1 , and some sample images are shown in Fig. 1 .

Sample images from a dataset containing 24 signs from the same user.

Pre-processing of image dataset

Images were pre-processed for two operations: preparing the original image training set and extracting the hand landmarks. Traditional CNN has one input data channel and one output channel. We are using two input data channels and one output channel, so data needs to be prepared for both inputs individually.

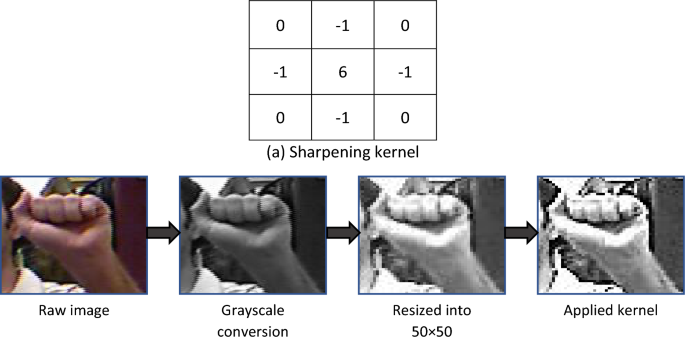

Raw image processing

In raw image processing, we have converted the images from RGB to grayscale to reduce color complexity. Then we used a 2D kernel matrix for sharpening the images, as shown in Fig. 2 . After that, we resized the images into 50 × 50 pixels for evaluation through CNN. Finally, we have normalized the grayscale values (0–255) by dividing the pixel values by 255, so now the new pixel array contains value ranges (0–1). The primary advantage of this normalization is that CNN works faster in the (0–1) range rather than other limits.

Raw image pre-processing with ( a ) sharpening kernel.

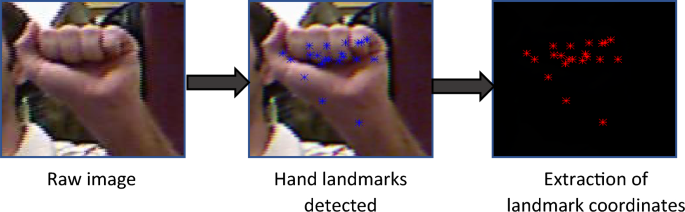

Hand landmark detection

Google’s hand landmark model has an input channel of RGB and an image size of (224 × 224 × 3). So, we have taken the RGB images, converted pixel values into float32, and resized all the images into (256 × 256 × 3). After applying the model, it gives 21 coordinated 3-dimensional points. The landmark detection process is shown in Fig. 3 .

Hand landmarks detection and extraction of 21 coordinates.

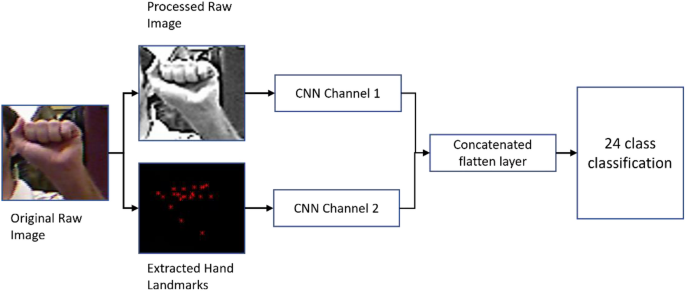

Working procedure

The whole work is divided into two main parts, one is the raw image processing, and another one is the hand landmarks extraction. After both individual processing had been completed, a custom lightweight simple multi-headed CNN model was built to train both data. Before processing through a fully connected layer for classification, we merged both channel’s features so that the model could choose between the best weights. This working procedure is illustrated in Fig. 4 .

Flow diagram of working procedure.

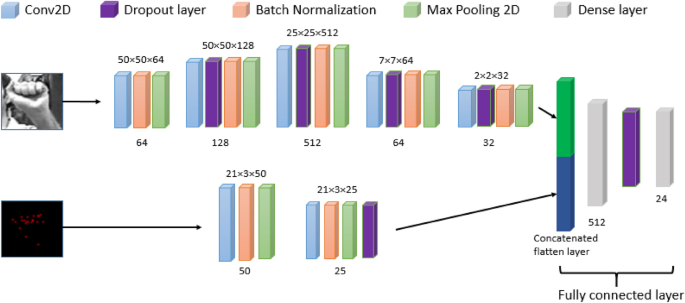

Model building

In this research, we have used multi-headed CNN, meaning our model has two input data channels. Before this, we trained processed images and hand landmarks with two separate models to compare. Google’s model is not best for “in the wild” situations, so we needed original images to complement the low faults in Google’s model. In the first head of the model, we have used the processed images as input and hand landmarks data as the second head’s input. Two-dimensional Convolutional layers with filter size 50, 25, kernel (3, 3) with Relu, strides 1; MaxPooling 2D with pool size (2, 2), batch normalization, and Dropout layer has been used in the hand landmarks training side. Besides, the 2D Convolutional layer with filter size 32, 64, 128, 512, kernel (3, 3) with Relu; MaxPooling 2D with pool size (2, 2); batch normalization and dropout layer has been used in the image training side. After both flatten layers, two heads are concatenated and go through a dense, dropout layer. Finally, the output dense layer has 24 units with Softmax activation. This model has been compiled with Adam optimizer and MSE loss for 50 epochs. Figure 5 illustrates the proposed CNN architecture, and Table 2 shows the model details.

Proposed multi-headed CNN architecture. Bottom values are the number of filters and top values are output shapes.

Training and testing

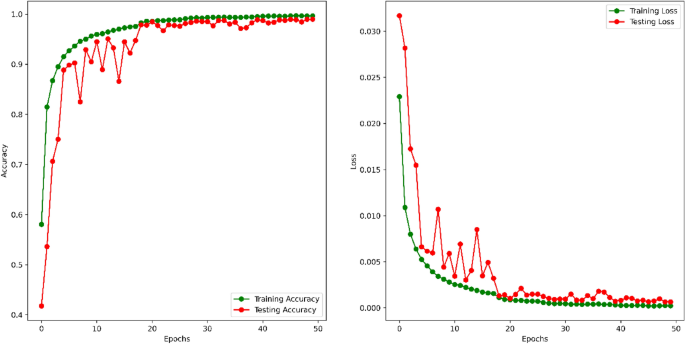

The input images were augmented to generate more difficulty in training so that the model could not overfit. Image Data Generator did image augmentation with 10° rotation, 0.1 zoom range, 0.1 widths and height shift range, and horizontal flip. Being more conscious about the overfitting issues, we have used dynamic learning rates, monitoring the validation accuracy with patience 5, factor 0.5, and a minimum learning rate of 0.00001. For training, we have used 46,023 images, and for testing, 19,725 images. For 50 epochs, the training vs testing accuracy and loss has been shown in Fig. 6 .

Training versus testing accuracy and loss for 50 epochs.

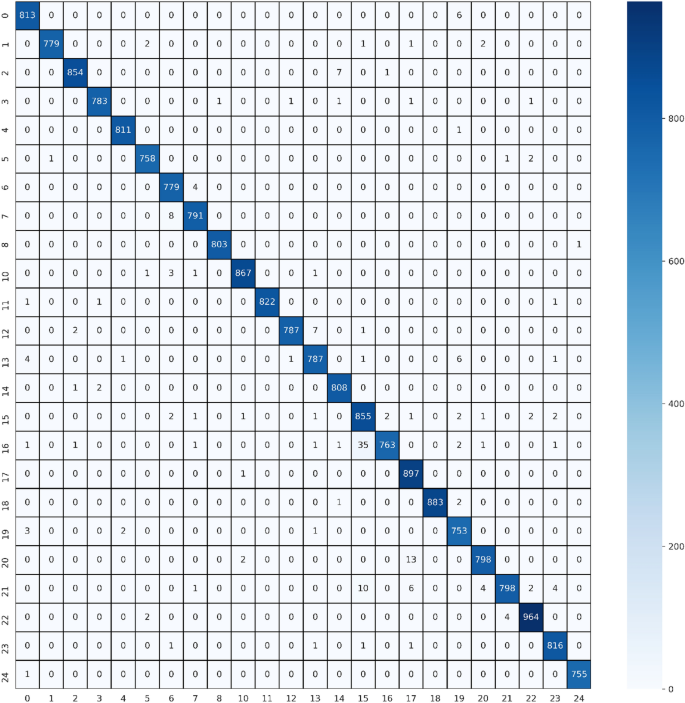

For further evaluation, we have calculated the precision, recall, and F1 score of the proposed multi-headed CNN model, which shows excellent performance. To compute these values, we first calculated the confusion matrix (shown in Fig. 7 ). When a class is positive and also classified as so, it is called true positive (TP). Again, when a class is negative and classified as so, it is called true negative (TN). If a class is negative and classified as positive, it is called false positive (FP). Also, when a class is positive and classified as not negative, it is called false negative (FN). From these, we can conclude precision, recall, and F1 score like the below:

Confusion matrix of the testing dataset. Numerical values in X and Y axis means the sequential letters from A = 0 to Y = 24, number 9 and 25 is missing because dataset does not have letter J and Z.

Precision: Precision is the ratio of TP and total predicted positive observation.

Recall: It is the ratio of TP and total positive observations in the actual class.

F1 score: F1 score is the weighted average of precision and recall.

The Precision, Recall, and F1 score for 24 classes are shown in Table 3 .

Result analysis

In human action recognition tasks, sign language has an extra advantage as it can be used to communicate efficiently. Many techniques have been developed using image processing, sensor data processing, and motion detection by applying different dynamic algorithms and methods like machine learning and deep learning. Depending on methodologies, researchers have proposed their way of classifying sign languages. As technologies develop, we can explore the limitations of previous works and improve accuracy. In ref. 13 , this paper proposes a technique for acknowledging hand motions, which is an excellent part of gesture-based communication jargon, because of a proficient profound deep convolutional neural network (CNN) architecture. The proposed CNN design disposes of the requirement for recognition and division of hands from the captured images, decreasing the computational weight looked at during hand pose recognition with classical approaches. In our method, we used two input channels for the images and hand landmarks to get more robust data, making the process more efficient with a dynamic learning rate adjustment. Besides in ref 14 , the presented results were acquired by retraining and testing the sign language gestures dataset on a convolutional neural organization model utilizing Inception v3. The model comprises various convolution channel inputs that are prepared on a piece of similar information. A capsule-based deep neural network sign posture translator for an American Sign Language (ASL) fingerspelling (posture) 20 has been introduced where the idea concept of capsules and pooling are used simultaneously in the network. This exploration affirms that utilizing pooling and capsule routing on a similar network can improve the network's accuracy and convergence speed. In our method, we have used the pre-trained model of Google to extract the hand landmarks, almost like transfer learning. We have shown that utilizing two input channels could also improve accuracy.

Moreover, ref 5 proposed a 3DRCNN model integrating a 3D convolutional neural network (3DCNN) and upgraded completely associated recurrent neural network (FC-RNN), where 3DCNN learns multi-methodology features from RGB, motion, and depth channels, and FCRNN catch the fleeting data among short video clips divided from the original video. Consecutive clips with a similar semantic significance are singled out by applying the sliding window way to deal with a section of the clips on the whole video sequence. Combining a CNN and traditional feature extractors, capable of accurate and real-time hand posture recognition 26 where the architecture is assessed on three particular benchmark datasets and contrasted and the cutting edge convolutional neural networks. Extensive experimentation is directed utilizing binary, grayscale, and depth data and two different validation techniques. The proposed feature fusion-based CNN 31 is displayed to perform better across blends of approval procedures and image representation. Similarly, fusion-based CNN is demonstrated to improve the recognition rate in our study.

After worldwide motion analysis, the hand gesture image sequence was dissected for keyframe choice. The video sequences of a given gesture were divided in the RGB shading space before feature extraction. This progression enjoyed the benefit of shaded gloves worn by the endorsers. Samples of pixel vectors representative of the glove’s color were used to estimate the mean and covariance matrix of the shading, which was sectioned. So, the division interaction was computerized with no user intervention. The video frames were converted into color HSV (Hue-SaturationValue) space in the color object tracking method. Then the pixels with the following shading were distinguished and marked, and the resultant images were converted to a binary (Gray Scale image). The system identifies image districts compared to human skin by binarizing the input image with a proper threshold value. Then, at that point, small regions from the binarized image were eliminated by applying a morphological operator and selecting the districts to get an image as an applicant of hand.

In the proposed method we have used two-headed CNN to train the processed input images. Though the single image input stream is widely used, two input streams have an advantage among them. In the classification layer of CNN, if one layer is giving a false result, it could be complemented by the other layer’s weight, and it is possible that combining both results could provide a positive outcome. We used this theory and successfully improved the final validation and test results. Before combining image and hand landmark inputs, we tested both individually and acquired a test accuracy of 96.29% for the image and 98.42% for hand landmarks. We did not use binarization as it would affect the background of an image with skin color matched with hand color. This method is also suitable for wild situations as it is not entirely dependent on hand position in an image frame. A comparison of the literature and our work has been shown in Table 4 , which shows that our method overcomes most of the current position in accuracy gain.

Table 5 illustrates that the Combined Model, while having a larger number of parameters and consuming more memory, achieves the highest accuracy of 98.98%. This suggests that the combined approach, which incorporates both image and hand landmark information, is effective for the task when accuracy is priority. On the other hand, the Hand Landmarks Model, despite having fewer parameters and lower memory consumption, also performs impressively with an accuracy of 98.42%. But it has its own error and memory consumption rate in model training by Google. The Image Model, while consuming less memory, has a slightly lower accuracy of 96.29%. The choice between these models would depend on the specific application requirements, trade-offs between accuracy and resource utilization, and the importance of execution time.

This work proposes a methodology for perceiving the classification of sign language recognition. Sign language is the core medium of communication between deaf-mute and everyday people. It is highly implacable in real-world scenarios like communication, human–computer interaction, security, advanced AI, and much more. For a long time, researchers have been working in this field to make a reliable, low cost and publicly available SRL system using different sensors, images, videos, and many more techniques. Many datasets have been used, including numeric sensory, motion, and image datasets. Most datasets are prepared in a good lab condition to do experiments, but in the real world, it may not be a practical case. That’s why, looking into the real-world situation, the Fingerspelling dataset has been used, which contains real-world scenarios like complex backgrounds, uneven image shapes, and conditions. First, the raw images are processed and resized into a 50 × 50 size. Then, the hand landmark points are detected and extracted from these hand images. Making images goes through two processing techniques; now, there are two data channels. A multi-headed CNN architecture has been proposed for these two data channels. Total data has been augmented to avoid overfitting, and dynamic learning rate adjustment has been done. From the prepared data, 70–30% of the train test spilled has been done. With the 30% dataset, a validation accuracy of 98.98% has been achieved. In this kind of large dataset, this accuracy is much more reliable.

There are some limitations found in the proposed method compared with the literature. Some methods might work with low image dataset numbers, but as we use the simple CNN model, this method requires a good number of images for training. Also, the proposed method depends on the hand landmark extraction model. Other hand landmark model can cause different results. In raw image processing, it is possible to detect hand portions to reduce the image size, which may increase the recognition chance and reduce the model training time. Hence, we may try this method in future work. Currently, raw image processing takes a good amount of training time as we considered the whole image for training.

Data availability

The dataset used in this paper (ASL Fingerspelling Images (RGB & Depth)) is publicly available at Kaggle on this URL: https://www.kaggle.com/datasets/mrgeislinger/asl-rgb-depth-fingerspelling-spelling-it-out .

Anderson, R., Wiryana, F., Ariesta, M. C. & Kusuma, G. P. Sign language recognition application systems for deaf-mute people: A review based on input-process-output. Proced. Comput. Sci. 116 , 441–448. https://doi.org/10.1016/j.procs.2017.10.028 (2017).

Article Google Scholar

Mummadi, C. et al. Real-time and embedded detection of hand gestures with an IMU-based glove. Informatics 5 (2), 28. https://doi.org/10.3390/informatics5020028 (2018).

Hickeys Kinect for Windows - Windows apps. (2022). Accessed 01 January 2023. https://learn.microsoft.com/en-us/windows/apps/design/devices/kinect-for-windows

Rivera-Acosta, M., Ortega-Cisneros, S., Rivera, J. & Sandoval-Ibarra, F. American sign language alphabet recognition using a neuromorphic sensor and an artificial neural network. Sensors 17 (10), 2176. https://doi.org/10.3390/s17102176 (2017).

Article ADS PubMed PubMed Central Google Scholar

Ye, Y., Tian, Y., Huenerfauth, M., & Liu, J. Recognizing American Sign Language Gestures from Within Continuous Videos. In 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW) , 2145–214509 (IEEE, 2018). https://doi.org/10.1109/CVPRW.2018.00280 .

Ameen, S. & Vadera, S. A convolutional neural network to classify American Sign Language fingerspelling from depth and colour images. Expert Syst. 34 (3), e12197. https://doi.org/10.1111/exsy.12197 (2017).

Sykora, P., Kamencay, P. & Hudec, R. Comparison of SIFT and SURF methods for use on hand gesture recognition based on depth map. AASRI Proc. 9 , 19–24. https://doi.org/10.1016/j.aasri.2014.09.005 (2014).

Sahoo, A. K., Mishra, G. S. & Ravulakollu, K. K. Sign language recognition: State of the art. ARPN J. Eng. Appl. Sci. 9 (2), 116–134 (2014).

Google Scholar

Mitra, S. & Acharya, T. “Gesture recognition: A survey. IEEE Trans. Syst. Man Cybern. Part C 37 (3), 311–324. https://doi.org/10.1109/TSMCC.2007.893280 (2007).

Rautaray, S. S. & Agrawal, A. Vision based hand gesture recognition for human computer interaction: A survey. Artif. Intell. Rev. 43 (1), 1–54. https://doi.org/10.1007/s10462-012-9356-9 (2015).

Amir A. et al A low power, fully event-based gesture recognition system. In 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR) , 7388–7397 (IEEE, 2017). https://doi.org/10.1109/CVPR.2017.781 .

Lee, J. H. et al. Real-time gesture interface based on event-driven processing from stereo silicon retinas. IEEE Trans. Neural Netw. Learn Syst. 25 (12), 2250–2263. https://doi.org/10.1109/TNNLS.2014.2308551 (2014).

Article PubMed Google Scholar

Adithya, V. & Rajesh, R. A deep convolutional neural network approach for static hand gesture recognition. Proc. Comput. Sci. 171 , 2353–2361. https://doi.org/10.1016/j.procs.2020.04.255 (2020).

Das, A., Gawde, S., Suratwala, K., & Kalbande, D. Sign language recognition using deep learning on custom processed static gesture images. In 2018 International Conference on Smart City and Emerging Technology (ICSCET) , 1–6 (IEEE, 2018). https://doi.org/10.1109/ICSCET.2018.8537248 .

Pathan, R. K. et al. Breast cancer classification by using multi-headed convolutional neural network modeling. Healthcare 10 (12), 2367. https://doi.org/10.3390/healthcare10122367 (2022).

Article PubMed PubMed Central Google Scholar

Lecun, Y., Bottou, L., Bengio, Y. & Haffner, P. Gradient-based learning applied to document recognition. Proc. IEEE 86 (11), 2278–2324. https://doi.org/10.1109/5.726791 (1998).

Collobert, R., & Weston, J. A unified architecture for natural language processing. In Proceedings of the 25th international conference on Machine learning—ICML ’08 , 160–167 (ACM Press, 2008). https://doi.org/10.1145/1390156.1390177 .

Farabet, C., Couprie, C., Najman, L. & LeCun, Y. Learning hierarchical features for scene labeling. IEEE Trans. Pattern Anal. Mach. Intell. 35 (8), 1915–1929. https://doi.org/10.1109/TPAMI.2012.231 (2013).

Xie, B., He, X. & Li, Y. RGB-D static gesture recognition based on convolutional neural network. J. Eng. 2018 (16), 1515–1520. https://doi.org/10.1049/joe.2018.8327 (2018).

Jalal, M. A., Chen, R., Moore, R. K., & Mihaylova, L. American sign language posture understanding with deep neural networks. In 2018 21st International Conference on Information Fusion (FUSION) , 573–579 (IEEE, 2018).

Shanta, S. S., Anwar, S. T., & Kabir, M. R. Bangla Sign Language Detection Using SIFT and CNN. In 2018 9th International Conference on Computing, Communication and Networking Technologies (ICCCNT) , 1–6 (IEEE, 2018). https://doi.org/10.1109/ICCCNT.2018.8493915 .

Sharma, A., Mittal, A., Singh, S. & Awatramani, V. Hand gesture recognition using image processing and feature extraction techniques. Proc. Comput. Sci. 173 , 181–190. https://doi.org/10.1016/j.procs.2020.06.022 (2020).

Ren, S., He, K., Girshick, R., & Sun, J. Faster r-cnn: Towards real-time object detection with region proposal networks. Adv. Neural Inf. Process Syst. , 28 (2015).

Rastgoo, R., Kiani, K. & Escalera, S. Multi-modal deep hand sign language recognition in still images using restricted Boltzmann machine. Entropy 20 (11), 809. https://doi.org/10.3390/e20110809 (2018).

Jhuang, H., Serre, T., Wolf, L., & Poggio, T. A biologically inspired system for action recognition. In 2007 IEEE 11th International Conference on Computer Vision , 1–8. (IEEE, 2007) https://doi.org/10.1109/ICCV.2007.4408988 .

Ji, S., Xu, W., Yang, M. & Yu, K. 3D convolutional neural networks for human action recognition. IEEE Trans. Pattern Anal. Mach. Intell. 35 (1), 221–231. https://doi.org/10.1109/TPAMI.2012.59 (2013).

Huang, J., Zhou, W., Li, H., & Li, W. sign language recognition using 3D convolutional neural networks. In 2015 IEEE International Conference on Multimedia and Expo (ICME) , 1–6 (IEEE, 2015). https://doi.org/10.1109/ICME.2015.7177428 .

Digital worlds that feel human Ultraleap. Accessed 01 January 2023. Available: https://www.leapmotion.com/

Huang, F., & Huang, S. Interpreting american sign language with Kinect. Journal of Deaf Studies and Deaf Education, [Oxford University Press] , (2011).

Pugeault, N., & Bowden, R. Spelling it out: Real-time ASL fingerspelling recognition. In 2011 IEEE International Conference on Computer Vision Workshops (ICCV Workshops) , 1114–1119 (IEEE, 2011). https://doi.org/10.1109/ICCVW.2011.6130290 .

Rahim, M. A., Islam, M. R. & Shin, J. Non-touch sign word recognition based on dynamic hand gesture using hybrid segmentation and CNN feature fusion. Appl. Sci. 9 (18), 3790. https://doi.org/10.3390/app9183790 (2019).

“ASL Alphabet.” Accessed 01 Jan, 2023. https://www.kaggle.com/grassknoted/asl-alphabet

Download references

Funding was provided by the American University of the Middle East, Egaila, Kuwait.

Author information

Authors and affiliations.

Department of Computing and Information Systems, School of Engineering and Technology, Sunway University, 47500, Bandar Sunway, Selangor, Malaysia

Refat Khan Pathan

Department of Computer Science and Engineering, BGC Trust University Bangladesh, Chittagong, 4381, Bangladesh

Munmun Biswas

Department of Computer and Information Science, Graduate School of Engineering, Tokyo University of Agriculture and Technology, Koganei, Tokyo, 184-0012, Japan

Suraiya Yasmin

Centre for Applied Physics and Radiation Technologies, School of Engineering and Technology, Sunway University, 47500, Bandar Sunway, Selangor, Malaysia

Mayeen Uddin Khandaker

Faculty of Graduate Studies, Daffodil International University, Daffodil Smart City, Birulia, Savar, Dhaka, 1216, Bangladesh

College of Engineering and Technology, American University of the Middle East, Egaila, Kuwait

Mohammad Salman & Ahmed A. F. Youssef

You can also search for this author in PubMed Google Scholar

Contributions

R.K.P and M.B, Conceptualization; R.K.P. methodology; R.K.P. software and coding; M.B. and R.K.P. validation; R.K.P. and M.B. formal analysis; R.K.P., S.Y., and M.B. investigation; S.Y. and R.K.P. resources; R.K.P. and M.B. data curation; S.Y., R.K.P., and M.B. writing—original draft preparation; S.Y., R.K.P., M.B., M.U.K., M.S., A.A.F.Y. and M.S. writing—review and editing; R.K.P. and M.U.K. visualization; M.U.K. and M.B. supervision; M.B., M.S. and A.A.F.Y. project administration; M.S. and A.A.F.Y, funding acquisition.

Corresponding author

Correspondence to Mayeen Uddin Khandaker .

Ethics declarations

Competing interests.

The authors declare no competing interests.

Additional information

Publisher's note.

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/ .

Reprints and permissions

About this article

Cite this article.

Pathan, R.K., Biswas, M., Yasmin, S. et al. Sign language recognition using the fusion of image and hand landmarks through multi-headed convolutional neural network. Sci Rep 13 , 16975 (2023). https://doi.org/10.1038/s41598-023-43852-x

Download citation

Received : 04 March 2023

Accepted : 29 September 2023

Published : 09 October 2023

DOI : https://doi.org/10.1038/s41598-023-43852-x

Share this article

Anyone you share the following link with will be able to read this content:

Sorry, a shareable link is not currently available for this article.

Provided by the Springer Nature SharedIt content-sharing initiative

By submitting a comment you agree to abide by our Terms and Community Guidelines . If you find something abusive or that does not comply with our terms or guidelines please flag it as inappropriate.

Quick links

- Explore articles by subject

- Guide to authors

- Editorial policies

Sign up for the Nature Briefing: AI and Robotics newsletter — what matters in AI and robotics research, free to your inbox weekly.

An official website of the United States government

The .gov means it’s official. Federal government websites often end in .gov or .mil. Before sharing sensitive information, make sure you’re on a federal government site.

The site is secure. The https:// ensures that you are connecting to the official website and that any information you provide is encrypted and transmitted securely.

- Publications

- Account settings

Preview improvements coming to the PMC website in October 2024. Learn More or Try it out now .

- Advanced Search

- Journal List

- Sensors (Basel)

Artificial Intelligence Technologies for Sign Language

AI technologies can play an important role in breaking down the communication barriers of deaf or hearing-impaired people with other communities, contributing significantly to their social inclusion. Recent advances in both sensing technologies and AI algorithms have paved the way for the development of various applications aiming at fulfilling the needs of deaf and hearing-impaired communities. To this end, this survey aims to provide a comprehensive review of state-of-the-art methods in sign language capturing, recognition, translation and representation, pinpointing their advantages and limitations. In addition, the survey presents a number of applications, while it discusses the main challenges in the field of sign language technologies. Future research direction are also proposed in order to assist prospective researchers towards further advancing the field.

1. Introduction

Sign language (SL) is the main means of communication between hearing-impaired people and other communities and it is expressed through manual (i.e., body and hand motions) and non-manual (i.e., facial expressions) features. These features are combined together to form utterances that convey the meaning of words or sentences [ 1 ]. Being able to capture and understand the relation between utterances and words is crucial for the Deaf community in order to guide us to an era where the translation between utterances and words can be achieved automatically [ 2 ]. The research community has long identified the need for developing sign language technologies to facilitate the communication and social inclusion of hearing-impaired people. Although the development of such technologies can be really challenging due to the existence of numerous sign languages and the lack of large annotated datasets, the recent advances in AI and machine learning have played a significant role towards automating and enhancing such technologies.

Sign language technologies cover a wide spectrum, ranging from the capturing of signs to their realistic representation in order to facilitate the communication between hearing-impaired people, as well as the communication between hearing-impaired and speaking people. More specifically, sign language capturing involves the accurate extraction of body, hand and mouth expressions using appropriate sensing devices in marker-less or marker-based setups. The accuracy of sign language capturing technologies is currently limited by the resolution and discrimination ability of sensors and the fact that occlusions and fast hand movements pose significant challenges to the accurate capturing of signs. Sign language recognition (SLR) involves the development of powerful machine learning algorithms to robustly classify human articulations to isolated signs or continuous sentences. Current limitations in SLR lie in the lack of large annotated datasets that greatly affect the accuracy and generalization ability of SLR methods, as well as the difficulty in identifying sign boundaries in continuous SLR scenarios.

On the other hand, sign language translation (SLT) involves the translation between different sign languages, as well as the translation between sign and speaking languages. SLT methods employ sequence-based machine learning algorithms and aim to bridge the communication gap between people signing or speaking different languages. The difficulties in SLT lie in the lack of multilingual sign language datasets, as well as the inaccuracies of SLR methods, considering that the gloss recognition (performed by SLR methods) is the initial step of the SLT methods . Finally, sign language representation involves the accurate representation and reproduction of signs using realistic avatars or signed video approaches. Currently, avatar movements are deemed unnatural and hard to understand by the Deaf community due to inaccuracies in skeletal pose capturing and the lack of life-like features in the appearance of avatars.

Sign language technologies are connected in a way that affect each other as seen in Figure 1 . The accurate extraction of hand and body motions as well as facial expressions plays a crucial role to the success of the machine learning algorithms that are responsible for the robust recognition of signs. Moreover, the accurate sign language recognition significantly affects the performance of sign language translation and representation methods. The breakthroughs in sensorial devices and AI have paved the way for the development of sign language applications that can immensely facilitate hearing-impaired people in their everyday life.

Sign language technologies.

Previous literature reviews mainly concentrate on specific sign language technologies, such as video-based and sensor-based sign language recognition [ 3 , 4 , 5 , 6 , 7 ] and sign language translation [ 8 , 9 ]. Lately, with the development of sign language applications, there are also reviews that presented sign language systems to facilitate hearing-impaired people in teaching and learning, as well as in voice and text interpretation systems [ 10 , 11 ]. However, there is no systematic review that presents all sign language technologies and their relations with each other. This review aims to fill this gap by presenting the advances of AI in all sign language technologies, ranging from capturing and recognition to translation and representation and concludes by describing recent sign language applications that can considerably facilitate the communication among hearing-impaired and speaking people. The main purpose of this review is to demonstrate the importance of using AI technologies in sign language to facilitate deaf and hearing-impaired people in their communication with other communities. In addition, this review aims at familiarizing researchers with the state-of-the-art in all sign language technologies and propose future research directions that can facilitate the development of even more accurate approaches that can lead to mainstream products for the Deaf community. More specifically, the objectives of this review can be summarized as follows:

- A comprehensive overview of the use of AI technologies in various sign language tasks (i.e., capturing, recognition, translation and representation), along with their importance to their field, is provided.

- The advantages and limitations of modern sign language technologies and the relations between them are discussed and explored.

- Possible future directions in the development of AI technologies for sign language are suggested to facilitate prospective researchers in the field.

The rest of this survey is organized as follows. In Section 2 , the literature search guideline is presented. Sign language capturing sensors are described in Section 3 . In Section 4 , sign language recognition methods are categorized and discussed. Sign language representation approaches and applications are presented in Section 5 and Section 6 , respectively. Finally, conclusions and potential future research directions are highlighted in Section 7 .

2. Literature Search

A systematic literature search was performed by adopting the PRISMA guidelines [ 12 ]. The articles were extracted in June 2021 from three academic databases, namely Scopus ( https://www.scopus.com/home.uri ), (link, accessed on 28 May 2021), ProQuest ( https://www.proquest.com/ ), (link, accessed on 28 May 2021) and IeeeXplore ( https://ieeexplore.ieee.org/Xplore/home.jsp ), (link, accessed on 28 May 2021). The articles that were not peer-reviewed or written in English were discarded. Since this review deals with AI technologies for sign language, the search was based on the following condition:

TITLE-ABSTRACT-KEYWORDS ( sign AND language AND ( recognition OR application(*) OR avatar(*) OR representation(*) OR translation OR captur(*) OR generation OR production ) ) AND PUBLISH YEAR > 2018 AND ( LIMIT-TO ( DOCTYPE , "ar" ) OR LIMIT-TO ( DOCTYPE , "cp" ) OR LIMIT-TO ( DOCTYPE , "ch" ) ) AND ( LIMIT-TO ( LANGUAGE , "English" ) ) AND ( LIMIT-TO ( PUBSTAGE , "final" ) ) AND ( LIMIT-TO ( SUBJAREA , "COMP" ) OR LIMIT-TO ( SUBJAREA , "ENGI" ) )

The aforementioned search condition describes the existence of the above words (i.e., recognition, translation, etc.) in the title, abstract or keywords of the literature works. In this context, (*) allows for variations in the search terms (i.e., captur(*) allows the existence of words, such as capture, capturing, etc.). In addition, the search is performed for papers published after 2018 since the field is evolving with fast pace and older methods are rendered quickly obsolete. To this end, this review aims to present only the latest and best works related to sign language technologies. Finally, the papers included in this review have been published as journal articles, conference proceedings and book chapters (i.e., DOCTYPE) in the fields of computing and engineering (i.e., SUBJAREA).

The number of the records retrieved from the three databases is 2368. From this number, 331 duplicate records are removed, leading to 2037 unique records. After screening title, abstract and finally the full text with various criteria to discard irrelevant records, 106 records remain and are included in this review. The selection procedure is depicted in Figure 2 .

Flowchart of the systematic literature search process.

3. Sign Language Capturing

Sign language capturing involves the recording of sign gestures using appropriate sensor setups. The purpose is to capture discriminative information from the signs that will allow the study, recognition and 3D representation of signs at later stages. Moreover, sign language capturing enables the construction of large datasets that can be used to accurately train and evaluate machine learning sign language recognition and translation algorithms.

3.1. Capturing Sensors

The most common means of recording sign gestures is through visual sensors that are able to capture fine-grained information, such as facial expressions and body postures, that is crucial for understanding sign language. Cerna et al. in [ 13 ] employed a Kinect sensor [ 14 ] to simultaneously capture red-green-blue (RGB) image, depth and skeletal information towards the recording of a multimodal dataset with Brazilian sign language. Similarly, Kosmopoulos et al. in [ 15 ] captured realistic real-life scenarios with sign language using the Kinect sensor. The dataset contains isolated and continuous sign language recordings with RGB, depth and skeletal information, along with annotated hand and facial features. Contrary to the previous methods that use a single Kinect sensor, this work additionally employs a machine vision camera, along with a television screen, for sign demonstration. Sincan et al. in [ 16 ], captured isolated Turkish sign language glosses using Kinect sensors with a large variety of indoor and outdoor backgrounds, revealing the importance of capturing videos with various backgrounds. Adaloglou et al. in [ 17 ], created a large sign language dataset with RealSense D435 sensor that records both RGB and depth information. The dataset contain continuous and isolated sign videos and is appropriate for both isolated and continuous sign language recognition tasks.

Another sensor that has been employed for sign language capturing is Leap Motion, which has the ability to capture 3D positions of hand and fingers at the expense of having to operate close to the subject. Mittal et al. in [ 18 ], employed this type of sensor to record sign language gestures. Other setups with antennas and readers of radio-frequency identification (RFID) signals have also been adopted for sign language recognition. Meng et al. in [ 19 ], extracted phase characteristics of RFID signals to detect and recognize sign gestures. The training setup consists of an RFID reader, an RFID tag and a directional antenna. The recorded human should stand between the reader and the tag for a proper capturing. Moreover, the recognition system is signer-dependent.

On the other hand, wearable sensors have been adopted for capturing sign language gestures. Galea et al. in [ 20 ], used electromyography (EMG) to capture electrical activity that was produced during arm movement. The Thalmic MYO armband device was used for the recording of Irish sign language alphabet. Similarly, Zhang et al. [ 21 ] used a wearable device to capture EMG and inertial measurement unit (IMU) signals, while they used a convolutional neural network (CNN) [ 22 ] followed by a long short-term memory (LSTM) [ 23 ] architecture to recognize American sign language at both word and sentence levels. One disadvantage of the method is that its performance has not been evaluated under walking condition. Hou et al. in [ 24 ], proposed Sign-Speaker, which was deployed on a smartwatch to collect sign signals. Then, these signals were sent to a smartphone and were translated into spoken language in real-time. In this method, a very simple capturing setup is required, consisting of a smartwatch and a smartphone. However, their system recognizes a limited number of signs and it cannot generalize well to new users. Wang et al. in [ 25 ], employed a system with two armbands using both IMU and EMG sensors in order to capture fine-grained finger and hand positions and movements. How et al. in [ 26 ], used a low-cost dataglove with IMU sensors to capture sign gestures that were transmitted through Bluetooth to a smartphone device. Nevertheless, the employment of a single right-hand dataglove limited the number of signs that could be performed by this setup.

Each of the aforementioned sensor setups for sign language capturing has different characteristics, which makes it suitable for different applications. Kinect sensors provide high resolution RGB and depth information but their accuracy is restricted by the distance from the sensors. Leap Motion also requires a small distance between the sensor and the subject, but their low computational requirements enable its usage in real-time applications. Multi-camera setups are capable of providing highly accurate results at the expense of increased complexity and computational requirements. A myo armband that can detect EMG and inertial signals is also used in few works but the inertial signals may be distorted by body motions when people are walking. Smartwatches are really popular nowadays and they can also be used for sign language capturing but their output can be quite noisy due to unexpected body movements. Finally, datagloves can provide highly accurate sign language capturing results in real-time. However, the tuning of its components (i.e., flex sensor, accelerometer, gyroscope) may require a trial and error process that is impractical and time-consuming. In addition, signers tend to not prefer datagloves for sign language capturing as they are considered invasive.

3.2. Datasets

Datasets are crucial for the performance of methodologies regarding sign language recognition, translation and synthesis and as a result a lot of attention has been drawn towards the accurate capturing of signs and their meticulous annotation. The majority of the existing publicly available datasets are captured with visual sensors and are presented below.

3.2.1. Continuous Sign Language Recognition Datasets

Continuous sign language recognition (CSLR) datasets contain videos of sequences of signs instead of individual signs and are more suitable for developing real-life applications. Phoenix-2014 [ 27 ] is one of the most popular CSLR dataset with recordings of weather forecasts in German sign language. All videos were recorded with 9 signers at a frame rate of 25 frames per second. The dictionary has 1081 unique glosses and the dataset contains 5672 videos for training, 540 videos for validation and 629 videos for testing. The same authors created an updated version of Phoenix-2014, called Phoenix-2014-T [ 28 ], with spoken language translations, which makes it appropriate for both CSLR and sign language translation experiments. It contains 8257 videos from 9 different signers performing 1088 unique signs and 2887 unique words. Although all recordings are performed in a controlled environment, Phoenix-2014 and Phoenix-2014-T are both challenging datasets with large vocabularies and varying number of samples per sign with a few signs having a single sample. Similarly, BSL-1K [ 29 ] contains video recordings from British news broadcasts, along with automatically extracted annotations from provided subtitles. It is a large database with 273,000 samples from 40 signers that is also used for sign language segmentation. Another notable dataset is CSL [ 30 , 31 ] that contains Chinese words widely used in daily communication. The dataset has 100 sentences with signs that were performed from 50 signers. The recordings are performed in a lab with predefined conditions (i.e., background, lighting). The vocabulary size is 178 words that are performed multiple times, resulting in high recognition results achieved by SLR methods. GRSL [ 15 ] is another CSLR dataset of Greek sign language that is used in home care services, which contains multiple modalities, such as RGB, depth and skeletal joints. On the other hand, GSL [ 17 ] is a large Greek sign language dataset created to assist communication of Deaf people with public service employees. The dataset was created with a RealSense D435 sensor that records both RGB and depth information. Furthermore, it contains both continuous and isolated sign videos from 15 predefined scenarios. It is recorded on a laboratory environment, where each scenario is repeated five consecutive times.

3.2.2. Isolated Sign Language Recognition Datasets

Isolated sign language recognition (ISLR) datasets are important for identifying and learning discriminative features for sign language recognition. CSL-500 [ 31 , 32 ] is the isolated version of CSL but it contains 500 unique glosses performed from the same 50 signers. CSLR methods usually adopt this dataset for feature learning prior to finetuning on the CSL dataset. MS-ASL [ 33 ] is another widely employed ISLR dataset with 1000 unique American sign language glosses. It contains recordings collected from YouTube platform from 222 signers with a large variance in background settings, which makes this dataset suitable for training complex methods with strong representation capabilities. Similarly, WASL [ 34 ] is an ISLR dataset with 2000 unique American sign glosses performed by 119 signers. The videos have different background and illumination conditions, which makes it a challenging ISLR benchmark dataset. On the other hand, AUTSL is a Turkish sign language dataset captured under various indoor and outdoor backgrounds, while LSA64 [ 35 ] is an Argentinian sign language dataset that includes 3200 videos, in which 10 non-expert subjects execute 5 repetitions of 64 different types of signs. LSA64 is a small and relatively easy dataset, where SLR methods achieve outstanding recognition performance. Finally, IsoGD [ 36 ] is a gesture recognition dataset that consists of 47,933 RGB-D videos performed by 21 different individuals and contains 249 gesture labels. Although IsoGD is a gesture recognition dataset, its large size and challenging illumination and background conditions allows the training of highly accurate ISLR methods.

3.2.3. Discussion

A discussion about the aforementioned datasets can be made at this stage, while a detailed overview of the dataset characteristics is provided on Table 1 . It can be seen that over time datasets become larger in size (i.e., number of samples) with more signers involved in them, as well as contain high resolution videos captured under various and challenging illumination and background conditions. Moreover, new datasets usually include different modalities (i.e., RGB, depth and skeleton). Recording sign language videos using many signers is very important, since each person performs signs with different speed, body posture and face expression. Moreover, high resolution videos capture more clearly small but important details, such as finger movements and face expressions, which are crucial cues for sign language understanding. Datasets with videos captured under different conditions enable deep networks to extract highly discriminative features for sign language classification. As a result, methodologies trained in such datasets can obtain greatly enhanced representation and generalization capabilities and achieve high recognition performances. Furthermore, although RGB information is the predominant modality used for sign language recognition, additional modalities, such as skeleton and depth information, can provide complementary information to the RGB modality and significantly improve the performance of SLR methods.

Large-scale publicly available SLR datasets.

| Datasets | Characteristics | |||||||

|---|---|---|---|---|---|---|---|---|

| Language | Signers | Classes | Video Instances | Resolution | Type | Modalities | Year | |

| Phoenix-2014 [ ] | German | 9 | 1231 | 6841 | 210 × 260 | CSLR | RGB | 2014 |

| CSL [ , ] | Chinese | 50 | 178 | 25,000 | 1920 × 1080 | CSLR | RGB, depth | 2016 |

| Phoenix-2014-T [ ] | German | 9 | 1231 | 8257 | 210 × 260 | CSLR | RGB | 2018 |

| GRSL [ ] | Greek | 15 | 1500 | 4000 | varying | CSLR | RGB, depth, skeleton | 2020 |

| BSL-1K [ ] | British | 40 | 1064 | 273,000 | varying | CSLR | RGB | 2020 |

| GSL [ ] | Greek | 7 | 310 | 10,295 | 848 × 480 | CSLR | RGB, depth | 2021 |

| CSL-500 [ , ] | Chinese | 50 | 500 | 125,000 | 1920 × 1080 | ISLR | RGB, depth | 2016 |

| MS-ASL [ ] | American | 222 | 1000 | 25,513 | varying | ISLR | RGB | 2019 |

| WASL [ ] | American | 119 | 2000 | 21,013 | varying | ISLR | RGB | 2020 |

| AUTSL [ ] | Turkish | 43 | 226 | 38,336 | 512 × 512 | ISLR | RGB, depth | 2020 |

| KArSL [ ] | Arabic | 3 | 502 | 75,300 | varying | ISLR | RGB, depth, skeleton | 2021 |

4. Sign Language Recognition

Sign language recognition (SLR) is the task of recognizing sign language glosses from video streams. It is a very important research area since it can bridge the communication gap between hearing and Deaf people, facilitating the social inclusion of hearing-impaired people. Moreover, sign language recognition can be classified into isolated and continuous based on whether the video streams contain an isolated gloss or a gloss sequence that corresponds to a sentence.

4.1. Continuous Sign Language Recognition

Continuous Sign Language Recognition aims at classifying signed videos to entire sentences (i.e., ordered sequence of glosses). CSLR is a very challenging task as it requires the recognition of glosses from video sequences without any knowledge of the sign boundaries (i.e., lack of ground truth annotations regarding the start and end of glosses). Most works adopt 2D or 3D-CNNs for feature extraction followed by temporal convolutional networks or recurrent neural networks (RNNs) for sequential information modelling. To measure CSLR performance, word error rate (WER) [ 38 ] is commonly adopted. WER measures the number of operations (i.e., substitutions, deletions and insertions) required to transform the predicted sequence into the target sequence.

Cui et al. [ 39 ] adopted a 2D-CNN followed by temporal 1D convolutional layers for feature extraction. The extracted spatio-temporal features were fed to a bidirectional long short-term memory (BLSTM) network for modelling the context of the entire sequence. The feature extractor was extended with a classifier and trained in a fully-supervised setting on isolated glosses for video to gloss alignment, while the BLSTM was used for CSLR. This two-step optimization process was conducted iteratively with Connectionist Temporal Classification (CTC) [ 40 ] and Cross-Entropy losses, until the network converged. Besides, the recognition model fused RGB with optical flow modalities and achieved a WER of 22.8% on the Phoenix-2014 dataset. Similarly, Koishybay et al. in [ 41 ], adopted a residual 2D-CNN with cascaded 1D convolutional layers for feature extraction, while for CSLR experiments, BLSTM was utilized. Their method generated gloss-level alignments using the Levenshtein distance in order to fine-tune the feature extractor. However, the authors stated that during the early iterations the model predicted poor alignment proposals, which hinders the training process and requires several iterations to converge. Cheng et al. in [ 42 ], proposed a 2D fully convolutional network with a feature enhancement module that did not require iterative training. Instead, it provided extra supervision and assisted the CSLR network to learn better gloss alignments. Niu et al. in [ 43 ], proposed a 2D-CNN followed by a Transformer network for CSLR. They used three stochastic methods to drop frames of the input video, to randomly stop gradients of back-propagation and to model glosses using hidden states, respectively, which led to better CSLR performance. Nevertheless, the randomness ratio of these stochastic processes must be tuned carefully to achieve good recognition rates. Generally, CSLR methods based on 2D-CNNs achieve great recognition performance. More specifically, 2D-CNNs extract descriptive features from the frame sequences, while the sequence modelling mechanisms align efficiently the input video and the output predictions. However, they usually require complex training strategies, such as iterative optimization techniques, to achieve strong feature extraction capabilities.

On the other hand, some works chose to incorporate attention mechanisms for CSLR. Pan et al. in [ 44 ], used a key-frame sampling technique to extract the most descriptive frames of the video. Then, a vector representation was constructed from the skeletal data of the key-frames, which was fed to an attention-based BLSTM to model the temporal information. Huang et al. [ 45 ] proposed an adaptive encoder-decoder architecture to learn the temporal boundaries of the video. Furthermore, a hierarchical BLSTM with attention over sliding windows was used on the decoder to weigh the importance of the input frames. Li et al. in [ 46 ], used a pyramid structure of BLSTMs in order to find key actions of the video representations, which were produced from the 2D-CNN. Moreover, an attention-based LSTM was used to align the input and output sequences and the whole network was trained jointly with Cross-Entropy and CTC losses.

Recently, the self-attention mechanism has been introduced in a variety of models, such as the Transformer, and has also been adopted by CSLR methods. Slimane et al. in [ 47 ], proposed two data streams with cropped hand images and full images. The two modalities were passed through two 2D-CNNs to extract the spatial features. Then, the modalities were synchronized by a self-attention module to obtain better contextual information and generate efficient video representations for CSLR. Zhou et al. [ 48 ], adopted a fully-inception architecture with 2D and 1D convolutional layers along with a self-attention to further improve the feature extraction capabilities of the inception layers.

Reinforcement techniques have also been applied for CSLR, along with Transformer networks. Zhang et al. in [ 49 ], adopted a 3D-CNN followed by a Transformer network that was responsible for recognizing gloss sequences from input videos. Instead of training the model with cross-entropy loss, they used the REINFORCE algorithm [ 50 ] to directly optimize the model by using WER as the reward function of the agent (i.e., the feature extractor). Wei et al. in [ 51 ], used a semantic boundary detection algorithm with reinforcement learning to improve CSLR performance. A spatio-temporal feature extractor learned the video representations. Then, the detection algorithm used reinforcement learning to detect gloss timestamps from video sequences and refine the final video representations. The evaluation metric was used again as the reward function. The major limitation of this method is the need for a careful selection of the pooling size, which defines the action search space for the reinforcement learning agent.

Papastratis et al. [ 52 ] constructed a cross-modal approach in order to effectively model intra-gloss dependencies by leveraging information from text. This method extracted video features using a video encoder that consisted of a 2D-CNN followed by temporal convolutions and a BLSTM, while text representations were obtained from an LSTM. Finally, these embeddings were aligned in a joint latent space. The improved representations led to great CSLR performance, achieving WERs of 24.0% and 3.52% on Phoenix-2014 and GSL SI, respectively. Papastratis et al. in their latest work [ 53 ], employed a generative adversarial network to evaluate the predictions of the video encoder. In addition, contextual information was incorporated to improve recognition performance on sign language conversations.

Due to their efficient feature extraction capabilities, 3D-CNNs have also been adopted by many researchers for CSLR. Wei et al. in [ 54 ], used a 3D residual CNN along with a BLSTM, while they applied grammatical rules sign language. The text was split into isolated words and n -grams, which are modelled using two classifiers. The two classifiers aimed to recognize each word independently and based on the context in contrast to CTC, which models the whole sequence. Pu et al. in [ 55 ], employed a 3D-CNN with an LSTM decoder and a CTC decoder that were jointly aligned with a soft dynamic time warping (soft-DTW) [ 56 ] alignment constraint. The network was trained recursively with the proposed alignments from soft-DTW. The method achieved WERs of 6.1% and 32.7% on CSL Split 1 and CSL Split 2, respectively. Guo et al. in [ 57 ], developed a fully convolutional approach with a 3D-CNN followed by 1D temporal convolutional layers. The 1D CNN block had a hierarchical structure with small and large receptive fields to capture short- and long-term correlations in the video, while the entire architecture was trained with CTC loss. 3D-CNNs are computationally expensive methods that require pre-training on large-scale datasets and cannot be tuned directly for CSLR. To this end, sliding window techniques are adopted to create informative features. To tackle this problem, some works incorporated pseudo-labelling, which is an optimization process that adds predicted labels on the training set. Pei et al. in [ 58 ], trained a deep 3D-CNN with CTC and generate clip-level pseudo-labels from the alignment of CTC to obtain better feature representations. To improve the quality of pseudo-labels, Zhou et al. in [ 59 ], proposed a dynamic decoding method instead of greedy decoding to find better alignment paths and filter out the wrong pseudo-labels. Their method applied the I3D [ 60 ] network from the action recognition field along with temporal convolutions and bidirectional gated recurrent units (BGRU) [ 61 ]. Moreover, the proposed method achieved a WER of 34.5% on the Phoenix-2014 dataset. However, pseudo-labelling required many iterations, while initial labels affected the convergence of the optimization process.

In Table 2 , several methods are compared on the test set of the most commonly adopted datasets for continuous sign language recognition. From the experimental results it is shown that multi-modal methods achieve the lowest WERs. More specifically, STMC [ 62 ] has the best recognition rates on Phoenix-2014, CSL Split 1 and CSL Split 2 datasets using RGB, hands and skeleton modalities, while SLRGAN [ 53 ], employing the RGB and text modality, achieves superior performance on the GSL SI and GSL SD datasets.

Performance comparison of CSLR approaches categorized by dataset measured in WER (%). The best performance for each dataset appears in bold.

| Method | Input Modality | Dataset | Test Set (WER) |

|---|---|---|---|

| PL [ ] | RGB | Phoenix-2014 | 40.6 |

| RL [ ] | RGB | 38.3 | |

| Align-iOpt [ ] | RGB | 36.7 | |

| DenseTCN [ ] | RGB | 36.5 | |

| DPD [ ] | RGB | 34.5 | |

| CNN-1D-RNN [ ] | RGB | 34.4 | |

| Fully-Inception Networks [ ] | RGB | 31.3 | |

| SAN [ ] | RGB | 29.7 | |

| SFD [ ] | RGB | 25.3 | |

| CrossModal [ ] | RGB | 24.0 | |

| Fully-Conv-Net [ ] | RGB | 23.9 | |

| SLRGAN [ ] | RGB | 23.4 | |

| CNN-TEMP-RNN [ ] | RGB+Optical flow | 22.8 | |

| STMC [ ] | RGB+Hands+Skeleton | ||

| DenseTCN [ ] | RGB | CSL Split 1 | 14.3 |

| Key-action [ ] | RGB | 9.1 | |

| Align-iOpt [ ] | RGB | 6.1 | |

| WIC-NGC [ ] | RGB | 5.1 | |

| DPD [ ] | RGB | 4.7 | |

| Fully-Conv-Net [ ] | RGB | 3.0 | |

| CrossModal [ ] | RGB | 2.4 | |

| SLRGAN [ ] | RGB | ||

| STMC [ ] | RGB+Hands+Skeleton | ||

| Key-action [ ] | RGB | CSL Split 2 | 49.1 |

| DenseTCN [ ] | RGB | 44.7 | |

| Align-iOpt [ ] | RGB | 32.7 | |

| STMC [ ] | RGB+Hands+Skeleton | ||

| CrossModal [ ] | RGB | GSL SI | 3.52 |

| SLRGAN [ ] | RGB | ||

| CrossModal [ ] | RGB | GSL SD | 41.98 |

| SLRGAN [ ] | RGB |

4.2. Isolated Sign Language Recognition

Isolated sign language recognition refers to the task of accurately detecting single sign gestures from videos and thus it is usually tackled similar to action and gesture recognition, as well as other types of video processing and classification tasks with the extraction and learning of highly discriminative features [ 63 , 64 , 65 ]. In the literature, a common approach to the task of isolated sign language recognition is the extraction of hand and mouth regions from the video sequences in an attempt to remove noisy backgrounds that can inhibit classification performance. Liao et al. in [ 66 ], proposed a video-based SLR method that was based on hand region extraction and classification using 3D ResNet networks and BLSTM layers. Similarly, Aly et al. in [ 67 ], developed an ISLR method that segmented hand regions from images using DeepLabv3+ algorithm [ 68 ], extracted features from these regions using a Convolutional Self-Organizing Map and classified the features using a deep recurrent neural network consisting of 3 BLSTM layers. Gökçe et al. in [ 69 ], proposed 3D-CNN networks for the processing of hand, upper body and face image regions and the fusion of these streams in the score level to accurately classify isolated signs. The authors stated that their method performs comparatively worse on mono-morphemic signs performed with a single hand, rather than on temporally more complex signs with two-handed gestures. On the other hand, Zhang et al. in [ 70 ], proposed the Multiple extraction and Multiple prediction (MEMP) network that consists of alternating 3D-CNN networks and Convolutional LSTM layers that extracted spatio-temporal features from video sequences multiple times, enabling the network to achieve 99.06% and 78.85% accuracy in the LSA64 and IsoGD datasets, respectively. Li et al. in [ 71 ], proposed a SLR method that was based on the transferring of cross-domain knowledge of news signs to a base model and improve its performance using domain-invariant features.

To further improve the accuracy and robustness of SLR methods, several researchers proposed the extraction of other types of features, such as optical flow and skeletal joints from visual cues. These multi-stream networks are more computationally expensive than their single stream counterparts, but they have the advantage of overcoming confusing cases regularly met when a single type of features is employed. Sarhan et al. in [ 72 ], proposed a two-stream network architecture that received as input RGB and optical flow data, extracted features using I3D networks and performed late fusion at the score level for accurate sign language recognition. Rastgoo et al. in [ 73 ], proposed a multi-stream SLR method that utilized as input hand image regions, hand heatmaps and 2D projections of hand skeletal joints to images. These input data were processed using 3D-CNN networks, concatenated and fed to LSTM layers for sign recognition. Konstantinidis et al. in [ 74 ], proposed a SLR methodology that was based on the processing and late fusion of body and hand skeletal features using LSTM layers. Apart from the raw joint coordinates, the authors also utilized joint-line distances, which led to a significant improvement in the performance of the method, reaching 98.09% accuracy in the LSA64 dataset. In a later work [ 75 ], the same authors introduced additional streams that processed RGB video sequences and optical flow data, enhancing even more the performance of their method, ultimately achieving 99.84% accuracy in the LSA64 dataset. Similarly, Papadimitriou et al. in [ 76 ], proposed a multi-stream SLR method that processes hand and mouth regions, as well as optical flow and skeletal features for the accurate classification of signs. These features were concatenated and fed to a temporal deformable convolutional attention-based encoder-decoder that predicts the sign class. Gündüz et al. in [ 77 ], employed a multi-stream SLR approach that received as input RGB video sequences, optical flow sequences and body and hand skeletal features and performed a late fusion to accurately classify Turkish signs. Bilge et al. in [ 78 ], proposed a SLR method that can generalize well on unseen signs. To achieve this, the authors employed two 3D-CNN networks followed by BLSTM layers for the extraction of short-term and long-term feature representations from body and hand video sequences. In addition, the authors employed a BERT model [ 79 ] for the extraction of textual sign representations from text descriptions of how the signs were performed. Finally, they used a bi-linear compatibility function to associate video and text representations.

In an effort to derive more discriminative features, Rastgoo et al. in [ 63 ], proposed a multi-stream SLR method that gets as input hand regions, 3D hand pose features and Extra Spatial Hand Relation features (i.e., orientation and slope of hands). These features were concatenated and fed to an LSTM layer to derive the sign class. In this way, the authors managed to achieve a really high accuracy of 86.32% in the challenging IsoGD dataset. Kumar et al. in [ 64 ], proposed Spatial 3D Relational Features for sign language recognition. These features were computed from the area and perimeter of polygons formed by quadruples of skeletal joints. Then, the class of a test sign was predicted by comparing the sign with the training set using global alignment kernels. In another work [ 80 ], Kumar et al. introduced two novel features for accurate sign language recognition that were named colour-coded topographical descriptors. These descriptors were formed as images from the computation of joint distances and angles. Finally, these descriptors were processed by 2D CNNs and merged to derive the class of the sign.