Table of Contents

- Types of Statistical Analysis

- Importance of Statistical Analysis

- Benefits of Statistical Analysis

- Statistical Analysis Process

- Statistical Analysis Methods

- Statistical Analysis Software

- Statistical Analysis Examples

- Career in Statistical Analysis

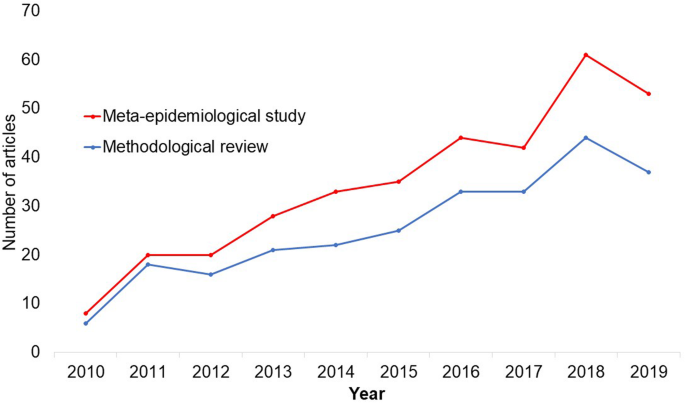

Statistical analysis is the process of collecting and analyzing data in order to discern patterns and trends. By relying on numerical analysis, it reduces bias in how data is evaluated. It is useful for interpreting research results, developing statistical models, and planning surveys and studies.

In AI and ML, statistical analysis is a scientific tool for collecting and analyzing large amounts of data, identifying common patterns and trends, and converting them into meaningful information. In simple words, it is a data analysis tool that helps draw meaningful conclusions from raw and unstructured data.

These conclusions support decision-making and help businesses predict future outcomes on the basis of past trends. Statistical analysis can be defined as the science of collecting and analyzing data to identify trends and patterns and then presenting them. It involves working with numbers, and businesses and other institutions use it to derive meaningful information from data.

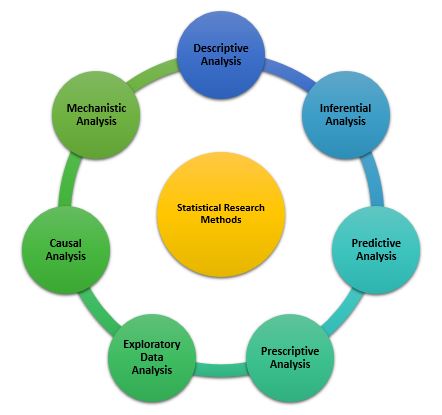

Types of Statistical Analysis

The 6 types of statistical analysis are given below:

Descriptive Analysis

Descriptive statistical analysis involves collecting, interpreting, analyzing, and summarizing data to present them in the form of charts, graphs, and tables. Rather than drawing conclusions, it simply makes the complex data easy to read and understand.

Inferential Analysis

Inferential statistical analysis focuses on drawing meaningful conclusions from the data analyzed. It studies the relationship between different variables or uses a sample to make predictions about the whole population.

Predictive Analysis

Predictive statistical analysis examines past trends in the data and uses them to predict future events. It draws on machine learning algorithms, data mining, data modelling, and artificial intelligence.

Prescriptive Analysis

Prescriptive analysis examines the data and prescribes the best course of action based on the results. It is a type of statistical analysis that helps you make an informed decision.

Exploratory Data Analysis

Exploratory analysis is similar to inferential analysis, except that it explores previously unknown associations in the data. It looks for potential relationships within the data.

Causal Analysis

Causal statistical analysis focuses on determining the cause-and-effect relationship between different variables within the raw data. In simple words, it determines why something happens and how it affects other variables. Businesses can use this methodology to determine the reason for a failure.

Importance of Statistical Analysis

Statistical analysis eliminates unnecessary information and catalogs important data in an uncomplicated manner, which makes the monumental work of organizing inputs manageable. Once the data has been collected, statistical analysis can be used for a variety of purposes. Some of them are listed below:

- Statistical analysis helps summarize enormous amounts of data into easily digestible chunks.

- Statistical analysis helps in the effective design of laboratory, field, and survey investigations.

- Statistical analysis supports solid and efficient planning in any subject of study.

- Statistical analysis helps establish broad generalizations and forecast how much of something will occur under particular conditions.

- Statistical methods, which are effective tools for interpreting numerical data, are applied in practically every field of study. Statistical approaches have been developed for, and are increasingly applied in, the physical and biological sciences, such as genetics.

- Statistical approaches are used in the work of businesspeople, manufacturers, and researchers. Statistics departments can be found in banks, insurance companies, and government agencies.

- A modern administrator, whether in the public or private sector, relies on statistical data to make sound decisions.

- Politicians can use statistics to support and validate their claims while also explaining the issues they address.


Benefits of Statistical Analysis

Statistical analysis has many benefits for both individuals and organizations. Some of the reasons to consider investing in it are given below:

- It can help you determine the monthly, quarterly, and yearly figures for sales, profits, and costs, making it easier to make decisions.

- It can help you make informed decisions.

- It can help you identify the problem or cause of a failure and make corrections. For example, it can identify the reason for an increase in total costs and help you cut wasteful expenses.

- It can help you conduct market analysis and build an effective marketing and sales strategy.

- It helps improve the efficiency of different processes.

Statistical Analysis Process

Follow these 5 steps to conduct a statistical analysis:

- Step 1: Identify and describe the nature of the data that you are going to analyze.

- Step 2: Establish the relation between the data analyzed and the population from which the sample was drawn.

- Step 3: Create a model that clearly presents and summarizes the relationship between the population and the data.

- Step 4: Test whether the model is valid.

- Step 5: Use predictive analysis to predict future trends and events that are likely to happen.

Statistical Analysis Methods

Although there are various methods for performing data analysis, the 5 most popular methods of statistical analysis are given below:

Mean

The mean, or average, is one of the most popular methods of statistical analysis. It indicates the overall trend of the data and is very simple to calculate: sum the numbers in the data set and divide the total by the number of data points. Despite its ease of calculation, it is not advisable to rely on the mean as the only statistical indicator, because a few extreme values can pull it away from the typical value and lead to inaccurate decision making.

Standard Deviation

Standard deviation is another very widely used statistical tool or method. It measures how far the data points typically deviate from the mean of the data set, that is, how widely the data is spread around the mean. A small standard deviation means the values cluster near the mean, while a large one means they are widely dispersed. You can use it to judge how far research outcomes can be generalized.

Regression

Regression is a statistical tool that models the relationship between a dependent variable and one or more independent variables. On its own it shows association rather than proof of cause and effect. It is generally used to predict future trends and events.
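To make the idea concrete, here is a minimal sketch of simple linear regression fitted by ordinary least squares in plain Python. The function name `fit_line` and the advertising data are hypothetical and only serve as an illustration; real projects would normally use a statistics package.

```python
def fit_line(x, y):
    """Ordinary least squares fit of y = intercept + slope * x."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    # slope = sum of cross-deviations / sum of squared deviations of x
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Hypothetical data: advertising spend (independent) and units sold (dependent).
ad_spend = [1, 2, 3, 4, 5]
units_sold = [3, 5, 7, 9, 11]  # constructed so that y = 1 + 2x exactly

slope, intercept = fit_line(ad_spend, units_sold)

# Use the fitted line to predict the dependent variable for a new x value.
predicted_units = intercept + slope * 6
print(slope, intercept, predicted_units)
```

Because the toy data lie exactly on a line, the fit recovers a slope of 2 and an intercept of 1. With real data the points scatter around the line, and the fit describes the average relationship only.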

Hypothesis Testing

Hypothesis testing checks whether a conclusion or argument is supported by a data set. The hypothesis is an assumption made at the beginning of the research, and the analysis results either support it or lead you to reject it.

Sample Size Determination

Sample size determination, together with data sampling, is used to draw from the entire population a sample that is representative of it. This method is used when the population is too large to study in full. You can choose among various sampling techniques such as random sampling, convenience sampling, and snowball sampling. Of these three, only random sampling is designed to give every member of the population an equal chance of selection.
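As an illustration of the random sampling technique mentioned above, the following sketch draws a simple random sample without replacement using Python's standard `random` module. The population of customer IDs, the sample size of 50, and the seed are all assumptions made for the example.

```python
import random

# Hypothetical population: 1,000 customer IDs.
population = list(range(1, 1001))

# A seeded generator makes the draw repeatable for this example.
rng = random.Random(42)

# Simple random sampling without replacement: every member has the
# same chance of being selected, and no member is picked twice.
sample = rng.sample(population, k=50)

population_mean = sum(population) / len(population)
sample_mean = sum(sample) / len(sample)
print(population_mean, sample_mean)
```

The sample mean will usually be close to, but not equal to, the population mean. That gap is the sampling error that inferential methods try to quantify.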

Statistical Analysis Software

Not everyone can perform very complex statistical calculations accurately, which makes manual statistical analysis a time-consuming and costly process. Statistical software has therefore become a very important tool for companies to perform their data analysis. It automates complex calculations, identifies trends and patterns, and creates charts, graphs, and tables accurately within minutes, and some tools also use Artificial Intelligence and Machine Learning to do so.

Statistical Analysis Examples

The standard deviation calculation below shows statistical analysis at work.

The sizes of 5 pizza bases in cm are as follows: 9, 2, 5, 4 and 12.

Calculation of mean = (9+2+5+4+12)/5 = 32/5 = 6.4

Calculation of mean of squared deviations from the mean = (6.76+19.36+1.96+5.76+31.36)/5 = 65.2/5 = 13.04

Population variance = 13.04 (dividing by n − 1 = 4 instead of n = 5 would give the sample variance, 65.2/4 = 16.3)

Standard deviation = √13.04 ≈ 3.611
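The same arithmetic can be checked with Python's standard `statistics` module, as a quick sketch. The variable names are illustrative. Note that `pvariance` and `pstdev` divide by n, as the calculation above does, while `variance` and `stdev` divide by n − 1 and are the usual choice when the data are a sample from a larger population.

```python
import statistics

# The five measurements used in the worked example above.
sizes = [9, 2, 5, 4, 12]

mean_size = statistics.mean(sizes)

# Divide by n: treats the five values as the whole population.
population_variance = statistics.pvariance(sizes)
population_sd = statistics.pstdev(sizes)

# Divide by n - 1: treats the five values as a sample.
sample_variance = statistics.variance(sizes)
sample_sd = statistics.stdev(sizes)

print(mean_size)                                        # 6.4
print(population_variance, round(population_sd, 3))     # 13.04 3.611
print(sample_variance, round(sample_sd, 3))             # 16.3 4.037
```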

Career in Statistical Analysis

A Statistical Analyst's career path is determined by the industry in which they work. Anyone interested in becoming a Data Analyst may qualify for entry-level Data Analyst positions straight out of school or a certificate program, often with a Bachelor's degree in statistics, computer science, or mathematics. Some people move into data analysis from a related field such as business, economics, or even the social sciences, usually by updating their skills mid-career with a statistical analytics course.

Working as a Statistical Analyst is also a great way to get started in the normally more complex area of data science. A Data Scientist is generally a more senior role than a Data Analyst since it is more strategic in nature and requires a more highly developed set of technical abilities, such as knowledge of multiple statistical tools, programming languages, and predictive analytics models.

Aspiring Data Scientists and Statistical Analysts generally begin their careers by learning a language such as R or SQL. Following that, they learn how to create databases, do basic analysis, and make visuals using applications such as Tableau. Not every Statistical Analyst will need to know how to do all of these things, but if you want to advance in your profession, you should be able to do them all.

Based on your industry and the sort of work you do, you may opt to study Python or R, become an expert at data cleaning, or focus on developing complicated statistical models.

You could also learn a little bit of everything, which might help you take on a leadership role and advance to the position of Senior Data Analyst. A Senior Statistical Analyst with vast and deep knowledge might take on a leadership role leading a team of other Statistical Analysts. Statistical Analysts with extra skill training may be able to advance to Data Scientists or other more senior data analytics positions.


We hope this article helped you understand the importance of statistical analysis in every sphere of life. Artificial Intelligence (AI) can help you perform statistical analysis and data analysis effectively and efficiently.


What Is Statistical Analysis?

Statistical analysis is a technique we use to find patterns in data and make inferences about those patterns to describe variability in the results of a data set or an experiment.

In its simplest form, statistical analysis answers questions about:

- Quantification — how big/small/tall/wide is it?

- Variability — growth, increase, decline

- The confidence level of these variabilities

What Are the 2 Types of Statistical Analysis?

- Descriptive Statistics: Descriptive statistical analysis describes the quality of the data by summarizing large data sets into single measures.

- Inferential Statistics: Inferential statistical analysis allows you to draw conclusions from your sample data set and make predictions about a population using statistical tests.

What’s the Purpose of Statistical Analysis?

Using statistical analysis, you can determine trends in the data by calculating your data set’s mean or median. You can also measure how far the data points vary from the mean by calculating the standard deviation. Furthermore, to test the validity of your statistical analysis conclusions, you can use hypothesis testing and report a p-value: the probability of seeing variability at least as extreme as what you observed if chance alone were at work.


Statistical Analysis Methods

There are two major types of statistical data analysis: descriptive and inferential.

Descriptive Statistical Analysis

Descriptive statistical analysis describes the quality of the data by summarizing large data sets into single measures.

Within the descriptive analysis branch, there are two main types: measures of central tendency (i.e. mean, median and mode) and measures of dispersion or variation (i.e. variance, standard deviation and range).

For example, you can calculate the average exam results in a class using central tendency or, in particular, the mean. In that case, you’d sum all student results and divide by the number of tests. You can also calculate the data set’s spread by calculating the variance. To calculate the variance, subtract each exam result in the data set from the mean, square the answer, add everything together and divide by the number of tests.

Inferential Statistics

On the other hand, inferential statistical analysis allows you to draw conclusions from your sample data set and make predictions about a population using statistical tests.

There are two main types of inferential statistical analysis: hypothesis testing and regression analysis. We use hypothesis testing to test and validate assumptions in order to draw conclusions about a population from the sample data. Popular tests include the Z-test, F-test and ANOVA test, and confidence intervals are often reported alongside them. On the other hand, regression analysis primarily estimates the relationship between a dependent variable and one or more independent variables. There are numerous types of regression analysis but the most popular ones include linear and logistic regression.
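As a rough illustration of hypothesis testing, the sketch below runs a two-sided one-sample Z-test with Python's standard library. It assumes the population standard deviation is known, which is what makes a Z-test appropriate. The function name, the exam scores, and the hypothesized mean of 50 are hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def one_sample_z_test(sample, pop_mean, pop_sd):
    """Two-sided Z-test of H0: the population mean equals pop_mean.

    Assumes pop_sd (the population standard deviation) is known.
    """
    n = len(sample)
    sample_mean = sum(sample) / n
    # How many standard errors the sample mean lies from pop_mean.
    z = (sample_mean - pop_mean) / (pop_sd / sqrt(n))
    # Probability of a result at least this extreme if H0 is true.
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical exam scores; H0 says the true mean is 50 with known sd 5.
scores = [52, 55, 49, 58, 53, 56, 51, 54, 57, 55]
z, p_value = one_sample_z_test(scores, pop_mean=50, pop_sd=5)
print(round(z, 2), round(p_value, 3))
```

With these made-up numbers the p-value falls below the conventional 0.05 threshold, so the null hypothesis would be rejected at that level. When the population standard deviation is unknown, a t-test is the usual alternative.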

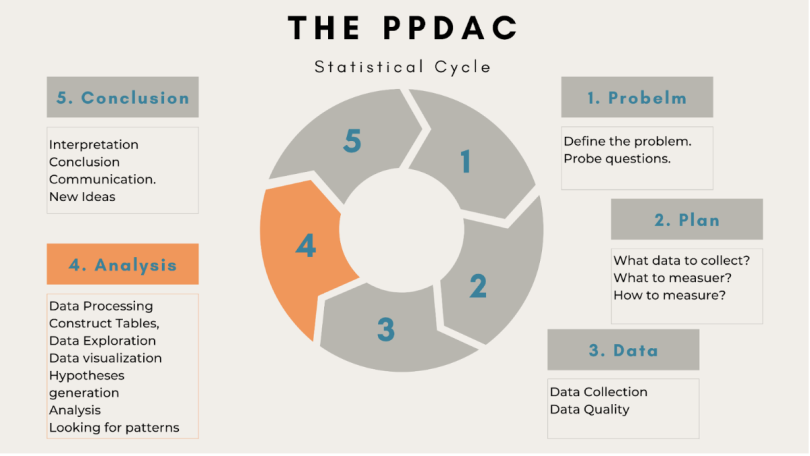

Statistical Analysis Steps

In the era of big data and data science, there is a rising demand for a more problem-driven approach. As a result, we must approach statistical analysis holistically. We may divide the entire process into five different and significant stages by using the well-known PPDAC model of statistics: Problem, Plan, Data, Analysis and Conclusion.

1. Problem

In the first stage, you define the problem you want to tackle and explore questions about the problem.

2. Plan

Next is the planning phase. You can check whether data is available or if you need to collect data for your problem. You also determine what to measure and how to measure it.

3. Data

The third stage involves data collection, understanding the data and checking its quality.

4. Analysis

Statistical data analysis is the fourth stage. Here you process and explore the data with the help of tables, graphs and other data visualizations. You also develop and scrutinize your hypothesis in this stage of analysis.

5. Conclusion

The final step involves interpretations and conclusions from your analysis. It also covers generating new ideas for the next iteration. Thus, statistical analysis is not a one-time event but an iterative process.

Statistical Analysis Uses

Statistical analysis is useful for research and decision making because it allows us to understand the world around us and draw conclusions by testing our assumptions. Statistical analysis is important for various applications, including:

- Statistical quality control and analysis in product development

- Clinical trials

- Customer satisfaction surveys and customer experience research

- Marketing operations management

- Process improvement and optimization

- Training needs


Benefits of Statistical Analysis

Here are some of the reasons why statistical analysis is widespread in many applications and why it’s necessary:

Understand Data

Statistical analysis gives you a better understanding of the data and what they mean. These types of analyses provide information that would otherwise be difficult to obtain by merely looking at the numbers without considering their relationship.

Find Causal Relationships

Statistical analysis can help you investigate causation or establish the precise meaning of an experiment, like when you’re looking for a relationship between two variables.

Make Data-Informed Decisions

Businesses are constantly looking to find ways to improve their services and products. Statistical analysis allows you to make data-informed decisions about your business or future actions by helping you identify trends in your data, whether positive or negative.

Determine Probability

Statistical analysis is an approach to understanding how the probability of certain events affects the outcome of an experiment. It helps scientists and engineers decide how much confidence they can have in the results of their research, how to interpret their data and what questions they can feasibly answer.


What Are the Risks of Statistical Analysis?

Statistical analysis can be valuable and effective, but it’s an imperfect approach. Even if the analyst or researcher performs a thorough statistical analysis, there may still be known or unknown problems that can affect the results. Therefore, statistical analysis is not a one-size-fits-all process. If you want to get good results, you need to know what you’re doing. It can take a lot of time to figure out which type of statistical analysis will work best for your situation.

Thus, you should remember that conclusions drawn from statistical analysis don’t guarantee correct results. This can be dangerous when making business decisions. In marketing, for example, we may come to the wrong conclusion about a product. The conclusions we draw from statistical data analysis are approximations, because testing for all factors affecting an observation is impossible.


What is Statistical Analysis? Types, Methods, Software, Examples

Appinio Research · 29.02.2024 · 31min read

Ever wondered how we make sense of vast amounts of data to make informed decisions? Statistical analysis is the answer. In our data-driven world, statistical analysis serves as a powerful tool to uncover patterns, trends, and relationships hidden within data. From predicting sales trends to assessing the effectiveness of new treatments, statistical analysis empowers us to derive meaningful insights and drive evidence-based decision-making across various fields and industries. In this guide, we'll explore the fundamentals of statistical analysis, popular methods, software tools, practical examples, and best practices to help you harness the power of statistics effectively. Whether you're a novice or an experienced analyst, this guide will equip you with the knowledge and skills to navigate the world of statistical analysis with confidence.

What is Statistical Analysis?

Statistical analysis is a methodical process of collecting, analyzing, interpreting, and presenting data to uncover patterns, trends, and relationships. It involves applying statistical techniques and methodologies to make sense of complex data sets and draw meaningful conclusions.

Importance of Statistical Analysis

Statistical analysis plays a crucial role in various fields and industries due to its numerous benefits and applications:

- Informed Decision Making: Statistical analysis provides valuable insights that inform decision-making processes in business, healthcare, government, and academia. By analyzing data, organizations can identify trends, assess risks, and optimize strategies for better outcomes.

- Evidence-Based Research: Statistical analysis is fundamental to scientific research, enabling researchers to test hypotheses, draw conclusions, and validate theories using empirical evidence. It helps researchers quantify relationships, assess the significance of findings, and advance knowledge in their respective fields.

- Quality Improvement: In manufacturing and quality management, statistical analysis helps identify defects, improve processes, and enhance product quality. Techniques such as Six Sigma and Statistical Process Control (SPC) are used to monitor performance, reduce variation, and achieve quality objectives.

- Risk Assessment: In finance, insurance, and investment, statistical analysis is used for risk assessment and portfolio management. By analyzing historical data and market trends, analysts can quantify risks, forecast outcomes, and make informed decisions to mitigate financial risks.

- Predictive Modeling: Statistical analysis enables predictive modeling and forecasting in various domains, including sales forecasting, demand planning, and weather prediction. By analyzing historical data patterns, predictive models can anticipate future trends and outcomes with reasonable accuracy.

- Healthcare Decision Support: In healthcare, statistical analysis is integral to clinical research, epidemiology, and healthcare management. It helps healthcare professionals assess treatment effectiveness, analyze patient outcomes, and optimize resource allocation for improved patient care.

Statistical Analysis Applications

Statistical analysis finds applications across diverse domains and disciplines, including:

- Business and Economics: Market research, financial analysis, econometrics, and business intelligence.

- Healthcare and Medicine: Clinical trials, epidemiological studies, healthcare outcomes research, and disease surveillance.

- Social Sciences: Survey research, demographic analysis, psychology experiments, and public opinion polls.

- Engineering: Reliability analysis, quality control, process optimization, and product design.

- Environmental Science: Environmental monitoring, climate modeling, and ecological research.

- Education: Educational research, assessment, program evaluation, and learning analytics.

- Government and Public Policy: Policy analysis, program evaluation, census data analysis, and public administration.

- Technology and Data Science: Machine learning, artificial intelligence, data mining, and predictive analytics.

These applications demonstrate the versatility and significance of statistical analysis in addressing complex problems and informing decision-making across various sectors and disciplines.

Fundamentals of Statistics

Understanding the fundamentals of statistics is crucial for conducting meaningful analyses. Let's delve into some essential concepts that form the foundation of statistical analysis.

Basic Concepts

Statistics is the science of collecting, organizing, analyzing, and interpreting data to make informed decisions or conclusions. To embark on your statistical journey, familiarize yourself with these fundamental concepts:

- Population vs. Sample: A population comprises all the individuals or objects of interest in a study, while a sample is a subset of the population selected for analysis. Understanding the distinction between these two entities is vital, as statistical analyses often rely on samples to draw conclusions about populations.

- Independent Variables: Variables that are manipulated or controlled in an experiment.

- Dependent Variables: Variables that are observed or measured in response to changes in independent variables.

- Parameters vs. Statistics: Parameters are numerical measures that describe a population, whereas statistics are numerical measures that describe a sample. For instance, the population mean is denoted by μ (mu), while the sample mean is denoted by x̄ (x-bar).

Descriptive Statistics

Descriptive statistics involve methods for summarizing and describing the features of a dataset. These statistics provide insights into the central tendency, variability, and distribution of the data. Standard measures of descriptive statistics include:

- Mean: The arithmetic average of a set of values, calculated by summing all values and dividing by the number of observations.

- Median: The middle value in a sorted list of observations.

- Mode: The value that appears most frequently in a dataset.

- Range: The difference between the maximum and minimum values in a dataset.

- Variance: The average of the squared differences from the mean.

- Standard Deviation: The square root of the variance, providing a measure of the typical distance of data points from the mean.

- Graphical Techniques: Graphical representations, including histograms, box plots, and scatter plots, offer visual insights into the distribution and relationships within a dataset. These visualizations aid in identifying patterns, outliers, and trends.
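The numerical measures in this list can be computed in a few lines. The sketch below uses Python's standard `statistics` module on a hypothetical set of exam scores; the data and the dictionary layout are assumptions for illustration.

```python
import statistics

# Hypothetical exam scores for a small class.
exam_scores = [72, 85, 90, 85, 68, 77, 95]

summary = {
    "mean": statistics.mean(exam_scores),
    "median": statistics.median(exam_scores),       # middle of the sorted values
    "mode": statistics.mode(exam_scores),           # most frequent value
    "range": max(exam_scores) - min(exam_scores),
    # pvariance/pstdev average the squared differences over all n values,
    # matching the definition of variance given in the list above.
    "variance": statistics.pvariance(exam_scores),
    "std_dev": statistics.pstdev(exam_scores),
}

for name, value in summary.items():
    print(f"{name}: {value:.2f}")
```

Here the median and mode are both 85 while the mean is lower, because the two low scores pull the average down. Comparing these measures side by side is a quick way to spot skew in a dataset.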

Inferential Statistics

Inferential statistics enable researchers to draw conclusions or make predictions about populations based on sample data. These methods allow for generalizations beyond the observed data. Fundamental techniques in inferential statistics include:

- Hypothesis Testing: Hypothesis testing uses sample data to weigh two competing statements about a population.

- Null Hypothesis (H0): The hypothesis that there is no significant difference or relationship.

- Alternative Hypothesis (H1): The hypothesis that there is a significant difference or relationship.

- Confidence Intervals: Confidence intervals provide a range of plausible values for a population parameter. They offer insights into the precision of sample estimates and the uncertainty associated with those estimates.

- Regression Analysis: Regression analysis examines the relationship between one or more independent variables and a dependent variable. It allows for the prediction of the dependent variable based on the values of the independent variables.

- Sampling Methods: Sampling methods, such as simple random sampling, stratified sampling, and cluster sampling, are employed to ensure that sample data are representative of the population of interest. These methods help mitigate biases and improve the generalizability of results.
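To show what a confidence interval looks like in practice, here is a small sketch that builds an approximate 95% interval for a population mean. It relies on the normal approximation, which is an assumption that is reasonable for larger samples; for small samples a t-based interval would be wider. The function name and the waiting-time data are hypothetical.

```python
from math import sqrt
from statistics import NormalDist, mean, stdev


def mean_confidence_interval(sample, confidence=0.95):
    """Approximate confidence interval for the population mean.

    Uses the normal approximation: estimate +/- z * standard error.
    """
    n = len(sample)
    estimate = mean(sample)
    standard_error = stdev(sample) / sqrt(n)   # stdev divides by n - 1
    # Critical value, e.g. about 1.96 for 95% confidence.
    z = NormalDist().inv_cdf(0.5 + confidence / 2)
    margin = z * standard_error
    return estimate - margin, estimate + margin


# Hypothetical sample of 30 customer waiting times in minutes.
wait_minutes = [12, 15, 11, 14, 13, 16, 12, 15, 14, 13] * 3

low, high = mean_confidence_interval(wait_minutes)
print(f"95% CI for the mean: {low:.2f} to {high:.2f}")
```

A narrower interval signals a more precise estimate. Raising the confidence level or shrinking the sample both widen the interval.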

Probability Distributions

Probability distributions describe the likelihood of different outcomes in a statistical experiment. Understanding these distributions is essential for modeling and analyzing random phenomena. Some common probability distributions include:

- Normal Distribution: The normal distribution, also known as the Gaussian distribution, is characterized by a symmetric, bell-shaped curve. Many natural phenomena follow this distribution, making it widely applicable in statistical analysis.

- Binomial Distribution: The binomial distribution describes the number of successes in a fixed number of independent Bernoulli trials. It is commonly used to model binary outcomes, such as success or failure, heads or tails.

- Poisson Distribution: The Poisson distribution models the number of events occurring in a fixed interval of time or space. It is often used to analyze rare or discrete events, such as the number of customer arrivals in a queue within a given time period.
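The three distributions above can be evaluated directly from their standard formulas. The following sketch uses only Python's standard library; the helper names `binomial_pmf` and `poisson_pmf` and the example scenarios are illustrative assumptions.

```python
from math import comb, exp, factorial
from statistics import NormalDist


def binomial_pmf(k, n, p):
    """Probability of exactly k successes in n independent trials."""
    return comb(n, k) * p**k * (1 - p) ** (n - k)


def poisson_pmf(k, rate):
    """Probability of exactly k events when `rate` events are expected."""
    return rate**k * exp(-rate) / factorial(k)


# Normal: share of values within one standard deviation of the mean.
standard_normal = NormalDist(mu=0, sigma=1)
within_one_sd = standard_normal.cdf(1) - standard_normal.cdf(-1)

# Binomial: exactly 3 heads in 5 fair coin tosses.
three_heads = binomial_pmf(3, 5, 0.5)

# Poisson: exactly 2 customer arrivals when 4 are expected per interval.
two_arrivals = poisson_pmf(2, 4.0)

print(round(within_one_sd, 4))   # 0.6827
print(three_heads)               # 0.3125
print(round(two_arrivals, 4))    # 0.1465
```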

Types of Statistical Analysis

Statistical analysis encompasses a diverse range of methods and approaches, each suited to different types of data and research questions. Understanding the various types of statistical analysis is essential for selecting the most appropriate technique for your analysis. Let's explore some common distinctions in statistical analysis methods.

Parametric vs. Non-parametric Analysis

Parametric and non-parametric analyses represent two broad categories of statistical methods, each with its own assumptions and applications.

- Parametric Analysis: Parametric methods assume that the data follow a specific probability distribution, often the normal distribution. These methods rely on estimating parameters (e.g., means, variances) from the data. Parametric tests typically provide more statistical power but require stricter assumptions. Examples of parametric tests include t-tests, ANOVA, and linear regression.

- Non-parametric Analysis: Non-parametric methods make fewer assumptions about the underlying distribution of the data. Instead of estimating parameters, non-parametric tests rely on ranks or other distribution-free techniques. Non-parametric tests are often used when data do not meet the assumptions of parametric tests or when dealing with ordinal or non-normal data. Examples of non-parametric tests include the Wilcoxon rank-sum test, Kruskal-Wallis test, and Spearman correlation.
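The contrast between the two families can be seen by comparing Pearson correlation, which works on the raw values, with the rank-based Spearman correlation named above. This is a minimal hand-written sketch; the helper names and the toy data are assumptions, and a statistics library would normally be used instead.

```python
def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5


def ranks(values):
    """Ranks starting at 1; tied values share their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        average_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            result[order[k]] = average_rank
        i = j + 1
    return result


def spearman(x, y):
    """Spearman correlation: Pearson correlation applied to the ranks."""
    return pearson(ranks(x), ranks(y))


# Toy data: y rises with x, but along a curve (y = x cubed), not a line.
x = [1, 2, 3, 4, 5]
y = [1, 8, 27, 64, 125]

linear_r = pearson(x, y)
rank_r = spearman(x, y)
print(round(linear_r, 3), rank_r)
```

Because Spearman only looks at the ordering of the values, it reports a perfect monotonic relationship here, while Pearson is lower because the relationship is not a straight line.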

Descriptive vs. Inferential Analysis

Descriptive and inferential analyses serve distinct purposes in statistical analysis, focusing on summarizing data and making inferences about populations, respectively.

- Descriptive Analysis : Descriptive statistics aim to describe and summarize the features of a dataset. These statistics provide insights into the central tendency, variability, and distribution of the data. Descriptive analysis techniques include measures of central tendency (e.g., mean, median, mode), measures of dispersion (e.g., variance, standard deviation), and graphical representations (e.g., histograms, box plots).

- Inferential Analysis : Inferential statistics involve making inferences or predictions about populations based on sample data. These methods allow researchers to generalize findings from the sample to the larger population. Inferential analysis techniques include hypothesis testing, confidence intervals, regression analysis, and sampling methods. These methods help researchers draw conclusions about population parameters, such as means, proportions, or correlations, based on sample data.
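As a rough sketch of the step from description to inference, the code below summarizes a sample and then builds an approximate 95% confidence interval for the population mean. The ratings are invented, and the interval uses a normal (z) critical value as a simplifying assumption; for a sample this small, a t critical value would give a slightly wider interval.

```python
import math
from statistics import NormalDist, mean, stdev


def mean_confidence_interval(sample, confidence=0.95):
    """Approximate confidence interval for the population mean (normal approximation)."""
    centre = mean(sample)
    standard_error = stdev(sample) / math.sqrt(len(sample))
    z = NormalDist().inv_cdf(0.5 + confidence / 2)
    return centre - z * standard_error, centre + z * standard_error


# Hypothetical satisfaction ratings from 12 survey respondents.
ratings = [4.1, 3.8, 4.4, 4.0, 3.9, 4.3, 4.2, 3.7, 4.0, 4.1, 4.5, 3.9]

sample_mean = mean(ratings)                       # descriptive: summarizes the sample
low, high = mean_confidence_interval(ratings)     # inferential: range for the population mean

print(f"Sample mean: {sample_mean:.2f}")
print(f"Approximate 95% CI for the population mean: ({low:.2f}, {high:.2f})")
```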

Exploratory vs. Confirmatory Analysis

Exploratory and confirmatory analyses represent two different approaches to data analysis, each serving distinct purposes in the research process.

- Exploratory Analysis : Exploratory data analysis (EDA) focuses on exploring data to discover patterns, relationships, and trends. EDA techniques involve visualizing data, identifying outliers, and generating hypotheses for further investigation. Exploratory analysis is particularly useful in the early stages of research when the goal is to gain insights and generate hypotheses rather than confirm specific hypotheses.

- Confirmatory Analysis : Confirmatory data analysis involves testing predefined hypotheses or theories based on prior knowledge or assumptions. Confirmatory analysis follows a structured approach, where hypotheses are tested using appropriate statistical methods. Confirmatory analysis is common in hypothesis-driven research, where the goal is to validate or refute specific hypotheses using empirical evidence. Techniques such as hypothesis testing, regression analysis, and experimental design are often employed in confirmatory analysis.
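One common exploratory step, identifying outliers, can be sketched with the interquartile range (IQR) rule. The 1.5 × IQR threshold is a widely used convention rather than something prescribed by this guide, and the response times below are made up.

```python
from statistics import quantiles


def iqr_outliers(values):
    """Return values lying more than 1.5 * IQR below Q1 or above Q3."""
    q1, _, q3 = quantiles(values, n=4)
    iqr = q3 - q1
    lower, upper = q1 - 1.5 * iqr, q3 + 1.5 * iqr
    return [v for v in values if v < lower or v > upper]


# Hypothetical response times in seconds, with one suspicious value.
response_times = [12, 13, 12, 14, 13, 15, 14, 13, 12, 48]

flagged = iqr_outliers(response_times)
print("Flagged as potential outliers:", flagged)
```

A flagged value is a prompt for investigation, not automatically an error; whether to keep, correct, or exclude it is a judgment to document before any confirmatory testing.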

Methods of Statistical Analysis

Statistical analysis employs various methods to extract insights from data and make informed decisions. Let's explore some of the key methods used in statistical analysis and their applications.

Hypothesis Testing

Hypothesis testing is a fundamental concept in statistics, allowing researchers to make decisions about population parameters based on sample data. The process involves formulating null and alternative hypotheses, selecting an appropriate test statistic, determining the significance level, and interpreting the results. Standard hypothesis tests include:

- t-tests : Used to compare means between two groups.

- ANOVA (Analysis of Variance) : Extends the t-test to compare means across multiple groups.

- Chi-square test : Used to assess the association between categorical variables.
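The steps of a hypothesis test can be sketched with a chi-square test of independence on a 2×2 table. The counts are hypothetical, the function name `chi_square_2x2` is illustrative, and no continuity correction is applied. With one degree of freedom the p-value has a closed form, so the standard library is enough.

```python
import math


def chi_square_2x2(table):
    """Pearson chi-square test of independence for a 2x2 table of counts.

    Returns (statistic, p_value). No continuity correction is applied.
    """
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    total = sum(row_totals)

    statistic = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / total
            statistic += (observed - expected) ** 2 / expected

    # With 1 degree of freedom, P(chi-square > x) = erfc(sqrt(x / 2)).
    p_value = math.erfc(math.sqrt(statistic / 2))
    return statistic, p_value


# Hypothetical counts: rows are two ad variants, columns are clicked / not clicked.
observed = [[30, 10], [20, 20]]
statistic, p_value = chi_square_2x2(observed)

alpha = 0.05  # significance level chosen before looking at the data
print(f"chi-square = {statistic:.3f}, p = {p_value:.4f}")
print("Reject H0" if p_value < alpha else "Fail to reject H0")
```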

Regression Analysis

Regression analysis explores the relationship between one or more independent variables and a dependent variable. It is widely used in predictive modeling and understanding the impact of variables on outcomes. Key types of regression analysis include:

- Simple Linear Regression : Examines the linear relationship between one independent variable and a dependent variable.

- Multiple Linear Regression : Extends simple linear regression to analyze the relationship between multiple independent variables and a dependent variable.

- Logistic Regression : Used for predicting binary outcomes or modeling probabilities.

Analysis of Variance (ANOVA)

ANOVA is a statistical technique used to compare means across two or more groups. It partitions the total variability in the data into components attributable to different sources, such as between-group differences and within-group variability. ANOVA is commonly used in experimental design and hypothesis testing scenarios.
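The partitioning of variability can be sketched directly. The code below computes the between-group and within-group sums of squares and the resulting F statistic for three small, made-up groups; turning F into a p-value requires the F distribution, which is left to a statistics library.

```python
from statistics import mean


def one_way_anova(groups):
    """Return (ss_between, ss_within, f_statistic) for a list of groups."""
    all_values = [v for group in groups for v in group]
    grand_mean = mean(all_values)

    # Variability of the group means around the grand mean.
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups)
    # Variability of the observations around their own group mean.
    ss_within = sum((v - mean(g)) ** 2 for g in groups for v in g)

    df_between = len(groups) - 1
    df_within = len(all_values) - len(groups)
    f_statistic = (ss_between / df_between) / (ss_within / df_within)
    return ss_between, ss_within, f_statistic


# Hypothetical measurements for three groups.
groups = [[1.0, 2.0, 3.0], [2.0, 3.0, 4.0], [5.0, 6.0, 7.0]]
ss_between, ss_within, f_statistic = one_way_anova(groups)

print(f"SS between = {ss_between:.2f}, SS within = {ss_within:.2f}")
print(f"F = {f_statistic:.2f}")
```

A large F means the group means differ by more than the within-group noise would suggest.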

Time Series Analysis

Time series analysis deals with analyzing data collected or recorded at successive time intervals. It helps identify patterns, trends, and seasonality in the data. Time series analysis techniques include:

- Trend Analysis : Identifying long-term trends or patterns in the data.

- Seasonal Decomposition : Separating the data into seasonal, trend, and residual components.

- Forecasting : Predicting future values based on historical data.
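A simple way to see trend analysis and forecasting in action is a moving average. The sketch below smooths a short, invented monthly sales series and uses the mean of the last window as a naive forecast; real forecasting models are considerably more involved, and this is only meant to show the idea.

```python
def moving_average(series, window):
    """Mean of each run of `window` consecutive values."""
    return [
        sum(series[i:i + window]) / window
        for i in range(len(series) - window + 1)
    ]


# Hypothetical monthly sales figures.
monthly_sales = [120, 130, 125, 140, 150, 145, 160, 170]

trend = moving_average(monthly_sales, window=3)
naive_forecast = trend[-1]  # mean of the three most recent months

print("Smoothed trend:", [round(v, 1) for v in trend])
print(f"Naive forecast for next month: {naive_forecast:.1f}")
```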

Survival Analysis

Survival analysis is used to analyze time-to-event data, such as time until death, failure, or occurrence of an event of interest. It is widely used in medical research, engineering, and social sciences to analyze survival probabilities and hazard rates over time.
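One standard tool for estimating survival probabilities is the Kaplan-Meier estimator, which this guide does not name but which fits the description above. The sketch below is a minimal version under the assumption that each subject has a duration and a flag saying whether the event was observed or the subject was censored; the data are made up.

```python
def kaplan_meier(durations, observed):
    """Kaplan-Meier survival estimates.

    observed[i] is True if the event occurred at durations[i],
    False if the subject was censored at that time.
    Returns a list of (time, survival_probability) at each event time.
    """
    survival = 1.0
    curve = []
    for t in sorted(set(durations)):
        at_risk = sum(1 for d in durations if d >= t)
        events = sum(1 for d, e in zip(durations, observed) if d == t and e)
        if events:
            survival *= 1 - events / at_risk
            curve.append((t, survival))
    return curve


# Hypothetical follow-up times in months; False marks a censored subject.
durations = [2, 3, 3, 5, 8]
observed = [True, True, False, True, False]

curve = kaplan_meier(durations, observed)
for time, probability in curve:
    print(f"S({time}) = {probability:.2f}")
```

Censored subjects still count as "at risk" up to the time they leave the study, which is what distinguishes this estimate from a simple proportion of survivors.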

Factor Analysis

Factor analysis is a statistical method used to identify underlying factors or latent variables that explain patterns of correlations among observed variables. It is commonly used in psychology, sociology, and market research to uncover underlying dimensions or constructs.

Cluster Analysis

Cluster analysis is a multivariate technique that groups similar objects or observations into clusters or segments based on their characteristics. It is widely used in market segmentation, image processing, and biological classification.
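As one example of a clustering technique, here is a minimal sketch of k-means (Lloyd's algorithm), which this guide does not single out but which is a common choice. The points and the starting centroids are hypothetical, and the starting centroids are fixed by hand so the result is reproducible.

```python
import math


def k_means(points, centroids, iterations=10):
    """Assign each point to its nearest centroid, then move centroids to cluster means."""
    clusters = [[] for _ in centroids]
    for _ in range(iterations):
        clusters = [[] for _ in centroids]
        for point in points:
            nearest = min(
                range(len(centroids)),
                key=lambda i: math.dist(point, centroids[i]),
            )
            clusters[nearest].append(point)
        # An empty cluster keeps its previous centroid.
        centroids = [
            tuple(sum(coords) / len(cluster) for coords in zip(*cluster))
            if cluster else centroid
            for cluster, centroid in zip(clusters, centroids)
        ]
    return centroids, clusters


# Hypothetical customers described by two features.
points = [(1.0, 1.0), (1.5, 2.0), (1.0, 1.5), (8.0, 8.0), (9.0, 9.0), (8.5, 9.5)]
centroids, clusters = k_means(points, centroids=[(0.0, 0.0), (10.0, 10.0)])

for centroid, cluster in zip(centroids, clusters):
    print(f"centroid {centroid}: {cluster}")
```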

Principal Component Analysis (PCA)

PCA is a dimensionality reduction technique used to transform high-dimensional data into a lower-dimensional space while preserving most of the variability in the data. It identifies orthogonal axes (principal components) that capture the maximum variance in the data. PCA is useful for data visualization, feature selection, and data compression.
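For just two variables, the principal components can be found in closed form from the 2×2 covariance matrix, which makes for a compact sketch. The function `pca_2d` and the data are illustrative assumptions; real applications with many variables would rely on a linear algebra library.

```python
import math
from statistics import mean


def pca_2d(points):
    """PCA for two variables via the eigenvalues of the 2x2 covariance matrix.

    Returns (share of variance explained by the first component,
             unit direction vector of the first component).
    Assumes the points are not all identical.
    """
    xs = [p[0] for p in points]
    ys = [p[1] for p in points]
    mean_x, mean_y = mean(xs), mean(ys)
    n = len(points)

    var_x = sum((x - mean_x) ** 2 for x in xs) / (n - 1)
    var_y = sum((y - mean_y) ** 2 for y in ys) / (n - 1)
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in points) / (n - 1)

    # Eigenvalues of [[var_x, cov_xy], [cov_xy, var_y]].
    half_trace = (var_x + var_y) / 2
    spread = math.sqrt(((var_x - var_y) / 2) ** 2 + cov_xy**2)
    largest, smallest = half_trace + spread, half_trace - spread

    # Eigenvector belonging to the largest eigenvalue.
    if cov_xy != 0:
        vx, vy = largest - var_y, cov_xy
    elif var_x >= var_y:
        vx, vy = 1.0, 0.0
    else:
        vx, vy = 0.0, 1.0
    norm = math.hypot(vx, vy)
    return largest / (largest + smallest), (vx / norm, vy / norm)


# Hypothetical data lying exactly on the line y = 2x.
points = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0), (4.0, 8.0)]
explained, direction = pca_2d(points)

print(f"Variance explained by PC1: {explained:.1%}")
print(f"PC1 direction: ({direction[0]:.3f}, {direction[1]:.3f})")
```

Because these points lie on a single line, one component captures all of the variance, so the two variables can be replaced by one without losing information.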

How to Choose the Right Statistical Analysis Method?

Selecting the appropriate statistical method is crucial for obtaining accurate and meaningful results from your data analysis.

Understanding Data Types and Distribution

Before choosing a statistical method, it's essential to understand the types of data you're working with and their distribution. Different statistical methods are suitable for different types of data:

- Continuous vs. Categorical Data : Determine whether your data are continuous (e.g., height, weight) or categorical (e.g., gender, race). Methods such as t-tests and linear regression are typically used when the outcome is continuous, while methods such as the chi-square test are suited to categorical data.

- Normality : Assess whether your data follow a normal distribution. Parametric methods often assume normality, so if your data are not normally distributed, non-parametric methods may be more appropriate.

Assessing Assumptions

Many statistical methods rely on certain assumptions about the data. Before applying a method, it's essential to assess whether these assumptions are met:

- Independence : Ensure that observations are independent of each other. Violations of independence assumptions can lead to biased results.

- Homogeneity of Variance : Verify that variances are approximately equal across groups, especially in ANOVA and regression analyses. Levene's test or Bartlett's test can be used to assess homogeneity of variance.

- Linearity : Check for linear relationships between variables, particularly in regression analysis. Residual plots can help diagnose violations of linearity assumptions.

Considering Research Objectives

Your research objectives should guide the selection of the appropriate statistical method.

- What are you trying to achieve with your analysis? : Determine whether you're interested in comparing groups, predicting outcomes, exploring relationships, or identifying patterns.

- What type of data are you analyzing? : Choose methods that are suitable for your data type and research questions.

- Are you testing specific hypotheses or exploring data for insights? : Confirmatory analyses involve testing predefined hypotheses, while exploratory analyses focus on discovering patterns or relationships in the data.

Consulting Statistical Experts

If you're unsure about the most appropriate statistical method for your analysis, don't hesitate to seek advice from statistical experts or consultants:

- Collaborate with Statisticians : Statisticians can provide valuable insights into the strengths and limitations of different statistical methods and help you select the most appropriate approach.

- Utilize Resources : Take advantage of online resources, forums, and statistical software documentation to learn about different methods and their applications.

- Peer Review : Consider seeking feedback from colleagues or peers familiar with statistical analysis to validate your approach and ensure rigor in your analysis.

By carefully considering these factors and consulting with experts when needed, you can confidently choose a suitable statistical method to address your research questions and obtain reliable results.

Statistical Analysis Software

Choosing the right software for statistical analysis is crucial for efficiently processing and interpreting your data. In addition to statistical analysis software, it's essential to consider tools for data collection, which lay the foundation for meaningful analysis.

What is Statistical Analysis Software?

Statistical software provides a range of tools and functionalities for data analysis, visualization, and interpretation. These software packages offer user-friendly interfaces and robust analytical capabilities, making them indispensable tools for researchers, analysts, and data scientists.

- Graphical User Interface (GUI) : Many statistical software packages offer intuitive GUIs that allow users to perform analyses using point-and-click interfaces. This makes statistical analysis accessible to users with varying levels of programming expertise.

- Scripting and Programming : Advanced users can leverage scripting and programming capabilities within statistical software to automate analyses, customize functions, and extend the software's functionality.

- Visualization : Statistical software often includes built-in visualization tools for creating charts, graphs, and plots to visualize data distributions, relationships, and trends.

- Data Management : These software packages provide features for importing, cleaning, and manipulating datasets, ensuring data integrity and consistency throughout the analysis process.

Popular Statistical Analysis Software

Several statistical software packages are widely used in various industries and research domains. Some of the most popular options include:

- R : R is a free, open-source programming language and software environment for statistical computing and graphics. It offers a vast ecosystem of packages for data manipulation, visualization, and analysis, making it a popular choice among statisticians and data scientists.

- Python : Python is a versatile programming language with robust libraries like NumPy, SciPy, and pandas for data analysis and scientific computing. Python's simplicity and flexibility make it an attractive option for statistical analysis, particularly for users with programming experience.

- SPSS : SPSS (Statistical Package for the Social Sciences) is a comprehensive statistical software package widely used in social science research, marketing, and healthcare. It offers a user-friendly interface and a wide range of statistical procedures for data analysis and reporting.

- SAS : SAS (Statistical Analysis System) is a powerful statistical software suite used for data management, advanced analytics, and predictive modeling. SAS is commonly employed in industries such as healthcare, finance, and government for data-driven decision-making.

- Stata : Stata is a statistical software package that provides tools for data analysis, manipulation, and visualization. It is popular in academic research, economics, and social sciences for its robust statistical capabilities and ease of use.

- MATLAB : MATLAB is a high-level programming language and environment for numerical computing and visualization. It offers built-in functions and toolboxes for statistical analysis, machine learning, and signal processing.

Data Collection Software

In addition to statistical analysis software, data collection software plays a crucial role in the research process. These tools facilitate data collection, management, and organization from various sources, ensuring data quality and reliability.

When it comes to data collection, precision and efficiency are paramount. Appinio offers a seamless solution for gathering real-time consumer insights, empowering you to make informed decisions swiftly. With our intuitive platform, you can define your target audience with precision, launch surveys effortlessly, and access valuable data in minutes. Experience the power of Appinio and elevate your data collection process today. Ready to see it in action? Book a demo now!

How to Choose the Right Statistical Analysis Software?

When selecting software for statistical analysis and data collection, consider the following factors:

- Compatibility : Ensure the software is compatible with your operating system, hardware, and data formats.

- Usability : Choose software that aligns with your level of expertise and provides features that meet your analysis and data collection requirements.

- Integration : Consider whether the software integrates with other tools and platforms in your workflow, such as data visualization software or data storage systems.

- Cost and Licensing : Evaluate the cost of licensing or subscription fees, as well as any additional costs for training, support, or maintenance.

By carefully evaluating these factors and considering your specific analysis and data collection needs, you can select the right software tools to support your research objectives and drive meaningful insights from your data.

Statistical Analysis Examples

Understanding statistical analysis methods is best achieved through practical examples. Let's explore three examples that demonstrate the application of statistical techniques in real-world scenarios.

Example 1: Linear Regression

Scenario : A marketing analyst wants to understand the relationship between advertising spending and sales revenue for a product.

Data : The analyst collects data on monthly advertising expenditures (in dollars) and corresponding sales revenue (in dollars) over the past year.

Analysis : Using simple linear regression, the analyst fits a regression model to the data, where advertising spending is the independent variable (X) and sales revenue is the dependent variable (Y). The regression analysis estimates the linear relationship between advertising spending and sales revenue, allowing the analyst to predict sales based on advertising expenditures.

Result : The regression analysis reveals a statistically significant positive relationship between advertising spending and sales revenue. The slope coefficient estimates how much sales revenue increases for every additional dollar spent on advertising. The analyst can use this information to optimize advertising budgets and forecast sales performance.
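A least-squares fit like the one in this example can be sketched in a few lines. The advertising and revenue figures below are invented for illustration, and the sketch only estimates the slope and intercept; assessing statistical significance would additionally require standard errors.

```python
from statistics import mean


def fit_line(x, y):
    """Ordinary least-squares slope and intercept for y = intercept + slope * x."""
    mean_x, mean_y = mean(x), mean(y)
    slope = (
        sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
        / sum((a - mean_x) ** 2 for a in x)
    )
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Hypothetical monthly figures in dollars.
ad_spend = [1000, 2000, 3000, 4000, 5000]
revenue = [12000, 15500, 19500, 22000, 26000]

slope, intercept = fit_line(ad_spend, revenue)
predicted = intercept + slope * 6000  # forecast for a $6,000 budget

print(f"Each extra advertising dollar is associated with ${slope:.2f} more revenue")
print(f"Predicted revenue at $6,000 spend: ${predicted:,.0f}")
```

Note that a forecast for a budget outside the observed range, as here, assumes the linear pattern continues to hold.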

Example 2: Hypothesis Testing

Scenario : A pharmaceutical company develops a new drug intended to lower blood pressure. The company wants to determine whether the new drug is more effective than the existing standard treatment.

Data : The company conducts a randomized controlled trial (RCT) involving two groups of participants: one group receives the new drug, and the other receives the standard treatment. Blood pressure measurements are taken before and after the treatment period.

Analysis : The company uses hypothesis testing, specifically a two-sample t-test, to compare the mean reduction in blood pressure between the two groups. The null hypothesis (H0) states that there is no difference in the mean reduction in blood pressure between the two treatments, while the alternative hypothesis (H1) suggests that the new drug is more effective.

Result : The t-test results indicate a statistically significant difference in the mean reduction in blood pressure between the two groups. The company concludes that the new drug is more effective than the standard treatment in lowering blood pressure, based on the evidence from the RCT.
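The test statistic behind a comparison like this can be sketched as follows. The blood pressure reductions are made up, and the sketch uses Welch's version of the two-sample t-test, which does not assume equal variances; that choice is an assumption on our part, since the scenario only says "two-sample t-test". Converting the statistic into a p-value needs the t distribution, which statistical software such as SciPy's `scipy.stats.ttest_ind` provides.

```python
import math
from statistics import mean, variance


def welch_t(sample_a, sample_b):
    """Welch's t statistic and approximate degrees of freedom for two samples."""
    n_a, n_b = len(sample_a), len(sample_b)
    var_a = variance(sample_a) / n_a   # squared standard error of each mean
    var_b = variance(sample_b) / n_b
    t_statistic = (mean(sample_a) - mean(sample_b)) / math.sqrt(var_a + var_b)
    degrees_of_freedom = (var_a + var_b) ** 2 / (
        var_a**2 / (n_a - 1) + var_b**2 / (n_b - 1)
    )
    return t_statistic, degrees_of_freedom


# Hypothetical reductions in systolic blood pressure (mmHg).
new_drug = [12, 15, 11, 14, 13, 16, 12, 15]
standard = [9, 10, 8, 11, 10, 9, 12, 8]

t_statistic, degrees_of_freedom = welch_t(new_drug, standard)
print(f"t = {t_statistic:.2f} with about {degrees_of_freedom:.1f} degrees of freedom")
```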

Example 3: ANOVA

Scenario : A researcher wants to compare the effectiveness of three different teaching methods on student performance in a mathematics course.

Data : The researcher conducts an experiment where students are randomly assigned to one of three groups: traditional lecture-based instruction, active learning, or flipped classroom. At the end of the semester, students' scores on a standardized math test are recorded.

Analysis : The researcher performs an analysis of variance (ANOVA) to compare the mean test scores across the three teaching methods. ANOVA assesses whether there are statistically significant differences in mean scores between the groups.

Result : The ANOVA results reveal a significant difference in mean test scores between the three teaching methods. Post-hoc tests, such as Tukey's HSD (Honestly Significant Difference), can be conducted to identify which specific teaching methods differ significantly from each other in terms of student performance.

These examples illustrate how statistical analysis techniques can be applied to address various research questions and make data-driven decisions in different fields. By understanding and applying these methods effectively, researchers and analysts can derive valuable insights from their data to inform decision-making and drive positive outcomes.

Statistical Analysis Best Practices

Statistical analysis is a powerful tool for extracting insights from data, but it's essential to follow best practices to ensure the validity, reliability, and interpretability of your results.

- Clearly Define Research Questions : Before conducting any analysis, clearly define your research questions or objectives. This ensures that your analysis is focused and aligned with the goals of your study.

- Choose Appropriate Methods : Select statistical methods suitable for your data type, research design, and objectives. Consider factors such as data distribution, sample size, and assumptions of the chosen method.

- Preprocess Data : Clean and preprocess your data to correct errors and to handle outliers and missing values appropriately. Data preprocessing steps may include data cleaning, normalization, and transformation to ensure data quality and consistency.

- Check Assumptions : Verify that the assumptions of the chosen statistical methods are met. Assumptions may include normality, homogeneity of variance, independence, and linearity. Conduct diagnostic tests or exploratory data analysis to assess assumptions.

- Transparent Reporting : Document your analysis procedures, including data preprocessing steps, statistical methods used, and any assumptions made. Transparent reporting enhances reproducibility and allows others to evaluate the validity of your findings.

- Consider Sample Size : Ensure that your sample size is sufficient to detect meaningful effects or relationships. Power analysis can help determine the minimum sample size required to achieve adequate statistical power.

- Interpret Results Cautiously : Interpret statistical results with caution and consider the broader context of your research. Be mindful of effect sizes, confidence intervals, and practical significance when interpreting findings.

- Validate Findings : Validate your findings through robustness checks, sensitivity analyses, or replication studies. Cross-validation and bootstrapping techniques can help assess the stability and generalizability of your results.

- Avoid P-Hacking and Data Dredging : Guard against p-hacking and data dredging by pre-registering hypotheses, conducting planned analyses, and avoiding selective reporting of results. Maintain transparency and integrity in your analysis process.
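The power analysis mentioned under sample size can be sketched with a standard approximation for comparing two group means. The formula below uses normal critical values and a standardized effect size (difference in means divided by the standard deviation); the effect size of 0.5, the 5% significance level, and the 80% power target are illustrative assumptions, and dedicated software will give slightly larger numbers because it uses the t distribution.

```python
import math
from statistics import NormalDist


def sample_size_per_group(effect_size, alpha=0.05, power=0.80):
    """Approximate per-group sample size for a two-sided, two-sample comparison of means."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value for the test
    z_power = NormalDist().inv_cdf(power)          # quantile for the desired power
    return math.ceil(2 * ((z_alpha + z_power) / effect_size) ** 2)


n_medium = sample_size_per_group(effect_size=0.5)
print(f"Participants needed per group for a medium effect: {n_medium}")
```

Halving the effect size you want to detect roughly quadruples the required sample, which is why this calculation is worth doing before collecting data.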

By following these best practices, you can conduct rigorous and reliable statistical analyses that yield meaningful insights and contribute to evidence-based decision-making in your field.

Conclusion for Statistical Analysis

Statistical analysis is a vital tool for making sense of data and guiding decision-making across diverse fields. By understanding the fundamentals of statistical analysis, including concepts like hypothesis testing, regression analysis, and data visualization, you gain the ability to extract valuable insights from complex datasets. Moreover, selecting the appropriate statistical methods, choosing the right software, and following best practices ensure the validity and reliability of your analyses. In today's data-driven world, the ability to conduct rigorous statistical analysis is a valuable skill that empowers individuals and organizations to make informed decisions and drive positive outcomes. Whether you're a researcher, analyst, or decision-maker, mastering statistical analysis opens doors to new opportunities for understanding the world around us and unlocking the potential of data to solve real-world problems.

How to Collect Data for Statistical Analysis in Minutes?

Introducing Appinio, your gateway to effortless data collection for statistical analysis. As a real-time market research platform, Appinio specializes in delivering instant consumer insights, empowering businesses to make swift, data-driven decisions.

With Appinio, conducting your own market research is not only feasible but also exhilarating. Here's why:

- Obtain insights in minutes, not days: From posing questions to uncovering insights, Appinio accelerates the entire research process, ensuring rapid access to valuable data.

- User-friendly interface: No advanced degrees required! Our platform is designed to be intuitive and accessible to anyone, allowing you to dive into market research with confidence.

- Targeted surveys, global reach: Define your target audience with precision using our extensive array of demographic and psychographic characteristics, and reach respondents in over 90 countries effortlessly.

Quantitative Data Analysis 101

The lingo, methods and techniques, explained simply.

By: Derek Jansen (MBA) and Kerryn Warren (PhD) | December 2020

Quantitative data analysis is one of those things that often strikes fear in students. It’s totally understandable – quantitative analysis is a complex topic, full of daunting lingo, like medians, modes, correlation and regression. Suddenly we’re all wishing we’d paid a little more attention in math class…

The good news is that while quantitative data analysis is a mammoth topic, gaining a working understanding of the basics isn’t that hard, even for those of us who avoid numbers and math. In this post, we’ll break quantitative analysis down into simple, bite-sized chunks so you can approach your research with confidence.

Overview: Quantitative Data Analysis 101

- What (exactly) is quantitative data analysis?

- When to use quantitative analysis

- How quantitative analysis works

- The two “branches” of quantitative analysis

- Descriptive statistics 101

- Inferential statistics 101

- How to choose the right quantitative methods

- Recap & summary

What is quantitative data analysis?

Despite being a mouthful, quantitative data analysis simply means analysing data that is numbers-based – or data that can be easily “converted” into numbers without losing any meaning.

For example, category-based variables like gender, ethnicity, or native language could all be “converted” into numbers without losing meaning – for example, English could equal 1, French 2, etc.

This contrasts with qualitative data analysis, where the focus is on words, phrases and expressions that can’t be reduced to numbers. If you’re interested in learning about qualitative analysis, check out our post and video here.

What is quantitative analysis used for?

Quantitative analysis is generally used for three purposes.

- Firstly, it’s used to measure differences between groups. For example, the popularity of different clothing colours or brands.

- Secondly, it’s used to assess relationships between variables. For example, the relationship between weather temperature and voter turnout.

- And third, it’s used to test hypotheses in a scientifically rigorous way. For example, a hypothesis about the impact of a certain vaccine.

Again, this contrasts with qualitative analysis, which can be used to analyse people’s perceptions and feelings about an event or situation. In other words, things that can’t be reduced to numbers.

How does quantitative analysis work?

Well, since quantitative data analysis is all about analysing numbers, it’s no surprise that it involves statistics. Statistical analysis methods form the engine that powers quantitative analysis, and these methods can vary from pretty basic calculations (for example, averages and medians) to more sophisticated analyses (for example, correlations and regressions).

Sounds like gibberish? Don’t worry. We’ll explain all of that in this post. Importantly, you don’t need to be a statistician or math wiz to pull off a good quantitative analysis. We’ll break down all the technical mumbo jumbo in this post.

As I mentioned, quantitative analysis is powered by statistical analysis methods. There are two main “branches” of statistical methods that are used – descriptive statistics and inferential statistics. In your research, you might only use descriptive statistics, or you might use a mix of both, depending on what you’re trying to figure out. In other words, depending on your research questions, aims and objectives. I’ll explain how to choose your methods later.

So, what are descriptive and inferential statistics?

Well, before I can explain that, we need to take a quick detour to explain some lingo. To understand the difference between these two branches of statistics, you need to understand two important words. These words are population and sample.

First up, population. In statistics, the population is the entire group of people (or animals or organisations or whatever) that you’re interested in researching. For example, if you were interested in researching Tesla owners in the US, then the population would be all Tesla owners in the US.

However, it’s extremely unlikely that you’re going to be able to interview or survey every single Tesla owner in the US. Realistically, you’ll likely only get access to a few hundred, or maybe a few thousand owners using an online survey. This smaller group of accessible people whose data you actually collect is called your sample.

So, to recap – the population is the entire group of people you’re interested in, and the sample is the subset of the population that you can actually get access to. In other words, the population is the full chocolate cake, whereas the sample is a slice of that cake.

So, why is this sample-population thing important?

Well, descriptive statistics focus on describing the sample, while inferential statistics aim to make predictions about the population, based on the findings within the sample. In other words, we use one group of statistical methods – descriptive statistics – to investigate the slice of cake, and another group of methods – inferential statistics – to draw conclusions about the entire cake. There I go with the cake analogy again…
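If you’d like to see the cake and the slice in code, here’s a minimal sketch. The “population” is 10,000 simulated values (entirely made up, with an assumed average of 50), and the sample is 200 of them drawn at random. In real research you’d never have the whole population to hand, which is exactly why the sample matters.

```python
import random
from statistics import mean

rng = random.Random(42)  # fixed seed so the sketch is repeatable

# Hypothetical population: 10,000 simulated measurements.
population = [rng.gauss(50, 10) for _ in range(10_000)]

# The sample: the 200 members we actually manage to collect data from.
sample = rng.sample(population, 200)

population_mean = mean(population)  # normally unknown to the researcher
sample_mean = mean(sample)          # what descriptive statistics report

print(f"Population mean (the whole cake): {population_mean:.2f}")
print(f"Sample mean (the slice):          {sample_mean:.2f}")
```

The two means land close together but not on top of each other. Inferential statistics are about quantifying that gap.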

With that out the way, let’s take a closer look at each of these branches in more detail.

Branch 1: Descriptive Statistics

Descriptive statistics serve a simple but critically important role in your research – to describe your data set – hence the name. In other words, they help you understand the details of your sample. Unlike inferential statistics (which we’ll get to soon), descriptive statistics don’t aim to make inferences or predictions about the entire population – they’re purely interested in the details of your specific sample.

When you’re writing up your analysis, descriptive statistics are the first set of stats you’ll cover, before moving on to inferential statistics. But, that said, depending on your research objectives and research questions, they may be the only type of statistics you use. We’ll explore that a little later.

So, what kind of statistics are usually covered in this section?

Some common statistics used in this branch include the following:

- Mean – this is simply the mathematical average of a range of numbers.

- Median – this is the midpoint in a range of numbers when the numbers are arranged in numerical order. If the data set makes up an odd number, then the median is the number right in the middle of the set. If the data set makes up an even number, then the median is the midpoint between the two middle numbers.

- Mode – this is simply the most commonly occurring number in the data set.

- Standard deviation – this indicates how dispersed a range of numbers is. In other words, how close all the numbers are to the mean (the average).

- In cases where most of the numbers are quite close to the average, the standard deviation will be relatively low.

- Conversely, in cases where the numbers are scattered all over the place, the standard deviation will be relatively high.

- Skewness – as the name suggests, skewness indicates how symmetrical a range of numbers is. In other words, do they tend to cluster into a smooth bell curve shape in the middle of the graph, or do they skew to the left or right?

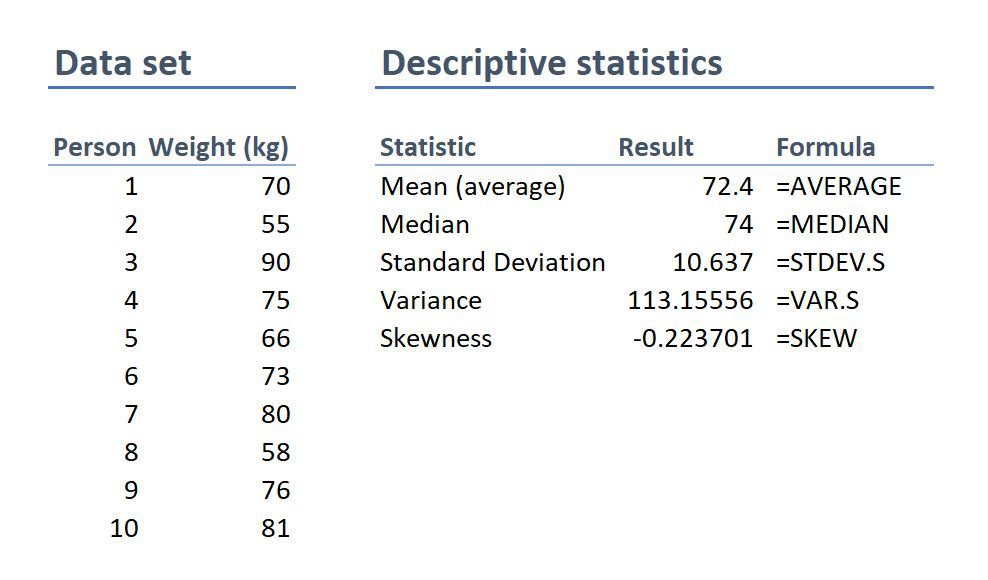

Feeling a bit confused? Let’s look at a practical example using a small data set.

On the left-hand side is the data set. This details the bodyweight of a sample of 10 people. On the right-hand side, we have the descriptive statistics. Let’s take a look at each of them.

First, we can see that the mean weight is 72.4 kilograms. In other words, the average weight across the sample is 72.4 kilograms. Straightforward.

Next, we can see that the median is very similar to the mean (the average). This suggests that this data set has a reasonably symmetrical distribution (in other words, a relatively smooth, centred distribution of weights, clustered towards the centre).

In terms of the mode, there is no mode in this data set. This is because each number is present only once and so there cannot be a “most common number”. If there were two people who were both 65 kilograms, for example, then the mode would be 65.

Next up is the standard deviation. A value of 10.6 indicates that there’s quite a wide spread of numbers. We can see this quite easily by looking at the numbers themselves, which range from 55 to 90 – quite a stretch either side of the mean of 72.4.

And lastly, the skewness of -0.2 tells us that the data is very slightly negatively skewed. This makes sense since the mean and the median are slightly different.

As you can see, these descriptive statistics give us some useful insight into the data set. Of course, this is a very small data set (only 10 records), so we can’t read into these statistics too much. Also, keep in mind that this is not a list of all possible descriptive statistics – just the most common ones.
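If you want to try this yourself, here’s a rough sketch in Python. Please note that the ten weights below are made up for illustration – they are not the data set from the figure – although they were chosen to share its mean of 72.4 and its range of 55 to 90. The skewness function uses the bias-adjusted formula that spreadsheet tools commonly report, which is an assumption about how the figure’s value was calculated.

```python
from statistics import mean, median, multimode, stdev


def sample_skewness(values):
    """Bias-adjusted sample skewness (adjusted Fisher-Pearson coefficient)."""
    n = len(values)
    centre = mean(values)
    spread = stdev(values)
    cubed = sum(((v - centre) / spread) ** 3 for v in values)
    return n / ((n - 1) * (n - 2)) * cubed


# Hypothetical bodyweights (kg) for a sample of 10 people.
weights_kg = [55, 61, 65, 68, 71, 74, 77, 80, 83, 90]

mean_weight = mean(weights_kg)
median_weight = median(weights_kg)
modes = multimode(weights_kg)
has_mode = len(modes) < len(set(weights_kg))  # False when every value is unique
standard_deviation = stdev(weights_kg)
skewness = sample_skewness(weights_kg)

print(f"Mean:               {mean_weight:.1f}")
print(f"Median:             {median_weight:.1f}")
print(f"Mode:               {modes if has_mode else 'no mode'}")
print(f"Standard deviation: {standard_deviation:.1f}")
print(f"Skewness:           {skewness:.2f}")
```

Because the values are invented, the skewness will not match the figure, but the interpretation works the same way: a value close to zero points to a fairly symmetrical distribution.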

But why do all of these numbers matter?

While these descriptive statistics are all fairly basic, they’re important for a few reasons:

- Firstly, they help you get both a macro and micro-level view of your data. In other words, they help you understand both the big picture and the finer details.

- Secondly, they help you spot potential errors in the data – for example, if an average is way higher than you’d expect, or responses to a question are highly varied, this can act as a warning sign that you need to double-check the data.

- And lastly, these descriptive statistics help inform which inferential statistical techniques you can use, as those techniques depend on the skewness (in other words, the symmetry and normality) of the data.

Simply put, descriptive statistics are really important, even though the statistical techniques used are fairly basic. All too often at Grad Coach, we see students skimming over the descriptives in their eagerness to get to the more exciting inferential methods, and then landing up with some very flawed results.

Don’t be a sucker – give your descriptive statistics the love and attention they deserve!

Branch 2: Inferential Statistics

As I mentioned, while descriptive statistics are all about the details of your specific data set – your sample – inferential statistics aim to make inferences about the population. In other words, you’ll use inferential statistics to make predictions about what you’d expect to find in the full population.

What kind of predictions, you ask? Well, there are two common types of predictions that researchers try to make using inferential stats:

- Firstly, predictions about differences between groups – for example, height differences between children grouped by their favourite meal or gender.

- And secondly, relationships between variables – for example, the relationship between body weight and the number of hours a week a person does yoga.

In other words, inferential statistics (when done correctly) allow you to connect the dots and make predictions about what you expect to see in the real-world population, based on what you observe in your sample data. For this reason, inferential statistics are used for hypothesis testing – in other words, to test hypotheses that predict changes or differences.

Of course, when you’re working with inferential statistics, the composition of your sample is really important. In other words, if your sample doesn’t accurately represent the population you’re researching, then your findings won’t necessarily be very useful.

For example, if your population of interest is a mix of 50% male and 50% female, but your sample is 80% male, you can’t make inferences about the population based on your sample, since it’s not representative. This area of statistics is called sampling, but we won’t go down that rabbit hole here (it’s a deep one!) – we’ll save that for another post.
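To put numbers on that example, here's a tiny Python sketch that compares the sample's make-up to the population's. The shares come from the example above, while `representation_gaps` is a hypothetical helper name made up for illustration. It only shows how far off the sample is; it isn't a formal test of representativeness.

```python
# Shares taken from the 50/50 population and 80/20 sample example.
population_share = {"male": 0.50, "female": 0.50}
sample_share = {"male": 0.80, "female": 0.20}


def representation_gaps(sample, population):
    """Return sample share minus population share for each group."""
    return {
        group: round(sample[group] - population[group], 4)
        for group in population
    }


gaps = representation_gaps(sample_share, population_share)
print(gaps)
```

A positive gap means the group is over-represented in the sample, and a negative gap means it is under-represented.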

What statistics are usually used in this branch?

There are many, many different statistical analysis methods within the inferential branch and it’d be impossible for us to discuss them all here. So we’ll just take a look at some of the most common inferential statistical methods so that you have a solid starting point.

First up are t-tests. T-tests compare the means (the averages) of two groups of data to assess whether they’re statistically significantly different. In other words, is the difference between the two means larger than you’d expect from chance alone?

This type of testing is very useful for understanding just how similar or different two groups of data are. For example, you might want to compare the mean blood pressure between two groups of people – one that has taken a new medication and one that hasn’t – to assess whether they are significantly different.
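Here's a rough Python sketch of the calculation behind a t-test, using only the standard library. A few assumptions to flag: the blood pressure readings are made up for illustration, `welch_t` is a hypothetical helper name, and I've used Welch's version of the t-test (which doesn't assume equal variances) as one reasonable choice among several. The sketch stops at the t statistic and degrees of freedom; in practice, a stats package would turn those into a p-value for you.

```python
import math
import statistics

# Hypothetical systolic blood pressure readings (mmHg), made up for illustration.
medication = [118, 122, 125, 119, 121, 117, 124, 120]
no_medication = [130, 128, 135, 127, 133, 129, 131, 126]


def welch_t(group_a, group_b):
    """Return Welch's t statistic and its approximate degrees of freedom."""
    mean_a, mean_b = statistics.mean(group_a), statistics.mean(group_b)
    # Squared standard error contributed by each group.
    se_a = statistics.variance(group_a) / len(group_a)
    se_b = statistics.variance(group_b) / len(group_b)
    t = (mean_a - mean_b) / math.sqrt(se_a + se_b)
    # Welch-Satterthwaite approximation for the degrees of freedom.
    df = (se_a + se_b) ** 2 / (
        se_a ** 2 / (len(group_a) - 1) + se_b ** 2 / (len(group_b) - 1)
    )
    return t, df


t_stat, df = welch_t(medication, no_medication)
print(t_stat, df)
```

The further the t statistic is from zero, the bigger the gap between the two means relative to the noise in the data.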

Kicking things up a level, we have ANOVA, which stands for “analysis of variance”. This test is similar to a t-test in that it compares the means of various groups, but ANOVA allows you to analyse multiple groups, not just two groups. So it’s basically a t-test on steroids…
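For the curious, here's a minimal Python sketch of the F statistic that sits at the heart of a one-way ANOVA. The three groups of scores are made up for illustration and `one_way_anova_f` is a hypothetical helper name. As with the t-test, a stats package would normally convert the F statistic into a p-value, which this sketch doesn't attempt.

```python
import statistics


def one_way_anova_f(*groups):
    """Return the F statistic for a one-way ANOVA across the given groups."""
    all_values = [v for group in groups for v in group]
    grand_mean = statistics.mean(all_values)
    k, n = len(groups), len(all_values)
    # Variation of the group means around the grand mean.
    ss_between = sum(
        len(g) * (statistics.mean(g) - grand_mean) ** 2 for g in groups
    )
    # Variation of individual values around their own group mean.
    ss_within = sum((v - statistics.mean(g)) ** 2 for g in groups for v in g)
    return (ss_between / (k - 1)) / (ss_within / (n - k))


# Hypothetical scores for three groups, made up for illustration.
f_stat = one_way_anova_f([1, 2, 3], [2, 3, 4], [3, 4, 5])
print(f_stat)
```

A larger F value suggests that the differences between the group means are big compared to the variation within each group.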

Next, we have correlation analysis. This type of analysis assesses the relationship between two variables. In other words, if one variable increases, does the other variable also increase, decrease or stay the same? For example, if the average temperature goes up, do average ice cream sales increase too? We’d expect some sort of relationship between these two variables intuitively, but correlation analysis allows us to measure that relationship scientifically.
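To make that a little more concrete, here's a small Python sketch that calculates Pearson's correlation coefficient, one common way of measuring a linear relationship. The temperature and sales figures are made up for illustration and `pearson_r` is a hypothetical helper name.

```python
import math


def pearson_r(xs, ys):
    """Return Pearson's correlation coefficient (between -1 and 1)."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    # How much the two variables move together around their means.
    co_movement = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = math.sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = math.sqrt(sum((y - mean_y) ** 2 for y in ys))
    return co_movement / (spread_x * spread_y)


# Hypothetical daily temperatures (degrees Celsius) and ice cream sales.
temperature = [18, 21, 24, 27, 30, 33]
ice_cream_sales = [120, 135, 160, 170, 200, 215]

r = pearson_r(temperature, ice_cream_sales)
print(round(r, 3))
```

A value close to 1 means the two variables rise together, a value close to -1 means one falls as the other rises, and a value near 0 means there's little linear relationship.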

Lastly, we have regression analysis – this is quite similar to correlation in that it assesses the relationship between variables, but it goes a step further by modelling how one or more variables predict another, not just whether they move together. Regression is often used to explore cause and effect, but on its own it can’t prove that one variable actually causes the other to move; they may just happen to move together thanks to another force. Just because two variables correlate doesn’t necessarily mean that one causes the other.
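Here's a minimal Python sketch of the simplest kind of regression, a straight line fitted by least squares. The yoga hours and body weights are made up for illustration and `fit_line` is a hypothetical helper name. Real projects would typically use a stats package, which also reports how reliable the fitted line is.

```python
def fit_line(xs, ys):
    """Least-squares fit of y = intercept + slope * x."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    slope = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys)) / sum(
        (x - mean_x) ** 2 for x in xs
    )
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Hypothetical weekly yoga hours and body weight (kg), made up for illustration.
yoga_hours = [0, 1, 2, 3, 4, 5]
body_weight = [82, 80, 79, 76, 75, 72]

slope, intercept = fit_line(yoga_hours, body_weight)
predicted = intercept + slope * 6  # predicted weight for 6 hours a week
print(slope, intercept, predicted)
```

The slope tells you how much the outcome is expected to change for each one-unit increase in the predictor, based on the sample.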

Stats overload…

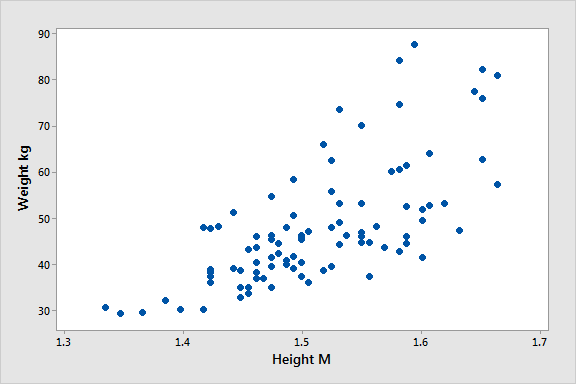

I hear you. To make this all a little more tangible, let’s take a look at an example of a correlation in action.

Here’s a scatter plot demonstrating the correlation (relationship) between weight and height. Intuitively, we’d expect there to be some relationship between these two variables, which is what we see in this scatter plot. In other words, the results tend to cluster together in a diagonal line from bottom left to top right.

As I mentioned, these are just a handful of inferential techniques – there are many, many more. Importantly, each statistical method has its own assumptions and limitations.

For example, some methods (known as parametric methods) assume normally distributed data, while other methods (non-parametric methods) are designed specifically for data that doesn’t meet that assumption. And that’s exactly why descriptive statistics are so important – they’re the first step to knowing which inferential techniques you can and can’t use.

How to choose the right analysis method

To choose the right statistical methods, you need to think about two important factors:

- The type of quantitative data you have (specifically, level of measurement and the shape of the data), and

- Your research questions and hypotheses

Let’s take a closer look at each of these.

Factor 1: Data type

The first thing you need to consider is the type of data you’ve collected (or the type of data you will collect). By data types, I’m referring to the four levels of measurement – namely, nominal (unordered categories), ordinal (ordered categories), interval (numbers without a true zero) and ratio (numbers with a true zero).

Why does this matter?

Well, because different statistical methods and techniques require different types of data. This is one of the “assumptions” I mentioned earlier – every method has its assumptions regarding the type of data.

For example, some techniques work with categorical data (for example, yes/no type questions, or gender or ethnicity), while others work with continuous numerical data (for example, age, weight or income) – and, of course, some work with multiple data types.

If you try to use a statistical method that doesn’t support the data type you have, your results will be largely meaningless. So, make sure that you have a clear understanding of what types of data you’ve collected (or will collect). Once you have this, you can then check which statistical methods support your data types.

If you haven’t collected your data yet, you can work in reverse and look at which statistical method would give you the most useful insights, and then design your data collection strategy to collect the correct data types.

Another important factor to consider is the shape of your data. Specifically, does it have a normal distribution (in other words, is it a bell-shaped curve, centred in the middle) or is it very skewed to the left or the right? Again, different statistical techniques work for different shapes of data – some are designed for symmetrical data while others are designed for skewed data.

This is another reminder of why descriptive statistics are so important – they tell you all about the shape of your data.

Factor 2: Your research questions

The next thing you need to consider is your specific research questions, as well as your hypotheses (if you have some). The nature of your research questions and research hypotheses will heavily influence which statistical methods and techniques you should use.

If you’re just interested in understanding the attributes of your sample (as opposed to the entire population), then descriptive statistics are probably all you need. For example, you might just want to assess the means (averages) and medians (centre points) of variables in a group of people.

On the other hand, if you aim to understand differences between groups or relationships between variables and to infer or predict outcomes in the population, then you’ll likely need both descriptive statistics and inferential statistics.

So, it’s really important to get very clear about your research aims and research questions, as well as your hypotheses – before you start looking at which statistical techniques to use.

Never shoehorn a specific statistical technique into your research just because you like it or have some experience with it. Your choice of methods must align with all the factors we’ve covered here.
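As a rough way of pulling this together, here's a small Python sketch that maps a research goal to the methods named in this post. The goal labels and the `suggest_methods` helper are hypothetical, made up for illustration. It's a starting point only: it ignores data type and shape, so you'd still need to check each method's assumptions against your data.

```python
def suggest_methods(goal, group_count=None):
    """Map a research goal to the methods named in this post (rough guide only)."""
    if goal == "describe_sample":
        return ["descriptive statistics"]
    if goal == "compare_groups":
        if group_count is None or group_count < 2:
            raise ValueError("Comparing groups needs at least two groups")
        # T-tests handle two groups; ANOVA handles more than two.
        test = "t-test" if group_count == 2 else "ANOVA"
        return ["descriptive statistics", test]
    if goal == "relate_variables":
        return ["descriptive statistics", "correlation", "regression"]
    raise ValueError(f"Unknown goal: {goal!r}")


print(suggest_methods("compare_groups", group_count=3))
```

Notice that descriptive statistics appear in every answer, since they're the first step no matter which inferential method you end up using.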

Time to recap…

You’re still with me? That’s impressive. We’ve covered a lot of ground here, so let’s recap on the key points:

- Quantitative data analysis is all about analysing number-based data (which includes categorical and numerical data) using various statistical techniques.

- The two main branches of statistics are descriptive statistics and inferential statistics. Descriptives describe your sample, whereas inferentials make predictions about what you’ll find in the population.

- Common descriptive statistical methods include mean (average), median, standard deviation and skewness.

- Common inferential statistical methods include t-tests, ANOVA, correlation and regression analysis.

- To choose the right statistical methods and techniques, you need to consider the type of data you’re working with, as well as your research questions and hypotheses.

