Exploratory Research – Types, Methods and Examples

Exploratory Research

Definition:

Exploratory research is a type of research design that is used to investigate a research question when the researcher has limited knowledge or understanding of the topic or phenomenon under study.

The primary objective of exploratory research is to gain insights and gather preliminary information that can help the researcher better define the research problem and develop hypotheses or research questions for further investigation.

Exploratory Research Methods

Common exploratory research methods include:

Literature Review

This involves conducting a comprehensive review of existing published research, scholarly articles, and other relevant literature on the research topic or problem. It helps to identify the gaps in the existing knowledge and to develop new research questions or hypotheses.

Pilot Study

A pilot study is a small-scale preliminary study that helps the researcher to test research procedures, instruments, and data collection methods. This type of research can be useful in identifying any potential problems or issues with the research design and refining the research procedures for a larger-scale study.

Case Study

A case study involves an in-depth analysis of a particular case or situation to gain insights into the underlying causes, processes, and dynamics of the issue under investigation. It can be used to develop a more comprehensive understanding of a complex problem, and to identify potential research questions or hypotheses.

Focus Groups

Focus groups involve a group discussion that is conducted to gather opinions, attitudes, and perceptions from a small group of individuals about a particular topic. This type of research can be useful in exploring the range of opinions and attitudes towards a topic, identifying common themes or patterns, and generating ideas for further research.

Expert Opinion

This involves consulting with experts or professionals in the field to gain their insights, expertise, and opinions on the research topic. This type of research can be useful in identifying the key issues and concerns related to the topic, and in generating ideas for further research.

Observational Research

Observational research involves gathering data by observing people, events, or phenomena in their natural settings to gain insights into behavior and interactions. This type of research can be useful in identifying patterns of behavior and interactions, and in generating hypotheses or research questions for further investigation.

Open-ended Surveys

Open-ended surveys allow respondents to provide detailed and unrestricted responses to questions, providing valuable insights into their attitudes, opinions, and perceptions. This type of research can be useful in identifying common themes or patterns, and in generating ideas for further research.

Data Analysis Methods

The following data analysis methods are commonly used in exploratory research:

Content Analysis

This method involves analyzing text or other forms of data to identify common themes, patterns, and trends. It can be useful in identifying patterns in the data and developing hypotheses or research questions. For example, if the researcher is analyzing social media posts related to a particular topic, content analysis can help identify the most frequently used words, hashtags, and topics.
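
The social media example can be sketched in a few lines of Python. This is a minimal illustration rather than a full content analysis workflow: the sample posts, the short stop-word list, and the function name `count_terms` are all assumptions made for the example.

```python
import re
from collections import Counter

# Hypothetical sample posts; in practice these would come from collected data.
POSTS = [
    "Remote work is here to stay #remotework #futureofwork",
    "Missing the office, but remote work saves my commute #remotework",
    "Hybrid schedules might be the best of both worlds #hybrid #futureofwork",
]

# A tiny, illustrative stop-word list; real analyses usually use a larger one.
STOP_WORDS = {"is", "to", "the", "but", "my", "of", "be", "might", "here", "both"}


def count_terms(posts):
    """Return (word_counts, hashtag_counts) for a list of post strings."""
    words = Counter()
    hashtags = Counter()
    for post in posts:
        text = post.lower()
        # Hashtags are tallied separately from ordinary words.
        hashtags.update(re.findall(r"#\w+", text))
        # Remove hashtags, then split the remaining text into word tokens.
        plain = re.sub(r"#\w+", " ", text)
        tokens = re.findall(r"[a-z']+", plain)
        words.update(t for t in tokens if t not in STOP_WORDS)
    return words, hashtags


if __name__ == "__main__":
    word_counts, hashtag_counts = count_terms(POSTS)
    print(word_counts.most_common(3))
    print(hashtag_counts.most_common(3))
```

Frequency counts like these are only a starting point. The researcher still has to interpret what the recurring words and hashtags mean in context.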

Thematic Analysis

This method involves identifying and analyzing patterns or themes in qualitative data such as interviews or focus groups. The researcher codes the data, looks for ideas that recur across participants, and groups them into a small number of themes, which can then inform hypotheses or research questions. For example, a thematic analysis of interviews with healthcare professionals about patient care may identify themes related to communication, patient satisfaction, and quality of care.
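
Assigning codes to excerpts is interpretive work done by the researcher, but the bookkeeping that follows can be sketched in code. The example below assumes a hypothetical codebook and a handful of already-coded excerpts from the healthcare scenario. The names `CODEBOOK`, `CODED_EXCERPTS`, and `group_by_theme` are illustrative only.

```python
from collections import defaultdict

# Hypothetical codebook: each code (assigned by the researcher) maps to a theme.
CODEBOOK = {
    "unclear_handover": "communication",
    "language_barrier": "communication",
    "long_wait": "patient satisfaction",
    "staff_shortage": "quality of care",
}

# Hypothetical coded excerpts: (interview id, code assigned by the researcher).
CODED_EXCERPTS = [
    ("interview_1", "unclear_handover"),
    ("interview_1", "long_wait"),
    ("interview_2", "language_barrier"),
    ("interview_2", "staff_shortage"),
    ("interview_3", "unclear_handover"),
]


def group_by_theme(coded_excerpts, codebook):
    """Map each theme to the set of interviews in which it appears."""
    themes = defaultdict(set)
    for interview_id, code in coded_excerpts:
        themes[codebook[code]].add(interview_id)
    return dict(themes)


if __name__ == "__main__":
    for theme, interviews in group_by_theme(CODED_EXCERPTS, CODEBOOK).items():
        print(f"{theme}: appears in {len(interviews)} interview(s)")
```

Seeing how many interviews touch each theme helps show which themes are widely shared and which come from a single participant.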

Cluster Analysis

This method involves grouping data points into clusters based on their similarities or differences. It can be useful in identifying patterns in large datasets and grouping similar data points together. For example, if the researcher is analyzing customer data to identify different customer segments, cluster analysis can be used to group similar customers together based on their demographics, purchasing behavior, or preferences.
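
One common clustering technique is k-means, which the article does not name specifically. It is used here only as an illustration. The sketch below is a plain-Python version that assumes hypothetical customer data (age and average monthly spend) and caller-supplied starting centroids.

```python
import math

# Hypothetical customers: (age, average monthly spend).
CUSTOMERS = [
    (22, 40.0), (25, 55.0), (24, 45.0),
    (51, 210.0), (48, 190.0), (55, 230.0),
]


def k_means(points, centroids, iterations=10):
    """Plain k-means: assign each point to its nearest centroid, then re-average."""
    centroids = list(centroids)
    labels = [0] * len(points)
    for _ in range(iterations):
        # Assignment step: index of the closest centroid for every point.
        labels = [
            min(range(len(centroids)), key=lambda c: math.dist(p, centroids[c]))
            for p in points
        ]
        # Update step: move each centroid to the mean of its members.
        for c in range(len(centroids)):
            members = [p for p, label in zip(points, labels) if label == c]
            if members:  # keep the old centroid if a cluster ends up empty
                centroids[c] = tuple(sum(dim) / len(members) for dim in zip(*members))
    return labels, centroids


if __name__ == "__main__":
    # Start from two of the data points as initial centroids (an arbitrary choice).
    labels, centroids = k_means(CUSTOMERS, [CUSTOMERS[0], CUSTOMERS[3]])
    print(labels)
    print(centroids)
```

The result depends on the starting centroids and on how the features are scaled. In practice, variables such as age and spend are usually standardized first so that neither dominates the distance calculation.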

Network Analysis

This method involves analyzing the relationships and connections between data points. It can be useful in identifying patterns in complex datasets with many interrelated variables. For example, if the researcher is analyzing social network data, network analysis can help identify the most influential users and their connections to other users.
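
A simple way to approximate "most influential users" is degree centrality, which counts how many direct connections each user has. The sketch below assumes a small hypothetical edge list. The user names and the function `degree_centrality` are made up for illustration, and other centrality measures may suit a given study better.

```python
from collections import defaultdict

# Hypothetical undirected connections between users.
EDGES = [
    ("ana", "ben"),
    ("ana", "cara"),
    ("ana", "dev"),
    ("ben", "cara"),
    ("dev", "eli"),
]


def degree_centrality(edges):
    """Return each node's degree divided by the maximum possible degree (n - 1)."""
    neighbors = defaultdict(set)
    for a, b in edges:
        neighbors[a].add(b)
        neighbors[b].add(a)
    n = len(neighbors)
    if n < 2:
        return {}
    return {node: len(adj) / (n - 1) for node, adj in neighbors.items()}


if __name__ == "__main__":
    scores = degree_centrality(EDGES)
    # List users from most to least connected.
    for user, score in sorted(scores.items(), key=lambda item: -item[1]):
        print(user, round(score, 2))
```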

Grounded Theory

This method involves developing a theory or explanation that is grounded in the data collected during the exploratory research process, rather than relying on pre-existing theories or assumptions. It is helpful when existing theories do not adequately explain the phenomenon and a new, data-supported explanation is needed.

Applications of Exploratory Research

Exploratory research has many practical applications across various fields. Here are a few examples:

- Marketing Research: In marketing research, exploratory research can be used to identify consumer needs, preferences, and behavior. It can also help businesses understand market trends and identify new market opportunities.

- Product Development: In product development, exploratory research can be used to identify customer needs and preferences, as well as potential design flaws or issues. This can help companies improve their product offerings and develop new products that better meet customer needs.

- Social Science Research: In social science research, exploratory research can be used to identify new areas of study, as well as develop new theories and hypotheses. It can also be used to identify potential research methods and approaches.

- Healthcare Research: In healthcare research, exploratory research can be used to identify new treatments, therapies, and interventions. It can also be used to identify potential risk factors or causes of health problems.

- Education Research: In education research, exploratory research can be used to identify new teaching methods and approaches, as well as identify potential areas of study for further research. It can also be used to identify potential barriers to learning or achievement.

Examples of Exploratory Research

Here are some more examples of exploratory research from different fields:

- Social Science: A researcher wants to study the experience of being a refugee, but there is limited existing research on this topic. The researcher conducts in-depth interviews with refugees to better understand their experiences, challenges, and needs.

- Healthcare: A medical researcher wants to identify potential risk factors for a rare disease, but there is limited information available. The researcher reviews medical records and interviews patients and their families to identify potential risk factors.

- Education: A teacher wants to develop a new teaching method to improve student engagement, but there is limited information on effective teaching methods. The teacher reviews existing literature and interviews other teachers to identify potential approaches.

- Technology: A software developer wants to develop a new app, but is unsure about the features that users would find most useful. The developer runs surveys and focus groups to identify user preferences and needs.

- Environmental Science: An environmental scientist wants to study the impact of a new industrial plant on the surrounding environment, but there is limited existing research. The scientist collects and analyzes soil and water samples, and interviews residents to better understand the impact of the plant on the environment and the community.

How to Conduct Exploratory Research

Here are the general steps to conduct exploratory research:

- Define the research problem: Identify the research problem or question that you want to explore. Be clear about the objective and scope of the research.

- Review existing literature: Conduct a review of existing literature and research on the topic to identify what is already known and where gaps in knowledge exist.

- Determine the research design: Decide on the appropriate research design, which will depend on the nature of the research problem and the available resources. Common exploratory research designs include case studies, focus groups, interviews, and surveys.

- Collect data: Collect data using the chosen research design. This may involve conducting interviews, surveys, or observations, or collecting data from existing sources such as archives or databases.

- Analyze data: Analyze the data collected using appropriate qualitative or quantitative techniques. This may include coding and categorizing qualitative data, or running descriptive statistics on quantitative data.

- Interpret and report findings: Interpret the findings of the analysis and report them in a way that is clear and understandable. The report should summarize the findings, discuss their implications, and make recommendations for further research or action.

- Iterate: If necessary, refine the research question and repeat the process of data collection and analysis to further explore the topic.
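
For the "Analyze data" step, running descriptive statistics on quantitative data can be as simple as the sketch below. It uses Python's standard `statistics` module. The ratings and the helper name `describe` are hypothetical.

```python
import statistics

# Hypothetical 1-5 satisfaction ratings from a small pilot survey.
RATINGS = [4, 5, 3, 4, 2, 5, 4]


def describe(values):
    """Return basic descriptive statistics for a list of numbers."""
    return {
        "n": len(values),
        "mean": statistics.mean(values),
        "median": statistics.median(values),
        "stdev": statistics.stdev(values),  # sample standard deviation
        "min": min(values),
        "max": max(values),
    }


if __name__ == "__main__":
    for name, value in describe(RATINGS).items():
        print(f"{name}: {value}")
```

With samples this small, the summary describes the pilot data only and should not be generalized to a wider population.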

When to Use Exploratory Research

Exploratory research is appropriate in situations where there is limited existing knowledge or understanding of a topic, and where the goal is to generate insights and ideas that can guide further research. Here are some specific situations where exploratory research may be particularly useful:

- New product development: When developing a new product, exploratory research can be used to identify consumer needs and preferences, as well as potential design flaws or issues.

- Emerging technologies: When exploring emerging technologies, exploratory research can be used to identify potential uses and applications, as well as potential challenges or limitations.

- Developing research hypotheses: When developing research hypotheses, exploratory research can be used to identify potential relationships or patterns that can be further explored through more rigorous research methods.

- Understanding complex phenomena: When trying to understand complex phenomena, such as human behavior or societal trends, exploratory research can be used to identify underlying patterns or factors that may be influencing the phenomenon.

- Developing research methods: When developing new research methods, exploratory research can be used to identify potential issues or limitations with existing methods, and to develop new methods that better capture the phenomena of interest.

Purpose of Exploratory Research

The purpose of exploratory research is to gain insights and understanding of a research problem or question where there is limited existing knowledge or understanding. The objective is to explore and generate ideas that can guide further research, rather than to test specific hypotheses or make definitive conclusions.

Exploratory research can be used to:

- Identify new research questions: Exploratory research can help to identify new research questions and areas of inquiry, by providing initial insights and understanding of a topic.

- Develop hypotheses: Exploratory research can help to develop hypotheses and testable propositions that can be further explored through more rigorous research methods.

- Identify patterns and trends: Exploratory research can help to identify patterns and trends in data, which can be used to guide further research or decision-making.

- Understand complex phenomena: Exploratory research can help to provide a deeper understanding of complex phenomena, such as human behavior or societal trends, by identifying underlying patterns or factors that may be influencing the phenomena.

- Generate ideas: Exploratory research can help to generate new ideas and insights that can be used to guide further research, innovation, or decision-making.

Characteristics of Exploratory Research

The following are the main characteristics of exploratory research:

- Flexible and open-ended: Exploratory research is characterized by its flexible and open-ended nature, which allows researchers to explore a wide range of ideas and perspectives without being constrained by specific research questions or hypotheses.

- Qualitative in nature: Exploratory research typically relies on qualitative methods, such as in-depth interviews, focus groups, or observation, to gather rich and detailed data on the research problem.

- Limited scope: Exploratory research is generally limited in scope, focusing on a specific research problem or question, rather than attempting to provide a comprehensive analysis of a broader phenomenon.

- Preliminary in nature: Exploratory research is preliminary in nature, providing initial insights and understanding of a research problem, rather than testing specific hypotheses or making definitive conclusions.

- Iterative process: Exploratory research is often an iterative process, where the research design and methods may be refined and adjusted as new insights and understanding are gained.

- Inductive approach: Exploratory research typically takes an inductive approach to data analysis, seeking to identify patterns and relationships in the data that can guide further research or hypothesis development.

Advantages of Exploratory Research

The following are some advantages of exploratory research:

- Provides initial insights: Exploratory research is useful for providing initial insights and understanding of a research problem or question where there is limited existing knowledge or understanding. It can help to identify patterns, relationships, and potential hypotheses that can guide further research.

- Flexible and adaptable: Exploratory research is flexible and adaptable, allowing researchers to adjust their methods and approach as they gain new insights and understanding of the research problem.

- Qualitative methods: Exploratory research typically relies on qualitative methods, such as in-depth interviews, focus groups, and observation, which can provide rich and detailed data that is useful for gaining insights into complex phenomena.

- Cost-effective: Exploratory research is often less costly than other research methods, such as large-scale surveys or experiments. It is typically conducted on a smaller scale, using fewer resources and participants.

- Useful for hypothesis generation: Exploratory research can be useful for generating hypotheses and testable propositions that can be further explored through more rigorous research methods.

- Provides a foundation for further research: Exploratory research can provide a foundation for further research by identifying potential research questions and areas of inquiry, as well as providing initial insights and understanding of the research problem.

Limitations of Exploratory Research

The following are some limitations of exploratory research:

- Limited generalizability: Exploratory research is typically conducted on a small scale and uses non-random sampling techniques, which limits the generalizability of the findings to a broader population.

- Subjective nature: Exploratory research relies on qualitative methods and is therefore subject to researcher bias and interpretation. The findings may be influenced by the researcher’s own perceptions, beliefs, and assumptions.

- Lack of rigor: Exploratory research is often less rigorous than other research methods, such as experimental research, which can limit the validity and reliability of the findings.

- Limited ability to test hypotheses: Exploratory research is not designed to test specific hypotheses, but rather to generate initial insights and understanding of a research problem. It may not be suitable for testing well-defined research questions or hypotheses.

- Time-consuming: Exploratory research can be time-consuming and resource-intensive, particularly if the researcher needs to gather data from multiple sources or conduct multiple rounds of data collection.

- Difficulty in interpretation: The open-ended nature of exploratory research can make it difficult to interpret the findings, particularly if the researcher is unable to identify clear patterns or relationships in the data.

About the author

Muhammad Hassan

Researcher, Academic Writer, Web developer

You may also like

Questionnaire – Definition, Types, and Examples

Case Study – Methods, Examples and Guide

Observational Research – Methods and Guide

Quantitative Research – Methods, Types and...

Qualitative Research Methods

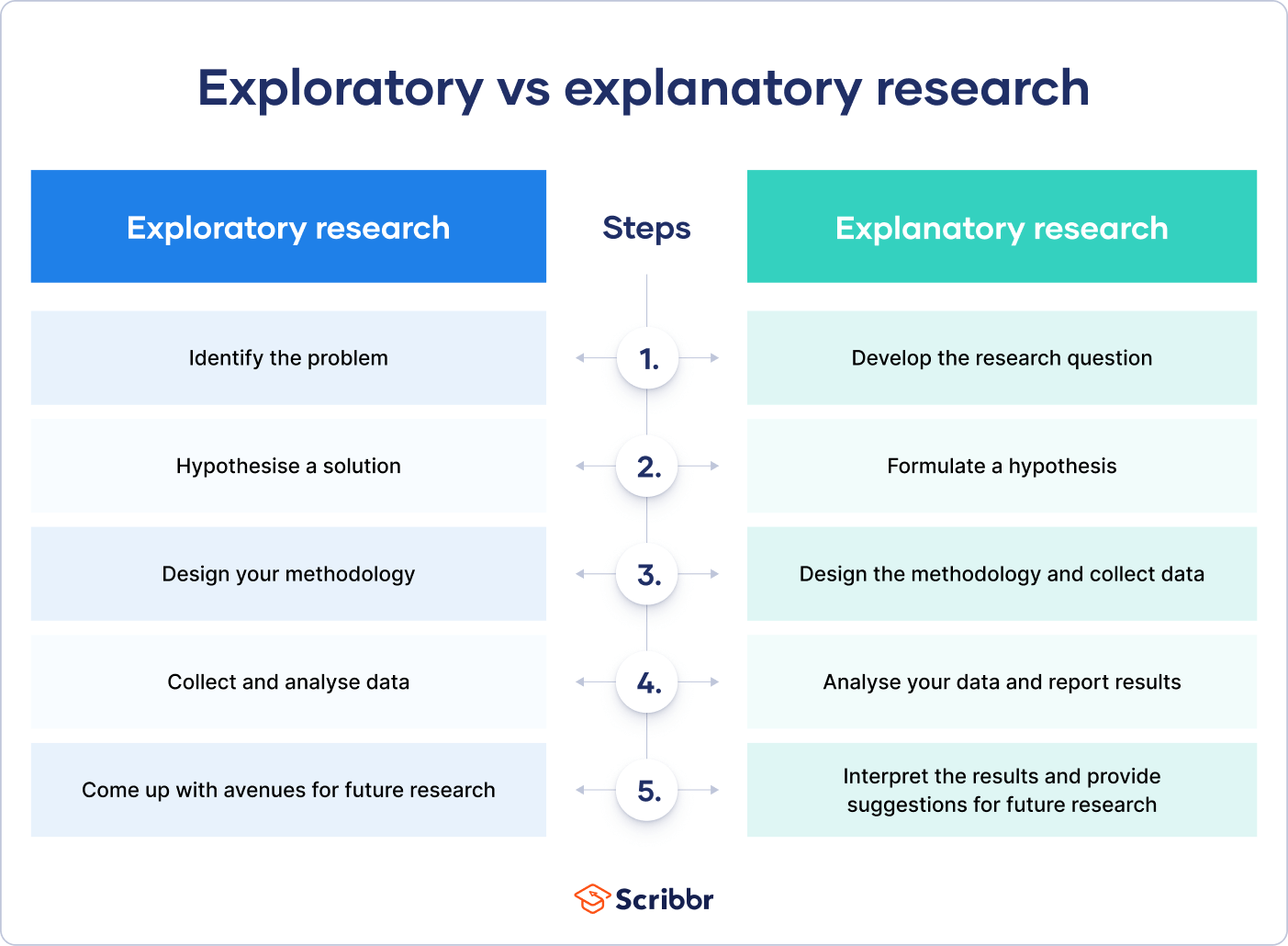

Explanatory Research – Types, Methods, Guide

Exploratory Research

Exploratory research, as the name implies, intends merely to explore the research questions and does not intend to offer final and conclusive solutions to existing problems. This type of research is usually conducted to study a problem that has not been clearly defined yet. Conducted in order to determine the nature of the problem, exploratory research is not intended to provide conclusive evidence, but helps us to have a better understanding of the problem.

When conducting exploratory research, the researcher ought to be willing to change direction as new data and new insights emerge. [1] Accordingly, exploratory studies are often conducted using interpretive research methods, and they answer questions such as what, why and how.

Exploratory research design does not aim to provide the final and conclusive answers to the research questions, but merely explores the research topic with varying levels of depth. It has been noted that “exploratory research is the initial research, which forms the basis of more conclusive research. It can even help in determining the research design, sampling methodology and data collection method” [2] . Exploratory research “tends to tackle new problems on which little or no previous research has been done” [3] .

Unstructured interviews are the most popular primary data collection method in exploratory studies. Additionally, surveys, focus groups and observation methods can be used to collect primary data for this type of study.

Examples of Exploratory Research Design

The following are some examples of studies with an exploratory research design in business studies:

- A study into the role of social networking sites as an effective marketing communication channel

- An investigation into ways of improving the quality of customer service within the hospitality sector in London

- An assessment of the role of corporate social responsibility in consumer behaviour in the pharmaceutical industry in the USA

Differences between Exploratory and Conclusive Research

Sandhusen (2000) [4] draws the difference between exploratory and conclusive research as follows: exploratory studies result in a range of causes and alternative options for solving a specific problem, whereas conclusive studies identify the final information that is the only solution to an existing research problem.

In other words, exploratory research design simply explores the research questions, leaving room for further research, whereas conclusive research design aims to provide final findings for the research.

Moreover, it has been stated that “an exploratory study may not have as rigorous as methodology as it is used in conclusive studies, and sample sizes may be smaller. But it helps to do the exploratory study as methodically as possible, if it is going to be used for major decisions about the way we are going to conduct our next study” [5] (Nargundkar, 2003, p.41).

Exploratory studies usually create scope for future research, and that future research may have a conclusive design. For example, ‘a study into the implications of the COVID-19 pandemic for the global economy’ is exploratory research. The COVID-19 pandemic is a recent phenomenon, and the study can generate initial knowledge about its economic implications.

A follow-up study building on the findings of this research, ‘a study into the effects of the COVID-19 pandemic on tourism revenues in Morocco’, is causal conclusive research. The second study can produce findings that are of practical use for decision making.

Advantages of Exploratory Research

- Lower costs of conducting the study

- Flexibility and adaptability to change

- Exploratory research is effective in laying the groundwork that will lead to future studies.

- Exploratory studies can potentially save time by determining at the earlier stages the types of research that are worth pursuing

Disadvantages of Exploratory Research

- Inconclusive nature of research findings

- Exploratory studies generate qualitative information, and the interpretation of this type of information is subject to bias

- These studies usually use a modest number of samples that may not adequately represent the target population. Accordingly, findings of exploratory research cannot be generalized to a wider population.

- Findings of such studies are not usually useful for decision making at a practical level.

John Dudovskiy

[1] Saunders, M., Lewis, P. & Thornhill, A. (2012) “Research Methods for Business Students” 6th edition, Pearson Education Limited

[2] Singh, K. (2007) “Quantitative Social Research Methods” SAGE Publications, p.64

[3] Brown, R.B. (2006) “Doing Your Dissertation in Business and Management: The Reality of Research and Writing” SAGE Publications, p.43

[4] Sandhusen, R.L. (2000) “Marketing” Barron’s

[5] Nargundkar, R. (2008) “Marketing Research: Text and Cases” 3rd edition, p.38

Qualitative Design Research Methods

- Michael Domínguez, San Diego State University

- https://doi.org/10.1093/acrefore/9780190264093.013.170

- Published online: 19 December 2017

Emerging in the learning sciences field in the early 1990s, qualitative design-based research (DBR) is a relatively new methodological approach to social science and education research. As its name implies, DBR is focused on the design of educational innovations, and the testing of these innovations in the complex and interconnected venue of naturalistic settings. As such, DBR is an explicitly interventionist approach to conducting research, situating the researcher as a part of the complex ecology in which learning and educational innovation takes place.

With this in mind, DBR is distinct from more traditional methodologies, including laboratory experiments, ethnographic research, and large-scale implementation. Rather, the goal of DBR is not to prove the merits of any particular intervention, or to reflect passively on a context in which learning occurs, but to examine the practical application of theories of learning themselves in specific, situated contexts. By designing purposeful, naturalistic, and sustainable educational ecologies, researchers can test, extend, or modify their theories and innovations based on their pragmatic viability. This process offers the prospect of generating theory-developing, contextualized knowledge claims that can complement the claims produced by other forms of research.

Because of this interventionist, naturalistic stance, DBR has also been the subject of ongoing debate concerning the rigor of its methodology. In many ways, these debates obscure the varied ways DBR has been practiced, the varied types of questions being asked, and the theoretical breadth of researchers who practice DBR. With this in mind, DBR research may involve a diverse range of methods as researchers from a variety of intellectual traditions within the learning sciences and education research design pragmatic innovations based on their theories of learning, and document these complex ecologies using the methodologies and tools most applicable to their questions, focuses, and academic communities.

DBR has gained increasing interest in recent years. While it remains a popular methodology for developmental and cognitive learning scientists seeking to explore theory in naturalistic settings, it has also grown in importance to cultural psychology and cultural studies researchers as a methodological approach that aligns in important ways with the participatory commitments of liberatory research. As such, internal tension within the DBR field has also emerged. Yet, though approaches vary, and have distinct genealogies and commitments, DBR might be seen as the broad methodological genre in which Change Laboratory, design-based implementation research (DBIR), social design-based experiments (SDBE), participatory design research (PDR), and research-practice partnerships might be categorized. These critically oriented iterations of DBR have important implications for educational research and educational innovation in historically marginalized settings and the Global South.

- design-based research

- learning sciences

- social-design experiment

- qualitative research

- research methods

Educational research, perhaps more than many other disciplines, is a situated field of study. Learning happens around us every day, at all times, in both formal and informal settings. Our worlds are replete with complex, dynamic, diverse communities, contexts, and institutions, many of which are actively seeking guidance and support in the endless quest for educational innovation. Educational researchers—as a source of potential expertise—are necessarily implicated in this complexity, linked to the communities and institutions through their very presence in spaces of learning, poised to contribute with possible solutions, yet often positioned as separate from the activities they observe, creating dilemmas of responsibility and engagement.

So what are educational scholars and researchers to do? These tensions invite a unique methodological challenge for the contextually invested researcher, begging them to not just produce knowledge about learning, but to participate in the ecology, collaborating on innovations in the complex contexts in which learning is taking place. In short, for many educational researchers, our backgrounds as educators, our connections to community partners, and our sociopolitical commitments to the process of educational innovation push us to ensure that our work is generative, and that our theories and ideas—our expertise—about learning and education are made pragmatic, actionable, and sustainable. We want to test what we know outside of laboratories, designing, supporting, and guiding educational innovation to see if our theories of learning are accurate, and useful to the challenges faced in schools and communities where learning is messy, collaborative, and contested. Through such a process, we learn, and can modify our theories to better serve the real needs of communities. It is from this impulse that qualitative design-based research (DBR) emerged as a new methodological paradigm for education research.

Qualitative design-based research will be examined, documenting its origins, the major tenets of the genre, implementation considerations, and methodological issues, as well as variance within the paradigm. As a relatively new methodology, much tension remains in what constitutes DBR, and what design should mean, and for whom. These tensions and questions, as well as broad perspectives and emergent iterations of the methodology, will be discussed, and considerations for researchers looking toward the future of this paradigm will be considered.

The Origins of Design-Based Research

Qualitative design-based research (DBR) first emerged in the learning sciences field among a group of scholars in the early 1990s, with the first articulation of DBR as a distinct methodological construct appearing in the work of Ann Brown (1992) and Allan Collins (1992). For learning scientists in the 1970s and 1980s, the traditional methodologies of laboratory experiments, ethnographies, and large-scale educational interventions were the only methods available. During these decades, a growing community of learning science and educational researchers (e.g., Bereiter & Scardamalia, 1989; Brown, Campione, Webber, & McGilley, 1992; Cobb & Steffe, 1983; Cole, 1995; Scardamalia & Bereiter, 1991; Schoenfeld, 1982, 1985; Scribner & Cole, 1978) interested in educational innovation and classroom interventions in situated contexts began to find the prevailing methodologies insufficient for the types of learning they wished to document, the roles they wished to play in research, and the kinds of knowledge claims they wished to explore. The laboratory, or laboratory-like settings, where research on learning was at the time happening, was divorced from the complexity of real life, and necessarily limiting. Alternatively, most ethnographic research, while more attuned to capturing these complexities and dynamics, regularly assumed a passive stance¹ and avoided interceding in the learning process, or allowing researchers to see what possibility for innovation existed from enacting nascent learning theories. Finally, large-scale interventions could test innovations in practice but lost sight of the nuance of development and implementation in local contexts (Brown, 1992; Collins, Joseph, & Bielaczyc, 2004).

Dissatisfied with these options, and recognizing that in order to study and understand learning in the messiness of socially, culturally, and historically situated settings, new methods were required, Brown (1992) proposed an alternative: Why not involve ourselves in the messiness of the process, taking an active, grounded role in disseminating our theories and expertise by becoming designers and implementers of educational innovations? Rather than observing from afar, DBR researchers could trace their own iterative processes of design, implementation, tinkering, redesign, and evaluation, as it unfolded in shared work with teachers, students, learners, and other partners in lived contexts. This premise, initially articulated as “design experiments” (Brown, 1992), would be variously discussed over the next decade as “design research” (Edelson, 2002), “developmental research” (Gravemeijer, 1994), and “design-based research” (Design-Based Research Collective, 2003), all of which reflect the original, interventionist, design-oriented concept. The latter term, “design-based research” (DBR), is used here, recognizing this as the prevailing terminology used to refer to this research approach at present.²

Regardless of the evolving moniker, the prospects of such a methodology were extremely attractive to researchers. Learning scientists acutely aware of various aspects of situated context, and interested in studying the applied outcomes of learning theories—a task of inquiry into situated learning for which canonical methods were rather insufficient—found DBR a welcome development (Bell, 2004 ). As Barab and Squire ( 2004 ) explain: “learning scientists . . . found that they must develop technological tools, curriculum, and especially theories that help them systematically understand and predict how learning occurs” (p. 2), and DBR methodologies allowed them to do this in proactive, hands-on ways. Thus, rather than emerging as a strict alternative to more traditional methodologies, DBR was proposed to fill a niche that other methodologies were ill-equipped to cover.

Effectively, while its development is indeed linked to an inherent critique of previous research paradigms, neither Brown nor Collins saw DBR in opposition to other forms of research. Rather, by providing a bridge from the laboratory to the real world, where learning theories and proposed innovations could interact and be implemented in the complexity of lived socio-ecological contexts (Hoadley, 2004 ), new possibilities emerged. Learning researchers might “trace the evolution of learning in complex, messy classrooms and schools, test and build theories of teaching and learning, and produce instructional tools that survive the challenges of everyday practice” (Shavelson, Phillips, Towne, & Feuer, 2003 , p. 25). Thus, DBR could complement the findings of laboratory, ethnographic, and large-scale studies, answering important questions about the implementation, sustainability, limitations, and usefulness of theories, interventions, and learning when introduced as innovative designs into situated contexts of learning. Moreover, while studies involving these traditional methodologies often concluded by pointing toward implications—insights subsequent studies would need to take up—DBR allowed researchers to address implications iteratively and directly. No subsequent research was necessary, as emerging implications could be reflexively explored in the context of the initial design, offering considerable insight into how research is translated into theory and practice.

Since its emergence in 1992 , DBR as a methodological approach to educational and learning research has quickly grown and evolved, used by researchers from a variety of intellectual traditions in the learning sciences, including developmental and cognitive psychology (e.g., Brown & Campione, 1996 , 1998 ; diSessa & Minstrell, 1998 ), cultural psychology (e.g., Cole, 1996 , 2007 ; Newman, Griffin, & Cole, 1989 ; Gutiérrez, Bien, Selland, & Pierce, 2011 ), cultural anthropology (e.g., Barab, Kinster, Moore, Cunningham, & the ILF Design Team, 2001 ; Polman, 2000 ; Stevens, 2000 ; Suchman, 1995 ), and cultural-historical activity theory (e.g., Engeström, 2011 ; Espinoza, 2009 ; Espinoza & Vossoughi, 2014 ; Gutiérrez, 2008 ; Sannino, 2011 ). Given this plurality of epistemological and theoretical fields that employ DBR, it might best be understood as a broad methodology of educational research, realized in many different, contested, heterogeneous, and distinct iterations, and engaging a variety of qualitative tools and methods (Bell, 2004 ). Despite tensions among these iterations, and substantial and important variances in the ways they employ design-as-research in community settings, there are several common, methodological threads that unite the broad array of research that might be classified as DBR under a shared, though pluralistic, paradigmatic umbrella.

The Tenets of Design-Based Research

Why Design-Based Research?

As we turn to the core tenets of the design-based research (DBR) paradigm, it is worth considering an obvious question: Why use DBR as a methodology for educational research? To answer this, it is helpful to reflect on the original intentions for DBR, particularly, that it is not simply the study of a particular, isolated intervention. Rather, DBR methodologies were conceived of as the complete, iterative process of designing, modifying, and assessing the impact of an educational innovation in a contextual, situated learning environment (Barab & Kirshner, 2001 ; Brown, 1992 ; Cole & Engeström, 2007 ). The design process itself—inclusive of the theory of learning employed, the relationships among participants, contextual factors and constraints, the pedagogical approach, any particular intervention, as well as any changes made to various aspects of this broad design as it proceeds—is what is under study.

Considering this, DBR offers a compelling framework for the researcher interested in having an active and collaborative hand in designing for educational innovation, and interested in creating knowledge about how particular theories of learning, pedagogical or learning practices, or social arrangements function in a context of learning. It is a methodology that can put the researcher in the position of engineer, actively experimenting with aspects of learning and sociopolitical ecologies to arrive at new knowledge and productive outcomes, as Cobb, Confrey, diSessa, Lehrer, and Schauble (2003) explain:

Prototypically, design experiments entail both “engineering” particular forms of learning and systematically studying those forms of learning within the context defined by the means of supporting them. This designed context is subject to test and revision, and the successive iterations that result play a role similar to that of systematic variation in experiment. (p. 9)

This being said, how directive the engineering role the researcher takes on varies considerably among iterations of DBR. Indeed, recent approaches have argued strongly for researchers to take on more egalitarian positionalities with respect to the community partners with whom they work (e.g., Zavala, 2016 ), acting as collaborative designers, rather than authoritative engineers.

Method and Methodology in Design-Based Research

Now, having established why we might use DBR, a recurring question that has faced the DBR paradigm is whether DBR is a methodology at all. Given the variety of intellectual and ontological traditions that employ it, and thus the pluralism of methods used in DBR to enact the “engineering” role (whatever shape that may take) that the researcher assumes, it has been argued that DBR is not, in actuality, a methodology at all (Kelly, 2004). The proliferation and diversity of approaches, methods, and types of analysis purporting to be DBR have been described as a lack of coherence that shows there is no “argumentative grammar” or methodology present in DBR (Kelly, 2004).

Now, the conclusions one will eventually draw in this debate will depend on one’s orientations and commitments, but it is useful to note that these demands for “coherence” emerge from previous paradigms in which methodology was largely marked by a shared, coherent toolkit for data collection and data analysis. These previous paradigmatic rules make for an odd fit when considering DBR. Yet, even if we proceed—within the qualitative tradition from which DBR emerges—defining methodology as an approach to research that is shaped by the ontological and epistemological commitments of the particular researcher, and methods as the tools for research, data collection, and analysis that are chosen by the researcher with respect to said commitments (Gutiérrez, Engeström, & Sannino, 2016 ), then a compelling case for DBR as a methodology can be made (Bell, 2004 ).

Effectively, despite the considerable variation in how DBR has been and is employed, and tensions within the DBR field, we might point to considerable, shared epistemic common ground among DBR researchers, all of whom are invested in an approach to research that involves engaging actively and iteratively in the design and exploration of learning theory in situated, natural contexts. This common epistemic ground, even in the face of pluralistic ideologies and choices of methods, invites in a new type of methodological coherence, marked by “intersubjectivity without agreement” (Matusov, 1996 ), that links DBR from traditional developmental and cognitive psychology models of DBR (e.g., Brown, 1992 ; Brown & Campione, 1998 ; Collins, 1992 ), to more recent critical and sociocultural manifestations (e.g., Bang & Vossoughi, 2016 ; Engeström, 2011 ; Gutiérrez, 2016 ), and everything in between.

Put in other terms, even as DBR researchers may choose heterogeneous methods for data collection, data analysis, and reporting results complementary to the ideological and sociopolitical commitments of the particular researcher and the types of research questions that are under examination (Bell, 2004), a shared epistemic commitment gives the methodology shape. Indeed, the common commitment toward design innovation emerges clearly across examples of DBR methodological studies ranging in method from ethnographic analyses (Salvador, Bell, & Anderson, 1999) to studies of critical discourse within a design (Kärkkäinen, 1999), to focused examinations of metacognition of individual learners (White & Frederiksen, 1998), and beyond. Rather than indicating a lack of methodology, or methodological weakness, the use of varying qualitative methods for framing data collection and retrospective analyses within DBR, and the tensions within the epistemic common ground itself, simply reflect the scope of its utility. Learning in context is complex, contested, and messy, and the plurality of methods present across DBR allows researchers to dynamically respond to context as needed, employing the tools that fit best to consider the questions that are present, or may arise.

All this being the case, it is useful to look toward the coherent elements (the “argumentative grammar” of DBR, if you will) that can be identified across the varied iterations of DBR. Understanding these shared features, in the context and terms of the methodology itself, helps us to appreciate what is involved in developing robust and thorough DBR research, and how DBR seeks to make strong, meaningful claims around the types of research questions it takes up.

Coherent Features of Design-Based Research

Several scholars have provided comprehensive overviews and listings of what they see as the cross-cutting features of DBR, both in the context of more traditional models of DBR (e.g., Cobb et al., 2003; Design-Based Research Collective, 2003), and with regard to newer iterations (e.g., Gutiérrez & Jurow, 2016; Bang & Vossoughi, 2016). Rather than try to offer an overview of each of these increasingly pluralistic classifications, the intent here is to attend to three broad elements that are shared across articulations of DBR and reflect the essential elements that constitute the methodological approach DBR offers to educational researchers.

Design research is concerned with the development, testing, and evolution of learning theory in situated contexts

This first element is perhaps most central to what DBR of all types is, anchored in what Brown ( 1992 ) was initially most interested in: testing the pragmatic validity of theories of learning by designing interventions that engaged with, or proposed, entire, naturalistic, ecologies of learning. Put another way, while DBR studies may have various units of analysis, focuses, and variables, and may organize learning in many different ways, it is the theoretically informed design for educational innovation that is most centrally under evaluation. DBR actively and centrally exists as a paradigm that is engaged in the development of theory, not just the evaluation of aspects of its usage (Bell, 2004 ; Design-Based Research Collective, 2003 ; Lesh & Kelly, 2000 ; van den Akker, 1999 ).

Effectively, where DBR is taking place, theory as a lived possibility is under examination. Specifically, in most DBR, this means a focus on “intermediate-level” theories of learning, rather than “grand” ones. In essence, DBR does not contend directly with “grand” learning theories (such as developmental or sociocultural theory writ large) (diSessa, 1991 ). Rather, DBR seeks to offer constructive insights by directly engaging with particular learning processes that flow from these theories on a “grounded,” “intermediate” level. This is not, however, to say DBR is limited in what knowledge it can produce; rather, tinkering in this “intermediate” realm can produce knowledge that informs the “grand” theory (Gravemeijer, 1994 ). For example, while cognitive and motivational psychology provide “grand” theoretical frames, interest-driven learning (IDL) is an “intermediate” theory that flows from these and can be explored in DBR to both inform the development of IDL designs in practice and inform cognitive and motivational psychology more broadly (Joseph, 2004 ).

Crucially, however, DBR entails putting the theory in question under intense scrutiny, or, “into harm’s way” (Cobb et al., 2003 ). This is an especially core element to DBR, and one that distinguishes it from the proliferation of educational-reform or educational-entrepreneurship efforts that similarly take up the discourse of “design” and “innovation.” Not only is the reflexive, often participatory element of DBR absent from such efforts—that is, questioning and modifying the design to suit the learning needs of the context and partners—but the theory driving these efforts is never in question, and in many cases, may be actively obscured. Indeed, it is more common to see educational-entrepreneur design innovations seek to modify a context—such as the way charter schools engage in selective pupil recruitment and intensive disciplinary practices (e.g., Carnoy et al., 2005 ; Ravitch, 2010 ; Saltman, 2007 )—rather than modify their design itself, and thus allow for humility in their theory. Such “innovations” and “design” efforts are distinct from DBR, which must, in the spirit of scientific inquiry, be willing to see the learning theory flail and struggle, be modified, and evolve.

This growth and evolution of theory and knowledge is of course central to DBR as a rigorous research paradigm, moving it beyond simply the design of local educational programs, interventions, or innovations. As Barab and Squire (2004) explain:

Design-based research requires more than simply showing a particular design works but demands that the researcher (move beyond a particular design exemplar to) generate evidence-based claims about learning that address contemporary theoretical issues and further the theoretical knowledge of the field. (pp. 5–6)

DBR as a research paradigm offers a design process through which theories of learning can be tested; they can be modified, and by allowing them to operate with humility in situated conditions, new insights and knowledge, even new theories, may emerge that might inform the field, as well as the efforts and directions of other types of research inquiry. These productive, theory-developing outcomes, or “ontological innovations” (diSessa & Cobb, 2004 ), represent the culmination of an effective program of DBR—the production of new ways to understand, conceptualize, and enact learning as a lived, contextual process.

Design research works to understand learning processes, and the design that supports them in situated contexts

As a research methodology that operates by tinkering with “grounded” learning theories, DBR is itself grounded, and seeks to develop its knowledge claims and designs in naturalistic, situated contexts (Brown, 1992 ). This is, again, a distinguishing element of DBR—setting it apart from laboratory research efforts involving design and interventions in closed, controlled environments. Rather than attempting to focus on singular variables, and isolate these from others, DBR is concerned with the multitude of variables that naturally occur across entire learning ecologies, and present themselves in distinct ways across multiple planes of possible examination (Rogoff, 1995 ; Collins, Joseph, & Bielaczyc, 2004 ). Certainly, specific variables may be identified as dependent, focal units of analysis, but identifying (while not controlling for) the variables beyond these, and analyzing their impact on the design and learning outcomes, is an equally important process in DBR (Collins et al., 2004 ; Barab & Kirshner, 2001 ). In practice, this of course varies across iterations in its depth and breadth. Traditional models of developmental or cognitive DBR may look to account for the complexity and nuance of a setting’s social, developmental, institutional, and intellectual characteristics (e.g., Brown, 1992 ; Cobb et al., 2003 ), while more recent, critical iterations will give increased attention to how historicity, power, intersubjectivity, and culture, among other things, influence and shape a setting, and the learning that occurs within it (e.g., Gutiérrez, 2016 ; Vakil, de Royston, Nasir, & Kirshner, 2016 ).

Beyond these variations, what counts as “design” in DBR varies widely, and so too will what counts as a naturalistic setting. It has been well documented that learning occurs all the time, every day, and in every space imaginable, both formal and informal (Leander, Phillips, & Taylor, 2010 ), and in ways that span strictly defined setting boundaries (Engeström, Engeström, & Kärkkäinen, 1995 ). DBR may take place in any number of contexts, based on the types of questions asked, and the learning theories and processes that a researcher may be interested in exploring. DBR may involve one-to-one tutoring and learning settings, single classrooms, community spaces, entire institutions, or even holistically designed ecologies (Design-Based Research Collective, 2003 ; Engeström, 2008 ; Virkkunen & Newnham, 2013 ). In all these cases, even the most completely designed experimental ecology, the setting remains naturalistic and situated because DBR actively embraces the uncontrollable variables that participants bring with them to the learning process for and from their situated worlds, lives, and experiences—no effort is made to control for these complicated influences of life, simply to understand how they operate in a given ecology as innovation is attempted. Thus, the extent of the design reflects a broader range of qualitative and theoretical study, rather than an attempt to control or isolate some particular learning process from outside influence.

While there is much variety in what design may entail, where DBR takes place, what types of learning ecologies are under examination, and what methods are used, situated ecologies are always the setting of this work. In this way, conscious of naturalistic variables, and the influences that culture, historicity, participation, and context have on learning, researchers can use DBR to build on prior research, and extend knowledge around the learning that occurs in the complexity of situated contexts and lived practices (Collins et al., 2004 ).

Design-based research is iterative; it changes, grows, and evolves to meet the needs and emergent questions of the context, and this tinkering process is part of the research

The final shared element undergirding models of DBR is that it is an iterative, active, and interventionist process, interested in and focused on producing educational innovation by actually and actively putting design innovations into practice (Brown, 1992; Collins, 1992; Gutiérrez, 2008). Given this interventionist, active stance, tinkering with the design and the theory of learning informing the design is as much a part of the research process as the outcome of the intervention or innovation itself: we learn what impacts learning as much as, if not more than, we learn what was learned. In this sense, DBR involves a focus on analyzing the theory-driven design itself, and its implementation as an object of study (Edelson, 2002; Penuel, Fishman, Cheng, & Sabelli, 2011), and is ultimately interested in the improvement of the design: how it unfolds, how it shifts, and how it is modified and made to function productively for participants in their contexts and given their needs (Kirshner & Polman, 2013).

While DBR is iterative and contextual as a foundational methodological principle, what this means varies across conceptions of DBR. For instance, in more traditional models, Brown and Campione ( 1996 ) pointed out the dangers of “lethal mutation” in which a design, introduced into a context, may become so warped by the influence, pressures, incomplete implementation, or misunderstanding of participants in the local context, that it no longer reflects or tests the theory under study. In short, a theory-driven intervention may be put in place, and then subsumed to such a degree by participants based on their understanding and needs, that it remains the original innovative design in name alone. The assertion here is that in these cases, the research ceases to be DBR in the sense that the design is no longer central, actively shaping learning. We cannot, they argue, analyze a design—and the theory it was meant to reflect—as an object of study when it has been “mutated,” and it is merely a banner under which participants are enacting their idiosyncratic, pragmatic needs.

While the ways in which settings and individuals might disrupt designs intended to produce robust learning is certainly a tension to be cautious of in DBR, it is also worth noting that in many critical approaches to DBR, such mutations (whether “lethal” to the original design or not) are seen as compelling and important moments. Here, where collaboration and community input are more central to the design process, “iterative” is understood differently. Thus, a “mutation” becomes a point where reflexivity, tension, and contradiction might open the door for change, for new designs, for reconsiderations of researcher and collaborative partner positionalities, or for ethnographic exploration into how a context takes up, shapes, and ultimately engages innovations in a particular sociocultural setting. In short, accounting for and documenting changes in design is a vital part of the DBR process, allowing researchers to respond to context in a variety of ways, always striving for their theories and designs to act with humility, and in the interest of usefulness.

With this in mind, the iterative nature of DBR means that the relationships researchers have with other design partners (educators and learners) in the ecology are incredibly important, and vital to consider (Bang et al., 2016 ; Engeström, 2007 ; Engeström, Sannino, & Virkkunen, 2014 ). Different iterations of DBR might occur in ways in which the researcher is more or less intimately involved in the design and implementation process, both in terms of actual presence and intellectual ownership of the design. Regarding the former, in some cases, a researcher may hand a design off to others to implement, periodically studying and modifying it, while in other contexts or designs, the researcher may be actively involved, tinkering in every detail of the implementation and enactment of the design. With regard to the latter, DBR might similarly range from a somewhat prescribed model, in which the researcher is responsible for the original design, and any modifications that may occur based on their analyses, without significant input from participants (e.g., Collins et al., 2004 ), to incredibly participatory models, in which all parties (researchers, educators, learners) are part of each step of the design-creation, modification, and research process (e.g., Bang, Faber, Gurneau, Marin, & Soto, 2016 ; Kirshner, 2015 ).

Considering the wide range of ideological approaches and models for DBR, we might acknowledge that DBR can be gainfully conducted through many iterations of “openness” to the design process. However, the strength of the research—focused on analyzing the design itself as a unit of study reflective of learning theory—will be bolstered by thoughtfully accounting for how involved the researcher will be, and how open to participation the modification process is. These answers should match the types of questions, and conceptual or ideological framing, with which researchers approach DBR, allowing them to tinker with the process of learning as they build on prior research to extend knowledge and test theory (Barab & Kirshner, 2001 ), while thoughtfully documenting these changes in the design as they go.

Implementation and Research Design

As with the overarching principles of design-based research (DBR), even amid the pluralism of conceptual frameworks of DBR researchers, it is possible, and useful, to trace the shared contours in how DBR research design is implemented. Though texts provide particular road maps for undertaking various iterations of DBR consistent with the specific goals, types of questions, and ideological orientations of these scholarly communities (e.g., Cole & Engeström, 2007 ; Collins, Joseph, & Bielaczyc, 2004 ; Fishman, Penuel, Allen, Cheng, & Sabelli, 2013 ; Gutiérrez & Jurow, 2016 ; Virkkunen & Newnham, 2013 ), certain elements, realized differently, can be found across all of these models, and may be encapsulated in five broad methodological phases.

Considering the Design Focus

DBR begins by considering what the focus of the design, the situated context, and the units of analysis for research will be. Prospective DBR researchers will need to consider broader research in regard to the “grand” theory of learning with which they work to determine what theoretical questions they have, or identify “intermediate” aspects of the theories that might be studied and strengthened by a design process in situated contexts, and what planes of analysis (Rogoff, 1995 ) will be most suitable for examination. This process allows for the identification of the critical theoretical elements of a design, and articulation of initial research questions.

Given the conceptual framework, theoretical and research questions, and sociopolitical interests at play, researchers may undertake this, and subsequent steps in the process, on their own, or in close collaboration with the communities and individuals in the situated contexts in which the design will unfold. As such, across iterations of DBR, and with respect to the ways DBR researchers choose to engage with communities, the origin of the design will vary, and might begin in some cases with theoretical questions, or arise in others as a problem of practice (Coburn & Penuel, 2016 ), though as has been noted, in either case, theory and practice are necessarily linked in the research.

Creating and Implementing a Designed Innovation

From the consideration and identification of the critical elements, planned units of analysis, and research questions that will drive a design, researchers can then actively create (either on their own or in conjunction with potential design partners) a designed intervention reflecting these critical elements, and the overarching theory.

Here, the DBR researcher should consider what partners exist in the process and what ownership exists around these partnerships, determine exactly what the pragmatic features of the intervention/design will be and who will be responsible for them, and consider when checkpoints for modification and evaluation will be undertaken, and by whom. Additionally, researchers should at this stage consider questions of timeline and of recruiting participants, as well as what research materials will be needed to adequately document the design, its implementation, and its outcomes, and how and where collected data will be stored.

Once a design (the planned, theory-informed innovative intervention) has been produced, the DBR researcher and partners can begin the implementation process, putting the design into place and beginning data collection and documentation.

Assessing the Impact of the Design on the Learning Ecology

Chronologically, the next two methodological steps happen recursively in the iterative process of DBR. The researcher must assess the impact of the design, and then, make modifications as necessary, before continuing to assess the impact of these modifications. In short, these next two steps are a cycle that continues across the life and length of the research design.

Once a design has been created and implemented, the researcher begins to observe and document the learning, the ecology, and the design itself. Guided by and in conversation with the theory and critical elements, the researcher should periodically engage in ongoing data analysis, assessing the success of the design, and of learning, paying equal attention to the design itself, and how its implementation is working in the situated ecology.

Within the realm of qualitative research, measuring or assessing variables of learning and assessing the design may look vastly different, require vastly different data-collection and data-analysis tools, and involve vastly different research methods among different researchers.

Modifying the Design

Modification, based on ongoing assessment of the design, is what makes DBR iterative, helping the researcher extend the field’s knowledge about the theory, design, learning, and the context under examination.

Modification of the design can take many forms, from complete changes in approach or curriculum, to introducing an additional tool or mediating artifact into a learning ecology. Moreover, how modification unfolds involves careful reflection from the researcher and any co-designing participants, deciding whether modification will be an ongoing, reflexive, tinkering process, or if it will occur only at predefined checkpoints, after formal evaluation and assessment. Questions of ownership, issues of resource availability, technical support, feasibility, and communication are all central to the work of design modification, and answers will vary given the research questions, design parameters, and researchers’ epistemic commitments.

Each moment of modification indicates a new phase in a DBR project, and a new round of assessing—through data analysis—the impact of the design on the learning ecology, either to guide continued or further modification, report the results of the design, or in some cases, both.

Reporting the Results of the Design

The final step in DBR methodology is to report on the results of the designed intervention, how it contributed to understandings of theory, and how it impacted the local learning ecology or context. The format, genre, and final data analysis methods used in reporting data and research results will vary across iterations of DBR. However, it is largely understood that to avoid methodological confusion, DBR researchers should clearly situate themselves in the DBR paradigm by clearly describing and detailing the design itself; articulating the theory, central elements, and units of analysis under scrutiny, what modifications occurred and what precipitated these changes, and what local effects were observed; and exploring any potential contributions to learning theory, while accounting for the context and their interventionist role and positionality in the design. As such, careful documentation of pragmatic and design decisions for retrospective data analysis, as well as research findings, should be done at each stage of this implementation process.

Methodological Issues in the Design-Based Research Paradigm

Because of its pluralistic nature, its interventionist, nontraditional stance, and the fact that it remains in its conceptual infancy, design-based research (DBR) is replete with ongoing methodological questions and challenges, both from external and internal sources. While many more may exist, this section addresses several of the most pressing issues that the prospective DBR researcher may encounter, or want to consider, in understanding the paradigm and beginning a research design.

Challenges to Rigor and Validity

Perhaps the place to begin this reflection on tensions in the DBR paradigm is the recurrent and ongoing challenge to the rigor and validity of DBR, which has asked: Is DBR research at all? Given the interventionist and activist way in which DBR invites the researcher to participate, and the shift in orientation from long-accepted research paradigms, such critiques are hardly surprising, and fall in line with broader challenges to the rigor and objectivity of qualitative social science research in general. Historically, such complaints about DBR are linked to decades of critique of any research that does not adhere to the post-positivist approach set out as the U.S. Department of Education began to prioritize laboratory and large-scale randomized control-trial experimentation as the “gold standard” of research design (e.g., Mosteller & Boruch, 2002 ).

From the outset, DBR, as an interventionist, local, situated, non-laboratory methodology, was bound to run afoul of such conservative trends. While some researchers involved in (particularly traditional developmental and cognitive) DBR have found broader acceptance within these constraints, the rigor of DBR remains contested. It has been suggested that DBR is under-theorized and over-methodologized, a haphazard way for researchers to do activist work without engaging in the development of robust knowledge claims about learning (Dede, 2004), and an approach lacking in coherence that shelters interventionist projects of little impact on developing learning theory and allows researchers to make subjective, pet claims through selective analysis of large bodies of collected data (Kelly, 2003, 2004).

These critiques, however, impose an external set of criteria on DBR, desiring it to fit into the molds of rigor and coherence as defined by canonical methodologies. Bell ( 2004 ) and Bang and Vossoughi ( 2016 ) have made compelling cases for the wide variety of methods and approaches present in DBR not as a fracturing, but as a generative proliferation of different iterations that can offer powerful insights around the different types of questions that exist about learning in the infinitely diverse settings in which it occurs. Essentially, researchers have argued that within the DBR paradigm, and indeed within educational research more generally, the practical impact of research on learning, context, and practices should be a necessary component of rigor (Gutiérrez & Penuel, 2014 ), and the pluralism of methods and approaches available in DBR ensures that the practical impacts and needs of the varied contexts in which the research takes place will always drive the design and research tools.

These moves are emblematic of the way in which DBR is innovating and pushing on paradigms of rigor in educational research altogether, reflecting how DBR fills a complementary niche with respect to other methodologies and attends to elements and challenges of learning in lived, real environments that other types of research have consistently and historically missed. Beyond this, Brown ( 1992 ) was conscious of the concerns around data collection, validity, rigor, and objectivity from the outset, identifying this dilemma—the likelihood of having an incredible amount of data collected in a design only a small fraction of which can be reported and shared, thus leading potentially to selective data analysis and use—as the Bartlett Effect (Brown, 1992 ). Since that time, DBR researchers have been aware of this challenge, actively seeking ways to mitigate this threat to validity by making data sets broadly available, documenting their design, tinkering, and modification processes, clearly situating and describing disconfirming evidence and their own position in the research, and otherwise presenting the broad scope of human and learning activity that occurs within designs in large learning ecologies as comprehensively as possible.

Ultimately, however, these responses are likely to always be insufficient as evidence of rigor to some, for the root dilemma is around what “counts” as education science. While researchers interested and engaged in DBR ought rightly to continue to push themselves to ensure the methodological rigor of their work and chosen methods, it is also worth noting that DBR should seek to hold itself to its own criteria of assessment. This reflects broader trends in qualitative educational research that push back on narrow constructions of what “counts” as science, recognizing the ways in which new methodologies and approaches to research can help us examine aspects of learning, culture, and equity that have continued to be blind spots for traditional education research; invite new voices and perspectives into the process of achieving rigor and validity (Erickson & Gutiérrez, 2002 ); bolster objectivity by bringing it into conversation with the positionality of the researcher (Harding, 1993 ); and perhaps most important, engage in axiological innovation (Bang, Faber, Gurneau, Marin, & Soto, 2016 ), or the exploration of and design for what is, “good right, true, and beautiful . . . in cultural ecologies” (p. 2).

Questions of Generalizability and Usefulness

The generalizability of research results in DBR has been an ongoing and contentious issue in the development of the paradigm. Indeed, by the standards of canonical methods (e.g., laboratory experimentation, ethnography), these local, situated interventions should lack generalizability. While there is reason to discuss and question the merit of generalizability as a goal of qualitative research at all, researchers in the DBR paradigm have long been conscious of this issue. Understanding the question of generalizability around DBR, and how the paradigm has responded to it, can be done in two ways.

The first is to distinguish questions specific to a particular design from the generalizability of the theory. Cole’s (Cole & Underwood, 2013) 5th Dimension work, with its nationwide network of linked, theoretically similar sites operating with vastly different designs, is a powerful example of this approach to generalizability. Rather than focus on a single, unitary, potentially generalizable design, the project is more interested in variability and sustainability of designs across local contexts (e.g., Cole, 1995; Gutiérrez, Bien, Selland, & Pierce, 2011; Jurow, Tracy, Hotchkiss, & Kirshner, 2012). Through attention to sustainable, locally effective innovations, conscious of the wide variation in culture and context that accompanies any and all learning processes, 5th Dimension sites each derive their idiosyncratic structures from sociocultural theory, sharing some elements, but varying others, while seeking their own “ontological innovations” based on the affordances of their contexts. This pattern reflects a key element of much of the DBR paradigm: that questions of generalizability in DBR may be about the generalizability of the theory of learning, and the variability of learning and design in distinct contexts, rather than the particular design itself.

A second means of addressing generalizability in DBR has been to embrace the pragmatic impacts of designing innovations. This response stems from Messick’s (1992) and Schoenfeld’s (1992) arguments early on in the development of DBR that the consequentialness and validity of DBR efforts as potentially generalizable research depend on the “usefulness” of the theories and designs that emerge. Effectively, because DBR is the examination of situated theory, a design must be able to show pragmatic impact: it must succeed at showing the theory to be useful. If there is evidence of usefulness both to the context in which it takes place and to the field of educational research more broadly, then the DBR researcher can stake some broader knowledge claims that might be generalizable. As a result, the DBR paradigm tends to “treat changes in [local] contexts as necessary evidence for the viability of a theory” (Barab & Squire, 2004, p. 6). This of course does not mean that DBR is only interested in successful efforts. A design that fails or struggles can provide important information and knowledge to the field. Ultimately, though, DBR tends to privilege work that proves the usefulness of designs, whose pragmatic or theoretical findings can then be generalized within the learning science and education research fields.

With this said, the question of usefulness is not always straightforward, and is hardly unitary. While many DBR efforts, particularly those situated in developmental and cognitive learning science traditions, are interested in the generalizability of their useful educational designs (Barab & Squire, 2004; Cobb, Confrey, diSessa, Lehrer, & Schauble, 2003; Joseph, 2004; Steffe & Thompson, 2000), not all are. Critical DBR researchers have noted that if usefulness remains situated in the extant sociopolitical and sociocultural power structures (dominant conceptual and popular definitions of what useful educational outcomes are), the result will be a bar for research merit that inexorably bends toward the positivist spectrum (Booker & Goldman, 2016; Dominguez, 2015; Zavala, 2016). This could, and likely would, result in excluding the non-normative interventions and innovations that are vital for historically marginalized communities, which might have vastly different-looking outcomes but are nonetheless useful in the sociopolitical context in which they occur. Alternative framings of this idea of usefulness push on and extend the intention, and seek to involve the perspectives and agency of situated community partners and their practices in what “counts” as generative and rigorous research outcomes (Gutiérrez & Penuel, 2014). An example in this regard is the idea of consequential knowledge (Hall & Jurow, 2015; Jurow & Shea, 2015), which suggests that outcomes that are consequential will be taken up by participants in and across their networks, and over time. A goal of consequential knowledge thus certainly meets the standard of being useful, but it also implicates the needs and agency of communities in determining the success and merit of a design or research endeavor in important ways that strict usefulness may miss.

Thus, the bar of usefulness that characterizes the DBR paradigm should not be approached without critical reflection. Certainly designs that accomplish little for local contexts should be subject to intense questioning and critique, but the sociopolitical and systemic factors that might influence what “counts” as useful in local contexts and education science more generally should be kept firmly in mind when designing, choosing methods, and evaluating impacts (Zavala, 2016). Researchers should think deeply about their goals, whether they are reaching for generalizability at all, and in what ways they are constructing contextual definitions of success, and be clear about these ideologically influenced answers in their work, such that generalizability and the usefulness of designs can be adjudicated based on and in conversation with the intentions and conceptual framework of the research and researcher.

Ethical Concerns of Sustainability, Participation, and Telos

While there are many external challenges to the rigor and validity of DBR, another set of tensions comes from within the DBR paradigm itself. Rather than raising concerns about rigor or validity, these internal critiques, which are not unrelated to the earlier question of the contested definition of usefulness, more accurately reflect questions of research ethics and grow from ideological concerns with how an intentional, interventionist stance is taken up in research as it interacts with situated communities.

Given that the nature of DBR is to design and implement some form of educational innovation, the DBR researcher will in some way be engaging with an individual or community, becoming part of a situated learning ecology, complete with a sociopolitical and cultural history. As with any research that involves providing an intervention or support, the question of what happens when the research ends is as much an ethical as a methodological one. Concerns then arise given how traditional models of DBR seem intensely focused on creating and implementing a “complete” cycle of design, while giving little attention to what happens to the community and context afterward (Engeström, 2011). In contrast to this privileging of “completeness,” sociocultural and critical approaches to DBR have suggested that if research is actually happening in naturalistic, situated contexts that authentically recognize and allow social and cultural dimensions to function (i.e., avoid laboratory-type controls to mitigate independent variables), there can never be such a thing as “complete,” for the design will, and should, live on as part of the ecology of the space (Cole, 2007; Engeström, 2000). Essentially, these internal critiques push DBR to consider sustainability, and sustainable scale, as concerns equally important as the completeness of an innovation. Not only are ethical questions involved, but accounting for the unbounded and ongoing nature of learning as a social and cultural activity can help strengthen the viability of the knowledge claims made and clarify what degree of generalizability is reasonably justified.