
Synthesising the data

Synthesis is the stage in the systematic review process where the extracted data (that is, the findings of individual studies) are combined and evaluated.

The general purpose of extracting and synthesising data is to show the outcomes and effects of various studies, and to identify issues with methodology and quality. This means that your synthesis might reveal several elements, including:

- overall level of evidence

- the degree of consistency in the findings

- what the positive effects of a drug or treatment are, and what these effects are based on

- how many studies found a relationship or association between two components, e.g. the impact of disability-assistance animals on the psychological health of workplaces

There are two commonly accepted methods of synthesis in systematic reviews:

- Qualitative data synthesis

- Quantitative data synthesis (i.e. meta-analysis)

The way the data is extracted from your studies, then synthesised and presented, depends on the type of data being handled.

In a qualitative systematic review, data can be presented in a number of different ways. A typical procedure in the health sciences is thematic analysis.

Thematic synthesis has three stages:

- the coding of text ‘line-by-line’

- the development of ‘descriptive themes’

- the generation of ‘analytical themes’

If you have qualitative information, some of the more common tools used to summarise data include:

- textual descriptions, i.e. written words

- thematic or content analysis

Example qualitative systematic review

A good example of how to conduct a thematic analysis in a systematic review is the following journal article on cancer patients. In it, the authors go through the process of:

- identifying and coding information about the selected studies’ methodologies and findings on patient care

- organising these codes into subheadings and descriptive categories

- developing these categories into analytical themes

What Facilitates “Patient Empowerment” in Cancer Patients During Follow-Up: A Qualitative Systematic Review of the Literature

Quantitative data synthesis

In a quantitative systematic review, data is presented statistically. Typically, this is referred to as a meta-analysis.

The usual method is to combine and evaluate data from multiple studies. This is normally done in order to draw conclusions about outcomes, effects, shortcomings of studies and/or applicability of findings.

Remember, the data you synthesise should relate to your research question and protocol (plan). In the case of quantitative analysis, the data extracted and synthesised will relate to whatever method was used to generate the research question (e.g. PICO method), and whatever quality appraisals were undertaken in the analysis stage.

If you have quantitative information, some of the more common tools used to summarise data include:

- grouping of similar data, i.e. presenting the results in tables

- charts, e.g. pie charts

- graphical displays, e.g. forest plots

Example of a quantitative systematic review

A quantitative systematic review usually combines a systematic review with a statistical analysis of the pooled results, referred to as a meta-analysis, as in the following example.

Effectiveness of Acupuncturing at the Sphenopalatine Ganglion Acupoint Alone for Treatment of Allergic Rhinitis: A Systematic Review and Meta-Analysis

About meta-analyses

A systematic review may include a meta-analysis, although this is not a requirement of a systematic review. A meta-analysis, by contrast, normally includes a systematic review.

A meta-analysis is a statistical analysis that combines data from previous studies to calculate an overall result.
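As a minimal sketch of how an overall result can be calculated, the Python below uses the inverse-variance (fixed-effect) method, one common approach among several: each study's estimate is weighted by 1/SE², so more precise studies count for more. The function name and the three studies' numbers are hypothetical, and real reviews typically use dedicated software (such as RevMan) and often a random-effects model instead.

```python
import math


def fixed_effect_pool(estimates, std_errors):
    """Pool study effect estimates with inverse-variance weights (fixed-effect model)."""
    if not estimates or len(estimates) != len(std_errors):
        raise ValueError("need one standard error per estimate")
    weights = [1.0 / se**2 for se in std_errors]  # weight = 1 / variance
    pooled = sum(w * y for w, y in zip(weights, estimates)) / sum(weights)
    pooled_se = math.sqrt(1.0 / sum(weights))
    return pooled, pooled_se


# Hypothetical mean differences (MD) and standard errors from three studies
pooled, se = fixed_effect_pool([-1.0, -0.5, -1.5], [0.5, 0.5, 1.0])
lower, upper = pooled - 1.96 * se, pooled + 1.96 * se
print(f"pooled MD = {pooled:.2f} (95% CI {lower:.2f} to {upper:.2f})")
# pooled MD = -0.83 (95% CI -1.49 to -0.18)
```

The third study has twice the standard error of the others, so it receives a quarter of their weight and pulls the pooled estimate only slightly towards -1.5.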

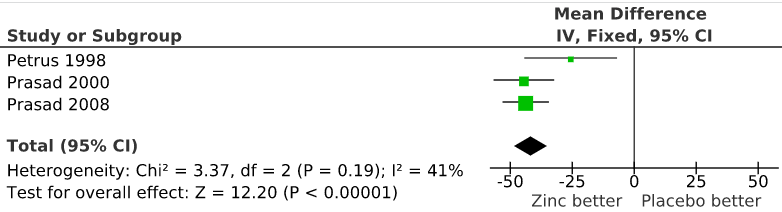

One way of accurately representing all the data is in the form of a forest plot. A forest plot displays the results of multiple studies together, showing the point estimates from different studies of the same condition or treatment.

It consists of a graphical display and often also a table. The graphical display shows the point estimate for each study (for example, a mean difference), usually with its confidence interval (the horizontal bars). Each estimate is plotted relative to the vertical line of no difference.
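The numbers behind each row of a forest plot can be sketched in a few lines of Python. This assumes each study reports an effect estimate on a difference scale (so the line of no difference is at 0) and its standard error, and uses a normal-approximation 95% interval. The class, function and study names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class StudyResult:
    name: str
    estimate: float   # point estimate, e.g. a mean difference
    std_error: float  # standard error of that estimate


def forest_rows(studies, z=1.96, null_value=0.0):
    """Build one forest-plot row per study: estimate, 95% CI, and whether the CI
    crosses the line of no difference (null_value)."""
    rows = []
    for s in studies:
        lower = s.estimate - z * s.std_error
        upper = s.estimate + z * s.std_error
        crosses_null = lower <= null_value <= upper
        rows.append((s.name, s.estimate, lower, upper, crosses_null))
    return rows


# Hypothetical studies, for illustration only
studies = [StudyResult("Study A", -1.0, 0.5), StudyResult("Study B", -0.5, 0.5)]
for name, est, lo, hi, crosses in forest_rows(studies):
    print(f"{name}: {est:.2f} [{lo:.2f}, {hi:.2f}] crosses line of no difference: {crosses}")
# Study A: -1.00 [-1.98, -0.02] crosses line of no difference: False
# Study B: -0.50 [-1.48, 0.48] crosses line of no difference: True
```

A horizontal bar that crosses the vertical line, as for Study B, indicates that the study's result is compatible with no difference at the chosen confidence level.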

The following is an example of the graphical representation of a forest plot.

“File:The effect of zinc acetate lozenges on the duration of the common cold.svg” by Harri Hemilä is licensed under CC BY 3.0

Watch the following short video where a social health example is used to explain how to construct a forest plot graphic.


Forest Plots – Understanding a Meta-Analysis in 5 Minutes or Less (5:38 min) by The NCCMT (YouTube)


Research and Writing Skills for Academic and Graduate Researchers Copyright © 2022 by RMIT University is licensed under a Creative Commons Attribution-NonCommercial 4.0 International License, except where otherwise noted.


Cochrane Training

Chapter 9: Summarizing study characteristics and preparing for synthesis

Joanne E McKenzie, Sue E Brennan, Rebecca E Ryan, Hilary J Thomson, Renea V Johnston

Key Points:

- Synthesis is a process of bringing together data from a set of included studies with the aim of drawing conclusions about a body of evidence. This will include synthesis of study characteristics and, potentially, statistical synthesis of study findings.

- A general framework for synthesis can be used to guide the process of planning the comparisons, preparing for synthesis, undertaking the synthesis, and interpreting and describing the results.

- Tabulation of study characteristics aids the examination and comparison of PICO elements across studies, facilitates synthesis of these characteristics and grouping of studies for statistical synthesis.

- Tabulation of extracted data from studies allows assessment of the number of studies contributing to a particular meta-analysis, and helps determine what other statistical synthesis methods might be used if meta-analysis is not possible.

Cite this chapter as: McKenzie JE, Brennan SE, Ryan RE, Thomson HJ, Johnston RV. Chapter 9: Summarizing study characteristics and preparing for synthesis. In: Higgins JPT, Thomas J, Chandler J, Cumpston M, Li T, Page MJ, Welch VA (editors). Cochrane Handbook for Systematic Reviews of Interventions version 6.4 (updated August 2023). Cochrane, 2023. Available from www.training.cochrane.org/handbook .

9.1 Introduction

Synthesis is a process of bringing together data from a set of included studies with the aim of drawing conclusions about a body of evidence. Most Cochrane Reviews on the effects of interventions will include some type of statistical synthesis. Most commonly this is the statistical combination of results from two or more separate studies (henceforth referred to as meta-analysis) of effect estimates.

An examination of the included studies always precedes statistical synthesis in Cochrane Reviews. For example, examination of the interventions studied is often needed to itemize their content so as to determine which studies can be grouped in a single synthesis. More broadly, synthesis of the PICO (Population, Intervention, Comparator and Outcome) elements of the included studies underpins interpretation of review findings and is an important output of the review in its own right. This synthesis should encompass the characteristics of the interventions and comparators in included studies, the populations and settings in which the interventions were evaluated, the outcomes assessed, and the strengths and weaknesses of the body of evidence.

Chapter 2 defined three types of PICO criteria that may be helpful in understanding decisions that need to be made at different stages in the review:

- The review PICO (planned at the protocol stage) is the PICO on which eligibility of studies is based (what will be included and what excluded from the review).

- The PICO for each synthesis (also planned at the protocol stage) defines the question that the specific synthesis aims to answer, determining how the synthesis will be structured, specifying planned comparisons (including intervention and comparator groups, any grouping of outcome and population subgroups).

- The PICO of the included studies (determined at the review stage) is what was actually investigated in the included studies.

In this chapter, we focus on the PICO for each synthesis and the PICO of the included studies as the basis for determining which studies can be grouped for statistical synthesis and for synthesizing study characteristics. We describe the preliminary steps undertaken before performing the statistical synthesis. Methods for the statistical synthesis are described in Chapter 10, Chapter 11 and Chapter 12.

9.2 A general framework for synthesis

Box 9.2.a A general framework for synthesis that can be applied irrespective of the methods used to synthesize results

Box 9.2.a provides a general framework for synthesis that can be applied irrespective of the methods used to synthesize results. Planning for the synthesis should start at the protocol-writing stage, and Chapter 2 and Chapter 3 describe the steps involved in planning the review questions and comparisons between intervention groups. These steps include specifying which characteristics of the interventions, populations, outcomes and study design would be grouped together for synthesis (the PICO for each synthesis: stage 1 in Box 9.2.a).

This chapter primarily concerns stage 2 of the general framework in Box 9.2.a. After deciding which studies will be included in the review and extracting data, review authors can start implementing their plan, working through steps 2.1 to 2.5 of the framework. This process begins with a detailed examination of the characteristics of each study (step 2.1), and then comparison of characteristics across studies in order to determine which studies are similar enough to be grouped for synthesis (step 2.2). Examination of the type of data available for synthesis follows (step 2.3). These three steps inform decisions about whether any modification to the planned comparisons or outcomes is necessary, or new comparisons are needed (step 2.4). The last step of the framework covered in this chapter involves synthesis of the characteristics of studies contributing to each comparison (step 2.5). The chapter concludes with practical tips for checking data before synthesis (Section 9.4).

Steps 2.1, 2.2 and 2.5 involve analysis and synthesis of mainly qualitative information about study characteristics. The process used to undertake these steps is rarely described in reviews, yet can require many subjective decisions about the nature and similarity of the PICO elements of the included studies. The examples described in this section illustrate approaches for making this process more transparent.

9.3 Preliminary steps of a synthesis

9.3.1 Summarize the characteristics of each study (step 2.1)

A starting point for synthesis is to summarize the PICO characteristics of each study (i.e. the PICO of the included studies, see Chapter 3) and categorize these PICO elements in the groups (or domains) pre-specified in the protocol (i.e. the PICO for each synthesis). The resulting descriptions are reported in the ‘Characteristics of included studies’ table, and are used in step 2.2 to determine which studies can be grouped for synthesis.

In some reviews, the labels and terminology used in each study are retained when describing the PICO elements of the included studies. This may be sufficient in areas with consistent and widely understood terminology that matches the PICO for each synthesis. However, in most areas, terminology is variable, making it difficult to compare the PICO of each included study to the PICO for each synthesis, or to compare PICO elements across studies. Standardizing the description of PICO elements across studies facilitates these comparisons. This standardization includes applying the labels and terminology used to articulate the PICO for each synthesis (Chapter 3), and structuring the description of PICO elements. The description of interventions can be structured using the Template for Intervention Description and Replication (TIDieR) checklist, for example (see Chapter 3 and Table 9.3.a).

Table 9.3.a illustrates the use of pre-specified groups to categorize and label interventions in a review of psychosocial interventions for smoking cessation in pregnancy (Chamberlain et al 2017). The main intervention strategy in each study was categorized into one of six groups: counselling, health education, feedback, incentive-based interventions, social support, and exercise. This categorization determined which studies were eligible for each comparison (e.g. counselling versus usual care; single or multi-component strategy). The extract from the ‘Characteristics of included studies’ table shows the diverse descriptions of interventions in three of the 54 studies for which the main intervention was categorized as ‘counselling’. Other intervention characteristics, such as duration and frequency, were coded in pre-specified categories to standardize description of the intervention intensity and facilitate meta-regression (not shown here).

Table 9.3.a Example of categorizing interventions into pre-defined groups

* The definition also specified eligible modes of delivery, intervention duration and personnel.

While this example focuses on categorizing and describing interventions according to groups pre-specified in the PICO for each synthesis, the same approach applies to other PICO elements.
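Here is a minimal Python sketch of the categorization step. It assumes a reviewer has already coded each study's main intervention into one of the six pre-specified groups from the smoking-cessation example. The code groups studies by that code and rejects any code that is not in the protocol. The study IDs and codes are hypothetical. Deciding which group a study belongs to is still the reviewer's judgement; the code only applies the result consistently.

```python
from collections import defaultdict

# The six pre-specified main-strategy groups from the smoking-cessation example
INTERVENTION_GROUPS = {
    "counselling", "health education", "feedback",
    "incentive-based interventions", "social support", "exercise",
}


def group_by_main_strategy(coded_studies):
    """Group study IDs by the pre-specified category assigned to their main intervention.

    coded_studies maps a study ID to the category a reviewer assigned after reading
    the study's own (often idiosyncratic) description of the intervention.
    """
    groups = defaultdict(list)
    for study_id, category in coded_studies.items():
        if category not in INTERVENTION_GROUPS:
            raise ValueError(f"{study_id}: {category!r} is not a pre-specified group")
        groups[category].append(study_id)
    return dict(groups)


# Hypothetical study IDs and codes, for illustration only
coded = {"Study 1": "counselling", "Study 2": "feedback", "Study 3": "counselling"}
print(group_by_main_strategy(coded))
# {'counselling': ['Study 1', 'Study 3'], 'feedback': ['Study 2']}
```

Raising an error for an unrecognized label is a deliberate choice. It makes any drift from the protocol's categories visible instead of silently creating a new group.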

9.3.2 Determine which studies are similar enough to be grouped within each comparison (step 2.2)

Once the PICO elements of the included studies have been coded using labels and descriptions specified in the PICO for each synthesis, it will be possible to compare PICO elements across studies and determine which studies are similar enough to be grouped within each comparison.

Tabulating study characteristics can help to explore and compare PICO elements across studies, and is particularly important for reviews that are broad in scope, have diversity across one or more PICO elements, or include large numbers of studies. Data about study characteristics can be ordered in many different ways (e.g. by comparison or by specific PICO elements), and tables may include information about one or more PICO elements. Deciding on the best approach will depend on the purpose of the table and the stage of the review. A close examination of study characteristics will require detailed tables; for example, to identify differences in characteristics that were pre-specified as potentially important modifiers of the intervention effects. As the review progresses, this detail may be replaced by standardized description of PICO characteristics (e.g. the coding of counselling interventions presented in Table 9.3.a).

Table 9.3.b illustrates one approach to tabulating study characteristics to enable comparison and analysis across studies. This table presents a high-level summary of the characteristics that are most important for determining which comparisons can be made. The table was adapted from tables presented in a review of self-management education programmes for osteoarthritis (Kroon et al 2014). The authors presented a structured summary of intervention and comparator groups for each study, and then categorized intervention components thought to be important for enabling patients to manage their own condition. Table 9.3.b shows selected intervention components, the comparator, and outcomes measured in a subset of studies (some details are fictitious). Outcomes have been grouped by the outcome domains ‘Pain’ and ‘Function’ (column ‘Outcome measure’, Table 9.3.b). These pre-specified outcome domains are the chosen level for the synthesis as specified in the PICO for each synthesis. Authors will need to assess whether the measurement methods or tools used within each study provide an appropriate assessment of the domains (Chapter 3, Section 3.2.4). A next step is to group each measure into the pre-specified time frames. In this example, outcomes are grouped into short-term (<6 weeks) and long-term follow-up (≥6 weeks to 12 months) (column ‘Time points (time frame)’, Table 9.3.b).
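The grouping of measurement time points into the two pre-specified time frames can be written as a simple rule. In this sketch, converting months to weeks at 52 weeks per year is an assumption, since the review's own conversion is not stated here. Time points beyond 12 months fall outside both frames and return `None`. The function names are hypothetical.

```python
# Assumption: months are converted to weeks at 52 weeks per year
WEEKS_PER_MONTH = 52 / 12
SHORT_TERM_LIMIT_WEEKS = 6                    # short-term: < 6 weeks
LONG_TERM_LIMIT_WEEKS = 12 * WEEKS_PER_MONTH  # long-term: >= 6 weeks to 12 months


def to_weeks(value, unit):
    """Convert a follow-up time point to weeks."""
    if unit == "weeks":
        return float(value)
    if unit == "months":
        return value * WEEKS_PER_MONTH
    raise ValueError(f"unsupported unit: {unit!r}")


def time_frame(value, unit):
    """Map a time point to 'short-term', 'long-term' or None (outside both frames)."""
    weeks = to_weeks(value, unit)
    if weeks < SHORT_TERM_LIMIT_WEEKS:
        return "short-term"
    if weeks <= LONG_TERM_LIMIT_WEEKS:
        return "long-term"
    return None


# Time points like those reported for study 6 (2 weeks and 1 month)
print([time_frame(2, "weeks"), time_frame(1, "months")])  # ['short-term', 'short-term']
```

Both of study 6's time points land in the same frame, which is exactly the multiplicity that the decision rules in step 2.3 must resolve.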

Variations on the format shown in Table 9.3.b can be presented within a review to summarize the characteristics of studies contributing to each synthesis, which is important for interpreting findings (step 2.5).

Table 9.3.b Table of study characteristics illustrating similarity of PICO elements across studies

BEH = health-directed behaviour; CON = constructive attitudes and approaches; EMO = emotional well-being; ENG = positive and active engagement in life; MON = self-monitoring and insight; NAV = health service navigation; SKL = skill and technique acquisition. ANCOVA = analysis of covariance; CI = confidence interval; IQR = interquartile range; MD = mean difference; SD = standard deviation; SE = standard error; NS = non-significant. Pain and function measures: Dutch AIMS-SF = Dutch short form of the Arthritis Impact Measurement Scales; HAQ = Health Assessment Questionnaire; VAS = visual analogue scale; WOMAC = Western Ontario and McMaster Universities Osteoarthritis Index. 1 Ordered by type of comparator; 2 Short-term (denoted ‘immediate’ in Kroon et al (2014)) follow-up is defined as <6 weeks, long-term follow-up (denoted ‘intermediate’ in the review) is ≥6 weeks to 12 months; 3 For simplicity, in this example the available data are assumed to be the same for all outcomes within an outcome domain within a study. In practice, this is unlikely and the available data would likely vary by outcome; 4 Indicates that an effect estimate and its standard error may be computed through imputation of missing statistics, methods to convert between statistics (e.g. medians to means) or contact with study authors. *Indicates the selected outcome when there was multiplicity in the outcome domain and time frame.

9.3.3 Determine what data are available for synthesis (step 2.3)

Once the studies that are similar enough to be grouped together within each comparison have been determined, a next step is to examine what data are available for synthesis. Tabulating the measurement tools and time frames as shown in Table 9.3.b allows assessment of the potential for multiplicity (i.e. when multiple outcomes within a study and outcome domain are available for inclusion (Chapter 3, Section 3.2.4.3)). In this example, multiplicity arises in two ways. First, from multiple measurement instruments used to measure the same outcome domain within the same time frame (e.g. ‘Short-term Pain’ is measured using the ‘Pain VAS’ and ‘Pain on walking VAS’ scales in study 3). Second, from multiple time points measured within the same time frame (e.g. ‘Short-term Pain’ is measured using ‘Pain VAS’ at both 2 weeks and 1 month in study 6). Pre-specified methods to deal with the multiplicity can then be implemented (see Table 9.3.c for examples of approaches for dealing with multiplicity). In this review, the authors pre-specified a set of decision rules for selecting specific outcomes within the outcome domains. For example, for the outcome domain ‘Pain’, the selected outcome was the highest on the following list: global pain, pain on walking, WOMAC pain subscore, composite pain scores other than WOMAC, pain on activities other than walking, rest pain or pain during the night. The authors further specified that if there were multiple time points at which the outcome was measured within a time frame, they would select the longest time point. The selected outcomes from applying these rules to studies 3 and 6 are indicated by an asterisk in Table 9.3.b.
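The two decision rules described above can be sketched as follows. The first rule takes the outcome highest on the list, and the second takes the longest time point within the time frame. The hierarchy strings paraphrase the list in the text. The candidates are hypothetical (outcome type, time point in weeks) pairs and are assumed to be already mapped to those outcome types and restricted to one study, outcome domain and time frame.

```python
# Hierarchy for the 'Pain' domain, highest priority first (paraphrased from the text)
PAIN_HIERARCHY = [
    "global pain",
    "pain on walking",
    "WOMAC pain subscore",
    "composite pain score other than WOMAC",
    "pain on activities other than walking",
    "rest pain or pain during the night",
]


def select_outcome(candidates, hierarchy=PAIN_HIERARCHY):
    """Select one (outcome_type, weeks) pair for a single study, domain and time frame.

    Rule 1: keep the outcome type ranked highest in the hierarchy.
    Rule 2: among that outcome's time points, keep the longest.
    Returns None if no candidate matches the hierarchy.
    """
    eligible = [c for c in candidates if c[0] in hierarchy]
    if not eligible:
        return None
    best_rank = min(hierarchy.index(outcome) for outcome, _ in eligible)
    top = [c for c in eligible if hierarchy.index(c[0]) == best_rank]
    return max(top, key=lambda c: c[1])


# Hypothetical candidates for one study's 'Short-term Pain' cell
candidates = [("pain on walking", 4.0), ("global pain", 2.0), ("global pain", 4.3)]
print(select_outcome(candidates))  # ('global pain', 4.3)
```

Encoding the rules this way keeps the selection reproducible and mirrors the protocol's pre-specification. Mapping each study's instrument (for example, a particular VAS) to an outcome type is still a reviewer judgement.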

Table 9.3.b also illustrates an approach to tabulating the extracted data. The available statistics are tabulated in the column labelled ‘Data’, from which an assessment can be made as to whether the study contributes the required data for a meta-analysis (column ‘Effect & SE’) (Chapter 10). For example, of the seven studies comparing health-directed behaviour (BEH) with usual care, six measured ‘Short-term Pain’, four of which contribute required data for meta-analysis. Reordering the table by comparison, outcome and time frame will more readily show the number of studies that will contribute to a particular meta-analysis, and help determine what other synthesis methods might be used if the data available for meta-analysis are limited.
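Counting studies per comparison, outcome domain and time frame is the programmatic equivalent of reordering Table 9.3.b. The sketch below assumes one row per study after the selection rules have been applied. The field names and rows are hypothetical, and the rows were chosen only to reproduce the counts quoted for the BEH versus usual care comparison.

```python
from collections import Counter


def count_contributions(rows):
    """Count, per (comparison, outcome, time frame), how many studies measured the
    outcome and how many report an effect estimate with its standard error."""
    measured, usable = Counter(), Counter()
    for row in rows:
        key = (row["comparison"], row["outcome"], row["time_frame"])
        measured[key] += 1
        if row["has_effect_and_se"]:
            usable[key] += 1
    return {key: (measured[key], usable[key]) for key in measured}


# Hypothetical rows: six studies measured short-term pain, four with usable data
rows = [
    {"comparison": "BEH vs usual care", "outcome": "Pain",
     "time_frame": "short-term", "has_effect_and_se": flag}
    for flag in (True, True, True, True, False, False)
]
print(count_contributions(rows))
# {('BEH vs usual care', 'Pain', 'short-term'): (6, 4)}
```

A large gap between the two counts is the signal to consider other synthesis methods, or the imputation and conversion approaches noted in the table footnotes.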

Table 9.3.c Examples of approaches for selecting one outcome (effect estimate) for inclusion in a synthesis.* Adapted from López-López et al (2018)

9.3.4 Determine if modification to the planned comparisons or outcomes is necessary, or new comparisons are needed (step 2.4)

The previous steps may reveal the need to modify the planned comparisons. Important variations in the intervention may be identified leading to different or modified intervention groups. Few studies or sparse data, or both, may lead to different groupings of interventions, populations or outcomes. Planning contingencies for anticipated scenarios is likely to lead to less post-hoc decision making (Chapter 2 and Chapter 3); however, it is difficult to plan for all scenarios. In the latter circumstance, the rationale for any post-hoc changes should be reported. This approach was adopted in a review examining the effects of portion, package or tableware size for changing selection and consumption of food, alcohol and tobacco (Hollands et al 2015). After preliminary examination of the outcome data, the review authors changed their planned intervention groups. They judged that intervention groups based on ‘size’ and those based on ‘shape’ of the products were not conceptually comparable, and therefore should form separate comparisons. The authors provided a rationale for the change and noted that it was a post-hoc decision.

9.3.5 Synthesize the characteristics of the studies contributing to each comparison (step 2.5)

A final step, and one that is essential for interpreting combined effects, is to synthesize the characteristics of studies contributing to each comparison. This description should integrate information about key PICO characteristics across studies, and identify any potentially important differences in characteristics that were pre-specified as possible effect modifiers. The synthesis of study characteristics is also needed for GRADE assessments, informing judgements about whether the evidence applies directly to the review question (indirectness) and analyses conducted to examine possible explanations for heterogeneity (inconsistency) (see Chapter 14).

Tabulating study characteristics is generally preferable to lengthy description in the text, since the structure imposed by a table can make it easier and faster for readers to scan and identify patterns in the information presented. Table 9.3.b illustrates one such approach. Tabulating characteristics of studies that contribute to each comparison can also help to improve the transparency of decisions made around grouping of studies, while also ensuring that studies that do not contribute to the combined effect are accounted for.

9.4 Checking data before synthesis

Before embarking on a synthesis, it is important to be confident that the findings from the individual studies have been collated correctly. Therefore, review authors must compare the magnitude and direction of effects reported by studies with how they are to be presented in the review. This is a reasonably straightforward way for authors to check for a number of potential problems, including typographical errors in studies’ reports, inaccuracies in data collection and manipulation, and errors in data entry into RevMan. For example, the direction of a standardized mean difference may accidentally be reversed in the review. A basic check is to ensure that the data as presented in the review give the same qualitative findings (e.g. direction of effect and statistical significance) as the data available from the original study.
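The basic check described above can be automated as a first pass. This minimal sketch compares (estimate, lower CI, upper CI) triples and flags disagreement in direction of effect or in statistical significance. It assumes both triples use the same effect measure and scale. For ratio measures such as odds ratios on the natural scale, pass `null_value=1.0`. Because there can be legitimate reasons for differences, a flagged discrepancy is a prompt to investigate, not proof of an error. The function names are hypothetical.

```python
def summarise(estimate, lower, upper, null_value=0.0):
    """Return (direction, significant) for an estimate and its confidence interval."""
    direction = (estimate > null_value) - (estimate < null_value)  # +1, -1 or 0
    significant = not (lower <= null_value <= upper)  # CI excludes no difference
    return direction, significant


def check_against_report(review, report, null_value=0.0):
    """Compare (estimate, lower, upper) as entered in the review with the original report.

    Returns a list of discrepancies; an empty list means the qualitative findings agree.
    """
    review_dir, review_sig = summarise(*review, null_value=null_value)
    report_dir, report_sig = summarise(*report, null_value=null_value)
    problems = []
    if review_dir != report_dir:
        problems.append("direction of effect differs (check the sign, e.g. of an SMD)")
    if review_sig != report_sig:
        problems.append("statistical significance differs")
    return problems


# Hypothetical SMD whose sign was flipped during data entry
print(check_against_report(review=(0.4, 0.1, 0.7), report=(-0.4, -0.7, -0.1)))
```
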

Results in forest plots should agree with data in the original report (point estimate and confidence interval) if the same effect measure and statistical model are used. There are legitimate reasons for differences, however, including: using a different measure of intervention effect; making different choices between change-from-baseline measures, post-intervention measures alone or post-intervention measures adjusted for baseline values; grouping similar intervention groups; or making adjustments for unit-of-analysis errors in the reports of the primary studies.

9.5 Types of synthesis

The focus of this chapter has been describing the steps involved in implementing the planned comparisons between intervention groups (stage 2 of the general framework for synthesis (Box 9.2.a)). The next step (stage 3) is often performing a statistical synthesis. Meta-analysis of effect estimates and its extensions have many advantages. There are circumstances under which a meta-analysis is not possible, however, and other statistical synthesis methods might be considered, so as to make best use of the available data. Available summary and synthesis methods, along with the questions they address and examples of associated plots, are described in Table 9.5.a. Chapter 10 and Chapter 11 discuss meta-analysis (of effect estimates) methods, while Chapter 12 focuses on the other statistical synthesis methods, along with approaches to tabulating, visually displaying and providing a structured presentation of the findings. An important part of planning the analysis strategy is building in contingencies to use alternative methods when the desired method cannot be used.
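One of the alternatives to meta-analysis covered in Chapter 12 is vote counting based on the direction of effect, which can be tested with a sign test. The sketch below is a minimal exact two-sided sign test using only the Python standard library. The counts are hypothetical, and studies whose point estimate shows exactly no difference are assumed to have been excluded beforehand. Vote counting answers a narrower question than meta-analysis: it asks whether there is any evidence of an effect, not how large the effect is.

```python
from math import comb


def sign_test(n_favouring, n_against):
    """Exact two-sided sign test (binomial test with p = 0.5) for vote counting
    based on the direction of effect."""
    n = n_favouring + n_against
    if n == 0:
        raise ValueError("need at least one study with a direction of effect")
    k = min(n_favouring, n_against)
    one_tail = sum(comb(n, i) for i in range(k + 1)) / 2**n
    return min(1.0, 2 * one_tail)


# Hypothetical: 8 of 10 studies have point estimates favouring the intervention
p = sign_test(8, 2)
print(f"proportion favouring = {8 / 10:.1f}, two-sided p = {p:.3f}")
# proportion favouring = 0.8, two-sided p = 0.109
```
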

Table 9.5.a Overview of available methods for summary and synthesis

9.6 Chapter information

Authors: Joanne E McKenzie, Sue E Brennan, Rebecca E Ryan, Hilary J Thomson, Renea V Johnston

Acknowledgements: Sections of this chapter build on Chapter 9 of version 5.1 of the Handbook , with editors Jonathan Deeks, Julian Higgins and Douglas Altman. We are grateful to Julian Higgins, James Thomas and Tianjing Li for commenting helpfully on earlier drafts.

Funding: JM is supported by an NHMRC Career Development Fellowship (1143429). SB and RR’s positions are supported by the NHMRC Cochrane Collaboration Funding Program. HT is funded by the UK Medical Research Council (MC_UU_12017-13 and MC_UU_12017-15) and Scottish Government Chief Scientist Office (SPHSU13 and SPHSU15). RJ’s position is supported by the NHMRC Cochrane Collaboration Funding Program and Cabrini Institute.

9.7 References

Chamberlain C, O’Mara-Eves A, Porter J, Coleman T, Perlen SM, Thomas J, McKenzie JE. Psychosocial interventions for supporting women to stop smoking in pregnancy. Cochrane Database of Systematic Reviews 2017; 2: CD001055.

Hollands GJ, Shemilt I, Marteau TM, Jebb SA, Lewis HB, Wei Y, Higgins JPT, Ogilvie D. Portion, package or tableware size for changing selection and consumption of food, alcohol and tobacco. Cochrane Database of Systematic Reviews 2015; 9: CD011045.

Kroon FPB, van der Burg LRA, Buchbinder R, Osborne RH, Johnston RV, Pitt V. Self-management education programmes for osteoarthritis. Cochrane Database of Systematic Reviews 2014; 1: CD008963.

López-López JA, Page MJ, Lipsey MW, Higgins JPT. Dealing with effect size multiplicity in systematic reviews and meta-analyses. Research Synthesis Methods 2018; 9: 336–351.



NCBI Bookshelf. A service of the National Library of Medicine, National Institutes of Health.

Methods Guide for Effectiveness and Comparative Effectiveness Reviews [Internet]. Rockville (MD): Agency for Healthcare Research and Quality (US); 2008-.


Quantitative synthesis—an update.

Investigators: Sally C. Morton, Ph.D., M.Sc., M. Hassan Murad, M.D., M.P.H., Elizabeth O’Connor, Ph.D., Christopher S. Lee, Ph.D., R.N., Marika Booth, M.S., Benjamin W. Vandermeer, M.Sc., Jonathan M. Snowden, Ph.D., Kristen E. D’Anci, Ph.D., Rongwei Fu, Ph.D., Gerald Gartlehner, M.D., M.P.H., Zhen Wang, Ph.D., and Dale W. Steele, M.D., M.S.


Published: February 23, 2018.

Quantitative synthesis, or meta-analysis, is often essential for Comparative Effectiveness Reviews (CERs) to provide scientifically rigorous summary information. Quantitative synthesis should be conducted in a transparent and consistent way with methodologies reported explicitly. This guide provides practical recommendations on conducting synthesis. The guide is not meant to be a textbook on meta-analysis nor is it a comprehensive review of methods, but rather it is intended to provide a consistent approach for situations and decisions that are commonly faced by AHRQ Evidence-based Practice Centers (EPCs). The goal is to describe choices as explicitly as possible, and in the context of EPC requirements, with an appropriate degree of confidence.

This guide addresses issues in the order that they are usually encountered in a synthesis, though we acknowledge that the process is not always linear. We first consider the decision of whether or not to combine studies quantitatively. The next chapter addresses how to extract and utilize data from individual studies to construct effect sizes, followed by a chapter on statistical model choice. The fourth chapter considers quantifying and exploring heterogeneity. The fifth describes an indirect evidence technique that has not been included in previous guidance – network meta-analysis, also known as mixed treatment comparisons. The final section in the report lays out future research suggestions.
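As a rough illustration of three of the steps listed above (constructing effect sizes, choosing a statistical model, and quantifying heterogeneity), the sketch below works through one example. It computes a log odds ratio and its standard error from 2×2 counts, then Cochran's Q, I² and a DerSimonian-Laird random-effects estimate. This is not the guide's own code and not its recommended settings. DerSimonian-Laird is one widely used estimator among several and can understate uncertainty when studies are few. The counts are hypothetical, and zero cells (which would need a continuity correction) are simply rejected.

```python
import math


def log_odds_ratio(events_t, n_t, events_c, n_c):
    """Log odds ratio and its standard error from a 2x2 table (no zero cells)."""
    a, b = events_t, n_t - events_t
    c, d = events_c, n_c - events_c
    if min(a, b, c, d) == 0:
        raise ValueError("zero cell: a continuity correction would be needed")
    log_or = math.log((a * d) / (b * c))
    se = math.sqrt(1 / a + 1 / b + 1 / c + 1 / d)
    return log_or, se


def heterogeneity_and_random_effects(estimates, std_errors):
    """Cochran's Q, I^2 and a DerSimonian-Laird random-effects pooled estimate."""
    w = [1 / se**2 for se in std_errors]  # inverse-variance (fixed-effect) weights
    fixed = sum(wi * yi for wi, yi in zip(w, estimates)) / sum(w)
    q = sum(wi * (yi - fixed) ** 2 for wi, yi in zip(w, estimates))
    df = len(estimates) - 1
    i2 = max(0.0, (q - df) / q) if q > 0 else 0.0
    c = sum(w) - sum(wi**2 for wi in w) / sum(w)
    tau2 = max(0.0, (q - df) / c) if c > 0 else 0.0  # between-study variance
    w_re = [1 / (se**2 + tau2) for se in std_errors]  # random-effects weights
    pooled = sum(wi * yi for wi, yi in zip(w_re, estimates)) / sum(w_re)
    return {"Q": q, "I2": i2, "tau2": tau2,
            "pooled": pooled, "pooled_se": math.sqrt(1 / sum(w_re))}


# Hypothetical 2x2 counts: (events, total) in the treatment and control arms
tables = [(12, 100, 20, 100), (8, 80, 15, 82), (30, 150, 28, 148)]
effects = [log_odds_ratio(*t) for t in tables]
result = heterogeneity_and_random_effects([e for e, _ in effects], [s for _, s in effects])
print({k: round(v, 3) for k, v in result.items()})
print("pooled OR:", round(math.exp(result["pooled"]), 2))
```

Working on the log scale keeps the odds ratio's sampling distribution approximately normal. The pooled value is exponentiated back to an odds ratio only for reporting.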

The Agency for Healthcare Research and Quality (AHRQ), through its Evidence-based Practice Centers (EPCs), sponsors the development of evidence reports and technology assessments to assist public- and private-sector organizations in their efforts to improve the quality of health care in the United States. The reports and assessments provide organizations with comprehensive, science-based information on common, costly medical conditions and new health care technologies and strategies. The EPCs systematically review the relevant scientific literature on topics assigned to them by AHRQ and conduct additional analyses when appropriate prior to developing their reports and assessments.

Strong methodological approaches to systematic review improve the transparency, consistency, and scientific rigor of these reports. Through a collaborative effort, the Effective Health Care (EHC) Program of the Agency for Healthcare Research and Quality (AHRQ), the EHC Program Scientific Resource Center, and the AHRQ Evidence-based Practice Centers have developed a Methods Guide for Comparative Effectiveness Reviews. This Guide presents issues key to the development of systematic reviews and describes recommended approaches for addressing difficult, frequently encountered methodological issues.

The Methods Guide for Comparative Effectiveness Reviews is a living document, and will be updated as further empiric evidence develops and our understanding of better methods improves. We welcome comments on this Methods Guide paper. They may be sent by mail to the Task Order Officer named below at: Agency for Healthcare Research and Quality, 5600 Fishers Lane, Rockville, MD 20857, or by email to epc@ahrq.hhs.gov.

- Gopal Khanna, M.B.A., Director, Agency for Healthcare Research and Quality

- Arlene S. Bierman, M.D., M.S., Director, Center for Evidence and Practice Improvement, Agency for Healthcare Research and Quality

- Stephanie Chang, M.D., M.P.H., Director, Evidence-based Practice Center Program, Center for Evidence and Practice Improvement, Agency for Healthcare Research and Quality

- Elisabeth Kato, M.D., M.R.P., Task Order Officer, Evidence-based Practice Center Program, Center for Evidence and Practice Improvement, Agency for Healthcare Research and Quality

Peer Reviewers

Prior to publication of the final evidence report, EPCs sought input from independent Peer Reviewers without financial conflicts of interest. However, the conclusions and synthesis of the scientific literature presented in this report do not necessarily represent the views of individual investigators.

Peer Reviewers must disclose any financial conflicts of interest greater than $10,000 and any other relevant business or professional conflicts of interest. Because of their unique clinical or content expertise, individuals with potential non-financial conflicts may be retained. The Task Order Officer (TOO) and the EPC work to balance, manage, or mitigate any potential non-financial conflicts of interest identified.

- Eric Bass, M.D., M.P.H., Director, Johns Hopkins University Evidence-based Practice Center; Professor of Medicine, and Health Policy and Management, Johns Hopkins University, Baltimore, MD

- Mary Butler, M.B.A., Ph.D., Co-Director, Minnesota Evidence-based Practice Center; Assistant Professor, Health Policy & Management, University of Minnesota, Minneapolis, MN

- Roger Chou, M.D., FACP, Director, Pacific Northwest Evidence-based Practice Center, Portland, OR

- Lisa Hartling, M.S., Ph.D., Director, University of Alberta Evidence-based Practice Center, Edmonton, AB

- Susanne Hempel, Ph.D., Co-Director, Southern California Evidence-based Practice Center; Professor, Pardee RAND Graduate School; Senior Behavioral Scientist, RAND Corporation, Santa Monica, CA

- Robert L. Kane, M.D.*, Co-Director, Minnesota Evidence-based Practice Center, School of Public Health, University of Minnesota, Minneapolis, MN

- Jennifer Lin, M.D., M.C.R., Director, Kaiser Permanente Research Affiliates Evidence-based Practice Center; Investigator, The Center for Health Research, Kaiser Permanente Northwest, Portland, OR

- Christopher Schmid, Ph.D., Co-Director, Center for Evidence Synthesis in Health; Professor of Biostatistics, School of Public Health, Brown University, Providence, RI

- Karen Schoelles, M.D., S.M., FACP, Director, ECRI Evidence-based Practice Center, Plymouth Meeting, PA

- Tibor Schuster, Ph.D., Assistant Professor, Department of Family Medicine, McGill University, Montreal, QC

- Jonathan R. Treadwell, Ph.D., Associate Director, ECRI Institute Evidence-based Practice Center, Plymouth Meeting, PA

- Tom Trikalinos, M.D., Director, Brown Evidence-based Practice Center; Director, Center for Evidence-based Medicine; Associate Professor, Health Services, Policy & Practice, Brown University, Providence, RI

- Meera Viswanathan, Ph.D., Director, RTI-UNC Evidence-based Practice Center, Durham, NC; RTI International, Durham, NC

- C. Michael White, Pharm.D., FCP, FCCP, Professor and Head, Pharmacy Practice, School of Pharmacy, University of Connecticut, Storrs, CT

- Tim Wilt, M.D., M.P.H., Co-Director, Minnesota Evidence-based Practice Center; Director, Minneapolis VA-Evidence Synthesis Program; Professor of Medicine, University of Minnesota; Staff Physician, Minneapolis VA Health Care System, Minneapolis, MN

* Deceased March 6, 2017

Introduction

The purpose of this document is to consolidate and update quantitative synthesis guidance provided in three previous methods guides. 1 – 3 We focus primarily on comparative effectiveness reviews (CERs), which are systematic reviews that compare the effectiveness and harms of alternative clinical options, and aim to help clinicians, policy makers, and patients make informed treatment choices. We focus on interventional studies and do not address diagnostic studies, individual patient level analysis, or observational studies, which are addressed elsewhere. 4

Quantitative synthesis, or meta-analysis, is often essential for CERs to provide scientifically rigorous summary information. Quantitative synthesis should be conducted in a transparent and consistent way with methodologies reported explicitly. This guide provides practical recommendations on conducting synthesis. The guide is not meant to be a textbook on meta-analysis nor is it a comprehensive review of methods, but rather it is intended to provide a consistent approach for situations and decisions that are commonly faced by Evidence-based Practice Centers (EPCs). The goal is to describe choices as explicitly as possible and in the context of EPC requirements, with an appropriate degree of confidence.

EPC investigators are encouraged to follow these recommendations but may choose to use alternative methods if deemed necessary after discussion with their AHRQ project officer. If alternative methods are used, investigators are required to provide a rationale for their choices, and if appropriate, to state the strengths and limitations of the chosen methods in order to promote consistency, transparency, and learning. In addition, several steps in meta-analysis require subjective judgment, such as when combining studies or incorporating indirect evidence. For each subjective decision, investigators should fully explain how the decision was reached.

This guide was developed by a workgroup composed of members from across the EPCs, as well as from the Scientific Resource Center (SRC) of the AHRQ Effective Health Care Program. Through surveys and discussions among AHRQ, Directors of EPCs, the Scientific Resource Center, and the Methods Steering Committee, quantitative synthesis was identified as a high-priority methods topic, and a need was identified to update the original guidance. 1 , 5 Once the topic was confirmed for a Methods Workgroup, the SRC solicited EPC workgroup volunteers, particularly those with quantitative methods expertise, including statisticians, librarians, thought leaders, and methodologists. Charged by AHRQ to update current guidance, the workgroup consisted of members from eight of 13 EPCs, the SRC, and AHRQ, and commenced in the fall of 2015. We held regular workgroup teleconference calls over the course of 14 months to discuss project direction and scope, assign and coordinate tasks, collect and analyze data, and discuss and edit draft documents. After constructing a draft table of contents, we surveyed all EPCs to ensure no topics of interest were missing.

The initial teleconference meeting was used to outline the draft, discuss the timeline, and agree upon a method for reaching consensus, as described below. The larger workgroup was then split into subgroups, each taking responsibility for a different chapter. The larger group participated in biweekly discussions via teleconference and email. Subgroups communicated separately (in addition to the larger meetings) to coordinate tasks, discuss the literature review results, and draft their respective chapters. Later, chapter drafts were combined into a larger document for workgroup review and discussion on the biweekly calls.

Literature Search and Review

A medical research librarian worked with each subgroup to identify a relevant search strategy for each chapter and then combined these strategies into one overall search covering all chapters. The librarian conducted the search on the AHRQ SRC Methods Library, a bibliographic database curated by the SRC that currently contains more than 16,000 citations of methodological works for systematic reviews and comparative effectiveness reviews. Descriptor and keyword strategies were used to identify publications on quantitative synthesis methods (descriptor search = all quantitative synthesis descriptors; keyword search = quantitative synthesis, meta-anal*, metaanal*, meta-regression in [anywhere field]). Search results were limited to English-language publications from 2009 and later, to capture citations published since AHRQ’s previous methods guidance on quantitative synthesis. Additional articles were identified from recent systematic reviews, reference lists of reviews and editorials, and through the expert review process.

The search yielded 1,358 titles and abstracts, which were reviewed by all workgroup members using ABSTRACKR software (available at http://abstrackr.cebm.brown.edu). Each subgroup separately identified articles relevant to its own chapter. Abstracts were screened by a single reviewer, and investigators included anything that could be potentially relevant. Each subgroup decided separately on final inclusion or exclusion based on full-text articles.

Consensus and Recommendations

Reaching consensus, where possible, is of great importance for AHRQ methods guidance. The workgroup recognized this in its first meeting and agreed on a process for informal consensus and conflict resolution. Disagreements were thoroughly discussed and, where possible, consensus was reached. Where consensus was not reached, the analytic options are discussed in the text. We did not employ a formal voting procedure to assess consensus.

A summary of the workgroup’s key conclusions and recommendations was circulated for comment to EPC Directors and AHRQ officers at a biannual EPC Directors’ meeting in October 2016. In addition, a full draft was circulated to EPC Directors and AHRQ officers prior to peer review, and the manuscript was made available for public review. All comments were considered by the team in the final preparation of this report.

Chapter 1. Decision to Combine Trials

1.1. Goals of the Meta-analysis

Meta-analysis is a statistical method for synthesizing (also called combining or pooling) the benefits and/or harms of a treatment or intervention across multiple studies. The overarching goal of a meta-analysis is generally to provide the best estimate of the effect of an intervention. As part of that aspirational goal, results of a meta-analysis may inform a number of related questions, such as whether that best estimate represents something other than a null effect (is this intervention beneficial?), the range in which the true effect likely lies, whether it is appropriate to provide a single best estimate, and what study-level characteristics may influence the effect estimate. Before tackling these questions, it is necessary to answer a preliminary but fundamental question: Is it appropriate to pool the results of the identified studies? 6
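To make the idea of pooling concrete, a minimal sketch of the simplest approach, an inverse-variance fixed-effect (common-effect) estimate, is shown below. It assumes each study contributes an effect estimate on an additive scale (for example, a log odds ratio or a mean difference) together with its sampling variance. The function name is hypothetical, and the choice between fixed- and random-effects models is a separate decision addressed in the chapter on statistical model choice.

```python
import math

def fixed_effect_pool(effects, variances):
    """Inverse-variance fixed-effect pooled estimate with a 95% CI.

    effects   -- per-study estimates on an additive scale (e.g., log ORs)
    variances -- per-study sampling variances (squared standard errors)
    """
    if not effects or len(effects) != len(variances):
        raise ValueError("need one variance per effect estimate")
    weights = [1.0 / v for v in variances]            # w_i = 1 / var_i
    pooled = sum(w * y for w, y in zip(weights, effects)) / sum(weights)
    se = math.sqrt(1.0 / sum(weights))                # SE of the pooled estimate
    z = 1.959963984540054                             # 97.5th normal percentile
    return pooled, (pooled - z * se, pooled + z * se)
```

For example, two studies with log odds ratios of 0.2 and 0.4 and equal variances of 0.04 pool to 0.3, because equal variances give the studies equal weight.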

Clinical, methodological, and statistical factors must all be considered when deciding whether to combine studies in a meta-analysis. Figure 1.1 depicts a decision tree to help investigators think through these important considerations, which are discussed below.

Figure 1.1. Pooling decision tree.

1.2. Clinical and Methodological Heterogeneity

Studies must be reasonably similar to be pooled in a meta-analysis. 1 Even when the review protocol identifies a coherent and fairly narrow body of literature, the actual included studies may represent a wide range of population, intervention, and study characteristics. Variations in these factors are referred to as clinical heterogeneity and methodological heterogeneity. 7 , 8 A third form of heterogeneity, statistical heterogeneity, will be discussed later.

The first step in the decision tree is to explore the clinical and methodological heterogeneity of the included studies (Step A, Figure 1.1). The goal is to identify groups of trials that are similar enough that an average effect would make a sensible summary. There is no objective measure or universally accepted standard for deciding whether studies are “similar enough” to pool; this decision is inherently a matter of judgment. 6 Verbeek and colleagues suggest working through key sources of variability in sequence, beginning with the clinical variables of intervention/exposure, control condition, and participants, before moving on to methodological areas such as study design, outcome, and follow-up time. When there is important variability in these areas, investigators should consider whether there are coherent subgroups of trials, rather than the full group, that can be pooled. 6

Clinical heterogeneity refers to characteristics related to the participants, interventions, types of outcomes, and study setting. Some have suggested that pooling may be acceptable when it is plausible that the underlying effects could be similar across subpopulations and variations in interventions and outcomes. 9 For example, in a review of a lipid-lowering medication, researchers might be comfortable combining studies that target younger and middle-aged adults, but expect different effects with older adults, who have high rates of comorbidities and other medication use. Others suggest that it may be acceptable to combine interventions with likely similar mechanisms of action. 6 For example, a researcher may combine studies of depression interventions that use a range of psychotherapeutic approaches, on the logic that they all aim to change a person’s thinking and behavior in order to improve mood, but not want to combine them with trials of antidepressants, whose mechanism of action is presumed to be biochemical.

Methodological heterogeneity refers to variations in study methods (e.g., study design, measures, and study conduct). A common question regarding study design is whether it is acceptable to combine studies that randomize individual participants with those that randomize clusters (e.g., when clinics, clinicians, or classrooms are randomized and individuals are nested within these units). We believe this is generally acceptable, with appropriate adjustment for cluster randomization as needed. 10 However, closer examination may show that the cluster-randomized trials also tend to differ systematically from the individually randomized trials in population or intervention characteristics. If so, subgroup analyses may be considered.
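One common form of adjustment for cluster randomization, described in the Cochrane Handbook, shrinks each arm to an effective sample size by dividing by a design effect. The sketch below assumes the average cluster size and the intracluster correlation coefficient (ICC) are known or borrowed from external sources; the helper name is hypothetical, and other adjustment approaches exist.

```python
def effective_sample_size(n, mean_cluster_size, icc):
    """Approximate effective sample size of one arm of a cluster-randomized trial.

    Uses the design-effect approximation DE = 1 + (m - 1) * ICC, where m is
    the average cluster size; the ICC is an assumed input, often not reported.
    """
    if mean_cluster_size < 1 or not 0 <= icc <= 1:
        raise ValueError("cluster size must be >= 1 and ICC in [0, 1]")
    design_effect = 1 + (mean_cluster_size - 1) * icc
    return n / design_effect
```

For instance, an arm of 500 participants in clinics of 25 with an assumed ICC of 0.02 has a design effect of 1.48 and behaves roughly like 338 individually randomized participants. For a binary outcome, the same design effect is typically applied to both the event count and the sample size.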

Outcome measures are a common source of methodological heterogeneity. First, trials may have a wide array of specific instruments and cut-points for a common outcome. For example, a review considering pooling the binary outcome of depression prevalence may find measures that range from a depression diagnosis based on a clinical interview to scores above a cut-point on a screening instrument. One guiding principle is to consider pooling only when it is plausible that the underlying relative effects are consistent across specific definitions of an outcome. In addition, investigators should take steps to harmonize outcomes to the extent possible.

Second, there is also typically substantial variability in the statistics reported across studies (e.g., odds ratios, relative risks, hazard ratios, baseline and mean followup scores, change scores for each condition, between-group differences at followup). Methods to calculate or estimate missing statistics are available; 5 however, investigators must ultimately weigh the tradeoff between potentially less accurate results (due to the assumptions required to estimate missing data) and the potential advantage of pooling a more complete set of studies. If a substantial proportion of the studies require calculations that involve assumptions or estimates (rather than straightforward calculations) in order to be combined, then it may be preferable to show results in a table or forest plot without a pooled estimate.
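As one illustration of the kind of calculation meant here, a commonly used conversion recovers a missing standard deviation from a reported 95% confidence interval of a group mean. The sketch below is a hypothetical helper rather than a method prescribed by this guide, and it relies on the normal-approximation assumption stated in its comments.

```python
import math

def sd_from_ci(lower, upper, n, z=1.959963984540054):
    """Recover a standard deviation from a reported 95% CI of a group mean.

    Assumes the CI was computed as mean +/- z * SD / sqrt(n) with a normal
    multiplier; for small groups a t-distribution multiplier is more apt.
    """
    if upper <= lower or n < 2:
        raise ValueError("need upper > lower and n >= 2")
    se = (upper - lower) / (2 * z)   # half-width divided by z gives the SE
    return se * math.sqrt(n)         # SD = SE * sqrt(n)
```

Under these assumptions, a mean with a 95% CI of 10 to 14 from 100 participants implies an SD of roughly 10.2.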

1.3. Best Evidence Versus All Evidence

Sometimes the body of evidence comprises a single trial or small number of trials that clearly represent the best evidence, along with a number of additional trials that are much smaller or have other important limitations (Step B, Figure 1.1). The “best evidence” trials are generally very large trials with low risk of bias and good generalizability to the population of interest. In this case, it may be appropriate to focus on the one or few “best” trials rather than combining them with the rest of the evidence, particularly when addressing rare events that small studies are underpowered to examine. 11 , 12 For example, an evidence base of one large, multi-center trial of an intervention to prevent stroke in patients with heart disease could be preferable to a pooled analysis of 4-5 small trials reporting few events, and combining the small trials with the large trial may introduce unnecessary uncertainty to the pooled estimate.

1.4. Assessing the Risk of Misleading Meta-analysis Results

Next, investigators should explore the risk that the meta-analysis will show results that do not accurately capture the true underlying effect (Step C, Figure 1.1). Tables, forest plots (without pooling), and some other preliminary statistical tests are useful tools for this stage. Several patterns can arise that should lead investigators to be cautious about combining studies.

Wide-Ranging Effect Sizes

Sometimes one study may show a large benefit and another study of the same intervention may show a small benefit. This may be due to random error, especially when the studies are small. However, this situation also raises the possibility that observed effects truly are widely variable in different subpopulations or situations. Another look at the population characteristics is warranted in this situation to see if the investigators can identify characteristics that are correlated with effect size and direction, potentially explaining clinical heterogeneity.

Even if no characteristic can be identified that explains why the intervention had such widely disparate effects, there could be unmeasured features that explain the difference. If the intervention really does have widely variable impact in different subpopulations, particularly if it is benefiting some patients and harming others, it would be misleading to report a single average effect.

Suspicion of Publication or Reporting Bias

Sometimes, because of a lack of effect, trial results are never published (risking publication bias) or are published only in part (risking reporting bias). These missing results can introduce bias and reduce the precision of the meta-analysis. 13 Investigators can explore the risk of reporting bias by comparing trials that do and do not report important outcomes to assess whether outcomes appear to be missing at random. 13 For example, investigators may have 30 trials of weight loss interventions, with only 10 reporting blood pressure, which is considered an important outcome for the review. This pattern of results may indicate reporting bias if trials finding group differences in blood pressure were more likely to report blood pressure findings. On the other hand, perhaps most of the studies limited to patients with elevated cardiovascular disease (CVD) risk factors did report blood pressure. In this case, the investigators may decide to combine the studies reporting blood pressure that were conducted in high CVD risk populations; however, they should be clear about the applicable subpopulation. An examination of the clinical and methodological features of the subset of trials that reported blood pressure is necessary to make an informed judgement about whether to conduct a meta-analysis.

Small Studies Effect

If small studies show larger effects than large studies, the pooled results may overestimate the true effect size, possibly because of publication or reporting bias. 14 When investigators have at least 10 trials to combine, they should examine small-study effects using standard statistical tests such as the Egger test. 15 If there appears to be a small-study effect, the investigators may decide not to report pooled results, since they could be misleading. On the other hand, small-study effects can also arise for other reasons, such as differences in sample characteristics, attrition, or assessment methods. These factors do not suggest bias but should be explored to the extent possible. See Chapter 4 for more information about exploring heterogeneity.
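For readers less familiar with the Egger test, the sketch below shows its core calculation under the usual formulation: the standardized effect (effect divided by its standard error) is regressed on precision (the inverse of the standard error), and an intercept far from zero suggests funnel-plot asymmetry. The function is a hypothetical plain-Python illustration; it returns a t statistic rather than a p-value, and the threshold of at least 10 trials noted above still applies.

```python
import math

def egger_test(effects, std_errors):
    """Egger's regression test for funnel-plot asymmetry (small-study effects).

    Regresses effect / SE on 1 / SE by ordinary least squares and returns the
    intercept, its standard error, and the t statistic (k - 2 degrees of freedom).
    """
    k = len(effects)
    if k != len(std_errors) or k < 3:
        raise ValueError("need matching inputs from at least 3 studies")
    x = [1.0 / s for s in std_errors]                  # precision
    y = [e / s for e, s in zip(effects, std_errors)]   # standardized effect
    x_bar, y_bar = sum(x) / k, sum(y) / k
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    if sxx == 0:
        raise ValueError("all standard errors are equal; slope is undefined")
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    residual_ss = sum((yi - intercept - slope * xi) ** 2 for xi, yi in zip(x, y))
    sigma2 = residual_ss / (k - 2)                     # residual variance
    se_intercept = math.sqrt(sigma2 * (1.0 / k + x_bar ** 2 / sxx))
    if se_intercept == 0:
        raise ValueError("perfect fit; t statistic is undefined")
    return intercept, se_intercept, intercept / se_intercept
```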

1.5. Special Considerations When Pooling a Small Number of Studies

When pooling a small number of studies (e.g., <10 studies), a number of considerations arise (Step E, Figure 1.1):

Rare Outcomes

Meta-analyses of rare binary outcomes are frequently underpowered, and tend to overestimate the true effect size, so pooling should be undertaken with caution. 11 A small difference in absolute numbers of events can result in large relative differences, usually with low precision (i.e., wide confidence intervals). This could result in misleading effect estimates if the analysis is limited to trials that are underpowered for the rare outcomes. 12 One example is all-cause mortality, which is frequently provided as part of the participant flow results, but may not be a primary outcome, may not have adjudication methods described, and typically occurs very rarely. Studies are often underpowered to detect differences in mortality if it is not a primary outcome. Investigators should consider calculating an optimal information size (OIS) when events are rare to see if the combined group of studies has sufficient power to detect group differences. This could be a concern even for a relatively large number of studies, if the total sample size is not very large. 16 See Chapter 3 for more detail on handling rare binary outcomes.
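One common way to approximate the optimal information size is as the total sample size a single adequately powered trial would need. A minimal sketch for a binary outcome, using the standard two-proportion sample size formula, is below; the control event rate, the effect worth detecting, α, and power are all assumptions investigators must supply, and the function name is hypothetical.

```python
import math
from statistics import NormalDist

def optimal_information_size(p_control, p_treatment, alpha=0.05, power=0.80):
    """Total sample size (both arms) needed to detect a difference between
    two event proportions, used here as a rough optimal information size."""
    if not (0 < p_control < 1 and 0 < p_treatment < 1) or p_control == p_treatment:
        raise ValueError("proportions must lie in (0, 1) and differ")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)   # two-sided type I error
    z_beta = NormalDist().inv_cdf(power)            # power = 1 - type II error
    variance_sum = p_control * (1 - p_control) + p_treatment * (1 - p_treatment)
    n_per_arm = (z_alpha + z_beta) ** 2 * variance_sum / (p_control - p_treatment) ** 2
    return 2 * math.ceil(n_per_arm)
```

With a 10% control event rate and a hypothesized reduction to 5%, the sketch returns 864 participants in total (432 per arm); if the pooled studies together fall well short of such a figure, imprecision is a concern.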

Small Sample Sizes

When pooling a relatively small number of studies, pooling should be undertaken with caution if the body of evidence is limited only to small studies. Results from small trials are less likely to be reliable than results of large trials, even when the risk of bias is low. 17 First, in small trials it is difficult to balance the proportion of patients in potentially important subgroups across interventions, and a difference between interventions of just a few patients in a subgroup can result in a large proportional difference between interventions. Characteristics that are rare are particularly at risk of being unbalanced in trials with small samples. In such situations there is no way to know if trial effects are due to the intervention or to differences in the intervention groups. In addition, patients are generally drawn from a narrower geographic range in small trials, making replication in other trials more uncertain. Finally, although it is not always the case, large trials are more likely to involve a level of scrutiny and standardization to ensure lower risk of bias than are small trials. Therefore, when the trials have small sample sizes, pooled effects are less likely to reflect the true effects of the intervention. In this case, the required or optimal information size can help the investigators determine whether the sample size is sufficient to conclude that results are likely to be stable and not due to random heterogeneity (i.e., truly significant or truly null results; not a type I or type II error). 16 , 18 An option in this case would be to pool the studies and acknowledge imprecision or other limitations when rating the strength of evidence.

What counts as a “small” trial varies across fields and outcomes. For an outcome that occurs in only 10% of the population, a small trial might have 100 to 200 participants per intervention arm, whereas for a continuous quality of life measure, a trial with 20 to 30 per arm may be small. Looking carefully at what the studies were powered to detect, and at the credibility of the power calculations, may help determine what constitutes a “small” trial. Investigators should also consider how variable the impact of an intervention may be across different settings and subpopulations when determining how to weigh the importance of small studies. For example, the effects of a counseling intervention that relies on patients changing their behavior in order to reap health benefits may be more strongly influenced by characteristics of the patients and setting than the effects of a mechanical or chemical agent.

When the number of trials to be pooled is small, there is a heightened risk that statistical heterogeneity will be substantially underestimated, resulting in 95% confidence intervals that are inappropriately narrow and do not have 95% coverage. This is especially concerning when the number of studies being pooled is fewer than five to seven. 19 – 21

Accounting for these factors should guide an evaluation of whether it is advisable to pool the relatively small group of studies. As with many steps in the multi-stage decision to pool, the conclusion that a given investigator arrives at is subjective, although such evaluations should be guided by the criteria above. If consideration of these factors reassures investigators that the risk of bias associated with pooling is sufficiently low, then pooling can proceed. The next step of pooling, whether for a small, moderate, or large body of studies, is to consider statistical heterogeneity.

1.6. Statistical Heterogeneity

Once clinical and methodological heterogeneity and the other factors described above have been deemed acceptable for pooling, investigators should next consider statistical heterogeneity (Step F, Figure 1.1). We discuss statistical heterogeneity in general in this chapter and provide a deeper methodological discussion in Chapter 4. This initial consideration of statistical heterogeneity is accomplished by conducting a preliminary meta-analysis. Next, the investigators must decide whether the results of the meta-analysis are valid and should be presented, rather than simply showing tables or forest plots without pooled results. If statistical heterogeneity is very high, the investigators may question whether an “average” effect is really meaningful or useful. If there is a reasonably large number of trials, the investigators may shift to exploring effect modification in the presence of high heterogeneity; however, this may not be possible if few trials are available. While many would likely agree that pooling (or reporting pooled results) should be avoided when there are few studies and statistical heterogeneity is high, what constitutes “few” studies and “high” heterogeneity is a matter of judgment.

While there are a variety of methods for characterizing statistical heterogeneity, one common method is the I² statistic, the proportion of total variation across the pooled trials that is due to between-study variance rather than random (sampling) variation. 22 The Cochrane Handbook 10 proposes ranges for interpreting I²: statistical heterogeneity associated with I² values of 0-40% might not be important, 30-60% may represent moderate heterogeneity, 50-90% may represent substantial heterogeneity, and 75-100% represents considerable heterogeneity. The ranges overlap to reflect that other factors, such as the number and size of the trials and the magnitude and direction of the effect, must be taken into consideration. Other measures of statistical heterogeneity include Cochran’s Q and τ², but these statistics do not have intrinsic standardized scales that allow specific values to be characterized as “small,” “medium,” or “large” in any meaningful way. 23 However, τ² can be interpreted on the scale of the pooled effect, as the variance of the true effects across studies. All these measures are discussed in more detail in Chapter 4.

Although widely used in quantitative synthesis, the I² statistic has come under criticism in recent years. One important issue with I² is that it can be an inaccurate reflection of statistical heterogeneity when there are few studies to pool and high statistical heterogeneity. 24 , 25 For example, in random effects models (but not fixed effects models), calculations demonstrate that I² tends to underestimate true statistical heterogeneity when there are fewer than about 10 studies and I² is 50% or more. 26 In addition, I² is correlated with the sample size of the included studies, generally increasing with larger samples. 27 Complicating this, meta-analyses of continuous measures tend to have higher heterogeneity than those of binary outcomes, and I² tends to increase as the number of studies increases when analyzing continuous outcomes, but not binary outcomes. 28 , 29 This has prompted some authors to suggest that different standards may be considered for interpreting I² in meta-analyses of continuous and binary outcomes, but I² should be considered reliable only when there is a sufficient number of studies. 29 Unfortunately, there is no clear consensus regarding what constitutes a sufficient number of studies for a given amount of statistical heterogeneity, nor is it possible to be entirely prescriptive, given the limits of I² as a measure of heterogeneity. Thus, I² is one piece of information that should be considered, but it generally should not be the primary deciding factor in whether to pool.
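The heterogeneity measures discussed in this section can be computed together from study effect estimates and their variances. The sketch below is one minimal implementation, assuming the common DerSimonian-Laird moment estimator for τ²; other τ² estimators exist and may be preferred, and the function name is hypothetical.

```python
def heterogeneity(effects, variances):
    """Cochran's Q, I^2 (as a proportion), and the DerSimonian-Laird tau^2."""
    k = len(effects)
    if k != len(variances) or k < 2:
        raise ValueError("need matching inputs from at least 2 studies")
    w = [1.0 / v for v in variances]                          # inverse-variance weights
    pooled_fe = sum(wi * yi for wi, yi in zip(w, effects)) / sum(w)
    q = sum(wi * (yi - pooled_fe) ** 2 for wi, yi in zip(w, effects))
    df = k - 1
    i2 = max(0.0, (q - df) / q) if q > 0 else 0.0            # share of variation beyond chance
    c = sum(w) - sum(wi ** 2 for wi in w) / sum(w)
    tau2 = max(0.0, (q - df) / c)                             # between-study variance
    return q, i2, tau2
```

For three studies with effects of 0.1, 0.5, and 0.9 and equal variances of 0.04, it returns Q = 8, I² = 0.75, and τ² = 0.12. An I² of 75% falls in the “considerable” range, but with only three studies it is exactly the kind of value the criticisms above suggest treating with caution.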

1.7. Conclusion

In the end, the decision to pool comes down to one question: will the results of a meta-analysis help you find a scientifically valid answer to a meaningful question? That is, will the meta-analysis provide something beyond what can be understood from looking at the studies individually? Further, do the clinical, methodological, and statistical features of the body of studies permit them to be quantitatively combined and summarized in a valid fashion? Each of these decisions can be broken down into specific considerations (outlined in Figure 1.1). There is broad guidance to inform investigators in making each of these decisions, but generally the choices involved are subjective. The investigators’ scientific goal might factor into the evaluation of these considerations: for example, if investigators seek a general summary of the combined effect (e.g., direction only) rather than an estimated effect size, the question of whether to pool may be weighed differently. Ultimately, to provide a meaningful result, the trials must be similar enough in content, procedures, and implementation to represent a cohesive group that is relevant to real practice and decision-making.

Recommendations

- Use Figure 1.1 when deciding whether to pool studies

Chapter 2. Optimizing Use of Effect Size Data

2.1. Introduction

The methods employed for meta-analysis will depend on the nature of the outcome data. The two most common data types encountered in trials are binary/dichotomous (e.g., dead or alive, patient admitted to hospital or not, treatment failure or success) and continuous (e.g., weight, systolic blood pressure). Some outcomes that are not strictly continuous (e.g., heart rate, counts of common events) are often treated as continuous for the purposes of meta-analysis, based on assumptions of normality and the expectation that statistical methods developed for normal distributions can be applied to other distributions (the central limit theorem). Continuous outcomes are also frequently analyzed as binary outcomes when there are clinically meaningful cut-points or thresholds (e.g., a patient’s systolic blood pressure may be classified as low or high depending on whether it is under or over 130 mmHg). While this type of dichotomization may be more clinically meaningful, it reduces statistical information, so investigators should provide their rationale for taking this approach.

Other less common data types that do not fit into either the binary or continuous categories include ordinal, categorical, rate, and time-to-event data. Meta-analyzing these types of data usually requires that the study authors report the relevant statistics (e.g., hazard ratio, proportional odds ratio, incidence rate ratio).

2.2. Nuances of Binary Effect Sizes

Data needed for binary effect size computation.

Under ideal circumstances, the minimal data necessary for computing effect sizes for binary data would be available in published trial documents or from original sources. Specifically, the risk difference (RD), relative risk (RR), and odds ratio (OR) can be computed when the number of events (technically, the number of cases in whom there was an event) and the sample sizes are known for the treatment and control groups. A schematic of one common approach to assembling binary data from trials for effect size computation is presented in Table 2.1. This approach will facilitate conversion to analysis using commercially available software such as Stata (College Station, TX) or Comprehensive Meta-Analysis (Englewood, NJ).

Assembling binary data for effect size computation.

In many instances, a single study (or subset of studies) to be included in the meta-analysis provides only one measure of association (an odds ratio, for example), and the sample sizes and event counts are not available. In that case, the meta-analytic effect size will be dictated by the available data. However, choosing the appropriate effect size is important for integrity and transparency, and every effort should be made to obtain all the data presented in Table 2.1. Note that CONSORT guidance requires that published trial reports include the number of events and sample sizes for both treatment and control groups. 30 PRISMA guidance also supports describing any processes for obtaining and confirming data from investigators, 31 a frequently required step.

When the original reports present the data only as an effect size, it is important to extract both the point estimate and its associated 95% confidence interval. Having raw event data available, as in Table 2.1, not only facilitates the computation of various effect sizes but also allows the application of either binomial (preferred) or normal likelihood approaches; 32 only the normal likelihood can be applied to summary statistics (e.g., an odds ratio and confidence interval in the primary study report).
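When a study reports only a ratio measure and its 95% confidence interval, the standard error on the natural-log scale can usually be recovered from the width of the interval. The minimal sketch below assumes the interval was computed symmetrically on the log scale with a normal (Wald-type) approximation, as is typical for reported ORs, RRs, and HRs. The function name `log_ratio_and_se` and the example values are hypothetical.

```python
import math
from statistics import NormalDist

# Two-sided 95% critical value of the standard normal (about 1.96)
Z_95 = NormalDist().inv_cdf(0.975)


def log_ratio_and_se(point, lower, upper, z=Z_95):
    """Recover ln(ratio) and its standard error from a reported ratio and 95% CI.

    Assumes the CI was built as exp(ln(point) +/- z * SE), i.e. it is
    symmetric on the log scale.
    """
    if not 0 < lower <= point <= upper:
        raise ValueError("expected 0 < lower <= point <= upper")
    log_point = math.log(point)
    # On the log scale the CI spans 2 * z standard errors
    se = (math.log(upper) - math.log(lower)) / (2 * z)
    return log_point, se


# Hypothetical report: OR = 0.75 (95% CI 0.60 to 0.94)
ln_or, se_ln_or = log_ratio_and_se(0.75, 0.60, 0.94)
print(f"ln OR = {ln_or:.4f}, SE = {se_ln_or:.4f}")
```

Because reported limits are rounded, it is worth checking that ln(point) lies close to the midpoint of ln(lower) and ln(upper). A large discrepancy may indicate that the interval was not computed on the log scale.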

Choosing Among Effect Size Options

One absolute measure and two relative measures are commonly used in meta-analyses involving binary data. The RD (an absolute measure) is a simple metric that is easily understood by clinicians, patients, and other stakeholders. The relative measures, RR or OR, are also used frequently. All three metrics should be considered additive, just on different scales. That is, RD is additive on a raw scale, RR on a log scale, and OR on a logit scale.

Risk Difference

The RD is easily understood by clinicians and patients alike and is therefore most useful to aid decision making. However, the RD tends to be less consistent across studies than relative measures of effect size (RR and OR). Hence, the RD may be a preferred measure in meta-analyses when events in the control groups are relatively common and occur at similar proportions across studies. When events are rare and/or event rates differ across studies, however, the RD is not the preferred effect size for meta-analysis, because combined estimates based on the RD in such instances have more conservative confidence intervals and lower statistical power. The RD and the other effect size metrics can be calculated from binary trial data using the labeling in Table 2.2.

Organizing binary data for effect size computation.

Equation Set 2.1. Risk Difference

- RD = risk difference

- V RD = variance of the risk difference

- SE RD = standard error of the risk difference

- LL RD = lower limit of the 95% confidence interval of the risk difference

- UL RD = upper limit of the 95% confidence interval of the risk difference
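The quantities listed above can be computed as in the following minimal sketch, which uses the standard large-sample (Wald) formulas. It assumes the Table 2.2 labeling is A and B for events and non-events in the treatment group, and C and D for events and non-events in the control group, with n1 = A + B and n2 = C + D. That labeling and the function name `risk_difference` are assumptions.

```python
import math
from statistics import NormalDist

Z_95 = NormalDist().inv_cdf(0.975)


def risk_difference(a, b, c, d, z=Z_95):
    """Risk difference (treatment minus control) with a Wald 95% CI.

    Assumed labeling: a/b = events/non-events (treatment),
    c/d = events/non-events (control).
    """
    n1, n2 = a + b, c + d
    rd = a / n1 - c / n2
    # Equivalent to p1(1 - p1)/n1 + p2(1 - p2)/n2
    v_rd = a * b / n1**3 + c * d / n2**3
    se_rd = math.sqrt(v_rd)
    return {
        "RD": rd,
        "V_RD": v_rd,
        "SE_RD": se_rd,
        "LL_RD": rd - z * se_rd,
        "UL_RD": rd + z * se_rd,
    }


# Hypothetical trial: 15/100 events on treatment, 30/100 on control
result = risk_difference(a=15, b=85, c=30, d=70)
for name, value in result.items():
    print(f"{name} = {value:.4f}")
```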

Number Needed To Treat Related to Risk Difference

- NNT = number needed to treat

When the RD is negative, the number needed to harm (NNH), that is, the number of patients who need to be treated for one additional patient to be harmed, is NNH = −1/RD.

The Wald method 34 is commonly used to calculate confidence intervals for the NNT. It is reasonably adequate for large samples and probabilities not close to 0 or 1; however, it can be less reliable for small samples, probabilities close to 0 or 1, or unbalanced trial designs. 35 An adjustment to the Wald method (i.e., adding pseudo-observations) helps mitigate concerns about its application in small samples, 36 but it does not address the method’s other limitations. The Wilson method of calculating confidence intervals for the NNT, described in detail by Newcombe, 37 has better coverage properties irrespective of sample size, is free of implausible results, and is argued to be easier to calculate than Wald confidence intervals. 35 Therefore, the Wilson method is preferable to the Wald method for calculating confidence intervals for the NNT. When considering using the NNT as the effect size in meta-analysis, see the commentary by Lesaffre and Pledger 38 on the superior performance of combined NNT on the RD scale as opposed to the NNT scale.
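The sketch below contrasts the two approaches described above for a single trial. It computes a Wald interval for the RD and Newcombe’s hybrid score interval, which is built from Wilson limits for each arm, and then inverts each interval to an NNT interval. It follows the text’s convention that a positive RD corresponds to benefit (NNT) and a negative RD to harm (NNH). The function names are hypothetical. The inversion only handles RD intervals that exclude zero, because an interval spanning zero maps to two disjoint NNT ranges.

```python
import math
from statistics import NormalDist

Z_95 = NormalDist().inv_cdf(0.975)


def wilson_interval(events, n, z=Z_95):
    """Wilson score interval for a single proportion."""
    p = events / n
    denom = 1 + z**2 / n
    centre = (p + z**2 / (2 * n)) / denom
    half = z * math.sqrt(p * (1 - p) / n + z**2 / (4 * n**2)) / denom
    return centre - half, centre + half


def rd_ci_wald(e1, n1, e2, n2, z=Z_95):
    """RD = p1 - p2 with a Wald interval."""
    p1, p2 = e1 / n1, e2 / n2
    rd = p1 - p2
    se = math.sqrt(p1 * (1 - p1) / n1 + p2 * (1 - p2) / n2)
    return rd, rd - z * se, rd + z * se


def rd_ci_newcombe(e1, n1, e2, n2, z=Z_95):
    """RD = p1 - p2 with Newcombe's hybrid interval from Wilson limits."""
    p1, p2 = e1 / n1, e2 / n2
    l1, u1 = wilson_interval(e1, n1, z)
    l2, u2 = wilson_interval(e2, n2, z)
    rd = p1 - p2
    lower = rd - math.sqrt((p1 - l1) ** 2 + (u2 - p2) ** 2)
    upper = rd + math.sqrt((u1 - p1) ** 2 + (p2 - l2) ** 2)
    return rd, lower, upper


def nnt_from_rd(rd, lower, upper):
    """Invert an RD and its CI to an NNT (RD > 0) or NNH (RD < 0).

    Returns None for the interval when the RD interval includes zero.
    """
    if rd == 0:
        raise ValueError("NNT is undefined when RD = 0")
    label = "NNT" if rd > 0 else "NNH"
    value = 1 / abs(rd)  # NNT = 1/RD, or NNH = -1/RD when RD < 0
    if lower > 0 or upper < 0:  # CI excludes zero
        ci = tuple(sorted((1 / abs(lower), 1 / abs(upper))))
    else:
        ci = None
    return label, value, ci


# Hypothetical trial: 30/100 successes on treatment vs 15/100 on control
for method in (rd_ci_wald, rd_ci_newcombe):
    rd, lo, hi = method(30, 100, 15, 100)
    print(method.__name__, nnt_from_rd(rd, lo, hi))
```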

Risk Ratio

It is important to note that the RR and OR are effectively equivalent for event rates below about 10%. In such cases, the RR is chosen over the OR simply for interpretability (an important consideration), not because of substantive differences. A potential drawback to using the RR rather than the OR (or RD) is that the RR of an event is not the reciprocal of the RR for the non-occurrence of that event (e.g., using survival as the outcome instead of death). In contrast, switching between the occurrence and non-occurrence of an event is reciprocal on the OR scale and only entails a change in the sign of the log OR. If switching between death and survival, for example, is central to the meta-analysis, then the RR is likely not the binary effect size metric of choice unless all raw data are available and re-computation is possible.
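The asymmetry between the RR and the OR can be checked numerically. In the hypothetical trial below (10/100 deaths on treatment, 20/100 on control), the RR for death is not the reciprocal of the RR for survival. The ORs for death and survival, by contrast, are reciprocals, and their logs differ only in sign. The helper names are illustrative.

```python
import math


def risk_ratio(e1, n1, e2, n2):
    """Risk in arm 1 divided by risk in arm 2."""
    return (e1 / n1) / (e2 / n2)


def odds_ratio(e1, n1, e2, n2):
    """Odds in arm 1 divided by odds in arm 2."""
    return (e1 / (n1 - e1)) / (e2 / (n2 - e2))


# Hypothetical trial: 10/100 deaths on treatment, 20/100 on control
n = 100
deaths_t, deaths_c = 10, 20
surv_t, surv_c = n - deaths_t, n - deaths_c

rr_death = risk_ratio(deaths_t, n, deaths_c, n)  # approximately 0.5
rr_surv = risk_ratio(surv_t, n, surv_c, n)       # approximately 1.125, not 1/0.5
or_death = odds_ratio(deaths_t, n, deaths_c, n)  # approximately 0.444
or_surv = odds_ratio(surv_t, n, surv_c, n)       # approximately 2.25 = 1/0.444

print("RR death:", rr_death, "RR survival:", rr_surv, "1/RR death:", 1 / rr_death)
print("OR death:", or_death, "OR survival:", or_surv, "1/OR death:", 1 / or_death)
# Log ORs have the same magnitude and opposite sign
print("ln OR death:", math.log(or_death), "ln OR survival:", math.log(or_surv))
```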

The RR can be calculated from binary data using the labeling in Table 2.2. Of particular note, the metrics of dispersion related to the RR are first computed in a natural log metric and then converted to the metric of RR.

Equation Set 2.2. Risk Ratio

- RR = risk ratio

- ln RR = natural log of the risk ratio

- V lnRR = variance of the natural log of the risk ratio

- SE lnRR = standard error of the natural log of the risk ratio

- LL lnRR = lower limit of the 95% confidence interval of the natural log of the risk ratio

- UL lnRR = upper limit of the 95% confidence interval of the natural log of the risk ratio

- LL RR = lower limit of the 95% confidence interval of the risk ratio

- UL RR = upper limit of the 95% confidence interval of the risk ratio
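A minimal sketch of the quantities listed in Equation Set 2.2 follows. It uses the common large-sample variance of ln RR, 1/A − 1/n1 + 1/C − 1/n2. It assumes the Table 2.2 labeling is A and B for events and non-events in the treatment group and C and D for the control group. It does not handle zero cells, which would require a continuity correction or another method. The function name is hypothetical.

```python
import math
from statistics import NormalDist

Z_95 = NormalDist().inv_cdf(0.975)


def risk_ratio(a, b, c, d, z=Z_95):
    """RR (treatment vs control) with a 95% CI computed on the log scale.

    Assumed labeling: a/b = events/non-events (treatment),
    c/d = events/non-events (control). Zero cells are not handled.
    """
    n1, n2 = a + b, c + d
    rr = (a / n1) / (c / n2)
    ln_rr = math.log(rr)
    v_ln_rr = 1 / a - 1 / n1 + 1 / c - 1 / n2
    se_ln_rr = math.sqrt(v_ln_rr)
    ll_ln_rr = ln_rr - z * se_ln_rr
    ul_ln_rr = ln_rr + z * se_ln_rr
    # Dispersion is computed on the log scale, then exponentiated back
    return {
        "RR": rr,
        "lnRR": ln_rr,
        "V_lnRR": v_ln_rr,
        "SE_lnRR": se_ln_rr,
        "LL_lnRR": ll_ln_rr,
        "UL_lnRR": ul_ln_rr,
        "LL_RR": math.exp(ll_ln_rr),
        "UL_RR": math.exp(ul_ln_rr),
    }


# Hypothetical trial: 15/100 events on treatment, 30/100 on control
result = risk_ratio(a=15, b=85, c=30, d=70)
for name, value in result.items():
    print(f"{name} = {value:.4f}")
```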

While the definition of the outcome event needs to be consistent among the included studies when using any measure, investigators should be particularly attentive to the definition of an outcome event when using an RR.

Odds Ratios

An alternative relative metric for use with binary data is the OR. Because ORs are frequently presented in models with covariates, it is important to note that the OR is ‘non-collapsible’: its magnitude can change depending on which covariates are adjusted for, even in the absence of confounding. This favors the reporting of RR over OR, particularly when outcomes are common and covariates are included. 39 The OR can be calculated from binary data using the labeling in Table 2.2. As with the RR, the metrics of dispersion related to the OR are first computed in a natural log metric and then converted to the metric of OR.

Equation Set 2.3. Odds ratios

- OR = odds ratio

- ln OR = natural log of the odds ratio

- V lnOR = variance of the natural log of the odds ratio

- SE lnOR = standard error of the natural log of the odds ratio

- LL lnOR = lower limit of the 95% confidence interval of the natural log of the odds ratio

- UL lnOR = upper limit of the 95% confidence interval of the natural log of the odds ratio

- LL OR = lower limit of the 95% confidence interval of the odds ratio

- UL OR = upper limit of the 95% confidence interval of the odds ratio
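The quantities in Equation Set 2.3 can be sketched in the same way, using the widely used Woolf (large-sample) variance of ln OR, 1/A + 1/B + 1/C + 1/D. The Table 2.2 labeling is again assumed to be A and B for events and non-events in the treatment group and C and D for the control group. Zero cells are not handled, and the function name is hypothetical.

```python
import math
from statistics import NormalDist

Z_95 = NormalDist().inv_cdf(0.975)


def odds_ratio(a, b, c, d, z=Z_95):
    """OR (treatment vs control) with a Woolf 95% CI on the log scale.

    Assumed labeling: a/b = events/non-events (treatment),
    c/d = events/non-events (control). Zero cells are not handled.
    """
    or_ = (a * d) / (b * c)
    ln_or = math.log(or_)
    v_ln_or = 1 / a + 1 / b + 1 / c + 1 / d
    se_ln_or = math.sqrt(v_ln_or)
    ll_ln_or = ln_or - z * se_ln_or
    ul_ln_or = ln_or + z * se_ln_or
    # Limits are found on the log scale and exponentiated back to the OR scale
    return {
        "OR": or_,
        "lnOR": ln_or,
        "V_lnOR": v_ln_or,
        "SE_lnOR": se_ln_or,
        "LL_lnOR": ll_ln_or,
        "UL_lnOR": ul_ln_or,
        "LL_OR": math.exp(ll_ln_or),
        "UL_OR": math.exp(ul_ln_or),
    }


# Hypothetical trial: 15/100 events on treatment, 30/100 on control
result = odds_ratio(a=15, b=85, c=30, d=70)
for name, value in result.items():
    print(f"{name} = {value:.4f}")
```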

A variation on the calculation of the OR is the Peto OR, which is commonly referred to as the assumption-free method of calculating the OR. The two key differences between the standard OR and the Peto OR are that the latter takes into consideration the expected number of events in the treatment group and incorporates a hypergeometric variance. Because of these differences, the Peto OR is preferred for binary studies with rare events, especially when event rates are less than 1%. However, the Peto OR is biased when treatment effects are large, because it is centered on the null hypothesis, and when treatment and control groups are imbalanced. 40

Equation Set 2.4. Peto odds ratios

$$\mathrm{OR}_{\text{Peto}} = \exp\left[\frac{A - E(A)}{v}\right]$$

where E(A) is the expected number of events in the treatment group, calculated as

$$E(A) = \frac{n_1 (A + C)}{N}$$

and v is the hypergeometric variance, calculated as

$$v = \frac{n_1 n_2 (A + C)(B + D)}{N^2 (N - 1)}$$
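A minimal sketch of Equation Set 2.4 for a single study follows. It assumes the Table 2.2 labeling is A and B for treatment events and non-events and C and D for control events and non-events, with n1 = A + B, n2 = C + D and N = n1 + n2. The 95% CI uses SE(ln OR_Peto) = 1/√v, the usual companion to this estimator; that CI formula is an addition, not part of the equations above. The function name and data are hypothetical.

```python
import math
from statistics import NormalDist

Z_95 = NormalDist().inv_cdf(0.975)


def peto_odds_ratio(a, b, c, d, z=Z_95):
    """Peto OR for one 2x2 table, with a 95% CI based on SE = 1/sqrt(v)."""
    n1, n2 = a + b, c + d
    n = n1 + n2
    expected_a = n1 * (a + c) / n  # E(A): expected treatment-group events
    # Hypergeometric variance of A given the table margins
    v = n1 * n2 * (a + c) * (b + d) / (n**2 * (n - 1))
    ln_or = (a - expected_a) / v  # (observed - expected) / variance
    se = 1 / math.sqrt(v)
    return {
        "E_A": expected_a,
        "v": v,
        "OR_peto": math.exp(ln_or),
        "LL": math.exp(ln_or - z * se),
        "UL": math.exp(ln_or + z * se),
    }


# Hypothetical rare-event trial: 2/100 events on treatment, 6/100 on control
result = peto_odds_ratio(a=2, b=98, c=6, d=94)
for name, value in result.items():
    print(f"{name} = {value:.4f}")
```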

No single binary effect size is ideal, because each has benefits and disadvantages. Criteria used to compare and contrast these measures include consistency over a set of studies, statistical properties, and interpretability. Key benefits and disadvantages of each are presented in Table 2.3. In the table, the term “baseline risk” refers to the proportion of subjects in the control group who experienced the event; the term “control rate” is sometimes used for this measure as well.

Benefits and disadvantages of binary data effect sizes.

Time-to-Event and Count Outcomes

For time-to-event data, the effect size measure is the hazard ratio (HR), which is commonly estimated from a Cox proportional hazards model. In the best-case scenario, the HR and associated 95% confidence intervals are available from all studies, the time horizon is similar across studies, and there is evidence that the proportional hazards assumption was met in each study to be included in the meta-analysis. When these conditions are not met, an HR and associated dispersion can still be extracted and meta-analyzed. However, this approach raises concerns about reproducibility due to observer variation. 44
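When every study reports an HR with its 95% CI, one common way to combine them is fixed-effect (common-effect) inverse-variance pooling of the log HRs. The sketch below assumes the reported intervals are symmetric on the log scale. It is illustrative only: it is not necessarily the pooling model this guide recommends, and a random-effects model may be more appropriate when heterogeneity is expected. The function name and example values are hypothetical.

```python
import math
from statistics import NormalDist

Z_95 = NormalDist().inv_cdf(0.975)


def pool_hazard_ratios(reports, z=Z_95):
    """Fixed-effect inverse-variance pooling of (HR, lower, upper) tuples."""
    sum_w = 0.0
    sum_w_ln = 0.0
    for hr, lower, upper in reports:
        ln_hr = math.log(hr)
        # SE on the log scale, recovered from the 95% CI width
        se = (math.log(upper) - math.log(lower)) / (2 * z)
        w = 1 / se**2  # inverse-variance weight
        sum_w += w
        sum_w_ln += w * ln_hr
    pooled_ln = sum_w_ln / sum_w
    pooled_se = math.sqrt(1 / sum_w)
    return (
        math.exp(pooled_ln),
        math.exp(pooled_ln - z * pooled_se),
        math.exp(pooled_ln + z * pooled_se),
    )


# Hypothetical HRs (95% CI) extracted from three trials
studies = [(0.80, 0.64, 1.00), (0.70, 0.50, 0.98), (0.95, 0.75, 1.20)]
hr, lo, hi = pool_hazard_ratios(studies)
print(f"Pooled HR = {hr:.3f} (95% CI {lo:.3f} to {hi:.3f})")
```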

The incidence rate ratio (IRR) is used for count data and can be estimated from a Poisson or negative binomial regression model. The IRR is a relative metric based on counts of events (e.g., number of hospitalizations or days of length of stay) over time (e.g., per person-year), compared between trial arms. It is important to consider how IRR estimates were derived in individual studies, particularly with respect to adjustments for zero-inflation and/or over-dispersion, as these modeling decisions can be sources of between-study heterogeneity. Moreover, studies that include count data may have zero counts in both groups, which may require less common and more nuanced approaches to meta-analysis, such as Poisson regression with random intervention effects. 45
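When a study reports only event counts and person-time per arm, a crude IRR can be computed directly. This minimal sketch assumes Poisson-distributed counts with no over-dispersion and no zero counts. It uses the large-sample variance of ln IRR, 1/events_1 + 1/events_2. A crude IRR will generally differ from the regression-derived (possibly adjusted) estimates discussed above. The function name and example data are hypothetical.

```python
import math
from statistics import NormalDist

Z_95 = NormalDist().inv_cdf(0.975)


def incidence_rate_ratio(events_1, time_1, events_2, time_2, z=Z_95):
    """Crude IRR (arm 1 vs arm 2) with a large-sample 95% CI.

    Assumes Poisson counts, no over-dispersion, and no zero counts.
    """
    irr = (events_1 / time_1) / (events_2 / time_2)
    ln_irr = math.log(irr)
    se_ln_irr = math.sqrt(1 / events_1 + 1 / events_2)
    return irr, math.exp(ln_irr - z * se_ln_irr), math.exp(ln_irr + z * se_ln_irr)


# Hypothetical trial: 30 hospitalizations over 150 person-years vs 45 over 140
irr, lo, hi = incidence_rate_ratio(30, 150.0, 45, 140.0)
print(f"IRR = {irr:.3f} (95% CI {lo:.3f} to {hi:.3f})")
```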

2.3. Continuous Outcomes

Assembling data needed for effect size computation.

Meta-analysis of studies presenting continuous data requires both the estimated difference between the two groups being compared and the estimated standard error of that difference. Estimating the between-group difference is easiest when the study reports the mean difference. While study authors may report a standardized mean difference or a ratio of means, studies more often report the mean for each group, so a mean difference or ratio of means must often be computed.

If estimates of the standard errors are not provided, studies commonly report confidence intervals, standard deviations, p-values, z-statistics, and/or t-statistics, from which the standard error of the mean difference can be computed. In the absence of any of these statistics, other methods are available to estimate the standard error. 45
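The conversions mentioned above can be sketched as follows for a reported mean difference (MD). This is a minimal sketch under a large-sample normal approximation. For small samples, a t quantile with the appropriate degrees of freedom should replace the normal quantile in the CI and p-value conversions (the Python standard library has no t quantile function). A p-value reported only as a threshold (e.g., p < 0.05) cannot be used this way. Function names and values are hypothetical.

```python
from statistics import NormalDist

_STD_NORMAL = NormalDist()
Z_95 = _STD_NORMAL.inv_cdf(0.975)


def se_from_ci(lower, upper, z=Z_95):
    """SE of an MD from its 95% CI (normal approximation)."""
    return (upper - lower) / (2 * z)


def se_from_test_statistic(mean_diff, statistic):
    """SE from a reported z- or t-statistic for the MD: SE = |MD| / |stat|."""
    return abs(mean_diff) / abs(statistic)


def se_from_p_value(mean_diff, p_two_sided):
    """SE from an exact two-sided p-value, converting p to z (normal approximation)."""
    z = _STD_NORMAL.inv_cdf(1 - p_two_sided / 2)
    return abs(mean_diff) / z


# Hypothetical study: MD = -5 mmHg reported three different ways
print(se_from_ci(-9.0, -1.0))
print(se_from_test_statistic(-5.0, -2.5))
print(se_from_p_value(-5.0, 0.0124))
```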

(Weighted) Mean Difference

The mean difference (formerly known as the weighted mean difference) is the most common way of summarizing and pooling a continuous outcome in a meta-analysis. Pooled mean differences can be computed when every study in the analysis measures the outcome on the same scale or on scales that can be easily converted. For example, total weight can be pooled using the mean difference even if different studies reported weights in kilograms and pounds. However, it is not possible to pool quality of life measured with both the Self Perceived Quality of Life scale (SPQL) and the 36-item Short Form Survey Instrument (SF-36), since these are not readily convertible to one format.

Computation of the mean difference is straightforward and explained elsewhere. 5 Most software programs will require the mean, standard deviation, and sample size for each intervention group in each study in the meta-analysis, although, as mentioned above, other pieces of data may also be used.
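For the weight example above, a study reporting pounds can be rescaled to kilograms before the mean difference is computed. This is a minimal sketch assuming independent groups and the common unpooled standard error, sqrt(SD1²/n1 + SD2²/n2); some software instead uses a pooled SD. The helper names and study values are hypothetical.

```python
import math

KG_PER_LB = 0.45359237  # exact definition of the international avoirdupois pound


def mean_difference(mean_1, sd_1, n_1, mean_2, sd_2, n_2):
    """MD (group 1 minus group 2) and its SE from per-group summaries."""
    md = mean_1 - mean_2
    se = math.sqrt(sd_1**2 / n_1 + sd_2**2 / n_2)
    return md, se


def lb_to_kg(mean_lb, sd_lb):
    """Rescale a mean and SD reported in pounds to kilograms."""
    return mean_lb * KG_PER_LB, sd_lb * KG_PER_LB


# Hypothetical study reporting weight in pounds, 50 participants per arm
m1, s1 = lb_to_kg(180.0, 22.0)
m2, s2 = lb_to_kg(185.0, 24.0)
md_kg, se_kg = mean_difference(m1, s1, 50, m2, s2, 50)
print(f"MD = {md_kg:.2f} kg (SE {se_kg:.2f} kg)")
```

Because a linear rescaling multiplies both the mean and the SD by the same constant, converting before or after computing the MD gives the same result.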

Some studies report values as change from baseline, while others present both baseline and final values. In these cases, differences in final values from some studies can be pooled with differences in change-from-baseline values from other studies, since in a randomized controlled trial both estimate the same treatment effect. If baseline values are unbalanced, it may be better to perform an analysis of covariance (ANCOVA) (see below). 5

Standardized Mean Difference

Sometimes different studies will assess the same outcome using different scales or metrics that cannot be readily converted to a common measure. In such instances, the most common response is to compute a standardized mean difference (SMD) for each study and then pool these across all studies in the meta-analysis. Dividing the mean difference by a pooled estimate of the standard deviation theoretically puts all scales in the same unit (standard deviations), so that all the studies can then be combined statistically. The SMD could be used even when studies use the same metric, but the mean difference is generally preferred, because results are easier to interpret when the final pooled estimate is given in the same units as the original studies.

Several methods can compute SMDs. The most frequently used are Cohen’s d and Hedges’ g .
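As a rough orientation to the two estimators named above, the sketch below shows one common formulation. Cohen’s d divides the mean difference by the pooled within-group SD. Hedges’ g multiplies d by the approximate small-sample correction J = 1 − 3/(4(n1 + n2) − 9). Conventions differ slightly across sources (for example, exact versus approximate correction factors), so this is an assumption-laden illustration rather than a definitive implementation. Names and values are hypothetical.

```python
import math


def pooled_sd(sd_1, n_1, sd_2, n_2):
    """Pooled within-group standard deviation."""
    return math.sqrt(((n_1 - 1) * sd_1**2 + (n_2 - 1) * sd_2**2) / (n_1 + n_2 - 2))


def cohens_d(mean_1, sd_1, n_1, mean_2, sd_2, n_2):
    """Mean difference divided by the pooled SD."""
    return (mean_1 - mean_2) / pooled_sd(sd_1, n_1, sd_2, n_2)


def hedges_g(mean_1, sd_1, n_1, mean_2, sd_2, n_2):
    """Cohen's d with an approximate small-sample bias correction."""
    d = cohens_d(mean_1, sd_1, n_1, mean_2, sd_2, n_2)
    j = 1 - 3 / (4 * (n_1 + n_2) - 9)  # approximate correction factor J
    return j * d


# Hypothetical study: 20 per arm, means 55 vs 50 on some symptom scale, SD 10 in each
print(cohens_d(55, 10, 20, 50, 10, 20))
print(hedges_g(55, 10, 20, 50, 10, 20))
```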

Cohen’s d