speech recognition

- Ben Lutkevich, Site Editor

- Karolina Kiwak

What is speech recognition?

Speech recognition, or speech-to-text, is the ability of a machine or program to identify words spoken aloud and convert them into readable text. Rudimentary speech recognition software has a limited vocabulary and may only identify words and phrases when spoken clearly. More sophisticated software can handle natural speech, different accents and various languages.

Speech recognition uses a broad array of research in computer science, linguistics and computer engineering. Many modern devices and text-focused programs have speech recognition functions in them to allow for easier or hands-free use of a device.

Speech recognition and voice recognition are two different technologies and should not be confused:

- Speech recognition is used to identify words in spoken language.

- Voice recognition is a biometric technology for identifying an individual's voice.

How does speech recognition work?

Speech recognition systems use computer algorithms to process and interpret spoken words and convert them into text. A software program turns the sound a microphone records into written language that computers and humans can understand, following these four steps:

- analyze the audio;

- break it into parts;

- digitize it into a computer-readable format; and

- use an algorithm to match it to the most suitable text representation.

Speech recognition software must adapt to the highly variable and context-specific nature of human speech. The software algorithms that process and organize audio into text are trained on different speech patterns, speaking styles, languages, dialects, accents and phrasings. The software also separates spoken audio from background noise that often accompanies the signal.

To meet these requirements, speech recognition systems use two types of models:

- Acoustic models. These represent the relationship between linguistic units of speech and audio signals.

- Language models. Here, sounds are matched with word sequences to distinguish between words that sound similar.
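To illustrate how the two model types can work together, here is a minimal sketch in Python. The candidate phrases and all probabilities are made-up values for illustration, and `best_transcript` and `lm_weight` are hypothetical names. The acoustic scores barely separate two similar-sounding phrases, so the language model score decides the result.

```python
import math

# Hypothetical acoustic scores, P(audio | words). The two candidates sound
# almost identical, so the acoustic model barely separates them.
acoustic_prob = {
    "recognize speech": 0.48,
    "wreck a nice beach": 0.52,
}

# Hypothetical language model scores, P(words). The first phrase is assumed
# to be far more common in the training text.
language_prob = {
    "recognize speech": 0.01,
    "wreck a nice beach": 0.0001,
}


def best_transcript(candidates, lm_weight=1.0):
    """Return the candidate with the highest combined log score."""
    def score(text):
        return math.log(acoustic_prob[text]) + lm_weight * math.log(language_prob[text])
    return max(candidates, key=score)


print(best_transcript(list(acoustic_prob)))
```

With a language model weight of zero, the sketch would fall back to the acoustic score alone and pick the other phrase, which is why real systems tune how much each model contributes.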

What applications is speech recognition used for?

Speech recognition systems have quite a few applications. Here is a sampling of them.

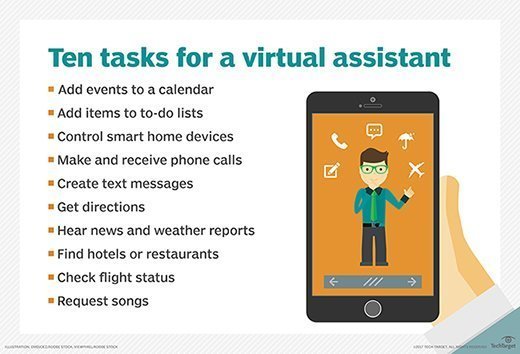

Mobile devices. Smartphones use voice commands for call routing, speech-to-text processing, voice dialing and voice search. Users can respond to a text without looking at their devices. On Apple iPhones, speech recognition powers the keyboard and Siri, the virtual assistant. Functionality is available in secondary languages, too. Speech recognition can also be found in word processing applications like Microsoft Word, where users can dictate words to be turned into text.

Education. Speech recognition software is used in language instruction. The software hears the user's speech and offers help with pronunciation.

Customer service. Automated voice assistants listen to customer queries and provide helpful resources.

Healthcare applications. Doctors can use speech recognition software to transcribe notes in real time into healthcare records.

Disability assistance. Speech recognition software can translate spoken words into text using closed captions to enable a person with hearing loss to understand what others are saying. Speech recognition can also enable those with limited use of their hands to work with computers, using voice commands instead of typing.

Court reporting. Software can be used to transcribe courtroom proceedings, precluding the need for human transcribers.

Emotion recognition. This technology can analyze certain vocal characteristics to determine what emotion the speaker is feeling. Paired with sentiment analysis, this can reveal how someone feels about a product or service.

Hands-free communication. Drivers use voice control for hands-free communication, controlling phones, radios and global positioning systems, for instance.

What are the features of speech recognition systems?

Good speech recognition programs let users customize them to their needs. The features that enable this include:

- Language weighting. This feature tells the algorithm to give special attention to certain words, such as those spoken frequently or that are unique to the conversation or subject. For example, the software can be trained to listen for specific product references.

- Acoustic training. The software tunes out ambient noise that pollutes spoken audio. Software programs with acoustic training can distinguish speaking style, pace and volume amid the din of many people speaking in an office.

- Speaker labeling. This capability enables a program to label individual participants and identify their specific contributions to a conversation.

- Profanity filtering. Here, the software filters out undesirable words and language.
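Language weighting can be pictured as a rescoring step. The sketch below assumes the recognizer already produced a few candidate transcripts with scores. The function name `rescore`, the `boost` value and the product name "Zentro" are all hypothetical. It is an illustration of the idea, not how any particular product implements it.

```python
def rescore(hypotheses, boosted_terms, boost=2.0):
    """Pick the best hypothesis after favouring listed terms.

    hypotheses: list of (text, score) pairs, where a higher score is better.
    boosted_terms: lowercase words to favour, such as product names.
    boost: score added per boosted word found (an assumed value).
    """
    def boosted_score(item):
        text, score = item
        hits = sum(1 for word in text.lower().split() if word in boosted_terms)
        return score + boost * hits
    return max(hypotheses, key=boosted_score)[0]


# Two candidate transcripts; the recognizer slightly prefers the wrong one.
hypotheses = [
    ("reset my centro router", -4.0),
    ("reset my zentro router", -5.0),
]

print(rescore(hypotheses, boosted_terms=set()))
print(rescore(hypotheses, boosted_terms={"zentro"}))
```

Without any boosted terms the higher-scoring "centro" transcript wins; once the product name is weighted, the "zentro" transcript is selected instead.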

What are the different speech recognition algorithms?

The power behind speech recognition features comes from a set of algorithms and technologies. They include the following:

- Hidden Markov model. HMMs are used in autonomous systems where a state is partially observable or when all of the information necessary to make a decision is not immediately available to the sensor (in speech recognition's case, a microphone). An example of this is in acoustic modeling, where a program must match linguistic units to audio signals using statistical probability.

- Natural language processing. NLP eases and accelerates the speech recognition process.

- N-grams. This simple approach to language models creates a probability distribution for a sequence. An example would be an algorithm that looks at the last few words spoken, approximates the history of the sample of speech and uses that to determine the probability of the next word or phrase that will be spoken.

- Artificial intelligence. AI and machine learning methods like deep learning and neural networks are common in advanced speech recognition software. These systems use grammar, structure, syntax and composition of audio and voice signals to process speech. Machine learning systems gain knowledge with each use, making them well suited for nuances like accents.
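The N-gram idea from the list above can be sketched with a bigram model, which looks only at the single previous word. This is a minimal illustration on a three-sentence toy corpus; the function names and the corpus are assumptions, and production language models use far more data plus smoothing for unseen word pairs.

```python
from collections import Counter, defaultdict


def train_bigrams(sentences):
    """Count how often each word follows each preceding word."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        words = ["<s>"] + sentence.lower().split()  # <s> marks sentence start
        for prev, word in zip(words, words[1:]):
            counts[prev][word] += 1
    return counts


def next_word_prob(counts, prev, word):
    """Maximum-likelihood estimate of P(word | prev); 0.0 if prev was never followed."""
    total = sum(counts[prev].values())
    return counts[prev][word] / total if total else 0.0


corpus = [
    "turn on the radio",
    "turn on the lights",
    "turn off the radio",
]
model = train_bigrams(corpus)

# "turn" is followed by "on" in two of its three occurrences.
print(next_word_prob(model, "turn", "on"))
print(next_word_prob(model, "the", "radio"))
```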

What are the advantages of speech recognition?

There are several advantages to using speech recognition software, including the following:

- Machine-to-human communication. The technology enables electronic devices to communicate with humans in natural language or conversational speech.

- Readily accessible. This software is frequently installed in computers and mobile devices, making it accessible.

- Easy to use. Well-designed software is straightforward to operate and often runs in the background.

- Continuous, automatic improvement. Speech recognition systems that incorporate AI become more effective and easier to use over time. As systems complete speech recognition tasks, they generate more data about human speech and get better at what they do.

What are the disadvantages of speech recognition?

While convenient, speech recognition technology still has a few issues to work through. Limitations include:

- Inconsistent performance. The systems may be unable to capture words accurately because of variations in pronunciation, lack of support for some languages and inability to sort through background noise. Ambient noise can be especially challenging. Acoustic training can help filter it out, but these programs aren't perfect. Sometimes it's impossible to isolate the human voice.

- Speed. Some speech recognition programs take time to deploy and master. The speech processing may feel relatively slow.

- Source file issues. Speech recognition success depends on the recording equipment used, not just the software.

The takeaway

Speech recognition is an evolving technology. It is one of the many ways people can communicate with computers with little or no typing. A variety of communications-based business applications capitalize on the convenience and speed of spoken communication that this technology enables.

Speech recognition programs have advanced greatly over 60 years of development. They are still improving, fueled in particular by AI.

Continue Reading About speech recognition

- How can speech recognition technology support remote work?

- Automatic speech recognition may be better than you think

- Speech recognition use cases enable touchless collaboration

- Automated speech recognition gives CX vendor an edge

- Speech API from Mozilla's Web developer platform

Related Terms

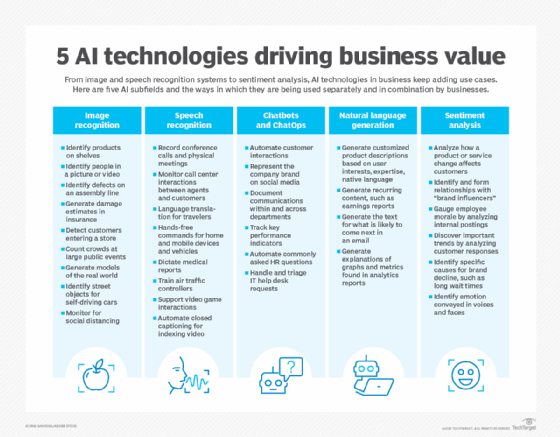

Dig deeper on customer service and contact center.

7 ways AI is affecting the travel industry

natural language processing (NLP)

computational linguistics (CL)

Moving years' worth of SharePoint data out of on-premises storage to the cloud can be daunting, so choosing the correct migration...

Implementing an ECM system is not all about technology; it's also about the people. A proper rollout requires feedback from key ...

Incorporating consulting services and flexible accommodations for different LLMs, developer-focused Contentstack offers its own ...

Organizations have ramped up their use of communications platform as a service and APIs to expand communication channels between ...

Google will roll out new GenAI in Gmail and Docs first and then other apps throughout the year. In 2025, Google plans to ...

For successful hybrid meeting collaboration, businesses need to empower remote and on-site employees with a full suite of ...

The data management and analytics vendor's embeddable database now includes streaming capabilities via support for Kafka and ...

The vendor's latest update adds new customer insight capabilities, including an AI assistant, and industry-specific tools all ...

The data quality specialist's capabilities will enable customers to monitor unstructured text to ensure the health of data used ...

Arista, Cisco and HPE are racing to seize a share of the promising GenAI infrastructure market. Cisco and HPE have computing, ...

Explore the education, experience and skills needed to excel in the demanding yet rewarding field of NLP engineering, including ...

For business leaders, machine learning's predictive capabilities can forecast product demand, reduce equipment downtime and ...

Epicor continues its buying spree with PIM vendor Kyklo, enabling manufacturers and distributors to gain visibility into what ...

Supply chains have a range of connection points -- and vulnerabilities. Learn which vulnerabilities hackers look for first and ...

As supplier relationships become increasingly complex and major disruptions continue, it pays to understand the top supply chain ...

Speech Recognition: Everything You Need to Know in 2024

Speech recognition, also known as automatic speech recognition (ASR), enables seamless communication between humans and machines. This technology empowers organizations to transform human speech into written text. Speech recognition technology can revolutionize many business applications, including customer service, healthcare, finance and sales.

In this comprehensive guide, we will explain speech recognition, exploring how it works, the algorithms involved, and its use cases across various industries.

What is speech recognition?

Speech recognition, also known as automatic speech recognition (ASR), speech-to-text (STT), and computer speech recognition, is a technology that enables a computer to recognize and convert spoken language into text.

Speech recognition technology uses AI and machine learning models to accurately identify and transcribe different accents, dialects, and speech patterns.

What are the features of speech recognition systems?

Speech recognition systems have several components that work together to understand and process human speech. Key features of effective speech recognition are:

- Audio preprocessing: After you have obtained the raw audio signal from an input device, you need to preprocess it to improve the quality of the speech input. The main goal of audio preprocessing is to capture relevant speech data by removing any unwanted artifacts and reducing noise.

- Feature extraction: This stage converts the preprocessed audio signal into a more informative representation. This makes raw audio data more manageable for machine learning models in speech recognition systems.

- Language model weighting: Language weighting gives more weight to certain words and phrases, such as product references, in audio and voice signals. This makes those keywords more likely to be recognized in subsequent speech by speech recognition systems.

- Acoustic modeling: It enables speech recognizers to capture and distinguish phonetic units within a speech signal. Acoustic models are trained on large datasets containing speech samples from a diverse set of speakers with different accents, speaking styles, and backgrounds.

- Speaker labeling: It enables speech recognition applications to determine the identities of multiple speakers in an audio recording. It assigns unique labels to each speaker in an audio recording, allowing the identification of which speaker was speaking at any given time.

- Profanity filtering: The process of removing offensive, inappropriate, or explicit words or phrases from audio data.
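The preprocessing and feature extraction stages listed above can be sketched with two very simple features. The example below assumes a synthetic signal (silence followed by a tone) instead of a real recording, and it computes per-frame energy and zero-crossing rate. Real systems typically use richer features such as mel-frequency representations; the function names, frame sizes and the pre-emphasis coefficient here are illustrative choices.

```python
import math


def pre_emphasis(samples, coeff=0.97):
    """Boost high frequencies: y[n] = x[n] - coeff * x[n-1]."""
    return [samples[0]] + [
        samples[i] - coeff * samples[i - 1] for i in range(1, len(samples))
    ]


def frames(samples, size, step):
    """Split the signal into overlapping fixed-size frames."""
    return [samples[i:i + size] for i in range(0, len(samples) - size + 1, step)]


def frame_features(frame):
    """Return (mean energy, zero-crossing rate) for one frame."""
    energy = sum(x * x for x in frame) / len(frame)
    crossings = sum(1 for a, b in zip(frame, frame[1:]) if (a >= 0) != (b >= 0))
    return energy, crossings / (len(frame) - 1)


# Synthetic input: 0.1 s of silence, then 0.1 s of a 440 Hz tone at 8 kHz.
rate = 8000
signal = [0.0] * 800 + [math.sin(2 * math.pi * 440 * n / rate) for n in range(800)]

features = [
    frame_features(f) for f in frames(pre_emphasis(signal), size=200, step=100)
]
print(len(features), features[0], features[-1])
```

The silent frames have zero energy while the tone frames do not, which is the kind of compact, more informative representation a downstream model can work with more easily than raw samples.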

What are the different speech recognition algorithms?

Speech recognition uses various algorithms and computation techniques to convert spoken language into written language. The following are some of the most commonly used speech recognition methods:

- Hidden Markov Models (HMMs): A hidden Markov model is a statistical Markov model commonly used in traditional speech recognition systems. HMMs capture the relationship between acoustic features and model the temporal dynamics of speech signals.

- Estimate the probability of word sequences in the recognized text

- Convert colloquial expressions and abbreviations in a spoken language into a standard written form

- Map phonetic units obtained from acoustic models to their corresponding words in the target language.

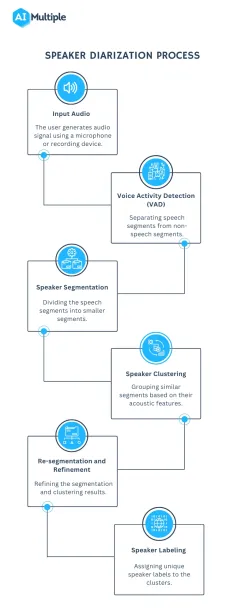

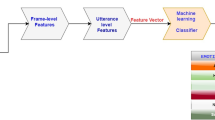

- Speaker Diarization (SD): Speaker diarization, or speaker labeling, is the process of identifying and attributing speech segments to their respective speakers (Figure 1). It allows for speaker-specific voice recognition and the identification of individuals in a conversation.

Figure 1: A flowchart illustrating the speaker diarization process

- Dynamic Time Warping (DTW): Speech recognition algorithms use the dynamic time warping (DTW) algorithm to find an optimal alignment between two sequences, even when they differ in speed or length (Figure 2).

Figure 2: A speech recognizer using dynamic time warping to determine the optimal distance between elements

- Deep neural networks: Neural networks process and transform input data through stacked layers of non-linear units, learning to map acoustic features to phonetic units, characters or words.

- Connectionist Temporal Classification (CTC): It is a training objective introduced by Alex Graves and colleagues in 2006. CTC is especially useful for sequence labeling tasks and end-to-end speech recognition systems. It allows the neural network to learn the alignment between input frames and output labels without requiring frame-level annotations.
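Of the methods listed above, dynamic time warping is compact enough to show in full. The following is a minimal sketch of the classic dynamic-programming formulation on one-dimensional sequences; the sequences are made-up numbers standing in for feature values, and `dtw_distance` is an illustrative name. A real recognizer would compare vectors of acoustic features per frame.

```python
def dtw_distance(a, b):
    """Dynamic time warping cost between two numeric sequences."""
    inf = float("inf")
    n, m = len(a), len(b)
    # cost[i][j] = cheapest alignment of the first i items of a
    # with the first j items of b.
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            d = abs(a[i - 1] - b[j - 1])
            cost[i][j] = d + min(
                cost[i - 1][j],      # a advances, b repeats
                cost[i][j - 1],      # b advances, a repeats
                cost[i - 1][j - 1],  # both advance
            )
    return cost[n][m]


template = [1, 2, 3, 2, 1]
slow = [1, 1, 2, 2, 3, 3, 2, 2, 1, 1]   # same shape, spoken at half speed
other = [3, 3, 1, 1, 3, 3]              # a different shape

print(dtw_distance(template, slow))
print(dtw_distance(template, other))
```

The slowed-down copy aligns with zero cost because warping absorbs the difference in speed, while the differently shaped sequence does not.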

Speech recognition vs voice recognition

Speech recognition is commonly confused with voice recognition, yet they refer to distinct concepts. Speech recognition converts spoken words into written text, focusing on identifying the words and sentences spoken by a user, regardless of the speaker’s identity.

On the other hand, voice recognition is concerned with recognizing or verifying a speaker’s voice, aiming to determine the identity of an unknown speaker rather than focusing on understanding the content of the speech.

What are the challenges of speech recognition with solutions?

While speech recognition technology offers many benefits, it still faces a number of challenges that need to be addressed. Some of the main limitations of speech recognition include:

Acoustic Challenges:

- Assume a speech recognition model has been trained primarily on American English accents. If a speaker with a strong Scottish accent uses the system, they may encounter difficulties due to pronunciation differences. For example, the word “water” is pronounced differently in the two accents. If the system is not familiar with the Scottish pronunciation, it may struggle to recognize the word “water.”

Solution: Addressing these challenges is crucial to enhancing speech recognition applications’ accuracy. To overcome pronunciation variations, it is essential to expand the training data to include samples from speakers with diverse accents. This approach helps the system recognize and understand a broader range of speech patterns.

- For instance, you can use data augmentation techniques to reduce the impact of noise on audio data. Data augmentation helps train speech recognition models with noisy data to improve model accuracy in real-world environments.

Figure 3: Examples of a target sentence (“The clown had a funny face”) in the background noise of babble, car and rain.
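One common form of the noise augmentation mentioned above is mixing background noise into clean speech at a chosen signal-to-noise ratio (SNR). The sketch below uses a synthetic tone as a stand-in for speech and random Gaussian noise as a stand-in for recorded background noise; `mix_at_snr` and the 10 dB target are illustrative assumptions.

```python
import math
import random


def mix_at_snr(speech, noise, snr_db):
    """Add noise to speech, scaled so the mix has the requested SNR in dB."""
    speech_power = sum(x * x for x in speech) / len(speech)
    noise_power = sum(x * x for x in noise) / len(noise)
    # SNR(dB) = 10 * log10(speech_power / noise_power), solved for the
    # noise power that gives the target SNR.
    target_noise_power = speech_power / (10 ** (snr_db / 10))
    scale = math.sqrt(target_noise_power / noise_power)
    return [s + scale * n for s, n in zip(speech, noise)]


random.seed(0)  # fixed seed so the example is repeatable
speech = [math.sin(2 * math.pi * 200 * n / 8000) for n in range(8000)]
noise = [random.gauss(0, 1) for _ in range(8000)]

noisy = mix_at_snr(speech, noise, snr_db=10)
print(len(noisy))
```

Generating several noisy copies of each training utterance at different SNRs is one way to expose a model to conditions closer to real-world use.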

Linguistic Challenges:

- Out-of-vocabulary words: Because a speech recognizer's model has not been trained on out-of-vocabulary (OOV) words, it may misrecognize them as other words or fail to transcribe them at all.

Figure 4: An example of detecting OOV word

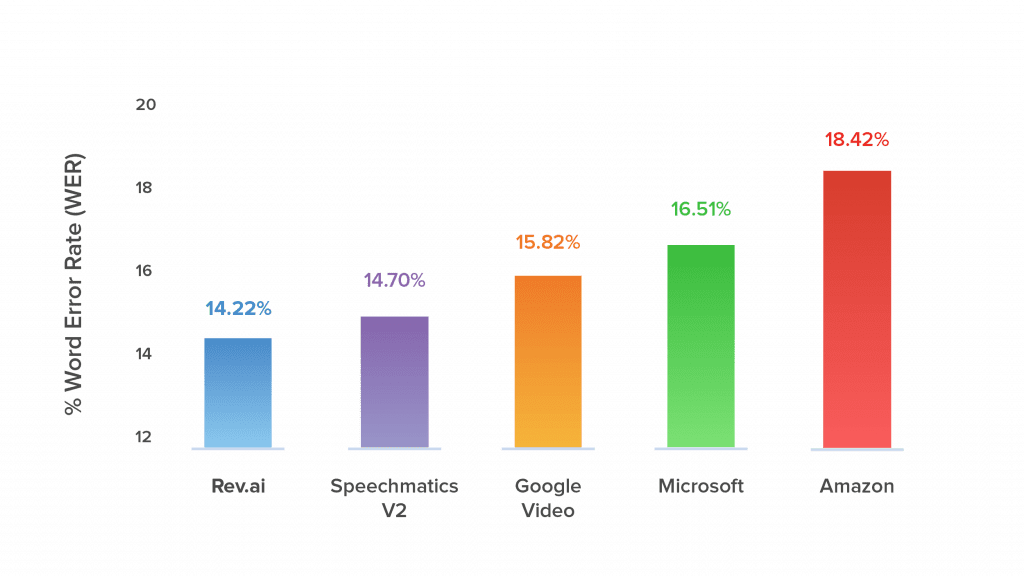

Solution: Word Error Rate (WER) is a common metric that is used to measure the accuracy of a speech recognition or machine translation system. The word error rate can be computed as:

Figure 5: Demonstrating how to calculate word error rate (WER)
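The standard definition of word error rate is WER = (S + D + I) / N, where S, D and I are the numbers of substituted, deleted and inserted words in the system output, and N is the number of words in the reference transcript. A minimal sketch of that calculation follows, using a word-level edit distance; the function name and the example sentences are illustrative.

```python
def word_error_rate(reference, hypothesis):
    """WER = (substitutions + deletions + insertions) / reference word count."""
    ref, hyp = reference.split(), hypothesis.split()
    # dist[i][j] = minimum edits to turn the first i reference words
    # into the first j hypothesis words.
    dist = [[0] * (len(hyp) + 1) for _ in range(len(ref) + 1)]
    for i in range(len(ref) + 1):
        dist[i][0] = i  # delete all i reference words
    for j in range(len(hyp) + 1):
        dist[0][j] = j  # insert all j hypothesis words
    for i in range(1, len(ref) + 1):
        for j in range(1, len(hyp) + 1):
            substitution = 0 if ref[i - 1] == hyp[j - 1] else 1
            dist[i][j] = min(
                dist[i - 1][j] + 1,                  # deletion
                dist[i][j - 1] + 1,                  # insertion
                dist[i - 1][j - 1] + substitution,   # match or substitution
            )
    return dist[-1][-1] / len(ref)


reference = "the clown had a funny face"
hypothesis = "the clown had funny faces"
print(word_error_rate(reference, hypothesis))
```

Here the hypothesis drops "a" (one deletion) and replaces "face" with "faces" (one substitution), so the result is 2 errors over 6 reference words. Note that WER can exceed 1.0 when the hypothesis contains many insertions.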

- Homophones: Homophones are words that are pronounced identically but have different meanings, such as “to,” “too,” and “two.”

Solution: Semantic analysis allows speech recognition programs to select the appropriate homophone based on its intended meaning in a given context. Addressing homophones improves the ability of the speech recognition process to understand and transcribe spoken words accurately.

Technical/System Challenges:

- Data privacy and security: Speech recognition systems involve processing and storing sensitive and personal information, such as financial information. An unauthorized party could use the captured information, leading to privacy breaches.

Solution: You can encrypt sensitive and personal audio information transmitted between the user’s device and the speech recognition software. Another technique for addressing data privacy and security in speech recognition systems is data masking. Data masking algorithms mask and replace sensitive speech data with structurally identical but acoustically different data.

Figure 6: An example of how data masking works

- Limited training data: Limited training data directly impacts the performance of speech recognition software. With insufficient training data, the speech recognition model may struggle to generalize different accents or recognize less common words.

Solution: To improve the quality and quantity of training data, you can expand the existing dataset using data augmentation and synthetic data generation technologies.

13 speech recognition use cases and applications

In this section, we will explain how speech recognition revolutionizes the communication landscape across industries and changes the way businesses interact with machines.

Customer Service and Support

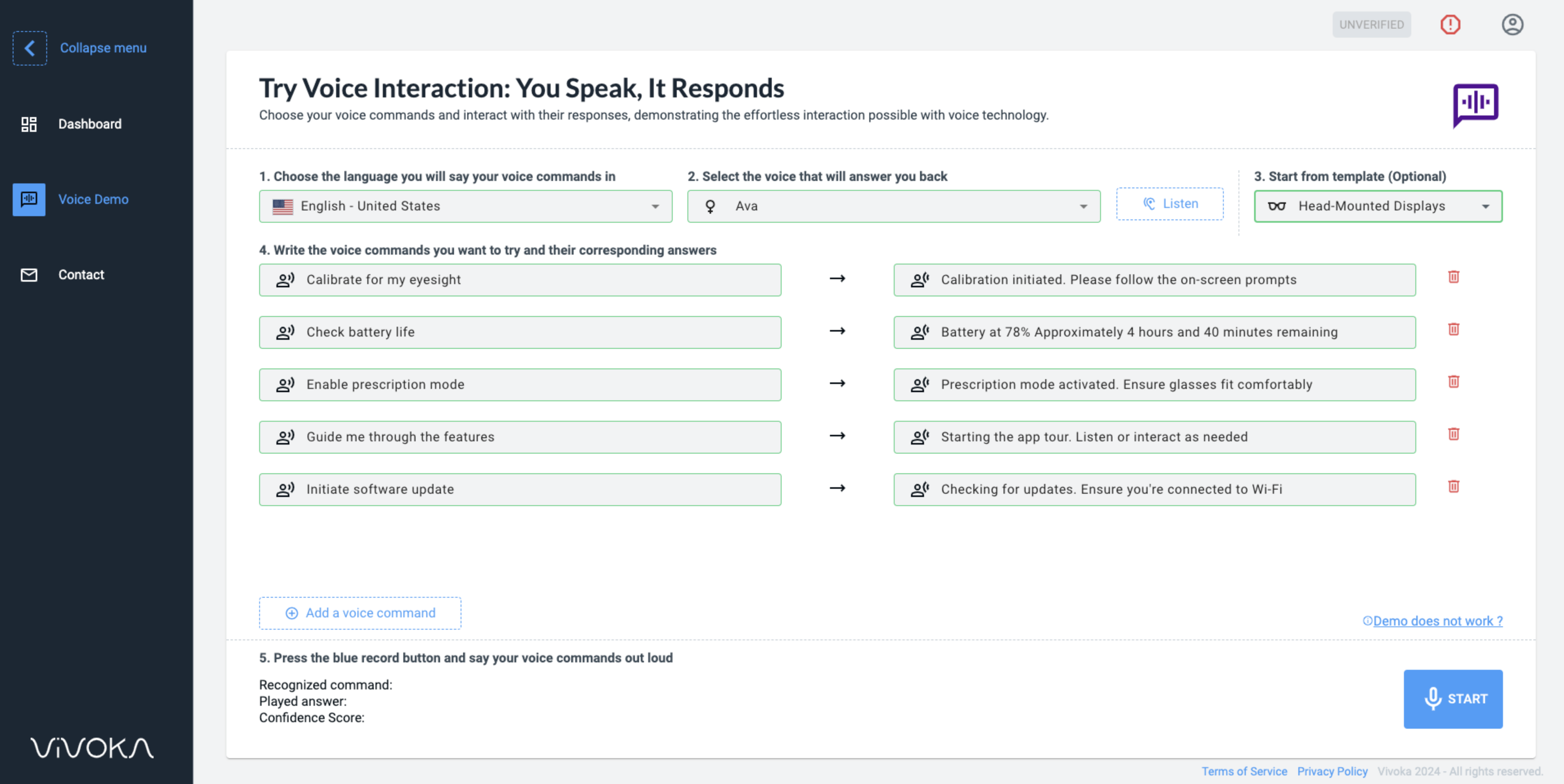

- Interactive Voice Response (IVR) systems: Interactive voice response (IVR) is a technology that automates the process of routing callers to the appropriate department. It understands customer queries and routes calls to the relevant departments. This reduces the call volume for contact centers and minimizes wait times. IVR systems address simple customer questions without human intervention by employing pre-recorded messages or text-to-speech technology. Automatic Speech Recognition (ASR) allows IVR systems to comprehend and respond to customer inquiries and complaints in real time.

- Customer support automation and chatbots: According to a survey, 78% of consumers interacted with a chatbot in 2022, but 80% of respondents said using chatbots increased their frustration level.

- Sentiment analysis and call monitoring: Speech recognition technology converts spoken content from a call into text. After speech-to-text processing, natural language processing (NLP) techniques analyze the text and assign a sentiment score to the conversation, such as positive, negative, or neutral. By integrating speech recognition with sentiment analysis, organizations can address issues early on and gain valuable insights into customer preferences.

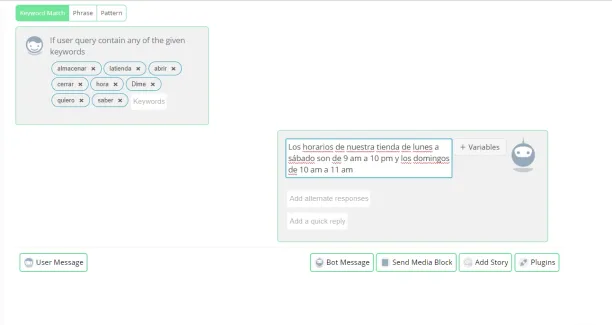

- Multilingual support: Speech recognition software can be trained in various languages to recognize and transcribe the language spoken by a user accurately. By integrating speech recognition technology into chatbots and Interactive Voice Response (IVR) systems, organizations can overcome language barriers and reach a global audience (Figure 7). Multilingual chatbots and IVR automatically detect the language spoken by a user and switch to the appropriate language model.

Figure 7: Showing how a multilingual chatbot recognizes words in another language

- Customer authentication with voice biometrics: Voice biometrics use speech recognition technologies to analyze a speaker’s voice and extract features such as accent and speed to verify their identity.

Sales and Marketing:

- Virtual sales assistants: Virtual sales assistants are AI-powered chatbots that assist customers with purchasing and communicate with them through voice interactions. Speech recognition allows virtual sales assistants to understand the intent behind spoken language and tailor their responses based on customer preferences.

- Transcription services: Speech recognition software records audio from sales calls and meetings and then converts the spoken words into written text using speech-to-text algorithms.

Automotive:

- Voice-activated controls: Voice-activated controls allow users to interact with devices and applications using voice commands. Drivers can operate features like climate control, phone calls, or navigation systems.

- Voice-assisted navigation: Voice-assisted navigation provides real-time voice-guided directions by utilizing the driver’s voice input for the destination. Drivers can request real-time traffic updates or search for nearby points of interest using voice commands without physical controls.

Healthcare:

- Recording the physician’s dictation

- Transcribing the audio recording into written text using speech recognition technology

- Editing the transcribed text for better accuracy and correcting errors as needed

- Formatting the document in accordance with legal and medical requirements.

- Virtual medical assistants: Virtual medical assistants (VMAs) use speech recognition, natural language processing, and machine learning algorithms to communicate with patients through voice or text. Speech recognition software allows VMAs to respond to voice commands, retrieve information from electronic health records (EHRs) and automate the medical transcription process.

- Electronic Health Records (EHR) integration: Healthcare professionals can use voice commands to navigate the EHR system, access patient data, and enter data into specific fields.

Technology:

- Virtual agents: Virtual agents utilize natural language processing (NLP) and speech recognition technologies to understand spoken language and convert it into text. Speech recognition enables virtual agents to process spoken language in real-time and respond promptly and accurately to user voice commands.

Speech Recognition

What Is Speech Recognition?

Speech recognition is the technology that allows a computer to recognize human speech and process it into text. It’s also known as automatic speech recognition (ASR), speech-to-text, or computer speech recognition.

Speech recognition systems rely on technologies like artificial intelligence (AI) and machine learning (ML) to learn from large samples of speech, including different languages, accents, and dialects. AI is used to identify patterns of speech, words, and language to transcribe them into a written format.

In this blog post, we’ll take a deeper dive into speech recognition and look at how it works, its real-world applications, and how platforms like aiOla are using it to change the way we work.

Basic Speech Recognition Concepts

To start understanding speech recognition and all its applications, we need to first look at what it is and isn’t. While speech recognition is more than just the sum of its parts, it’s important to look at each of the parts that contribute to this technology to better grasp how it can make a real impact. Let’s take a look at some common concepts.

Speech Recognition vs. Speech Synthesis

Unlike speech recognition, which converts spoken language into a written format through a computer, speech synthesis does the same in reverse. In other words, speech synthesis is the creation of artificial speech derived from a written text, where a computer uses an AI-generated voice to simulate spoken language. For example, think of the language voice assistants like Siri or Alexa use to communicate information.

Phonetics and Phonology

Phonetics studies the physical sound of human speech, such as its acoustics and articulation. Alternatively, phonology looks at the abstract representation of sounds in a language including their patterns and how they’re organized. These two concepts need to be carefully weighed for speech AI algorithms to understand sound and language as a human might.

Acoustic Modeling

Acoustic modeling examines the acoustic characteristics of audio and speech. For speech recognition systems, this process is essential because it analyzes the audio features of each word, such as its frequency content, its duration, and the sounds it encompasses.

Language Modeling

Language modeling algorithms look at details like the likelihood of word sequences in a language. This type of modeling helps make speech recognition systems more accurate as it mimics real spoken language by looking at the probability of word combinations in phrases.

Speaker-Dependent vs. Speaker-Independent Systems

A system that’s dependent on a speaker is trained on the unique voice and speech patterns of a specific user, meaning the system might be highly accurate for that individual but not as much for other people. By contrast, a system that’s independent of a speaker can recognize speech for any number of speakers, and while more versatile, may be slightly less accurate.

How Does Speech Recognition Work?

There are a few different stages to speech recognition, each one providing another layer to how language is processed by a computer. Here are the different steps that make up the process.

- First, raw audio input undergoes a process called preprocessing, where background noise is removed to enhance sound quality and make recognition more manageable.

- Next, the audio goes through feature extraction, where algorithms identify distinct characteristics of sounds and words.

- Then, these extracted features go through acoustic modeling, which, as described earlier, is the stage where acoustic and language models decide the most likely textual representation of the word. These acoustic models are trained on extensive datasets, allowing them to learn the acoustic patterns of different spoken words.

- At the same time, language modeling looks at the structure and probability of words in a sequence, which helps provide context.

- After this, the output goes into a decoding sequence, where the speech recognition system matches data from the extracted features with the acoustic models. This helps determine the most likely word sequence.

- Finally, the audio and corresponding textual output go through post-processing, which refines the output by correcting errors and improving coherence to create a more accurate transcription.
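The decoding step in the list above, finding the most likely sequence given model scores, is classically done in HMM-based recognizers with the Viterbi algorithm. The sketch below is a toy version: the two hidden states, the "low"/"high" energy observations and every probability are invented for illustration, and nothing here is specific to any particular product.

```python
def viterbi(observations, states, start_p, trans_p, emit_p):
    """Return the most likely hidden-state path for the observations."""
    # best[t][s] = (probability of the best path ending in state s at
    # time t, previous state on that path)
    best = [{s: (start_p[s] * emit_p[s][observations[0]], None) for s in states}]
    for obs in observations[1:]:
        column = {}
        for s in states:
            column[s] = max(
                (best[-1][p][0] * trans_p[p][s] * emit_p[s][obs], p)
                for p in states
            )
        best.append(column)
    # Trace back from the most probable final state.
    state = max(states, key=lambda s: best[-1][s][0])
    path = [state]
    for column in reversed(best[1:]):
        state = column[state][1]
        path.append(state)
    return path[::-1]


# Hypothetical two-state model: is each frame silence or speech?
states = ["silence", "speech"]
start_p = {"silence": 0.8, "speech": 0.2}
trans_p = {
    "silence": {"silence": 0.7, "speech": 0.3},
    "speech": {"silence": 0.2, "speech": 0.8},
}
emit_p = {
    "silence": {"low": 0.9, "high": 0.1},
    "speech": {"low": 0.3, "high": 0.7},
}

print(viterbi(["low", "high", "high"], states, start_p, trans_p, emit_p))
```

For long utterances, practical implementations work with log probabilities instead of raw products to avoid numerical underflow.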

When it comes to advanced systems, all of these stages are done nearly instantaneously, making this process almost invisible to the average user. All of these stages together have made speech recognition a highly versatile tool that can be used in many different ways, from virtual assistants to transcription services and beyond.

Types of Speech Recognition Systems

Speech recognition technology is used in many different ways today, transforming the way humans and machines interact and work together. From professional settings to helping us make our lives a little easier, this technology can take on many forms. Here are some of them.

Virtual Assistants

In 2022, 62% of US adults used a voice assistant on various mobile devices. Siri, Google Assistant, and Alexa are all examples of speech recognition in our daily lives. These applications respond to vocal commands and can interact with humans through natural language in order to complete tasks like sending messages, answering questions, or setting reminders.

Voice Search

Search engines like Google can be searched using voice instead of typing in a query, often with voice assistants. This allows users to conveniently search for a quick answer without sorting through content when they need to be hands-free, like when driving or multitasking. This technology has become so popular over the last few years that now 50% of US-based consumers use voice search every single day.

Transcription Services

Speech recognition has completely changed the transcription industry. It has enabled transcription services to automate the process of turning speech into text, increasing efficiency in many fields like education, legal services, healthcare, and even journalism.

Accessibility

With speech recognition, technologies that may have seemed out of reach are now accessible to people with disabilities. For example, for people with motor impairments or who are visually impaired, AI voice-to-text technology can help with the hands-free operation of things like keyboards, writing assistance for dictation, and voice commands to control devices.

Automotive Systems

Speech recognition is keeping drivers safer by giving them hands-free control over in-car features. Drivers can make calls, adjust the temperature, navigate, or even control the music without ever removing their hands from the wheel and instead just issuing voice commands to a speech-activated system.

How Does aiOla Use Speech Recognition?

aiOla’s AI-powered speech platform is revolutionizing the way certain industries work by bringing advanced speech recognition technology to companies in fields like aviation, fleet management, food safety, and manufacturing.

Traditionally, many processes in these industries were manual, forcing organizations to use a lot of time, budget, and resources to complete mission-critical tasks like inspections and maintenance. However, with aiOla’s advanced speech system, these otherwise labor and resource-intensive tasks can be reduced to a matter of minutes using natural language.

Rather than manually writing to record data during inspections, inspectors can speak about what they’re verifying and the data gets stored instantly. Similarly, through dissecting speech, aiOla can help with predictive maintenance of essential machinery, allowing food manufacturers to produce safer items and decrease downtime.

Since aiOla’s speech recognition platform understands over 100 languages and countless accents, dialects, and industry-specific jargon, the system is highly accurate and can help turn speech into action to go a step further and automate otherwise manual tasks.

Embracing Speech Recognition Technology

Looking ahead, we can only expect the technology that relies on speech recognition to improve and become more embedded into our day-to-day lives. Indeed, the market for this technology is expected to grow to $19.57 billion by 2030. Whether it’s refining virtual assistants, improving voice search, or applying speech recognition to new industries, this technology is here to stay and enhance our personal and professional lives.

aiOla, while also a relatively new technology, is already making waves in industries like manufacturing, fleet management, and food safety. Through technological advancements in speech recognition, we only expect aiOla’s capabilities to continue to grow and support a larger variety of businesses and organizations.

What is speech recognition software?

Speech recognition software is a technology that enables computers to convert speech into written words. This is done through algorithms that analyze audio signals along with AI, ML, and other technologies.

What is a speech recognition example?

A relatable example of speech recognition is asking a virtual assistant like Siri on a mobile device to check the day’s weather or set an alarm. While speech recognition can complete a lot more advanced tasks, this exemplifies how this technology is commonly used in everyday life.

What is speech recognition in AI?

Speech recognition in AI refers to how artificial intelligence processes are used to aid in recognizing voice and language using advanced models and algorithms trained on vast amounts of data.

What are some different types of speech recognition?

A few different types of speech recognition include speaker-dependent and speaker-independent systems, command and control systems, and continuous speech recognition.

What is the difference between voice recognition and speech recognition?

Speech recognition converts spoken language into text, while voice recognition works to identify a speaker’s unique vocal characteristics for authentication purposes. In essence, voice recognition is more tied to identity than to transcription.

What Is Speech Recognition?

Automatic Speech Recognition (ASR) software transforms voice commands or utterances into digital information that computers can use to process human speech as input. In a number of varying applications, speech recognition enables users to navigate a voice-user interface or interact with a computer system through spoken directives.

How It Works

Given the sheer number of words in every language as well as variations in pronunciation from region to region, ASR software has a very difficult task trying to understand us.

The software must first transform our analog voice into a digital format. It has to distinguish between words and sounds within words, which it does using phonemes, the smallest elements of any language (e.g., the word "the" splits into "th" and "uh").

ASR compares the phonemes in context with the other phonemes around them while also analyzing the preceding and following words, for context. The software uses complicated statistical modeling, such as Hidden Markov Models, to find the likely word.

Some speech recognition systems are speaker-dependent, meaning they require a training period to adjust to specific users’ voices for optimum performance. Other systems are speaker-independent, meaning they work without a training period and for any user.

All ASR systems incorporate noise reduction elements to filter out background noise from actual speech.

Speech Recognition vs. Voice Biometrics

While speech recognition software identifies what a speaker is saying, voice biometrics software identifies who’s speaking.

ASR Systems vs. Speech Recognition Engines

A speech recognition engine is a component of the larger speech recognition system, which uses a speech recognition engine, a text-to-speech engine and a dialog manager. A speech recognition engine has several components: a language model or grammar, an acoustic model and a decoder.

Speech Recognition Applications

Most visibly, ASR is a key technology in the latest mobile devices with personal assistants (Siri, et cetera) and interactive voice response (IVR) systems that often couple ASR with speech synthesis.

Uses include data entry (password for IVR), voice dialing or texting, speech-to-text (dictation), device control (home appliances, et cetera) and direct voice input (voice commands in aviation).

Around since the 1960s, ASR has seen steady, incremental improvement over the years. It has benefited greatly from increased processing speed of computers in the last decade, entering the marketplace in the mid-2000s.

Early systems were acoustic phonetics-based and worked with small vocabularies to identify isolated words. Over the years, vocabularies have grown while ASR systems have become statistics-based (Hidden Markov Models). They now have large vocabularies and can recognize continuous speech.

What is Automatic Speech Recognition (ASR) Technology?

Automatic Speech Recognition (ASR) is revolutionizing the way we interact with technology, turning spoken words into text with incredible accuracy. But how does this magic work?

Automatic Speech Recognition (ASR), also known as Speech-to-Text (STT), is an artificial intelligence technology that converts spoken words into written text. Over the past decade, ASR has evolved to become an integral part of our daily lives, powering voice assistants, smart speakers, voice search, live captioning, and much more. Let's take a deep dive into how this fascinating technology works under the hood and the latest advancements transforming the field.

How ASR Works

At a high level, an ASR system takes in an audio signal containing speech as input, analyzes it, and outputs the corresponding text transcription. But a lot of complex processing happens in between those steps.

A typical ASR pipeline consists of several key components:

- Acoustic Model - This is usually a deep learning model trained to map audio features to phonemes, the distinct units of sound that distinguish one word from another in a language. The model is trained on many hours of transcribed speech data.

- Pronunciation Model - This contains a mapping of vocabulary words to their phonetic pronunciations. It helps the system determine what sounds make up each word.

- Language Model - The language model is trained on huge text corpora to learn the probability distributions of word sequences. This helps the system determine what word is likely to come next given the previous words, allowing it to handle homophones and pick the most probable word.

- Decoder - The decoder takes the outputs from the acoustic model, pronunciation model, and language model to search for and output the most likely word sequence that aligns with the input audio.
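The way these components interact can be illustrated with a toy decoder. The sketch below is only an illustration under loud assumptions: the acoustic scores and bigram probabilities are invented numbers, the pronunciation model is folded into the per-word acoustic scores, and the search is brute force rather than the beam search a real decoder would use. It shows how a language model lets the system choose among homophones that the acoustic model cannot tell apart.

```python
import itertools
import math

# Hypothetical acoustic scores: for each word slot, the probability that the
# audio matches each candidate word. Homophones get identical scores.
ACOUSTIC = [
    {"i": 0.9, "eye": 0.9},
    {"want": 0.8, "wont": 0.7},
    {"two": 0.6, "to": 0.6, "too": 0.6},
    {"go": 0.9},
]

# Hypothetical bigram language model: P(word | previous word).
BIGRAMS = {
    ("<s>", "i"): 0.5, ("<s>", "eye"): 0.01,
    ("i", "want"): 0.3,
    ("want", "to"): 0.5, ("want", "two"): 0.02, ("want", "too"): 0.01,
    ("to", "go"): 0.2, ("two", "go"): 0.001,
}
FLOOR = 1e-4  # assumed probability for word pairs missing from the table


def decode(acoustic, bigrams):
    """Return the word sequence with the best combined log score."""
    best_words, best_score = None, float("-inf")
    # Brute-force search over every combination of candidate words.
    for words in itertools.product(*(slot.keys() for slot in acoustic)):
        score, previous = 0.0, "<s>"
        for slot, word in zip(acoustic, words):
            score += math.log(slot[word])                            # acoustic evidence
            score += math.log(bigrams.get((previous, word), FLOOR))  # language model
            previous = word
        if score > best_score:
            best_words, best_score = list(words), score
    return best_words


print(decode(ACOUSTIC, BIGRAMS))
```

Because "two", "to", and "too" receive the same acoustic score here, only the language model term separates them.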

Early ASR systems used statistical models like Hidden Markov Models (HMMs) and Gaussian Mixture Models (GMMs). Today, state-of-the-art systems leverage the power of deep learning, using architectures like Recurrent Neural Networks (RNNs), Convolutional Neural Networks (CNNs), and Transformers to dramatically improve recognition accuracy.

End-to-end deep learning approaches like Connectionist Temporal Classification (CTC) and encoder-decoder models with attention have also gained popularity. These combine the various components of the traditional ASR pipeline into a single neural network that can be trained end-to-end, simplifying the system.
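To make CTC a little more concrete, here is a minimal sketch of the greedy decoding step that is commonly paired with it: pick the most likely label for every audio frame, merge consecutive repeats, then drop the special blank label. The blank symbol `_` and the per-frame probability dictionaries are assumptions made for this example; a real system would take these probabilities from the neural network and would often use beam search instead.

```python
BLANK = "_"  # assumed symbol for the CTC blank label


def ctc_collapse(labels, blank=BLANK):
    """Merge consecutive repeated labels, then remove blanks."""
    decoded = []
    previous = None
    for label in labels:
        if label != previous and label != blank:
            decoded.append(label)
        previous = label
    return "".join(decoded)


def ctc_greedy_decode(frame_probs, blank=BLANK):
    """frame_probs: one dict per frame mapping label -> probability."""
    best_path = [max(frame, key=frame.get) for frame in frame_probs]
    return ctc_collapse(best_path, blank)


# Hypothetical network output for five frames of audio.
frames = [
    {"c": 0.8, "a": 0.1, "t": 0.05, "_": 0.05},
    {"c": 0.6, "a": 0.2, "t": 0.1, "_": 0.1},
    {"c": 0.1, "a": 0.7, "t": 0.1, "_": 0.1},
    {"c": 0.1, "a": 0.1, "t": 0.1, "_": 0.7},
    {"c": 0.05, "a": 0.05, "t": 0.8, "_": 0.1},
]
print(ctc_greedy_decode(frames))
```

The blank label is what allows genuinely doubled letters to survive: the path `hel_lo` collapses to "hello", whereas `hello` with no blank between the two l's would collapse to "helo".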

Despite the rapid progress, ASR still faces many challenges due to the immense complexity of human speech. Key challenges include:

- Accents & Pronunciations - Handling diverse speaker accents and pronunciations is difficult. Models need to be trained on speech data covering a wide variety of accents.

- Background Noise - Background sounds, muffled speech, and poor audio quality can drastically reduce transcription accuracy. A technique like speech enhancement is used to handle this.

- Different Languages - Supporting ASR for the thousands of languages worldwide, each with unique sounds, grammar, and scripts, is a massive undertaking. Techniques like transfer learning and multilingual models help, but collecting sufficient labeled training data for each language remains a bottleneck.

- Specialized Vocabulary - Many use cases like medical dictation involve very specialized domain-specific terminology that generic models struggle with. Custom models need to be trained with in-domain data for such specialized vocabularies.

Use Cases & Applications

ASR has a vast and rapidly growing range of applications, including:

- Voice Assistants & Smart Speakers - Siri, Alexa, and Google Assistant rely on ASR to understand spoken requests.

- Hands-free Computing - Voice-to-text allows dictating emails and documents, navigating apps, and issuing commands hands-free.

- Call Center Analytics - ASR allows analyzing support calls at scale to gauge customer sentiment, ensure compliance, and identify areas for agent coaching.

- Closed Captioning - Live ASR makes real-time captioning possible for lectures, news broadcasts, and video calls, enhancing accessibility.

- Medical Documentation - Healthcare professionals can dictate clinical notes for electronic health records.

- Meeting Transcription - ASR enables generating searchable transcripts and summaries of meetings, lectures, and depositions.

Latest Advancements

Some exciting recent advancements in ASR include:

- Contextual and Semantic Understanding - Beyond just transcribing the literal words, models are getting better at understanding intent and semantics using the whole conversation history as context.

- Emotion & Sentiment Recognition - Analyzing the prosody and intonation to recognize the underlying emotions in addition to the words.

- Ultra-low Latency Streaming - Reducing the latency of real-time transcription to under 100ms using techniques like blockwise streaming and speculative beam search.

- Improved Noise Robustness - Handling extremely noisy environments with signal-to-noise ratios as low as 0 dB.

- Personalizing to Voices - Improving accuracy for individuals by personalizing models for their unique voice, accent, and phrasing patterns.

- Huge Pre-trained Models - Leveraging self-supervised learning on unlabeled data to train massive models that can be fine-tuned for specific languages/domains with less labeled data, inspired by NLP successes like GPT-3.
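For readers unfamiliar with the decibel figure in the noise robustness item, the following sketch shows how a signal-to-noise ratio is usually computed: ten times the base-10 logarithm of the ratio of signal power to noise power. The sample values are made up for illustration. An SNR of 0 dB means the speech and the noise carry equal power.

```python
import math


def mean_power(samples):
    """Average power of a sequence of audio samples."""
    return sum(x * x for x in samples) / len(samples)


def snr_db(signal, noise):
    """Signal-to-noise ratio in decibels."""
    return 10.0 * math.log10(mean_power(signal) / mean_power(noise))


speech = [1.0, -1.0, 1.0, -1.0]       # made-up speech samples
loud_noise = [1.0, 1.0, -1.0, -1.0]   # noise with the same power as the speech
quiet_noise = [0.1, 0.1, -0.1, -0.1]  # noise with one hundredth of the power

print(snr_db(speech, loud_noise))   # equal power
print(snr_db(speech, quiet_noise))  # speech 100 times more powerful
```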

The Future of ASR

As ASR technology continues to mature and permeate our lives and work, what does the future hold? We can expect the technology to become more accurate, more reliable in challenging acoustic environments, and more natural at interpreting meaning and intent beyond the literal spoken words.

Continuous personalization will allow ASR to adapt to your individual voice over time. We'll see more real-world products like earbuds with always-on voice interfaces. ASR will become more inclusive, supporting many more languages and niche use cases. Over time, talking to technology may become as natural as typing on a keyboard is today.

Advancements in ASR are intertwined with progress in natural language processing and dialog systems. As computers get better at truly understanding and engaging in human-like conversation, seamless human-computer interaction through natural spoken language will open up endless possibilities limited only by our imagination.

In conclusion, Automatic Speech Recognition has come a long way and is continuing to advance at a rapid pace. It's an exciting technology to keep an eye on as it shapes the future of how we interact with technology. From improving accessibility to transforming the way we work, the potential impact is immense.

Speech Recognition

Speech Recognition is the decoding of human speech into transcribed text through a computer program. To recognize spoken words, the program must transcribe the incoming sound signal into a digitized representation, which must then be compared to an enormous database of digitized representations of spoken words. To transcribe speech with any tolerable degree of accuracy, users must speak each word independently, with a pause between each word. This substantially slows speech-recognition systems and calls their utility into question, except in the case of physical disabilities that would prevent input by other means. See discrete speech recognition.

Author Jennifer Spencer

Speech recognition software

by Chris Woodford. Last updated: August 17, 2023.

It's just as well people can understand speech. Imagine if you were like a computer: friends would have to "talk" to you by prodding away at a plastic keyboard connected to your brain by a long, curly wire. If you wanted to say "hello" to someone, you'd have to reach out, chatter your fingers over their keyboard, and wait for their eyes to light up; they'd have to do the same to you. Conversations would be a long, slow, elaborate nightmare, a silent dance of fingers on plastic: strange, abstract, and remote. We'd never put up with such clumsiness as humans, so why do we talk to our computers this way?

Scientists have long dreamed of building machines that can chatter and listen just like humans. But although computerized speech recognition has been around for decades, and is now built into most smartphones and PCs, few of us actually use it. Why? Possibly because we never even bother to try it out, working on the assumption that computers could never pull off a trick so complex as understanding the human voice. It's certainly true that speech recognition is a complex problem that's challenged some of the world's best computer scientists, mathematicians, and linguists. How well are they doing at cracking the problem? Will we all be chatting to our PCs one day soon? Let's take a closer look and find out!

Photo: A court reporter dictates notes into a laptop with a noise-cancelling microphone and speech-recognition software. Photo by Micha Pierce courtesy of US Marine Corps and DVIDS.

What is speech?

Language sets people far above our creeping, crawling animal friends. While the more intelligent creatures, such as dogs and dolphins, certainly know how to communicate with sounds, only humans enjoy the rich complexity of language. With just a couple of dozen letters, we can build any number of words (most dictionaries contain tens of thousands) and express an infinite number of thoughts.

Photo: Speech recognition has been popping up all over the place for quite a few years now. Even my old iPod Touch (dating from around 2012) has a built-in "voice control" program that lets you pick out music just by saying "Play albums by U2," or whatever band you're in the mood for.

When we speak, our voices generate little sound packets called phones (which correspond to the sounds of letters or groups of letters in words); so speaking the word cat produces phones that correspond to the sounds "c," "a," and "t." Although you've probably never heard of these kinds of phones before, you might well be familiar with the related concept of phonemes : simply speaking, phonemes are the basic LEGO™ blocks of sound that all words are built from. Although the difference between phones and phonemes is complex and can be very confusing, this is one "quick-and-dirty" way to remember it: phones are actual bits of sound that we speak (real, concrete things), whereas phonemes are ideal bits of sound we store (in some sense) in our minds (abstract, theoretical sound fragments that are never actually spoken).

Computers and computer models can juggle around with phonemes, but analyzing real speech always involves processing phones. When we listen to speech, our ears catch phones flying through the air and our leaping brains flip them back into words, sentences, thoughts, and ideas, so quickly that we often know what people are going to say before the words have fully fled from their mouths. Instant, easy, and quite dazzling, our amazing brains make this seem like a magic trick. And it's perhaps because listening seems so easy to us that we think computers (in many ways even more amazing than brains) should be able to hear, recognize, and decode spoken words as well. If only it were that simple!

Why is speech so hard to handle?

The trouble is, listening is much harder than it looks (or sounds): there are all sorts of different problems going on at the same time... When someone speaks to you in the street, there's the sheer difficulty of separating their words (what scientists would call the acoustic signal) from the background noise, especially in something like a cocktail party, where the "noise" is similar speech from other conversations. When people talk quickly, and run all their words together in a long stream, how do we know exactly when one word ends and the next one begins? (Did they just say "dancing and smile" or "dance, sing, and smile"?) There's the problem of how everyone's voice is a little bit different, and the way our voices change from moment to moment. How do our brains figure out that a word like "bird" means exactly the same thing when it's trilled by a ten-year-old girl or boomed by her forty-year-old father? What about words like "red" and "read" that sound identical but mean totally different things (homophones, as they're called)? How does our brain know which word the speaker means? What about sentences that are misheard to mean radically different things? There's the age-old military example of "send reinforcements, we're going to advance" being misheard for "send three and fourpence, we're going to a dance", and all of us can probably think of song lyrics we've hilariously misunderstood the same way (I always chuckle when I hear Kate Bush singing about "the cattle burning over your shoulder"). On top of all that stuff, there are issues like syntax (the grammatical structure of language) and semantics (the meaning of words) and how they help our brain decode the words we hear, as we hear them. Weighing up all these factors, it's easy to see that recognizing and understanding spoken words in real time (as people speak to us) is an astonishing demonstration of blistering brainpower.

It shouldn't surprise or disappoint us that computers struggle to pull off the same dazzling tricks as our brains; it's quite amazing that they get anywhere near!

Photo: Using a headset microphone like this makes a huge difference to the accuracy of speech recognition: it reduces background sound, making it much easier for the computer to separate the signal (the all-important words you're speaking) from the noise (everything else).

How do computers recognize speech?

Speech recognition is one of the most complex areas of computer science, partly because it's interdisciplinary: it involves a mixture of extremely complex linguistics, mathematics, and computing itself. If you read through some of the technical and scientific papers that have been published in this area (a few are listed in the references below), you may well struggle to make sense of the complexity. My objective is to give a rough flavor of how computers recognize speech, so (without any apology whatsoever) I'm going to simplify hugely and miss out most of the details.

Broadly speaking, there are four different approaches a computer can take if it wants to turn spoken sounds into written words:

1: Simple pattern matching

Ironically, the simplest kind of speech recognition isn't really anything of the sort. You'll have encountered it if you've ever phoned an automated call center and been answered by a computerized switchboard. Utility companies often have systems like this that you can use to leave meter readings, and banks sometimes use them to automate basic services like balance inquiries, statement orders, checkbook requests, and so on. You simply dial a number, wait for a recorded voice to answer, then either key in or speak your account number before pressing more keys (or speaking again) to select what you want to do. Crucially, all you ever get to do is choose one option from a very short list, so the computer at the other end never has to do anything as complex as parsing a sentence (splitting a string of spoken sound into separate words and figuring out their structure), much less trying to understand it; it needs no knowledge of syntax (language structure) or semantics (meaning). In other words, systems like this aren't really recognizing speech at all: they simply have to be able to distinguish between ten different sound patterns (the spoken words zero through nine) either using the bleeping sounds of a Touch-Tone phone keypad (technically called DTMF ) or the spoken sounds of your voice.

From a computational point of view, there's not a huge difference between recognizing phone tones and spoken numbers "zero", "one," "two," and so on: in each case, the system could solve the problem by comparing an entire chunk of sound to similar stored patterns in its memory. It's true that there can be quite a bit of variability in how different people say "three" or "four" (they'll speak in a different tone, more or less slowly, with different amounts of background noise) but the ten numbers are sufficiently different from one another for this not to present a huge computational challenge. And if the system can't figure out what you're saying, it's easy enough for the call to be transferred automatically to a human operator.
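As a rough illustration of comparing "an entire chunk of sound to similar stored patterns," here is a sketch of template matching using dynamic time warping (DTW), a classic technique for comparing sequences spoken at different speeds. DTW is named here as one plausible choice, not as what any particular switchboard uses, and the number sequences standing in for sound features are invented for the example.

```python
def dtw_distance(a, b):
    """Dynamic time warping distance between two feature sequences."""
    inf = float("inf")
    n, m = len(a), len(b)
    # cost[i][j] = best alignment cost of a[:i] against b[:j]
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            step = abs(a[i - 1] - b[j - 1])
            cost[i][j] = step + min(
                cost[i - 1][j],      # stretch: a advances, b stays
                cost[i][j - 1],      # stretch: b advances, a stays
                cost[i - 1][j - 1],  # both advance together
            )
    return cost[n][m]


def recognize(utterance, templates):
    """Return the stored word whose template is closest to the utterance."""
    return min(templates, key=lambda word: dtw_distance(utterance, templates[word]))


# Hypothetical one-dimensional "sound patterns" for two stored words.
templates = {
    "three": [1, 3, 5, 3, 1],
    "four": [5, 4, 2, 1, 1],
}

# The same shape as "three", but spoken at half the speed.
slow_three = [1, 1, 3, 3, 5, 5, 3, 3, 1, 1]
print(recognize(slow_three, templates))
```

Because DTW may stretch either sequence in time, the slowly spoken word still aligns perfectly with its template, which is the kind of speaker variability the paragraph above describes.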

Photo: Voice-activated dialing on cellphones is little more than simple pattern matching. You simply train the phone to recognize the spoken version of a name in your phonebook. When you say a name, the phone doesn't do any particularly sophisticated analysis; it simply compares the sound pattern with ones you've stored previously and picks the best match. No big deal—which explains why even an old phone like this 2001 Motorola could do it.

2: Pattern and feature analysis

Automated switchboard systems generally work very reliably because they have such tiny vocabularies: usually, just ten words representing the ten basic digits. The vocabulary that a speech system works with is sometimes called its domain . Early speech systems were often optimized to work within very specific domains, such as transcribing doctor's notes, computer programming commands, or legal jargon, which made the speech recognition problem far simpler (because the vocabulary was smaller and technical terms were explicitly trained beforehand). Much like humans, modern speech recognition programs are so good that they work in any domain and can recognize tens of thousands of different words. How do they do it?

Most of us have relatively large vocabularies, made from hundreds of common words ("a," "the," "but" and so on, which we hear many times each day) and thousands of less common ones (like "discombobulate," "crepuscular," "balderdash," or whatever, which we might not hear from one year to the next). Theoretically, you could train a speech recognition system to understand any number of different words, just like an automated switchboard: all you'd need to do would be to get your speaker to read each word three or four times into a microphone, until the computer generalized the sound pattern into something it could recognize reliably.

The trouble with this approach is that it's hugely inefficient. Why learn to recognize every word in the dictionary when all those words are built from the same basic set of sounds? No-one wants to buy an off-the-shelf computer dictation system only to find they have to read three or four times through a dictionary, training it up to recognize every possible word they might ever speak, before they can do anything useful. So what's the alternative? How do humans do it? We don't need to have seen every Ford, Chevrolet, and Cadillac ever manufactured to recognize that an unknown, four-wheeled vehicle is a car: having seen many examples of cars throughout our lives, our brains somehow store what's called a prototype (the generalized concept of a car, something with four wheels, big enough to carry two to four passengers, that creeps down a road) and we figure out that an object we've never seen before is a car by comparing it with the prototype. In much the same way, we don't need to have heard every person on Earth read every word in the dictionary before we can understand what they're saying; somehow we can recognize words by analyzing the key features (or components) of the sounds we hear. Speech recognition systems take the same approach.

The recognition process

Practical speech recognition systems start by listening to a chunk of sound (technically called an utterance) read through a microphone. The first step involves digitizing the sound (so the up-and-down, analog wiggle of the sound waves is turned into digital format, a string of numbers) by a piece of hardware (or software) called an analog-to-digital (A/D) converter (for a basic introduction, see our article on analog versus digital technology). The digital data is converted into a spectrogram (a graph showing how the component frequencies of the sound change in intensity over time) using a mathematical technique called a Fast Fourier Transform (FFT), then broken into a series of overlapping chunks called acoustic frames, each one typically lasting 1/25 to 1/50 of a second. These are digitally processed in various ways and analyzed to find the components of speech they contain. Assuming we've separated the utterance into words, and identified the key features of each one, all we have to do is compare what we have with a phonetic dictionary (a list of known words and the sound fragments or features from which they're made) and we can identify what's probably been said. (Probably is always the word in speech recognition: no-one but the speaker can ever know exactly what was said.)
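The framing and frequency-analysis steps can be sketched in a few lines. The example below is a simplification under stated assumptions: it uses a slow, naive discrete Fourier transform from the standard library rather than a true FFT (which computes the same values much faster), it applies no window function, and the 8,000 Hz sample rate and pure 1,000 Hz test tone are choices made for the example.

```python
import cmath
import math


def frame_signal(samples, frame_len, hop):
    """Split samples into overlapping frames of frame_len, hop samples apart."""
    return [samples[start:start + frame_len]
            for start in range(0, len(samples) - frame_len + 1, hop)]


def magnitude_spectrum(frame):
    """Naive DFT magnitudes for frequency bins 0 .. n/2 (an FFT gives the same values)."""
    n = len(frame)
    return [abs(sum(x * cmath.exp(-2j * cmath.pi * k * t / n)
                    for t, x in enumerate(frame)))
            for k in range(n // 2 + 1)]


sample_rate = 8000             # assumed samples per second
frame_len = sample_rate // 25  # 1/25 of a second = 320 samples
hop = frame_len // 2           # 50% overlap between neighboring frames

# A tenth of a second of a pure 1,000 Hz tone stands in for digitized speech.
tone = [math.sin(2 * math.pi * 1000 * t / sample_rate) for t in range(800)]

frames = frame_signal(tone, frame_len, hop)
# Each row of the spectrogram is the spectrum of one acoustic frame.
spectrogram = [magnitude_spectrum(frame) for frame in frames]

for row in spectrogram:
    peak_bin = row.index(max(row))
    print(peak_bin * sample_rate / frame_len, "Hz")
```

Each frame's strongest frequency bin lands on the tone's frequency; with real speech, the pattern of strong bins changes from frame to frame, and that changing pattern is what later stages analyze.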

Seeing speech

In theory, since spoken languages are built from only a few dozen phonemes (English uses about 46, while Spanish has only about 24), you could recognize any possible spoken utterance just by learning to pick out phones (or similar key features of spoken language such as formants , which are prominent frequencies that can be used to help identify vowels). Instead of having to recognize the sounds of (maybe) 40,000 words, you'd only need to recognize the 46 basic component sounds (or however many there are in your language), though you'd still need a large phonetic dictionary listing the phonemes that make up each word. This method of analyzing spoken words by identifying phones or phonemes is often called the beads-on-a-string model : a chunk of unknown speech (the string) is recognized by breaking it into phones or bits of phones (the beads); figure out the phones and you can figure out the words.
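A toy version of the beads-on-a-string idea: given a string of recognized phones and a phonetic dictionary, find the words that the phones spell out. The phone symbols and the tiny dictionary below are invented for illustration, and the sketch assumes every phone was recognized correctly, which real systems cannot assume.

```python
# Hypothetical phonetic dictionary: phone sequence -> word.
LEXICON = {
    ("DH", "AH"): "the",
    ("AH",): "a",
    ("K", "AE", "T"): "cat",
    ("S", "AE", "T"): "sat",
}


def segment(phones, lexicon):
    """Split a phone sequence into dictionary words, or return None if impossible."""
    # best[i] holds one valid word sequence covering phones[:i].
    best = {0: []}
    for end in range(1, len(phones) + 1):
        for start in range(end):
            chunk = tuple(phones[start:end])
            if start in best and chunk in lexicon:
                best[end] = best[start] + [lexicon[chunk]]
                break
    return best.get(len(phones))


beads = ["DH", "AH", "K", "AE", "T", "S", "AE", "T"]
print(segment(beads, LEXICON))
```

Note that the dictionary is keyed by phone sequences rather than spellings, which is why only a few dozen sound units are needed to cover any number of words.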

Most speech recognition programs get better as you use them because they learn as they go along using feedback you give them, either deliberately (by correcting mistakes) or by default (if you don't correct any mistakes, you're effectively saying everything was recognized perfectly—which is also feedback). If you've ever used a program like one of the Dragon dictation systems, you'll be familiar with the way you have to correct your errors straight away to ensure the program continues to work with high accuracy. If you don't correct mistakes, the program assumes it's recognized everything correctly, which means similar mistakes are even more likely to happen next time. If you force the system to go back and tell it which words it should have chosen, it will associate those corrected words with the sounds it heard—and do much better next time.

Screenshot: With speech dictation programs like Dragon NaturallySpeaking, shown here, it's important to go back and correct your mistakes if you want your words to be recognized accurately in future.

3: Statistical analysis

In practice, recognizing speech is much more complex than simply identifying phones and comparing them to stored patterns, and for a whole variety of reasons:

- Speech is extremely variable: different people speak in different ways (even though we're all saying the same words and, theoretically, they're all built from a standard set of phonemes).

- You don't always pronounce a certain word in exactly the same way; even if you did, the way you spoke a word (or even part of a word) might vary depending on the sounds or words that came before or after.

- As a speaker's vocabulary grows, the number of similar-sounding words grows too: the digits zero through nine all sound different when you speak them, but "zero" sounds like "hero," "one" sounds like "none," "two" could mean "two," "to," or "too"... and so on. So recognizing numbers is a tougher job for voice dictation on a PC, with a general 50,000-word vocabulary, than for an automated switchboard with a very specific, 10-word vocabulary containing only the ten digits.

- The more speakers a system has to recognize, the more variability it's going to encounter and the bigger the likelihood of making mistakes.

For something like an off-the-shelf voice dictation program (one that listens to your voice and types your words on the screen), simple pattern recognition is clearly going to be a bit hit and miss. The basic principle of recognizing speech by identifying its component parts certainly holds good, but we can do an even better job of it by taking into account how language really works. In other words, we need to use what's called a language model.

When people speak, they're not simply muttering a series of random sounds. Every word you utter depends on the words that come before or after. For example, unless you're a contrary kind of poet, the word "example" is much more likely to follow words like "for," "an," "better," "good", "bad," and so on than words like "octopus," "table," or even the word "example" itself. Rules of grammar make it unlikely that a noun like "table" will be spoken before another noun ("table example" isn't something we say) while—in English at least—adjectives ("red," "good," "clear") come before nouns and not after them ("good example" is far more probable than "example good"). If a computer is trying to figure out some spoken text and gets as far as hearing "here is a ******* example," it can be reasonably confident that ******* is an adjective and not a noun. So it can use the rules of grammar to exclude nouns like "table" and the probability of pairs like "good example" and "bad example" to make an intelligent guess. If it's already identified a "g" sound instead of a "b", that's an added clue.
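The "here is a ******* example" guess can be sketched with a very small bigram language model, which simply counts how often one word follows another. The five training sentences are made up for this example, and the model uses raw counts with no smoothing, so any word pair it has never seen gets a probability of zero; practical systems train on vastly more text and smooth their estimates.

```python
from collections import Counter

# A tiny made-up training corpus.
CORPUS = [
    "here is a good example",
    "here is a bad example",
    "this is a good example",
    "put it on the table",
    "a table is here",
]


def train_bigrams(sentences):
    """Count word pairs and how often each word appears as the first of a pair."""
    pair_counts, first_counts = Counter(), Counter()
    for sentence in sentences:
        words = sentence.split()
        for first, second in zip(words, words[1:]):
            pair_counts[(first, second)] += 1
            first_counts[first] += 1
    return pair_counts, first_counts


def bigram_prob(first, second, pair_counts, first_counts):
    """Estimate P(second | first) from raw counts (no smoothing)."""
    if first_counts[first] == 0:
        return 0.0
    return pair_counts[(first, second)] / first_counts[first]


def gap_score(before, candidate, after, pair_counts, first_counts):
    """Probability of candidate following `before` and preceding `after`."""
    return (bigram_prob(before, candidate, pair_counts, first_counts)
            * bigram_prob(candidate, after, pair_counts, first_counts))


pairs, firsts = train_bigrams(CORPUS)
scores = {word: gap_score("a", word, "example", pairs, firsts)
          for word in ("good", "bad", "table")}
best_guess = max(scores, key=scores.get)
print(scores, best_guess)
```

In this corpus "table" does follow "a", but "table example" never occurs, so the noun is ruled out for the gap while "good" and "bad" remain candidates.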

Virtually all modern speech recognition systems also use a bit of complex statistical hocus-pocus to help figure out what's being said. The probability of one phone following another, the probability of bits of silence occurring in between phones, and the likelihood of different words following other words are all factored in. Ultimately, the system builds what's called a hidden Markov model (HMM) of each speech segment, which is the computer's best guess at which beads are sitting on the string, based on all the things it's managed to glean from the sound spectrum and all the bits and pieces of phones and silence that it might reasonably contain. It's called a Markov model (or Markov chain), for Russian mathematician Andrey Markov, because it's a sequence of different things (bits of phones, words, or whatever) that change from one to the next with a certain probability. Confusingly, it's referred to as a "hidden" Markov model even though it's worked out in great detail and anything but hidden! "Hidden," in this case, simply means the contents of the model aren't observed directly but figured out indirectly from the sound spectrum. From the computer's viewpoint, speech recognition is always a probabilistic "best guess" and the right answer can never be known until the speaker either accepts or corrects the words that have been recognized. (Markov models can be processed with an extra bit of computer jiggery pokery called the Viterbi algorithm, but that's beyond the scope of this article.)
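
For curious readers, here is a rough sketch of the Viterbi idea in Python: given a sequence of observations, find the most probable sequence of hidden states. Everything in the toy model is an assumption invented for illustration (two hidden states, "silence" and "speech," and two crude observations, "quiet" and "loud," with made-up probabilities). Real recognizers work with far richer states and acoustic features.

```python
def viterbi(observations, states, start_p, trans_p, emit_p):
    """Return the most probable hidden-state path and its probability."""
    # best[t][s] = (probability of the best path ending in state s at step t,
    #               the state that path came from)
    best = [{s: (start_p[s] * emit_p[s][observations[0]], None) for s in states}]
    for obs in observations[1:]:
        prev_col = best[-1]
        col = {}
        for s in states:
            # Try every possible previous state and keep the likeliest.
            col[s] = max(
                (prev_col[p][0] * trans_p[p][s] * emit_p[s][obs], p)
                for p in states
            )
        best.append(col)

    # Start from the likeliest final state and trace the path backward.
    last = max(states, key=lambda s: best[-1][s][0])
    path = [last]
    for col in reversed(best[1:]):
        path.append(col[path[-1]][1])
    path.reverse()
    return path, best[-1][last][0]


# Hypothetical toy model: all numbers below are invented for illustration.
STATES = ("silence", "speech")
START_P = {"silence": 0.6, "speech": 0.4}
TRANS_P = {
    "silence": {"silence": 0.7, "speech": 0.3},
    "speech": {"silence": 0.2, "speech": 0.8},
}
EMIT_P = {
    "silence": {"quiet": 0.9, "loud": 0.1},
    "speech": {"quiet": 0.3, "loud": 0.7},
}

path, prob = viterbi(["quiet", "loud", "loud", "quiet"],
                     STATES, START_P, TRANS_P, EMIT_P)
print(path, prob)
```

Notice that the program never sees the states themselves, only the observations; that is the sense in which the states are "hidden."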

4: Artificial neural networks

HMMs have dominated speech recognition since the 1970s—for the simple reason that they work so well. But they're by no means the only technique we can use for recognizing speech. There's no reason to believe that the brain itself uses anything like a hidden Markov model. It's much more likely that we figure out what's being said using dense layers of brain cells that excite and suppress one another in intricate, interlinked ways according to the input signals they receive from our cochleas (the parts of our inner ear that recognize different sound frequencies).

Back in the 1980s, computer scientists developed "connectionist" computer models that could mimic how the brain learns to recognize patterns, which became known as artificial neural networks (sometimes called ANNs). A few speech recognition scientists explored using neural networks, but the dominance and effectiveness of HMMs relegated alternative approaches like this to the sidelines. More recently, scientists have explored using ANNs and HMMs side by side and found they give significantly higher accuracy than HMMs used alone.

Artwork: Neural networks are hugely simplified, computerized versions of the brain (or a tiny part of it) that have inputs (where you feed in information), outputs (where results appear), and hidden units (connecting the two). If you train them with enough examples, they learn by gradually adjusting the strength of the connections between the different layers of units. Once a neural network is fully trained, if you show it an unknown example, it will attempt to recognize what it is based on the examples it's seen before.
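
Here is a very small sketch, in Python, of what "gradually adjusting the strength of the connections" can look like. It is an assumption-laden toy, not how any real speech recognizer is built: the TinyNetwork class, the choice of three hidden units, the learning rate, and the four training examples (the classic "exclusive or" pattern) are all made up for illustration.

```python
import math
import random


def sigmoid(x):
    """Squash any number into the range 0 to 1."""
    return 1.0 / (1.0 + math.exp(-x))


class TinyNetwork:
    """Inputs -> one layer of hidden units -> a single output unit."""

    def __init__(self, n_inputs, n_hidden, seed=0):
        rng = random.Random(seed)
        # Each unit has one weight per incoming connection, plus a bias (last).
        self.w_hidden = [[rng.uniform(-1, 1) for _ in range(n_inputs + 1)]
                         for _ in range(n_hidden)]
        self.w_out = [rng.uniform(-1, 1) for _ in range(n_hidden + 1)]

    def forward(self, inputs):
        hidden = [sigmoid(sum(w * x for w, x in zip(ws, inputs)) + ws[-1])
                  for ws in self.w_hidden]
        output = sigmoid(sum(w * h for w, h in zip(self.w_out, hidden))
                         + self.w_out[-1])
        return hidden, output

    def error(self, examples):
        """Mean squared difference between outputs and targets."""
        return sum((self.forward(x)[1] - t) ** 2 for x, t in examples) / len(examples)

    def train_step(self, examples, rate):
        """Nudge every connection slightly in the direction that reduces error."""
        g_hidden = [[0.0] * len(ws) for ws in self.w_hidden]
        g_out = [0.0] * len(self.w_out)
        for inputs, target in examples:
            hidden, output = self.forward(inputs)
            d_out = (output - target) * output * (1 - output)
            for j, h in enumerate(hidden):
                g_out[j] += d_out * h
                d_hid = d_out * self.w_out[j] * h * (1 - h)
                for i, x in enumerate(inputs):
                    g_hidden[j][i] += d_hid * x
                g_hidden[j][-1] += d_hid
            g_out[-1] += d_out
        for j, ws in enumerate(self.w_hidden):
            for i in range(len(ws)):
                ws[i] -= rate * g_hidden[j][i]
        for j in range(len(self.w_out)):
            self.w_out[j] -= rate * g_out[j]


# Made-up training examples: output 1 only when exactly one input is 1.
EXAMPLES = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]

net = TinyNetwork(n_inputs=2, n_hidden=3)
error_before = net.error(EXAMPLES)
for _ in range(3000):
    net.train_step(EXAMPLES, rate=0.3)
error_after = net.error(EXAMPLES)
print(error_before, error_after)
```

The network starts with random connection strengths and makes poor guesses; each training step shifts the strengths a little, and the error should shrink as the examples are shown again and again.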

Speech recognition: a summary

Artwork: A summary of some of the key stages of speech recognition and the computational processes happening behind the scenes.

What can we use speech recognition for?

We've already touched on a few of the more common applications of speech recognition, including automated telephone switchboards and computerized voice dictation systems. But there are plenty more examples where those came from.

Many of us (whether we know it or not) have cellphones with speech recognition built into them. Back in the late 1990s, state-of-the-art mobile phones offered voice-activated dialing, where, in effect, you recorded a sound snippet for each entry in your phonebook, such as the spoken word "Home," that the phone could then recognize when you spoke it in future. A few years later, systems like SpinVox became popular, helping mobile phone users make sense of voice messages by converting them automatically into text (although a sneaky BBC investigation eventually claimed that some of its state-of-the-art automated speech recognition was actually being done by humans in developing countries!).

Today's smartphones make speech recognition even more of a feature. Apple's Siri, Google Assistant ("Hey Google..."), and Microsoft's Cortana are smartphone "personal assistant apps" that listen to what you say, figure out what you mean, then attempt to do what you ask, whether it's looking up a phone number or booking a table at a local restaurant. They work by linking speech recognition to complex natural language processing (NLP) systems, so they can figure out not just what you say, but what you actually mean, and what you really want to happen as a consequence. Pressed for time and hurtling down the street, mobile users theoretically find this kind of system a boon, at least if you believe the hype in the TV advertisements that Google and Microsoft have been running to promote their systems. (Google quietly incorporated speech recognition into its search engine some time ago, so you can Google just by talking to your smartphone, if you really want to.) If you have one of the latest voice-powered electronic assistants, such as Amazon's Echo/Alexa or Google Home, you don't need a computer of any kind (desktop, tablet, or smartphone): you just ask questions or give simple commands in your natural language to a thing that resembles a loudspeaker... and it answers straight back.

Screenshot: When I asked Google "does speech recognition really work," it took it three attempts to recognize the question correctly.

Will speech recognition ever take off?

I'm a huge fan of speech recognition. After suffering with repetitive strain injury on and off for some time, I've been using computer dictation to write quite a lot of my stuff for about 15 years, and it's been amazing to see the improvements in off-the-shelf voice dictation over that time. The early Dragon NaturallySpeaking system I used on a Windows 95 laptop was fairly reliable, but I had to speak relatively slowly, pausing slightly between each word or word group, giving a horribly staccato style that tended to interrupt my train of thought. This slow, tedious one-word-at-a-time approach ("can – you – tell – what – I – am – saying – to – you") went by the name discrete speech recognition. A few years later, things had improved so much that virtually all the off-the-shelf programs like Dragon were offering continuous speech recognition, which meant I could speak at normal speed, in a normal way, and still be assured of very accurate word recognition. When you can speak normally to your computer, at a normal talking pace, voice dictation programs offer another advantage: they give clumsy, self-conscious writers a much more attractive, conversational style: "write like you speak" (always a good tip for writers) is easy to put into practice when you speak all your words as you write them!

Despite the technological advances, I still generally prefer to write with a keyboard and mouse. Ironically, I'm writing this article that way now. Why? Partly because it's what I'm used to. I often write highly technical stuff with a complex vocabulary that I know will defeat the best efforts of all those hidden Markov models and neural networks battling away inside my PC. It's easier to type "hidden Markov model" than to mutter those words somewhat hesitantly, watch "hiccup half a puddle" pop up on screen and then have to make corrections.

Screenshot: You can always add more words to a speech recognition program. Here, I've decided to train the Microsoft Windows built-in speech recognition engine to spot the words 'hidden Markov model.'

Mobile revolution?

You might think mobile devices, with their slippery touchscreens, would benefit enormously from speech recognition: no-one really wants to type an essay with two thumbs on a pop-up QWERTY keyboard. Ironically, mobile devices are heavily used by younger, tech-savvy kids who still prefer typing and pawing at screens to speaking out loud. Why? All sorts of reasons, from sheer familiarity (it's quick to type once you're used to it, and faster than fixing a computer's goofed-up guesses) to privacy and consideration for others (many of us use our mobile phones in public places and we don't want our thoughts wide open to scrutiny or howls of derision), and the sheer difficulty of speaking clearly and being clearly understood in noisy environments. Recently, I was walking down a street and overheard a small garden party where the sounds of happy laughter, drinking, and discreet background music were punctuated by a sudden grunt of "Alexa play Copacabana by Barry Manilow," which silenced the conversation entirely and seemed jarringly out of place. Speech recognition has never been so indiscreet.

What you're doing with your computer also makes a difference. If you've ever used speech recognition on a PC, you'll know that writing something like an essay (dictating hundreds or thousands of words of ordinary text) is a whole lot easier than editing it afterwards (where you laboriously try to select words or sentences and move them up or down so many lines with awkward cut and paste commands). And trying to open and close windows, start programs, or navigate around a computer screen by voice alone is clumsy, tedious, error-prone, and slow. It's far easier just to click your mouse or swipe your finger.

Photo: Here I'm using Google's Live Transcribe app to dictate the last paragraph of this article. As you can see, apart from the punctuation, the transcription is flawless, without any training at all. This is the fastest and most accurate speech recognition software I've ever used. It's mainly designed as an accessibility aid for deaf and hard of hearing people, but it can be used for dictation too.

Developers of speech recognition systems insist everything's about to change, largely thanks to natural language processing and smart search engines that can understand spoken queries. ("OK Google...") But people have been saying that for decades now: the brave new world is always just around the corner. According to speech pioneer James Baker, better speech recognition "would greatly increase the speed and ease with which humans could communicate with computers, and greatly speed and ease the ability with which humans could record and organize their own words and thoughts"—but he wrote (or perhaps voice dictated?) those words 25 years ago! Just because Google can now understand speech, it doesn't follow that we automatically want to speak our queries rather than type them—especially when you consider some of the wacky things people look for online. Humans didn't invent written language because others struggled to hear and understand what they were saying. Writing and speaking serve different purposes. Writing is a way to set out longer, more clearly expressed and elaborated thoughts without having to worry about the limitations of your short-term memory; speaking is much more off-the-cuff. Writing is grammatical; speech doesn't always play by the rules. Writing is introverted, intimate, and inherently private; it's carefully and thoughtfully composed. Speaking is an altogether different way of expressing your thoughts—and people don't always want to speak their minds. While technology may be ever advancing, it's far from certain that speech recognition will ever take off in quite the way that its developers would like. I'm typing these words, after all, not speaking them.
