Predictive analytics is a branch of advanced analytics that makes predictions about future outcomes using historical data combined with statistical modeling, data mining techniques, and machine learning.

Companies employ predictive analytics to find patterns in this data to identify risks and opportunities. Predictive analytics is often associated with big data and data science.

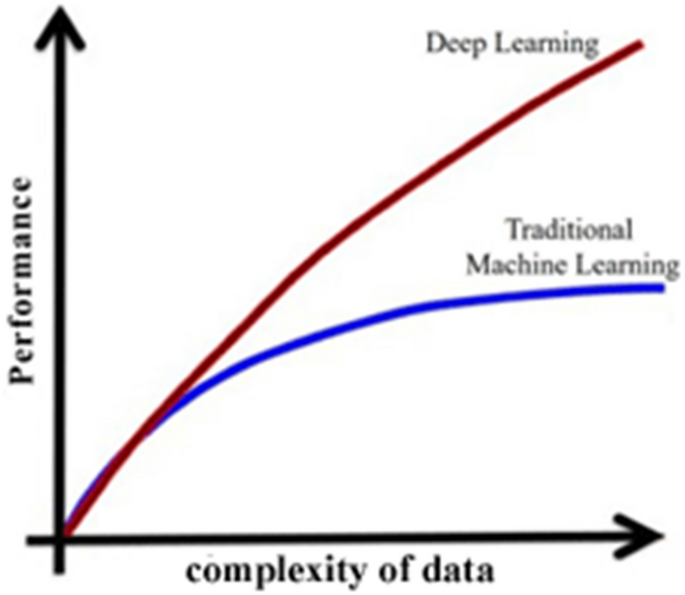

Today, companies are inundated with data, from log files to images and video, and all of this data resides in disparate repositories across the organization. To gain insights from it, data scientists use deep learning and machine learning algorithms to find patterns and make predictions about future events. These statistical techniques include logistic and linear regression models, neural networks, and decision trees. Some of these techniques build on their initial predictions to generate further predictive insights.

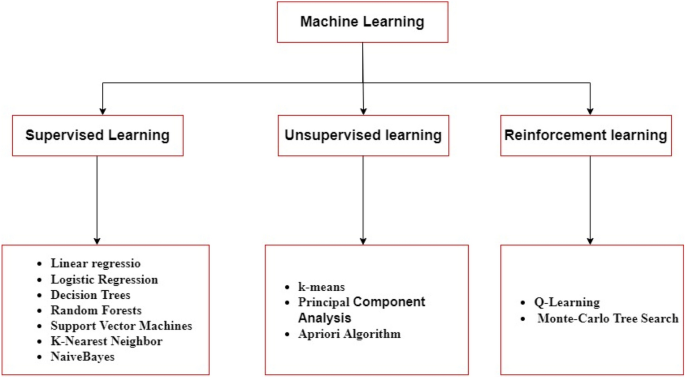

Predictive analytics models are designed to assess historical data, discover patterns, observe trends, and use that information to predict future trends. Popular predictive analytics models include classification, clustering, and time series models.

Classification models

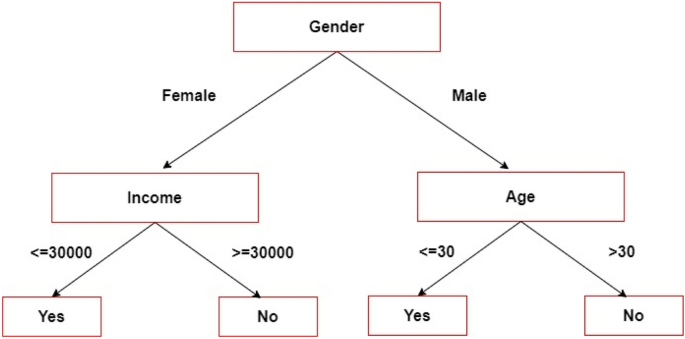

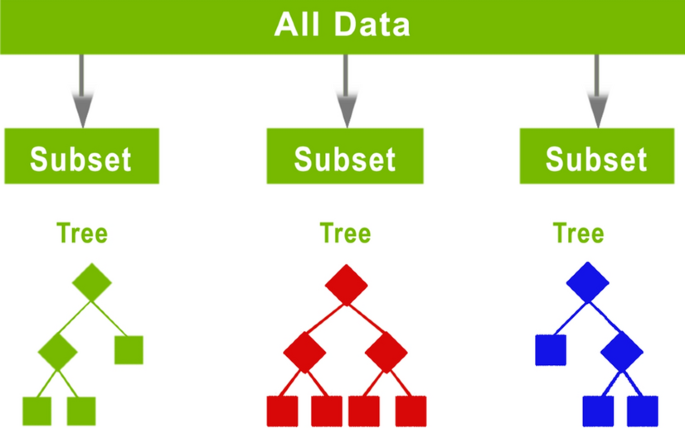

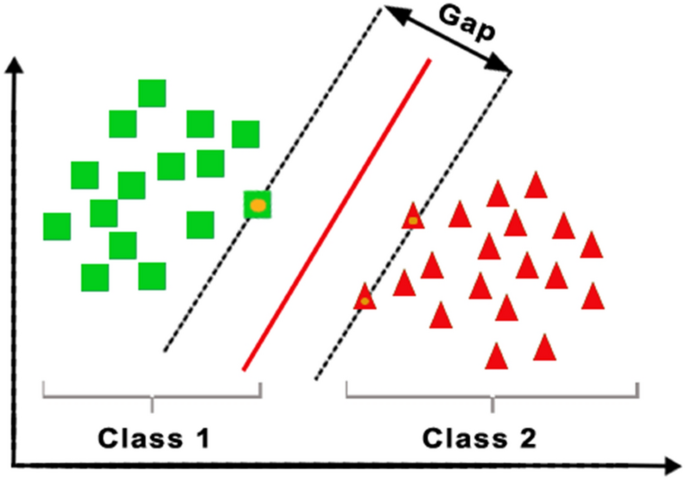

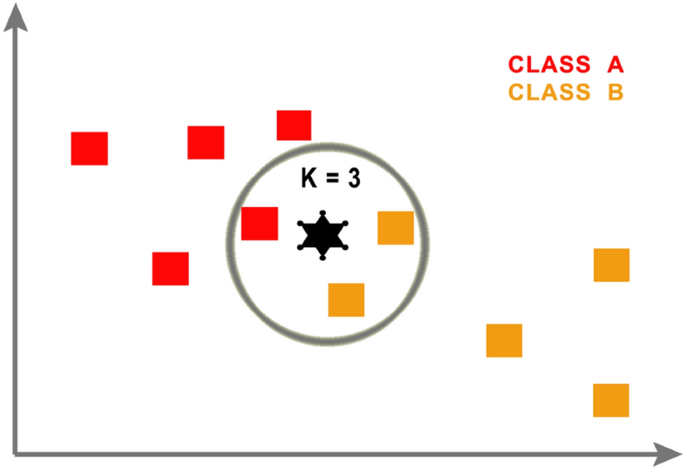

Classification models fall under the branch of supervised machine learning models. These models categorize data based on historical data, describing relationships within a given dataset. For example, a classification model can be used to sort customers or prospects into groups for segmentation purposes. It can also be used to answer questions with binary outputs, such as yes or no, or true or false; popular use cases include fraud detection and credit risk evaluation. Types of classification models include logistic regression, decision trees, random forest, neural networks, and Naïve Bayes.
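
As a rough illustration of the binary classifiers described above, the sketch below fits a one-feature logistic regression by gradient descent in plain Python. The transaction amounts, the labels, the learning rate, and the names `fit_logistic` and `predict_fraud` are all invented for this example; they do not come from any particular product or dataset.

```python
import math


def sigmoid(z: float) -> float:
    """Map any real number to a probability in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def fit_logistic(xs, ys, lr=0.1, epochs=5000):
    """Fit p(y=1 | x) = sigmoid(w*x + b) by batch gradient descent on log loss."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, y in zip(xs, ys):
            err = sigmoid(w * x + b) - y  # prediction error for this example
            grad_w += err * x
            grad_b += err
        w -= lr * grad_w / n
        b -= lr * grad_b / n
    return w, b


# Hypothetical toy data: transaction amount (hundreds of dollars) and fraud label
amounts = [0.5, 1.0, 1.5, 2.0, 6.0, 7.0, 8.0, 9.0]
is_fraud = [0, 0, 0, 0, 1, 1, 1, 1]

w, b = fit_logistic(amounts, is_fraud)


def predict_fraud(amount: float) -> bool:
    """Flag a transaction when the modeled fraud probability is at least 50%."""
    return sigmoid(w * amount + b) >= 0.5
```

The 0.5 cut-off is itself a design choice: in fraud detection or credit risk, a lower threshold trades more false alarms for fewer missed cases.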

Clustering models

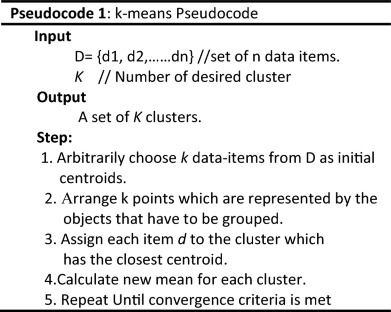

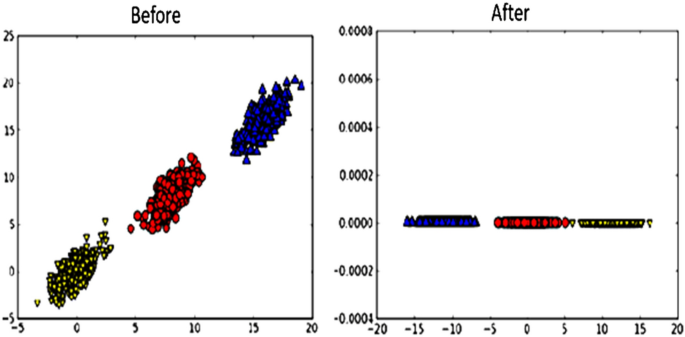

Clustering models fall under unsupervised learning. They group data based on similar attributes. For example, an e-commerce site can use the model to separate customers into similar groups based on common features and develop marketing strategies for each group. Common clustering algorithms include k-means clustering, mean-shift clustering, density-based spatial clustering of applications with noise (DBSCAN), expectation-maximization (EM) clustering using Gaussian Mixture Models (GMM), and hierarchical clustering.
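
To make the grouping step concrete, here is a minimal sketch of k-means (Lloyd's algorithm) in plain Python. The customer features (orders per year, average basket value), the data points, and the choice of starting centroids are hypothetical; a production system would more likely rely on a tested library implementation with randomized initialization.

```python
def kmeans(points, centroids, iterations=10):
    """Lloyd's algorithm: alternate an assignment step and a centroid update."""
    labels = []
    for _ in range(iterations):
        # Assignment step: each point joins the cluster of its nearest centroid
        labels = [
            min(
                range(len(centroids)),
                key=lambda k: sum((p - c) ** 2 for p, c in zip(point, centroids[k])),
            )
            for point in points
        ]
        # Update step: move each centroid to the mean of its members
        new_centroids = []
        for k in range(len(centroids)):
            members = [pt for pt, lab in zip(points, labels) if lab == k]
            if members:
                new_centroids.append(
                    tuple(sum(dim) / len(members) for dim in zip(*members))
                )
            else:
                new_centroids.append(centroids[k])  # leave an empty cluster in place
        centroids = new_centroids
    return labels, centroids


# Hypothetical customers: (orders per year, average basket value in dollars)
customers = [(1, 20), (2, 22), (1, 25), (10, 80), (11, 85), (12, 78)]

# Start from one occasional shopper and one frequent shopper (an arbitrary choice)
labels, centers = kmeans(customers, [customers[0], customers[3]])
```

Each resulting group can then be given its own marketing strategy, as in the e-commerce example.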

Time series models

Time series models use various data inputs at a specific time frequency, such as daily, weekly, monthly, et cetera. It is common to plot the dependent variable over time to assess the data for seasonality, trends, and cyclical behavior, which may indicate the need for specific transformations and model types. Autoregressive (AR), moving average (MA), ARMA, and ARIMA models are all frequently used time series models. As an example, a call center can use a time series model to forecast how many calls it will receive per hour at different times of day.
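
The autoregressive idea can be sketched in a few lines. The example below fits an AR(1) model, y[t] = c + phi * y[t-1], by ordinary least squares and rolls it forward to forecast. The hourly call counts are an invented, noise-free series chosen so that the recovered coefficients are easy to check by hand; real call-center data would be noisy and would usually need seasonality handling.

```python
def fit_ar1(series):
    """Estimate c and phi in y[t] = c + phi * y[t-1] by ordinary least squares."""
    x = series[:-1]  # lagged values y[t-1]
    y = series[1:]   # current values y[t]
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var = sum((a - mean_x) ** 2 for a in x)
    phi = cov / var
    c = mean_y - phi * mean_x
    return c, phi


def forecast(series, c, phi, steps):
    """Roll the fitted model forward, feeding each forecast back in as the next lag."""
    out = []
    last = series[-1]
    for _ in range(steps):
        last = c + phi * last
        out.append(last)
    return out


# Hypothetical calls per hour, generated exactly by y[t] = 20 + 0.5 * y[t-1]
calls = [100.0, 70.0, 55.0, 47.5, 43.75, 41.875]

c, phi = fit_ar1(calls)
next_two_hours = forecast(calls, c, phi, steps=2)
```

Because each forecast is fed back in as the next lag, errors compound as the horizon grows, which is one reason short-horizon forecasts tend to be more reliable.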

Predictive analytics can be deployed across various industries for different business problems. Below are a few industry use cases to illustrate how predictive analytics can inform decision-making within real-world situations.

- Banking: Financial services use machine learning and quantitative tools to make predictions about their prospects and customers. With this information, banks can answer questions like who is likely to default on a loan, which customers pose high or low risks, which customers are the most lucrative to target resources and marketing spend and what spending is fraudulent in nature.

- Healthcare: Predictive analytics in health care is used to detect and manage the care of chronically ill patients, as well as to track specific infections such as sepsis. Geisinger Health used predictive analytics to mine health records to learn more about how sepsis is diagnosed and treated. Geisinger created a predictive model based on health records for more than 10,000 patients who had been diagnosed with sepsis in the past. The model yielded impressive results, correctly predicting patients with a high rate of survival.

- Human resources (HR): HR teams use predictive analytics and employee survey metrics to match prospective job applicants, reduce employee turnover and increase employee engagement. This combination of quantitative and qualitative data allows businesses to reduce their recruiting costs and increase employee satisfaction, which is particularly useful when labor markets are volatile.

- Marketing and sales: While marketing and sales teams are very familiar with business intelligence reports to understand historical sales performance, predictive analytics enables companies to be more proactive in the way that they engage with their clients across the customer lifecycle. For example, churn predictions can enable sales teams to identify dissatisfied clients sooner, enabling them to initiate conversations to promote retention. Marketing teams can leverage predictive data analysis for cross-sell strategies, and this commonly manifests itself through a recommendation engine on a brand’s website.

- Supply chain: Businesses commonly use predictive analytics to manage product inventory and set pricing strategies. This type of predictive analysis helps companies meet customer demand without overstocking warehouses. It also enables companies to assess the cost and return on their products over time. If one part of a given product becomes more expensive to import, companies can project the long-term impact on revenue if they do or do not pass on additional costs to their customer base. For a deeper look at a case study, you can read more about how FleetPride used this type of data analytics to inform their decision making on their inventory of parts for excavators and tractor trailers. Past shipping orders enabled them to plan more precisely to set appropriate supply thresholds based on demand.

An organization that knows what to expect based on past patterns has a business advantage in managing inventories, workforce, marketing campaigns, and most other facets of operation.

- Security: Every modern organization must be concerned with keeping data secure. A combination of automation and predictive analytics improves security. Specific patterns associated with suspicious and unusual end user behavior can trigger specific security procedures.

- Risk reduction: In addition to keeping data secure, most businesses are working to reduce their risk profiles. For example, a company that extends credit can use data analytics to better understand if a customer poses a higher-than-average risk of defaulting. Other companies may use predictive analytics to better understand whether their insurance coverage is adequate.

- Operational efficiency: More efficient workflows translate to improved profit margins. For example, understanding when a vehicle in a fleet used for delivery is going to need maintenance before it’s broken down on the side of the road means deliveries are made on time, without the additional costs of having the vehicle towed and bringing in another employee to complete the delivery.

- Improved decision making: Running any business involves making calculated decisions. Any expansion or addition to a product line or other form of growth requires balancing the inherent risk with the potential outcome. Predictive analytics can provide insight to inform the decision-making process and offer a competitive advantage.

What Is Predictive Analytics? 5 Examples

- 26 Oct 2021

Data analytics (the practice of examining data to answer questions, identify trends, and extract insights) can provide you with the information necessary to strategize and make impactful business decisions.

There are four key types of data analytics:

- Descriptive, which answers the question, “What happened?”

- Diagnostic, which answers the question, “Why did this happen?”

- Prescriptive, which answers the question, “What should we do next?”

- Predictive, which answers the question, “What might happen in the future?”

The ability to predict future events and trends is crucial across industries. Predictive analytics appears more often than you might assume—from your weekly weather forecast to algorithm-enabled medical advancements. Here’s an overview of predictive analytics to get you started on the path to data-informed strategy formulation and decision-making.

What Is Predictive Analytics?

Predictive analytics is the use of data to predict future trends and events. It uses historical data to forecast potential scenarios that can help drive strategic decisions.

The predictions could be for the near future—for instance, predicting the malfunction of a piece of machinery later that day—or the more distant future, such as predicting your company’s cash flows for the upcoming year.

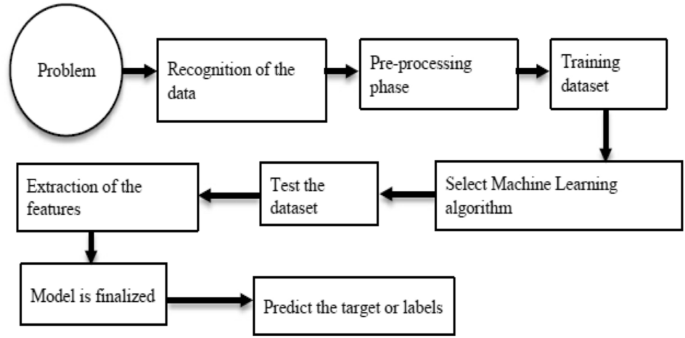

Predictive analysis can be conducted manually or using machine-learning algorithms. Either way, historical data is used to make assumptions about the future.

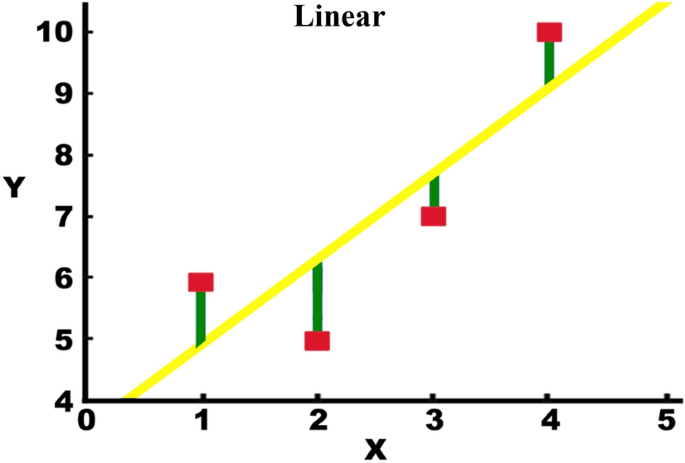

One predictive analytics tool is regression analysis, which can determine the relationship between two variables (single linear regression, often called simple linear regression) or three or more variables (multiple regression). The relationships between variables are written as a mathematical equation that can help predict the outcome should one variable change.

“Regression allows us to gain insights into the structure of that relationship and provides measures of how well the data fit that relationship,” says Harvard Business School Professor Jan Hammond, who teaches the online course Business Analytics, one of the three courses that make up the Credential of Readiness (CORe) program. “Such insights can prove extremely valuable for analyzing historical trends and developing forecasts.”
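
A minimal sketch of what this looks like in practice: the closed-form least-squares fit for one predictor, plus R-squared as one common measure of how well the data fit the line. The data points and function names are illustrative assumptions and are not taken from the course.

```python
def fit_line(xs, ys):
    """Return (slope, intercept) of the least-squares line through the points."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def r_squared(xs, ys, slope, intercept):
    """Share of the variance in ys explained by the line (1.0 is a perfect fit)."""
    mean_y = sum(ys) / len(ys)
    ss_res = sum((y - (slope * x + intercept)) ** 2 for x, y in zip(xs, ys))
    ss_tot = sum((y - mean_y) ** 2 for y in ys)
    return 1.0 - ss_res / ss_tot


# Hypothetical data: marketing emails sent (thousands) vs. sales (thousands of dollars)
emails = [1, 2, 3, 4, 5]
sales = [2, 4, 5, 4, 5]

slope, intercept = fit_line(emails, sales)
fit_quality = r_squared(emails, sales, slope, intercept)
predicted_sales = slope * 6 + intercept  # forecast for a value of x not yet observed
```

On this toy data the fitted equation is roughly y = 0.6x + 2.2 with an R-squared of about 0.6, meaning the line explains about 60 percent of the variation in sales.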

Forecasting can enable you to make better decisions and formulate data-informed strategies. Here are several examples of predictive analytics in action to inspire you to use it at your organization.

5 Examples of Predictive Analytics in Action

1. Finance: Forecasting Future Cash Flow

Every business needs to keep periodic financial records, and predictive analytics can play a big role in forecasting your organization’s future health. Using historical data from previous financial statements, as well as data from the broader industry, you can project sales, revenue, and expenses to craft a picture of the future and make decisions.

HBS Professor V.G. Narayanan mentions the importance of forecasting in the course Financial Accounting, which is also part of CORe.

“Managers need to be looking ahead in order to plan for the future health of their business,” Narayanan says. “No matter the field in which you work, there is always a great amount of uncertainty involved in this process.”

2. Entertainment & Hospitality: Determining Staffing Needs

One example explored in Business Analytics is casino and hotel operator Caesars Entertainment’s use of predictive analytics to determine venue staffing needs at specific times.

In entertainment and hospitality, customer influx and outflux depend on various factors, all of which play into how many staff members a venue or hotel needs at a given time. Overstaffing costs money, and understaffing could result in a bad customer experience, overworked employees, and costly mistakes.

To predict the number of hotel check-ins on a given day, a team developed a multiple regression model that considered several factors. This model enabled Caesars to staff its hotels and casinos and avoid overstaffing to the best of its ability.
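
The article does not say which factors the Caesars model used, so the sketch below is purely illustrative: it fits a multiple regression with two made-up predictors (advance bookings and a weekend flag) by solving the normal equations in plain Python. The data are synthetic and noise-free, generated from check-ins = 50 + 0.8 * bookings + 30 * weekend, so the fit can be verified by hand.

```python
def solve(a, b):
    """Solve the linear system a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def fit_multiple_regression(rows, targets):
    """Least-squares coefficients [intercept, b1, b2, ...] via the normal equations."""
    design = [[1.0] + list(r) for r in rows]  # prepend a column of ones
    k = len(design[0])
    xtx = [[sum(d[i] * d[j] for d in design) for j in range(k)] for i in range(k)]
    xty = [sum(d[i] * t for d, t in zip(design, targets)) for i in range(k)]
    return solve(xtx, xty)


def predict(coefs, features):
    """Apply the fitted equation to one row of predictor values."""
    return coefs[0] + sum(b * f for b, f in zip(coefs[1:], features))


# Hypothetical history: (advance bookings, is_weekend) and observed check-ins
history = [(100, 0), (150, 0), (200, 1), (120, 1), (180, 0), (90, 1)]
check_ins = [130, 170, 240, 176, 194, 152]

coefs = fit_multiple_regression(history, check_ins)
expected_check_ins = predict(coefs, (160, 1))  # a weekend day with 160 bookings
```

A staffing plan could then scale the number of front-desk staff to the predicted check-ins, which is the overstaffing and understaffing trade-off described above.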

3. Marketing: Behavioral Targeting

In marketing, consumer data is abundant and leveraged to create content, advertisements, and strategies to better reach potential customers where they are. By examining historical behavioral data and using it to predict what will happen in the future, you engage in predictive analytics.

Predictive analytics can be applied in marketing to forecast sales trends at various times of the year and plan campaigns accordingly.

Additionally, historical behavioral data can help you predict a lead’s likelihood of moving down the funnel from awareness to purchase. For instance, you could use a single linear regression model to determine that the number of content offerings a lead engages with predicts—with a statistically significant level of certainty—their likelihood of converting to a customer down the line. With this knowledge, you can plan targeted ads at various points in the customer’s lifecycle.

4. Manufacturing: Preventing Malfunction

While the examples above use predictive analytics to take action based on likely scenarios, you can also use predictive analytics to prevent unwanted or harmful situations from occurring. For instance, in the manufacturing field, algorithms can be trained using historical data to accurately predict when a piece of machinery will likely malfunction.

When the criteria for an upcoming malfunction are met, the algorithm is triggered to alert an employee who can stop the machine and potentially save the company thousands, if not millions, of dollars in damaged product and repair costs. This analysis predicts malfunction scenarios in the moment rather than months or years in advance.

Some algorithms even recommend fixes and optimizations to avoid future malfunctions and improve efficiency, saving time, money, and effort. This is an example of prescriptive analytics; more often than not, one or more types of analytics are used in tandem to solve a problem.

5. Health Care: Early Detection of Allergic Reactions

Another example of using algorithms for rapid, predictive analytics for prevention comes from the health care industry. The Wyss Institute at Harvard University partnered with the KeepSmilin4Abbie Foundation to develop a wearable piece of technology that predicts an anaphylactic allergic reaction and automatically administers life-saving epinephrine.

The sensor, called AbbieSense, detects early physiological signs of anaphylaxis as predictors of an ensuing reaction—and it does so far quicker than a human can. When a reaction is predicted to occur, an algorithmic response is triggered. The algorithm can predict the reaction’s severity, alert the individual and caregivers, and automatically inject epinephrine when necessary. The technology’s ability to predict the reaction at a faster speed than manual detection could save lives.

Using Data to Strategize for the Future

No matter your industry, predictive analytics can provide the insights needed to make your next move. Whether you’re driving financial decisions, formulating marketing strategies, changing your course of action, or working to save lives, building a foundation in analytical skills can serve you well.

For hands-on practice and a deeper understanding of how you can put analytics to work for your organization, consider taking Business Analytics, one of three online courses that make up HBS Online’s CORe program.

Ann Transl Med. v.8(4); 2020 Feb

Predictive analytics in the era of big data: opportunities and challenges

Big data have changed the way we generate, manage, analyze, and leverage data in every industry. Clinical medicine is no exception: large volumes of data are generated from electronic healthcare records, wearable devices, and insurance companies (1). This has greatly changed the way we perform clinical studies. Instead of data entry and curation being performed manually, information technology has significantly improved the efficiency of data management. With such a large volume of data, many clinical questions can be addressed by using big data analytics (1-3). Big data analytics typically involves three steps (Table 1). The first step is the formulation of the clinical question (4), which can be categorized into three types: (I) epidemiological questions on prevalence, incidence, and risk factors; (II) the effectiveness and/or safety of an intervention; and (III) predictive analytics. The second step is the design of a study, which transforms the clinical question into a study design. For example, the prevalence of catheter-related blood stream infection (CRBSI), as well as its risk factors, can be addressed with a retrospective or prospective cohort study. A case-control study design can be used to identify risk factors. Effectiveness can be addressed by a randomized controlled trial or an observational study. The third step involves statistical analysis and/or modelling using data collected under a given design.

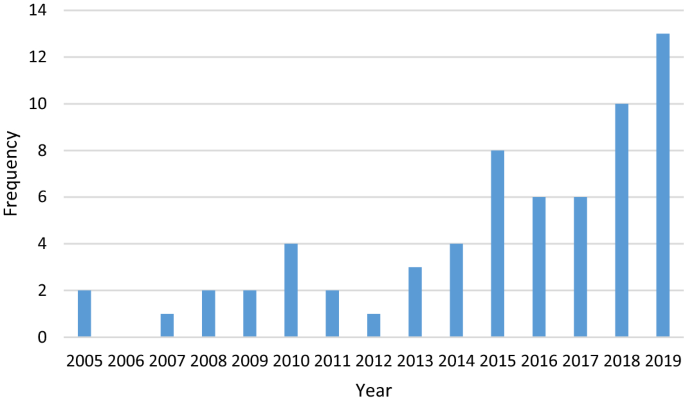

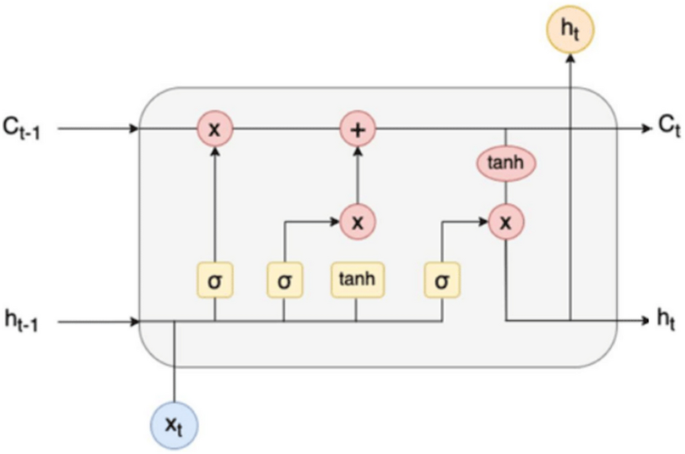

Among all these big data analytics, predictive analytics is becoming increasingly important in clinical medicine (5). The use of predictive analytics in clinical medicine includes, but is not limited to, risk stratification, differential diagnosis (classification), prognosis, prediction of disease occurrence, and prediction of the effectiveness of a given intervention (6-8). In other words, predictive analytics spans the whole disease course, from prevention and diagnosis through treatment to prognosis. For example, from the perspective of disease prevention, smoking is a strong risk factor for the development of lung cancer, so modifying this factor can help to reduce the risk of lung cancer. If a patient is diagnosed with lung cancer, risk stratification using genetic and clinical features in a predictive model can help to determine whether surgical intervention and/or chemotherapy should be used. Finally, accurate prediction of the long-term outcome is also important for communication with family members and medical decision making. Thus, the literature on clinical prediction has grown rapidly in recent years. Conventionally, predictors are entered into a generalized linear model to estimate a vector of coefficients, and the resulting model can be generalized to samples that were not used for training the model (9). However, the model training process is not straightforward, and no single approach fits all situations. For example, the generalized linear model is easy for a subject-matter audience to interpret, but it cannot automatically capture high-order relationships among covariates (10). In contrast, sophisticated neural networks and deep learning approaches are capable of approximating a very broad class of mathematical functions, but at the cost of interpretability: these models are considered black-box algorithms because domain experts cannot easily understand how the predictors/features influence the outcome/label (11, 12).

In a recent special report published in the Annals of Translational Medicine, Zhou and colleagues provided a comprehensive tutorial on how to perform predictive modeling (13). Its 16 sections cover variable selection (feature engineering), model calibration, utility, and nomograms for ease of clinical application. They also discussed some challenging conditions such as the presence of competing risks and the curse of dimensionality. Potential readers of this report include clinical investigators, physicians, and even statisticians. More importantly, the R code for each step of modeling is provided and well explained. For beginners with limited experience in R coding, this can be a good starting point.

However, I want to clarify that the authors have confused parametric and non-parametric modeling in the first chart. First, let’s look at the formal definition of parametric modeling from the textbook Artificial Intelligence: A Modern Approach (14). The textbook states:

“A learning model that summarizes data with a set of parameters of fixed size (independent of the number of training examples) is called a parametric model. No matter how much data you throw at a parametric model, it won’t change its mind about how many parameters it needs.”

From this definition, neural networks should clearly be classified as a parametric modeling approach, because a fixed set of weights is attached to the nodes of the network (15). In fact, a neural network with only one layer and no non-linear activation is simply a linear regression model, which is the prototypical parametric model. The purpose of training a neural network is to estimate the weights and bias for each node; the weighted sum is then passed to the nodes of the next layer, usually through a non-linear activation function that transforms the signal. Other machine learning methods, such as k-nearest neighbors and decision trees, can safely be classified as non-parametric models.
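
The distinction can be illustrated by counting what each kind of model has to store. In the sketch below, the parameter count of a fully connected network depends only on its layer widths, whereas a one-nearest-neighbor predictor keeps every training example. The function and class names, the layer sizes, and the toy data are hypothetical and serve only to illustrate the textbook definition quoted above.

```python
def mlp_param_count(layer_sizes):
    """Weights plus biases of a fully connected network with the given layer widths."""
    return sum(
        n_in * n_out + n_out  # one weight per connection, one bias per output node
        for n_in, n_out in zip(layer_sizes, layer_sizes[1:])
    )


class OneNearestNeighbor:
    """Non-parametric predictor: its 'model' is the training set itself."""

    def __init__(self):
        self.examples = []

    def fit(self, xs, ys):
        self.examples = list(zip(xs, ys))  # memorize every (x, y) pair
        return self

    def predict(self, x):
        nearest = min(self.examples, key=lambda ex: abs(ex[0] - x))
        return nearest[1]

    def stored_values(self):
        return 2 * len(self.examples)  # grows with the number of training examples


# A network with 3 inputs, 4 hidden nodes and 1 output has 21 parameters,
# whether it is trained on ten patients or ten million.
network_size = mlp_param_count([3, 4, 1])

small = OneNearestNeighbor().fit(range(10), range(10))
large = OneNearestNeighbor().fit(range(1000), range(1000))
```

Here `small.stored_values()` is 20 and `large.stored_values()` is 2000, while `network_size` stays at 21 regardless of how much data is used.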

Furthermore, in the prediction evaluation branch of the chart, the authors classified drawing nomograms and building prediction scores as part of model evaluation. I would argue that these two approaches involve no evaluation of the model at all. Risk scores and nomograms are simply ways of presenting a trained prediction model so that it can be used in clinical practice (16). They have nothing to do with the calibration or discrimination of the model. Nomograms and/or risk scores should be constructed after the final model has been confirmed using a variety of validation methods. Validation in the training set and validation in an external set cannot be considered conceptually parallel. External validation should be considered more robust at identifying overfitting than internal validation, no matter which internal procedure is used (when only a single dataset is available, there are many statistical methods for model validation, such as cross-validation, simple split, and leave-one-out) (17).
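
The internal validation procedures named above differ mainly in how they partition a single dataset. The sketch below generates the index splits for a simple split, k-fold cross-validation, and leave-one-out in plain Python; the function names and the default test fraction are assumptions made for illustration. In practice the indices would normally be shuffled first, and none of these procedures substitutes for validation on a genuinely external dataset.

```python
def simple_split_indices(n, test_fraction=0.25):
    """Hold out the last part of the data once: (train indices, test indices)."""
    n_test = max(1, round(n * test_fraction))
    cut = n - n_test
    return list(range(cut)), list(range(cut, n))


def k_fold_indices(n, k):
    """Yield (train, test) index lists so that every sample is tested exactly once."""
    # Spread the remainder over the first folds so sizes differ by at most one
    fold_sizes = [n // k + (1 if i < n % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        test = list(range(start, start + size))
        train = list(range(0, start)) + list(range(start + size, n))
        yield train, test
        start += size


def leave_one_out_indices(n):
    """Leave-one-out is k-fold cross-validation with k equal to the sample size."""
    return k_fold_indices(n, n)


folds = list(k_fold_indices(10, 3))
loo = list(leave_one_out_indices(5))
```

A model would be refit on each `train` list and scored on the matching `test` list, with the scores averaged across folds.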

In conclusion, the comprehensive tutorial is timely in the era of big data in that it provides practical tools for conducting predictive analytics. With more advanced information technology being applied to patients, a large volume of data can be collected with ease. Thus, interest in leveraging big data to advance healthcare is increasing. Predictive analytics is the cornerstone of precision medicine, which holds that patients with different clinical characteristics and genetic backgrounds should be treated differently. Although there are many challenges in leveraging big data to advance healthcare (18, 19), the opportunities are equally abundant.

Acknowledgments

Funding: Z Zhang received funding from Zhejiang Province Public Welfare Technology Application Research Project (CN) (LGF18H150005) and the National Natural Science Foundation of China (Grant No. 81901929).

Ethical Statement: The author is accountable for all aspects of the work in ensuring that questions related to the accuracy or integrity of any part of the work are appropriately investigated and resolved.

Provenance: This is an invited article commissioned by the Editorial Office, Annals of Translational Medicine.

Conflicts of Interest: The author has no conflicts of interest to declare.

Precision Health Analytics With Predictive Analytics and Implementation Research: JACC State-of-the-Art Review

Affiliations.

- 1 College of Medicine and College of Public Health and Health Professions, University of Florida Health Science Center, Gainesville, Florida. Electronic address: [email protected].

- 2 School of Medicine and Duke Clinical Research Institute, Duke University, Durham, North Carolina.

- 3 Center for Translation Research and Implementation Science, National Heart, Lung, and Blood Institute, National Institutes of Health, Bethesda, Maryland.

- 4 Office of Genomics and Precision Public Health, Centers for Disease Control and Prevention, Atlanta, Georgia.

- 5 Columbia University School of Social Work, New York, New York.

- 6 School of Public Health and Information Science, University of Louisville, Louisville, Kentucky.

- 7 Division of Lung Diseases, National Heart, Lung, and Blood Institute, National Institutes of Health, Bethesda, Maryland.

- 8 AllianceChicago, Chicago, Illinois.

- 9 Health Policy and Management, Milken Institute School of Public Health, George Washington University, Washington, DC.

- 10 Division of Cardiovascular Sciences, National Heart, Lung, and Blood Institute, National Institutes of Health, Bethesda, Maryland.

- 11 Predictive Analytics and Comparative Effectiveness (PACE) Center, Sackler School of Graduate Biomedical Sciences, Tufts University, Tufts Medical Center, Boston, Massachusetts.

- 12 Department of Medicine Health Services and Care Research Program, University of Wisconsin School of Medicine and Public Health, Madison, Wisconsin.

- 13 Geisinger Health System, Danville, Pennsylvania.

- 14 College of Medicine and College of Public Health and Health Professions, University of Florida Health Science Center, Gainesville, Florida.

- 15 Health Technology, Telemedicine and Advanced Technology Research Center, Frederick, Maryland.

- 16 School of Medicine, University of Texas Health Science Center at San Antonio and South Texas Veterans Health Care System, San Antonio, Texas.

- 17 Division of Blood Diseases and Resources, National Heart, Lung, and Blood Institute, National Institutes of Health, Bethesda, Maryland.

- 18 Division of Biostatistics & Epidemiology, Division of Pulmonary Medicine, Cincinnati Children's Hospital, Department of Pediatrics, University of Cincinnati, Cincinnati, Ohio.

- 19 Center for Translation Research and Implementation Science, National Heart, Lung, and Blood Institute, National Institutes of Health, Bethesda, Maryland. Electronic address: [email protected].

- PMID: 32674794

- DOI: 10.1016/j.jacc.2020.05.043

Emerging data science techniques of predictive analytics expand the quality and quantity of complex data relevant to human health and provide opportunities for understanding and control of conditions such as heart, lung, blood, and sleep disorders. To realize these opportunities, the information sources, the data science tools that use the information, and the application of resulting analytics to health and health care issues will require implementation research methods to define benefits, harms, reach, and sustainability; and to understand related resource utilization implications to inform policymakers. This JACC State-of-the-Art Review is based on a workshop convened by the National Heart, Lung, and Blood Institute to explore predictive analytics in the context of implementation science. It highlights precision medicine and precision public health as complementary and compelling applications of predictive analytics, and addresses future research and training endeavors that might further foster the application of predictive analytics in clinical medicine and public health.

Keywords: exposome; genome; implementation research; predictive analytics; social determinants.

Copyright © 2020 American College of Cardiology Foundation. All rights reserved.

Publication types

- Research Support, N.I.H., Extramural

- Cardiology*

- Delivery of Health Care / methods*

- Periodicals as Topic*

- Precision Medicine / methods*

- Public Health*

Research Topics & Ideas: Data Science

50 Topic Ideas To Kickstart Your Research Project

If you’re just starting out exploring data science-related topics for your dissertation, thesis or research project, you’ve come to the right place. In this post, we’ll help kickstart your research by providing a hearty list of data science and analytics-related research ideas, including examples from recent studies.

PS – This is just the start…

We know it’s exciting to run through a list of research topics, but please keep in mind that this list is just a starting point. The topic ideas provided here are intentionally broad and generic, so you will need to develop them further. Nevertheless, they should inspire some ideas for your project.

To develop a suitable research topic, you’ll need to identify a clear and convincing research gap, and a viable plan to fill that gap. If this sounds foreign to you, check out our free research topic webinar that explores how to find and refine a high-quality research topic, from scratch. Alternatively, consider our 1-on-1 coaching service.

Data Science-Related Research Topics

- Developing machine learning models for real-time fraud detection in online transactions.

- The use of big data analytics in predicting and managing urban traffic flow.

- Investigating the effectiveness of data mining techniques in identifying early signs of mental health issues from social media usage.

- The application of predictive analytics in personalizing cancer treatment plans.

- Analyzing consumer behavior through big data to enhance retail marketing strategies.

- The role of data science in optimizing renewable energy generation from wind farms.

- Developing natural language processing algorithms for real-time news aggregation and summarization.

- The application of big data in monitoring and predicting epidemic outbreaks.

- Investigating the use of machine learning in automating credit scoring for microfinance.

- The role of data analytics in improving patient care in telemedicine.

- Developing AI-driven models for predictive maintenance in the manufacturing industry.

- The use of big data analytics in enhancing cybersecurity threat intelligence.

- Investigating the impact of sentiment analysis on brand reputation management.

- The application of data science in optimizing logistics and supply chain operations.

- Developing deep learning techniques for image recognition in medical diagnostics.

- The role of big data in analyzing climate change impacts on agricultural productivity.

- Investigating the use of data analytics in optimizing energy consumption in smart buildings.

- The application of machine learning in detecting plagiarism in academic works.

- Analyzing social media data for trends in political opinion and electoral predictions.

- The role of big data in enhancing sports performance analytics.

- Developing data-driven strategies for effective water resource management.

- The use of big data in improving customer experience in the banking sector.

- Investigating the application of data science in fraud detection in insurance claims.

- The role of predictive analytics in financial market risk assessment.

- Developing AI models for early detection of network vulnerabilities.

Data Science Research Ideas (Continued)

- The application of big data in public transportation systems for route optimization.

- Investigating the impact of big data analytics on e-commerce recommendation systems.

- The use of data mining techniques in understanding consumer preferences in the entertainment industry.

- Developing predictive models for real estate pricing and market trends.

- The role of big data in tracking and managing environmental pollution.

- Investigating the use of data analytics in improving airline operational efficiency.

- The application of machine learning in optimizing pharmaceutical drug discovery.

- Analyzing online customer reviews to inform product development in the tech industry.

- The role of data science in crime prediction and prevention strategies.

- Developing models for analyzing financial time series data for investment strategies.

- The use of big data in assessing the impact of educational policies on student performance.

- Investigating the effectiveness of data visualization techniques in business reporting.

- The application of data analytics in human resource management and talent acquisition.

- Developing algorithms for anomaly detection in network traffic data.

- The role of machine learning in enhancing personalized online learning experiences.

- Investigating the use of big data in urban planning and smart city development.

- The application of predictive analytics in weather forecasting and disaster management.

- Analyzing consumer data to drive innovations in the automotive industry.

- The role of data science in optimizing content delivery networks for streaming services.

- Developing machine learning models for automated text classification in legal documents.

- The use of big data in tracking global supply chain disruptions.

- Investigating the application of data analytics in personalized nutrition and fitness.

- The role of big data in enhancing the accuracy of geological surveying for natural resource exploration.

- Developing predictive models for customer churn in the telecommunications industry.

- The application of data science in optimizing advertisement placement and reach.

Recent Data Science-Related Studies

While the ideas we’ve presented above are a decent starting point for finding a research topic, they are fairly generic and non-specific. So, it helps to look at actual studies in the data science and analytics space to see how this all comes together in practice.

Below, we’ve included a selection of recent studies to help refine your thinking. These are actual studies, so they can provide some useful insight as to what a research topic looks like in practice.

- Data Science in Healthcare: COVID-19 and Beyond (Hulsen, 2022)

- Auto-ML Web-application for Automated Machine Learning Algorithm Training and evaluation (Mukherjee & Rao, 2022)

- Survey on Statistics and ML in Data Science and Effect in Businesses (Reddy et al., 2022)

- Visualization in Data Science VDS @ KDD 2022 (Plant et al., 2022)

- An Essay on How Data Science Can Strengthen Business (Santos, 2023)

- A Deep study of Data science related problems, application and machine learning algorithms utilized in Data science (Ranjani et al., 2022)

- You Teach WHAT in Your Data Science Course?!? (Posner & Kerby-Helm, 2022)

- Statistical Analysis for the Traffic Police Activity: Nashville, Tennessee, USA (Tufail & Gul, 2022)

- Data Management and Visual Information Processing in Financial Organization using Machine Learning (Balamurugan et al., 2022)

- A Proposal of an Interactive Web Application Tool QuickViz: To Automate Exploratory Data Analysis (Pitroda, 2022)

- Applications of Data Science in Respective Engineering Domains (Rasool & Chaudhary, 2022)

- Jupyter Notebooks for Introducing Data Science to Novice Users (Fruchart et al., 2022)

- Towards a Systematic Review of Data Science Programs: Themes, Courses, and Ethics (Nellore & Zimmer, 2022)

- Application of data science and bioinformatics in healthcare technologies (Veeranki & Varshney, 2022)

- TAPS Responsibility Matrix: A tool for responsible data science by design (Urovi et al., 2023)

- Data Detectives: A Data Science Program for Middle Grade Learners (Thompson & Irgens, 2022)

- Machine Learning for Non-Majors: A White Box Approach (Mike & Hazzan, 2022)

- Components of Data Science and Its Applications (Paul et al., 2022)

- Analysis on the Application of Data Science in Business Analytics (Wang, 2022)

As you can see, these research topics are a lot more focused than the generic topic ideas we presented earlier. To develop a high-quality research topic, you’ll need to focus on a specific context with specific variables of interest.


predictive analytics Recently Published Documents


Predictive Analytics of Energy Usage by IoT-Based Smart Home Appliances for Green Urban Development

Green IoT primarily focuses on increasing IoT sustainability by reducing the large amount of energy required by IoT devices. Whether increasing the efficiency of these devices or conserving energy, predictive analytics is the cornerstone for creating value and insight from large IoT data. This work aims at providing predictive models driven by data collected from various sensors to model the energy usage of appliances in an IoT-based smart home environment. Specifically, we address the prediction problem from two perspectives. Firstly, an overall energy consumption model is developed using both linear and non-linear regression techniques to identify the most relevant features in predicting the energy consumption of appliances. The performances of the proposed models are assessed using a publicly available dataset comprising historical measurements from various humidity and temperature sensors, along with total energy consumption data from appliances in an IoT-based smart home setup. A comparison of the prediction results shows that LSTM regression outperforms the linear and ensemble regression models, achieving a high coefficient of determination (R²) on the training (96.2%) and test (96.1%) data for the selected features. Secondly, we develop a multi-step time-series model using the autoregressive integrated moving average (ARIMA) technique to effectively forecast future energy consumption based on past energy usage history. Overall, the proposed predictive models will enable consumers to minimize the energy usage of home appliances and the energy providers to better plan and forecast future energy demand to facilitate green urban development.
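The R² figures quoted in this abstract measure the share of variance in the observed values that a model's predictions account for. As a rough illustration only, the sketch below computes R² in plain Python. The readings and predictions are made-up numbers, not the paper's dataset, and `r_squared` is a hypothetical helper name.

```python
def r_squared(actual, predicted):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean_actual = sum(actual) / len(actual)
    # Residual sum of squares: error left over after the model's predictions.
    ss_res = sum((a - p) ** 2 for a, p in zip(actual, predicted))
    # Total sum of squares: spread of the observations around their mean.
    ss_tot = sum((a - mean_actual) ** 2 for a in actual)
    return 1.0 - ss_res / ss_tot


# Hypothetical appliance energy readings (Wh) and model predictions.
actual = [50.0, 60.0, 80.0, 100.0, 110.0]
predicted = [52.0, 58.0, 83.0, 97.0, 112.0]

score = r_squared(actual, predicted)
print(f"R^2 = {score:.3f}")
```

A value close to 1 means the predictions track the observations closely. Comparing the training and test values, as the abstract does, is one way to check that a model has not simply memorized its training data.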

Influence of AI and Machine Learning in Insurance Sector

The aim of this research is to identify the influence, usage, and benefits of AI (artificial intelligence) and ML (machine learning) using big data analytics in the insurance sector. The insurance sector is the most volatile industry because of multiple influences such as Brexit, the COVID-19 pandemic, climate change, and volcanic disruptions. The paper explores the potential scope and use cases for AI, ML, and big data processing in the insurance sector for automated claim processing, fraud prevention, predictive analytics, and trend analysis of possible causes of business losses or benefits. An empirical quantitative research method is used to verify the model with a sample from the UK insurance sector. The research concludes with practical insights for insurance companies using AI, ML, big data processing, and cloud computing to improve client satisfaction, predictive analysis, and trend analysis.

Can HRM predict mental health crises? Using HR analytics to unpack the link between employment and suicidal thoughts and behaviors

Purpose: The aim of this research is to determine the extent to which the human resource (HR) function can screen and potentially predict suicidal employees and offer preventative mental health assistance.

Design/methodology/approach: Drawing from the 2019 National Survey of Drug Use and Health (N = 56,136), this paper employs multivariate binary logistic regression to model the work-related predictors of suicidal ideation, planning and attempts.

Findings: The results indicate that known periods of joblessness, the total number of sick days and absenteeism over the last 12 months are significantly associated with various suicidal outcomes while controlling for key psychosocial correlates. The results also indicate that employee assistance programs are associated with a significantly reduced likelihood of suicidal ideation. These findings are consistent with conservation of resources theory.

Research limitations/implications: This research demonstrates preliminarily that the HR function can unobtrusively detect employee mental health crises by collecting data on key predictors.

Originality/value: In the era of COVID-19, employers have a duty of care to safeguard employee mental health. To this end, the authors offer an innovative way through which the HR function can employ predictive analytics to address mental health crises before they result in tragedy.

An AI-Enabled Predictive Analytics Dashboard for Acute Neurosurgical Referrals

Healthcare dashboards make key information about service and clinical outcomes available to staff in an easy-to-understand format. Most dashboards are limited to providing insights based on group-level inference, rather than individual prediction. Here, we evaluate a dashboard which could analyze and forecast acute neurosurgical referrals based on 10,033 referrals made to a large volume tertiary neurosciences center in central London, U.K., from the start of the Covid-19 pandemic lockdown period until October 2021. As anticipated, referral volumes significantly increased in this period, largely due to an increase in spinal referrals. Applying a range of validated time-series forecasting methods, we found that referrals were projected to increase beyond this time-point. Using a mixed-methods approach, we determined that the dashboard was usable, feasible, and acceptable to key stakeholders. Dashboards provide an effective way of visualizing acute surgical referral data and for predicting future volume without the need for data-science expertise.

Price Bubbles in the Real Estate Markets - Analysis and Prediction

The article concerns the issue of price bubbles on the markets, with particular emphasis on the specificity of the real estate market. More than a decade after the subprime crisis, there is still no sufficiently accurate method to predict price movements, their culmination and, eventually, the bursting of price and speculative bubbles on the markets. Hence, the main goal of the article is to present the possibility of early detection of price bubbles and their consequences from the point of view of the surveyed managers. The following research hypothesis was verified: price bubbles on the real estate market cannot be excluded, therefore constant monitoring and predictive analytics of this market are needed. In addition to standard research methods (desk research or statistical analysis), the authors conducted their own survey of a group of randomly selected managers from Portugal and Poland on their attitudes to crises and price bubbles. The results show that managers in the two countries differ in how they relate the effects of price bubbles to the activities of their own companies but are similar (about 40% of respondents) in expecting quick detection and deactivation of emerging bubbles by the government or the central bank. Nearly 40% of Polish and Portuguese managers claimed that the consequences of crises must include an increased responsibility of managers for their decisions, especially those leading to failures.

Covid-19 Impact and Implications on Traffic: Smart Predictive Analytics for Mobility Navigation

Empirical Study on Classifiers for Earlier Prediction of COVID-19 Infection Cure and Death Rate in the Indian States

Machine Learning methods can play a key role in predicting the spread of respiratory infection with the help of predictive analytics. Machine Learning techniques help mine data to better estimate and predict the COVID-19 infection status. A Fine-tuned Ensemble Classification approach for predicting the death and cure rates of patients from infection using Machine Learning techniques has been proposed for different states of India. The proposed classification model is applied to the recent COVID-19 dataset for India, and a performance evaluation of various state-of-the-art classifiers to the proposed model is performed. The classifiers forecasted the patients’ infection status in different regions to better plan resources and response care systems. The appropriate classification of the output class based on the extracted input features is essential to achieve accurate results of classifiers. The experimental outcome exhibits that the proposed Hybrid Model reached a maximum F1-score of 94% compared to Ensembles and other classifiers like Support Vector Machine, Decision Trees, and Gaussian Naïve Bayes on a dataset of 5004 instances through 10-fold cross-validation for predicting the right class. The feasibility of automated prediction for COVID-19 infection cure and death rates in the Indian states was demonstrated.
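The F1-score reported in this abstract is the harmonic mean of precision and recall. The following is a minimal sketch of that calculation in plain Python. The labels are invented for illustration and are unrelated to the study's 5004-instance dataset, and `f1_score` here is a hypothetical helper rather than any library's function.

```python
def f1_score(actual, predicted, positive=1):
    """Harmonic mean of precision and recall for one class."""
    pairs = list(zip(actual, predicted))
    tp = sum(1 for a, p in pairs if a == positive and p == positive)
    fp = sum(1 for a, p in pairs if a != positive and p == positive)
    fn = sum(1 for a, p in pairs if a == positive and p != positive)
    if tp == 0:
        return 0.0  # no correct positive predictions at all
    precision = tp / (tp + fp)  # share of predicted positives that are right
    recall = tp / (tp + fn)     # share of real positives that were found
    return 2 * precision * recall / (precision + recall)


# Hypothetical outcomes: 1 = recovered, 0 = not recovered.
actual = [1, 1, 1, 1, 0, 0, 0, 0]
predicted = [1, 1, 1, 0, 1, 0, 0, 0]

f1 = f1_score(actual, predicted)
print(f"F1 = {f1:.2f}")
```

In a 10-fold cross-validation such as the one described, a score like this would be computed on each held-out fold and then averaged.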

People Analytics: Augmenting Horizon from Predictive Analytics to Prescriptive Analytics

Analytics techniques: descriptive analytics, predictive analytics, and prescriptive analytics.

Unlocking Drivers for Employee Engagement Through Human Resource Analytics

The authors discuss in detail the meaning of employee engagement and its relevance for organizations in the present scenario. They also highlight the various factors that predict employee engagement across organizations. They emphasize the role that HR analytics can play in identifying the reasons for low engagement among employees and in suggesting ways to improve it using predictive analytics. They also set out the benefits that organizations can reap by using HR analytics to measure and improve the engagement levels of a diverse workforce. Finally, they propose future perspectives for the study that could help organizations and top management tap the benefits of analytics in human resource management and address upcoming issues related to employee behavior.


Applying Predictive Analytics on Research Information to Enhance Funding Discovery and Strengthen Collaboration in Project Proposals

- Conference paper

- First Online: 11 May 2021

- Authors: Dang Vu Nguyen Hai (ORCID: orcid.org/0000-0002-5496-3633) and Martin Gaedke (ORCID: orcid.org/0000-0002-6729-2912)

Part of the book series: Lecture Notes in Computer Science (LNISA, volume 12706)

Included in the following conference series:

- International Conference on Web Engineering

In academic and industrial research, writing a project proposal is one of the essential but time-consuming activities. Nevertheless, most proposals end in rejection. Moreover, research funding is getting more competitive these days. Funding agencies are increasingly looking for more extensive and more interdisciplinary research proposals. To increase the funding success rate, this PhD project focuses on three open challenges: poor data quality, inefficient funding discovery, and ineffective collaborative team building. We envision a Predictive Analytics-based approach that involves analyzing research information and using statistical and machine learning models that can assure data quality, increase funding discovery efficiency and the effectiveness of collaboration building. Accordingly, the goal of this PhD project is to support decision-making process to maximize the funding success rates of universities.



Acknowledgements

This PhD project is supported by the project IB20 Fis Heavy/TU Chemnitz/259038, funded by the Saxon State Ministry for Science and Art. In addition, we would like to thank André Langer, Maik Benndorf and Sebastian Heil for their support during the writing of this symposium paper.

Author information

Authors and affiliations.

Chemnitz University of Technology, Chemnitz, Germany

Dang Vu Nguyen Hai & Martin Gaedke


Corresponding author

Correspondence to Dang Vu Nguyen Hai .

Editor information

Editors and affiliations.

Dipartimento di Elettronica, Politecnico di Milano, Milan, Italy

Marco Brambilla

E2S UPPA, LIUPPA, Université de Pau et des Pays de l’Adour, Anglet, France

Richard Chbeir

Econometric Institute, Erasmus University Rotterdam, Rotterdam, The Netherlands

Flavius Frasincar

Inria Saclay-Île-de-France, Institut Polytechnique de Paris, Palaiseau, France

Ioana Manolescu


Copyright information

© 2021 Springer Nature Switzerland AG

About this paper

Cite this paper.

Vu Nguyen Hai, D., Gaedke, M. (2021). Applying Predictive Analytics on Research Information to Enhance Funding Discovery and Strengthen Collaboration in Project Proposals. In: Brambilla, M., Chbeir, R., Frasincar, F., Manolescu, I. (eds) Web Engineering. ICWE 2021. Lecture Notes in Computer Science(), vol 12706. Springer, Cham. https://doi.org/10.1007/978-3-030-74296-6_37


DOI : https://doi.org/10.1007/978-3-030-74296-6_37

Published : 11 May 2021

Publisher Name : Springer, Cham

Print ISBN : 978-3-030-74295-9

Online ISBN : 978-3-030-74296-6

eBook Packages : Computer Science (R0)



Predictive Analytics: Definition, Model Types, and Uses


Erika Rasure is globally-recognized as a leading consumer economics subject matter expert, researcher, and educator. She is a financial therapist and transformational coach, with a special interest in helping women learn how to invest.


Predictive analytics is the use of statistics and modeling techniques to forecast future outcomes. Current and historical data patterns are examined and plotted to determine the likelihood that those patterns will repeat.

Businesses use predictive analytics to fine-tune their operations and decide whether new products are worth the investment. Investors use predictive analytics to decide where to put their money. Internet retailers use predictive analytics to fine-tune purchase recommendations to their users and increase sales.

Key Takeaways

- Industries from insurance to marketing use predictive techniques to make important decisions.

- Predictive models help make weather forecasts, develop video games, translate voice-to-text messages, make customer service decisions, and develop investment portfolios.

- Predictive analytics determines a likely outcome based on an examination of current and historical data.

- Decision trees, regression, and neural networks all are types of predictive models.

- People often confuse predictive analytics with machine learning even though the two are different disciplines.

Understanding Predictive Analytics

Predictive analytics looks for past patterns to measure the likelihood that those patterns will reoccur. It draws on a series of techniques to make these determinations, including artificial intelligence (AI), data mining, machine learning, modeling, and statistics. For instance, data mining involves the analysis of large sets of data to detect patterns in them. Text analysis does the same using large blocks of text.

Predictive models are used for many applications, including weather forecasts, creating video games, translating voice to text, customer service, and investment portfolio strategies. All of these applications use descriptive statistical models of existing data to make predictions about future data.

Predictive analytics helps businesses manage inventory, develop marketing strategies, and forecast sales. It also helps businesses survive, especially in highly competitive industries such as health care and retail. Investors and financial professionals draw on this technology to help craft investment portfolios and reduce their overall risk potential.

Descriptive models determine relationships, patterns, and structures in data that are used to draw conclusions as to how changes in the underlying processes that generate the data will change the results. Predictive models build on these descriptive models and look at past data to determine the likelihood of certain future outcomes, given current conditions or a set of expected future conditions.

Uses of Predictive Analytics

Predictive analytics is a decision-making tool in many industries. Following are some examples.

Manufacturing

Forecasting is essential in manufacturing to optimize the use of resources in a supply chain. Critical spokes of the supply chain wheel, whether it is inventory management or the shop floor, require accurate forecasts for functioning.

Predictive modeling is often used to clean and optimize the quality of data used for such forecasts. Modeling ensures that more data can be ingested by the system, including from customer-facing operations, to ensure a more accurate forecast.

Credit

Credit scoring makes extensive use of predictive analytics. When a consumer or business applies for credit, data on the applicant's credit history and the credit record of borrowers with similar characteristics are used to predict the risk that the applicant might fail to repay any new credit that is approved.

Underwriting

Data and predictive analytics play an important role in underwriting. Insurance companies examine applications for new policies to determine the likelihood of having to pay out for a future claim. The analysis is based on the current risk pool of similar policyholders as well as past events that have resulted in payouts.

Predictive models that consider characteristics in comparison to data about past policyholders and claims are routinely used by actuaries.

Marketing

Marketing professionals planning a new campaign look at how consumers have reacted to the overall economy. They can use these shifts in demographics to determine if the current mix of products will entice consumers to make a purchase.

Stock Traders

Active traders look at a variety of historical metrics when deciding whether to buy a particular stock or other asset.

Moving averages, bands, and breakpoints all are based on historical data and are used to forecast future price movements.
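To make the idea concrete, here is a small sketch of a simple moving average and a pair of bands drawn around it. The prices are invented, the function names are hypothetical, and the band rule (average plus or minus two standard deviations, in the style of Bollinger Bands) is an assumption, since the text does not say which kind of band is meant.

```python
from statistics import mean, pstdev


def moving_average(prices, window):
    """Average of the most recent `window` prices at each point in time."""
    return [
        mean(prices[i - window + 1 : i + 1])
        for i in range(window - 1, len(prices))
    ]


def bands(prices, window, width=2.0):
    """(lower, middle, upper) bands: moving average +/- width * std dev."""
    result = []
    for i in range(window - 1, len(prices)):
        chunk = prices[i - window + 1 : i + 1]
        middle = mean(chunk)
        spread = width * pstdev(chunk)
        result.append((middle - spread, middle, middle + spread))
    return result


# Hypothetical daily closing prices.
prices = [10.0, 11.0, 12.0, 13.0, 14.0, 13.0, 12.0]

sma = moving_average(prices, window=3)
for lower, middle, upper in bands(prices, window=3):
    print(f"{lower:6.2f} {middle:6.2f} {upper:6.2f}")
```

A trader might read a price that moves outside the bands as unusually high or low relative to recent history, though that interpretation is a convention rather than a guarantee.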

Fraud Detection

Financial services use predictive analytics to examine transactions for irregular trends and patterns. The irregularities pinpointed can then be examined as potential signs of fraudulent activity.

This may be done by analyzing activity between bank accounts or analyzing when certain transactions occur.
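One simple way to surface such irregularities is to flag amounts that sit far from an account's typical spending. The sketch below uses a median-based score (the modified z-score) on made-up transaction amounts. The 3.5 cutoff is a commonly used rule of thumb, and the function name and data are hypothetical. Real fraud systems combine many more signals.

```python
from statistics import median


def flag_outliers(amounts, cutoff=3.5):
    """Return indexes of amounts whose modified z-score exceeds the cutoff."""
    center = median(amounts)
    # Median absolute deviation: a spread measure that outliers barely move.
    mad = median(abs(a - center) for a in amounts)
    if mad == 0:
        return []  # all amounts are (nearly) identical; nothing to compare
    return [
        i
        for i, a in enumerate(amounts)
        if 0.6745 * abs(a - center) / mad > cutoff
    ]


# Hypothetical card transactions for one account, in dollars.
amounts = [20.0, 22.0, 19.0, 21.0, 23.0, 20.0, 500.0]

suspicious = flag_outliers(amounts)
print(suspicious)
```

The median is used instead of the mean because a single very large transaction would otherwise inflate the average and the standard deviation, which can hide the very outlier being looked for.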

Supply Chain

Supply chain analytics is used to manage inventory levels and set pricing strategies. Supply chain predictive analytics use historical data and statistical models to forecast future supply chain performance, demand, and potential disruptions.

This helps businesses proactively identify and address risks, optimize resources and processes, and improve decision-making. Companies can forecast what materials should be on hand at any given moment and whether there will be any shortages.
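As a rough illustration of forecasting demand from history, the sketch below applies simple exponential smoothing, one of many possible techniques and not one the text specifically names. The demand figures, the smoothing factor `alpha`, and the function name are all assumptions made for the example.

```python
def exponential_smoothing(demand, alpha):
    """One-step-ahead forecast that weights recent periods more heavily."""
    forecast = demand[0]  # start from the first observation
    for observed in demand[1:]:
        # Blend the newest observation with the running forecast.
        forecast = alpha * observed + (1 - alpha) * forecast
    return forecast


# Hypothetical units of a material used in each of the last four weeks.
weekly_demand = [100.0, 120.0, 110.0, 130.0]

next_week = exponential_smoothing(weekly_demand, alpha=0.5)
print(f"Forecast for next week: {next_week:.1f} units")
```

A higher `alpha` reacts faster to recent changes in demand, while a lower one smooths out week-to-week noise.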

Human Resources

Human resources uses predictive analytics to improve various processes such as identifying future workforce skill requirements or identifying factors that contribute to high staff turnover.

Predictive analytics can also analyze an employee's performance, skills, and preferences to predict their career progression and help with career development.

Predictive Analytics vs. Machine Learning

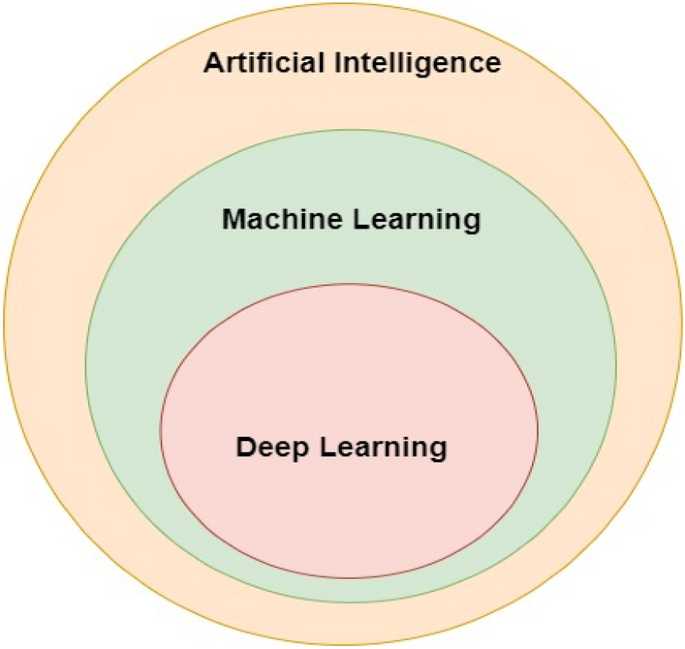

A common misconception is that predictive analytics and machine learning are the same. Predictive analytics helps us understand possible future occurrences by analyzing the past. At its core, predictive analytics includes a series of statistical techniques (including machine learning, predictive modeling, and data mining) and uses statistics (both historical and current) to estimate, or predict, future outcomes.

Thus, machine learning is a tool used in predictive analysis.

Machine learning is a subfield of computer science. Arthur Samuel, a pioneer in computer gaming and artificial intelligence, defined it in 1959 as "the programming of a digital computer to behave in a way which, if done by human beings or animals, would be described as involving the process of learning."

The most common predictive models include decision trees, regressions (linear and logistic), and neural networks, which underpin the emerging field of deep learning methods and technologies.

Types of Predictive Analytical Models

There are three common techniques used in predictive analytics: decision trees, neural networks, and regression.

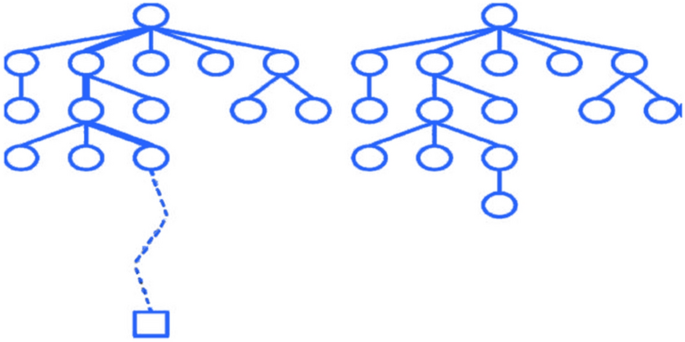

Decision Trees

If you want to understand what leads to someone's decisions, you may find it useful to build a decision tree.

This type of model places data into different sections based on certain variables, such as price or market capitalization. Just as the name implies, it looks like a tree with individual branches and leaves. Branches indicate the choices available while individual leaves represent a particular decision.

Decision trees are easy to understand and dissect. They're useful when you need to make a decision quickly.
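
To make the branch-and-leaf idea concrete, here is a minimal sketch of a one-level decision tree (sometimes called a decision stump) that splits on a single variable, price. The data, the function names, and the use of Gini impurity as the splitting criterion are assumptions for illustration; a full decision tree repeats this split recursively on each branch.

```python
def gini(labels):
    """Gini impurity of a list of 0/1 labels."""
    if not labels:
        return 0.0
    p = sum(labels) / len(labels)
    return 2 * p * (1 - p)

def best_split(values, labels):
    """Find the threshold on one variable that minimizes weighted Gini impurity."""
    best = (None, float("inf"))
    candidates = sorted(set(values))
    for lo, hi in zip(candidates, candidates[1:]):
        threshold = (lo + hi) / 2  # midpoint between neighboring values
        left = [y for x, y in zip(values, labels) if x <= threshold]
        right = [y for x, y in zip(values, labels) if x > threshold]
        score = (len(left) * gini(left) + len(right) * gini(right)) / len(labels)
        if score < best[1]:
            best = (threshold, score)
    return best

def majority(labels):
    """Most common 0/1 label (ties go to 1)."""
    return 1 if sum(labels) * 2 >= len(labels) else 0

# Hypothetical data: product price and whether the customer bought (1) or not (0).
prices = [5, 8, 12, 15, 22, 30, 35, 40]
bought = [1, 1, 1, 1, 0, 0, 0, 0]

threshold, impurity = best_split(prices, bought)
left_leaf = majority([y for x, y in zip(prices, bought) if x <= threshold])
right_leaf = majority([y for x, y in zip(prices, bought) if x > threshold])

def predict(price):
    """Follow the branch for this price and return the leaf's decision."""
    return left_leaf if price <= threshold else right_leaf

print(threshold, predict(10), predict(25))  # 18.5 1 0
```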

Regression

Regression is the model that is used the most in statistical analysis. Use it when you want to decipher patterns in large sets of data and when there's a linear relationship between the inputs.

This method works by figuring out a formula, which represents the relationship between all the inputs found in the dataset.

For example, you can use regression to figure out how price and other key factors can shape the performance of a stock.
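
The "formula" in the simplest case is a straight line fitted by ordinary least squares. The sketch below fits one input to one output; the function name `fit_line` and the data points are hypothetical, and a model with several inputs would use multiple regression instead.

```python
def fit_line(xs, ys):
    """Ordinary least squares for y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept

# Hypothetical observations of one input factor and the outcome it influences.
factor = [1, 2, 3, 4, 5]
outcome = [2.9, 5.1, 7.0, 9.2, 10.8]

slope, intercept = fit_line(factor, outcome)
prediction = slope * 6 + intercept  # estimate the outcome for a new input
print(round(slope, 2), round(intercept, 2), round(prediction, 2))
```

The fitted slope describes how much the outcome is expected to change for each unit change in the input, which is what makes regression easy to interpret.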

Neural Networks

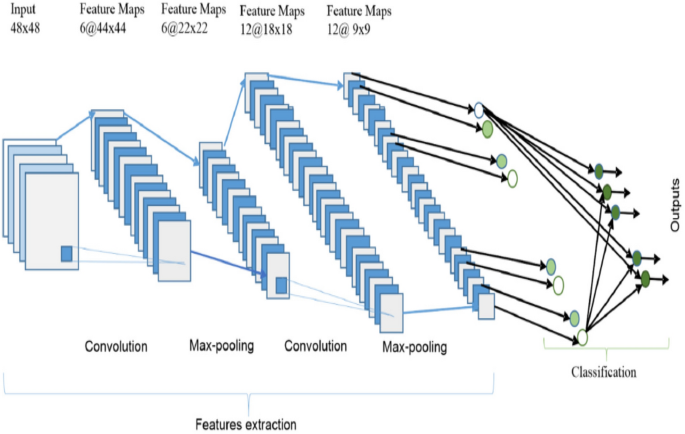

Neural networks were developed as a form of predictive analytics by imitating the way the human brain works. This model can deal with complex data relationships using artificial intelligence and pattern recognition.

Use this method if you have any of several hurdles that you need to overcome. For example, you may have too much data on hand, or don't have the formula you need to find a relationship between the inputs and outputs in your dataset, or need to make predictions rather than come up with explanations.

If you've already used decision trees and regression as models, you can confirm your findings with neural networks.
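
As a small illustration of a relationship that no single straight-line formula captures, the sketch below runs inputs through a tiny two-layer network that outputs 1 only when exactly one of its two inputs is 1. The weights here are hand-picked assumptions chosen for clarity; in practice a neural network learns its weights from data during training.

```python
def step(z):
    """Threshold activation: the neuron fires (1) when its input is positive."""
    return 1 if z > 0 else 0

def tiny_network(x1, x2):
    """Two hidden neurons feeding one output neuron, with hand-picked weights."""
    h_or = step(x1 + x2 - 0.5)    # fires if at least one input is 1
    h_and = step(x1 + x2 - 1.5)   # fires only if both inputs are 1
    return step(h_or - h_and - 0.5)  # fires if "or" but not "and"

outputs = {(a, b): tiny_network(a, b) for a in (0, 1) for b in (0, 1)}
print(outputs)  # {(0, 0): 0, (0, 1): 1, (1, 0): 1, (1, 1): 0}
```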

Cluster Models

Clustering is a method of aggregating data that share similar attributes. For example, Amazon.com can cluster sales based on the quantity purchased, or on the average account age of its consumers.

By separating data into similar groups based on shared features, analysts may be able to identify other characteristics that define future activity.
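
A minimal sketch of clustering on a single attribute, using the k-means procedure: assign each value to its nearest center, then move each center to the mean of its group. The function name, starting centers, and purchase quantities are assumptions for this example, and k-means is only one of several clustering techniques.

```python
def kmeans_1d(values, centers, iterations=10):
    """Group numbers around `centers` by repeated assign-then-average steps."""
    centers = list(centers)
    groups = [[] for _ in centers]
    for _ in range(iterations):
        groups = [[] for _ in centers]
        for v in values:
            # Assign each value to the closest current center.
            nearest = min(range(len(centers)), key=lambda i: abs(v - centers[i]))
            groups[nearest].append(v)
        # Move each center to the mean of its group (keep it if the group is empty).
        centers = [sum(g) / len(g) if g else c for g, c in zip(groups, centers)]
    return centers, groups

# Hypothetical quantities purchased per order.
quantities = [1, 2, 2, 3, 20, 22, 25, 24]
centers, groups = kmeans_1d(quantities, centers=[0, 10])
print(centers)  # [2.0, 22.75]
print(groups)   # [[1, 2, 2, 3], [20, 22, 25, 24]]
```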

Time Series Modeling

In some cases, data relates to time, and specific predictive analytics rely on the relationship between events and when they occur. These types of models assess inputs at specific frequencies such as daily, weekly, or monthly iterations.

Then, analytical models can seek seasonality, trends, or behavioral patterns based on timing.

This type of predictive model is useful for predicting when customer service demand will peak or when specific sales can be expected to jump.
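
One simple way to look for seasonality is to average a series at each position within its repeating cycle, for example by day of the week. The sketch below assumes hypothetical daily support-ticket counts and a seven-day cycle; the function name `seasonal_profile` is likewise an assumption, and fuller time series models also separate out trend and noise.

```python
from collections import defaultdict

def seasonal_profile(observations, period):
    """Average the series at each position within a repeating cycle.

    Assumes at least one full cycle of observations.
    """
    buckets = defaultdict(list)
    for t, value in enumerate(observations):
        buckets[t % period].append(value)
    return [sum(buckets[p]) / len(buckets[p]) for p in range(period)]

# Hypothetical daily support-ticket counts for two weeks, Monday first.
tickets = [90, 70, 65, 60, 80, 30, 25,
           94, 74, 63, 62, 84, 34, 27]

profile = seasonal_profile(tickets, period=7)
peak_day = max(range(7), key=lambda d: profile[d])  # 0 = Monday
print(profile)
print(peak_day)  # 0
```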

How Businesses Can Use Predictive Analytics

As noted above, predictive analysis can be used in a number of different applications. Businesses can capitalize on models to help advance their interests and improve their operations. Predictive models are frequently used by businesses to help improve customer service and outreach.

Executives and business owners can take advantage of this kind of statistical analysis to determine customer behavior. For instance, the owner of a business can use predictive techniques to identify and target regular customers who might otherwise defect to a competitor.

Predictive analytics plays a key role in advertising and marketing. Companies can use models to determine which customers are likely to respond positively to marketing and sales campaigns. Business owners can save money by targeting customers who will respond positively rather than doing blanket campaigns.

Benefits of Predictive Analytics

As mentioned above, predictive analytics can help anticipate outcomes when there are no obvious answers available.

Investors, financial professionals, and business leaders use models to help reduce risk. For instance, an investor or an advisor can use models to help craft an investment portfolio with an appropriate level of risk, considering factors such as age, family responsibilities, and goals.

Businesses use them to keep their costs down. They can determine the likelihood of success or failure of a product before it is developed. Or they can set aside capital for production improvements before the manufacturing process begins.

Criticism of Predictive Analytics

The use of predictive analytics has been criticized and, in some cases, legally restricted due to perceived inequities in its outcomes. Most commonly, this involves predictive models that result in statistical discrimination against racial or ethnic groups in areas such as credit scoring, home lending, employment, or risk of criminal behavior.

A famous example of this is the now illegal practice of redlining in home lending by banks. Regardless of the accuracy of the predictions, their use is discouraged as they perpetuate discriminatory lending practices and contribute to the decline of redlined neighborhoods.

How Does Netflix Use Predictive Analytics?

Data collection is important to a company like Netflix. It collects data from its customers based on their behavior and past viewing patterns. It uses that information to make recommendations based on their preferences.

This is the basis of the "Because you watched..." lists you'll find on the site. Other sites, notably Amazon, use their data for "Others who bought this also bought..." lists.

What Are the 3 Pillars of Data Analytics?

The three pillars of data analytics are the needs of the entity that is using the model, the data and technology used to study it, and the actions and insights that result from the analysis.

What Is Predictive Analytics Good For?

Predictive analytics is good for forecasting, risk management, customer behavior analytics, fraud detection, and operational optimization. Predictive analytics can help organizations improve decision-making, optimize processes, and increase efficiency and profitability. This branch of analytics is used to leverage data to forecast what may happen in the future.

What Is the Best Model for Predictive Analytics?

The best model for predictive analytics depends on several factors, such as the type of data, the objective of the analysis, the complexity of the problem, and the desired accuracy of the results. Candidate models include linear regression, neural networks, clustering, and decision trees.

The goal of predictive analytics is to make predictions about future events, then use those predictions to improve decision-making. Predictive analytics is used in a variety of industries including finance, healthcare, marketing, and retail. Different methods are used in predictive analytics such as regression analysis, decision trees, or neural networks.

Predictive Analytics Today. "What Is Predictive Analysis?"

IBM. "Predictive analytics."

Global Newswire. "Trends in Predictive Analytics Market Size & Share will Reach $10.95 Billion by 2022."

PWC. "Big data: innovation in investing."

Samuel, Arthur. "Some Studies in Machine Learning Using the Game of Checkers." IBM Journal of Research and Development, vol. 3, no. 3, July 1959, pp. 210-229.

SAS. "Predictive Analysis."

Logi Analytics. "What Is Predictive Analysis?"

Utreee. "What is Predictive Analytics, its Benefits and Challenges?"

Data Science Central

18 Great Articles About Predictive Analytics

- March 13, 2018 at 1:30 pm

This resource is part of a series on specific topics related to data science: regression, clustering, neural networks, deep learning, Hadoop, decision trees, ensembles, correlation, outliers, Python, R, TensorFlow, SVM, data reduction, feature selection, experimental design, time series, cross-validation, model fitting, dataviz, AI and many more.

- Differences between Data Mining and Predictive Analytics

- Automated Predictive Analytics – What Could Possibly Go Wrong? +

- Predictive Analytics in the Supply Chain

- Predictive Analytics Goes to College – to Predict Student Success

- Hype Cycle History on Predictive Analytics

- Predictive Analytics for Beginners

- An Intro to Predictive Analytics: Can I predict the future?

- Prescriptive versus Predictive Analytics

- The Ultimate Guide for Choosing Algorithms for Predictive Modeling

- Unraveling Real-Time Predictive Analytics

- Financial Firms Embrace Predictive Analytics

- Is Predictive Analytics Mainstream?

- What is Predictive Analytics?

- Predictive Analytics Demystified

- Predictive Analytics in Excel

- Interpreting Predictive Analytics with a Grain of Salt

- Predictive Analytics Strategy

- Predictive Analytics and Sensor Data

Source for picture: article flagged with a +

- Survey Paper

- Open access

- Published: 25 July 2020

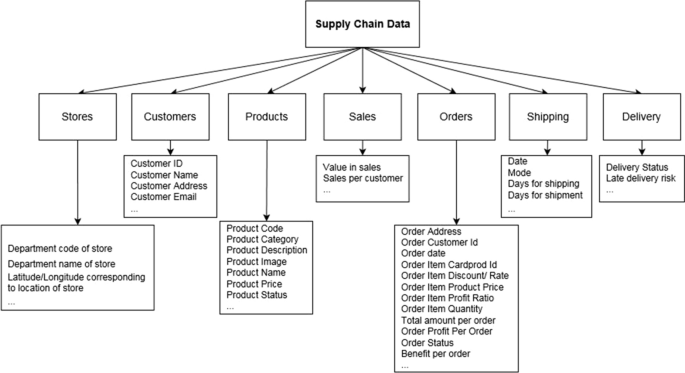

Predictive big data analytics for supply chain demand forecasting: methods, applications, and research opportunities

- Mahya Seyedan 1 &

- Fereshteh Mafakheri ORCID: orcid.org/0000-0002-7991-4635 1

Journal of Big Data, volume 7, Article number: 53 (2020)

Big data analytics (BDA) in supply chain management (SCM) is receiving growing attention. This is because BDA has a wide range of applications in SCM, including customer behavior analysis, trend analysis, and demand prediction. In this survey, we investigate the predictive BDA applications in supply chain demand forecasting to propose a classification of these applications, identify the gaps, and provide insights for future research. We classify these algorithms and their applications in supply chain management into time-series forecasting, clustering, K-nearest-neighbors, neural networks, regression analysis, support vector machines, and support vector regression. This survey also shows that the literature is particularly lacking on the applications of BDA for demand forecasting in the case of closed-loop supply chains (CLSCs) and accordingly highlights avenues for future research.

Introduction

Nowadays, businesses adopt ever-increasing precision marketing efforts to remain competitive and to maintain or grow their profit margins. As such, forecasting models have been widely applied in precision marketing to understand and fulfill customer needs and expectations [1]. In doing so, there is growing attention to the analysis of consumption behavior and preferences, using forecasts obtained from customer data and transaction records, in order to manage product supply chains (SC) accordingly [2, 3].