Click through the PLOS taxonomy to find articles in your field.

For more information about PLOS Subject Areas, click here .

Loading metrics

Open Access

Peer-reviewed

Research Article

Authorship and citation manipulation in academic research

Contributed equally to this work with: Eric A. Fong, Allen W. Wilhite

Roles Conceptualization, Data curation, Formal analysis, Funding acquisition, Investigation, Methodology, Writing – original draft, Writing – review & editing

Affiliation Department of Management, University of Alabama in Huntsville, Huntsville, Alabama, United States of America

* E-mail: [email protected]

Affiliation Department of Economics, University of Alabama in Huntsville, Huntsville, Alabama, United States of America

- Eric A. Fong,

- Allen W. Wilhite

- Published: December 6, 2017

- https://doi.org/10.1371/journal.pone.0187394

- Reader Comments

Some scholars add authors to their research papers or grant proposals even when those individuals contribute nothing to the research effort. Some journal editors coerce authors to add citations that are not pertinent to their work and some authors pad their reference lists with superfluous citations. How prevalent are these types of manipulation, why do scholars stoop to such practices, and who among us is most susceptible to such ethical lapses? This study builds a framework around how intense competition for limited journal space and research funding can encourage manipulation and then uses that framework to develop hypotheses about who manipulates and why they do so. We test those hypotheses using data from over 12,000 responses to a series of surveys sent to more than 110,000 scholars from eighteen different disciplines spread across science, engineering, social science, business, and health care. We find widespread misattribution in publications and in research proposals with significant variation by academic rank, discipline, sex, publication history, co-authors, etc. Even though the majority of scholars disapprove of such tactics, many feel pressured to make such additions while others suggest that it is just the way the game is played. The findings suggest that certain changes in the review process might help to stem this ethical decline, but progress could be slow.

Citation: Fong EA, Wilhite AW (2017) Authorship and citation manipulation in academic research. PLoS ONE 12(12): e0187394. https://doi.org/10.1371/journal.pone.0187394

Editor: Lutz Bornmann, Max Planck Society, GERMANY

Received: February 28, 2017; Accepted: September 20, 2017; Published: December 6, 2017

Copyright: © 2017 Fong, Wilhite. This is an open access article distributed under the terms of the Creative Commons Attribution License , which permits unrestricted use, distribution, and reproduction in any medium, provided the original author and source are credited.

Data Availability: All relevant data are within the paper and its Supporting Information files. The pertinent appendices are: S2 Appendix: Honorary author data; S3 Appendix: Coercive citation data; and S4 Appendix: Journal data. In addition the survey questions and counts of the raw responses to those questions appear in S1 Appendix: Statistical methods, surveys, and additional results.

Funding: This publication was made possible by a grant from the Office of Research Integrity through the Department of Health and Human Services: Grant Number ORIIR130003. Contents are solely the responsibility of the authors and do not necessarily represent the official views of the Department of Health and Human Services or the Office of Research Integrity. The funders had no role in study design, data collection and analysis, decision to publish, or preparation of the manuscript.

Competing interests: The authors have declared that no competing interests exist.

Introduction

The pressure to publish and to obtain grant funding continues to build [ 1 – 3 ]. In a recent survey of scholars, the number of publications was identified as the single most influential component of their performance review while the journal impact factor of their publications and order of authorship came in second and third, respectively [ 3 ]. Simultaneously, rejection rates are on the rise [ 4 ]. This combination, the pressure to increase publications coupled with the increased difficulty of publishing, can motivate academics to violate research norms [ 5 ]. Similar struggles have been identified in some disciplines in the competition for research funding [ 6 ]. For journals and the editors and publishers of those journals, impact factors have become a mark of prestige and are used by academics to determine where to submit their work, who earns tenure, and who may be awarded grants [ 7 ]. Thus, the pressure to increase a journal’s impact factor score is also increasing. With these incentives it is not surprising that academia is seeing authors and editors engaged in questionable behaviors in an attempt to increase their publication success.

There are many forms of academic misconduct that can increase an author’s chance for publication and some of the most severe cases include falsifying data, falsifying results, opportunistically interpreting statistics, and fake peer-review [ 5 , 8 – 12 ]. For the most part, these extreme examples seem to be relatively uncommon; for example, only 1.97% of surveyed academics admit to falsifying data, although this probably understates the actual practice as these respondents report higher numbers of their colleagues misbehaving [ 10 ].

Misbehavior regarding attribution, on the other hand, seems to be widespread [ 13 – 18 ]; for example, in one academic study, roughly 20% of survey respondents have experienced coercive citation (when editors direct authors to add citations to articles from the editors’ journals even though there is no indicated lack of attribution and no specific articles or topics are suggested by the editor) and over 50% said they would add superfluous citations to a paper being submitted to a coercive journal in an attempt to increase its chance for publication [ 18 ]. Honorary authorship (the addition of individuals to manuscripts as authors, even though those individuals contribute little, if anything, to the actual research) is a common behavior in several disciplines [ 16 , 17 ]. Some scholars pad their references in an attempt to influence journal referees or grant reviewers by citing prestigious publications or articles from the editor’s journal (or the editor’s vita) even if those citations are not pertinent to the research. While there is little systematic evidence that such a strategy influences editors, the perception of its effectiveness is enough to persuade some scholars to pad [ 19 , 20 ]. Overall, it seems that many scholars consider authorship and citation to be fungible attributes, components of a project one can alter to improve their publication and funding record or to increase journal impact factors (JIFs).

Most studies examining attribution manipulation focus on the existence and extent of misconduct and typically address a narrow section of the academic universe; for example, there are numerous studies measuring the amount of honorary authorship in medicine, but few in engineering, business, or the social sciences [ 21 – 25 ]. And, while coercive citation has been exposed in the some business fields, less is known about its prevalence in medicine, science, or engineering. In addition, the pressure to acquire research funding is nearly as intense as publication pressures and in some disciplines funding is a major component of performance reviews. Thus, grant proposals are also viable targets of manipulation, but research into that behavior is sparse [ 2 , 6 ]. However, if grant distributions are swayed by manipulation then resources are misdirected and promising areas of research could be neglected.

There is little disagreement with the sentiment that this manipulation is unethical, but there is less agreement about how to slow its use. Ultimately, to reverse this decline of ethics we need to better understand the factors that impact attribution manipulation and that is the focus of this manuscript. Using more than 12,000 responses to surveys sent to more than 110,000 academics from disciplines across the academic universe, this study aims to examine the prevalence and systematic nature of honorary authorship, coercive citation, and padded citations in eighteen different disciplines in science, engineering, medicine, business, and the social sciences. In essence, we do not just want to know how common these behaviors are, but whether there are certain types of academics who add authors or citations or are coerced more often than others. Specifically, we ask, what are the prevailing attributes of scholars who manipulate, whether willingly (e.g., padded citation) or not (e.g., coercive citation), and we consider attributes like academic rank, gender, discipline, level of co-authorship, etc. We also look into the reasons scholars manipulate and ask their opinions on the ethics of this behavior. In our opinion, a deeper understanding of manipulation can shed light on potential ways to reduce this type of academic misconduct.

As noted in the introduction, the primary component of performance reviews, and thus of individual research productivity, is the number of published articles by an academic [ 3 ]. This number depends on two things: (i) the number of manuscripts on which a scholar is listed as an author and (ii) the likelihood that each of those manuscripts will be published. The pressure to increase publications puts pressure on both of these components. In a general sense, this can be beneficial for society as it creates incentives for individuals to work harder (to increase the quantity of research projects) and to work better (to increase the quality of those projects) [ 6 ]. There are similar pressures and incentives in the application for, and distribution of, research grants as many disciplines in science, engineering, and medicine view the acquisition of funding as both a performance measure and a precursor to publication given the high expense of the equipment and supplies needed to conduct research [ 2 , 6 ]. But this publication and funding pressure can also create perverse incentives.

Honorary authorship

Working harder is not the only means of increasing an academic’s number of publications. An alternative approach is known as “honorary authorship” and it specifically refers to the inclusion of individuals as authors on manuscripts, or grant proposals, even though they did not contribute to the research effort. Numerous studies have explored the extent of honorary authorship in a variety of disciplines [ 17 , 20 , 21 – 25 ]. The motivation to add authors can come from many sources; for instance, an author may be directed to add an individual who is a department chair, lab director, or some other administrator with power, or they might voluntarily add such an individual to curry favor. Additionally, an author might create a reciprocal relationship where they add an honorary author to their own paper with the understanding that the beneficiary will return the favor on another paper in the future, or an author may just do a friend a favor and include their name on a manuscript [ 23 , 24 ]. In addition, if the added author has a prestigious reputation, this can also increase the chances of the manuscript receiving a favorable review. Through these means, individuals can raise the expected value of their measured research productivity (publications) even though their actual intellectual output is unchanged.

Similar incentives apply to grant funding. Scholars who have a history of repeated funding, especially funding from the more prestigious funding agencies, are viewed favorably by their institutions [ 2 ]. Of course, grants provide resources, which increase an academic’s research output, but there are also direct benefits from funded research accruing to the university: overhead charges, equipment purchases that can be used for future projects, graduate student support, etc. Consequentially, “rainmakers” (scholars with a record of acquiring significant levels of research funding) are valued for that skill.

As with publications, the amount of research funding received by an individual depends on the number and size of proposals put forth and the probability of each getting funded. This metric creates incentives for individuals to get their names on more proposals, on bigger proposals, and to increase the likelihood that those proposals will be successful. That pressure opens the door to the same sorts of misattribution behavior found in manuscripts because honorary authorship can increase the number of grant proposals that include an author’s name and by adding a scholar with a prestigious reputation as an author they may increase their chances of being funded. As we investigate the use of honorary authorship we do not focus solely on its prevalence; we also question whether there is a systematic nature to its use. First, for example, it makes sense that academics who are early in their career have less funding and lack the protection of tenure and thus need more publications than someone with an established reputation. To begin to understand if systematic differences exist in the use of honorary authorship, the first set of empirical questions to be investigated here is: who is likely to add honorary authors to manuscripts or grant proposals? Scholars of lower rank and without tenure may be more likely to add authors, whether under pressure from senior colleagues or in their own attempt to sway reviewers. Tenure and promotion depend critically on a young scholars’ ability to establish a publication record, secure research funding, and engender support from their senior faculty. Because they lack the protection of rank and tenure, refusing to add someone could be risky. Of course, senior faculty members also have goals and aspirations that can be challenging, but junior faculty have far more on the line in terms of their career.

Second, we expect research faculty to be more likely to add honorary authors, especially to grant proposals, because they often occupy positions that are heavily dependent on a continued stream of research success, particularly regarding research funding. Third, we expect that female researchers may be less able to resist pressure to add honorary authors because women are underrepresented in faculty leadership and administrative positions in academia and lack political power [ 26 , 27 ]. It is not just their own lack of position that matters; the dearth of other females as senior faculty or in leadership positions leave women with fewer mentors, senior colleagues, and administrators with similar experiences to help them navigate these political minefields [ 28 , 29 ]. Fourth, because adding an author waters down the credit received by each existing author, we expect manuscripts that already have several authors to be less resistant to additional “credit sharing.” Simply put, if credit is equally distributed across authors then adding a second author would cut your perceived contribution in half, but adding a sixth author reduces your contribution by only 3% (from 20% to 17%).

Fifth, because academia is so competitive, the decisions of some scholars have an impact on others in the same research population. If your research interests are in an area in which honorary authorship is common and considered to be effective, then a promising counter-policy to the manipulation undertaken by others is to practice honorary authorship yourself. This leads us to predict that the obligation to add honorary authors to grant proposals and/or manuscripts is likely to concentrate more heavily in some disciplines. In other words, we do not expect it to be practiced uniformly or randomly across fields; instead, there will be some disciplines who are heavily engaged in adding authors and other disciplines less so engaged. In general, we have no firm predictions as to which disciplines are more likely to practice honorary authorship; we predict only that its practice will be lumpy. However, there may be reasons to suspect some patterns to emerge; for example, some disciplines, such as science, engineering, and medicine, are much more heavily dependent on research funding than other disciplines, such as the social sciences, mathematics, and business [ 2 ]. For example, over 70% of the NSF budget goes to science and engineering and about 4% to the social sciences. Similarly, most of the NIH budget goes to doctors and a smaller share to other disciplines [ 30 ]. Consequently, we suspect that the disciplines that most prominently add false investigators to grant proposals are more likely to be in science, engineering, and the medical fields. We do not expect to see that division as prominent in the addition of authors to manuscripts submitted for publication.

There are several ways scholars may internalize the pressure to perform, which can lead to different reasons why a scholar might add an honorary author to a paper. A second goal of this paper is to study who might employ these different strategies. Thus, we asked authors for the reasons they added honorary authors to their manuscripts and grants; for example, was this person in a position of authority, or a mentor, did they have a reputation that increased the chances for publication or funding, etc? Using these responses as a dependent variable, we then look to find out if these were related to the professional characteristics of the scholars in our study. The hypotheses to be tested mirror the questions posed for honorary authors. We expect junior faculty, research faculty, female faculty, and projects with more co-authors to be more likely to add additional coauthors to manuscripts and grants than professors, male faculty, and projects with fewer co-authors. Moreover, we expect for the practice to differ across disciplines. Focusing specifically on honorary authorship in grant proposals, we also explore the possibility that the use of honorary authorship differs between funding opportunities and agencies.

Coercive citation

Journal rankings matter to editors, editorial boards, and publishers because rankings affect subscriptions and prestige. In spite of their shortcomings, impact factors have become the dominant measure of journal quality. These measures include self-citation, which creates an incentive for editors to direct authors to add citations even if those citations are irrelevant, a practice called “coercive citation” [ 18 , 27 ]. This behavior has been systematically measured in business and social science disciplines [ 18 ]. Additionally, researchers have found that coercion sometimes involves more than one journal; editors have gone as far as organizing “citation cartels” where a small set of editors recommend that authors cite articles from each other’s journal [ 31 ].

When editors make decisions to coerce, who might they target, who is most likely to be coerced? Assuming editors balance the costs and benefits of their decisions, a parallel set of empirical hypotheses emerge. Returning to the various scholar attributes, we expect editors to target lower-ranked faculty members because they may have a greater incentive to cooperate as additional publications have a direct effect on their future cases for promotion, and for assistant professors on their chances of tenure as well. In addition, because they have less political clout and are less likely to openly complain about coercive treatment, lower ranked faculty members are more likely to acquiesce to the editor’s request. We predict that editors are more likely to target female scholars because female scholars hold fewer positions of authority in academia and may lack the institutional support of their male counterparts. We also expect the number of coauthors to play a role, but contrary to our honorary authorship prediction, we predict editors will target manuscripts with fewer authors rather than more authors. The rationale is simple; authors do not like to be coerced and when an editor requires additional citations on a manuscript having many authors then the editor is making a larger number of individuals aware of their coercive behavior, but coercing a sole-authored paper upsets a single individual. Notice that we are hypothesizing the opposite sign in this model than in the honorary authorship model; if authors are making a decision to add honorary authors then they prefer to add people to articles that already have many co-authors, but if editors are making the decision then they prefer to target manuscripts with few authors to minimize the potential pushback.

As was true in the model of honorary authorship, we expect the practice of coercion to be more prevalent in some disciplines than others. If one editor decides to coerce authors and if that strategy is effective, or is perceived to be effective, then there is increased pressure for other editors in the same discipline to also coerce just to maintain their ranking—if one journal climbs up in the rankings, others, who do nothing, fall. Consequently, coercion begets additional coercion and the practice can spread. But, a journal climbing up in the rankings in one discipline has little impact on other disciplines and thus we expect to find coercion practiced unevenly; prevalent in some disciplines, less so in others. Finally, as a sub-conjecture to this hypothesis, we expect coercive citation to be more prevalent in disciplines for which journal publication is the dominant measure for promotion and tenure; that is, disciplines that rely less heavily on grant funding. This means we expect the practice to be scattered, and lumpy, but we also expect relatively more coercion in the business and social sciences disciplines.

We are also interested in the types of journals that have been reported to coerce and to explore those issues we gather data using the journal as the unit of observation. As above, we expect differences between disciplines and we expect those discipline differences to mirror the discipline differences found in the author-based data set. We also expect a relationship between journal ranking and coercion because the costs and benefits of coercion differ for more or less prestigious journals. Consider the benefits of coercion. The very highest ranked journals have high impact factors; consequently, to rise another position in the rankings requires a significant increase in citations, which would require a lot of coercion. Lower-ranked journals, however, might move up several positions with relatively few coerced citations. Furthermore, consider the cost of coercion. Elite journals possess valuable reputations and risking them by coercing might be foolhardy; journals deep down in the rankings have less at stake. Given this logic, it seems likely that lower ranked journals are more likely to have practiced coercion.

We also look to see if publishers might influence the coercive decision. Journals are owned and published by many different types of organizations; the most common being commercial publishers, academic associations, and universities. A priori , commercial publishers, being motivated by profits, are expected to be more interested in subscriptions and sales, so the return to coercion might be higher for that group. On the other hand, the integrity of a journal might be of greater concern to non-profit academic associations and university publishers, but we don’t see a compelling reason to suppose that universities or academic associations will behave differently from one another. Finally, we control for some structural difference across journals by including each journal’s average number of cites per document and the total number of documents they publish per year.

Padded citations

The third and final type of attribution manipulation explored here is padded reference lists. Because some editors coerce scholars to add citations to boost their journals’ impact factor score and because this practice is known by many scholars there is an incentive for scholars to add superfluous citations to their manuscripts prior to submission [ 18 ]. Provided there is an incentive for scholars to pad their reference lists in manuscripts, we wondered if grant writers would be willing to pad reference lists in grants in an attempt to influence grant reviewers.

As with honorary authorship, we suspect there may be a systematic element to padding citations. In fact, we expect the behavior of padding citations to parallel the honorary author behavior. Thus we predict that scholars of lower rank and therefore without tenure and female scholars to be more likely to pad citations to assuage an editor or sway grant reviewers. Because the practice also encompasses a feedback loop (one way to compete with scholars who pad their citations is to pad your citations) we expect the practice to proliferate in some disciplines. The number of coauthors is not expected to play a role, but we also expect knowledge of other types of manipulation to be important. That is, we hypothesize that individuals who are aware of coercion, or who have been coerced, are more likely to pad citations. With grants, we similarly expect individuals who add honorary authors to grant proposals to also be likely to pad citations in grant proposals. Essentially, the willingness to misbehave in one area is likely related to misbehavior in other areas.

The data collection method of choice for this study is survey because to it would be difficult to determine if someone added honorary authors or padded citations prior to submission without asking that individual. As explained below, we distributed surveys in four waves over five years. Each survey, its cover email, and distribution strategy was reviewed and approved by the University of Alabama in Huntsville’s Institutional Review Board. Copies of these approvals are available on request. We purposely did not collect data that would allow us to identify individual respondents. We test our hypotheses using these survey data and journal data. Given the complexity of the data collection, both survey and archival journal data, we will begin with discussing our survey data and the variables developed from our survey. We then discuss our journal data and the variables developed there. Over the course of a five-year period and using four waves of survey collection, we sent surveys, via email, to more than 110,000 scholars in total from eighteen different disciplines (medicine, nursing, biology, chemistry, computer science, mathematics, physics, engineering, ecology, accounting, economics, finance, marketing, management, information systems, sociology, psychology, and political science) from universities across the U.S. See Table 1 for details regarding the timing of survey collection. Survey questions and raw counts of the responses to those questions are given in S1 Appendix : Statistical methods, surveys, and additional results. Complete files of all of the data used in our estimates are in the S2 , S3 and S4 Appendices.

- PPT PowerPoint slide

- PNG larger image

- TIFF original image

https://doi.org/10.1371/journal.pone.0187394.t001

Potential survey recipients and their contact information (email addresses) were identified in three different ways. First, we were able to get contact information for management scholars through the Academy Management using the annual meeting catalog. Second, for economics and physicians we used the membership services provided by the American Economic Association and the American Medical Association. Third, for the remaining disciplines we identified the top 200 universities in the United States using U . S . News and World Report’s “National University Rankings” and hand-collected email addresses by visiting those university websites and copying contact information for individual faculty members from each of the disciplines. We also augmented the physician contact list by visiting the web sites of the medical schools in these top 200 school as well. With each wave of surveys, we sent at least one reminder to participate. The approximately 110,000 surveys yielded about 12,000 responses for an overall response rate of about 10.5%. Response rates by discipline can be found in Table A in S1 Appendix .

Few studies have examined the systematic nature of honorary authorship and padded citation and thus we developed our own survey items to address our hypotheses. Our survey items for coercive citation were taken from prior research on coercion [ 18 ]. All survey items and the response alternatives with raw data counts are given in S1 Appendix . The complete data are made available in S2 – S4 Appendices.

Our first set of tests relate to honorary authorship in manuscripts and grants and is made up of several dependent variables, each related to the research question being addressed. We begin with the existence of honorary authorship in manuscripts. This dependent variable is composed of the answers to the survey question: “Have YOU felt obligated to add the name of another individual as a coauthor to your manuscript even though that individual’s contribution was minimal?” Responses were in the form of yes and no where “yes” was coded as a 1 and “no” coded as a 0. The next dependent variable addresses the frequency of this behavior asking: “In the last five years HOW MANY TIMES have you added or had coauthors added to your manuscripts even though they contributed little to the study?” The final honorary authorship dependent variables deal with the reason for including an honorary author in manuscripts: “Even though this individual added little to this manuscript he (or she) was included as an author. The main reason for this inclusion was:” and the choices regarding this answer were that the honorary author is the director of the lab or facility used in the research, occupies a position of authority and can influence my career, is my mentor, is a colleague I wanted to help out, was included for reciprocity (I was included or expect to be included as a co-author on their work), has data I needed, has a reputation that increases the chances of the work being published, or they had funding we could apply to the research. Responses were coded as 1 for the main reason given (only one reason could be selected as the “main” reason) and 0 otherwise.

Regarding honorary authorship in grant proposals, our first dependent variable addresses its existence: “Have you ever felt obligated to add a scholar’s name to a grant proposal even though you knew that individual would not make a significant contribution to the research effort?” Again, responses were in the form of yes and no where “yes” was coded as a 1 and “no” coded as a 0. The remaining dependent variables regarding honorary authorship in grant proposals addresses the reasons for adding honorary authors to proposals: “The main reason you added an individual to this grant proposal even though he (or she) was not expected to make a significant contribution was:” and the provided potential responses were that the honorary author is the director of the lab or facility used in the research, occupies a position of authority and can influence my career, is my mentor, is a colleague I wanted to help out, was included for reciprocity (I was included or expect to be included as a co-author on their work), has data I needed, has a reputation that increases the chances of the work being published, or was a person suggested by the grant reviewers. Responses were coded as 1 for the main reason given (only one reason could be selected as the “main” reason) and 0 otherwise.

Our next major set of dependent variables deal with coercive citation. The first coercive citation dependent variable was measured using the survey question: “Have YOU received a request from an editor to add citations from the editor’s journal for reasons that were not based on content?” Responses were in the form of yes (coded as a 1) and no (coded as 0). The next question deals with the frequency: “In the last five years, approximately HOW MANY TIMES have you received a request from the editor to add more citations from the editor’s journal for reasons that were not based on content?”

Our final set of dependent variables from our survey data investigates padding citations in manuscripts and grants. The dependent variable that addresses an author’s willingness to pad citations for manuscripts comes from the following question: “If I were submitting an article to a journal with a reputation of asking for citations to itself even if those citations are not critical to the content of the article, I would probably add such citations BEFORE SUBMISSION.” Answers to this question were in the form of a Likert scale with five potential responses (Strongly Disagree, Disagree, Neutral, Agree, and Strongly Agree) where Strongly Disagree was coded as a 1 and Strongly Agree coded as a 5. The dependent variable for padding citations in grant proposals uses responses to the statement: “When developing a grant proposal I tend to skew my citations toward high impact factor journals, even if those citations are of marginal import to my proposal.” Answers were in the form of a Likert scale with five potential responses (Strongly Disagree, Disagree, Neutral, Agree, and Strongly Agree) where Strongly Disagree was coded as a 1 and Strongly Agree coded as a 5.

To test our research questions, several independent variables were developed. We begin by addressing the independent variables that cut across honorary authorship, coercive citation, and padding citations. The first is academic rank. We asked respondents their current rank: Assistant Professor, Associate Professor, Professor, Research Faculty, Clinical Faculty, and other. Dummy variables were created for each category with Professor being the omitted category in our tests of the hypotheses. The second general independent variable is discipline: Medicine, Nursing, Accounting, Economics, Finance, Information Systems, Management, Marketing, Political Science, Psychology, Sociology, Biology, Chemistry, Computer Science, Ecology, and Engineering. Again, dummy variables were created for each discipline, but instead of omitting a reference category we include all disciplines and then constrain the sum of their coefficients to equal zero. With this approach, the estimated coefficients then tell us how each discipline differs from the average level of honorary authorship, coercive citation, or padded citation across the academic spectrum [ 32 ]. We can conveniently identify three categories: (i) disciplines that are significantly more likely to engage in honorary authorship, coercive citation, or padded citation than the average across all disciplines, (ii) disciplines that do not differ significantly from the average level of honorary authorship, coercive citation, or padded citation across all of these disciplines, and (iii) those who are significantly less likely to engage in honorary authorship, coercive citation, or padded citation than the average. We test the potential gender differences with a dummy variable male = 1, females = 0.

Additional independent variables were developed for specific research questions. In our tests of honorary authorship, there is an independent variable addressing the number of co-authors on a respondent’s most recent manuscript. If the respondent stated that they have added an honorary author then they were asked “Please focus on the most recent incidence in which an individual was added as a coauthor to one of your manuscripts even though his or her contribution was minimal. Including yourself, how many authors were on this manuscript?” Respondents who had not added an honorary author were asked to report the number of authors on their most recently accepted manuscript. We also include an independent variable regarding funding agencies: “To which agency, organization, or foundation was this proposal directed?” Again, for those who have added authors, we request they focus on the most recent proposal where they used honorary authorship and for those who responded that they have not practiced honorary authorship, we asked where they sent their most recent proposal. Their responses include NSF, HHS, Corporations, Private nonprofit, State funding, Other Federal grants, and Other grants. Regarding coercive citation, we included an independent variable regarding number of co-authors on their most recent coercive experience and thus if a respondent indicated they’ve been coerced we asked: “Please focus on the most recent incident in which an editor asked you to add citations not based on content. Including yourself, how many authors were on this manuscript?” If a respondent indicated they’ve never been coerced, we asked them to state the number of authors on their most recently accepted manuscript.

Finally, we included control variables. In our tests, we included the respondent’s performance or exposure to these behaviors. For those analyses focusing on manuscripts we used acceptances: “Within the last five years, approximately how many publications, including acceptances, do you have?” The more someone publishes, the more opportunities they have to be coerced, add authors, or add citations; thus, scholars who have published more articles are more likely to have experienced coercion, ceteris paribus. And in our tests of grants we used two performance indicators: 1) “In the last five years approximately how many grant proposals have you submitted for funding?” and 2) “Approximately how much grant money have you received in the last five years? Please write your estimated dollars in box; enter 0 if zero.”

We also investigate coercion using a journal-based dataset, Scopus, which contains information on more than 16,000 journals from these 18 disciplines [ 33 ]. It includes information on the number of articles published each year, the average number of citations per manuscript, the rank of the journal, disciplines that most frequently publish in the journal, the publisher, and so forth. These data were used to help develop our dependent variable as well as our independent and control variables for the journal analysis. Our raw journal data is provided in S4 Appendix : Journal data.

The dependent variables in our journal analysis measure whether a specific journal was identified as a journal in which coercion occurred, or not, and the frequency of that identification. Survey respondents were asked: “To track the possible spread of this practice we need to know specific journals. Would you please provide the names of journals you know engage in this practice?” Respondents were given a blank space to write in journal names. The majority of our respondents declined to identify journals where coercion has occurred; however, more than 1200 respondents provided journal names and in some instances, respondents provided more than one journal name. Among the population of journals in the Scopus database, 612 of these were identified as journals that have coerced by our survey respondents, some of these journals were identified several times. The first dependent variable is binary, coded as 1 if a journal was identified as a journal that has coerced, and coded as 0 otherwise. The frequency estimates uses the count, how many times they were named, as the dependent variable.

The independent variables measure various journal attributes, the first being discipline. The Scopus database identifies the discipline that most frequently publishes in any given journal, and that information was used to classify journals by discipline. Thus, if physics is the most common discipline to publish in a journal, it was classified as a physics journal. We look to see if there is a publisher effect using the publisher information in Scopus to create four categories: commercial publishers, academic associations, universities, and others (the omitted reference category).

We also control for differing editorial norms across disciplines. First, we include the number of documents published annually by each journal. All else equal, a journal that publishes more articles has more opportunities to engage in coercion, and/or it interacts with more authors and is more likely to be reported in our sample. Second, we control for the average number of citations per article. The average number of citations per document controls for some of the overall differences in citation practices across disciplines.

Given the large number of hypotheses to be tested, we present a compiled list of the dependent variables in Table 2 . This table names the dependent variables, describes how they were constructed, and lists the tables that present the estimated coefficients pertinent to those dependent variables. Table 2 is intended to give readers an outline of the arc of the remainder of the manuscript.

https://doi.org/10.1371/journal.pone.0187394.t002

Honorary authorship in research manuscripts

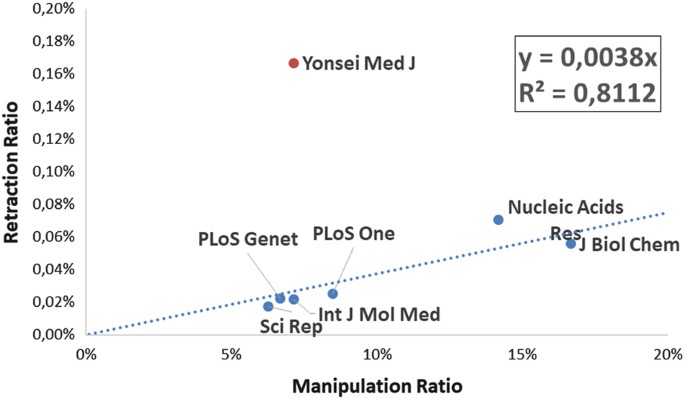

Looking across all disciplines, 35.5% of our survey respondents report that they have added an author to a manuscript even though the contribution of those authors was minimal. Fig 1 displays tallies of some raw responses to show how the use of honorary authorship, for both manuscripts and grants, differs across science, engineering, medicine, business, and the social sciences.

Percentage of respondents who report that honorary authors have been added to their research projects, they have been coerced by editor to add citations, or who have padded their citations, sorted by field of study and type of manipulation.

https://doi.org/10.1371/journal.pone.0187394.g001

To begin the empirical study of the systematic use of honorary authorship, we start with the addition of honorary authors to research manuscripts. This is a logit model in which the dependent variable equals one if the respondent felt obligated to add an author to their manuscript, “even though that individual’s contribution was minimal.” The estimates appear in Table 3 . In brief, all of our conjectures are observed in these data. As we hypothesized above, the pressure on scholars to add authors “who do not add substantially to the research project,” is more likely to be felt by assistant professors and associate professors relative to professors (the reference category). To understand the size of the effect, we calculate odds ratios ( e β ) for each variable, also reported in Table 3 . Relative to a full professor, being an assistant professor increases the odds of honorary authorship in manuscripts by 90%, being an associate professor increases those odds by 40%, and research faculty are twice as likely as a professor to add an honorary author.

https://doi.org/10.1371/journal.pone.0187394.t003

Consistent with our hypothesis, we found support that females were more likely to add honorary authors as the estimated coefficient on males was negative and statistically significant. The odds that a male feels obligated to add an author to a manuscript is 38% lower than for females. As hypothesized, authors who already have several co-authors on a manuscript seem more willing to add another; consistent with our hypotheses that the decrement in individual credit diminishes as the number of authors rises. Overall, these results align with our fundamental thesis that authors are purposively deciding to deceive, adding authors when the benefits are higher and the costs lower.

Considering the addition of honorary authors to manuscripts, Table 3 shows that four disciplines are statistically more likely to add honorary authors than the average across all disciplines. Listing those disciplines in order of their odds ratios and starting with the greatest odds, they are: marketing, management, ecology, and medicine (physicians). There are five disciplines in which honorary authorship is statistically below the average and starting with the lowest odds ratio they are: political science, accounting, mathematics, chemistry, and economics. Finally, the remaining disciplines, statistically indistinguishable from the average, are: physics, psychology, sociology, computer science, finance, engineering, biology, information systems, and nursing. At the extremes, scholars in marketing are 75% more likely to feel an obligation to add authors to a manuscript than the average across all disciplines while political scientists are 44% less likely than the average to add an honorary author to a manuscript.

To bolster these results, we also asked individuals to tell us how many times they felt obligated to add honorary authors to manuscripts in the last five years. Using these responses as our dependent variable we estimated a negative binomial regression equation with the same independent variables used in Table 3 . The estimated coefficients and their transformation into incidence rate ratios are given in Table 4 . Most of the estimated coefficients in Tables 3 and 4 have the same sign and, with minor differences, similar significance levels, which suggests the attributes associated with a higher likelihood of adding authors are also related to the frequency of that activity. Looking at the incidence rate ratios in Table 4 , scholars occupying the lower academic ranks, research professors, females, and manuscripts that already have many authors more frequently add authors. Table 4 also suggests that three additional disciplines, Nursing, Biology, and Engineering, have more incidents of adding honorary authors to manuscripts than the average of all disciplines and, consequently, the disciplines that most frequently engage in honorary authorship are, by effect size, management, marketing, ecology, engineering, nursing, biology, and medicine.

https://doi.org/10.1371/journal.pone.0187394.t004

Another way to measure effect sizes is to standardize the variables so that the changes in the odds ratios or incidence rate ratios measure the impact of a one standard deviation change of the independent variable on the dependent variable. In Tables 3 and 4 , the continuous variables are the number of coauthors on the particular manuscripts of interest and the number of publications of each respondent. Tables C and D (in S1 Appendix ) show the estimated coefficients and odds ratios with standardized coefficients. Comparing the two sets of results is instructive. In Table 3 , the odds ratio for the number of coauthors is 1.035, adding each additional author increases the odds of this manuscript having an honorary author by 3.5%. The estimated odds ratio for the standardized coefficient, (Table C in S1 Appendix ) is 1.10, meaning an increase in the number of coauthors of one standard deviation increases the odds that this manuscript has an honorary author by 10%. Meanwhile the standard deviation of the number of coauthors in this sample is 2.78, so 3.5% x 2.78 = 9.73%; the two estimates are very similar. This similarity repeats itself when we consider the number of publications and when we compare the incidence rate ratios across Table 4 and Table D in S1 Appendix . Standardization also tells us something about the relative effect size of different independent variables and in both models a standard deviation increase in the number of coauthors has a larger impact on the likelihood of adding another author than a standard deviation increase in additional publications.

Honorary authorship in grant proposals

Our next set of results focus on honorary authorship in grant proposals. Looking across all disciplines, 20.8% of the respondents reported that they had added an investigator to a grant proposal even though the contribution of that individual was minimal (see Fig 1 for differences across disciplines). To more deeply probe into that behavior we begin with a model in which the dependent variable is binary, whether a respondent has added an honorary author, or not, to a grant proposal and thus use a logit model. With some modifications, the independent variables include the same variables as the manuscript models in Tables 3 and 4 . We remove a control variable relevant to manuscripts (total number of publications) and add two control variables to measure the level of exposure a particular scholar has to the funding process: the number of grants funded in the last five years and the total amount of grant funding (dollars) in that same period.

The results appear in Table 5 and, again, we see significant participation in honorary authorship. The estimates largely follow our predictions and mirror the results of the models in Tables 3 and 4 . Academic rank has a smaller effect, being an assistant professor increases the odds of adding an honorary author to a grant by 68% and being an associate professor increases those odds by 52%. On the other hand, the impact of being a research professor is larger in the grant proposal models than the manuscripts model of Table 3 while the impact of sex is smaller. As was true in the manuscripts models, the obligation to add honorary authors is also lumpy, some disciplines being much more likely to engage in the practice than others. We find five disciplines in the “more likely than average” category: medicine, nursing, management, engineering, and psychology. The disciplines that tend to add fewer honorary authors to grants are political science, biology, chemistry, and physics. Those that are indistinguishable from the average are accounting, economics, finance, information systems, sociology, ecology, marketing, computer science, and mathematics.

https://doi.org/10.1371/journal.pone.0187394.t005

We speculated that science, engineering, and medicine were more likely to practice honorary authorship in grant proposals because those disciplines are more dependent on research funding and more likely to consider funding as a requirement for tenure and promotion. The results in Tables 3 and 5 are somewhat consistent with this conjecture. Of the five disciplines in the “above average” category for adding honorary authors to grant proposals, four (medicine, nursing, engineering, and psychology) are dependent on labs and funding to build and maintain such labs for their research.

Reasons for adding honorary authors

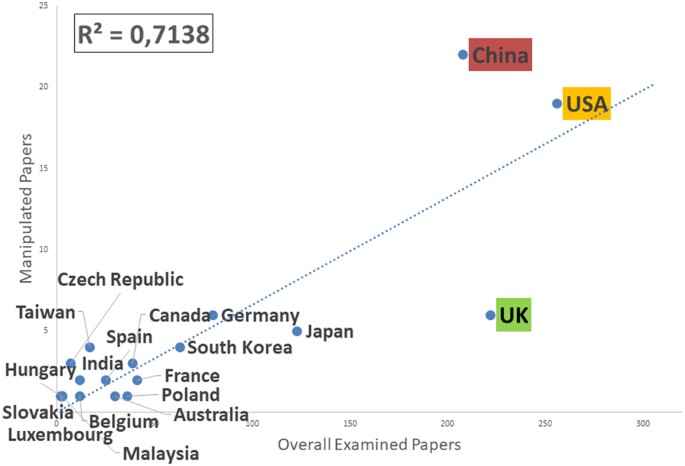

Our next set of results looks more deeply into the reasons scholars give for adding honorary authors to manuscripts and to grants. When considering honorary authors added to manuscripts, we focus on a set of responses to the question: “what was the major reason you felt you needed to add those co-author(s)?” When we look at grant proposals, we use responses to the survey question: “The main reason you added an individual to this grant proposal even though he (or she) was not expected to make a significant contribution was…” Starting with manuscripts, although nine different reasons for adding authors were cited (see survey in S1 Appendix ), only three were cited more than 10% of the time. The most common reason our respondents added honorary authors (28.4% of these responses) was because the added individual was the director of the lab. The second most common reason (21.4% of these responses), and the most disturbing, was that the added individual was in a position of authority and could affect the scholar’s career. Third among the reasons for honorary authorship (13.2%) were mentors. “Other” was selected by about 13% of respondents. The percentage of raw responses for each reason is shown in Fig 2 .

Each pair of columns presents the percentage of responses who selected a particular reason for adding an honorary author to a manuscript or a grant proposal. Director refers to responses stating, “this individual was the director of the lab or facility used in the research.” Authority refers to responses stating, “this individual occupies a position of authority and can influence my career.” Mentor, “this is my mentor”; colleague, “this a colleague I wanted to help”; reciprocity, “I was included or expect to be included as a co-author on their work”; data, “they had data I needed”; reputation, “their reputation increases the chances of the work being published (or funded)”; funding, “they had funding we could apply to the research”; and reviewers, “the grant reviewers suggested we add co-authors.”

https://doi.org/10.1371/journal.pone.0187394.g002

To find out if the three most common responses were related to the professional characteristics of the scholars in our study, we re-estimate the model in Table 3 after replacing the dependent variable with the reasons for adding an author. In other words, the first model displayed in Table 6 , under the heading “Director of Laboratory,” estimates a regression in which the dependent variable equals one if the respondent added the director of the research lab in which they worked as an honorary author and equals zero if this was not the reason. The second model indicates those who added an author because he or she was in a position of authority and so forth. The estimated coefficients appear in Table 6 and the odds ratios are reported in S1 Appendix , Table E. Note the sample size is smaller for these regressions because we include only those respondents who say they have added a superfluous author to a manuscript.

https://doi.org/10.1371/journal.pone.0187394.t006

The results are as expected. The individuals who are more likely to add a director of a laboratory are research faculty (they mostly work in research labs and centers), and scholars in fields in which laboratory work is a primary method of conducting research (medicine, nursing, psychology, biology, chemistry, ecology, and engineering). The second model suggests that the scholars who add an author because they feel pressure from individuals in a position of authority are junior faculty (assistant and associate professors, and research faculty) and individuals in medicine, nursing, and management. The third model suggests assistant professors, lecturers, research faculty, and clinical faculty are more likely to add their mentors as an honorary author. Since many mentorships are established in graduate school or through post-docs, it is sensible that scholars who are early in their career still feel an obligation to their mentors and are more likely to add them to manuscripts. Finally, the disciplines most likely to add mentors to manuscripts seem to be the “professional” disciplines: medicine, nursing, and business (economics, information systems, management, and marketing). We do not report the results for the other five reasons for adding honorary authors because few respondent characteristics were statistically significant. One explanation for this lack of significance may be the smaller sample size (less than 10% of the respondents indicated one of these remaining reasons as being the primary reason they added an author) or it may be that even if these rationales are relatively common, they might be distributed randomly across ranks and disciplines.

Turning to grant proposals, the dominant reason for adding authors to grant proposals even though they are not actually involved in the research was reputation. Of the more than 2100 individuals who gave a specific answer to this question, 60.8% selected “this individual had a reputation that increases the chances of the work being funded.” The second most frequently reported reason for grants was that the added individual was the director of the lab (13.5%), and third was people holding a position of authority (13%). All other reasons garnered a small number of responses.

We estimate a set of regressions similar to Table 6 using the reasons for honorary grant proposal authorship as the dependent variable and the independent variables from the grant proposal models of Table 5 . Before estimating those models we also add six dummy variables reflecting different sources of research funding to see if the reason for adding honorary citations differs by type of funding. These dummy variables indicate funding from NSF, HHS (which includes the NIH), research grants from private corporations, grants from private, non-profit organizations, state research grants, and then a variable capturing all other federally funded grants. The omitted category is all other grants. The estimated coefficients appear in Table 7 and the odds ratios are reported in Table F in S1 Appendix .

https://doi.org/10.1371/journal.pone.0187394.t007

The first column of results in Table 7 replicates and adds to the model in Table 5 , in which the dependent variable is: “have you added honorary authors to grant proposals.” The reason we replicate that model is to add the six funding sources to the regression to see if some agencies see more honorary authors in their proposals than other agencies. The results in Table 7 suggest they do. Federally funded grants are more likely to have honorary authorships than other sources of grant funding as the coefficients on NSF, NIH, and other federal funding are all positive and significant at the 0.01 level. Corporate research grants also tend to have honorary authors included.

The remaining columns in Table 7 suggest that scholars in medicine and management are more likely to add honorary authors to grant proposals because of the added scholar’s reputation, but there is little statistical difference across the other characteristics of our respondents. Exploring the different sources of funds, adding an individual because of his or her reputation is more likely to be practiced with grants to the Department of Health and Human Services (probably because of the heavy presence of medical proposals and honorary authorship is common in medicine) and it is statistically less likely to be used in grant proposals directed towards corporate research funding.

Table 7 shows that lab directors tend to be honorary authors in grant proposals with assistant professors and for grant proposals directed to private corporations. While position of authority (i.e., political power) was the third most frequently cited reason to add someone to a proposal, its practice seems to be dispersed across the academic universe as the regression results in Table 7 do not show much variation across rank, discipline, their past experience with research funding, or the funding source to which the proposal was directed. The remaining reasons for adding authors garnered a small portion of the total responses and there was little significant variation across the characteristics measured here. For these reasons, their regression results are not reported.

Coercive citations

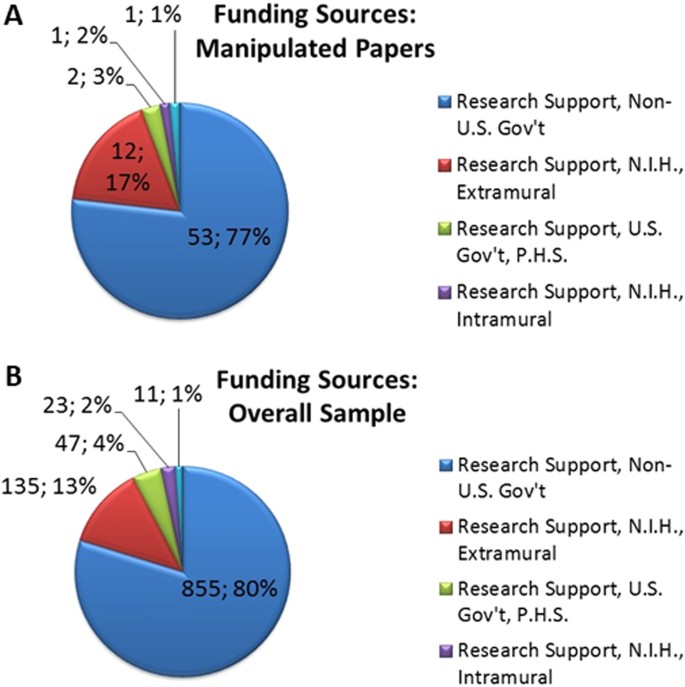

There is widespread distaste among academics concerning the use of coercive citation. Over 90% of our respondents view coercion as inappropriate, 85.3% think its practice reduces the prestige of the journal, and 73.9% are less likely to submit work to a journal that coerces. These opinions are shared across the academic spectrum as shown in Fig 3 , which breaks out these responses by the major fields, medicine, science, engineering, business, and the social sciences. Despite this disapproval, 14.1% of the overall respondents report being coerced. Similar to the analyses above, our task is to see if there is a systematic set of attributes of scholars who are coerced or if there are attributes of journals that are related to coercion.

The first column in each cluster presents the percentage of respondents from each major academic group who either strongly agree or agree with the statement the coercive citations, “is inappropriate.” The second column is the percentage that agrees to, “[it] reduces the prestige of the journal.” The third column reflects agreement to, “are less likely to submit work to a journal that coerces.”

https://doi.org/10.1371/journal.pone.0187394.g003

Two dependent variables are used to measure the existence and the frequency of coercive citation. The first is a binary dependent variable, whether respondents were coerced or not, and the second counts the frequency of coercion, asking our respondents how many times they have been coerced in the last five years. Table 8 presents estimates of the logit model (coerced or not) and their odds ratios and Table 9 presents estimates of the negative binomial model (measuring the frequency of coercion) and their accompanying incident rate ratios. With but a single exception (the estimated coefficient on female scholars was opposite our expectation) our hypotheses are supported. In this sample, it is males who are more likely to be coerced, the effect size estimates that being a male raises the odds ratio of being coerced by 18%. In the frequency estimates in Table 9 , however, there was no statistical difference between male and female scholars.

https://doi.org/10.1371/journal.pone.0187394.t008

https://doi.org/10.1371/journal.pone.0187394.t009

Consistent with our hypotheses, assistant professors and associate professors were more likely to be coerced than full professors and the effect was larger for assistant professors. Being an assistant professor increases the odds that you will be coerced by 42% over a professor while associate professors see about half of that, a 21% increase in their odds. Table 9 shows assistant professors are also coerced more frequently than professors. Co-authors had a negative and significant coefficient as predicted in both sets of results. Consequently, comparing Tables 3 and 8 we see that manuscripts with many co-authors are more likely to add honorary authors, but are less likely to be targeted for coercion. Finally, we find significant variation across disciplines. Eight disciplines are significantly more likely to be coerced than the average across all disciplines and ordered by their odds ratios (largest to smallest) they are: marketing, information systems, finance, management, ecology, engineering, accounting, and economics. Nine disciplines are less likely to be coerced and ordered by their odds ratios (smallest to largest) they are: mathematics, physics, political science, chemistry, psychology, nursing, medicine, computer science, and sociology. Again, there is support for our speculation that disciplines in which grant funding is less critical (and therefore publication is relatively more critical) experience more coercion. In the top coercion category, six of the eight disciplines are business disciplines, where research funding is less common, and in “less than average” coercion disciplines, six of the nine disciplines rely heavily on grant funding. The anomaly (and one that deserves greater study) is that the social sciences see less than average coercion even though publication is their primary measure of academic success. While they are prime targets for coercion, the editors in their disciplines have largely resisted the temptation. Again, this same pattern emerges in the frequency model. In the S1 Appendix , these models are re-estimated after standardizing the continuous variables. Results appear in Table G (existence of coercion) and Table H (frequency of coercion.)

Coercive citations: Journal data

To achieve a deeper understanding of coercive citation, we reexamine this behavior using academic journals as our unit of observation. We analyze these journal-based data in two ways: 1) a logit model in which the dependent variable equals 1 if that journal was named as having coerced and 0 if not and 2) a negative binomial model where the dependent variable is the count of the number of times a journal was identified as one where coercion occurred. As before, the variance of these data substantially exceeds the mean and thus Poison regression is inappropriate. To test our hypotheses, our included independent variables are the dummy variables for discipline, journal rank, and dummy variables for different types of publishers. We control for some of the different editorial practices across journals by including the number of documents published annually by each journal and the average number of citations per article.

The results of the journal-based analysis appear in Table 10 . Once again, and consistent with our hypothesis, the differences across disciplines emerge and closely follow the previous results. The discipline journals most likely to have coerced authors for citations are in business. The effect of a journal’s rank on its use of coercion is perhaps the most startling finding. Measuring journal rank using the h-index suggests that more highly rated journals are more likely to have coerced and coerced more frequently, which is opposite our hypothesis that lower ranked journals are more likely to coerce. Perhaps the chance to move from being a “good” journal to a “very good” journal is just too tempting to pass. There is some anecdotal evidence that is consistent with this result. If one surfs through the websites of journals, many simply do not mention their rank or impact factor. However, those that do mention their rank or impact tend to be more highly ranked journals (a low-ranked journal typically doesn’t advertise that fact), but the very presence of the impact factor on a website suggests that the journal, or more importantly the publisher, places some value on it and, given that pressure, it is not surprising to find that it may influence editorial decisions. On the other hand, we might be observing the results of established behavior. If some journals have practiced coercion for an extended time then their citation count might be high enough to have inflated their h-index. We cannot discern a direction of causality, but either way our results suggest that more highly ranked journals end up using coercion more aggressively, all else equal.

https://doi.org/10.1371/journal.pone.0187394.t010

There seems to be publisher effects as well. As predicted, journals published by private, profit oriented companies are more likely to be journals that have coerced, but it also seems to be more common in the academic associations than university publishers. Finally, we note that the total number of documents published per year is positively related to a journal having coerced and the impact of the average number of citations per document was not significantly different than zero.

The result that higher-ranked journals seem to be more inclined than lower-ranked journals to have practiced coercion warrants caution. These data contain many obscure journals; for example, there are more than 4000 publications categorized as medical journals and this long tail could create a misleading result. For instance, suppose some medical journals ranked between 1000–1200 most aggressively use the practice of coercion. In relative terms these are “high” ranked journals because 65% of the journals are ranked even lower than these clearly obscure publications. To account for this possibility, a second set of estimates was calculated after eliminating all but the “top-30” journals in each discipline. The results appear in Table 11 and generally mirror the results in Table 10 . Journals in the business disciplines are more likely to have used coercion and used it more frequently than the other disciplines. Medicine, biology, and computer science journals used coercion less. However, even concentrating on the top 30 journals in each field, the h-index remains positive and significant; higher ranked journals in those disciplines are more likely to have coerced.

https://doi.org/10.1371/journal.pone.0187394.t011

Padded reference lists

Our final empirical tests focus on padded citations. We asked our respondents that if they were submitting an article to a journal with a reputation of asking for citations even if those citations are not critical to the content of the article, would you “add such citations BEFORE SUBMISSION.” Again, more than 40% of the respondents said they agreed with that sentiment. Regarding grant proposals, 15% admitted to adding citations to their reference list in grant proposals “even if those citations are of marginal import to my proposal.”

To see if reference padding is as systematic as the other types of manipulation studied here, we use the categorical responses to the above questions as dependent variables and estimate ordered logit models using the same descriptive independent variables as before. The results for padding references in manuscripts and grant proposals appear in Tables 12 and 13 , respectively. Once more, with minor deviation, our hypotheses are strongly supported.

https://doi.org/10.1371/journal.pone.0187394.t012

https://doi.org/10.1371/journal.pone.0187394.t013

Tables 12 and 13 show that scholars of lesser rank and those without tenure are more likely to pad citations to manuscripts and skew citations in grant proposals than are full professors. The gender results are mixed, males are less likely to pad their citations in manuscripts, but more likely to pad references in grant proposals. It is the business disciplines and the social sciences that are more likely to pad their references in manuscripts and business and medicine who pad citations on grant proposals. In both situations, familiarity with other types of manipulation has a strong, positive correlation with the likelihood that individuals pad their reference list. That is, respondents who are aware of coercive citation and those who have been coerced in the past are much more likely to pad citations before submitting a manuscript to a journal. And, scholars who have added honorary authors to grant proposals are also more likely to skew their citations to high-impact journals. While we cannot intuit the direction of causation, we show evidence that those who manipulate in one dimension are willing to manipulate in another.

Our results are clear; academic misconduct, specifically misattribution, spans the academic universe. While there are different levels of abuse across disciplines, we found evidence of honorary authorship, coercive citation, and padded citation in every discipline we sampled. We also suggest that a useful construct to approach misattribution is to assume individual scholars make deliberate decisions to cheat after weighing the costs and benefits of that action. We cannot claim that our construct is universally true because other explanations may be possible, nor do we claim it explains all misattribution behavior because other factors can play a role. However, the systematic pattern of superfluous authors, coerced citations, and padded references documented here is consistent with scholars who making deliberate decisions to cheat after evaluating the costs and benefits of their behavior.

Consider the use of honorary authorship in grant proposals. Out of the more than 2100 individuals who gave a specific reason as to why they added a superfluous author to a grant proposal, one rationale outweighed the others; over 60% said they added the individual because of they thought the added scholar’s reputation increased their changes of a positive review. That behavior, adding someone with a reputation even though that individual isn’t expected to contribute to the work was reported across disciplines, academic ranks, and individuals’ experience in grant work. Apparently, adding authors with highly recognized names to grant proposals has become part of the game and is practiced across disciplines and rank.

Focusing on manuscripts, there is more variation in the stated reasons for honorary authorship. Lab directors are added to papers in disciplines that are heavy lab users and junior faculty members are more likely to add individuals in positions of authority or mentors. Unlike grant proposals, few scholars add authors to manuscripts because of their reputation. A potential explanation for this difference is that many grant proposals are not blind reviewed, so grant reviewers know the research team and can be influenced by its members. Journals, however, often have blind referees, so while the reputation of a particular author might influence an editor it should not influence referees. Furthermore, this might reflect the different review process of journals versus funding agencies. Funding agencies specifically consider the likelihood that a research team can complete a project and the project’s probability of making a significant contribution. Reputation can play a role in setting that perception. Such considerations are less prevalent in manuscript review because a submitted work is complete—the refereeing question is whether it is done well and whether it makes a significant contribution.

Turning to coercive citations, our results in Tables 8 and 9 are also consistent with a model of coercion that assumes editors who engage in coercive citation do so mindfully; they are influenced by what others in their field are doing and if they coerce they take care to minimize the potential cost that their actions might trigger. Parallel analyses using a journal data base are also consistent with that view. In addition, the distinctive characteristics of each dataset illuminate different parts of the story. The author-based data suggests editors target their requests to minimize the potential cost of their activity by coercing less powerful authors and targeting manuscripts with fewer authors. However, contrary to the honorary authorship results, females are less likely to be coerced than males, ceteris paribus . The journal-based data adds that it is higher-ranked journals that seem to be more inclined to take the risk than lower ranked journals and that the type of publisher matters as well. Furthermore, both approaches suggest that certain fields, largely located in the business professions, are more likely to engage in coercive activities. This study did not investigate why business might be more actively engaged in academic misconduct because there was little theoretical reason to hypothesize this relationship. There is however some literature suggesting that ethics education in business schools has declined [ 34 ]. For the last 20–30 years business schools have turned to the mantra that stock holder value is the only pertinent concern of the firm. It is a small step to imagine that citation counts could be viewed as the only thing that matters for journals, but additional research is needed to flesh out such a claim.

Again, we cannot claim that our cost-benefit model of editors who try to inflate their journal impact factor score is the only possible explanation of coercion. Even if editors are following such a strategy, that does not rule out additional considerations that might also influence their behavior. Hopefully future research will help us understand the more complex motivations behind the decision to manipulate and the subsequent behavior of scholars.

Finally, it is clear that academics see value in padding citations as it is a relatively common behavior for both manuscripts and grants. Our results in Tables 12 and 13 also suggest that the use of honorary authorship and padding citations in grant proposals and coercive citation and padding citations in manuscripts is correlated. Scholars who have been coerced are more likely to pad citations before submitting their work and individuals who add authors to manuscripts also skew their references on their grant proposals. It seems that once scholars are willing to misrepresent authorship and/or citations, their misconduct is not limited to a single form of misattribution.

It is difficult to examine these data without concluding that there is a significant level of deception in authorship and citation in academic research and while it would be naïve to suppose that academics are above such scheming to enhance their position, the results suggest otherwise. The overwhelming consensus is that such behavior is inappropriate, but its practice is common. It seems that academics are trapped; compelled to participate in activities they find distasteful. We suggest that the fuel that drives this cultural norm is the competition for research funding and high-quality journal space coupled with the intense focus on a single measure of performance, the number of publications or grants. That competition cuts both ways, on the one hand it focuses creativity, hones research contributions, and distinguishes between significant contributions and incremental advances. On the other hand, such competition creates incentives to take shortcuts to inflate ones’ research metrics by strategically manipulating attribution. This puts academics at odds with their core ethical beliefs.

The competition for research resources is getting tighter and if there is an advantage to be gained by misbehaving then the odds that academics will misbehave increase; left unchecked, the manipulation of authorship and citation will continue to grow. Different types of attribution manipulation continue to emerge; citation cartels (where editors at multiple journals agree to pad the other journals’ impact factor) and journals that publish anything for a fee while falsely claiming peer-review are two examples [ 30 , 35 ].

It will be difficult to eliminate such activities, but some steps can probably help. Policy actions aimed at attribution manipulation need to reduce the benefits of manipulation and/or increase the cost. One of the driving incentives of honorary authorship is that the number of publications has become a focal point of evaluation and that number is not sufficiently discounted by the number of authors [ 36 ]. So, if a publication with x authors counted as 1/x publications for each of the authors, the ability to inflate one’s vita is reduced. There are problems of course, such as who would implement such a policy, but some of these problems can be addressed. For example if the online, automated citation counts (e.g., h-index, impact factor, calculators such as SCOPUS and Google Scholar) automatically discounted their statistics by the number of authors, it could eventually influence the entire academe. Other shortcomings of this policy is that this simple discounting does not allow for differential credit to be given that may be warranted, nor does it remove the power disparity in academic ranks. However, it does stiffen the resistance to adding authors and that is a crucial step.

An increasing number of journals, especially in medicine, are adopting authorship guidelines developed by independent groups, the most common being set forth by the International Committee of Medical Journal Editors (ICMJE) [ 37 ]. To date, however, there is little evidence that those standards have significantly altered behavior; although it is not clear if that is because authors are manipulating in spite of the rules, if the rules are poorly enforced, or if they are poorly designed from an implementation perspective [ 21 ]. Some journals require authors to specifically enumerate each author’s contribution and require all of the authors to sign off on that division of labor. Such delineation would be even more effective if authorship credit was weighted by that division of labor. Additional research is warranted.